Amazon S3 Storage - dcm4che/dcm4chee-arc-light GitHub Wiki

S3 storage may be used instead of a filesystem to store the DICOM objects received by the archive. This page explains how Amazon S3 can be configured as an ONLINE or NEARLINE storage backend.

-

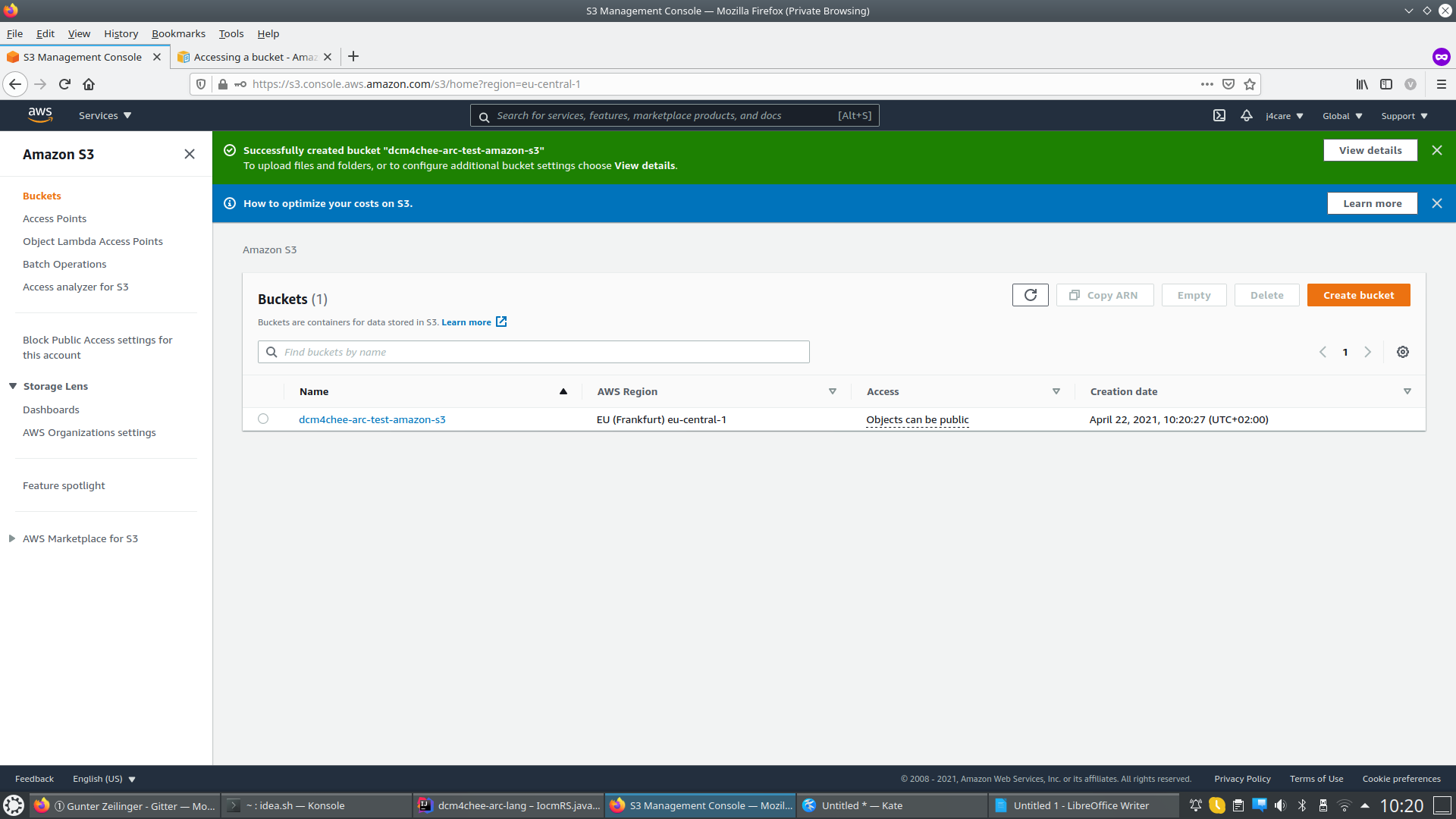

Login to Amazon S3 console for your region

-

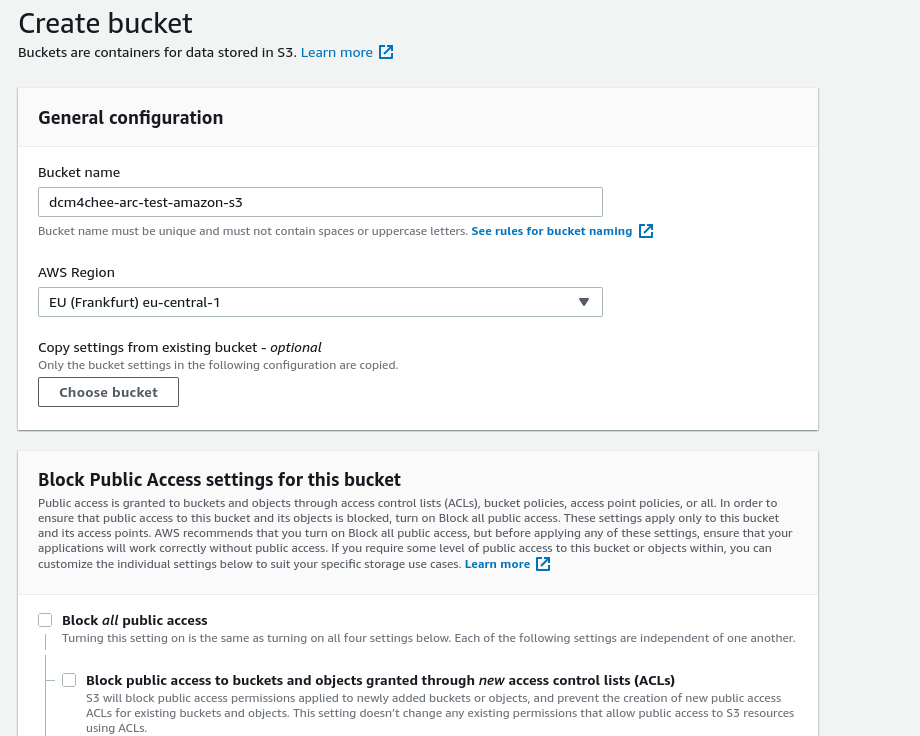

Create a bucket

-

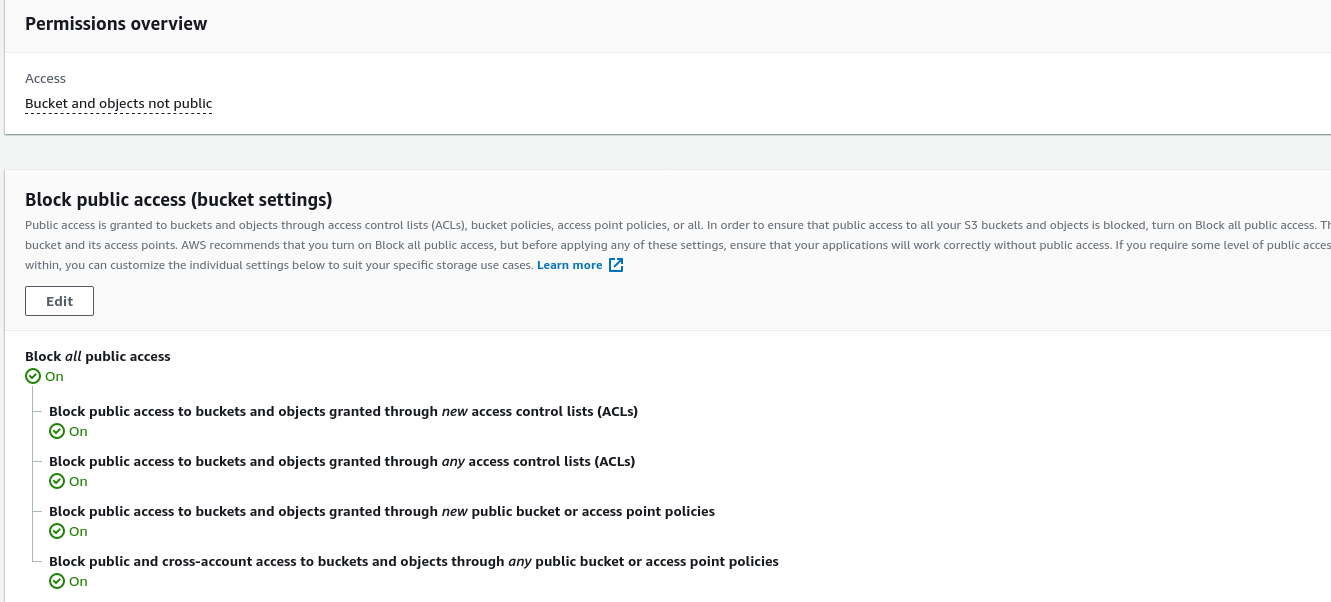

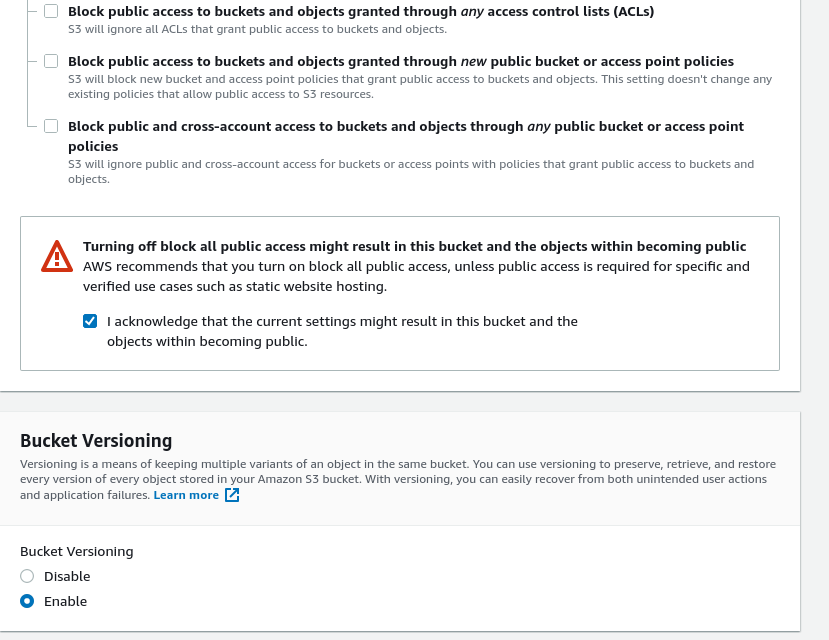

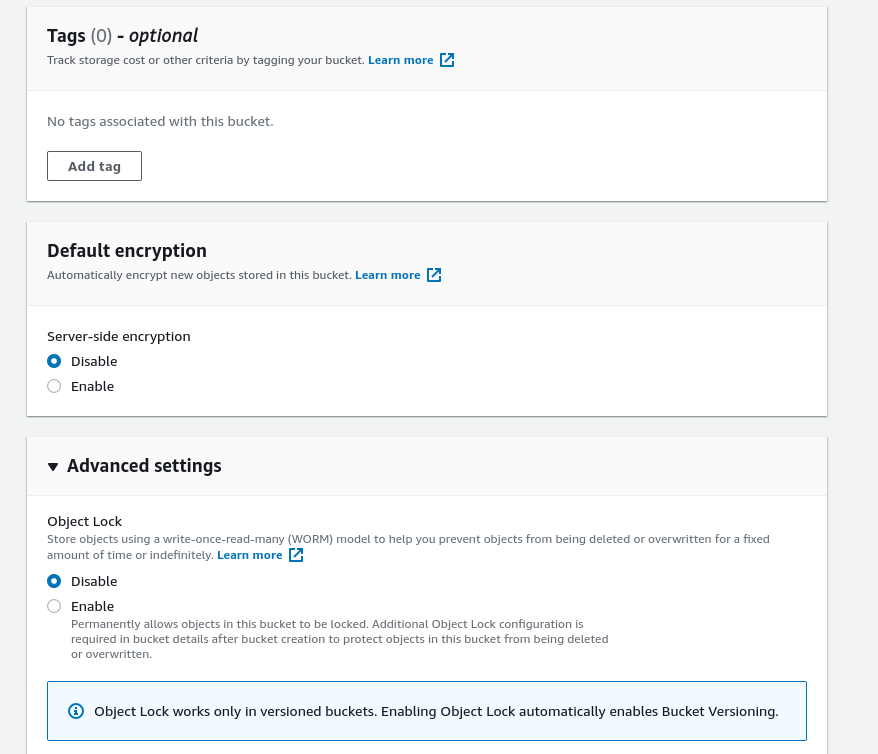

By default

Block Public Access settings for this bucketis enabled. You may or may not choose to turn off this setting.Bucket versioningis disabled by default, optionally choose to enable it

- Keep the other parameters unchanged and save the bucket settings.

-

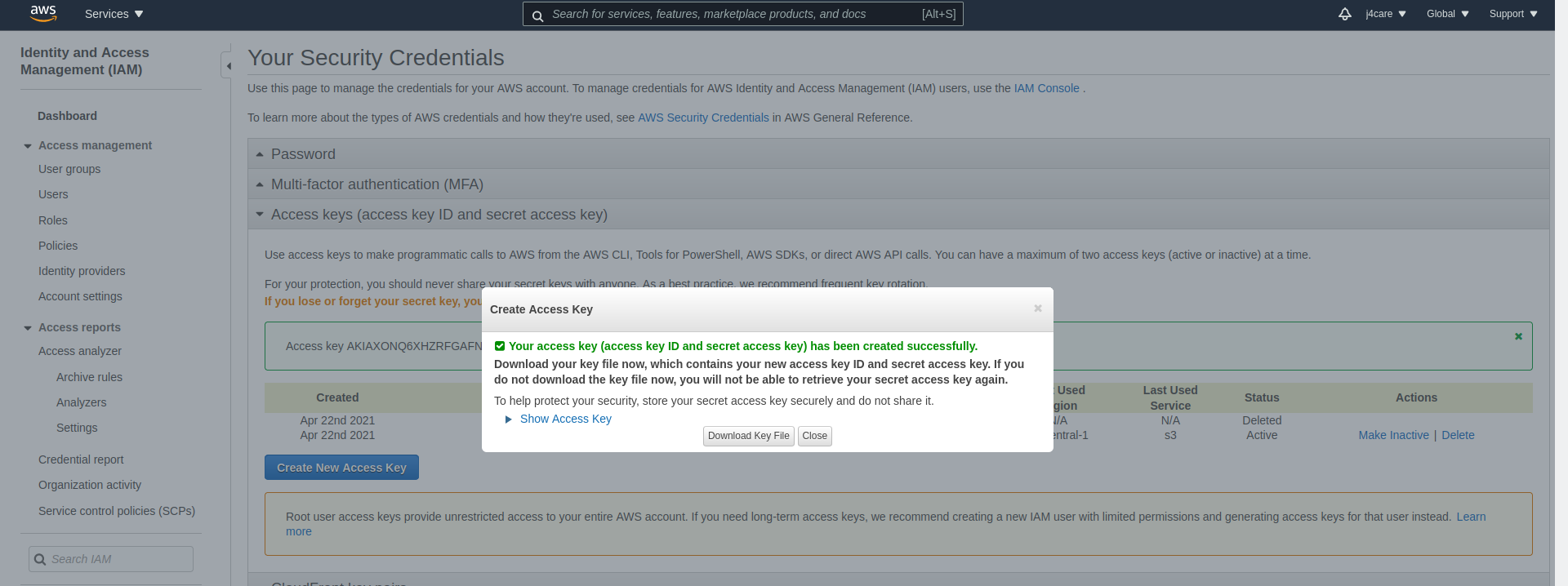

Next create an Access Key and download it. This file contains the values required

aws-s3storage configurations in archive for<access-key>and<secret-key>.
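A minimal Python sketch for pulling both values out of the downloaded file, assuming it is the CSV offered by the IAM console with the header columns `Access key ID` and `Secret access key` (check the header row of your file). `read_access_key` is a hypothetical helper, not part of dcm4chee-arc-light.

```python
import csv
from pathlib import Path


def read_access_key(csv_path: str) -> tuple[str, str]:
    """Return (access_key, secret_key) from a downloaded access key CSV file.

    Assumption: the file has a header row with the columns
    "Access key ID" and "Secret access key".
    """
    # utf-8-sig transparently strips a leading byte-order mark, if present
    with Path(csv_path).open(newline="", encoding="utf-8-sig") as f:
        rows = list(csv.DictReader(f))
    if not rows:
        raise ValueError(f"no access key found in {csv_path}")
    row = rows[0]
    return row["Access key ID"].strip(), row["Secret access key"].strip()
```

The first value goes into `identity=<access-key>` and the second into `credential=<secret-key>`. Treat the secret key like a password: avoid printing it or committing it to version control.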

Refer to Generic Storage for general information on storage configuration.

Amazon S3 storage can be configured in one of the following two ways. In either case, replace `<bucket-name>`, `<secret-key>`, `<access-key>` and `eu-central-1` with the values of your Amazon S3 bucket, access key and region.
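The Storage URI is where the bucket name and region are combined. The following sketch, using a hypothetical helper `s3_storage_uri`, builds that URI and rejects bucket names that violate a subset of the AWS bucket naming rules; the region pattern is a rough assumption, not an exhaustive list of AWS regions.

```python
import ipaddress
import re

# A subset of the AWS bucket naming rules: 3-63 characters, lowercase letters,
# digits, dots and hyphens, beginning and ending with a letter or digit.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
# Rough shape of region codes such as eu-central-1 or us-gov-west-1 (assumption).
_REGION_RE = re.compile(r"[a-z]{2}(-[a-z]+)+-[0-9]+")


def s3_storage_uri(bucket: str, region: str) -> str:
    """Build the jclouds Storage URI for a virtual-hosted-style S3 bucket."""
    if not _BUCKET_RE.fullmatch(bucket) or ".." in bucket:
        raise ValueError(f"invalid S3 bucket name: {bucket!r}")
    try:
        ipaddress.IPv4Address(bucket)
    except ValueError:
        pass  # not formatted as an IP address, as required
    else:
        raise ValueError(f"bucket name must not look like an IP address: {bucket!r}")
    if not _REGION_RE.fullmatch(region):
        raise ValueError(f"unexpected region code: {region!r}")
    return f"jclouds:aws-s3:https://{bucket}.s3.{region}.amazonaws.com"
```

For example, `s3_storage_uri("my-dicom-bucket", "eu-central-1")` yields the Storage URI in the form used by the examples below.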

- Go to the Storage Descriptors of the archive device (Menu -> Configuration -> Devices -> dcm4chee-arc -> Device Extension -> Archive Device Extension -> Child Objects) and add a new Storage Descriptor as:

      Storage ID       : amazon-s3
      Storage URI      : jclouds:aws-s3:https://<bucket-name>.s3.eu-central-1.amazonaws.com
      Storage Property : container=<bucket-name>
      Storage Property : jclouds.s3.virtual-host-buckets=true
      Storage Property : credential=<secret-key>
      Storage Property : identity=<access-key>
      Storage Property : jclouds.strip-expect-header=true
      Storage Property : containerExists=true
      Storage Property : jclouds.relax-hostname=true
      Storage Property : jclouds.trust-all-certs=true

- Either create an LDIF file, e.g.:

      version: 1

      dn: dcmStorageID=amazon-s3,dicomDeviceName=dcm4chee-arc,cn=Devices,cn=DICOM Configuration,dc=dcm4che,dc=org
      objectClass: dcmStorage
      dcmStorageID: amazon-s3
      dcmURI: jclouds:aws-s3:https://<bucket-name>.s3.eu-central-1.amazonaws.com
      dcmDigestAlgorithm: MD5
      dcmProperty: container=<bucket-name>
      dcmProperty: jclouds.s3.virtual-host-buckets=true
      dcmProperty: credential=<secret-key>
      dcmProperty: identity=<access-key>
      dcmProperty: jclouds.strip-expect-header=true
      dcmProperty: containerExists=true
      dcmProperty: jclouds.relax-hostname=true
      dcmProperty: jclouds.trust-all-certs=true

  and import it to the LDAP server using the `ldapadd` command line utility or the **LDIF Import...** function of Apache Directory Studio,

- or directly use the **New Entry...** function of Apache Directory Studio to create the corresponding Storage Descriptor entry.

Refer to Storage Descriptor for a description of the attributes.

To debug HTTP requests to the S3 provider, configure the additional loggers `org.jclouds` and `jclouds.headers` with level `DEBUG`.

Configure and test either or both of the following use cases: storing objects directly to S3, or exporting studies to S3.

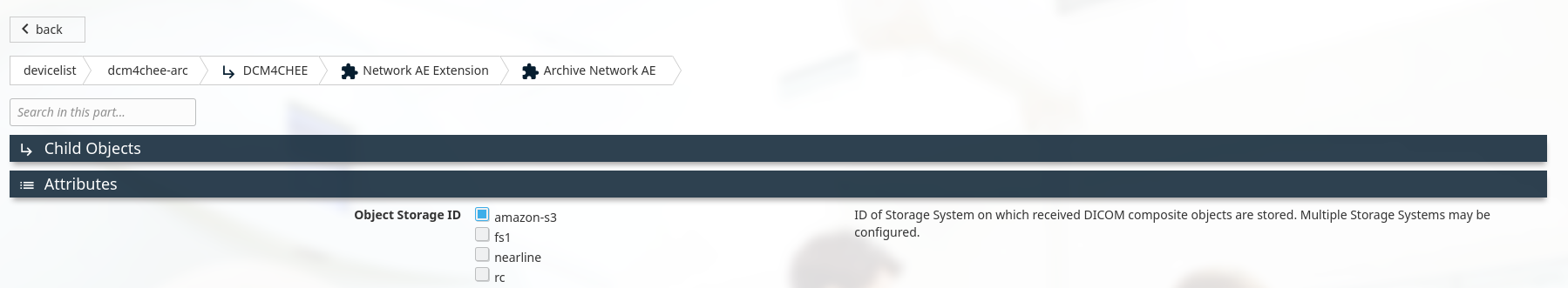

-

Configured

amazon-s3asObject Storage IDforDCM4CHEEarchive AE

-

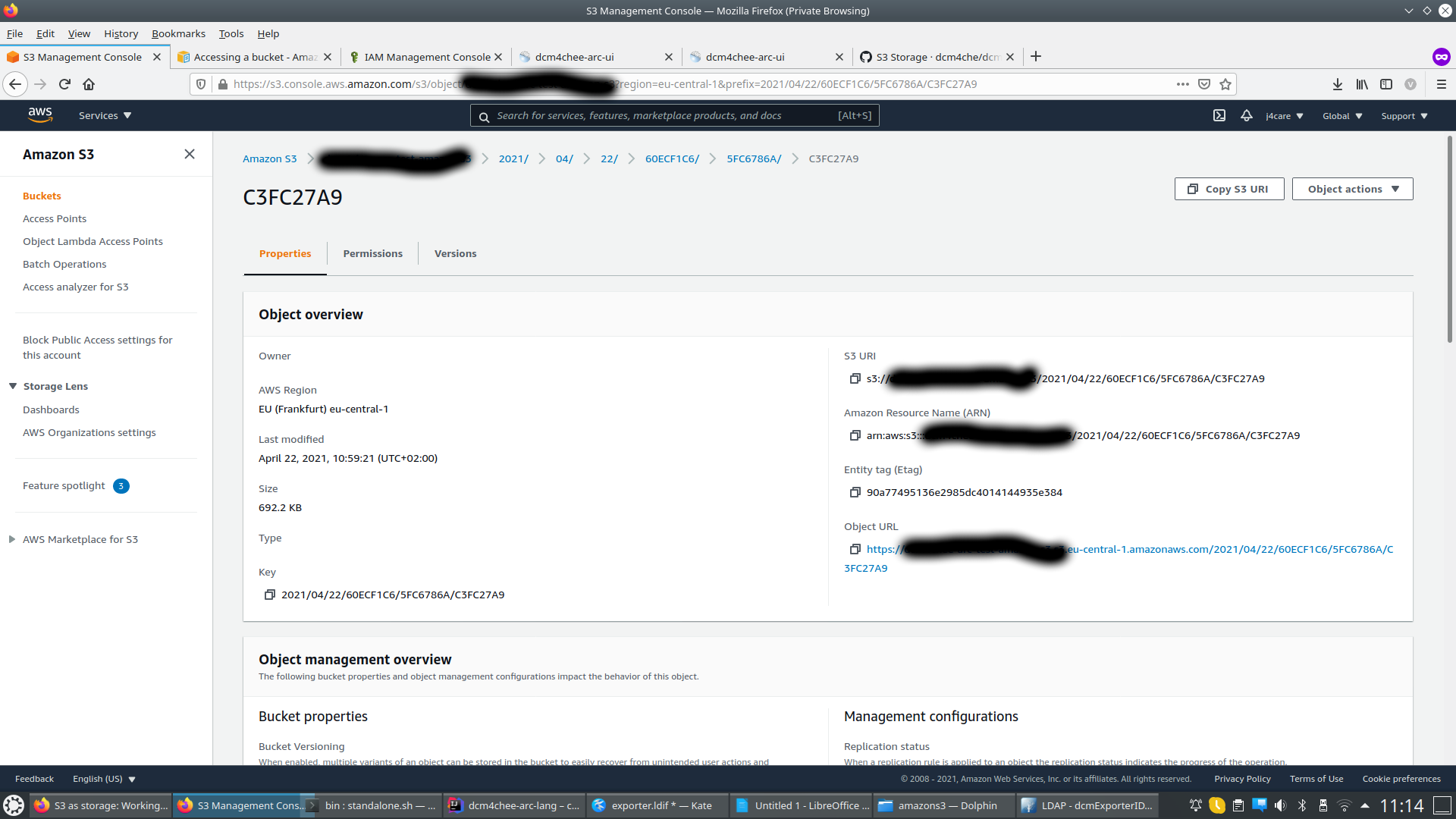

Stored study to archive. Verify

Storage IDs of Studyin study's attributes using Archive UI and corresponding objects in Amazon S3 console.

-

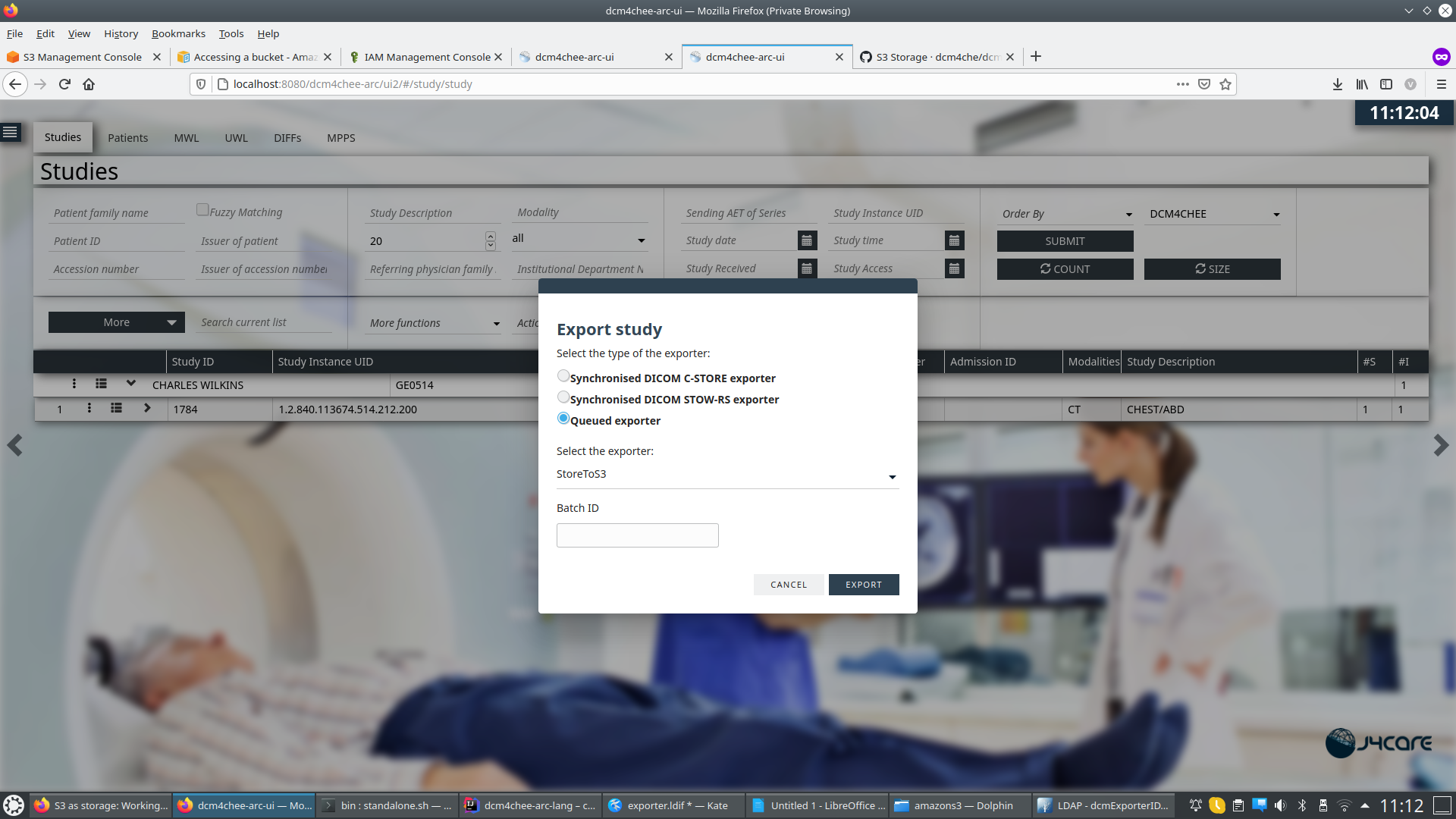

Create an

Exporter Descriptorin archive (Menu -> Configuration -> Devices -> dcm4chee-arc -> Device Extension -> Archive Device Extension -> Child Objects)version: 1 dn: dcmExporterID=StoreToS3,dicomDeviceName=dcm4chee-arc,cn=Devices,cn=DICOMConfiguration,dc=dcm4che,dc=org objectClass: dcmExporter dcmExporterID: StoreToS3 dcmQueueName: Export6 dcmURI: storage:amazon-s3 dicomAETitle: DCM4CHEE -

Export any study (

Navigation -> Studies) usingQueued Exporteroption to S3 Storage by selecting the above createdExporter Descriptor.

-

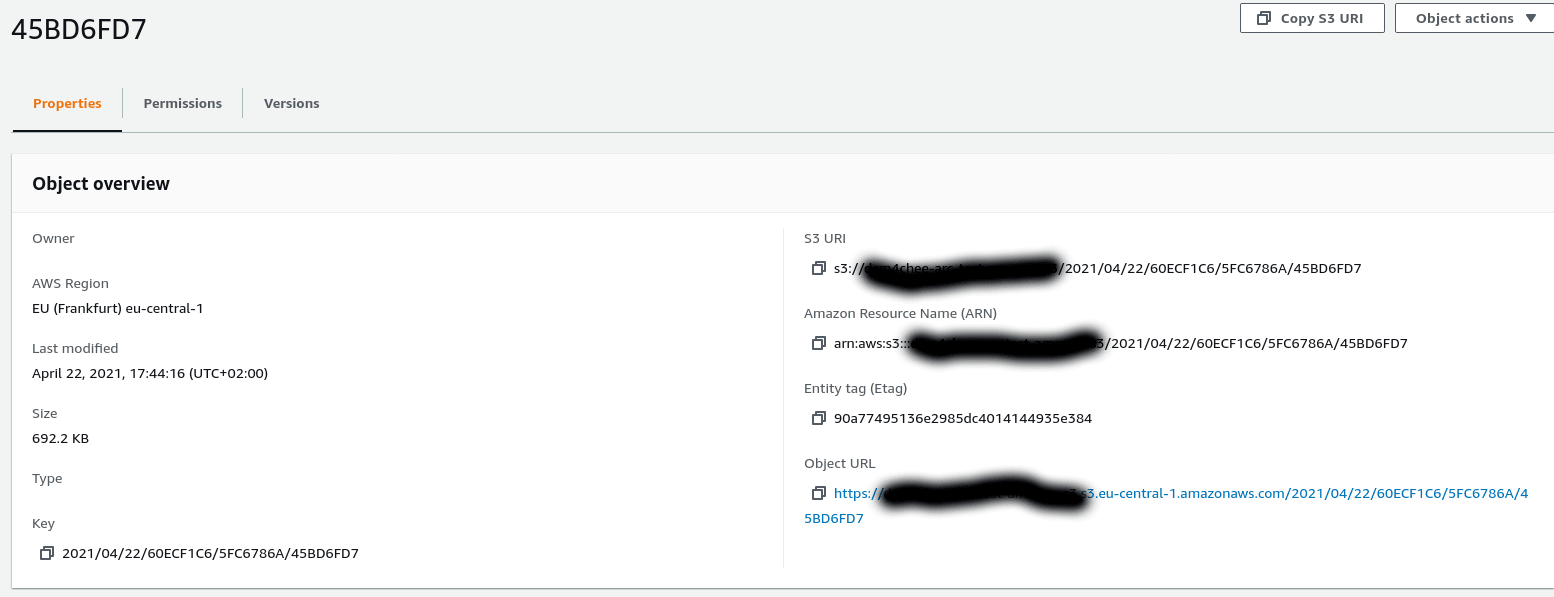

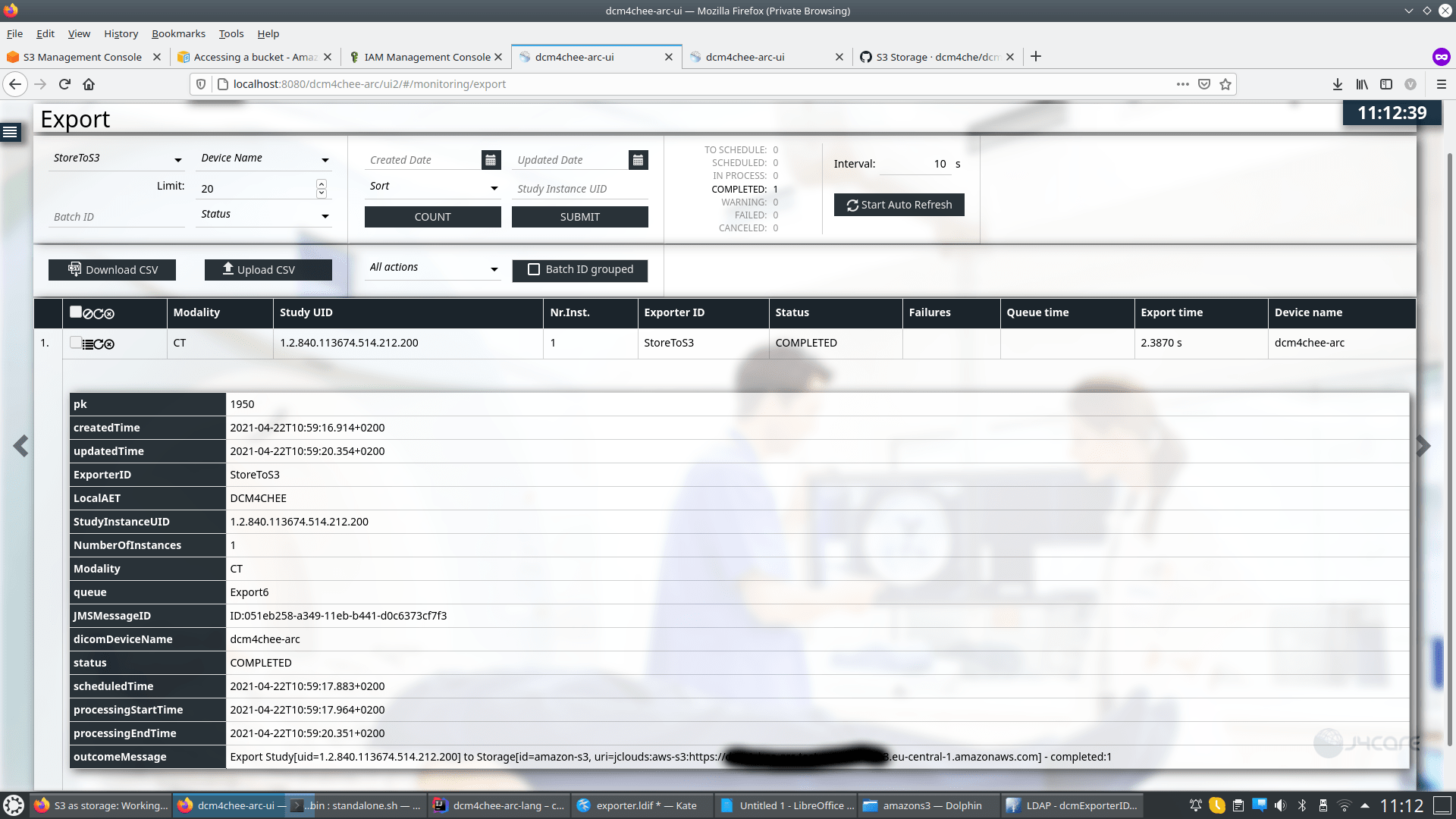

Verify in

Monitoring -> Exportthat the export task to store objects inamazon-s3storage isCOMPLETED.

- Verify the stored objects in the Amazon S3 console.
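The Exporter Descriptor used in the export steps can also be generated from a few parameters. A minimal sketch; `exporter_ldif` is a hypothetical helper whose defaults simply repeat the example values (`StoreToS3`, `Export6`, `DCM4CHEE`). The link between the two descriptors is `dcmURI: storage:<storage-id>`, which must match the `dcmStorageID` of the S3 Storage Descriptor.

```python
def exporter_ldif(exporter_id: str = "StoreToS3", storage_id: str = "amazon-s3",
                  queue: str = "Export6", ae_title: str = "DCM4CHEE",
                  device: str = "dcm4chee-arc") -> str:
    """Render an Exporter Descriptor that exports to the given Storage ID."""
    # Assumes the identifiers contain no DN special characters
    dn = (f"dcmExporterID={exporter_id},dicomDeviceName={device},"
          "cn=Devices,cn=DICOM Configuration,dc=dcm4che,dc=org")
    lines = [
        "version: 1",
        "",
        f"dn: {dn}",
        "objectClass: dcmExporter",
        f"dcmExporterID: {exporter_id}",
        f"dcmQueueName: {queue}",
        # storage:<storage-id> points the exporter at the Storage Descriptor
        f"dcmURI: storage:{storage_id}",
        f"dicomAETitle: {ae_title}",
    ]
    return "\n".join(lines) + "\n"
```

Keeping the storage ID as a single parameter makes it harder for the exporter and the Storage Descriptor to drift apart when the storage is renamed.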