Setting up alternative data stores

Snowplow supports storing your data in five different data stores:

| Storage | Description | Status |
|---|---|---|
| S3 (EMR, Kinesis) | Data is stored in S3, where it can be analysed using EMR (e.g. Hive, Pig, Mahout) | Production-ready |
| Redshift | A columnar database offered as a service on AWS. Optimized for OLAP analysis. Scales to petabytes | Production-ready |
| PostgreSQL | A popular open source relational database (RDBMS) | Production-ready |
| Elasticsearch | A search server for JSON documents | Production-ready |
| Amazon DynamoDB | Fast NoSQL database used as auxiliary storage for the de-duplication process | Production-ready |

By setting up the EmrEtlRunner (in the previous step), you are already successfully loading your Snowplow event data into S3 where it is accessible to EMR for analysis.

If you wish to analyse your data using a wider range of tools (e.g. BI tools like Looker, ChartIO or Tableau, or statistical tools like R), you will want to load your data into a database like Amazon Redshift or PostgreSQL to enable use of these tools.

The StorageLoader is an application that makes it simple to keep an up-to-date copy of your data in Redshift or PostgreSQL. To set up Snowplow to automatically populate a PostgreSQL and/or Redshift database with Snowplow data, you first need to:

- Create a database and table for Snowplow data in Redshift, and/or

- Create a database and table for Snowplow data in PostgreSQL

Then set up the StorageLoader to regularly transfer Snowplow data into your new store(s).
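To give a feel for what a load from S3 into Redshift involves, here is a minimal sketch that builds a Redshift `COPY` statement. It is not the StorageLoader's own implementation. The function name, bucket, table name and IAM role ARN are all hypothetical placeholders, and the tab delimiter is an assumption about the file format.

```python
# Illustrative sketch only: this is NOT how the StorageLoader is implemented.
# All names below (function, bucket, table, role ARN) are hypothetical.


def build_redshift_copy(table: str, s3_path: str, iam_role_arn: str) -> str:
    """Return a Redshift COPY statement that bulk-loads delimited files from S3."""
    if not s3_path.startswith("s3://"):
        raise ValueError("s3_path must start with s3://")
    return (
        f"COPY {table} "
        f"FROM '{s3_path}' "
        f"IAM_ROLE '{iam_role_arn}' "
        # Assumption: the files are tab-separated. The SQL text contains the
        # two characters backslash and t, which Redshift reads as a tab.
        "DELIMITER '\\t';"
    )


# Example call with placeholder values.
statement = build_redshift_copy(
    table="atomic.events",
    s3_path="s3://example-bucket/enriched/",
    iam_role_arn="arn:aws:iam::123456789012:role/example-load-role",
)
print(statement)
```

In practice the StorageLoader handles this kind of load for you on a schedule; the sketch only shows the general shape of a bulk load into Redshift.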

Note that instructions for setting up both Redshift and PostgreSQL (on EC2) are included in this setup guide and referenced from the links above.

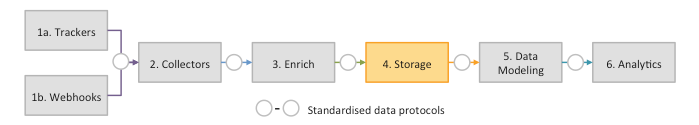

All done? Then get started with data modeling or start analysing your event-level data.

Note: We recommend running all Snowplow AWS operations through an IAM user with the bare minimum permissions required to run Snowplow. Please see our IAM user setup page for more information on doing this.