Image filter (Electron) - chung-leong/zigar GitHub Wiki

In this example we're going to build a desktop app that applies a filter to an image. We'll first implement just the user interface and the image loading/saving functionality. After getting the basics working, we'll bring in our Zig-powered image filter.

We begin by initializing the project:

npm init electron-app@latest filter

cd filter

mkdir zig img

npm install node-zigar

Then we add a very simple UI:

<!doctype html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<title>Image filter</title>

<link rel="stylesheet" href="index.css">

</head>

<body>

<div class="App">

<div class="contents">

<div class="pane align-right">

<canvas id="srcCanvas"></canvas>

</div>

<div class="pane align-left">

<canvas id="dstCanvas"></canvas>

<div class="controls">

Intensity: <input id="intensity" type="range" min="0" max="1" step="0.0001" value="0.3">

</div>

</div>

</div>

</div>

</body>

<script src="./renderer.js"></script>

</html>

Basically, we have two HTML canvases in our app, one for displaying the original image and the other for the outcome. There's also a range input for controlling the intensity of the filter's effect.

Our UI code is in renderer.js:

const srcCanvas = document.getElementById('srcCanvas');

const dstCanvas = document.getElementById('dstCanvas');

const intensity = document.getElementById('intensity');

window.electronAPI.onLoadImage(loadImage);

window.electronAPI.onSaveImage(saveImage);

intensity.oninput = () => applyFilter();

async function loadImage(url) {

const img = new Image;

img.src = url;

await img.decode();

const bitmap = await createImageBitmap(img);

srcCanvas.width = bitmap.width;

srcCanvas.height = bitmap.height;

const ctx = srcCanvas.getContext('2d', { willReadFrequently: true });

ctx.drawImage(bitmap, 0, 0);

applyFilter();

}

function applyFilter() {

const srcCTX = srcCanvas.getContext('2d', { willReadFrequently: true });

const { width, height } = srcCanvas;

const params = { intensity: parseFloat(intensity.value) };

const srcImageData = srcCTX.getImageData(0, 0, width, height);

const dstImageData = srcImageData;

dstCanvas.width = width;

dstCanvas.height = height;

const dstCTX = dstCanvas.getContext('2d');

dstCTX.putImageData(dstImageData, 0, 0);

}

async function saveImage(path, type) {

const blob = await new Promise((resolve, reject) => {

const callback = (result) => {

if (result) {

resolve(result);

} else {

reject(new Error('Unable to encode image'));

}

};

dstCanvas.toBlob(callback, type);

});

const buffer = await blob.arrayBuffer();

await window.electronAPI.writeFile(path, buffer);

}

The code should be largely self-explanatory if you've worked with HTML canvas before. The following lines are Electron-specific:

window.electronAPI.onLoadImage(loadImage);

window.electronAPI.onSaveImage(saveImage);

These two lines enable us to initiate loading and saving from the application menu, something handled by the Node side of our app.

await window.electronAPI.writeFile(path, buffer);

This line at the bottom of saveImage() sends data obtained from the canvas to the Node side.

onLoadImage(), onSaveImage(), and writeFile() are defined in preload.js:

const { contextBridge, ipcRenderer } = require('electron')

contextBridge.exposeInMainWorld('electronAPI', {

onLoadImage: (callback) => ipcRenderer.on('load-image', (_event, url) => callback(url)),

onSaveImage: (callback) => ipcRenderer.on('save-image', (_event, path, type) => callback(path, type)),

writeFile: (path, data) => ipcRenderer.invoke('write-file', path, data),

});

Consult the Electron documentation if you need a refresher on what a preload script does.

Let us move on to the Node side of things. In index.js we add our menu code at the bottom of createWindow():

// Open the DevTools.

// mainWindow.webContents.openDevTools();

const filters = [

{ name: 'Image files', extensions: [ 'gif', 'png', 'jpg', 'jpeg', 'jpe', 'webp' ] },

{ name: 'GIF files', extensions: [ 'gif' ], type: 'image/gif' },

{ name: 'PNG files', extensions: [ 'png' ], type: 'image/png' },

{ name: 'JPEG files', extensions: [ 'jpg', 'jpeg', 'jpe' ], type: 'image/jpeg' },

{ name: 'WebP files', extensions: [ 'webp' ], type: 'image/webp' },

];

const onOpenClick = async () => {

const { canceled, filePaths } = await dialog.showOpenDialog({ filters, properties: [ 'openFile' ] });

if (!canceled) {

const [ filePath ] = filePaths;

const url = pathToFileURL(filePath);

mainWindow.webContents.send('load-image', url.href);

}

};

const onSaveClick = async () => {

const { canceled, filePath } = await dialog.showSaveDialog({ filters });

if (!canceled) {

const { ext } = path.parse(filePath);

const filter = filters.find(f => f.type && f.extensions.includes(ext.slice(1).toLowerCase()));

const type = filter?.type ?? 'image/png';

mainWindow.webContents.send('save-image', filePath, type);

}

};

const isMac = process.platform === 'darwin'

const menuTemplate = [

(isMac) ? {

label: app.name,

submenu: [

{ role: 'quit' }

]

} : null,

{

label: '&File',

submenu: [

{ label: '&Open', click: onOpenClick },

{ label: '&Save', click: onSaveClick },

{ type: 'separator' },

isMac ? { role: 'close' } : { role: 'quit' }

]

},

].filter(Boolean);

const menu = Menu.buildFromTemplate(menuTemplate)

Menu.setApplicationMenu(menu);

};

In the whenReady handler, we connect writeFile() from the fs module to Electron's IPC mechanism:

app.whenReady().then(() => {

ipcMain.handle('write-file', async (_event, path, buf) => writeFile(path, new DataView(buf)));

All this additional code requires additional calls to require():

const { app, dialog, ipcMain, BrowserWindow, Menu } = require('electron');

const { writeFile } = require('fs/promises');

const path = require('path');

const { pathToFileURL } = require('url');

Finally, our app needs new CSS styles:

:root {

font-family: Inter, system-ui, Avenir, Helvetica, Arial, sans-serif;

line-height: 1.5;

font-weight: 400;

color-scheme: light dark;

color: rgba(255, 255, 255, 0.87);

background-color: #242424;

font-synthesis: none;

text-rendering: optimizeLegibility;

-webkit-font-smoothing: antialiased;

-moz-osx-font-smoothing: grayscale;

}

* {

box-sizing: border-box;

}

body {

margin: 0;

display: flex;

flex-direction: column;

place-items: center;

min-width: 320px;

min-height: 100vh;

}

#root {

flex: 1 1 100%;

width: 100%;

}

.App {

display: flex;

position: relative;

flex-direction: column;

width: 100%;

height: 100%;

}

.App .nav {

position: fixed;

width: 100%;

color: #000000;

background-color: #999999;

font-weight: bold;

flex: 0 0 auto;

padding: 2px 2px 1px 2px;

}

.App .nav .button {

padding: 2px;

cursor: pointer;

}

.App .nav .button:hover {

color: #ffffff;

background-color: #000000;

padding: 2px 10px 2px 10px;

}

.App .contents {

display: flex;

width: 100%;

margin-top: 1em;

}

.App .contents .pane {

flex: 1 1 50%;

padding: 5px 5px 5px 5px;

}

.App .contents .pane CANVAS {

border: 1px dotted rgba(255, 255, 255, 0.10);

max-width: 100%;

max-height: 90vh;

}

.App .contents .pane .controls INPUT {

vertical-align: middle;

width: 50%;

}

@media screen and (max-width: 600px) {

.App .contents {

flex-direction: column;

}

.App .contents .pane {

padding: 1px 2px 1px 2px;

}

.App .contents .pane .controls {

padding-left: 4px;

}

}

.hidden {

position: absolute;

visibility: hidden;

z-index: -1;

}

.align-left {

text-align: left;

}

.align-right {

text-align: right;

}

And a sample image. Either download the following or choose one of your own:

Save it in img.

With everything in place, it's time to launch the app:

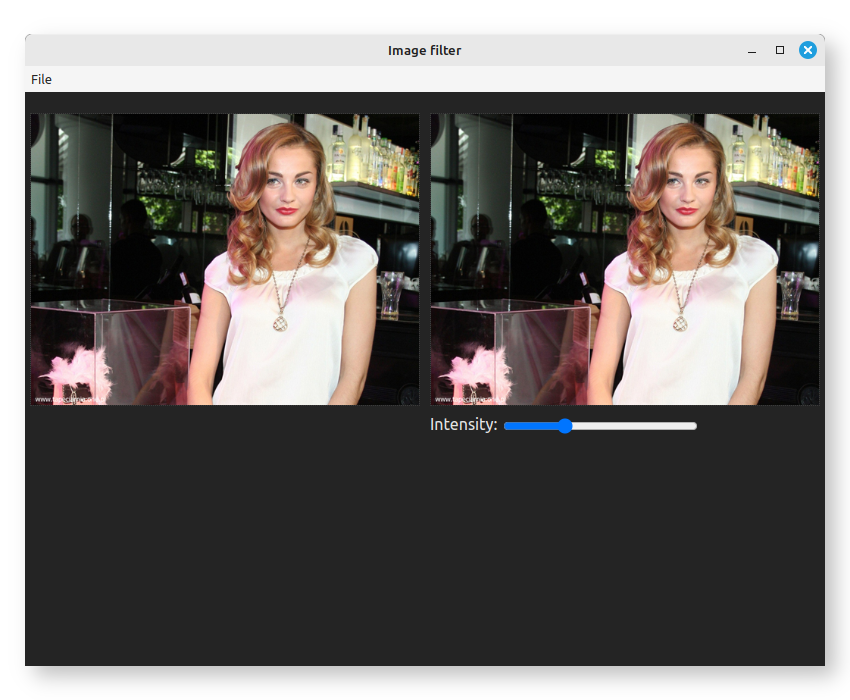

npm run start

You should see something like this:

Moving the slider won't do anything but image loading and saving should work.
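Saving works because onSaveClick() maps the extension of the chosen file to a MIME type before asking the renderer to encode the canvas. The lookup can be tried on its own in plain Node. This is only a sketch: mimeTypeFor() is a hypothetical helper name that wraps the same logic as the handler above, and the fallback to PNG mirrors the `?? 'image/png'` in the original code.

```javascript
const path = require('node:path');

// Same filter table as in index.js; only entries with a `type` can be matched.
const filters = [
  { name: 'Image files', extensions: [ 'gif', 'png', 'jpg', 'jpeg', 'jpe', 'webp' ] },
  { name: 'GIF files', extensions: [ 'gif' ], type: 'image/gif' },
  { name: 'PNG files', extensions: [ 'png' ], type: 'image/png' },
  { name: 'JPEG files', extensions: [ 'jpg', 'jpeg', 'jpe' ], type: 'image/jpeg' },
  { name: 'WebP files', extensions: [ 'webp' ], type: 'image/webp' },
];

// Hypothetical helper wrapping the lookup done inside onSaveClick()
function mimeTypeFor(filePath) {
  const { ext } = path.parse(filePath);
  // ext includes the leading dot, so strip it and ignore case
  const filter = filters.find(f => f.type && f.extensions.includes(ext.slice(1).toLowerCase()));
  return filter?.type ?? 'image/png';
}

console.log(mimeTypeFor('photo.JPG'));  // image/jpeg
console.log(mimeTypeFor('notes.txt'));  // image/png (fallback)
```

An unknown or missing extension falls back to PNG, so the file contents may not match the name the user typed.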

Okay, we'll now put in the Zig-powered image filter. Download

sepia.zig

into the zig directory.

The code in question was translated from a Pixel Bender filter using pb2zig. Consult the intro page for an explanation of how it works.

In index.js, add these require statements at the top:

require('node-zigar/cjs');

const { createOutput } = require('../zig/sepia.zig');

Add a handler for filter-image in the whenReady handler:

ipcMain.handle('filter-image', (_event, width, height, data, params) => {

const src = { width, height, data };

const { dst } = createOutput(width, height, { src }, params);

return dst.data;

});

The Zig function createOutput() has the following declaration:

pub fn createOutput(

allocator: std.mem.Allocator,

width: u32,

height: u32,

input: Input,

params: Parameters,

) !Output

allocator is automatically provided by Zigar. We get width and height from the frontend.

params contains a single f32: intensity. That comes from the frontend too.

Input is a parameterized type:

pub const Input = KernelInput(u8, kernel);

Which expands to:

pub const Input = struct {

src: Image(u8, 4, false),

};

Then further to:

pub const Input = struct {

src: struct {

pub const Pixel = @Vector(4, u8);

pub const FPixel = @Vector(4, f32);

pub const channels = 4;

data: []const Pixel,

width: u32,

height: u32,

colorSpace: ColorSpace = .srgb,

offset: usize = 0,

},

};

Image was purposely defined so that it is compatible with the browser's ImageData. Its data field is []const @Vector(4, u8), a slice pointer that accepts a Uint8ClampedArray as target without casting. Normally, we would just use the ImageData as src. It wouldn't get correctly recreated over IPC though, so we'll only send the Uint8ClampedArray from the front end and recreate the object in the Node-side handler.
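To make the reconstruction step concrete, here is a minimal sketch in plain JavaScript. makeSource() is a hypothetical helper, not part of Zigar. It only checks that the byte count matches four bytes (RGBA) per pixel before rebuilding the object shape that the handler passes as src.

```javascript
// Hypothetical helper: rebuild an ImageData-like object from the raw bytes
// that arrive over IPC. Each pixel occupies 4 bytes (RGBA).
function makeSource(width, height, data) {
  const expected = width * height * 4;
  if (data.length !== expected) {
    throw new RangeError(`Expected ${expected} bytes, received ${data.length}`);
  }
  return { width, height, data };
}

// A blank 150x100 image stands in for the bytes sent by the renderer.
const pixels = new Uint8ClampedArray(150 * 100 * 4);
const src = makeSource(150, 100, pixels);
console.log(src.data.length); // 60000
```

The handler in this tutorial skips the length check and builds the object literal directly; the check is only here to show why width and height have to travel alongside the bytes.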

Like Input, Output is a parameterized type. It too can potentially contain multiple images. In

this case (and most cases), there's only one:

pub const Output = struct {

dst: struct {

pub const Pixel = @Vector(4, u8);

pub const FPixel = @Vector(4, f32);

pub const channels = 4;

data: []Pixel,

width: u32,

height: u32,

colorSpace: ColorSpace = .srgb,

offset: usize = 0,

},

};

dst.data points to memory allocated from allocator. Normally, the field would be represented by a Zigar object on the JavaScript side. In sepia.zig however, there's a meta-type declaration that changes this:

pub const @"meta(zigar)" = struct {

pub fn isFieldClampedArray(comptime T: type, comptime name: std.meta.FieldEnum(T)) bool {

if (@hasDecl(T, "Pixel")) {

// make field `data` clamped array if output pixel type is u8

if (@typeInfo(T.Pixel).vector.child == u8) {

return name == .data;

}

}

return false;

}

pub fn isFieldTypedArray(comptime T: type, comptime name: std.meta.FieldEnum(T)) bool {

if (@hasDecl(T, "Pixel")) {

// make field `data` typed array (if pixel value is not u8)

return name == .data;

}

return false;

}

pub fn isDeclPlain(comptime T: type, comptime _: std.meta.DeclEnum(T)) bool {

// make return value plain objects

return true;

}

};

During the export process, when Zigar encounters a field consisting of u8's, it'll call isFieldClampedArray() to see if you want it to be a Uint8ClampedArray. If the function does not exist or returns false, it'll try isFieldTypedArray() next, which would make the field a regular Uint8Array.
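The difference between the two array types matters for pixel data. The following sketch, which uses only standard JavaScript and nothing from Zigar, writes the same out-of-range values into both kinds of array: the clamped array saturates at 0 and 255, while the regular one wraps around modulo 256.

```javascript
const samples = [ 300, -5, 128 ];
const clamped = new Uint8ClampedArray(samples.length);
const wrapped = new Uint8Array(samples.length);

samples.forEach((value, i) => {
  clamped[i] = value; // saturates to the 0..255 range
  wrapped[i] = value; // keeps only the low 8 bits
});

console.log(Array.from(clamped)); // [ 255, 0, 128 ]
console.log(Array.from(wrapped)); // [ 44, 251, 128 ]
```

ImageData expects the clamped variant, which is why the metadata above asks for it whenever the pixel type is u8.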

Meanwhile, isDeclPlain() makes the output of all export functions plain JavaScript objects. The

end result is that we get something like this from createOutput():

{

dst: {

data: Uint8ClampedArray(60000) [ ... ],

width: 150,

height: 100,

colorSpace: 'srgb'

}

}

Without this metadata we would need to do this instead in our IPC handler:

return dst.data.clampedArray;

In preload.js we add in the needed plumbing:

contextBridge.exposeInMainWorld('electronAPI', {

/* ... */

filterImage: (width, height, data, params) => ipcRenderer.invoke('filter-image', width, height, data, params),

});

In renderer.js, we change applyFilter() so that it uses the backend to create the output image data:

async function applyFilter() {

const srcCTX = srcCanvas.getContext('2d', { willReadFrequently: true });

const { width, height } = srcCanvas;

const params = { intensity: parseFloat(intensity.value) };

const srcImageData = srcCTX.getImageData(0, 0, width, height);

const outputData = await window.electronAPI.filterImage(width, height, srcImageData.data, params);

const dstImageData = new ImageData(outputData, width, height);

dstCanvas.width = width;

dstCanvas.height = height;

const dstCTX = dstCanvas.getContext('2d');

dstCTX.putImageData(dstImageData, 0, 0);

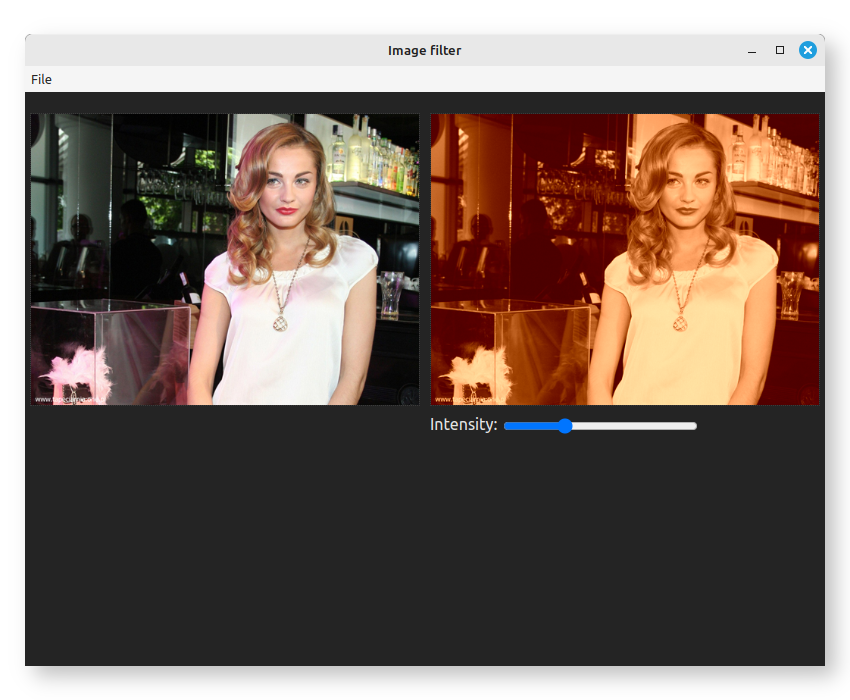

}That's it! When you start the app again, a message will appear informing you that the Node-API addon and the module "sepia" are being built. After a minute or so you should see this:

The function createOutput() is synchronous. When it's processing an image, it blocks Node.js's event loop. This is generally undesirable. What we want to do instead is process the image in a separate thread.
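The effect of a blocking call is easy to observe in plain Node. In the sketch below, busyWork() is a hypothetical stand-in for a synchronous, CPU-bound filter call. A zero-delay timer is scheduled before the work starts, yet its callback cannot run until the synchronous code has finished.

```javascript
let timerFired = false;
setTimeout(() => { timerFired = true; }, 0);

// Hypothetical stand-in for a synchronous, CPU-bound call such as createOutput()
function busyWork(iterations) {
  let sum = 0;
  for (let i = 0; i < iterations; i++) {
    sum += i % 7;
  }
  return sum;
}

busyWork(5000000);

// The timer callback is still waiting: nothing else runs while busyWork() does.
const firedDuringWork = timerFired;
console.log(firedDuringWork); // false
```

In an Electron app the same stall delays every other piece of main-process work, including IPC messages and menu handling, until the filter returns.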

We'll first replace our import statement:

const { createOutput } = require('../zig/sepia.zig');

with the following:

const { createOutputAsync, startThreadPool } = require('../zig/sepia.zig');

We'll also need availableParallelism():

const { availableParallelism } = require('os');

At the top level, we create the thread pool:

startThreadPool(availableParallelism());

Finally, we change the IPC handler in index.js so it uses the async function:

ipcMain.handle('filter-image', async (_event, width, height, data, params) => {

const src = { width, height, data };

const { dst } = await createOutputAsync(width, height, { src }, params);

When you start up the app again, it'll seem to work correctly. As implemented though, there's a serious flaw. Because Node.js's message loop is no longer blocked, many invocations of createOutputAsync() can be initiated while a previous call is still in progress. Each of these takes up a certain amount of memory. With a large image and a large amount of mouse movement, you can cause the app to run out of system memory and crash.

We need additional logic that ensures only the most recent settings received from the UI get worked on. Any unfinished work triggered by prior changes should simply be abandoned.

Add the following code to the bottom of index.js:

class AsyncTaskManager {

activeTask = null;

async call(cb) {

const controller = (cb?.length > 0) ? new AbortController : null;

const promise = this.perform(cb, controller?.signal);

const thisTask = this.activeTask = { controller, promise };

try {

return await thisTask.promise;

} finally {

if (thisTask === this.activeTask) {

this.activeTask = null;

}

}

}

async perform(cb, signal) {

if (this.activeTask) {

this.activeTask.controller?.abort();

await this.activeTask.promise?.catch(() => {});

if (signal?.aborted) {

// throw error now if the operation was aborted before the function is even called

throw new Error('Aborted');

}

}

return cb?.(signal);

}

}

const atm = new AsyncTaskManager();

The class above creates an AbortController and passes its signal to the callback function. It expects a promise as return value. If this promise fails to resolve before call() is invoked again, then we abort it and await its rejection.
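To see the class in action without the Zig module, we can feed it a fake task. The sketch below repeats the AsyncTaskManager class so it runs on its own in Node. fakeFilter() is a hypothetical stand-in for createOutputAsync(): it resolves after a delay and rejects with 'Aborted' when its signal fires. Three calls are made back to back, as would happen when the slider is dragged quickly.

```javascript
// Copy of the class from index.js
class AsyncTaskManager {
  activeTask = null;

  async call(cb) {
    const controller = (cb?.length > 0) ? new AbortController : null;
    const promise = this.perform(cb, controller?.signal);
    const thisTask = this.activeTask = { controller, promise };
    try {
      return await thisTask.promise;
    } finally {
      if (thisTask === this.activeTask) {
        this.activeTask = null;
      }
    }
  }

  async perform(cb, signal) {
    if (this.activeTask) {
      this.activeTask.controller?.abort();
      await this.activeTask.promise?.catch(() => {});
      if (signal?.aborted) {
        throw new Error('Aborted');
      }
    }
    return cb?.(signal);
  }
}

// Hypothetical stand-in for createOutputAsync(): resolves with `label` after
// `ms` milliseconds, or rejects as soon as the abort signal fires.
function fakeFilter(label, ms, signal) {
  return new Promise((resolve, reject) => {
    const timer = setTimeout(() => resolve(label), ms);
    signal.addEventListener('abort', () => {
      clearTimeout(timer);
      reject(new Error('Aborted'));
    }, { once: true });
  });
}

const manager = new AsyncTaskManager();
const demo = Promise.allSettled([
  manager.call(signal => fakeFilter('first', 50, signal)),
  manager.call(signal => fakeFilter('second', 50, signal)),
  manager.call(signal => fakeFilter('third', 50, signal)),
]).then(results => results.map(r => (r.status === 'fulfilled') ? r.value : r.reason.message));

demo.then(outcomes => console.log(outcomes)); // [ 'Aborted', 'Aborted', 'third' ]
```

The first task is interrupted mid-flight, the second is rejected before its callback is ever invoked, and only the most recent request runs to completion.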

Change the IPC handler such that the invocation of createOutputAsync() is done through the

async task manager:

ipcMain.handle('filter-image', async (_event, width, height, data, params) => {

try {

const src = { width, height, data };

const { dst } = await atm.call(signal => createOutputAsync(width, height, { src }, params, { signal }));

return dst.data.clampedArray;

} catch (err) {

if (err.message !== 'Aborted') {

console.error(err);

}

}

});

We need to put everything in a try/catch, as createOutputAsync() would throw an Abort error when a call is aborted. We also need to adjust renderer.js, since the IPC call may now return undefined:

const outputData = await window.electronAPI.filterImage(width, height, srcImageData.data, params);

if (outputData) {

// ...

}

With this mechanism in place preventing excessive calls, the app is no longer at risk of crashing.

Now, let us examine our Zig code. We'll start with startThreadPool():

pub fn startThreadPool(count: u32) !void {

try work_queue.init(.{

.allocator = internal_allocator,

.stack_size = 65536,

.n_jobs = count,

});

}

work_queue is a struct containing a thread pool and non-blocking queue. It has the following declaration:

var work_queue: WorkQueue(thread_ns) = .{};

The queue stores requests for function invocation and runs them in separate threads. thread_ns contains public functions that can be used. For this example we only have one:

const thread_ns = struct {

pub fn processSlice(signal: AbortSignal, width: u32, start: u32, count: u32, input: Input, output: Output, params: Parameters) !Output {

var instance = kernel.create(input, output, params);

if (@hasDecl(@TypeOf(instance), "evaluateDependents")) {

instance.evaluateDependents();

}

const end = start + count;

instance.outputCoord[1] = start;

while (instance.outputCoord[1] < end) : (instance.outputCoord[1] += 1) {

instance.outputCoord[0] = 0;

while (instance.outputCoord[0] < width) : (instance.outputCoord[0] += 1) {

instance.evaluatePixel();

if (signal.on()) return error.Aborted;

}

}

return output;

}

};

The logic is pretty straightforward. We initialize an instance of the kernel then loop through all coordinate pairs, running evaluatePixel() for each of them. After each iteration we check the abort signal to see if termination has been requested.
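For readers more at home in JavaScript, the same loop structure can be sketched as follows. This is an analogue, not the code that runs in the app: the processSlice() below takes a caller-supplied evaluatePixel callback in place of the kernel instance and uses a standard AbortSignal in place of Zigar's.

```javascript
// Hypothetical JavaScript analogue of the Zig processSlice()
function processSlice(signal, width, start, count, evaluatePixel) {
  const end = start + count;
  for (let y = start; y < end; y++) {
    for (let x = 0; x < width; x++) {
      evaluatePixel(x, y);
      // check for cancellation after every pixel, as the Zig loop does
      if (signal.aborted) throw new Error('Aborted');
    }
  }
}

const controller = new AbortController();

// Rows 2, 3, and 4 of a 4-pixel-wide image: 12 pixels in total.
let visited = 0;
processSlice(controller.signal, 4, 2, 3, () => { visited += 1; });

// Once the signal is set, the loop stops right after the next pixel.
controller.abort();
let visitedAfterAbort = 0;
let abortMessage = null;
try {
  processSlice(controller.signal, 4, 0, 3, () => { visitedAfterAbort += 1; });
} catch (err) {
  abortMessage = err.message;
}

console.log(visited, visitedAfterAbort, abortMessage); // 12 1 Aborted
```

Checking the signal once per pixel keeps the response to an abort nearly immediate, at the cost of one extra read per iteration.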

createOutputAsync() pushes multiple processSlice call requests into the work queue to process an image in parallel. Let us first look at its arguments:

pub fn createOutputAsync(allocator: Allocator, promise: Promise, signal: AbortSignal, width: u32, height: u32, input: Input, params: Parameters) !void {

Allocator, Promise, and AbortSignal are special parameters that Zigar provides automatically. On the JavaScript side, the function has only four required arguments. It will also accept a fifth argument: options, which may contain an alternate allocator, a callback function, and an abort signal.

The function starts out by allocating memory for the output struct:

var output: Output = undefined;

// allocate memory for output image

const fields = std.meta.fields(Output);

var allocated: usize = 0;

errdefer inline for (fields, 0..) |field, i| {

if (i < allocated) {

allocator.free(@field(output, field.name).data);

}

};

inline for (fields) |field| {

const ImageT = @TypeOf(@field(output, field.name));

const data = try allocator.alloc(ImageT.Pixel, width * height);

@field(output, field.name) = .{

.data = data,

.width = width,

.height = height,

};

allocated += 1;

}

Then it divides the image into multiple slices. It divides the given Promise struct as well:

// add work units to queue

const workers: u32 = @intCast(@max(1, work_queue.thread_count));

const scanlines: u32 = height / workers;

const slices: u32 = if (scanlines > 0) workers else 1;

const multipart_promise = try promise.partition(internal_allocator, slices);

partition() creates a new promise that fulfills the original promise when its resolve() method has been called a certain number of times. It is used as the output argument for work_queue.push():

var slice_num: u32 = 0;

while (slice_num < slices) : (slice_num += 1) {

const start = scanlines * slice_num;

const count = if (slice_num < slices - 1) scanlines else height - (scanlines * slice_num);

try work_queue.push(thread_ns.processSlice, .{ signal, width, start, count, input, output, params }, multipart_promise);

}

}

The first argument to push() is the function to be invoked. The second is a tuple containing arguments. The third is the output argument. The return value of processSlice(), either the Output struct or error.Aborted, will be fed to this promise's resolve() method. When the last slice has been processed, the promise on the JavaScript side becomes fulfilled.
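The slice arithmetic and the counting behavior described above can be tried out in plain JavaScript. In this sketch, partitionScanlines() mirrors the integer math in createOutputAsync(), while partitionPromise() is a hypothetical stand-in for promise.partition() that fulfills once its resolve function has been called the given number of times. How Zigar handles an error result is not modelled here.

```javascript
// Mirror of the slice arithmetic in createOutputAsync()
function partitionScanlines(height, threadCount) {
  const workers = Math.max(1, threadCount);
  const scanlines = Math.floor(height / workers);
  const slices = (scanlines > 0) ? workers : 1;
  const parts = [];
  for (let i = 0; i < slices; i++) {
    const start = scanlines * i;
    // the last slice picks up whatever rows remain
    const count = (i < slices - 1) ? scanlines : height - start;
    parts.push({ start, count });
  }
  return parts;
}

// Hypothetical stand-in for promise.partition(): the returned promise is
// fulfilled only after resolve() has been called `count` times.
function partitionPromise(count) {
  let remaining = count;
  let settle;
  const promise = new Promise((resolve) => { settle = resolve; });
  const resolve = (value) => {
    remaining -= 1;
    if (remaining === 0) settle(value);
  };
  return { promise, resolve };
}

const parts = partitionScanlines(100, 8);
console.log(parts[0], parts[7]); // { start: 0, count: 12 } { start: 84, count: 16 }

const multipart = partitionPromise(parts.length);
const finished = multipart.promise.then(() => 'all slices done');
for (const part of parts) {
  multipart.resolve(part); // in the app, each worker thread does this once
}
finished.then(message => console.log(message));
```

With 100 scanlines and 8 workers, seven slices get 12 rows each and the last one gets the remaining 16. An image with fewer rows than workers is processed as a single slice.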

We're going to follow the same steps as described in the hello world tutorial. First, we'll alter the require statement so it references a node-zigar module instead of a Zig file:

const { createOutputAsync, startThreadPool } = require('../lib/sepia.zigar');

Then we add node-zigar.config.json to the app's root directory:

{

"optimize": "ReleaseFast",

"modules": {

"lib/sepia.zigar": {

"source": "zig/sepia.zig"

}

},

"targets": [

{ "platform": "win32", "arch": "x64" },

{ "platform": "win32", "arch": "arm64" },

{ "platform": "win32", "arch": "ia32" },

{ "platform": "linux", "arch": "x64" },

{ "platform": "linux", "arch": "arm64" },

{ "platform": "darwin", "arch": "x64" },

{ "platform": "darwin", "arch": "arm64" }

]

}

We build the library files:

npx zigar build

And make necessary changes to forge.config.js:

const { FusesPlugin } = require('@electron-forge/plugin-fuses');

const { FuseV1Options, FuseVersion } = require('@electron/fuses');

module.exports = {

packagerConfig: {

asar: {

unpack: '*.{dll,dylib,so}',

},

ignore: [

/\/(zig|\.?zig-cache|\.?zigar-cache)(\/|$)/,

/\/node-zigar\.config\.json$/,

],

},

rebuildConfig: {},

makers: [

{

name: '@electron-forge/maker-squirrel',

config: {},

},

{

name: '@electron-forge/maker-zip',

platforms: ['darwin'],

},

{

name: '@electron-forge/maker-deb',

config: {},

},

{

name: '@electron-forge/maker-rpm',

config: {},

},

],

plugins: [

{

name: '@electron-forge/plugin-auto-unpack-natives',

config: {},

},

// Fuses are used to enable/disable various Electron functionality

// at package time, before code signing the application

new FusesPlugin({

version: FuseVersion.V1,

[FuseV1Options.RunAsNode]: false,

[FuseV1Options.EnableCookieEncryption]: true,

[FuseV1Options.EnableNodeOptionsEnvironmentVariable]: false,

[FuseV1Options.EnableNodeCliInspectArguments]: false,

[FuseV1Options.EnableEmbeddedAsarIntegrityValidation]: true,

[FuseV1Options.OnlyLoadAppFromAsar]: true,

}),

],

};

We're now ready to create the installation packages:

npm run make -- --platform linux --arch x64,arm64

npm run make -- --platform win32 --arch x64,ia32,arm64

npm run make -- --platform darwin --arch x64,arm64

The packages will be in the out/make directory:

📁 out

📁 make

📁 deb

📁 arm64

📦 filter_1.0.0_arm64.deb

📁 x64

📦 filter_1.0.0_amd64.deb

📁 rpm

📁 arm64

📦 filter-1.0.0-1.arm64.rpm

📁 x64

📦 filter-1.0.0-1.x86_64.rpm

📁 squirrel.windows

📁 arm64

📦 filter-1.0.0-full.nupkg

📦 filter-1.0.0 Setup.exe

📄 RELEASES

📁 ia32

📦 filter-1.0.0-full.nupkg

📦 filter-1.0.0 Setup.exe

📄 RELEASES

📁 x64

📦 filter-1.0.0-full.nupkg

📦 filter-1.0.0 Setup.exe

📄 RELEASES

📁 zip

📁 darwin

📁 arm64

📦 filter-darwin-arm64-1.0.0.zip

📁 x64

📦 filter-darwin-x64-1.0.0.zip

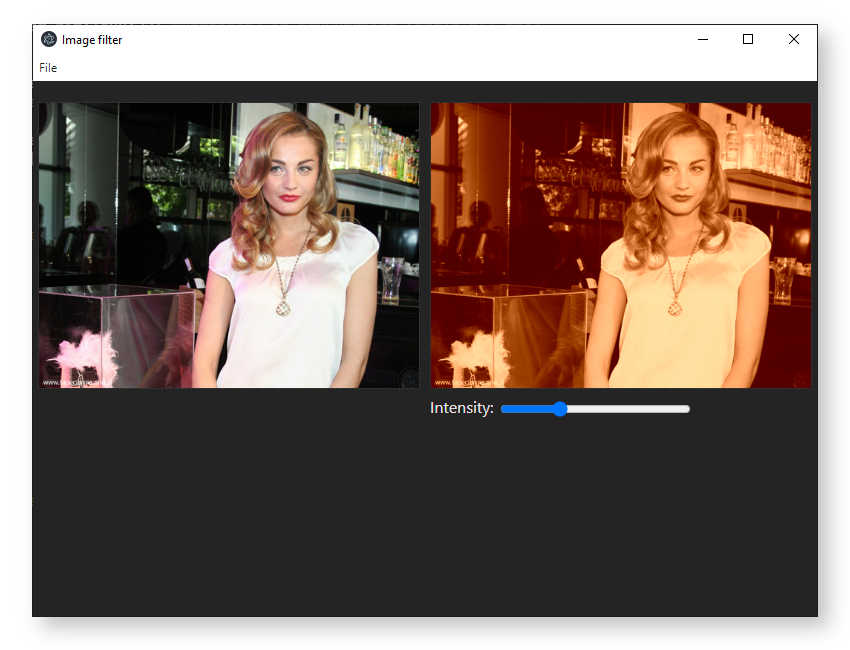

The app running in Windows 10:

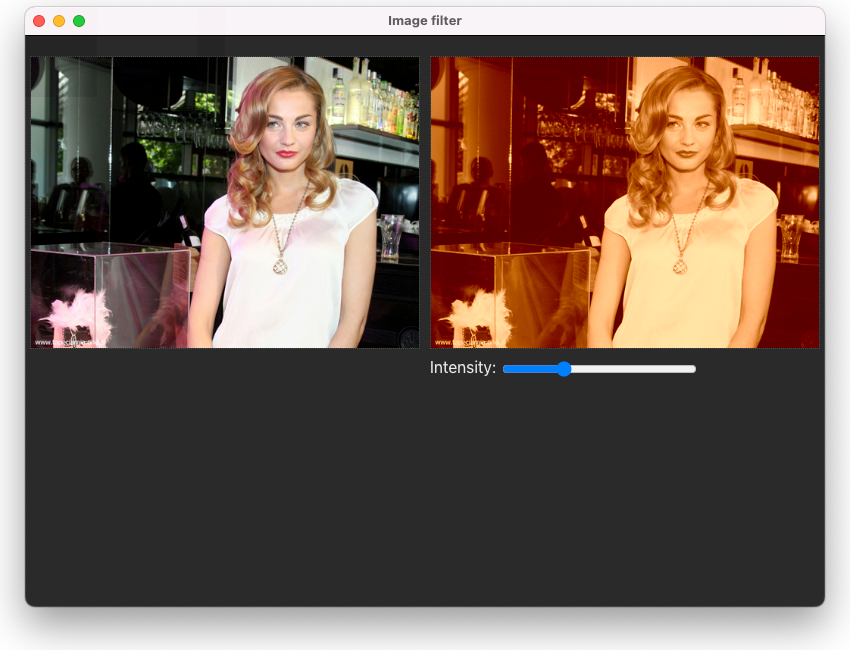

And in macOS:

You can find the complete source code for this example here.

The need to move data between the "browser-side" and "node-side" made this example somewhat complicated. It makes the app inefficient as well, since image data is unnecessarily copied. node-zigar might not actually be the best solution for an Electron app in this instance. Using rollup-zigar-plugin to transcode our Zig code to WebAssembly and running it on the browser side might make more sense. Consult the Vite version of this example to learn how to do that.

The image filter employed for this example is very rudimentary. Check out pb2zig's project page to see more advanced code.

That's it for now. I hope this tutorial is enough to get you started with using Zigar.