Generating API Keys - bcgov/common-hosted-form-service GitHub Wiki

This documentation is no longer being updated. For the most up-to-date information, please visit our techdocs.


Connect your third-party applications to CHEFS to give them access to any of your form's data, using the endpoints documented in our CHEFS API specifications.

On this page:

- How to generate and regenerate an API key

- How to make a call to the CHEFS API

- Which API endpoints can be called?

- How to handle API Rate Limiting

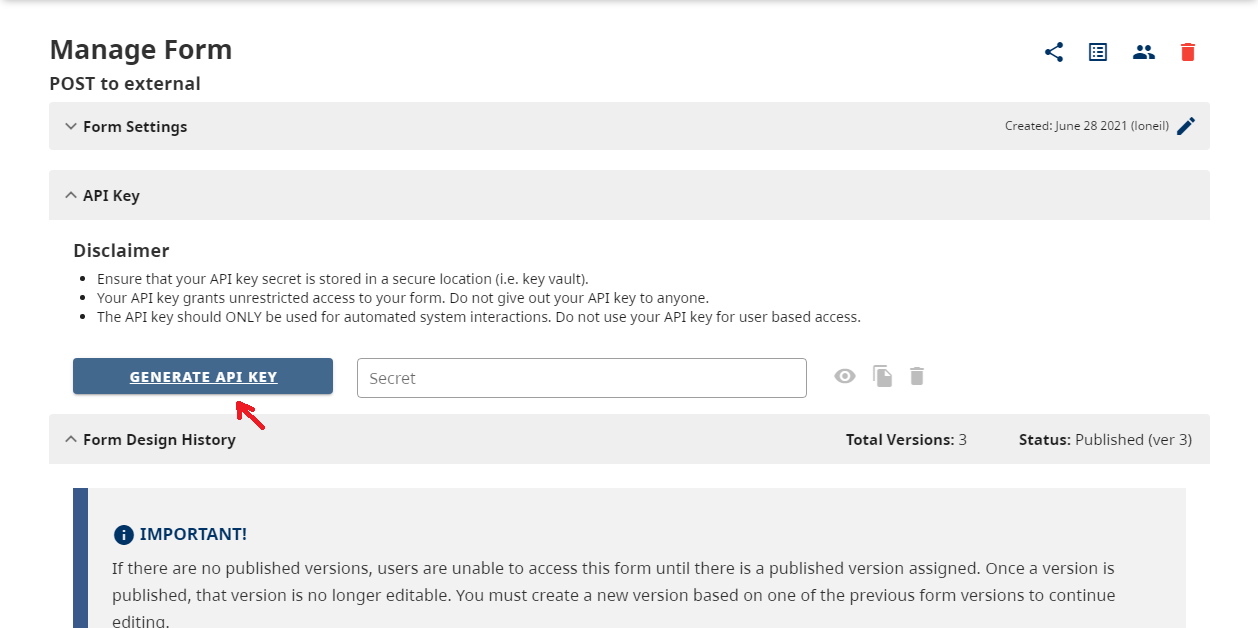

To generate an API key, go to your form's "Form Settings" page and open the API Key panel. Click the Generate API Key button and confirm your selection.

Once you have generated your key, click the Show Secret icon to view it, then use it in your applications to make calls to our endpoints.

Never store your API keys in an insecure location.

Once you have an API Key, the Regenerate API Key button will invalidate the old key and create a new key. The old key will no longer work to access the API, and you will have to update your applications to use the new key.
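One common way to keep a key out of your source code is to read it from environment variables at runtime. The sketch below assumes two hypothetical variable names, `CHEFS_FORM_ID` and `CHEFS_API_KEY`; CHEFS does not define them, so use whatever names or secret store your deployment already relies on. Reading the key this way also means that after you regenerate it, you only update the environment, not the code.

```python
import os
from typing import Tuple


def load_chefs_credentials() -> Tuple[str, str]:
    """Return (form_id, api_key) read from the environment.

    CHEFS_FORM_ID and CHEFS_API_KEY are hypothetical names chosen for this
    example; they are not defined by CHEFS.
    """
    form_id = os.environ.get("CHEFS_FORM_ID")
    api_key = os.environ.get("CHEFS_API_KEY")
    if not form_id or not api_key:
        # Fail early rather than sending a request with empty credentials
        raise RuntimeError("Set CHEFS_FORM_ID and CHEFS_API_KEY before running")
    return form_id, api_key
```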

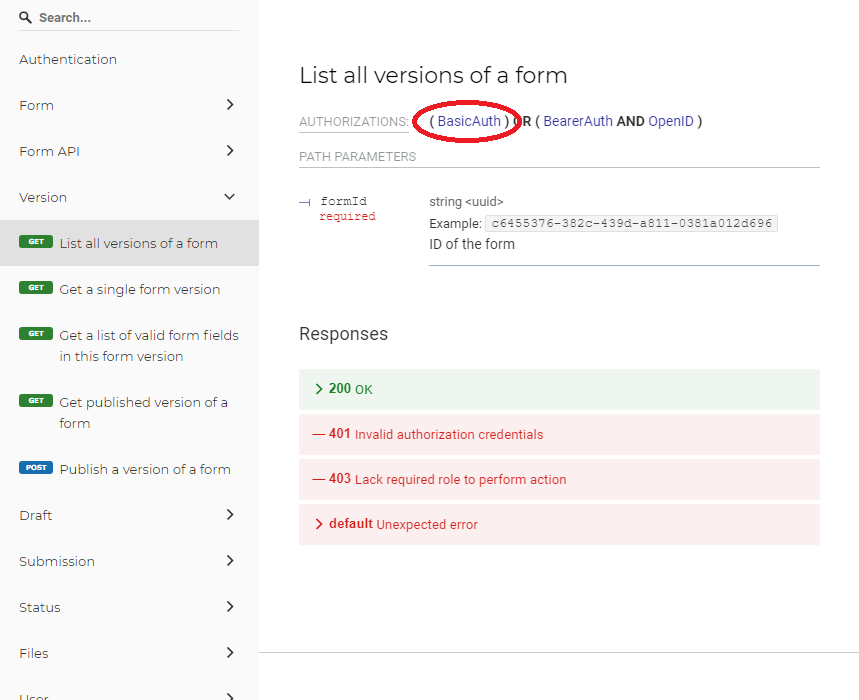

You can make calls to our endpoints using HTTP Basic Authentication.

For more information about Basic Authentication, see RFC 7617, section 2: https://datatracker.ietf.org/doc/html/rfc7617#section-2

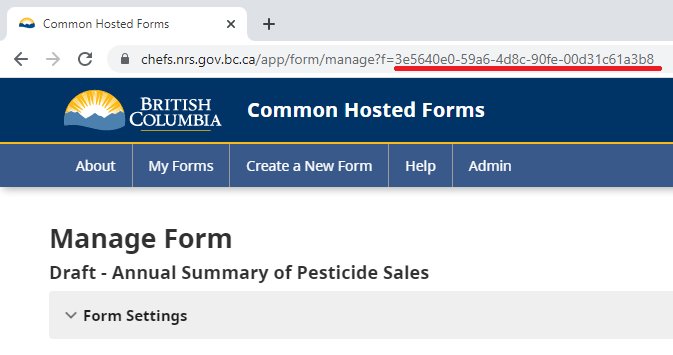

- Your username is your form's ID. You can find the form ID by going to your Form Settings page and copying it from the URL.

- Your password is your generated API key.
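As a minimal sketch using only the Python standard library, the functions below encode the form ID and API key as Basic credentials (base64 of `username:password`, as described in RFC 7617) and attach them to a request. The function names are hypothetical, and the URL you pass in is a placeholder: take the real base URL and endpoint paths from the CHEFS API specifications.

```python
import base64
import urllib.request


def basic_auth_header(form_id: str, api_key: str) -> str:
    """Build an RFC 7617 Basic credential: "Basic " + base64("<user-id>:<password>").

    For CHEFS, the user-id is the form ID and the password is the API key.
    """
    credentials = f"{form_id}:{api_key}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


def build_request(url: str, form_id: str, api_key: str) -> urllib.request.Request:
    """Create a GET request that carries the Authorization header.

    `url` is a placeholder; use an endpoint listed with the BasicAuth
    authorization type in the CHEFS API specifications.
    """
    request = urllib.request.Request(url, method="GET")
    request.add_header("Authorization", basic_auth_header(form_id, api_key))
    return request
```

To send the request, pass it to `urllib.request.urlopen(...)`, ideally inside a `with` block so the connection is closed afterwards. Most HTTP client libraries can also build this header for you from a username and password.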

You can only call the endpoints that are listed with the BasicAuth authorization type in our API specifications.

The CHEFS API uses rate limiting to cap the rate at which it accepts calls. This prevents malfunctioning code from accidentally overloading the API and affecting other users.

Below is a simplified example:

Request #1: When the API is called, it returns two rate-limiting HTTP headers:

HTTP/1.1 200 OK

RateLimit-Policy: 20;w=60

RateLimit: limit=20, remaining=19, reset=60

These correspond to:

- RateLimit-Policy: the maximum number of allowed requests is 20 in a 60-second window.

- RateLimit: the limit is 20, same as above. This is the first request, so there are 19 remaining in the window. The window resets in 60 seconds.
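If your application wants to slow itself down before it hits the limit, it can read these two headers. The hypothetical helpers below are a minimal sketch that only handles the simple format shown in the examples on this page (`limit=20, remaining=19, reset=60` and `20;w=60`); other formats or extra parameters may need more careful parsing.

```python
from typing import Dict, Optional, Tuple


def parse_ratelimit(value: str) -> Dict[str, int]:
    """Parse a RateLimit header such as 'limit=20, remaining=19, reset=60'."""
    fields: Dict[str, int] = {}
    for part in value.split(","):
        key, sep, raw = part.strip().partition("=")
        if sep:  # skip anything that is not a key=value pair
            fields[key] = int(raw)
    return fields


def parse_ratelimit_policy(value: str) -> Tuple[int, Optional[int]]:
    """Parse a RateLimit-Policy header such as '20;w=60' into (quota, window in seconds)."""
    quota, *params = value.split(";")
    window: Optional[int] = None
    for param in params:
        key, _, raw = param.strip().partition("=")
        if key == "w":
            window = int(raw)
    return int(quota.strip()), window
```

For the first response above, `parse_ratelimit` returns `{"limit": 20, "remaining": 19, "reset": 60}` and `parse_ratelimit_policy` returns `(20, 60)`.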

Request #2: If you wait 15 seconds and make another API call, the headers will be:

HTTP/1.1 200 OK

RateLimit-Policy: 20;w=60

RateLimit: limit=20, remaining=18, reset=45

These correspond to:

- RateLimit-Policy: unchanged from above; this will rarely change.

- RateLimit: the limit is 20, and again will rarely change. This is the second request within the window, so there are 18 remaining in the window. Since we waited 15 seconds after the first request, the window now resets in 45 seconds.

Sending Request #3 through Request #19 in quick succession gradually decreases both the remaining and reset values.

Request #20: Sending the next request within the window produces headers like:

HTTP/1.1 200 OK

RateLimit-Policy: 20;w=60

RateLimit: limit=20, remaining=0, reset=24

These correspond to:

- RateLimit: the limit is 20, and again will rarely change. This is the 20th request within the window, so there are 0 remaining in the window. The window now has only 24 seconds left before it resets.

Request #21: Sending another request before the window resets will produce headers like:

HTTP/1.1 429 Too Many Requests

RateLimit-Policy: 20;w=60

RateLimit: limit=20, remaining=0, reset=13

Retry-After: 13

These correspond to:

- RateLimit: the limit is 20, and again will rarely change. This is the 21st request within the window, so it fails with HTTP 429, and there are still 0 remaining in the window. The window now has only 13 seconds left before it resets.

- Retry-After: this is the same as the reset value in the RateLimit header (13), but is easier to use in code if you want to wait (and please do wait) before making the next call.
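A client can honour Retry-After by sleeping for that many seconds after an HTTP 429 and then trying again. The sketch below is one way to do this, not a CHEFS-provided library: `send` is a hypothetical callable you supply that performs the request and returns `(status, headers)`. It only understands Retry-After given in seconds, as in the example above, and falls back to waiting one second if the value is missing or in another form (such as an HTTP date).

```python
import time
from typing import Callable, Mapping, Tuple

Response = Tuple[int, Mapping[str, str]]  # (HTTP status, response headers)


def retry_after_seconds(headers: Mapping[str, str], default: float = 1.0) -> float:
    """Return Retry-After as seconds, or `default` if it is missing or not a number."""
    try:
        return float(headers.get("Retry-After", default))
    except (TypeError, ValueError):
        return default


def call_with_retry(send: Callable[[], Response], max_attempts: int = 3,
                    sleep: Callable[[float], None] = time.sleep) -> Response:
    """Call `send`, waiting Retry-After seconds and retrying after each HTTP 429.

    Gives up after `max_attempts` calls and returns the last response.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    status, headers = send()
    attempts = 1
    while status == 429 and attempts < max_attempts:
        sleep(retry_after_seconds(headers))
        status, headers = send()
        attempts += 1
    return status, headers
```

With `urllib`, note that `urllib.request.urlopen` raises `urllib.error.HTTPError` for a 429 response, so your `send` function would catch that exception and return `(error.code, error.headers)`. The `sleep` parameter exists so the waiting can be replaced in tests.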

Once the remaining 13 seconds in the window elapse, the counter is reset to 20 and API calls will again succeed.
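To make the arithmetic of the walkthrough concrete, here is a toy fixed-window counter that reproduces the header values above. It is an illustrative model only, assuming that the window starts at the first request and that the counter resets when the window ends; it is not the CHEFS server implementation, and the class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Result:
    status: int                        # HTTP status code
    remaining: int                     # "remaining" in the RateLimit header
    reset: int                         # "reset" in the RateLimit header (seconds)
    retry_after: Optional[int] = None  # Retry-After, only set on HTTP 429


class FixedWindowLimiter:
    """Toy model: at most `limit` requests per `window` seconds."""

    def __init__(self, limit: int, window: int) -> None:
        self.limit = limit
        self.window = window
        self.window_start: Optional[int] = None
        self.count = 0

    def hit(self, now: int) -> Result:
        # Start a new window on the first request or once the old one has ended
        if self.window_start is None or now >= self.window_start + self.window:
            self.window_start = now
            self.count = 0
        reset = self.window_start + self.window - now  # seconds left in the window
        if self.count >= self.limit:
            return Result(429, 0, reset, retry_after=reset)
        self.count += 1
        return Result(200, self.limit - self.count, reset)
```

Calling `hit` at t = 0 and t = 15 gives remaining 19 then 18, with reset 60 then 45. Once 20 requests have been accepted, a call at t = 36 reports reset 24, a call at t = 47 is rejected with reset and Retry-After both 13, and a call at t = 60 starts a fresh window.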

The example above is simplified. In practice, the API always runs in multiple containers, and the request count is (currently) not shared between them. For example, if the limit is 60 requests per minute and two containers are running, the worst case is 60 requests per minute (when every request reaches the same container), the best case is 120 per minute, and the actual rate will probably be close to, but under, 120.
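The small simulation below illustrates why the result lands between those bounds. It assumes, hypothetically, that the load balancer sends each request to a container chosen uniformly at random, that every request falls within the same window, and that each container keeps its own independent counter. Real routing may behave differently, so treat the numbers as an illustration rather than a prediction.

```python
import random


def accepted_before_first_429(limit_per_container: int, containers: int, seed: int = 0) -> int:
    """Count requests accepted within one window before the first HTTP 429.

    Assumes random routing and per-container counters that are not shared.
    """
    rng = random.Random(seed)
    counts = [0] * containers
    accepted = 0
    while True:
        target = rng.randrange(containers)  # container that receives this request
        if counts[target] >= limit_per_container:
            return accepted  # this request would be rejected with HTTP 429
        counts[target] += 1
        accepted += 1
```

With a limit of 60 and two containers, the result always lies between 60 and 120. The first 429 comes from a container that has already accepted 60 requests, while the other container has accepted at most 60 by that point.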