AndroidAv - aopacloud/aopa-rtc GitHub Wiki

Realize audio and video interaction

This article describes how to integrate the Opa real-time interactive SDK and build a simple real-time interactive app from scratch with a small amount of code. It applies to interactive live broadcast and video call scenarios.

First, you need to understand the following basic concepts about real-time audio and video interaction:

- Opa real-time interaction SDK: an SDK developed by Opa to help developers realize real-time audio and video interaction in the app.

- Channel: the channel used to transmit data. Users in the same channel can interact in real time.

- Anchor: You can **publish** audio and video in the channel, and also **subscribe to** audio and video published by other anchors.

- Audience: You can **subscribe to** audio and video in the channel, but do not have permission to **publish** audio and video.
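The difference between the two roles can be sketched as a small capability table. This is a minimal illustration only: `Capability`, `Role`, and `RoleSketch` are hypothetical names introduced here and are not types from the SDK.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical model for illustration only; these types are not part of the SDK.
enum Capability { PUBLISH, SUBSCRIBE }

enum Role {
    // An anchor can both publish and subscribe.
    ANCHOR(EnumSet.of(Capability.PUBLISH, Capability.SUBSCRIBE)),
    // An audience member can only subscribe.
    AUDIENCE(EnumSet.of(Capability.SUBSCRIBE));

    private final Set<Capability> capabilities;

    Role(Set<Capability> capabilities) {
        this.capabilities = capabilities;
    }

    boolean can(Capability capability) {
        return capabilities.contains(capability);
    }
}

class RoleSketch {
    public static void main(String[] args) {
        // Print what each role is allowed to do.
        for (Role role : Role.values()) {
            System.out.println(role
                    + " publish=" + role.can(Capability.PUBLISH)
                    + " subscribe=" + role.can(Capability.SUBSCRIBE));
        }
    }
}
```

In a real app the role is not modeled locally like this; it is the value passed to the SDK when joining or switching roles.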

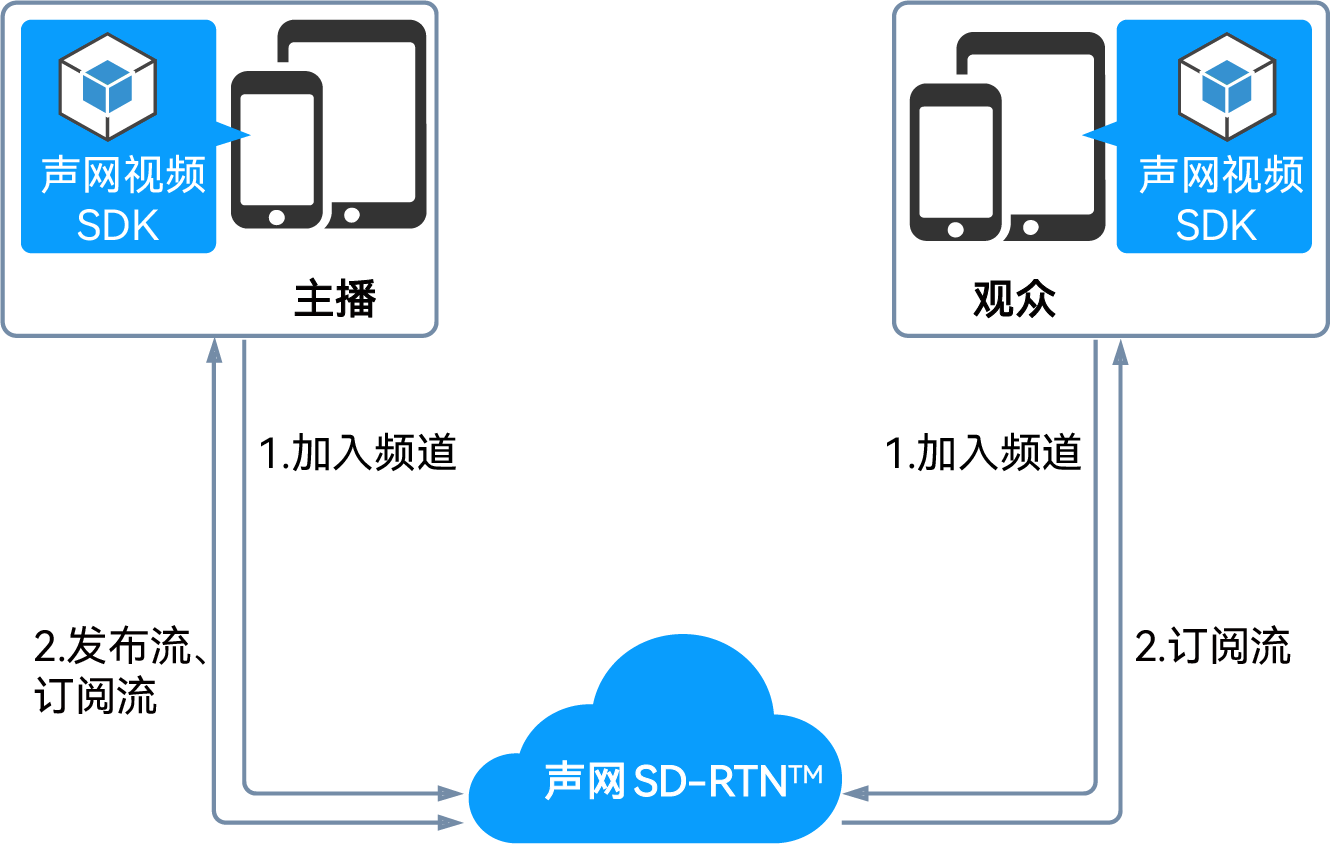

The following figure shows the basic workflow of realizing audio and video interaction in the app:

- All users call the 'joinChannel' method to join the channel and set user roles as needed:

- Interactive live broadcast: if the user needs to publish streams in the channel, set the role to anchor; if the user only needs to receive streams, set the role to audience.

- Video call: set every user's role to anchor.

- After joining the channel, users in different roles have different behaviors:

- All users can receive audio and video streams in the channel by default.

- The anchor can publish audio and video streams in the channel.

- If audience members need to send streams, they can call the 'setClientRole' method in the channel to change their user role to one that has permission to send streams.

Prerequisites

Before implementing the function, please prepare the development environment according to the following requirements:

- Android Studio 4.1 or above.

- Android API level 16 or above.

- Two mobile devices running Android 4.1 or above.

- A computer that can access the Internet. If a firewall is deployed in your network environment, refer to [Coping with firewall restrictions] to use the Opa service normally.

- A valid Opa account and Opa project. Please refer to [Opening Service] to obtain the following information from the Opa console:

- App ID: A string randomly generated by Opa to identify your project.

- Temporary Token: A token, also called a dynamic key, authenticates the user when the client joins the channel. The temporary token is valid for 24 hours.
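Because the temporary token is valid for only 24 hours, it can help to check its age before a test run. The following is a minimal sketch, assuming your app records when the token was generated; `TemporaryTokenClock` and `isExpired` are hypothetical names, not part of the SDK.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical helper for illustration; the SDK does not provide this class.
final class TemporaryTokenClock {
    // Validity window stated in this article for temporary tokens.
    static final Duration VALIDITY = Duration.ofHours(24);

    private TemporaryTokenClock() {}

    // Returns true once 24 hours or more have passed since the token was generated.
    static boolean isExpired(Instant issuedAt, Instant now) {
        return !now.isBefore(issuedAt.plus(VALIDITY));
    }

    public static void main(String[] args) {
        // Assumption: the generation time is something you noted down yourself.
        Instant issuedAt = Instant.parse("2024-01-01T00:00:00Z");
        System.out.println(isExpired(issuedAt, Instant.parse("2024-01-01T12:00:00Z")));
        System.out.println(isExpired(issuedAt, Instant.parse("2024-01-02T00:00:00Z")));
    }
}
```

If the check reports that the token has expired, generate a new temporary token in the console before joining the channel.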

Create project

This section describes how to create a project and add the permissions required to experience real-time interaction for the project.

- (Optional) Create a new project. See [Create a project](https://developer.android.com/studio/projects/create-project).

- Open **Android Studio** and select **New Project**.

- Select **Phone and Tablet > Empty Views Activity**, and click **Next**.

- Set the project name and storage path, select **Java** as the language, and click **Finish** to create an Android project.

Note

After the project is created, **Android Studio** automatically starts to sync Gradle. Wait until the sync succeeds before proceeding to the next step.

- Add network and device permissions.

Open the file '/app/src/main/AndroidManifest.xml', and add the following permissions after '':

XML

<!-- Required permissions -->
<uses-permission android:name="android.permission.INTERNET"/>
<!-- Optional permissions -->
<uses-permission android:name="android.permission.CAMERA"/>
<uses-permission android:name="android.permission.RECORD_AUDIO"/>
<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS"/>
<uses-permission android:name="android.permission.ACCESS_WIFI_STATE"/>
<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE"/>
<uses-permission android:name="android.permission.BLUETOOTH"/>
<!-- For devices running Android 12.0 or above with an SDK version below v4.1.0, add the following permission -->
<uses-permission android:name="android.permission.BLUETOOTH_CONNECT"/>
<!-- For devices running Android 12.0 or above, add the following permissions -->
<uses-permission android:name="android.permission.READ_PHONE_STATE"/>
<uses-permission android:name="android.permission.BLUETOOTH_SCAN"/>

- Prevent code obfuscation.

Open the '/app/proguard-rules.pro' file and add the following line to prevent the code of the Opa SDK from being obfuscated:

Java

-keep class io.aopa.**{*;}

Integration SDK

You can choose one of the following ways to integrate the Opa real-time interactive SDK.

- Integration via Maven Central

- Manual integration

- Open the 'settings.gradle' file in the root directory of the project, and add the Maven Central repository (skip this step if it is already present):

Java

repositories {
    ...
    mavenCentral()
    ...
}

Note

If your Android project has set [dependencyResolutionManagement](https://docs.gradle.org/current/userguide/declaring_repositories.html#sub:centralized-repository-declaration), the way you add the Maven Central dependency may differ.

- Open the '/app/build.gradle' file, and add the dependency on the Opa RTC SDK in 'dependencies'. You can find the latest SDK version in [Release Notes], and replace 'x.y.z' with the specific version number.

Java

...
dependencies {
    ...
    // Replace x.y.z with the specific SDK version number, e.g. 4.0.0 or 4.1.0-1
    implementation 'io.aopa.rtc:full-sdk:x.y.z'
}

- Download the latest version of the Android real-time interactive SDK from the [Download] page and unzip it.

- Open the unzipped folder and copy the following files or subfolders to your project path.

| File or subfolder | Project path |
| :-- | :-- |
| 'aopa-rtc-sdk.aar' file | '/app/libs/' |
| 'arm64-v8a' folder | '/app/src/main/jniLibs/' |
| 'armeabi-v7a' folder | '/app/src/main/jniLibs/' |

- On the left navigation bar of **Android Studio**, select the 'Project Files/app/libs/aopa-rtc-sdk.jar' file, right-click, and select 'add as a library' from the drop-down menu.

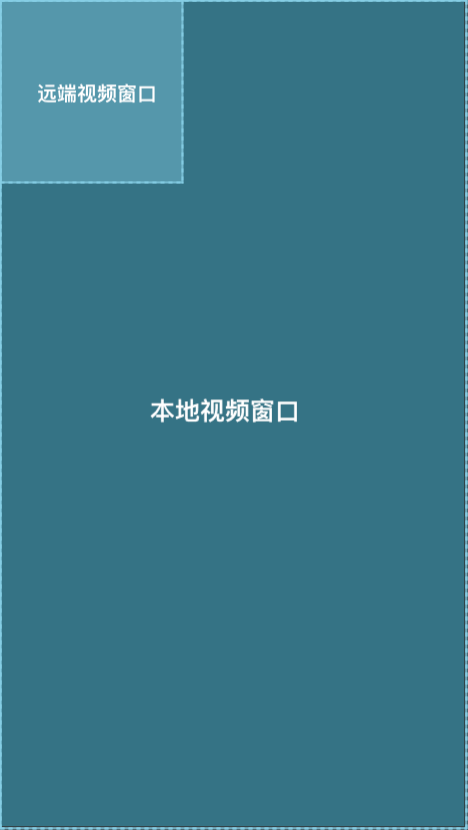

Create user interface

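Create two view containers in your project, one for the local video and one for the remote video, as your real-time audio and video scenario requires.

Copy the following code into the '/app/src/main/res/layout/activity_main.xml' file, replacing the original content, to quickly create the user interface. Note that the sample code in Implementation process looks up the view IDs 'local_video_view_container' and 'remote_video_view_container', which this layout does not define, and the two buttons reference 'onJoinClick' and 'onSingleClick' handlers that the sample 'MainActivity' does not implement. Add two 'FrameLayout' containers with those IDs, and the handlers if you keep the buttons, before running that code.

Create user interface sample code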

XML

<?xml version="1.0" encoding="utf-8"?>

<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<TextView

android:id="@+id/RoomIDTextView"

android:layout_width="60dp"

android:layout_height="30dp"

android:layout_marginLeft="50dp"

android:layout_marginTop="40dp"

android:text="Room ID"

android:textColor="@android:color/black" />

<EditText

android:id="@+id/RoomIdEditText"

android:layout_width="wrap_content"

android:layout_height="40dp"

android:layout_alignTop="@+id/RoomIDTextView"

android:layout_alignParentRight="true"

android:layout_marginTop="-6dp"

android:layout_marginRight="50dp"

android:layout_toRightOf="@+id/RoomIDTextView"

android:gravity="left"

android:inputType="text"

android:singleLine="true"

android:text="room123"

android:textColor="@android:color/black"

android:textSize="15dp" />

<TextView

android:id="@+id/AppIDTextView"

android:layout_width="60dp"

android:layout_height="30dp"

android:layout_below="@+id/RoomIDTextView"

android:layout_marginLeft="50dp"

android:layout_marginTop="4dp"

android:text="APP ID"

android:textColor="@android:color/black" />

<Spinner

android:id="@+id/AppIdSpinner"

android:layout_width="wrap_content"

android:layout_height="40dp"

android:layout_alignTop="@+id/AppIDTextView"

android:layout_alignParentRight="true"

android:layout_marginTop="-6dp"

android:layout_marginRight="50dp"

android:layout_toRightOf="@+id/AppIDTextView"

android:gravity="center"

android:textSize="15dp" />

<TextView

android:id="@+id/UserIdTextView"

android:layout_width="60dp"

android:layout_height="30dp"

android:layout_below="@+id/AppIDTextView"

android:layout_marginLeft="50dp"

android:layout_marginTop="4dp"

android:text="User ID"

android:textColor="@android:color/black" />

<EditText

android:id="@+id/UserIdEditText"

android:layout_width="wrap_content"

android:layout_height="40dp"

android:layout_alignTop="@+id/UserIdTextView"

android:layout_alignParentRight="true"

android:layout_marginTop="-6dp"

android:layout_marginRight="50dp"

android:layout_toRightOf="@+id/UserIdTextView"

android:gravity="left"

android:inputType="text"

android:singleLine="true"

android:text=""

android:textColor="@android:color/black"

android:textSize="15dp" />

<TextView

android:id="@+id/ServerAddrTextView"

android:layout_width="60dp"

android:layout_height="30dp"

android:layout_below="@+id/UserIdTextView"

android:layout_marginLeft="50dp"

android:layout_marginTop="4dp"

android:text="Environment"

android:textColor="@android:color/black"

android:visibility="visible"

tools:visibility="visible" />

<Spinner

android:id="@+id/ServerSpinner"

android:layout_width="wrap_content"

android:layout_height="40dp"

android:layout_alignTop="@+id/ServerAddrTextView"

android:layout_alignParentRight="true"

android:layout_marginTop="-6dp"

android:layout_marginRight="50dp"

android:layout_toRightOf="@+id/ServerAddrTextView"

android:gravity="center"

android:textSize="15dp"

android:visibility="visible" />

<TextView

android:id="@+id/AudioQualityTextView"

android:textColor="@android:color/black"

android:layout_width="60dp"

android:layout_height="30dp"

android:layout_below="@+id/ServerAddrTextView"

android:layout_marginLeft="50dp"

android:layout_marginTop="4dp"

android:text="Audio quality" />

<Spinner

android:id="@+id/AudioQualitySpinner"

android:layout_width="wrap_content"

android:layout_height="30dp"

android:layout_alignTop="@+id/AudioQualityTextView"

android:layout_alignParentRight="true"

android:layout_marginTop="-6dp"

android:layout_marginRight="50dp"

android:layout_toRightOf="@+id/AudioQualityTextView"

android:gravity="center"

android:textSize="15dp" />

<TextView

android:id="@+id/ScenarioTextView"

android:textColor="@android:color/black"

android:layout_width="60dp"

android:layout_height="30dp"

android:layout_below="@+id/AudioQualityTextView"

android:layout_marginLeft="50dp"

android:layout_marginTop="4dp"

android:text="Scenario" />

<Spinner

android:id="@+id/ScenarioSpinner"

android:layout_width="wrap_content"

android:layout_height="30dp"

android:layout_toRightOf="@+id/ScenarioTextView"

android:layout_alignTop="@+id/ScenarioTextView"

android:layout_alignParentRight="true"

android:layout_marginRight="50dp"

android:layout_marginTop="-6dp"

android:textSize="15dp"

android:gravity="center" />

<TextView

android:id="@+id/RoleTextView"

android:layout_width="60dp"

android:layout_height="30dp"

android:layout_below="@+id/ScenarioTextView"

android:layout_marginLeft="50dp"

android:layout_marginTop="4dp"

android:text="Role"

android:textColor="@android:color/black" />

<Spinner

android:id="@+id/RoleSpinner"

android:layout_width="wrap_content"

android:layout_height="40dp"

android:layout_alignTop="@+id/RoleTextView"

android:layout_alignParentRight="true"

android:layout_marginTop="-6dp"

android:layout_marginRight="50dp"

android:layout_toRightOf="@+id/RoleTextView"

android:gravity="center"

android:textSize="15dp" />

<TextView

android:id="@+id/ResolutionTextView"

android:layout_width="wrap_content"

android:layout_height="30dp"

android:layout_below="@+id/RoleTextView"

android:layout_marginLeft="50dp"

android:layout_marginTop="4dp"

android:text="Capture resolution"

android:textColor="@android:color/black" />

<Spinner

android:id="@+id/ResolutionSpinner"

android:layout_width="wrap_content"

android:layout_height="40dp"

android:layout_alignTop="@+id/ResolutionTextView"

android:layout_alignParentRight="true"

android:layout_marginTop="-6dp"

android:layout_marginRight="50dp"

android:layout_toRightOf="@+id/ResolutionTextView"

android:gravity="center"

android:textSize="15dp" />

<TextView

android:id="@+id/CustomServerTextView"

android:layout_width="80dp"

android:layout_height="30dp"

android:layout_below="@+id/ResolutionSpinner"

android:layout_marginLeft="50dp"

android:layout_marginTop="4dp"

android:text="Custom server"

android:textColor="@android:color/black" />

<EditText

android:id="@+id/CustomServerEditText"

android:layout_width="wrap_content"

android:layout_height="40dp"

android:layout_alignTop="@+id/CustomServerTextView"

android:layout_alignParentRight="true"

android:layout_marginTop="-6dp"

android:layout_marginRight="50dp"

android:layout_toRightOf="@+id/CustomServerTextView"

android:gravity="left"

android:inputType="text"

android:singleLine="true"

android:text=""

android:textColor="@android:color/black"

android:textSize="15dp"

android:tooltipText="Enter server IP"

android:visibility="invisible"

tools:visibility="visible" />

<CheckBox

android:id="@+id/TokenCheckBox"

android:layout_width="120dp"

android:layout_height="30dp"

android:layout_below="@+id/CustomServerTextView"

android:layout_marginLeft="50dp"

android:layout_marginTop="10dp"

android:text="Token check" />

<CheckBox

android:id="@+id/StatsCheckBox"

android:layout_width="120dp"

android:layout_height="30dp"

android:layout_alignTop="@+id/TokenCheckBox"

android:layout_marginLeft="10dp"

android:layout_marginTop="0dp"

android:layout_toRightOf="@+id/TokenCheckBox"

android:text="Statistics" />

<CheckBox

android:id="@+id/SpeakerCheckBox"

android:layout_width="120dp"

android:layout_height="30dp"

android:layout_below="@+id/TokenCheckBox"

android:layout_marginLeft="50dp"

android:layout_marginTop="10dp"

android:text="Speaker output" />

<CheckBox

android:id="@+id/QuicCheckBox"

android:layout_width="120dp"

android:layout_height="30dp"

android:layout_alignTop="@+id/SpeakerCheckBox"

android:layout_marginLeft="10dp"

android:layout_marginTop="0dp"

android:layout_toRightOf="@+id/InearCheckBox"

android:text="QUIC" />

<CheckBox

android:id="@+id/InearCheckBox"

android:layout_width="120dp"

android:layout_height="30dp"

android:layout_below="@+id/SpeakerCheckBox"

android:layout_marginLeft="50dp"

android:layout_marginTop="10dp"

android:text="In-ear monitor" />

<CheckBox

android:id="@+id/ReverbCheckBox"

android:layout_width="120dp"

android:layout_height="30dp"

android:layout_alignTop="@+id/InearCheckBox"

android:layout_marginLeft="10dp"

android:layout_marginTop="0dp"

android:layout_toRightOf="@+id/InearCheckBox"

android:text="Reverb" />

<CheckBox

android:id="@+id/AAACheckBox"

android:layout_width="120dp"

android:layout_height="30dp"

android:layout_below="@+id/InearCheckBox"

android:layout_marginLeft="50dp"

android:layout_marginTop="5dp"

android:text="3A processing" />

<CheckBox

android:id="@+id/VideoCheckBox"

android:layout_width="120dp"

android:layout_height="30dp"

android:layout_below="@+id/InearCheckBox"

android:layout_alignLeft="@+id/ReverbCheckBox"

android:layout_marginTop="5dp"

android:text="Enable video" />

<CheckBox

android:id="@+id/MulticastCheckBox"

android:layout_width="120dp"

android:layout_height="30dp"

android:layout_below="@+id/AAACheckBox"

android:layout_marginLeft="50dp"

android:layout_marginTop="5dp"

android:text="Dual-stream video" />

<CheckBox

android:id="@+id/DetachCheckBox"

android:layout_width="120dp"

android:layout_height="30dp"

android:layout_below="@+id/VideoCheckBox"

android:layout_alignLeft="@+id/VideoCheckBox"

android:layout_marginTop="5dp"

android:text="Separate push/pull" />

<Button

android:id="@+id/RoomBnt"

android:layout_width="120dp"

android:layout_height="48dp"

android:layout_below="@id/MulticastCheckBox"

android:layout_centerHorizontal="true"

android:layout_marginTop="20dp"

android:onClick="onJoinClick"

android:text="Group video" />

<Button

android:id="@+id/SingleBnt"

android:layout_width="120dp"

android:layout_height="48dp"

android:layout_below="@id/RoomBnt"

android:layout_centerHorizontal="true"

android:layout_marginTop="5dp"

android:onClick="onSingleClick"

android:text="Single video" />

<TextView

android:id="@+id/VersionText"

android:layout_width="60dp"

android:layout_height="20dp"

android:singleLine="true"

android:text=""

android:textSize="14dp"

android:layout_alignParentRight="true"

android:layout_alignParentBottom="true" />

</RelativeLayout>

Implementation process

This section describes how to implement a real-time audio and video interactive app. You can first copy the complete sample code into your project to quickly experience the basic functions of real-time audio and video interaction, and then follow the implementation steps to understand the core API calls.

The following figure shows the basic process of using OPA RTC SDK to achieve audio and video interaction:

The complete code for the basic real-time interaction flow is listed below for reference. Copy the following code into the '/app/src/main/java/com/example/<projectname>/MainActivity.java' file, replacing everything after the 'package com.example.<projectname>' line, to quickly try out the basic functions of real-time interaction.

Info

In the 'appId', 'token' and 'channelName' fields, pass in the App ID and temporary token you obtained from the console, and the channel name you entered when generating the temporary token.

Example code for real-time audio and video interaction

Java

import android.Manifest;

import android.content.pm.PackageManager;

import android.os.Bundle;

import android.view.SurfaceView;

import android.widget.FrameLayout;

import android.widget.Toast;

import androidx.annotation.NonNull;

import androidx.appcompat.app.AppCompatActivity;

import androidx.core.app.ActivityCompat;

import androidx.core.content.ContextCompat;

import org.banban.rtc.ChannelMediaOptions;

import org.banban.rtc.Constants;

import org.banban.rtc.IRtcEngineEventHandler;

import org.banban.rtc.RtcEngine;

import org.banban.rtc.RtcEngineConfig;

import org.banban.rtc.video.VideoCanvas;

public class MainActivity extends AppCompatActivity {

private String appId = "<#Your App ID#>";

private String channelName = "<#Your channel name#>";

private String token = "<#Your Token#>";

private RtcEngine mRtcEngine;

private final IRtcEngineEventHandler mRtcEventHandler = new IRtcEngineEventHandler() {

@Override

public void onJoinChannelSuccess(String channel, int uid, int elapsed) {

super.onJoinChannelSuccess(channel, uid, elapsed);

runOnUiThread(() -> {

Toast.makeText(MainActivity.this, "Join channel success", Toast.LENGTH_SHORT).show();

});

}

@Override

public void onUserJoined(int uid, int elapsed) {

runOnUiThread(() -> {

setupRemoteVideo(uid);

});

}

@Override

public void onUserOffline(int uid, int reason) {

super.onUserOffline(uid, reason);

runOnUiThread(() -> {

Toast.makeText(MainActivity.this, "User offline: " + uid, Toast.LENGTH_SHORT).show();

});

}

};

private void initializeAndJoinChannel() {

try {

RtcEngineConfig config = new RtcEngineConfig();

config.mContext = getBaseContext();

config.mAppId = appId;

config.mEventHandler = mRtcEventHandler;

mRtcEngine = RtcEngine.create(config);

} catch (Exception e) {

throw new RuntimeException("Failed to create RtcEngine", e);

}

mRtcEngine.enableVideo();

FrameLayout container = findViewById(R.id.local_video_view_container);

SurfaceView surfaceView = new SurfaceView (getBaseContext());

container.addView(surfaceView);

mRtcEngine.setupLocalVideo(new VideoCanvas(surfaceView, VideoCanvas.RENDER_MODE_FIT, 0));

mRtcEngine.startPreview();

ChannelMediaOptions options = new ChannelMediaOptions();

options.clientRoleType = Constants.CLIENT_ROLE_BROADCASTER;

options.channelProfile = Constants.CHANNEL_PROFILE_LIVE_BROADCASTING;

options.publishMicrophoneTrack = true;

options.publishCameraTrack = true;

options.autoSubscribeAudio = true;

options.autoSubscribeVideo = true;

mRtcEngine.joinChannel(token, channelName, 0, options);

}

private void setupRemoteVideo(int uid) {

FrameLayout container = findViewById(R.id.remote_video_view_container);

SurfaceView surfaceView = new SurfaceView (getBaseContext());

surfaceView.setZOrderMediaOverlay(true);

container.addView(surfaceView);

mRtcEngine.setupRemoteVideo(new VideoCanvas(surfaceView, VideoCanvas.RENDER_MODE_FIT, uid));

}

private static final int PERMISSION_REQ_ID = 22;

private String[] getRequiredPermissions(){

if (android.os.Build.VERSION.SDK_INT >= android.os.Build.VERSION_CODES.S) {

return new String[]{

Manifest.permission.RECORD_AUDIO,

Manifest.permission.CAMERA,

Manifest.permission.READ_PHONE_STATE,

Manifest.permission.BLUETOOTH_CONNECT

};

} else {

return new String[]{

Manifest.permission.RECORD_AUDIO,

Manifest.permission.CAMERA

};

}

}

private boolean checkPermissions() {

for (String permission : getRequiredPermissions()) {

int permissionCheck = ContextCompat.checkSelfPermission(this, permission);

if (permissionCheck != PackageManager.PERMISSION_GRANTED) {

return false;

}

}

return true;

}

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

if (checkPermissions()) {

initializeAndJoinChannel();

} else {

ActivityCompat.requestPermissions(this, getRequiredPermissions(), PERMISSION_REQ_ID);

}

}

@Override

public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {

super.onRequestPermissionsResult(requestCode, permissions, grantResults);

if (checkPermissions()) {

initializeAndJoinChannel();

}

}

@Override

protected void onDestroy() {

super.onDestroy();

if (mRtcEngine != null) {

mRtcEngine.stopPreview();

mRtcEngine.leaveChannel();

mRtcEngine = null;

RtcEngine.destroy();

}

}

}

Process permission request

This section describes how to import Android related classes and obtain Android device camera, recording and other permissions.

- Import Android-related classes

Java

import android.Manifest;
import android.content.pm.PackageManager;
import android.os.Bundle;
import android.view.SurfaceView;
import android.widget.FrameLayout;
import android.widget.Toast;
import androidx.annotation.NonNull;
import androidx.appcompat.app.AppCompatActivity;
import androidx.core.app.ActivityCompat;
import androidx.core.content.ContextCompat;

- Get Android permissions

When starting the application, check whether the permissions required for real-time interaction have been granted in the App.

Java

private static final int PERMISSION_REQ_ID = 22;

// Returns the runtime permissions to request for the current Android version.
private String[] getRequiredPermissions() {
    if (android.os.Build.VERSION.SDK_INT >= android.os.Build.VERSION_CODES.S) {
        // Android 12 (API level 31) and above
        return new String[]{
            Manifest.permission.RECORD_AUDIO,
            Manifest.permission.CAMERA,
            Manifest.permission.READ_PHONE_STATE,
            Manifest.permission.BLUETOOTH_CONNECT
        };
    } else {
        return new String[]{
            Manifest.permission.RECORD_AUDIO,
            Manifest.permission.CAMERA
        };
    }
}

// Returns true only if every required permission has been granted.
private boolean checkPermissions() {
    for (String permission : getRequiredPermissions()) {
        int permissionCheck = ContextCompat.checkSelfPermission(this, permission);
        if (permissionCheck != PackageManager.PERMISSION_GRANTED) {
            return false;
        }
    }
    return true;
}
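When a permission check fails, it is often useful to know which permissions are still missing. The sketch below restates the same version rule in plain Java so it can run outside Android; `PermissionPlanner`, `required`, and `missing` are hypothetical names, and the granted set is supplied by the caller rather than read from the system.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical helper for illustration; it does not call any Android or SDK API.
final class PermissionPlanner {
    // API level of Android 12, the value of Build.VERSION_CODES.S.
    static final int API_LEVEL_ANDROID_12 = 31;

    private PermissionPlanner() {}

    // Mirrors getRequiredPermissions(): two base permissions, two more from Android 12.
    static List<String> required(int sdkInt) {
        List<String> result = new ArrayList<>(Arrays.asList(
                "android.permission.RECORD_AUDIO",
                "android.permission.CAMERA"));
        if (sdkInt >= API_LEVEL_ANDROID_12) {
            result.add("android.permission.READ_PHONE_STATE");
            result.add("android.permission.BLUETOOTH_CONNECT");
        }
        return result;
    }

    // Returns the required permissions that are not in the granted set, in order.
    static List<String> missing(int sdkInt, Set<String> granted) {
        List<String> missing = new ArrayList<>();
        for (String permission : required(sdkInt)) {
            if (!granted.contains(permission)) {
                missing.add(permission);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Assumption: only the camera permission has been granted so far.
        Set<String> granted = new HashSet<>(Arrays.asList("android.permission.CAMERA"));
        System.out.println(missing(30, granted));
        System.out.println(missing(31, granted));
    }
}
```

In the app itself, the granted set would come from 'ContextCompat.checkSelfPermission', as in 'checkPermissions' above.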

Import Opa-related classes

Import the classes and interfaces related to the Opa RTC SDK:

Java

import org.banban.rtc.ChannelMediaOptions;

import org.banban.rtc.Constants;

import org.banban.rtc.IRtcEngineEventHandler;

import org.banban.rtc.RtcEngine;

import org.banban.rtc.RtcEngineConfig;

import org.banban.rtc.video.VideoCanvas;

Define App ID and Token

Pass in the App ID and temporary token obtained from the Opa console, and the channel name you entered when generating the temporary token. They are used later to initialize the engine and join the channel.

Java

// Fill in the App ID obtained from the Opa console

private String appId = "<#Your App ID#>";

// Fill in the channel name

private String channelName = "<#Your channel name#>";

// Fill in the temporary token generated in the Opa console

private String token = "<#Your Token#>";

Initialize the engine

Call the 'create' method to initialize the 'RtcEngine'.

Note

Before initializing the SDK, ensure that the end user has fully understood and agreed to the relevant privacy policy.

Java

private RtcEngine mRtcEngine;

private final IRtcEngineEventHandler mRtcEventHandler = new IRtcEngineEventHandler() {

...};

RtcEngineConfig config = new RtcEngineConfig();

config.mContext = getBaseContext();

config.mAppId = appId;

config.mEventHandler = mRtcEventHandler;

mRtcEngine = RtcEngine.create(config);

Enable video module

Follow these steps to enable the video module:

- Call the 'enableVideo' method to enable the video module.

- Call the 'setupLocalVideo' method to initialize the local view and set the local video display properties.

- Call the 'startPreview' method to open the local video preview.

Java

// Enable the video module, set up the local view, then start the local preview.

mRtcEngine.enableVideo();

FrameLayout container = findViewById(R.id.local_video_view_container);

SurfaceView surfaceView = new SurfaceView (getBaseContext());

container.addView(surfaceView);

mRtcEngine.setupLocalVideo(new VideoCanvas(surfaceView, VideoCanvas.RENDER_MODE_FIT, 0));

mRtcEngine.startPreview();

Join the channel and publish the audio and video stream

Call 'joinChannel' to join the channel. Configure the following in 'ChannelMediaOptions':

- Set the channel scenario to 'BROADCASTING' and set the user role to 'BROADCASTER' or 'AUDIENCE'.

- Set 'publishMicrophoneTrack' and 'publishCameraTrack' to 'true' to publish the audio collected by the microphone and the video collected by the camera.

- Set 'autoSubscribeAudio' and 'autoSubscribeVideo' to 'true' to automatically subscribe to all audio and video streams.

Java

ChannelMediaOptions options = new ChannelMediaOptions();

options.clientRoleType = Constants.CLIENT_ROLE_BROADCASTER;

options.channelProfile = Constants.CHANNEL_PROFILE_LIVE_BROADCASTING;

options.publishMicrophoneTrack = true;

options.publishCameraTrack = true;

options.autoSubscribeAudio = true;

options.autoSubscribeVideo = true;

mRtcEngine.joinChannel(token, channelName, 0, options);
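The example above joins as a broadcaster. As a minimal sketch of how the same fields might be chosen per role, the following plain-Java stand-in sets the publish flags only for a broadcaster, on the assumption that an audience member should not publish. `JoinOptionsSketch` and its `ClientRole` enum are hypothetical; only the field names are taken from the 'ChannelMediaOptions' usage in this article.

```java
// Hypothetical stand-in for illustration; this is not the SDK's ChannelMediaOptions.
final class JoinOptionsSketch {
    enum ClientRole { BROADCASTER, AUDIENCE }

    // Field names mirror the ChannelMediaOptions fields used in this article.
    ClientRole clientRoleType;
    boolean publishMicrophoneTrack;
    boolean publishCameraTrack;
    boolean autoSubscribeAudio;
    boolean autoSubscribeVideo;

    // Builds options for a role: only a broadcaster publishes, everyone subscribes.
    static JoinOptionsSketch forRole(ClientRole role) {
        JoinOptionsSketch options = new JoinOptionsSketch();
        boolean canPublish = role == ClientRole.BROADCASTER;
        options.clientRoleType = role;
        options.publishMicrophoneTrack = canPublish;
        options.publishCameraTrack = canPublish;
        options.autoSubscribeAudio = true;
        options.autoSubscribeVideo = true;
        return options;
    }

    public static void main(String[] args) {
        JoinOptionsSketch audience = forRole(ClientRole.AUDIENCE);
        System.out.println("audience publishes camera: " + audience.publishCameraTrack);
        JoinOptionsSketch anchor = forRole(ClientRole.BROADCASTER);
        System.out.println("broadcaster publishes camera: " + anchor.publishCameraTrack);
    }
}
```

Deriving all flags from the role in one place keeps the role and the publish settings from drifting apart when the role is changed later.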

Set remote view

Call the 'setupRemoteVideo' method to initialize the remote user view, and set the local display properties of the remote user's view. You can obtain the 'uid' of the remote user through the 'onUserJoined' callback.

Java

private void setupRemoteVideo(int uid) {

FrameLayout container = findViewById(R.id.remote_video_view_container);

SurfaceView surfaceView = new SurfaceView (getBaseContext());

surfaceView.setZOrderMediaOverlay(true);

container.addView(surfaceView);

mRtcEngine.setupRemoteVideo(new VideoCanvas(surfaceView, VideoCanvas.RENDER_MODE_FIT, uid));

}

Implement common callback

Define the callbacks you need for your scenario. The following example code shows how to implement the 'onJoinChannelSuccess', 'onUserJoined' and 'onUserOffline' callbacks.

Java

@Override

public void onJoinChannelSuccess(String channel, int uid, int elapsed) {

super.onJoinChannelSuccess(channel, uid, elapsed);

runOnUiThread(() -> {

Toast.makeText(MainActivity.this, "Join channel success", Toast.LENGTH_SHORT).show();

});

}

@Override

public void onUserJoined(int uid, int elapsed) {

runOnUiThread(() -> {

setupRemoteVideo(uid);

});

}

@Override

public void onUserOffline(int uid, int reason) {

super.onUserOffline(uid, reason);

runOnUiThread(() -> {

Toast.makeText(MainActivity.this, "User offline: " + uid, Toast.LENGTH_SHORT).show();

});

}
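The callbacks above only show a toast when a user goes offline. One way to decide when a remote view should be created or removed is to track the remote user IDs reported by the callbacks. The following is a minimal sketch in plain Java; `RemoteUserTracker` is a hypothetical class, and its methods are meant to be called from the corresponding callbacks rather than being SDK callbacks themselves.

```java
import java.util.Collections;
import java.util.LinkedHashSet;
import java.util.Set;

// Hypothetical helper for illustration; not part of the SDK.
final class RemoteUserTracker {
    // Remote user IDs currently in the channel, in join order.
    private final Set<Integer> remoteUids = new LinkedHashSet<>();

    // Call from onUserJoined; returns true if this uid is new and needs a view.
    synchronized boolean onUserJoined(int uid) {
        return remoteUids.add(uid);
    }

    // Call from onUserOffline; returns true if the uid was tracked and its view can be removed.
    synchronized boolean onUserOffline(int uid) {
        return remoteUids.remove(uid);
    }

    // Returns a read-only copy of the tracked user IDs.
    synchronized Set<Integer> snapshot() {
        return Collections.unmodifiableSet(new LinkedHashSet<>(remoteUids));
    }

    public static void main(String[] args) {
        RemoteUserTracker tracker = new RemoteUserTracker();
        tracker.onUserJoined(7);
        tracker.onUserJoined(9);
        tracker.onUserOffline(7);
        System.out.println(tracker.snapshot());
    }
}
```

The methods are synchronized because callbacks may arrive on a thread other than the UI thread, which is also why the example code wraps UI work in 'runOnUiThread'.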

Start audio and video interaction

Call the following methods in 'onCreate' to load the interface layout, check whether the app has obtained the permissions required for real-time interaction, and join the channel to start audio and video interaction.

Java

@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.activity_main);
    // Join right away if the permissions are already granted; otherwise request them.
    if (checkPermissions()) {
        initializeAndJoinChannel();
    } else {
        ActivityCompat.requestPermissions(this, getRequiredPermissions(), PERMISSION_REQ_ID);
    }
}

@Override
public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {
    super.onRequestPermissionsResult(requestCode, permissions, grantResults);
    // Join once the user has granted all required permissions.
    if (checkPermissions()) {
        initializeAndJoinChannel();
    }
}

End audio and video interaction

Follow these steps to end the audio/video interaction:

- Call 'stopPreview' to stop the video preview.

- Call 'leaveChannel' to leave the current channel and release all session-related resources.

- Call 'destroy' to destroy the engine and release all resources used by the Opa SDK.

Warning

After calling 'destroy', you can no longer use any SDK methods or callbacks. To use real-time audio and video interaction again, you must create a new engine. See [Initialize the engine].

Java

@Override
protected void onDestroy() {
    super.onDestroy();
    if (mRtcEngine != null) {
        mRtcEngine.stopPreview();
        mRtcEngine.leaveChannel();
        mRtcEngine = null;
        RtcEngine.destroy();
    }
}
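The teardown order and the rule that nothing may be called after 'destroy' can be sketched with a small stand-in object. `TeardownSketch` is hypothetical and only records calls; how the real engine reacts to calls after 'destroy' is not shown here and may differ.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for illustration; it records calls instead of driving a real engine.
final class TeardownSketch {
    private final List<String> calls = new ArrayList<>();
    private boolean destroyed;

    void stopPreview() {
        record("stopPreview");
    }

    void leaveChannel() {
        record("leaveChannel");
    }

    void destroy() {
        record("destroy");
        destroyed = true;
    }

    // Rejects any call made after destroy, then logs the call name.
    private void record(String name) {
        if (destroyed) {
            throw new IllegalStateException(name + " called after destroy");
        }
        calls.add(name);
    }

    List<String> calls() {
        return calls;
    }

    public static void main(String[] args) {
        TeardownSketch engine = new TeardownSketch();
        // Same order as the steps above.
        engine.stopPreview();
        engine.leaveChannel();
        engine.destroy();
        System.out.println(engine.calls());
    }
}
```

Guarding against use after 'destroy' in your own wrapper, as sketched here, can surface lifecycle mistakes early during development.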

Test App

Follow the steps below to test the live app:

- Enable developer options on the Android device, turn on USB debugging, connect the device to the computer with a USB cable, and select your Android device in the Android device options.

- In Android Studio, click ![Picture](https://web-cdn.agora.io/docs-files/1689672727614) (**Sync Project with Gradle Files**).

- After the sync succeeds, click ![Picture](https://web-cdn.agora.io/docs-files/1687670569781) (**Run 'app'**) to start compiling. After a moment, the app is installed on your Android device.

- Start the app and grant recording and camera permissions. If you set the user role to anchor, you will see yourself in the local view.

- On a second Android device, repeat the steps above to install the app, open it to join the channel, and observe the test results:

- If both devices join the channel as anchors, the users can see and hear each other.

- If one device joins as an anchor and the other as audience, the anchor sees themselves in the local video window; the audience member sees the anchor in the remote video window and hears the anchor's voice.

Next Steps

After implementing audio and video interaction, you can read the following documents to learn more:

- The example in this article uses a temporary token to join the channel. In a test or production environment, Opa recommends obtaining tokens from your server to keep communication secure. For details, refer to [Using Token Authentication].

- To implement fast live broadcasting scenarios, you can set the audience latency level to low latency ('AUDIENCE_LATENCY_LEVEL_LOW_LATENCY') on top of real-time audio and video interaction. For details, refer to [Achieve Fast Live Broadcasting].

Related information

This section provides additional information for reference.

Sample project

Opa provides an open source sample project for real-time audio and video interaction. You can download it or view the source code at the following location.

- GitHub: AopaRtcAndroid