IosAv - aopacloud/aopa-rtc GitHub Wiki

Implement audio and video interaction

This article describes how to integrate the Opa real-time interaction SDK and build a simple real-time interactive app from scratch with a small amount of code. The approach is suitable for interactive live broadcast and video call scenarios.

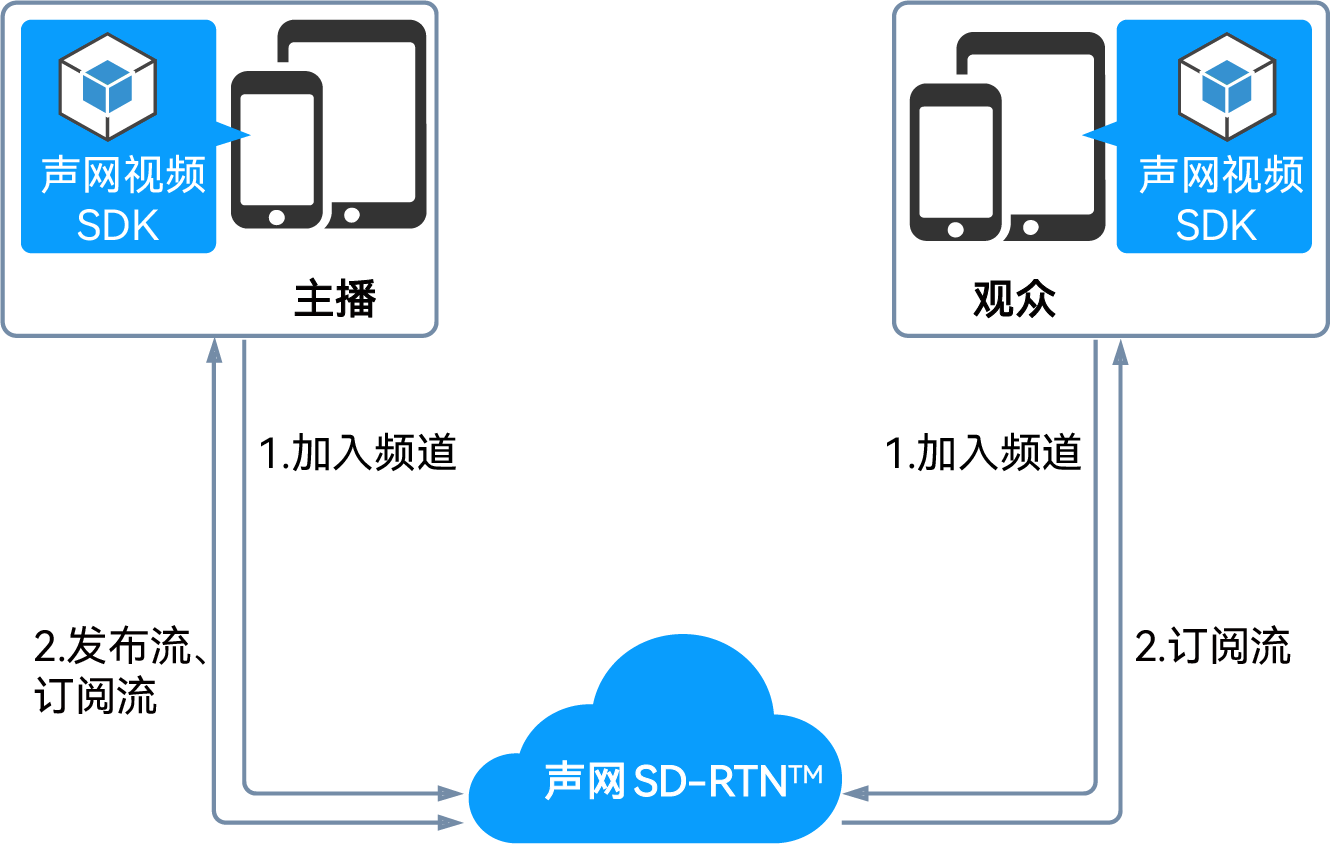

First, you need to understand the following basic concepts about real-time audio and video interaction:

- Opa real-time interaction SDK: an SDK developed by Opa to help developers realize real-time audio and video interaction in the app.

- Channel: the channel used to transmit data. Users in the same channel can interact in real time.

- Anchor: a user who can **publish** audio and video in the channel and can also **subscribe to** audio and video published by other anchors.

- Audience: a user who can **subscribe to** audio and video in the channel but does not have permission to **publish** audio and video.

The following figure shows the basic workflow of realizing audio and video interaction in the app:

- All users call the `joinChannel` method to join the channel and set their user role as needed:

  - Interactive live broadcast: if the user needs to publish streams in the channel, set the role to anchor; if the user only needs to receive streams, set the role to audience.

  - Video call: set all users as anchors.

- After joining the channel, users in different roles behave differently:

  - All users can receive audio and video streams in the channel by default.

  - The anchor can publish audio and video streams in the channel.

  - If an audience member needs to publish streams, call the `setClientRole` method while in the channel to change the user role so that the user has permission to publish streams.
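The role rules above can be summarized in a small model. This is only a sketch: `UserRole`, `Scenario`, and `initialRole(for:)` are hypothetical names introduced for illustration and are not part of the SDK. Assigning a new value to `role` stands in for the role change that the article performs with `setClientRole`.

```swift
// Hypothetical model of the two user roles; these types are NOT SDK API.
enum UserRole {
    case anchor    // may publish and subscribe
    case audience  // may only subscribe

    var canPublish: Bool { self == .anchor }
    var canSubscribe: Bool { true } // every user receives streams by default
}

// Hypothetical description of the two usage scenarios in this article.
enum Scenario {
    case interactiveLive(needsToPublish: Bool)
    case videoCall
}

/// Picks the role to join with, following the workflow described above.
func initialRole(for scenario: Scenario) -> UserRole {
    switch scenario {
    case .interactiveLive(let needsToPublish):
        return needsToPublish ? .anchor : .audience
    case .videoCall:
        return .anchor // in a video call, every user is an anchor
    }
}

var role = initialRole(for: .interactiveLive(needsToPublish: false))
print(role.canPublish) // false
role = .anchor         // stands in for a role change made with setClientRole
print(role.canPublish) // true
```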

Prerequisites

Before implementing the function, please prepare the development environment according to the following requirements:

- Xcode 12.0 or above.

- Apple developer account.

- If you integrate the SDK with CocoaPods, make sure that CocoaPods is installed. If it is not, refer to [Getting Started with CocoaPods](https://guides.cocoapods.org/using/getting-started.html#getting-started).

- Two devices running iOS 9.0 or later.

- A computer that can access the Internet. If your network environment is behind a firewall, refer to [Coping with firewall restrictions] to use the Opa service normally.

- A valid Opa account and Opa project. Refer to [Opening Service] to obtain the following information from the Opa console:

  - App ID: a string randomly generated by Opa to identify your project.

  - Temporary token: a token, also called a dynamic key, authenticates the user when the client joins a channel. A temporary token is valid for 24 hours.
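Because a temporary token stops working after 24 hours, it can help during testing to record when the token was generated. The following is a minimal sketch of such a check. `TemporaryToken` is a hypothetical helper, not an SDK type, and it assumes the 24-hour window starts at the moment you generate the token in the console.

```swift
import Foundation

/// Hypothetical helper for test builds; NOT part of the SDK.
struct TemporaryToken {
    let value: String
    let issuedAt: Date // assumption: the time the token was generated in the console

    // Validity window stated in this article: 24 hours.
    static let lifetime: TimeInterval = 24 * 60 * 60

    var expiresAt: Date { issuedAt.addingTimeInterval(Self.lifetime) }

    /// Returns true while `now` falls inside the 24-hour validity window.
    func isValid(at now: Date = Date()) -> Bool {
        now >= issuedAt && now < expiresAt
    }
}

let issued = Date(timeIntervalSince1970: 0)
let token = TemporaryToken(value: "<token>", issuedAt: issued)
print(token.isValid(at: issued.addingTimeInterval(3600)))      // true
print(token.isValid(at: issued.addingTimeInterval(25 * 3600))) // false
```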

Create project

Follow these steps to create a project in Xcode:

- Refer to [Create a project](https://help.apple.com/xcode/mac/current/#/dev07db0e578) to create a new project. For **Application**, select **App**; for **Interface**, select **Storyboard**; and for **Language**, select **Swift**.

  Note: If you have not added development team information, you will see the **Add account...** button. Click this button and follow the on-screen prompts to log in with your Apple ID, click **Next**, and then select your Apple account as the development team.

- Set up [automatic signing](https://help.apple.com/xcode/mac/current/#/dev23aab79b4) for your project.

- Set the [target devices](https://help.apple.com/xcode/mac/current/#/deve69552ee5) for deploying your app.

- Add device permissions for the project.

  Open the `Info.plist` file in the project navigator and edit the [property list](https://help.apple.com/xcode/mac/current/#/dev3f399a2a6) to add the microphone and camera permissions required for real-time interaction.

  Note:

  - The permissions listed in the following table are optional. However, if you do not add them, you will not be able to use the microphone and camera for real-time audio and video interaction.

  - If you need to add a third-party plug-in or library (such as a third-party camera) to your project, and the signature of the plug-in or library does not match the signature of the project, you also need to check **Disable Library Validation** in **Hardened Runtime** > **Runtime Exceptions**.

  - For more precautions, refer to [Preparing Your App for Distribution](https://developer.apple.com/documentation/xcode/preparing_your_app_for_distribution).

| Key | Type | Value |
| --- | --- | --- |
| Privacy - Microphone Usage Description | String | The purpose of using the microphone, such as for a call or live interactive streaming |
| Privacy - Camera Usage Description | String | The purpose of using the camera, such as for a call or live interactive streaming |
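The two display names in the table correspond to the raw `Info.plist` keys `NSMicrophoneUsageDescription` and `NSCameraUsageDescription`. As a rough sketch, a debug build could verify that both entries are present before starting the engine. The helper `missingUsageDescriptions(in:)` is a hypothetical name introduced here and is not part of the SDK.

```swift
import Foundation

// Raw Info.plist keys behind the two Xcode display names in the table.
let requiredUsageKeys = [
    "NSMicrophoneUsageDescription", // Privacy - Microphone Usage Description
    "NSCameraUsageDescription",     // Privacy - Camera Usage Description
]

/// Returns the required keys that are absent or blank in the given dictionary.
/// Hypothetical helper; NOT part of the SDK.
func missingUsageDescriptions(in info: [String: Any]) -> [String] {
    requiredUsageKeys.filter { key in
        guard let text = info[key] as? String else { return true }
        return text.trimmingCharacters(in: .whitespaces).isEmpty
    }
}

// In an app, pass `Bundle.main.infoDictionary ?? [:]`.
// A literal dictionary is used here so the sketch runs anywhere.
let sample: [String: Any] = ["NSCameraUsageDescription": "Used for video calls"]
print(missingUsageDescriptions(in: sample)) // ["NSMicrophoneUsageDescription"]
```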

Integrate the SDK

According to the actual situation, choose one of the following integration methods to integrate the SDK in your project.

- Manual integration

  - Go to the [Download] page, get the latest SDK, and unzip it.

  - Copy the files in the `libs` path of the SDK package to your project path.

  - Open Xcode and [add the corresponding dynamic libraries](https://help.apple.com/xcode/mac/current/#/dev51a648b07). Make sure that the **Embed** attribute of each added dynamic library is set to **Embed & Sign**.

  Note: The Opa SDK uses libc++ (LLVM) by default. The library provided by the SDK is a fat image that contains 32-bit and 64-bit simulator builds and 32-bit and 64-bit device builds.

- Swift Package Manager

  - In Xcode, go to **File > Swift Packages > Add Package Dependencies...** and paste the following URL:

    ```http
    https://github.com/aopacloud/aopa-rtc
    ```

  - Specify the SDK version you want to integrate in **Choose Package Options**. You can also refer to the [official Apple documentation](https://help.apple.com/xcode/mac/current/#/devb83d64851) to configure the settings.

Create user interface

Create a user interface for your project according to your usage scenario, including a local video window and a remote video window. Replace the contents of the `ViewController.swift` file with the following code:

```swift
import UIKit
import AopaRtcKit

class ViewController: UIViewController {
    // Full-screen view that renders the local camera preview.
    var localView: UIView!
    // Small window near the top-right corner that renders the remote user.
    var remoteView: UIView!
    // RTC engine instance; it is initialized in a later step.
    var AopaKit: AopaRtcEngineKit!

    override func viewDidLoad() {
        super.viewDidLoad()
        localView = UIView(frame: UIScreen.main.bounds)
        remoteView = UIView(
            frame: CGRect(x: self.view.bounds.width - 135, y: 50, width: 135, height: 240))
        self.view.addSubview(localView)
        self.view.addSubview(remoteView)
    }
}
```

Implementation process

The following figure shows the basic process of using the Opa RTC SDK to implement audio and video interaction.

The complete code for the basic real-time interaction process is listed below for reference. Copy it into the `ViewController.swift` file to replace the original content.

Note: In the `appId`, `token`, and `channelName` fields, pass in the App ID and temporary token you obtained from the console, and the channel name you entered when generating the temporary token.

Example code for realizing audio and video interaction

```swift
// ViewController.swift
import UIKit
import AopaRtcKit

class ViewController: UIViewController {
    var localView: UIView!
    var remoteView: UIView!
    var AopaKit: AopaRtcEngineKit!

    override func viewDidLoad() {
        super.viewDidLoad()
        // Create the local (full-screen) and remote (small window) video views.
        localView = UIView(frame: UIScreen.main.bounds)
        remoteView = UIView(frame: CGRect(x: self.view.bounds.width - 135, y: 50, width: 135, height: 240))
        self.view.addSubview(localView)
        self.view.addSubview(remoteView)

        // Initialize the engine, enable video, start the preview, and join the channel.
        AopaKit = AopaRtcEngineKit.sharedEngine(withAppId: <#Your App ID#>, delegate: self)
        AopaKit.enableVideo()
        startPreview()
        joinChannel()
    }

    deinit {
        // Stop the preview, leave the channel, and release the engine.
        AopaKit.stopPreview()
        AopaKit.leaveChannel(nil)
        AopaRtcEngineKit.destroy()
    }

    func startPreview() {
        // Bind the local view to the engine and start the local preview.
        let videoCanvas = AopaRtcVideoCanvas()
        videoCanvas.view = localView
        videoCanvas.renderMode = .hidden
        AopaKit.setupLocalVideo(videoCanvas)
        AopaKit.startPreview()
    }

    func joinChannel() {
        let options = AopaRtcChannelMediaOptions()
        // Interactive live broadcast scenario, joining as an anchor.
        options.channelProfile = .liveBroadcasting
        options.clientRoleType = .broadcaster
        // Publish the microphone and camera streams.
        options.publishMicrophoneTrack = true
        options.publishCameraTrack = true
        // Automatically subscribe to remote audio and video.
        options.autoSubscribeAudio = true
        options.autoSubscribeVideo = true
        AopaKit.joinChannel(byToken: <#Your Token#>, channelId: <#Your Channel Name#>, uid: 0, mediaOptions: options)
    }
}

extension ViewController: AopaRtcEngineDelegate {
    // The local user joined the channel.
    func rtcEngine(_ engine: AopaRtcEngineKit, didJoinChannel channel: String, withUid uid: UInt, elapsed: Int) {
        print("didJoinChannel: \(channel), uid: \(uid)")
    }

    // A remote user joined: render that user's video in the remote view.
    func rtcEngine(_ engine: AopaRtcEngineKit, didJoinedOfUid uid: UInt, elapsed: Int) {
        let videoCanvas = AopaRtcVideoCanvas()
        videoCanvas.uid = uid
        videoCanvas.view = remoteView
        videoCanvas.renderMode = .hidden
        AopaKit.setupRemoteVideo(videoCanvas)
    }

    // A remote user went offline: unbind that user's video from the view.
    func rtcEngine(_ engine: AopaRtcEngineKit, didOfflineOfUid uid: UInt, reason: AopaUserOfflineReason) {
        let videoCanvas = AopaRtcVideoCanvas()
        videoCanvas.uid = uid
        videoCanvas.view = nil
        AopaKit.setupRemoteVideo(videoCanvas)
    }
}
```

Import Opa component

Import `AopaRtcKit` into the `ViewController.swift` file.

```swift
import AopaRtcKit
```

Initialize the engine

Call the `sharedEngine(withAppId:delegate:)` method to create and initialize `AopaRtcEngineKit`.

Caution: Before initializing the SDK, make sure that the end user has fully understood and agreed to the relevant privacy policy.

```swift
AopaKit = AopaRtcEngineKit.sharedEngine(withAppId: <#Your App ID#>, delegate: self)
```

Enable video module

Follow the steps below to enable the video module.

- Call the `enableVideo` method to enable the video module.

- Call the `setupLocalVideo` method to initialize the local view and set the local video display properties.

- Call the `startPreview` method to start the local video preview.

```swift
// Enable the video module.
AopaKit.enableVideo()

// Bind the local view and set its display properties.
let videoCanvas = AopaRtcVideoCanvas()
videoCanvas.view = localView
videoCanvas.renderMode = .hidden
AopaKit.setupLocalVideo(videoCanvas)

// Start the local video preview.
AopaKit.startPreview()
```

Join the channel and publish the audio and video stream

Call the `joinChannelByToken` method to join the channel. Pass in the temporary token you obtained from the console and the channel name you entered when generating the token, and set the user role.

```swift
let options = AopaRtcChannelMediaOptions()
// Interactive live broadcast scenario, joining as an anchor.
options.channelProfile = .liveBroadcasting
options.clientRoleType = .broadcaster
// Publish the microphone and camera streams.
options.publishMicrophoneTrack = true
options.publishCameraTrack = true
// Automatically subscribe to remote audio and video.
options.autoSubscribeAudio = true
options.autoSubscribeVideo = true
AopaKit.joinChannel(byToken: <#Your Token#>, channelId: <#Your Channel Name#>, uid: 0, mediaOptions: options)
```

Set remote user view

Call the `setupRemoteVideo` method to initialize the remote user view and set how the remote user's video is displayed locally. You can obtain the `uid` of the remote user through the `didJoinedOfUid` callback.

```swift
func rtcEngine(_ engine: AopaRtcEngineKit, didJoinedOfUid uid: UInt, elapsed: Int) {
    // Render the remote user's video in the remote view.
    let videoCanvas = AopaRtcVideoCanvas()
    videoCanvas.uid = uid
    videoCanvas.view = remoteView
    videoCanvas.renderMode = .hidden
    AopaKit.setupRemoteVideo(videoCanvas)
}
```

Implement common callback

Define the necessary callbacks according to your usage scenario. The following example code shows how to implement the `didJoinChannel` and `didOfflineOfUid` callbacks.

```swift
// The local user joined the channel.
func rtcEngine(_ engine: AopaRtcEngineKit, didJoinChannel channel: String, withUid uid: UInt, elapsed: Int) {
    print("didJoinChannel: \(channel), uid: \(uid)")
}

// A remote user went offline: unbind that user's video from the view.
func rtcEngine(_ engine: AopaRtcEngineKit, didOfflineOfUid uid: UInt, reason: AopaUserOfflineReason) {
    let videoCanvas = AopaRtcVideoCanvas()
    videoCanvas.uid = uid
    videoCanvas.view = nil
    AopaKit.setupRemoteVideo(videoCanvas)
}
```
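The sample has a single remote video window, so every `didJoinedOfUid` callback rebinds that window to the newest user. If more than two users may join, one option is to track which `uid` currently owns the window. The sketch below shows that bookkeeping only. `RemoteViewBinding` is a hypothetical type, not SDK API, and the policy of keeping the first user is an assumption. The returned `Bool` tells the callback whether to call `setupRemoteVideo`.

```swift
/// Hypothetical bookkeeping for a single remote video window; NOT part of the SDK.
struct RemoteViewBinding {
    private(set) var boundUid: UInt?

    /// Call from didJoinedOfUid. Returns true if this uid should be bound to the remote view.
    mutating func userJoined(_ uid: UInt) -> Bool {
        guard boundUid == nil else { return false } // the window is already in use
        boundUid = uid
        return true
    }

    /// Call from didOfflineOfUid. Returns true if the remote view should be cleared.
    mutating func userWentOffline(_ uid: UInt) -> Bool {
        guard boundUid == uid else { return false } // this user was never displayed
        boundUid = nil
        return true
    }
}

var binding = RemoteViewBinding()
print(binding.userJoined(1001))      // true
print(binding.userJoined(1002))      // false
print(binding.userWentOffline(1002)) // false
print(binding.userWentOffline(1001)) // true
```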

Start audio and video interaction

After the view is loaded, call the following methods in `viewDidLoad` to join the channel and start audio and video interaction.

```swift
override func viewDidLoad() {
    super.viewDidLoad()
    // View setup and engine initialization from the earlier steps go here.
    AopaKit.enableVideo()
    startPreview()
    joinChannel()
}
```

End audio and video interaction

- Call the `leaveChannel` method to leave the channel. Session-related resources are released as well.

  ```swift
  AopaKit.stopPreview()
  AopaKit.leaveChannel(nil)
  ```

- Call `destroy` to destroy the engine and release all resources used by the Opa SDK.

  ```swift
  AopaRtcEngineKit.destroy()
  ```

  Warning: After calling this method, you can no longer use any SDK methods or callbacks. To use real-time audio and video interaction again, you must create a new engine. See Initialize the engine.
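The ordering constraint described here (join, leave, destroy, then create a new engine before joining again) can be pictured as a small state machine. This is an illustrative sketch only: `EngineState` and `EngineLifecycle` are hypothetical names, and the states are an assumption about how to model the lifecycle, not something the SDK exposes.

```swift
/// Hypothetical lifecycle states; illustrative only, NOT SDK API.
enum EngineState { case notCreated, idle, inChannel, destroyed }

struct EngineLifecycle {
    private(set) var state: EngineState = .notCreated

    /// Creating an engine is allowed at the start or after a destroy.
    mutating func create() {
        if state == .notCreated || state == .destroyed { state = .idle }
    }

    /// Joining only succeeds on a live engine that is not already in a channel.
    mutating func join() -> Bool {
        guard state == .idle else { return false }
        state = .inChannel
        return true
    }

    mutating func leave() {
        if state == .inChannel { state = .idle }
    }

    mutating func destroy() {
        state = .destroyed
    }
}

var engine = EngineLifecycle()
engine.create()
print(engine.join()) // true
engine.leave()
engine.destroy()
print(engine.join()) // false: a destroyed engine cannot be used
engine.create()      // create a new engine first
print(engine.join()) // true
```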

Test App

Refer to the following steps to test your app:

- Connect the iOS device to the computer.

- Click **Build** to run your project, and wait a few seconds until the app is installed.

- Allow the app to access the microphone and camera permissions of the device.

- (Optional) If the **Untrusted Developer** prompt pops up on the device, first click **Cancel** to close the prompt, then open **Settings > General > VPN & Device Management** on the iOS device and trust the developer under **Developer App**.

- On the second iOS device, repeat the steps above to install the app, open the app to join the channel, and observe the test results:

  - If both devices join the channel as anchors, the two users can see and hear each other.

  - If one device joins as an anchor and the other as an audience member, the anchor sees the local preview in the local video window, and the audience member sees and hears the anchor in the remote video window.

Next Steps

- The example in this article uses a temporary token to join the channel. In a test or production environment, to ensure communication security, we recommend using a token server to generate tokens. See [Using Token Authentication] for details.

- If you want to implement a fast live broadcast scenario, you can build on interactive live broadcast and change the latency level of the audience to low latency (`AopaAudienceLatencyLevelLowLatency`). For details, refer to [Achieve Fast Live Broadcasting].

Related information

Sample project

Opa provides an open source real-time interaction sample project for your reference. You can download it or view the source code here:

- GitHub: [AopaRtcIos](https://github.com/aopacloud/aopa-rtc)