Navigation Fundamentals - UTRA-ART/Caffeine GitHub Wiki

Written by: Erick Mejia Uzeda

Navigation is a process that involves motion tracking, environment mapping and path planning. This problem is well studied in robotics, and ROS has a variety of packages that aid in navigation. The goal of this wiki is to introduce you to two packages that we will use to perform autonomous navigation: robot_localization (Localization) and navigation (Navigation). The topics covered are:

- Localization

  - Computing the odom frame

  - Computing the map frame

  - Computing the utm frame

- Navigation Stack

To localize the robot, various types of odometry sensors are used together to provide a robust estimate of the robot's position. For an overview of what odometry is, see Odometry General Information; for a primer on how we will use odometry in ROS, see Configuring Odometry in ROS.

From Wikipedia, odometry is defined as: "[...] the use of data from motion sensors to estimate change in position over time. It is used in robotics by some legged or wheeled robots to estimate their position relative to a starting location."

As mentioned earlier, we will be using the robot_localization package to fuse odometry. The nodes in this package also publish the three frames needed to localize the robot. See REP-105 for all standard coordinate frames.

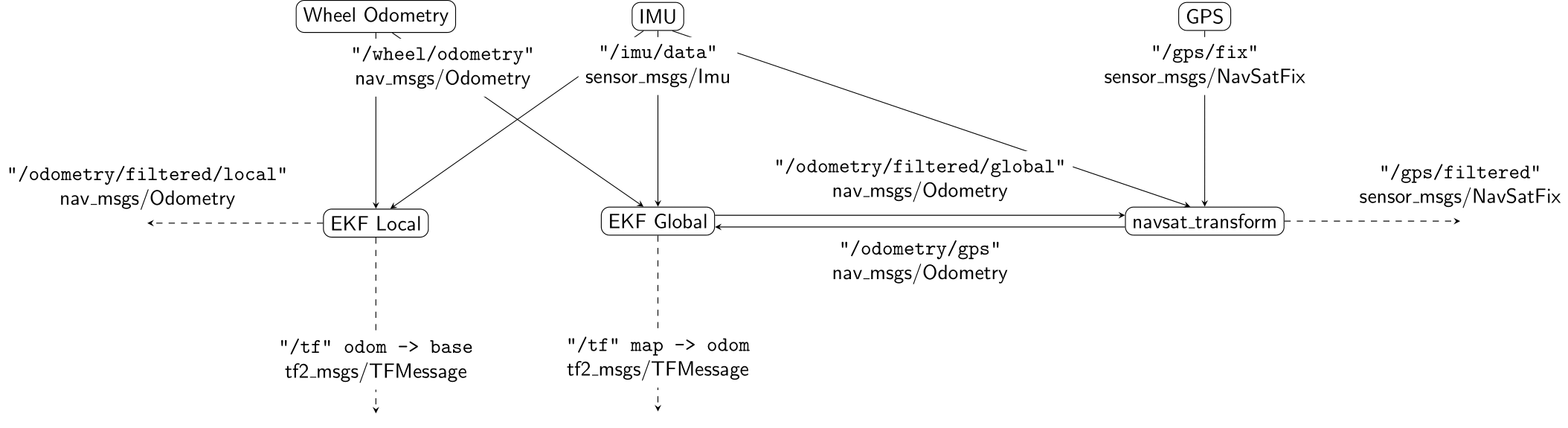

The set-up we will use for robot_localization will be similar to this:

- ekf_local will compute a locally consistent odometry reading and publish the odom frame

- ekf_global will compute a globally consistent odometry reading and publish the map frame

- navsat_transform will relate the robot's position to GPS coordinates

The odom frame is a world-fixed frame in which the robot's movement is continuous (no discrete jumps). Because it is continuous, the odom frame is subject to drift as small sensor errors accumulate over time. These properties make it accurate for short-term planning but a poor reference frame in the long run.

The following measurements would be connected to ekf_local:

- Encoders. Good at measuring translational movement

- IMU. Good at measuring angular movement

- Visual Odometry. Used to supplement both translation and angular estimates
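As a rough sketch of how these three inputs might be wired into ekf_local, the launch fragment below starts robot_localization's ekf_localization_node with world_frame set to odom. The topic names (/encoders/odom, /imu/data, /vo/odom), the choice of fused fields, the two_d_mode setting and the output topic odometry/local are assumptions made for illustration, not values taken from the Caffeine configuration.

```xml
<launch>
  <!-- Sketch only: topic names and fused fields are assumptions, not project settings -->
  <node pkg="robot_localization" type="ekf_localization_node" name="ekf_local" clear_params="true">
    <param name="frequency" value="30" />
    <param name="two_d_mode" value="true" />

    <!-- world_frame equal to odom_frame makes this node publish the odom to base_link transform -->
    <param name="odom_frame" value="odom" />
    <param name="base_link_frame" value="base_link" />
    <param name="world_frame" value="odom" />

    <!-- Each *_config lists 15 booleans in the order:
         x, y, z, roll, pitch, yaw, vx, vy, vz, vroll, vpitch, vyaw, ax, ay, az -->

    <!-- Encoders (hypothetical topic): fuse linear velocity -->
    <param name="odom0" value="/encoders/odom" />
    <rosparam param="odom0_config">
      [false, false, false,
       false, false, false,
       true,  true,  false,
       false, false, false,
       false, false, false]
    </rosparam>

    <!-- IMU (hypothetical topic): fuse yaw rate -->
    <param name="imu0" value="/imu/data" />
    <rosparam param="imu0_config">
      [false, false, false,
       false, false, false,
       false, false, false,
       false, false, true,
       false, false, false]
    </rosparam>

    <!-- Visual odometry (hypothetical topic): supplement linear and angular velocity -->
    <param name="odom1" value="/vo/odom" />
    <rosparam param="odom1_config">
      [false, false, false,
       false, false, false,
       true,  true,  false,
       false, false, true,
       false, false, false]
    </rosparam>

    <!-- Rename the fused output so it cannot collide with a second EKF instance -->
    <remap from="odometry/filtered" to="odometry/local" />
  </node>
</launch>
```

Only velocities are fused in this sketch, so the filter integrates them into a pose that starts at the odom origin. Which fields each sensor should contribute depends on the actual hardware and would need to be checked against the real messages.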

The map frame is a world-fixed frame that references an actual position in the real world. To keep this reference as accurate as possible, the transform between map and base_link is subject to discrete jumps. This makes the map frame a poor choice for path planning but accurate when you want to know the robot's actual position.

The following measurements would be connected to ekf_global:

- local odom. Computed by ekf_local, used for locally accurate motion tracking

- IMU. Provides world orientation (using a magnetometer)

- GPS odom. Computed by navsat_transform, provides world position
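A second EKF instance for the map frame could look roughly like the sketch below. It assumes the local estimate is available on odometry/local and the IMU on /imu/data (both hypothetical names), and it relies on navsat_transform_node's default output topic odometry/gps. The fused fields are illustrative guesses rather than tuned settings.

```xml
<launch>
  <!-- Sketch only: topic names and fused fields are assumptions, not project settings -->
  <node pkg="robot_localization" type="ekf_localization_node" name="ekf_global" clear_params="true">
    <param name="frequency" value="30" />
    <param name="two_d_mode" value="true" />

    <!-- world_frame equal to map_frame makes this node publish the map to odom transform -->
    <param name="map_frame" value="map" />
    <param name="odom_frame" value="odom" />
    <param name="base_link_frame" value="base_link" />
    <param name="world_frame" value="map" />

    <!-- Config order: x, y, z, roll, pitch, yaw, vx, vy, vz, vroll, vpitch, vyaw, ax, ay, az -->

    <!-- local odom from ekf_local (hypothetical topic): fuse velocities -->
    <param name="odom0" value="odometry/local" />
    <rosparam param="odom0_config">
      [false, false, false,
       false, false, false,
       true,  true,  false,
       false, false, true,
       false, false, false]
    </rosparam>

    <!-- GPS odom from navsat_transform: fuse absolute x and y -->
    <param name="odom1" value="odometry/gps" />
    <rosparam param="odom1_config">
      [true,  true,  false,
       false, false, false,
       false, false, false,
       false, false, false,
       false, false, false]
    </rosparam>

    <!-- IMU (hypothetical topic): fuse absolute yaw from the magnetometer -->
    <param name="imu0" value="/imu/data" />
    <rosparam param="imu0_config">
      [false, false, false,
       false, false, true,
       false, false, false,
       false, false, false,
       false, false, false]
    </rosparam>

    <remap from="odometry/filtered" to="odometry/global" />
  </node>
</launch>
```

Fusing the absolute GPS position is what lets this estimate jump, which matches the discrete corrections described for the map frame.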

The utm (Universal Transverse Mercator) frame is an earth-fixed frame that makes it possible to associate GPS coordinates with the frames known to the robot. This frame has its x-y-z axes pointing east-north-up.

Note: the odom and map frames generally have their origin set to the robot's starting position and orientation. The utm frame's origin depends on the robot's location on the earth!

The following measurements would be connected to navsat_transform:

- global odom. Computed by ekf_global, used to initialize the relationship between the utm and map frames

- IMU. Provides initial world orientation (using a magnetometer)

- GPS. Measures world position. In practice, readings are accurate to about 1 meter at best and are subject to GPS drift
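A minimal sketch of the corresponding node is shown below. The sensor topics /imu/data and /gps/fix and the odometry/global topic are hypothetical names, and the declination and yaw offset are placeholders that would have to be set for the real site and IMU. The sketch also assumes a robot_localization release in which the utm broadcast parameter is named broadcast_utm_transform.

```xml
<launch>
  <!-- Sketch only: topic names and parameter values are assumptions, not project settings -->
  <node pkg="robot_localization" type="navsat_transform_node" name="navsat_transform" clear_params="true">
    <param name="frequency" value="30" />

    <!-- Placeholders: set these for the actual location and IMU mounting -->
    <param name="magnetic_declination_radians" value="0.0" />
    <param name="yaw_offset" value="0.0" />

    <!-- Ignore altitude for a ground robot (assumption) -->
    <param name="zero_altitude" value="true" />

    <!-- Ask the node to broadcast the utm frame -->
    <param name="broadcast_utm_transform" value="true" />

    <!-- Inputs: IMU, GPS fix, and the global odometry estimate -->
    <remap from="imu/data" to="/imu/data" />
    <remap from="gps/fix" to="/gps/fix" />
    <remap from="odometry/filtered" to="odometry/global" />

    <!-- Output: the GPS position expressed as odometry, on odometry/gps by default -->
  </node>
</launch>
```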

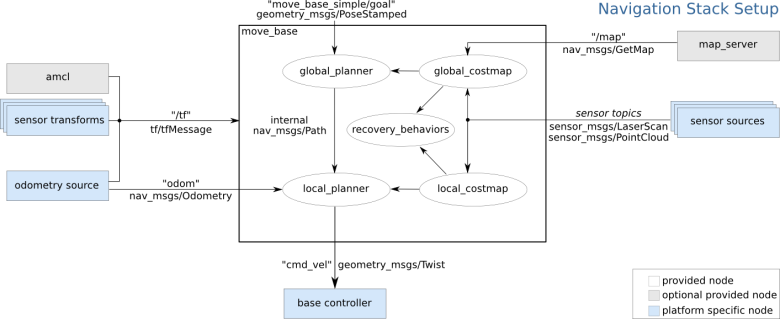

The navigation stack works as a collection of nodes. At a high level, quoting the Navigation ROS wiki: "It takes in information from odometry and sensor streams and outputs velocity commands to send to a mobile base". The trick to setting up the Navigation Stack is to know which topics to connect (<remap>) and which configurations to use. For a more in-depth overview of the different components of the navigation stack, see Understanding the Navigation Stack.
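To make the <remap> idea concrete, here is a minimal sketch that assumes the stack is run through the move_base node. In move_base, cmd_vel is the velocity output and odom is the odometry input read by the local planner; the target topic names on the right-hand side are hypothetical.

```xml
<launch>
  <node pkg="move_base" type="move_base" respawn="false" name="move_base" output="screen">
    <!-- Velocity commands out: point them at the base controller's topic (hypothetical name) -->
    <remap from="cmd_vel" to="/base/cmd_vel" />

    <!-- Odometry in: use a fused estimate instead of a raw source (hypothetical name) -->
    <remap from="odom" to="/odometry/local" />

    <!-- The <rosparam> lines that load the YAML configuration files would also go here -->
  </node>
</launch>
```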

Note: the simulation team will work on setting up the tf_tree and simulating sensors via Gazebo plugins.

To get the navigation stack running, you will use the move_base node. An example launch file looks like:

```xml
<launch>
  <node pkg="move_base" type="move_base" respawn="false" name="move_base" output="screen">
    <!-- Shared costmap settings, loaded once into each costmap's namespace -->
    <rosparam file="$(find package)/config/costmap_common_params.yaml" command="load" ns="global_costmap" />
    <rosparam file="$(find package)/config/costmap_common_params.yaml" command="load" ns="local_costmap" />
    <!-- Settings specific to the local and global costmaps -->
    <rosparam file="$(find package)/config/local_costmap_params.yaml" command="load" />
    <rosparam file="$(find package)/config/global_costmap_params.yaml" command="load" />
    <!-- Local planner settings -->
    <rosparam file="$(find package)/config/base_local_planner_params.yaml" command="load" />
  </node>
</launch>
```

Four configuration files (written in YAML) are used to configure the navigation stack. To understand how to set them up, see this ROS wiki.

In theory, Localization and Navigation can be worked on in parallel in simulation if we have an odometry source that faithfully reports the robot's world position. Otherwise, autonomous navigation is done in two big steps: first, perform odometry fusion, which will publish the odom, map and utm frames; second, connect the navigation stack. Here is my suggested approach:

- Connect ekf_local. Local odometry is continuous and locally accurate. This node will publish the odom frame.

- Connect navsat_transform. Use GPS and IMU, with local odom standing in temporarily until global odom is ready. Ensure the IMU satisfies the ENU convention. Note: GPS odom is invariant to rotations! This node will publish the utm frame.

- Connect ekf_global. The odometry outputs from ekf_local and navsat_transform, as well as the IMU, are fed into this node. This node will publish the map frame.

- Default set up. Create all needed configuration files and make a launch file which calls move_base. The goal is to use expected values for whatever is required to get things working.

- Tune parameters. Look into the parameters more carefully and ensure the appropriate ones are set to adequately represent the robot. Furthermore, we would look into improving the robustness of how the navigation is executed.
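One way to mirror this ordering is a top-level launch file that includes one launch file per step, so each stage can be brought up and checked before the next is added. This is only a sketch: the package placeholder follows the example launch file earlier on this page, and every launch file name below is hypothetical.

```xml
<launch>
  <!-- Sketch only: all included file names are hypothetical -->

  <!-- Step 1: local EKF, publishes the odom frame -->
  <include file="$(find package)/launch/ekf_local.launch" />

  <!-- Step 2: GPS conversion, publishes the utm frame -->
  <include file="$(find package)/launch/navsat_transform.launch" />

  <!-- Step 3: global EKF, publishes the map frame -->
  <include file="$(find package)/launch/ekf_global.launch" />

  <!-- Steps 4 and 5: navigation stack with its YAML configuration -->
  <include file="$(find package)/launch/move_base.launch" />
</launch>
```

While bringing up the early steps, the later includes can simply be commented out.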