VGG - AshokBhat/ml GitHub Wiki

About

- Deep convolutional network for object recognition

- Trained by Oxford's renowned Visual Geometry Group (VGG)

- VGG models computationally very intensive

Variants

|[Model]]](/AshokBhat/ml/wiki/[Weight) Layers|Params|[Top-1 Accuracy]]| |-|-|-|-|-| |VGG16|16|138M|70.5%|90.0%| |VGG19|19|143M|75.2%|92.5%|

Application

- Given image, find object name in the image.

- Can detect any one of 1000 images

- Takes input image of size 224 * 224 * 3 (RGB image)

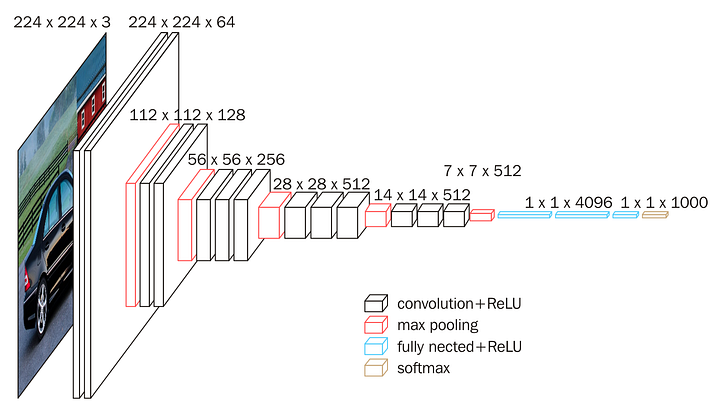

Architecture

Built using:

- Convolutional layers (used only 3*3 size )

- Max pooling layers (used only 2*2 size)

- Fully connected layers at end

- Total 16 layers

Paper

- https://arxiv.org/pdf/1409.1556.pdf

- Title: Very deep convolutional networks for large-scale image recognition

- Abstract

In this work we investigate the effect of the convolutional network depth on its accuracy in the large-scale image recognition setting.

Our main contribution is a thorough evaluation of networks of increasing depth using an architecture with very small (3×3) convolution filters, which shows that a significant improvement on the prior-art configurations can be achieved by pushing the depth to 16–19 weight layers.

These findings were the basis of our ImageNet Challenge 2014 submission, where our team secured the first and the second places in the localisation and classification tracks respectively.

We also show that our representations generalise well to other datasets, where they achieve state-of-the-art results.

We have made our two best-performing ConvNet models publicly available to facilitate further research on the use of deep visual representations in computer vision.

VGG16 Keras application model summary

| Layer | (type) | Output Shape | Param # |

|---|---|---|---|

| input_2 | (InputLayer) | (None, 224, 224, 3) | 0 |

| block1_conv1 | (Conv2D) | (None, 224, 224, 64) | 1792 |

| block1_conv2 | (Conv2D) | (None, 224, 224, 64) | 36928 |

| block1_pool | (MaxPooling2D) | (None, 112, 112, 64) | 0 |

| - | - | - | - |

| block2_conv1 | (Conv2D) | (None, 112, 112, 128) | 73856 |

| block2_conv2 | (Conv2D) | (None, 112, 112, 128) | 147584 |

| block2_pool | (MaxPooling2D) | (None, 56, 56, 128) | 0 |

| - | - | - | - |

| block3_conv1 | (Conv2D) | (None, 56, 56, 256) | 295168 |

| block3_conv2 | (Conv2D) | (None, 56, 56, 256) | 590080 |

| block3_conv3 | (Conv2D) | (None, 56, 56, 256) | 590080 |

| block3_pool | (MaxPooling2D) | (None, 28, 28, 256) | 0 |

| - | - | - | - |

| block4_conv1 | (Conv2D) | (None, 28, 28, 512) | 1180160 |

| block4_conv2 | (Conv2D) | (None, 28, 28, 512) | 2359808 |

| block4_conv3 | (Conv2D) | (None, 28, 28, 512) | 2359808 |

| block4_pool | (MaxPooling2D) | (None, 14, 14, 512) | 0 |

| - | - | - | - |

| block5_conv1 | (Conv2D) | (None, 14, 14, 512) | 2359808 |

| block5_conv2 | (Conv2D) | (None, 14, 14, 512) | 2359808 |

| block5_conv3 | (Conv2D) | (None, 14, 14, 512) | 2359808 |

| block5_pool | (MaxPooling2D) | (None, 7, 7, 512) | 0 |

| - | - | - | - |

| flatten | (Flatten) | (None, 25088) | 0 |

| fc1 | (Dense) | (None, 4096) | 102764544 |

| fc2 | (Dense) | (None, 4096) | 16781312 |

| - | - | - | - |

| predictions | (Dense) | (None, 1000) | 4097000 |

See also

- [State-of-the-art]] : [[AlexNet]] ](/AshokBhat/ml/wiki/[VGG) | [ResNet]] | EfficientNet

- Others: [DenseNet]] ](/AshokBhat/ml/wiki/[[MobileNet) | ResNext