Google BERT - yarak001/machine_learning_common GitHub Wiki

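Order: Getting started with BERT (Transformer, understanding BERT, using BERT) -> BERT variants (based on different architectures and training methods & based on knowledge distillation) -> Applying BERT (text summarization, languages, sentences, domains, video, BART)

- RNNs and LSTMs are widely used for sequential tasks such as next-word prediction, machine translation, and text generation

- They suffer from the long-term dependency problem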

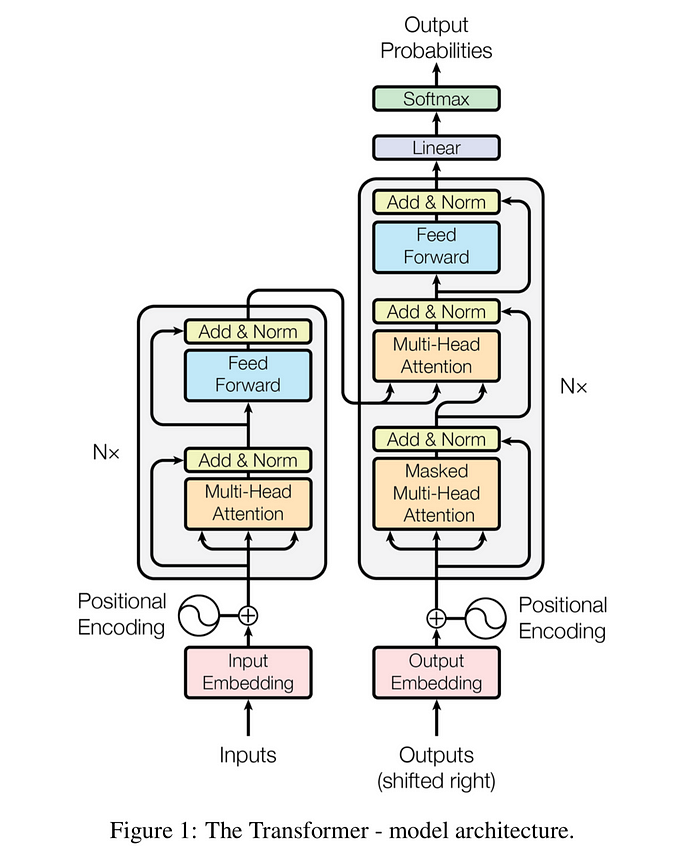

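- To overcome the limitations of RNNs, the paper "Attention Is All You Need" proposed the transformer

- The transformer does not use the recurrence that RNNs rely on; it is built purely on attention, using a special form of attention called self-attention

- BERT (Bidirectional Encoder Representations from Transformers) is a state-of-the-art embedding model published by Google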

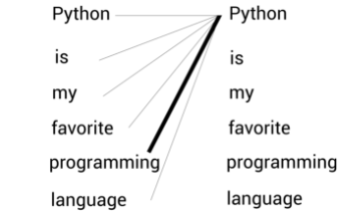

- BERT is a context-based embedding model (Word2Vec is a context-free embedding model)

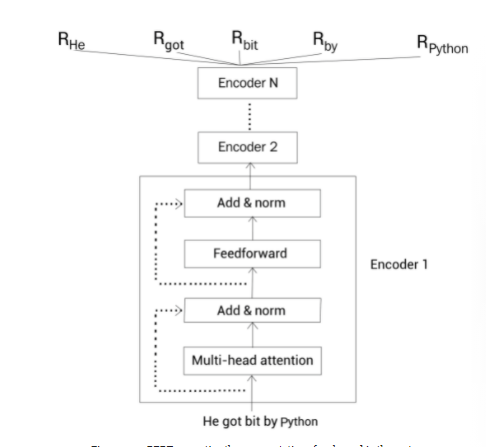

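- Sentence A: He got bit by Python

- Sentence B: Python is my favorite programming language

- Because Word2Vec is a context-free model, it always produces the same embedding for the word 'Python' regardless of context

- Because BERT is a context-based model, it first understands the context of the sentence and then generates each word's embedding according to that context

- BERT is based on the transformer, but unlike the original encoder-decoder transformer it uses only the encoder

- The encoder uses multi-head attention to understand the context of each word in the sentence and returns a contextual representation of each word as output

- BERT comes in several configurations, such as BERT-base and BERT-large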

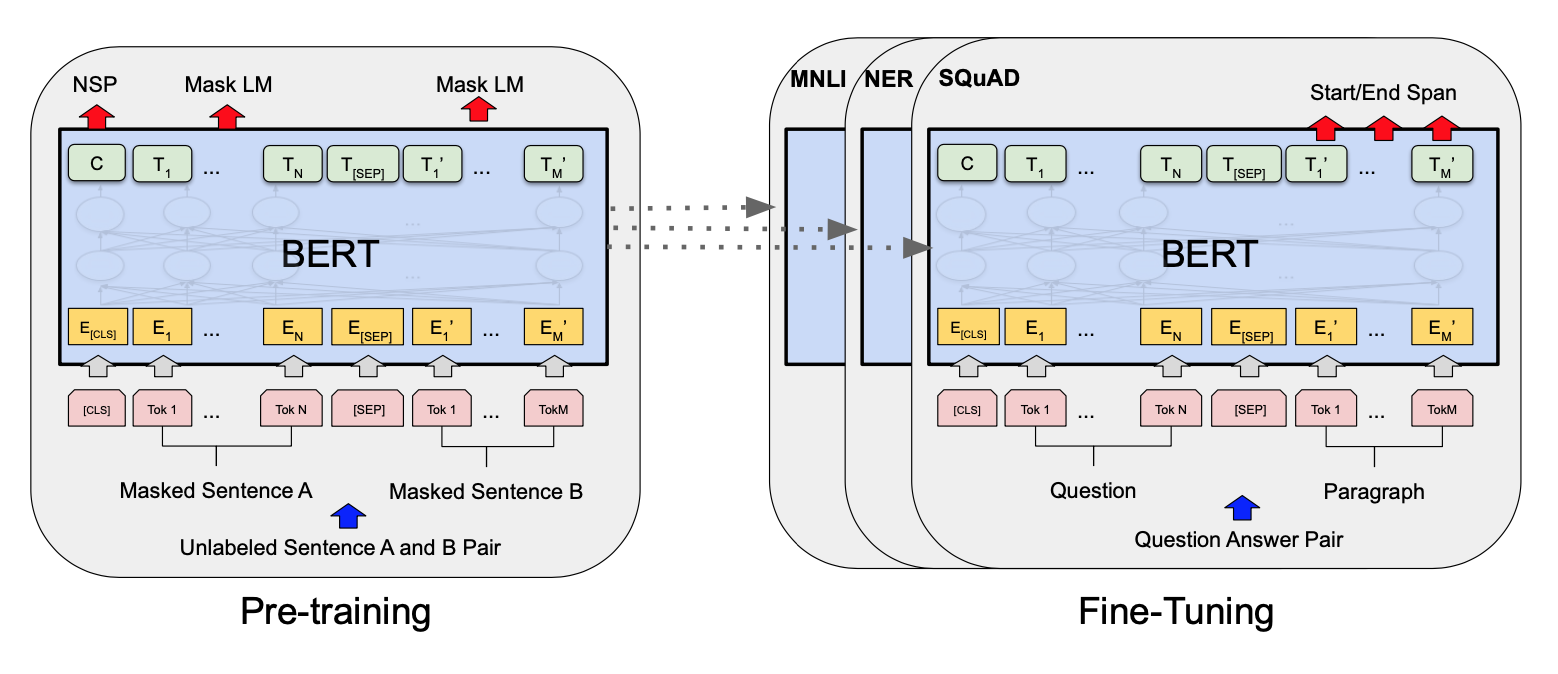

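- BERT pre-training

- Because the model has already been trained on a large dataset, the common approach for a new task is to take the pre-trained model and adjust its weights for that task (fine-tuning) instead of training a new model from scratch

- BERT is pre-trained with MLM (Masked Language Modeling) and NSP (Next Sentence Prediction)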

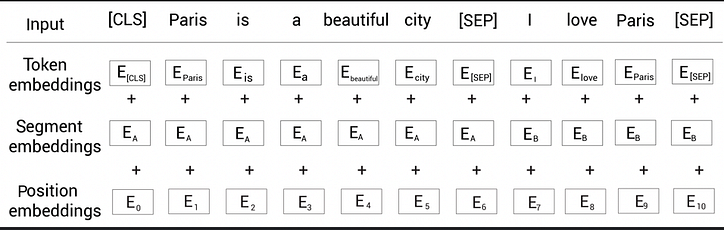

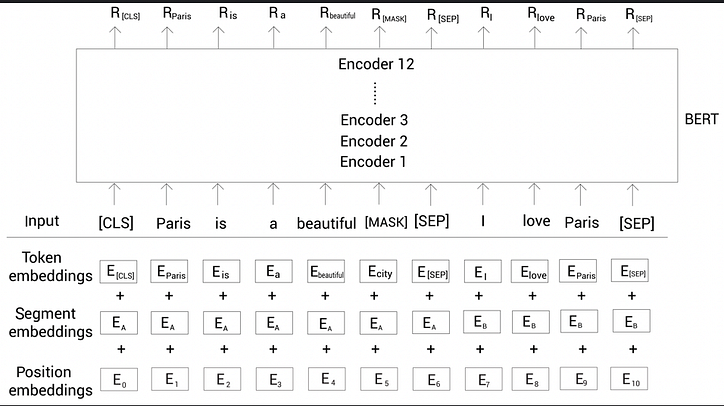

- BERT input

- Sentence A: Paris is a beautiful city

- Sentence B: I love Paris

- Token embedding

- The [CLS] token is added at the beginning of the first sentence and is used for classification tasks; the [SEP] token is added at the end of every sentence and marks where the sentence ends

- The token embedding parameters are learned during pre-training

- Segment embedding

- Used to distinguish the two sentences

- Positional embedding

- The transformer uses no recurrence mechanism and processes all words in parallel, so information about word order has to be supplied; the positional embedding provides it

- WordPiece tokenizer

- The subword tokenizer used by BERT

- Effective at handling OOV (out-of-vocabulary) words
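To illustrate how a subword tokenizer copes with OOV words, here is a minimal sketch of greedy longest-match-first splitting, the strategy WordPiece applies at tokenization time. The function name `wordpiece_tokenize` and the toy vocabulary are assumptions made for this example; a real BERT vocabulary has roughly 30,000 entries.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Split one word into subwords using greedy longest-match-first."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry a ## prefix
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk_token]  # no piece matched, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces


# Toy vocabulary (hypothetical, for illustration only).
toy_vocab = {"pre", "##train", "##ing", "paris", "love"}
print(wordpiece_tokenize("pretraining", toy_vocab))
print(wordpiece_tokenize("paris", toy_vocab))
```

A word that is missing from the vocabulary as a whole can still be represented by known pieces, which is why [UNK] is rarely needed.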

- BERT pre-training strategies

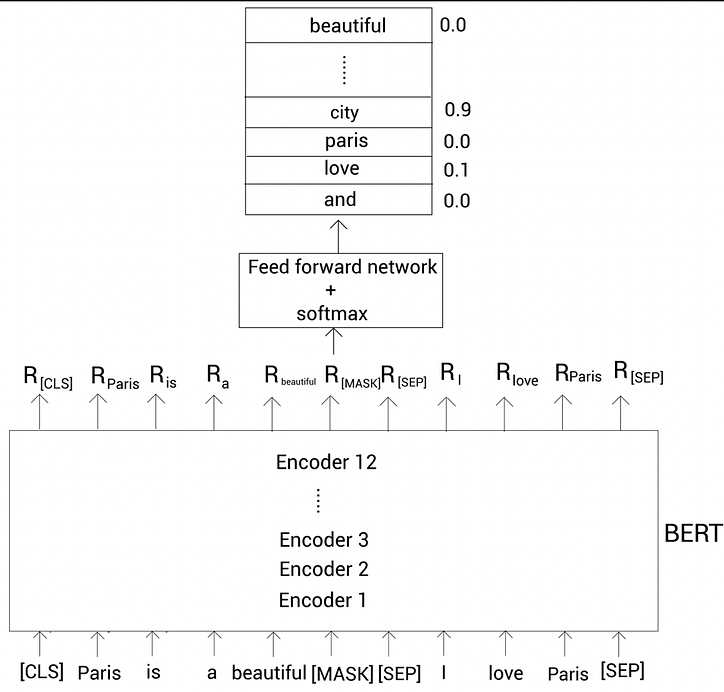

- MLM (Masked Language Modeling)

- Also called the cloze task (fill in the blank)

- Language modeling: in general, given an arbitrary sentence, the model is trained to read the words in order and predict the next word

- Auto-regressive language modeling

- Forward (left-to-right) prediction

- Backward (right-to-left) prediction

- Auto-encoding language modeling

- Uses prediction in both directions, which gives a clearer understanding of the sentence and therefore more accurate results

- 15% of the words in a given sentence are masked at random, and the model is trained to predict the masked words

- The [MASK] token is used only during pre-training and never appears in fine-tuning inputs, which creates a mismatch between pre-training and fine-tuning. The 80-10-10 rule is applied to mitigate this

- 80% of the selected 15% of tokens (actual words) are replaced with the [MASK] token

- 10% of the selected 15% of tokens (actual words) are replaced with a random token (random word)

- The remaining 10% of the selected 15% of tokens are left unchanged

- To predict a masked token, the representation R[MASK] that BERT returns for it is fed to a feed-forward network with a softmax activation, which outputs a probability for every word in the vocabulary
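The 80-10-10 rule described above can be sketched in a few lines of Python. This is a simplified illustration, not BERT's actual preprocessing code: the function name `mask_tokens`, the toy replacement vocabulary, and the choice to always mask at least one token are assumptions.

```python
import random


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Apply MLM masking with the 80-10-10 rule.

    Returns the corrupted token list and a dict {position: original token}
    holding the prediction targets.
    """
    rng = random.Random(seed)
    special = {"[CLS]", "[SEP]", "[PAD]"}
    # Special tokens are never selected for masking.
    candidates = [i for i, t in enumerate(tokens) if t not in special]
    n_to_mask = max(1, round(len(candidates) * mask_prob))
    chosen = rng.sample(candidates, n_to_mask)

    masked = list(tokens)
    labels = {}
    for i in chosen:
        labels[i] = tokens[i]
        r = rng.random()
        if r < 0.8:
            masked[i] = "[MASK]"           # 80%: replace with [MASK]
        elif r < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return masked, labels


tokens = ["[CLS]", "we", "enjoyed", "the", "game", "[SEP]",
          "turn", "the", "radio", "on", "[SEP]"]
toy_vocab = ["city", "music", "blue"]  # hypothetical replacement vocabulary
masked, labels = mask_tokens(tokens, toy_vocab)
print(masked)
print(labels)
```

The model is asked to predict the original token at every selected position, including the positions that were left unchanged or replaced with a random token.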

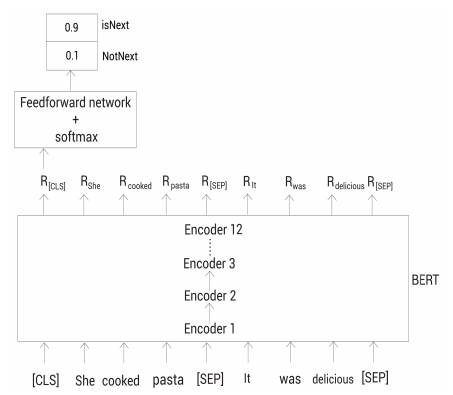

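- NSP (Next Sentence Prediction)

- A binary classification task: two sentences are fed to BERT, which predicts whether the second sentence follows the first

- It lets the model capture the relationship between two sentences, which is useful for downstream tasks such as question answering and similar-sentence detection

- The [CLS] token holds an aggregate representation of all tokens, so it carries a representation of the whole input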

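- Pre-training procedure

- BERT is pre-trained on the Toronto BookCorpus and Wikipedia datasets

- Two sentences are sampled from the corpus (for 50% of the pairs sentence B is the sentence that follows sentence A; for the other 50% it is not)

- Sentence A: We enjoyed the game

- Sentence B: Turn the radio on

- The sentences are tokenized with the WordPiece tokenizer; a [CLS] token is added at the beginning of the first sentence and a [SEP] token at the end of every sentence

tokens = [ [CLS], we, enjoyed, the, game, [SEP], turn, the, radio, on, [SEP] ]

- 15% of the tokens are selected at random and masked according to the 80-10-10 rule

tokens = [ [CLS], we, enjoyed, the, [MASK], [SEP], turn, the, radio, on, [SEP] ]

- The tokens are fed to the BERT model

- The model is trained to predict the masked tokens and, at the same time, to decide whether sentence B follows sentence A. In other words, BERT is trained on the MLM and NSP tasks simultaneously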
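The sampling step of this procedure (50% true next sentences, 50% unrelated sentences) can be sketched as follows. The corpus layout, the function name `make_nsp_example`, and the extra sentences in the toy corpus are assumptions for illustration; the segment IDs correspond to the segment embedding described earlier.

```python
import random


def make_nsp_example(documents, rng):
    """Build one NSP example: (tokens, segment_ids, is_next).

    `documents` is a list of documents; each document is a list of
    already-tokenized sentences and must contain at least two sentences.
    """
    doc_idx = rng.randrange(len(documents))
    doc = documents[doc_idx]
    sent_idx = rng.randrange(len(doc) - 1)
    sentence_a = doc[sent_idx]

    if rng.random() < 0.5:
        # Positive pair: B really is the sentence that follows A.
        sentence_b, is_next = doc[sent_idx + 1], True
    else:
        # Negative pair: B is a sentence drawn from a different document.
        others = [i for i in range(len(documents)) if i != doc_idx]
        sentence_b, is_next = rng.choice(documents[rng.choice(others)]), False

    tokens = ["[CLS]"] + sentence_a + ["[SEP]"] + sentence_b + ["[SEP]"]
    # Segment 0 covers [CLS] + A + [SEP]; segment 1 covers B + [SEP].
    segment_ids = [0] * (len(sentence_a) + 2) + [1] * (len(sentence_b) + 1)
    return tokens, segment_ids, is_next


# Hypothetical two-document toy corpus.
corpus = [
    [["we", "enjoyed", "the", "game"], ["it", "went", "to", "overtime"]],
    [["turn", "the", "radio", "on"], ["the", "song", "is", "playing"]],
]
print(make_nsp_example(corpus, random.Random(0)))
```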

- Subword tokenization algorithms

- Byte Pair Encoding (BPE)

- Byte-level Byte Pair Encoding (BBPE)

- WordPiece

- Exploring pre-trained BERT models

- Pre-training BERT from scratch is computationally expensive, so it is more practical to download and use a publicly available pre-trained BERT model

| | H=128 | H=256 | H=512 | H=768 |
|---|---|---|---|---|
| L=2 | [2/128 (BERT-Tiny)][2_128] | [2/256][2_256] | [2/512][2_512] | [2/768][2_768] |
| L=4 | [4/128][4_128] | [4/256 (BERT-Mini)][4_256] | [4/512 (BERT-Small)][4_512] | [4/768][4_768] |
| L=6 | [6/128][6_128] | [6/256][6_256] | [6/512][6_512] | [6/768][6_768] |
| L=8 | [8/128][8_128] | [8/256][8_256] | [8/512 (BERT-Medium)][8_512] | [8/768][8_768] |
| L=10 | [10/128][10_128] | [10/256][10_256] | [10/512][10_512] | [10/768][10_768] |
| L=12 | [12/128][12_128] | [12/256][12_256] | [12/512][12_512] | [12/768 (BERT-Base)][12_768] |

- How to use a pre-trained model

- Extract embeddings and use the model as a feature extractor

- Fine-tune the pre-trained BERT model for a downstream task such as text classification or question answering

- How to extract embeddings from pre-trained BERT

- Sentence: I love Paris

- Tokenize the sentence with the WordPiece tokenizer

tokens = [I, love, Paris]

- Add a [CLS] token at the start of the token list and a [SEP] token at the end

tokens = [ [CLS], I, love, Paris, [SEP] ]

- Add [PAD] tokens so that all inputs have the same length (assume a length of 7)

tokens = [ [CLS], I, love, Paris, [SEP], [PAD], [PAD] ]

- Create an attention mask so the model knows that [PAD] tokens only pad the length and are not part of the actual input

attention_mask = [1,1,1,1,1,0,0 ]

- Map every token to its unique token ID

token_ids = [101, 1045, 2293, 3000, 102, 0, 0 ]

- Feed token_ids and the attention mask to the pre-trained BERT model to obtain an embedding for each token

- The [CLS] token holds a representation of the whole sentence, but using the [CLS] representation as the sentence representation is not always a good idea. An effective alternative is to average or pool the representations of all tokens
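The input preparation steps above (special tokens, padding, attention mask, token IDs) can be reproduced with a small helper. This is a sketch in plain Python: `build_bert_inputs` is a hypothetical name, and the toy vocabulary contains only the IDs quoted in the example above. In practice a tokenizer library performs these steps.

```python
def build_bert_inputs(tokens, vocab, max_len):
    """Add [CLS]/[SEP], pad to max_len, and build the attention mask and IDs."""
    wrapped = ["[CLS]"] + tokens + ["[SEP]"]
    n_pad = max_len - len(wrapped)
    if n_pad < 0:
        raise ValueError("sequence is longer than max_len")
    padded = wrapped + ["[PAD]"] * n_pad
    # 1 marks a real token, 0 marks padding the model should ignore.
    attention_mask = [1] * len(wrapped) + [0] * n_pad
    token_ids = [vocab[t] for t in padded]
    return padded, attention_mask, token_ids


# Toy vocabulary restricted to the IDs used in the example above.
toy_vocab = {"[PAD]": 0, "[CLS]": 101, "[SEP]": 102,
             "i": 1045, "love": 2293, "paris": 3000}

padded, attention_mask, token_ids = build_bert_inputs(
    ["i", "love", "paris"], toy_vocab, max_len=7)
print(padded)
print(attention_mask)
print(token_ids)
```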

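- Fine-tuning BERT for downstream tasks

- Text classification

- When the pre-trained BERT model is fine-tuned, its weights are updated together with the classifier; when it is used as a feature extractor, only the classifier's weights are updated and the pre-trained BERT weights stay fixed

- During fine-tuning the weights can be adjusted in one of two ways

- Update the weights of the pre-trained BERT model together with the classification layer

- Update only the weights of the classification layer and not the pre-trained BERT model. This is equivalent to using the pre-trained BERT model as a feature extractor

- Natural language inference (NLI)

- In natural language inference (NLI), the model classifies whether a hypothesis is true, false, or neutral given a premise

- Question answering

- Given a question and a paragraph that contains the answer, the model extracts the answer to the question from the paragraph

- The input is a question-paragraph pair; the output is the text span that corresponds to the answer

- The model computes, for each token (word) in the paragraph, the probability of it being the start and the end of the answer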
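As a rough illustration of the last step, the sketch below turns per-token start and end scores into probabilities with a softmax and picks the most probable span whose start does not come after its end. The scores are made up, and the function names and the `max_answer_len` limit are assumptions; in a real model the scores come from the fine-tuned BERT output layer.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def best_answer_span(start_scores, end_scores, max_answer_len=10):
    """Return ((start, end), probability) of the most likely answer span."""
    start_probs = softmax(start_scores)
    end_probs = softmax(end_scores)
    best_span, best_prob = (0, 0), -1.0
    for i, p_start in enumerate(start_probs):
        # The end index must not precede the start index.
        for j in range(i, min(i + max_answer_len, len(end_probs))):
            prob = p_start * end_probs[j]
            if prob > best_prob:
                best_span, best_prob = (i, j), prob
    return best_span, best_prob


# Hypothetical scores for a four-token paragraph.
span, prob = best_answer_span([0.1, 3.0, 0.2, 0.1], [0.1, 0.2, 2.5, 0.3])
print(span, prob)
```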

- Named entity recognition (NER)

- Named entity recognition (NER) classifies named entities into predefined categories

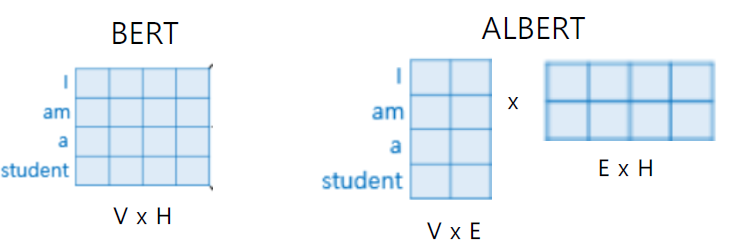

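- ALBERT

- One of BERT's main problems is that it consists of millions of parameters (about 110 million in BERT-base), which makes training hard and inference slow. Increasing the model size improves performance but runs into limits on compute resources

- ALBERT was introduced to address this. It reduces the number of parameters, and with it training and inference time, using the following two techniques

- Cross-layer parameter sharing

- One way to reduce the number of parameters in BERT. Instead of learning the parameters of every encoder layer, only the parameters of the first encoder layer are learned and then shared with all other encoder layers

- All-shared: all parameters of every sublayer of the first encoder layer are shared with the remaining encoder layers

- Shared feed-forward network: only the feed-forward network parameters of the first encoder layer are shared with the feed-forward networks of the other encoder layers

- Shared attention: only the multi-head attention parameters of the first encoder layer are shared with the other encoder layers

- Factorized embedding parameterization

- Decomposes the embedding matrix into smaller matrices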
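A short calculation shows why factorizing the embedding matrix saves parameters: a V x H matrix is replaced by a V x E matrix followed by an E x H projection. The sizes below (V = 30,000, H = 768, E = 128) are assumptions chosen for illustration, and `embedding_params` is a hypothetical helper.

```python
def embedding_params(vocab_size, hidden_size, embedding_size=None):
    """Count embedding parameters, with or without factorization."""
    if embedding_size is None:
        # Direct embedding: one V x H matrix.
        return vocab_size * hidden_size
    # Factorized: a V x E matrix followed by an E x H projection.
    return vocab_size * embedding_size + embedding_size * hidden_size


V, H, E = 30000, 768, 128  # illustrative sizes, not taken from the notes
direct = embedding_params(V, H)
factorized = embedding_params(V, H, E)
print(direct, factorized, round(direct / factorized, 2))
```

With these sizes the embedding parameters drop from 23,040,000 to 3,938,304.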


- Training the ALBERT model

- ALBERT uses MLM but replaces the NSP task with sentence order prediction (SOP)

- Using NSP in pre-training turned out not to be very useful, and it is not a difficult task compared to MLM

- NSP combines topic prediction and coherence prediction into a single task

- SOP considers only coherence between sentences, not topic prediction

- Sentence order prediction

- A binary classification task that decides whether the order of a given pair of sentences has been swapped (positive/negative); NSP, in contrast, is trained to predict whether a pair of sentences is isNext or notNext

- As with BERT, a pre-trained ALBERT model can be fine-tuned. It is a good alternative to BERT

- RoBERTa

- The authors found that BERT was undertrained and changed how the model is pre-trained

- Dynamic masking instead of static masking in the MLM task

- The NSP task is removed; pre-training uses the MLM task only

- More training data

- In addition to the Toronto BookCorpus and English Wikipedia used by BERT, CC-News, OpenWebText, and Stories are used (BERT: 16GB, RoBERTa: 160GB)

- Training with a larger batch size

- BERT: pre-trained for 1M steps with a batch size of 256; RoBERTa: pre-trained for 300K or 500K steps with a batch size of 8,000

- A larger batch size speeds up training and improves model performance

- BBPE tokenizer

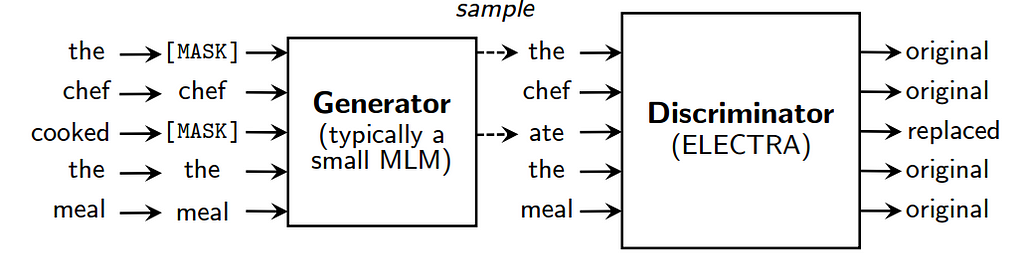

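- ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately)

- Trained with the replaced token detection task instead of MLM and NSP

- Replaced token detection

- The tokens selected for masking are replaced with other tokens, and the model decides whether each token is the original or a replacement

- One problem with the MLM task is that [MASK] tokens are used during pre-training but not during fine-tuning, which can create a token mismatch between pre-training and fine-tuning

- Tokens are masked at random and fed to the generator

- The input tokens are replaced with the tokens produced by the generator, and the result is fed to the discriminator

- The discriminator decides whether each given token is original or not

- After training, the generator is discarded and the discriminator is used as the ELECTRA model

- BERT's MLM task masks only 15% of the tokens, so its training signal comes mainly from predicting those 15%. ELECTRA is trained to judge whether each given token is original, so it learns from all tokens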
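The discriminator's targets in replaced token detection can be sketched as a per-token binary label, with a binary cross-entropy loss averaged over every position. The example sentence, the discriminator probabilities, and both function names are hypothetical.

```python
import math


def replaced_token_labels(original_tokens, generator_output):
    """Label each position: 1 if the token was replaced, 0 if it is original."""
    return [int(o != g) for o, g in zip(original_tokens, generator_output)]


def discriminator_loss(prob_replaced, labels, eps=1e-12):
    """Mean binary cross-entropy over all token positions."""
    losses = [
        -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
        for p, y in zip(prob_replaced, labels)
    ]
    return sum(losses) / len(losses)


original = ["the", "chef", "cooked", "the", "meal"]
corrupted = ["the", "chef", "ate", "the", "meal"]  # generator replaced "cooked"
labels = replaced_token_labels(original, corrupted)
print(labels)

# Hypothetical discriminator outputs: probability that each token was replaced.
print(discriminator_loss([0.1, 0.1, 0.9, 0.1, 0.1], labels))
```

Because the loss covers every position rather than only the masked 15%, each training example provides a signal for all of its tokens.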

- SpanBERT

- Mainly used for tasks that predict a span of text, such as question answering

- Instead of masking individual tokens at random, it masks random contiguous spans of tokens

- Trained with MLM and SBO (Span Boundary Objective)

- To predict a masked token, SBO does not use the representation of the masked token itself; it uses only the representations of the tokens at the span boundaries, together with the position embedding of the masked token, which encodes its relative position

- MLM predicts a masked token from the representation of that token alone, while SBO predicts it from the span boundary tokens and the position embedding of the masked token

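- The problem with using pre-trained BERT is that it is computationally expensive and very hard to run with limited resources

- Pre-trained BERT has many parameters and slow inference, which makes it even harder to use on edge devices such as mobile phones

- To address this, knowledge distillation transfers knowledge from a large pre-trained BERT to a small BERT

- Knowledge distillation

- A model compression technique that trains a small model to reproduce the behavior of a large pre-trained model

- Also called teacher-student learning (teacher: the large pre-trained model, student: the small model)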

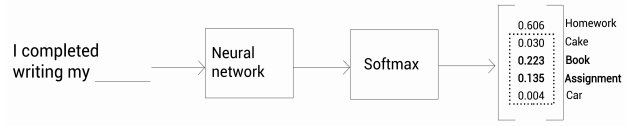

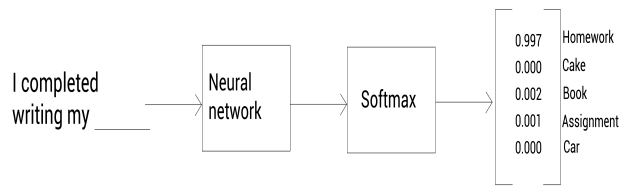

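- Dark knowledge: the useful information that can be extracted from the probability distribution returned by the network, beyond simply picking the word with the highest probability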

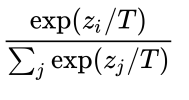

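- Softmax temperature

- Applying a softmax temperature in the output layer smooths the probability distribution

- T is the temperature; T=1 gives the standard softmax function. Increasing T makes the probability distribution softer and exposes more information about the other classes

- As a result, the softmax temperature gives access to dark knowledge. The teacher network is pre-trained with a softmax temperature to obtain dark knowledge, which is then transferred from the teacher to the student through knowledge distillation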
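The effect of the temperature is easy to see numerically. The sketch below divides the logits by T before the softmax, P_i = exp(z_i / T) / sum_j exp(z_j / T); the logits are made up for illustration.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Softmax over logits divided by the temperature T."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [4.0, 1.0, 0.5]  # hypothetical teacher logits for three classes
for T in (1.0, 2.0, 5.0):
    print(T, [round(p, 3) for p in softmax_with_temperature(logits, T)])
```

As T grows, probability mass moves from the top class to the others, which is the additional information about the other classes that the student can learn from.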

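- Training the student network

- The student network is not pre-trained; only the teacher network is pre-trained with a softmax temperature

- Soft target: the output of the teacher network; soft prediction: the prediction made by the student network

- Distillation loss: the cross-entropy loss between the soft target and the soft prediction

- Hard target: the label, with 1 for the correct answer and 0 for everything else

- Hard prediction: the probability distribution predicted by the student network with softmax temperature = 1

- Student loss: the cross-entropy loss between the hard target and the hard prediction

- The final loss is the weighted sum of the student loss and the distillation loss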
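Putting these definitions together, a minimal sketch of the final loss could look like this. The weighting scheme (`alpha` for the student loss, `1 - alpha` for the distillation loss), the temperature value, and the logits are assumptions; the notes only state that the final loss is a weighted sum.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target_probs, predicted_probs, eps=1e-12):
    return -sum(t * math.log(p + eps) for t, p in zip(target_probs, predicted_probs))


def total_distillation_loss(teacher_logits, student_logits, label_index,
                            temperature=2.0, alpha=0.5):
    # Distillation loss: soft target (teacher) vs. soft prediction (student), both at T.
    soft_target = softmax(teacher_logits, temperature)
    soft_prediction = softmax(student_logits, temperature)
    distillation = cross_entropy(soft_target, soft_prediction)

    # Student loss: one-hot hard target vs. hard prediction (student at T = 1).
    hard_target = [1.0 if i == label_index else 0.0 for i in range(len(student_logits))]
    hard_prediction = softmax(student_logits, 1.0)
    student = cross_entropy(hard_target, hard_prediction)

    # Weighted sum; this particular alpha weighting is an assumption.
    return alpha * student + (1 - alpha) * distillation


teacher = [4.0, 1.0, 0.5]  # hypothetical logits
print(total_distillation_loss(teacher, [3.5, 1.2, 0.4], label_index=0))
```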

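- DistilBERT: the knowledge-distilled version of BERT

- The student BERT is trained on the same dataset that was used to pre-train the teacher BERT (BERT-base)

- Trained with the MLM task only

- Uses dynamic masking

- Trained with a large batch size

- Uses the distillation loss, the student loss, and the cosine embedding loss

- Cosine embedding loss: measures the distance between the vectors output by the teacher and student BERT. Minimizing it makes the student's embeddings more accurate and closer to the teacher's embeddings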
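One common way to write a cosine embedding loss is one minus the cosine similarity of the two vectors, so the loss is 0 when the student vector points in the same direction as the teacher vector. The sketch below uses this form as an assumption; the vectors are made up.

```python
import math


def cosine_embedding_loss(teacher_vec, student_vec):
    """1 - cosine similarity between the teacher and student vectors."""
    dot = sum(t * s for t, s in zip(teacher_vec, student_vec))
    norm_t = math.sqrt(sum(t * t for t in teacher_vec))
    norm_s = math.sqrt(sum(s * s for s in student_vec))
    return 1.0 - dot / (norm_t * norm_s)


print(cosine_embedding_loss([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))  # same direction
print(cosine_embedding_loss([1.0, 0.0], [0.0, 1.0]))            # orthogonal
```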


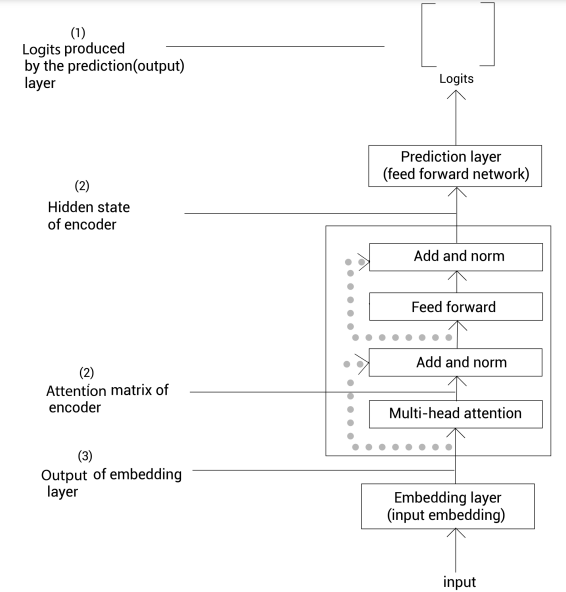

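- TinyBERT

- In addition to transferring knowledge from the teacher's output layer (prediction layer) to the student, TinyBERT also transfers knowledge from the embedding layer and several encoder layers, so the student learns more information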

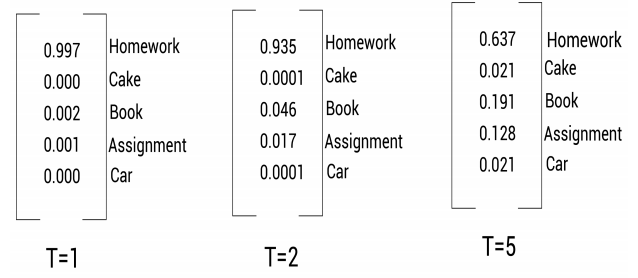

- TinyBERT knowledge distillation

- Transformer layer (encoder layer)

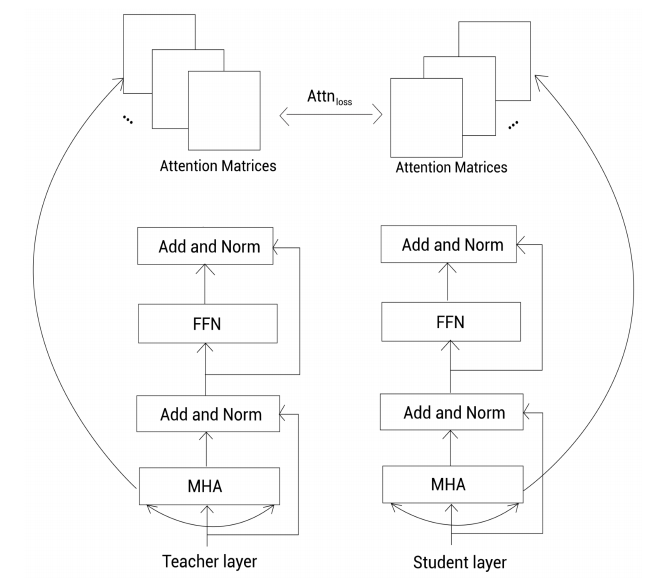

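- Attention-based distillation

- Transfers knowledge about the attention matrices from the teacher BERT to the student BERT. Attention matrices contain useful information such as syntax and coreference, which helps in understanding language

- The student network is trained by minimizing the mean squared error between the student's attention matrices and the teacher BERT's attention matrices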

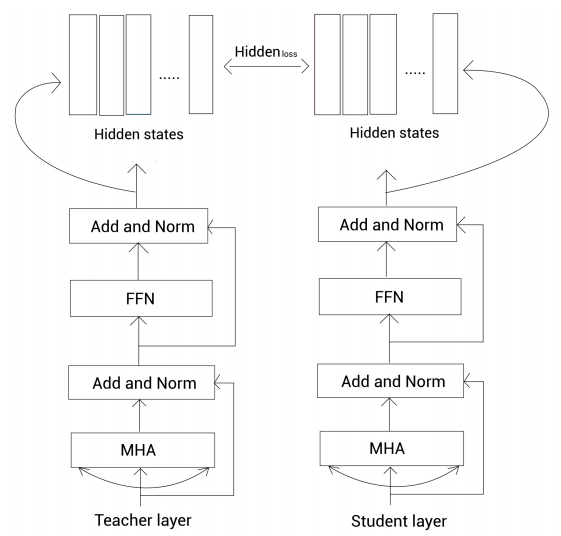

- Hidden-state-based distillation

- Distillation is performed by minimizing the mean squared error between the teacher's hidden states and the student's hidden states

- Embedding layer (input layer)

- Transfers knowledge from the teacher's embedding layer to the student's embedding layer

- Embedding layer distillation is performed by minimizing the mean squared error between the student's embeddings and the teacher's embeddings

- Prediction layer (output layer)

- Transfers the logits of the final output layer produced by the teacher BERT to the student BERT. This is similar to DistilBERT's distillation loss

- Prediction layer distillation is performed by minimizing the cross-entropy loss between the soft target and the soft prediction

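- Training TinyBERT

- General distillation

- A large pre-trained BERT (BERT-base) is used as the teacher, and distillation transfers its knowledge to a small student BERT (TinyBERT)

- After distillation the student BERT holds the teacher's knowledge; this pre-trained student BERT is called the general TinyBERT

- Task-specific distillation

- The general TinyBERT (pre-trained TinyBERT) is fine-tuned for a specific task

- Unlike DistilBERT, TinyBERT can apply distillation in the fine-tuning stage as well as in the pre-training stage

- A pre-trained BERT-base model is fine-tuned for the specific task and then used as the teacher; the general TinyBERT is the student

- After distillation the general TinyBERT holds the teacher's task-specific knowledge (from the fine-tuned BERT-base); this task-specific TinyBERT is called the fine-tuned TinyBERT

| | General distillation (pre-training) | Task-specific distillation (fine-tuning) |
|---|---|---|
| Teacher | Pre-trained BERT-base | Fine-tuned BERT-base |
| Student | Small BERT | General TinyBERT (pre-trained TinyBERT) |
| Result | After distillation the student BERT has received the teacher's knowledge; this pre-trained student BERT is the general TinyBERT | After distillation the general TinyBERT has received task-specific knowledge from the teacher; this is the fine-tuned TinyBERT |

- Distillation in the fine-tuning stage generally requires a larger task-specific dataset, so data augmentation is used to obtain it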

- Transferring knowledge from BERT to neural networks

- Text summarization

- The process of condensing a long document into a few short sentences

- Extractive summarization

- The process of summarizing by extracting only the important sentences from the given text: from a long document with many sentences, only the sentences that carry its essential meaning are extracted to form the summary

- Abstractive summarization

- Produces the summary by paraphrasing the given text. Paraphrasing means re-expressing a text in different words to convey its meaning more clearly

- The given text is rewritten as new sentences using different words that keep its meaning

- Fine-tuning BERT for text summarization

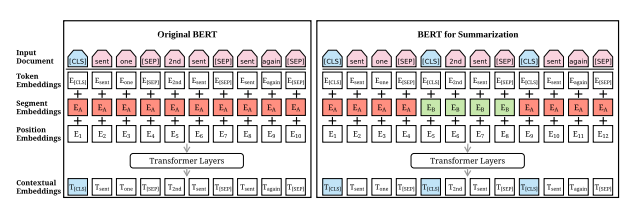

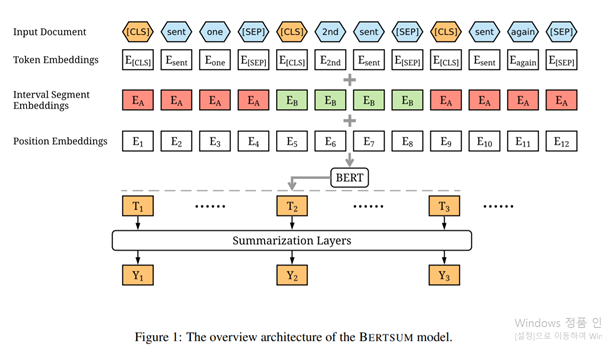

- Extractive summarization with BERT

- A [CLS] token is added at the beginning of every sentence, so the representation of each [CLS] token can be used as the representation of its sentence

- The BERT model with this modified input format is called BERTSUM

- Instead of training BERT from scratch, a pre-trained BERT model is trained further with the modified input format, so that the representation of each [CLS] token can be used as the representation of the corresponding sentence

- BERTSUM with a classifier

- Abstractive summarization with BERT

- Abstractive summarization uses the transformer's encoder-decoder architecture

- The pre-trained BERTSUM is used as the encoder

- In this transformer the encoder is the pre-trained BERTSUM model but the decoder is randomly initialized, which creates a mismatch during fine-tuning: the pre-trained encoder may overfit while the decoder may underfit

- To address this, separate Adam optimizers are used for the encoder and the decoder; the encoder is given a lower learning rate with a smoother decay

- Understanding the ROUGE evaluation metric

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): an evaluation metric for the text summarization task

- ROUGE-N, ROUGE-L, ROUGE-W, ROUGE-S, ROUGE-SU

- ROUGE-N

- ROUGE-N is the n-gram recall between the candidate summary (predicted summary) and the reference summary (actual summary)

- Recall = number of overlapping n-grams / number of n-grams in the reference summary

- Candidate summary: Machine learning is seen as a subset of artificial intelligence.

- Reference summary: Machine learning is a subset of artificial intelligence.

- ROUGE-1: unigram recall between the candidate summary (predicted summary) and the reference summary (actual summary)

- Candidate unigrams: machine, learning, is, seen, as, a, subset, of, artificial, intelligence

- Reference unigrams: machine, learning, is, a, subset, of, artificial, intelligence

- ROUGE-1 = 8/8 = 1

- ROUGE-2: bigram recall between the candidate summary (predicted summary) and the reference summary (actual summary)

- Candidate bigrams: (machine, learning), (learning, is), (is, seen), (seen, as), (as, a), (a, subset), (subset, of), (of, artificial), (artificial, intelligence)

- Reference bigrams: (machine, learning), (learning, is), (is, a), (a, subset), (subset, of), (of, artificial), (artificial, intelligence)

- ROUGE-2 = 6/7 ≈ 0.86
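The ROUGE-N recall above can be checked with a few lines of Python. This sketch uses whitespace tokenization with the final period removed and the reference summary "Machine learning is a subset of artificial intelligence."; the function names are hypothetical, and evaluation libraries apply more careful preprocessing.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def rouge_n(candidate, reference, n):
    """n-gram recall: overlapping n-grams / n-grams in the reference."""
    cand_tokens = candidate.lower().replace(".", "").split()
    ref_tokens = reference.lower().replace(".", "").split()
    cand_counts = Counter(ngrams(cand_tokens, n))
    ref_counts = Counter(ngrams(ref_tokens, n))
    # Each reference n-gram is matched at most as often as it occurs in the candidate.
    overlap = sum(min(count, cand_counts[gram]) for gram, count in ref_counts.items())
    return overlap / sum(ref_counts.values())


candidate = "Machine learning is seen as a subset of artificial intelligence."
reference = "Machine learning is a subset of artificial intelligence."
print(rouge_n(candidate, reference, 1))  # 8 of 8 reference unigrams are matched
print(rouge_n(candidate, reference, 2))  # 6 of 7 reference bigrams are matched
```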

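- ROUGE-L

- Based on the longest common subsequence (LCS). The LCS of two sequences is the common subsequence of maximum length

- An LCS shared by the candidate and reference summaries indicates that they match; the longer the LCS, the closer the match

- ROUGE-L is measured with the F-measure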
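A minimal sketch of ROUGE-L computes the LCS length with dynamic programming and combines LCS-based precision and recall into an F-measure. The default `beta = 1.0` (equal weight for precision and recall) is an assumption, as are the function names; the example sentences are the candidate and reference from the ROUGE-N section, using "Machine learning is a subset of artificial intelligence" as the reference.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            if x == y:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[-1][-1]


def rouge_l(candidate_tokens, reference_tokens, beta=1.0):
    """F-measure built from LCS-based precision and recall."""
    lcs = lcs_length(candidate_tokens, reference_tokens)
    if lcs == 0:
        return 0.0
    recall = lcs / len(reference_tokens)
    precision = lcs / len(candidate_tokens)
    return (1 + beta ** 2) * precision * recall / (recall + beta ** 2 * precision)


cand = "machine learning is seen as a subset of artificial intelligence".split()
ref = "machine learning is a subset of artificial intelligence".split()
print(lcs_length(cand, ref))
print(rouge_l(cand, ref))
```

Here the whole reference is a subsequence of the candidate, so recall is 8/8 and precision is 8/10.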

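- Understanding M-BERT

- M-BERT (multilingual BERT) produces representations for many languages, not only English

- M-BERT is trained with MLM and NSP on the Wikipedia text of 104 languages, not only English Wikipedia (the amount of text differs by language, so sampling is used: high-resource languages are undersampled and low-resource languages are oversampled)

- M-BERT learns context across languages without any paired or language-aligned training data => the key point is that M-BERT was trained without any cross-lingual objective

- Characteristics

- M-BERT's generalizability does not depend on vocabulary overlap

- M-BERT's generalizability depends on typological and language similarity

- M-BERT can handle code-switched text but not transliterated text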

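- XLM (Cross-lingual Language Model)

- A BERT trained with cross-lingual objectives. XLM outperforms M-BERT at learning multilingual representations

- Uses monolingual datasets and parallel datasets (cross-lingual datasets)

- Training methods

- Causal language modeling (CLM)

- Predicts the probability of the current word given the set of previous words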

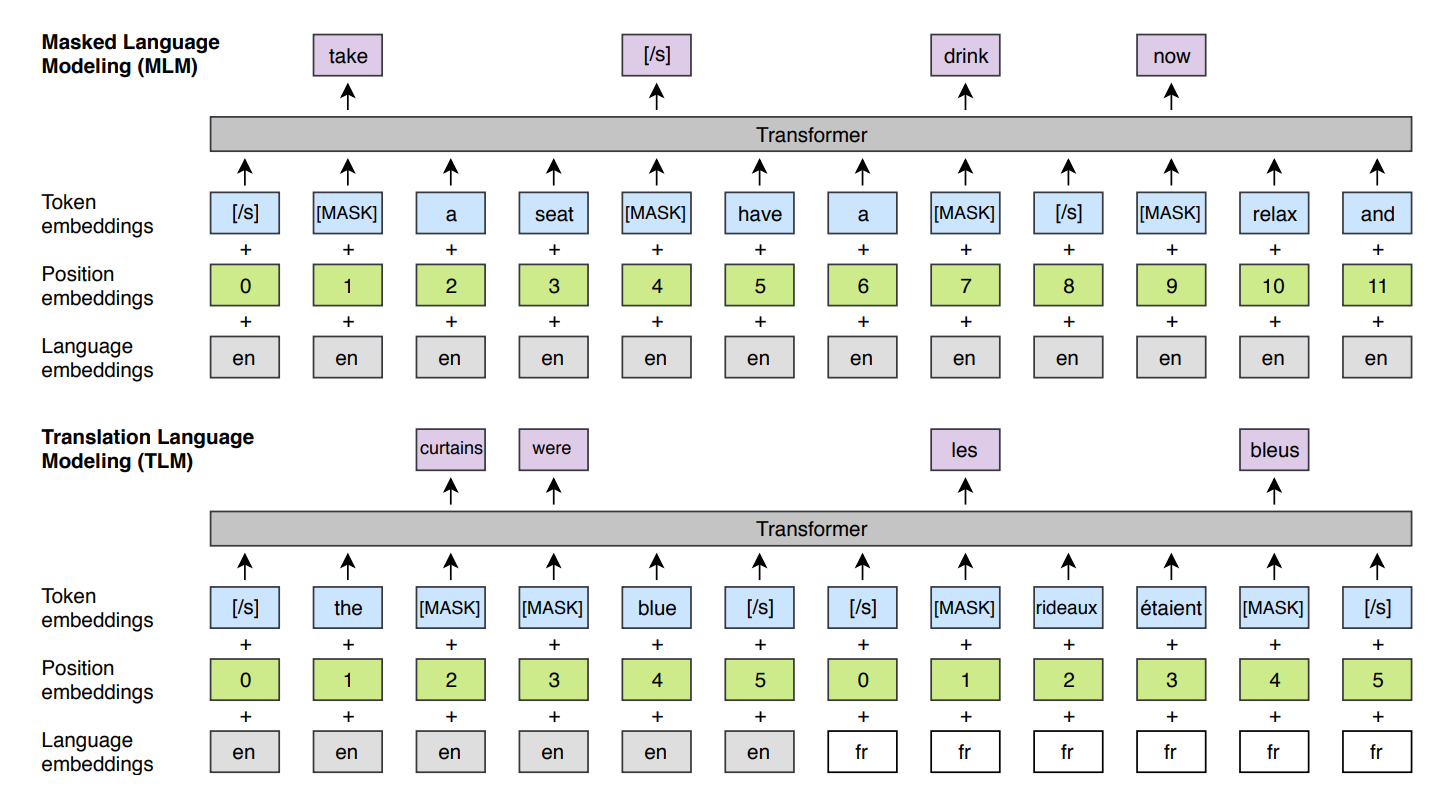

- Masked language modeling (MLM)

- 15% of the tokens are masked (applying the 80-10-10 rule) and the masked tokens are predicted

- Translation language modeling (TLM)

- Trained on parallel cross-lingual data consisting of the same text in two different languages

- A language embedding is used to indicate the language, and the two sentences use separate position embeddings

- XLM pre-training

- Using CLM

- Using MLM

- Using MLM combined with TLM

- When XLM is trained with CLM or MLM, a monolingual dataset is used

- For TLM, a parallel dataset is used

- When MLM and TLM are used together, the objective alternates between MLM and TLM

- A pre-trained XLM can be used directly or fine-tuned on downstream tasks, just like BERT

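- Understanding XLM-R

- XLM-RoBERTa, a state-of-the-art technique for learning cross-lingual representations

- An extension of XLM with several changes to improve performance

- Trained with MLM only, without TLM, so only monolingual datasets are needed

- Uses 2.5TB of Common Crawl data

- Language-specific BERT

- Learning sentence representations with Sentence-BERT

- A pre-trained BERT or BERT variant used to obtain fixed-length sentence representations

- Vanilla BERT can also produce sentence representations, but its inference time is high; Sentence-BERT was designed to improve on this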

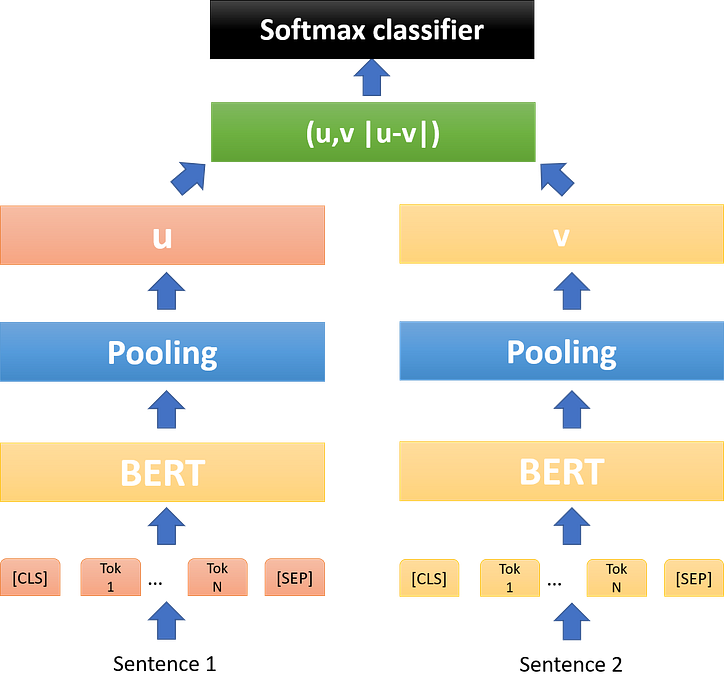

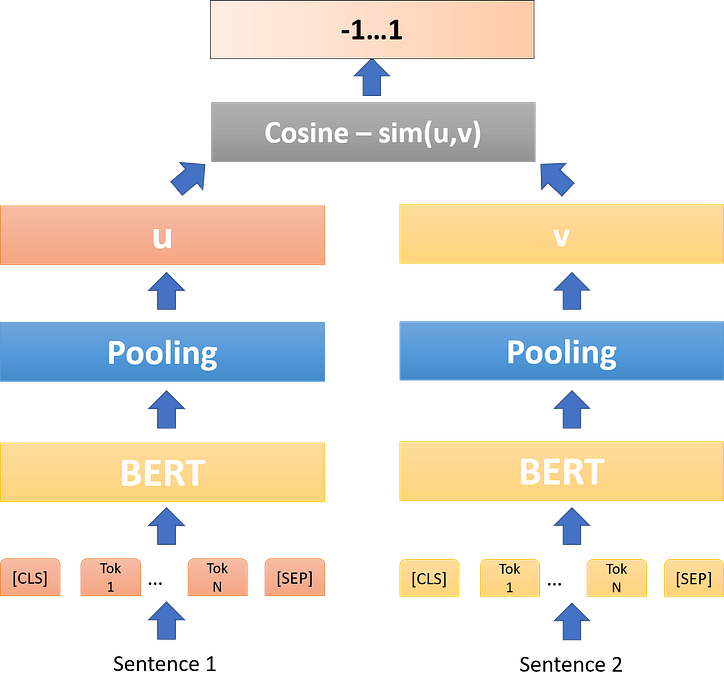

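- Widely used for sentence pair classification and for computing the similarity between two sentences

- The problem with using the [CLS] token representation as the sentence representation is that it is not accurate, especially when pre-trained BERT is used directly without fine-tuning

- The sentence representation is computed by pooling the representations of all tokens

- Mean pooling: carries the meaning of all words (tokens)

- Max pooling: carries the meaning of the important words (tokens)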
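The two pooling strategies can be sketched in plain Python as below. Token embeddings are represented as lists of floats and the values are made up; excluding padded positions through an attention mask is a common practice that is assumed here rather than stated in the notes.

```python
def mean_pooling(token_embeddings, attention_mask):
    """Average the embeddings of all non-padding tokens, dimension by dimension."""
    kept = [vec for vec, keep in zip(token_embeddings, attention_mask) if keep]
    return [sum(column) / len(kept) for column in zip(*kept)]


def max_pooling(token_embeddings, attention_mask):
    """Take the element-wise maximum over all non-padding tokens."""
    kept = [vec for vec, keep in zip(token_embeddings, attention_mask) if keep]
    return [max(column) for column in zip(*kept)]


# Three real tokens and one [PAD] token, with 2-dimensional toy embeddings.
embeddings = [[1.0, 4.0], [3.0, 2.0], [5.0, 0.0], [100.0, 100.0]]
mask = [1, 1, 1, 0]
print(mean_pooling(embeddings, mask))
print(max_pooling(embeddings, mask))
```

Mean pooling blends every token into the result, while max pooling keeps only the strongest value in each dimension, which matches the descriptions above.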


- Understanding Sentence-BERT

- Not trained from scratch: a pre-trained BERT (or a variant) is fine-tuned to produce sentence representations

- In other words, Sentence-BERT is a BERT model fine-tuned for computing sentence representations

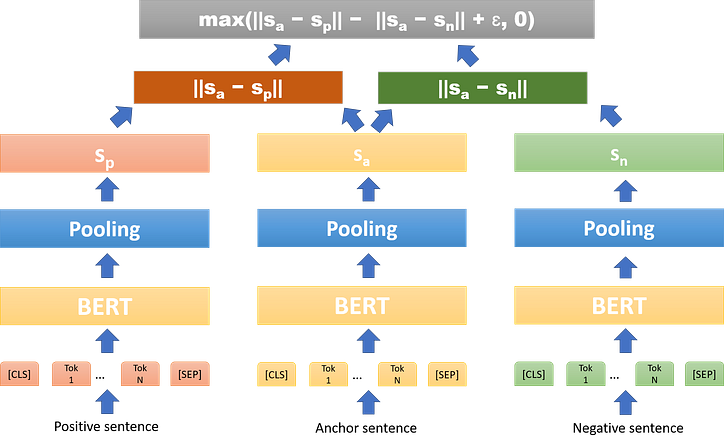

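- Siamese network

- Sentence pair classification task: classifies whether two sentences are similar or not

- Sentence pair regression task: predicts the semantic similarity between two sentences

- Triplet network

- Computes representations such that the similarity between the anchor sentence and the positive sentence is high and the similarity between the anchor sentence and the negative sentence is low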
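A common way to express the triplet objective is a margin-based loss on distances: the anchor should be closer to the positive than to the negative by at least a margin. The sketch below uses Euclidean distance and a margin of 1.0, both of which are assumptions for illustration, as are the toy sentence vectors.

```python
import math


def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """max(d(anchor, positive) - d(anchor, negative) + margin, 0)."""
    return max(euclidean(anchor, positive) - euclidean(anchor, negative) + margin, 0.0)


anchor, positive, negative = [0.0, 0.0], [0.0, 1.0], [3.0, 4.0]
print(triplet_loss(anchor, positive, negative))  # positive is already much closer
print(triplet_loss(anchor, negative, positive))  # roles swapped: large loss
```

The loss is zero once the negative is farther from the anchor than the positive by more than the margin, so training only adjusts triplets that violate this.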

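- Domain-specific BERT

- BERT trained on a corpus from a specific domain

- Learning language and video representations with VideoBERT

- VideoBERT learns video representations together with language representations. It is used for image caption generation, video captioning, predicting the next frames of a video, and similar tasks

- Pre-training

- Instructional videos are used for pre-training. Language tokens and visual tokens are extracted from the videos

- Cloze task

- Linguistic-visual alignment

- Predicts whether the language and visual tokens are temporally aligned, that is, whether the text (language tokens) matches the video (visual tokens)

- The [CLS] token representation is used to predict whether the language and visual tokens are aligned

- VideoBERT applications

- Predicting the next visual tokens

- Given visual tokens as input, predicts the top three next visual tokens

- Text-to-video generation

- Given text, generates the corresponding visual tokens

- Video captioning

- Given a video as input, generates captions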

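- Understanding BART

- A denoising autoencoder based on the transformer architecture, introduced by Facebook AI

- Trained by reconstructing corrupted text

- A pre-trained BART can be fine-tuned for a variety of downstream tasks

- Best suited to text generation

- Performance comparable to RoBERTa

- Architecture

- A transformer model with an encoder and a decoder

- The corrupted text is fed to the encoder -> the encoder learns a representation of the given text and passes it to the decoder -> the decoder takes the representation produced by the encoder and reconstructs the original, uncorrupted text

- The encoder is bidirectional; the decoder is unidirectional

- Trained to minimize the reconstruction loss, which is the cross-entropy loss between the original text and the text produced by the decoder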

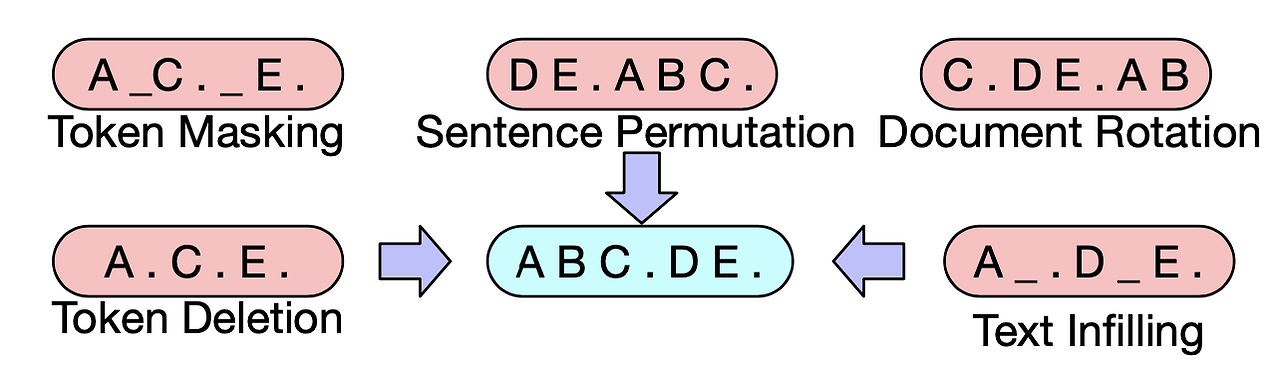

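- Noising techniques

- Token masking: a few tokens are masked at random

- Token deletion: some tokens are deleted at random

- Text infilling: a contiguous span of tokens is masked with a single [MASK] token

- Sentence permutation: the order of the sentences is shuffled at random

- Document rotation: a word (token) that could serve as the start of the document is chosen at random, and all words before it are moved to the end of the document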
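The five noising techniques can be sketched as small functions over token or sentence lists. This is a simplified illustration: the function names and probabilities are assumptions, and the span position for text infilling and the rotation point are passed in explicitly instead of being sampled.

```python
import random


def token_masking(tokens, rng, prob=0.3):
    """Replace each token with [MASK] with probability `prob`."""
    return ["[MASK]" if rng.random() < prob else t for t in tokens]


def token_deletion(tokens, rng, prob=0.3):
    """Drop each token with probability `prob`."""
    return [t for t in tokens if rng.random() >= prob]


def text_infilling(tokens, start, length):
    """Replace the span tokens[start:start + length] with a single [MASK]."""
    return tokens[:start] + ["[MASK]"] + tokens[start + length:]


def sentence_permutation(sentences, rng):
    """Shuffle the order of the sentences."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled


def document_rotation(tokens, start):
    """Start the document at tokens[start]; earlier tokens move to the end."""
    return tokens[start:] + tokens[:start]


rng = random.Random(0)
tokens = ["i", "love", "paris", "in", "the", "spring"]
print(token_masking(tokens, rng))
print(token_deletion(tokens, rng))
print(text_infilling(tokens, 1, 3))
print(sentence_permutation(["s1", "s2", "s3"], rng))
print(document_rotation(tokens, 2))
```

In every case the decoder's training target is the original, uncorrupted text.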

- KoBERT

- KoGPT2

- KoBART