Extracting text from an image using Ocropus

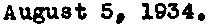

In the last post, I described a way to crop an image down to just the part containing text. The end product was something like this:

In this post, I'll explain how to extract text from images like these using the Ocropus OCR library. Plain text has a number of advantages over images of text: you can search it, it can be stored more compactly, and it can be reformatted to fit seamlessly into web UIs.

I don't want to get too bogged down in the details of why I went with Ocropus over its more famous cousin, Tesseract, at least not in this post. The gist is that I found it to be:

- more transparent about what it was doing.

- more hackable

- more robust to character segmentation issues

This post is a bit long, but there are lots of pictures to help you get through it. Be strong!

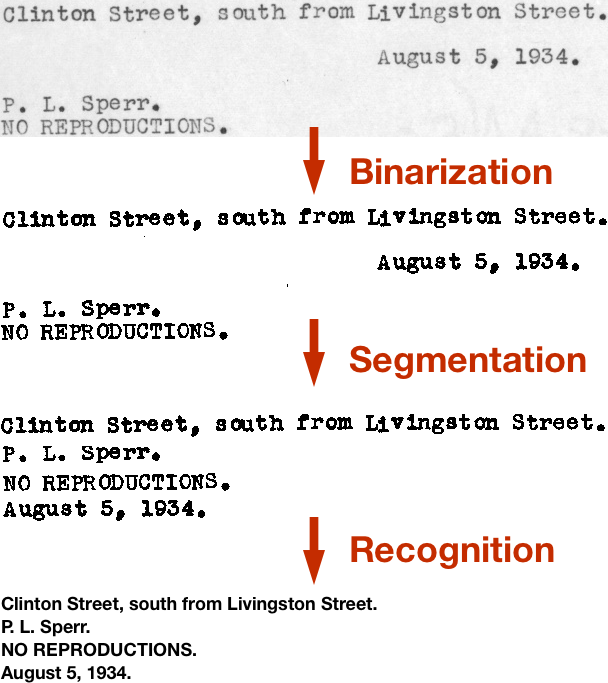

Ocropus (or Ocropy) is a collection of tools for extracting text from scanned images. The basic pipeline looks like this:

I'll talk about each of these steps in this post. But first, we need to install Ocropus!

Installation


Ocropus uses the Scientific Python stack. To run it, you'll need scipy, PIL, numpy, OpenCV and matplotlib. Setting this up is a bit of a pain, but you'll only ever have to do it once (at least until you get a new computer).

On my Mac running Yosemite, I set up brew, then ran:

brew install python

brew install opencv

brew install homebrew/python/scipy

To make this last step work, I had to follow the workaround described in this comment:

cd /usr/local/Cellar/python/2.7.6_1/Frameworks/Python.framework/Versions/2.7/lib/python2.7/site-packages

rm cv.py cv2.so

ln -s /usr/local/Cellar/opencv/2.4.9/lib/python2.7/site-packages/cv.py cv.py

ln -s /usr/local/Cellar/opencv/2.4.9/lib/python2.7/site-packages/cv2.so cv2.so

Then you can follow the instructions on the ocropy site. You'll know you have things working when you can run ocropus-nlbin --help.
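Before running the Ocropus tools, it can save time to confirm that the Python packages listed above are importable. The snippet below is a small convenience sketch, not part of Ocropus. It assumes a Python 3 interpreter (for `importlib.util.find_spec`), and `missing_modules` is a hypothetical helper name. Note that OpenCV is imported as `cv2` and PIL as `PIL`.

```python
import importlib.util

# Import names for the packages named in the text. These are the names used
# in "import" statements, which differ from the package names for OpenCV.
REQUIRED = ["scipy", "PIL", "numpy", "cv2", "matplotlib"]


def missing_modules(names=REQUIRED):
    """Return the entries of `names` that this interpreter cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("missing:", ", ".join(missing) if missing else "none")
```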


The first step in the Ocropus pipeline is binarization: the conversion of the source image from grayscale to black and white.

There are many ways to do this, some of which you can read about in this presentation. Ocropus uses a form of adaptive thresholding, where the cutoff between light and dark can vary throughout the image. This is important when working with scans from books, where there can be variation in light level over the page.
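To make the idea of a cutoff that varies across the image concrete, here is a minimal sketch of one simple form of adaptive thresholding: compare each pixel with the mean of its neighborhood. This is an illustration only and is not the algorithm ocropus-nlbin implements. The function name and the `window` and `offset` parameters are assumptions made for the example.

```python
import numpy as np
from scipy import ndimage


def adaptive_threshold(gray, window=31, offset=0.05):
    """Binarize a grayscale image (floats in [0, 1]) against a local mean.

    A pixel counts as ink (True) when it is darker than the mean of its
    window-by-window neighborhood by more than `offset`.
    """
    gray = np.asarray(gray, dtype=float)
    # Local brightness estimate; "nearest" repeats edge pixels at the border.
    local_mean = ndimage.uniform_filter(gray, size=window, mode="nearest")
    return gray < local_mean - offset
```

With a page that is darker on one side than the other, a single global cutoff would either lose faint text on the bright side or mark the dark background as ink. The local comparison sidesteps that, because the cutoff follows the lighting.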

Also lumped into this step is skew estimation, which tries to rotate the image by small amounts so that the text is truly horizontal. This is done more or less through brute force: Ocropy tries 32 different angles between +/-2° and picks the one which maximizes the variance of the row sums. This works because, when the image is perfectly aligned, there will be huge variance between the rows with text and the blanks in between them. When the image is rotated, these gaps are blended.
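The brute-force search described above can be sketched in a few lines. This is a simplified reconstruction from the description, not the code Ocropus runs. `estimate_skew` is a hypothetical name, and the input is assumed to be an array in which ink pixels are 1 and background pixels are 0.

```python
import numpy as np
from scipy import ndimage


def estimate_skew(binary, max_angle=2.0, steps=32):
    """Return the rotation in degrees that maximizes the variance of row sums."""
    ink = np.asarray(binary, dtype=float)
    best_angle, best_score = 0.0, -1.0
    # Try `steps` evenly spaced candidate angles in [-max_angle, +max_angle].
    for angle in np.linspace(-max_angle, max_angle, steps):
        rotated = ndimage.rotate(ink, angle, reshape=False, order=1)
        # Aligned text gives rows that are either mostly ink or mostly blank,
        # so the per-row ink totals vary a lot.
        score = rotated.sum(axis=1).var()
        if score > best_score:
            best_angle, best_score = angle, score
    return float(best_angle)
```

The returned angle is the correction to apply, so a page tilted by +1° should yield a value close to -1°.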

ocropus-nlbin -n 703662b.crop.png -o book

The -n tells Ocropus to suppress page size checks. We're giving it a small, cropped image, rather than an image of a full page, so this is necessary.

This command produces two outputs:

- book/0001.bin.png: binarized version of the first page (above)

- book/0001.nrm.png: a "flattened" version of the image, before binarization. This isn't very useful.

(The Ocropus convention is to put all intermediate files in a book working directory.)


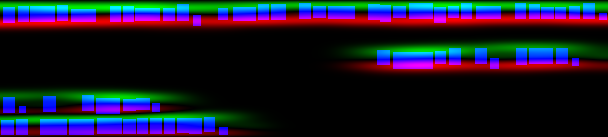

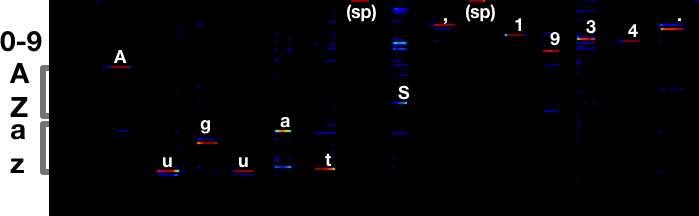


The next step is to extract the individual lines of text from the image. Again, there are many ways to do this, some of which you can read about in this presentation on segmentation.

Ocropus first estimates the "scale" of your text. It does this by finding connected components in the binarized image (these should mostly be individual letters) and calculating the median of their dimensions. This corresponds to something like the x-height of your font.
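A rough sketch of this scale estimate, using scipy's connected-component labeling, might look like the following. It is not Ocropus's code. In particular, summarizing each component by the square root of its bounding-box area is an assumption made here to turn "their dimensions" into one number per component.

```python
import numpy as np
from scipy import ndimage


def estimate_scale(binary):
    """Median size of the connected components in a binary image (ink = True)."""
    labels, count = ndimage.label(binary)
    if count == 0:
        return 0.0
    sizes = []
    # find_objects returns one (row_slice, col_slice) bounding box per label.
    for rows, cols in ndimage.find_objects(labels):
        height = rows.stop - rows.start
        width = cols.stop - cols.start
        sizes.append(np.sqrt(height * width))
    return float(np.median(sizes))
```

Using the median means a handful of specks or large blobs has little effect on the estimate, as long as most components are letters.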

Next it tries to find the individual lines of text. The sequence goes something like this:

1. It removes components which are too big or too small (according to scale). These are unlikely to be letters.

2. It applies the y-derivative of a Gaussian kernel (p. 42) to detect top and bottom edges of the remaining features. It then blurs this horizontally to blend the tops of letters on the same line together.

3. The bits between top and bottom edges are the lines.

A picture helps explain this better. Here's the result of step 2 (the edge detector + horizontal blur):

The white areas are the tops and the black areas are the bottoms.

Here's another view of the same thing:

Here the blue boxes are components in the binarized image (i.e. letters). The wispy green areas are tops and the red areas are bottoms. I'd never seen a Gaussian kernel used this way before: its derivative is an edge detector.
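The trick of using a Gaussian derivative as an edge detector is easy to try with scipy, since `gaussian_filter` accepts a derivative order per axis. The sketch below is an illustration of the idea only. The sigma and blur widths are guesses tied to the scale estimate, not the values Ocropus uses, and `top_bottom_edges` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage


def top_bottom_edges(binary, scale, hblur=2.0):
    """Return (tops, bottoms) edge-response maps for a binary image (ink = 1)."""
    ink = np.asarray(binary, dtype=float)
    sigma = 0.5 * scale
    # order=(1, 0): first derivative along y (rows), plain smoothing along x.
    grad = ndimage.gaussian_filter(ink, sigma=(sigma, sigma), order=(1, 0))
    # Extra horizontal-only averaging merges the edges of neighboring letters.
    width = max(1, int(hblur * scale))
    grad = ndimage.uniform_filter1d(grad, size=width, axis=1)
    tops = np.maximum(grad, 0)      # background above, ink below
    bottoms = np.maximum(-grad, 0)  # ink above, background below
    return tops, bottoms
```

Rows increase downward in the array, so moving from background into ink gives a positive derivative (a top edge) and leaving the ink gives a negative one (a bottom edge).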

Here are the detected lines, formed by expanding the areas between tops and bottoms:

It's interesting that the lines needn't be simple rectangular regions. In fact, the bottom two components have overlapping y-coordinates. Ocropus applies these regions as masks before extracting rectangular lines:

Here's the command I used (the g in ocropus-gpageseg stands for "gradient"):

ocropus-gpageseg -n --maxcolseps 0 book/0001.bin.png

The --maxcolseps 0 tells Ocropus that there's only one column in this image. The -n suppresses size checks, as before.

This has five outputs:

- book/0001.pseg.png encodes the segmentation. The color at each pixel indicates which column and line that pixel in the original image belongs to.

- book/0001/01000{1,2,3,4}.bin.png are the extracted line images (above).

Segmenting tabular data (from https://github.com/tmbdev/ocropy/issues/115):

The number of connected components is part of some plausibility checks which will refuse "abnormal" input. However, in your case you can just skip these tests with the -n option.

Actually, you need to increase the --maxcolseps value, because you have more than two whitespace separators between columns. Try:

ocropus-gpageseg -n --debug --maxcolseps 7 --maxlines 500 0001.bin.png

Here is an animation of the debug output as the --maxcolseps value increases from 0 to 7, one step at a time:


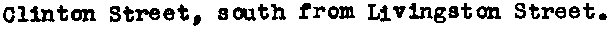


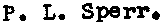


After all that prep work, we can finally get to the fun part: character recognition using a Neural Net.

The problem is to perform this mapping:

This is challenging because each line will have its own quirks. Maybe binarization produced a darker or lighter image for this line. Maybe skew estimation didn't work perfectly. Maybe the typewriter had a fresh ribbon and produced thicker letters. Maybe the paper got water on it in storage.

Ocropus uses an LSTM Recurrent Neural Net to learn this mapping. The default model has 48 inputs, 200 nodes in a hidden layer and 249 outputs.

The inputs to the network are columns of pixels. The columns in the image are fed into the network, one at a time, from left to right. The outputs are scores for each possible letter. As the columns for the A in the image above are fed into the net, we'd hope to see a spike from the A output.

Here's what the output looks like:

The image on the bottom is the output of the network. Columns in the text and the output matrix correspond to one another. Each row in the output corresponds to a different letter, reading alphabetically from top to bottom. Red means a strong response, blue a weaker response. The red streak under the A is a strong response in the A row.

The responses start somewhere around the middle to right half of each letter, once the net has seen enough of it to be confident it's a match. To extract a transcription, you look for maxima going across the image.
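One simple way to turn such a score matrix into text is greedy decoding: take the strongest class in each column, collapse runs of the same class, and drop the "no character" class. The sketch below shows that simplified scheme. It is not the routine ocropus-rpred uses, and the layout it assumes (one row of scores per pixel column, with class 0 meaning "no character") is an assumption for the example.

```python
import numpy as np


def greedy_decode(scores, alphabet, blank=0):
    """Decode a (num_columns, num_classes) score matrix into a string.

    alphabet[k] is the character for class k; class `blank` means "nothing".
    """
    best = np.argmax(scores, axis=1)  # strongest class in each column
    chars = []
    previous = blank
    for k in best:
        # Emit a character only when a new non-blank run starts.
        if k != blank and k != previous:
            chars.append(alphabet[k])
        previous = k
    return "".join(chars)
```

A consequence of collapsing runs is that a doubled letter is only read twice if the blank class wins for at least one column between the two letters.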

In this case, the transcription is Auguat S, 1934.

It's interesting to look at the letters that this model gets wrong. For example, the s in August produces the strongest response on the a row. But there's also a (smaller) response on the correct s row. There's also considerable ambiguity around the 5, which is transcribed as an S.

My #1 feature request for Ocropus is for it to output more metadata about the character calls. While there might not be enough information in the image to make a clear call between Auguat and August, a post-processing step with a dictionary would clearly prefer the latter.
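Even without extra metadata from Ocropus, a crude version of the dictionary step can be tried on the plain transcription. The sketch below uses Python's standard `difflib` to snap a word to its closest entry in a word list. The function name, the tiny vocabulary and the 0.8 cutoff are all made up for illustration.

```python
import difflib


def correct_word(word, vocabulary, cutoff=0.8):
    """Return the closest vocabulary entry, or `word` itself if none is close."""
    matches = difflib.get_close_matches(word, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word


if __name__ == "__main__":
    vocabulary = ["August", "Street", "Clinton"]
    print(correct_word("Auguat", vocabulary))  # prints "August"
```

This only helps with dictionary words. A confusion like the 5 read as S would need a different kind of check, for example a rule about what a date looks like.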

The transcriptions with the default model are:

This is passable, but not great. The Ocropus site explains why:

There are some things the currently trained models for ocropus-rpred will not handle well, largely because they are nearly absent in the current training data. That includes all-caps text, some special symbols (including "?"), typewriter fonts, and subscripts/superscripts. This will be addressed in a future release, and, of course, you are welcome to contribute new, trained models.

We'll fix this in the next post by training our own model.

The command to make predictions is:

ocropus-rpred -m en-default.pyrnn.gz book/0001/*.png

I believe the r stands for "RNN" as in "Recurrent Neural Net".

The outputs are book/0001/01000{1,2,3,4}.txt.

If you want to see charts like the one above, pass --show or --save.

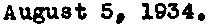


We're on the home stretch!

One way to get a text file out of Ocropus is to concatenate all the transcribed text files:

cat book/????/??????.txt > ocr.txt

The files are all in alphabetical order, so this should do the right thing.

In practice, I found that I often disagreed with the line order that Ocropus chose. For example, I'd say that **August 5, 1934.** is the second line of the image we've been working with, not the fourth.

Ocropus comes with an ocropus-hocr tool which converts its output to hOCR format, an HTML-based format designed by Thomas Breuel, who also developed Ocropus.

We can use it to get bounding boxes for each text box:

$ ocropus-hocr -o book/book.html book/0001.bin.png

$ cat book/book.html

...

<div class='ocr_page' title='file book/0001.bin.png'>

<span class='ocr_line' title='bbox 3 104 607 133'>O1inton Street, aouth from LIYingston Street.</span><br />

<span class='ocr_line' title='bbox 3 22 160 41'>P. L. Sperr.</span><br />

<span class='ocr_line' title='bbox 1 1 228 19'>NO REPODUCTIONS.</span><br />

<span class='ocr_line' title='bbox 377 67 579 88'>Auguat S, 1934.</span><br />

</div>

...

Ocropus tends to read text more left to right than top to bottom. Since I know my images only have one column of text, I'd prefer to emphasize the top-down order. I wrote a small tool to reorder the text in the way I wanted.
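For illustration, here is one way such a reordering could work. This is a sketch and not the tool mentioned above. It pulls the bounding box out of each ocr_line span with a regular expression written against the exact markup in the sample output, then sorts by vertical position. In the sample, the line I'd call second has the second-largest y values, which suggests that y increases upward in this output. The sketch treats that as an assumption and exposes it as a flag.

```python
import re

# Matches the ocr_line spans exactly as they appear in the sample output.
LINE_RE = re.compile(
    r"<span class='ocr_line' title='bbox (\d+) (\d+) (\d+) (\d+)'>(.*?)</span>"
)


def lines_top_to_bottom(hocr, y_increases_upward=True):
    """Return the text of each ocr_line, ordered from top to bottom."""
    lines = []
    for x0, y0, x1, y1, text in LINE_RE.findall(hocr):
        lines.append((int(y1), int(x0), text))
    if y_increases_upward:
        # Larger y means higher on the page; break ties left to right.
        lines.sort(key=lambda item: (-item[0], item[1]))
    else:
        lines.sort(key=lambda item: (item[0], item[1]))
    return [text for _, _, text in lines]
```

Applied to the sample above, this puts the Clinton Street line first and the date second, followed by the photographer credit and the reproduction notice.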


Congrats on making it this far! We've walked through the steps of running the Ocropus pipeline.

The overall results aren't good (~10% of characters are incorrect), at least not yet. In the next post, I'll show how to train a new LSTM model that completely destroys this problem.