AI ML in Bioinformatics - ua-datalab/Bioinformatics GitHub Wiki

Important

🕐 Schedule

- 3:00pm-3:10pm: Welcome and introduction to the topic

- 3:10pm-3:40pm: What is Machine Learning (and how can we apply it in biology/bioinformatics?)

- 3:40pm-end: Real world applications of AI/ML in Bioinformatics (tools and applications)

Important

✅ Expected Outcomes

- Understanding what Machine Learning is

- Exposure to topics of interest

Modern biology heavily relies on data from advanced techniques. For precision medicine to be effective, we need to properly analyze this data. Tools from bioinformatics and artificial intelligence are essential in transforming big data into actionable information.

- Information science focuses on how to collect, organize, and share information, covering areas like library science and data management, with uses in education and business.

- Artificial intelligence (AI) mimics human thinking in machines, performing tasks like speech recognition and decision-making, and is widely used in healthcare and self-driving cars.

- Machine learning (ML), a part of AI, helps computers learn from data to make predictions without direct programming, applied in areas like protein structure and disease diagnosis.

- Deep learning (DL), a type of ML, uses neural networks to process data in layers, greatly improving AI's ability to perform tasks like image and speech recognition with high accuracy.

Deep learning algorithms excel at tasks such as image and speech recognition, text generation, and robotic control. By learning complex patterns from raw data, these algorithms have significantly advanced artificial intelligence capabilities.

Click to Expand: What is Machine Learning?

Machine learning, a branch of artificial intelligence, develops algorithms that learn from data to make predictions or decisions. It's widely applied in fields like image recognition, natural language processing, autonomous vehicles, and medical diagnosis (Acosta et al., 2022).

Machine learning algorithms require numeric data, typically represented as matrices of samples and features. When data isn't numeric, feature engineering transforms it into usable numeric features (Roe et al., 2020).

High-quality, accurate, and representative data is crucial for machine learning algorithms to learn correct patterns and make accurate predictions (Habehh and Gohel, 2021). Expert-crafted features, designed by domain specialists, can enhance algorithm performance, especially with limited training data (Lin et al., 2020).

Common machine learning algorithms include (Hastie et al., 2009; Jovel and Greiner, 2021):

- Linear regression: Finds best-fit lines for variable relationships

- Logistic regression: Predicts event probabilities

- Decision trees: Uses binary decisions for predictions

- Random forest: Combines multiple decision trees

- Support vector machines: Finds optimal separating hyperplanes

- Neural networks: Uses interconnected nodes to learn complex patterns

In summary, machine learning is a versatile tool requiring numeric, high-quality data and sometimes expert-crafted features. Various algorithms are available to address different problems.

Click to Expand: What is Deep Learning?

Neural networks, introduced decades ago, have evolved from simple structures to deep neural networks with multiple layers. Modern deep learning models typically consist of input, hidden, and output layers, leveraging increased computational power to handle more complex architectures.

The input layer receives raw data or features, with each node representing a data point. Hidden layers transform this data into abstract representations, with multiple layers defining the "depth" of the network. The output layer produces the final prediction based on the processed information.

Deep learning models are trained by adjusting connection weights to minimize errors, often using large labeled datasets and optimization techniques like backpropagation and gradient descent. Model architecture is typically chosen empirically for each specific task.

Unlike traditional machine learning, deep learning automatically detects higher-level features. This can lead to reduced explainability, which is particularly important in fields like healthcare where understanding decision-making processes is crucial (Sarker, 2021; the Precise4Q consortium et al., 2020).

Differences between machine learning using traditional algorithms and machine learning using deep neural networks.

| ML | DL | |

|---|---|---|

| Algorithms | Many different (SVM, Decision Trees, kNN, ...) | Defined by architecture (RNN, GAN, LSTM, ...) |

| Data size | Can work well with smaller inputs | Requires large amount of data |

| Performance | Typically extremely fast | Computational complexity depends on the architecture |

| Features | Hand-crafted | Can be learned |

| Preprocessing | Significant effort | Can be trained on raw data |

| Fine tuning | Setting the algorithm parameters | Can be performed automatically during training |

| Complexity | Typical simple mathematical models | Depends on the architecture (highly flexible) |

| Transparency | Typically transparent | Hard to transparently show decision making |

| Explainability | Typically explainable | Hard to show the reasoning process |

We live surrounded by data. Big or small. Large or minimal. Through the power of statistics, we have learned to leverage this data to give us further insights in the data that the world around us is full of.

ML/AI has accented this data discovery, but it comes with a couple of caveats:

- ML/AI is difficult to learn (but is becoming more and more accessible!) and apply

- ML/AI requires a lot of resources in order to execute: platforms require large GPU clusters and disk space; Models require a massive amount of power to be created.

Here are a few examples of groups that were able to take this technology and give us a window on the potential applications of ML/AI in Bioinformatics.

Important

Most of the following examples are searchable through Papers With Code: a powerful website that allows to look for papers that have made their code available.

This is extremely useful for folks (and skeptics!) that want to take a look at the code itself!

Machine learning algorithms to infer trait-matching and predict species interactions in ecological networks

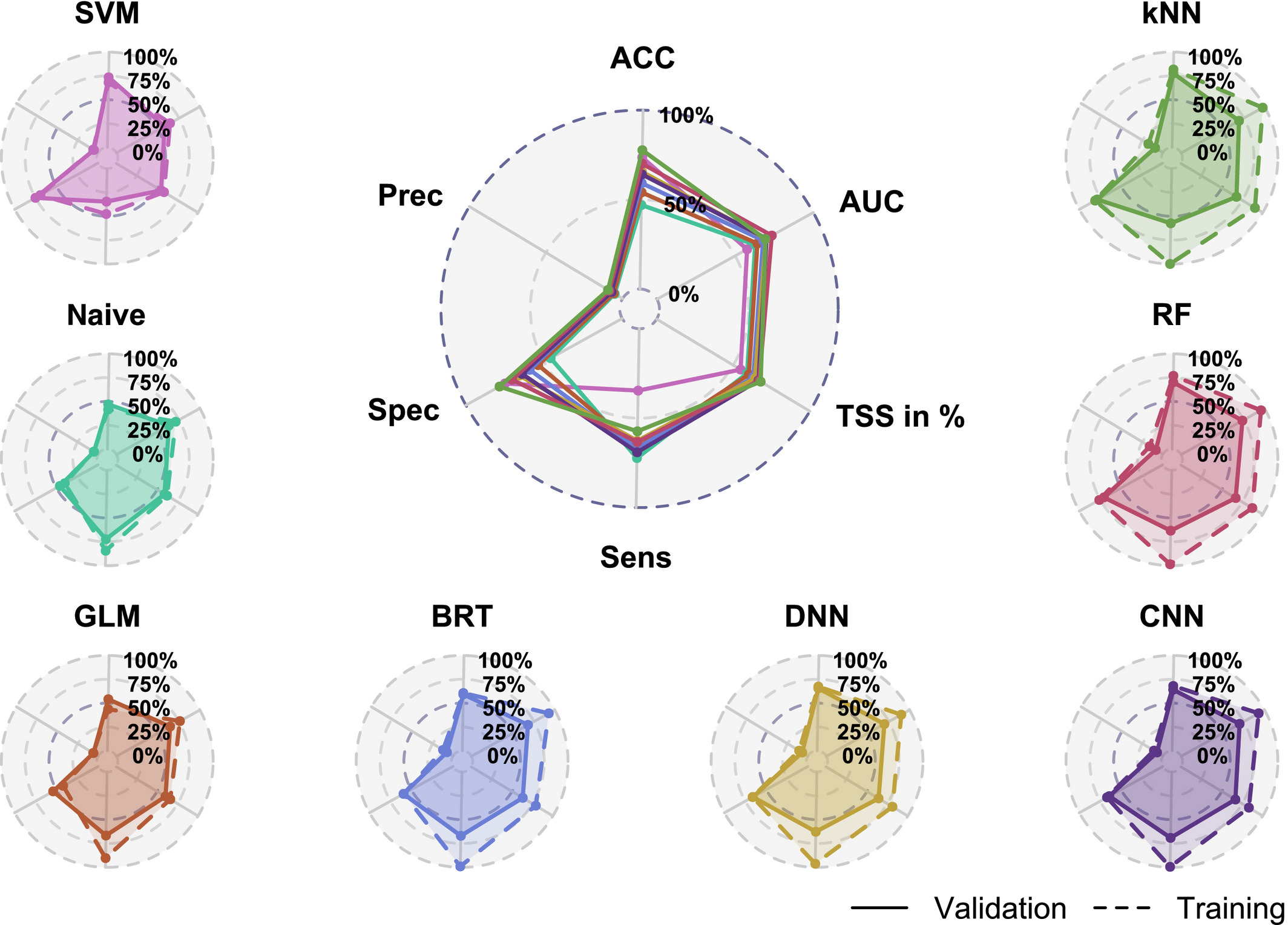

Predictive performance of different ML methods (naive Bayes, SVM, BRT, kNN, DNN, CNN, RF) and GLM in a global database of plant-pollinator interactions. Dotted lines depict training and solid lines validation performances. Models were sorted from left to right with increasing true skill statistic. The central figure compares directly the models’ performances. Sen = Sensitivity (recall, true positive rate); Spec = Specificity (true negative rate); Prec = Precision; Acc = Accuracy; AUC = Area under the receiver operating characteristic curve (AUC); TSS in % = True skill statistic rescaled to 0 – 1.

This paper investigates the use of machine learning algorithms to infer trait-matching and predict species interactions in ecological networks.

Specifically, the authors compare the performance of various machine learning models, including random forests, boosted regression trees, deep neural networks, and support vector machines, with traditional generalized linear models (GLMs) in predicting plant-pollinator interactions based on species traits.

The study finds that the best machine learning models outperform GLMs in both prediction accuracy and identification of the causal trait-trait combinations responsible for interactions.

The study compares the performance of seven ML models:

- Random Forest (RF)

- Boosted Regression Trees (BRT)

- Deep Neural Networks (DNN)

- Convolutional Neural Networks (CNN)

- Support Vector Machines (SVM)

- Naïve Bayes

- k-Nearest Neighbor (kNN)

This advantage stems from ML models' ability to:

- Capture Complex Trait-Matching Structures: ML models effectively detect and utilize complex interactions between traits (trait-trait interactions), a capability where GLMs often fall short. This flexibility is crucial because trait matching in real-world ecosystems can be intricate, involving multiple traits and non-linear relationships.

- Address Overfitting: ML models are inherently designed to mitigate overfitting, a common issue when dealing with a large number of possible trait-trait interactions. This robustness allows them to generalize better and provide more accurate predictions, especially when the number of potential trait combinations is high.

- Handle Uneven Species Distributions: ML models can effectively account for the fact that some species are more abundant than others, thereby preventing biases in predictions. This is crucial because species abundance significantly influences the number of observed interactions and can confound the trait-matching signal.

- Accommodate Different Data Types and Observation Times: The study indicates that ML models can effectively work with both presence-absence data and interaction frequency data, providing flexibility in applying the approach to various ecological datasets. Moreover, they can handle data with imbalanced class distributions, which is common in ecological networks with limited observation times.

The study also highlights the ability of ML models, coupled with the H-statistic, to identify the specific trait-trait combinations that drive species interactions (trait matching) with high accuracy. This capability is critical for:

- Understanding Ecological Mechanisms: Identifying the specific traits responsible for interactions provides insights into the ecological mechanisms underlying network formation and the functional basis of species interactions.

- Predicting Ecosystem Responses to Change: Understanding trait matching helps predict how species interactions might change in response to environmental shifts, such as climate change or species introductions. This information is vital for conservation efforts and managing ecosystem services.

- Moving Beyond Phylogenetic Proxies: While previous studies often relied on phylogenetic information as a proxy for unobserved traits, ML models can focus on directly measurable functional traits, leading to more interpretable and ecologically relevant insights.

The authors emphasize the potential of machine learning for advancing our understanding of species interactions and ecological networks, beyond standard tasks like image or pattern recognition.

Large-scale machine learning-based phenotyping significantly improves genomic discovery for optic nerve head morphology

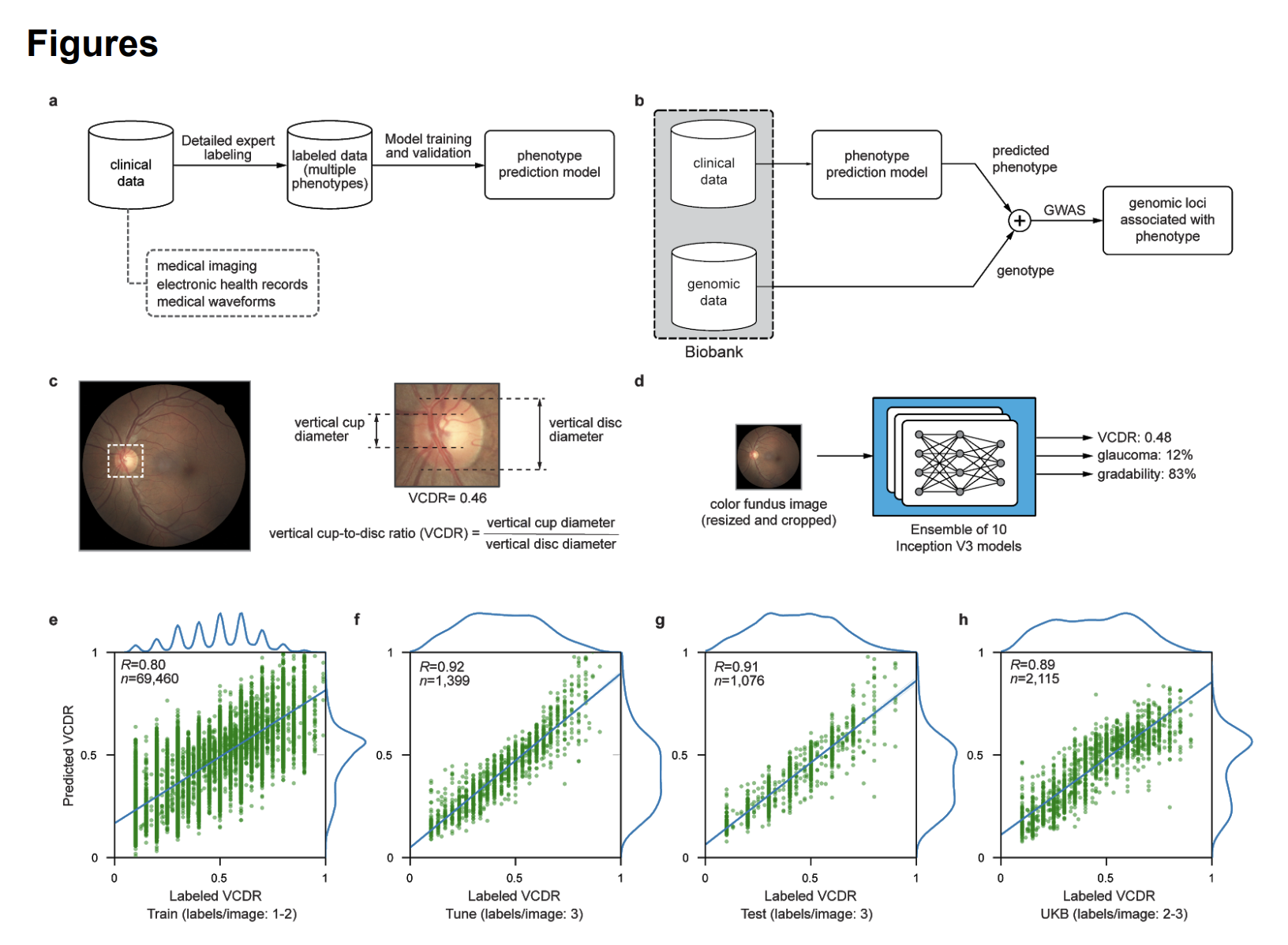

This article examines the use of machine learning (ML) models to predict glaucoma-related traits from color fundus photographs. The authors developed an ML model that accurately predicts the vertical cup-to-disc ratio (VCDR) and identified 299 independent genome-wide significant hits in 156 loci associated with VCDR, replicating known loci and discovering 92 novel ones.

This paper used machine learning (ML) to automatically predict VCDR from fundus photographs, an important endophenotype for glaucoma. The ML model was trained using 81,830 fundus photographs that were graded by experts for image gradability, VCDR, and referable glaucoma risk. These photographs were split into training, tuning, and test sets. The authors trained an ensemble of ten Inception V3 deep convolutional neural networks, a type of ML model well-suited for image analysis.

Once trained and validated, the ML model was applied to 175,337 fundus photographs from the UK Biobank. The model predicted VCDR for each image and also identified images that were ungradable.

The authors conducted a genome-wide association study (GWAS) using the ML-based VCDR predictions. They compared the results to previous GWAS of VCDR, including one based on manually-labeled UK Biobank images. The ML-based GWAS replicated a majority of known genetic associations with VCDR and discovered 92 novel loci. This finding suggests that ML-based phenotyping can improve the power of genomic discovery.

Important

Who won the Nobel Prize for chemistry this year? One of the winners is Demis Hassabis and John Jumper, from the team that developed AlphaFold for their work on “protein structure prediction”.

AlphaFold is an artificial intelligence (AI) system developed by DeepMind for predicting the 3D structure of proteins from their amino acid sequences. It has revolutionized structural biology by providing highly accurate protein structure predictions, which are crucial for understanding biological processes, drug design, and many other applications in molecular biology.

How AplhaFold works:

- Input: The primary input to AlphaFold is a protein’s amino acid sequence. This is analogous to a string of letters representing the order of the protein's building blocks.

- Multiple Sequence Alignment (MSA): AlphaFold compares the input sequence with known protein sequences in public databases. This comparison, called a multiple sequence alignment (MSA), helps AlphaFold understand evolutionary relationships between proteins and infer constraints on how the protein can fold.

- Neural Network Architecture: AlphaFold uses a transformer neural network model, which processes both the sequence data and the information from the MSA to make predictions about how close different pairs of amino acids will be in the 3D structure. It also models the geometric constraints of protein folding, like bond angles and distances between atoms.

- Structure Prediction: The model generates a predicted 3D structure, which it refines iteratively. AlphaFold optimizes the prediction by minimizing errors related to the physical and chemical properties of proteins.

- Confidence Metric: Along with the 3D structure, AlphaFold provides a confidence score (called a pLDDT score), which indicates how certain the model is about each part of the structure.

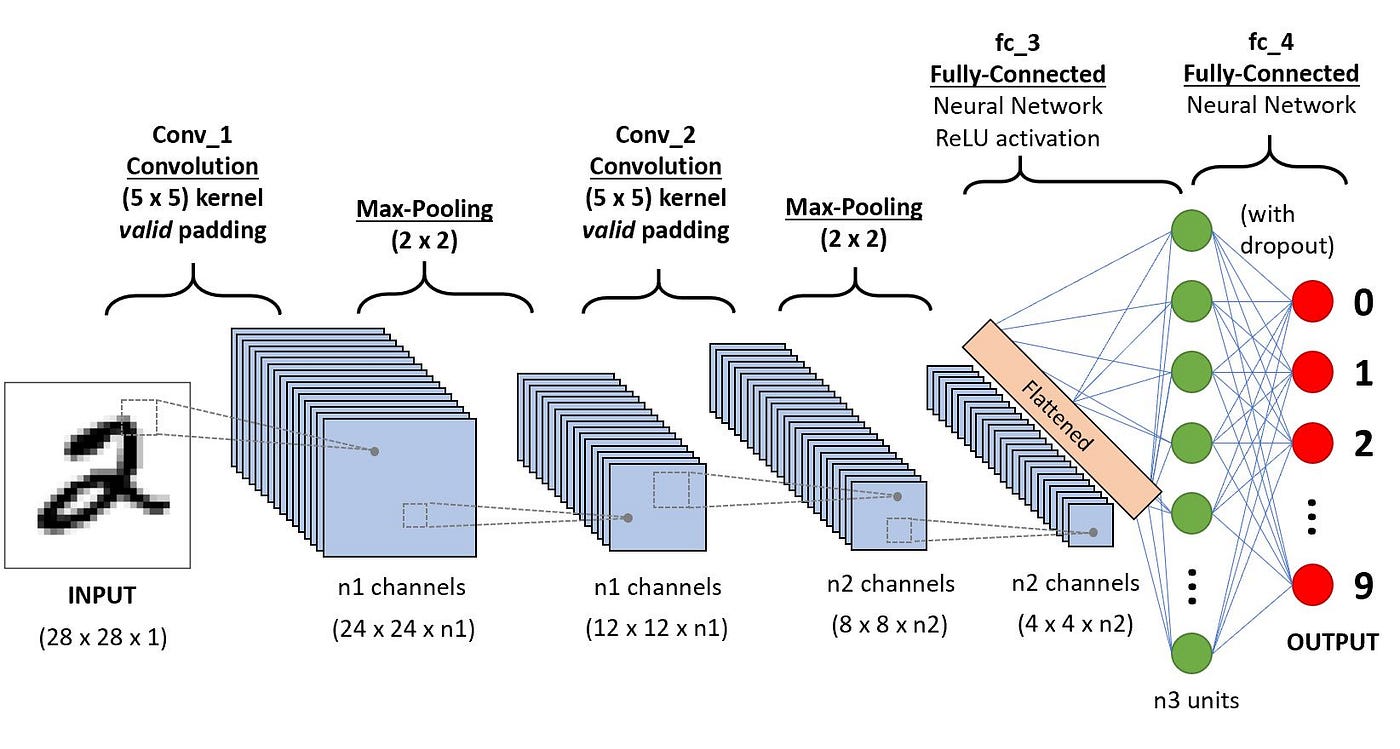

DeepVariant is an open-source software tool developed by Google for variant calling in genomics. It uses deep learning techniques to identify genetic variants from next-generation sequencing (NGS) data. DeepVariant processes the data through a neural network, transforming raw sequence data (from a BAM file or CRAM) into images (a 2 dimensional matrix) of aligned reads and then classifies these images to predict the presence of genetic variants, such as SNPs (single nucleotide polymorphisms) and indels (insertions or deletions).

In order to carry out the analysis of the matrices, DeepVariant uses a Convolutional Neural Network (CNN), a method that is often used in image analysis, to the pileup of images. The CNN then analyzes the pileup images to detect patterns associated with genetic variants, learning from both variant and non-variant regions.

Why DeepVariant is Effective:

- Deep Learning for Accuracy: Unlike traditional rule-based variant callers (like GATK), DeepVariant uses deep learning to learn subtle patterns in sequencing data, which helps it to be more accurate, particularly in challenging genomic regions (e.g., repetitive sequences, homopolymers).

- Training on Large Datasets: DeepVariant was trained on large reference datasets such as the Genome in a Bottle (GIAB) benchmarks, improving its ability to generalize and accurately call variants in various samples.

How is it so affective?

- DeepVariant creates tensors from the pileup images. A tensor is a generalization of vectors and matrices to potentially higher dimensions, and it's a fundamental data structure used in deep learning models. Tensors are used to represent data in a way that can be efficiently processed by neural networks.

- In DeepVariant, a pileup image tensor is a 3D data structure. It holds sequencing information in a format that a deep learning model can process:

- The first dimension is typically the height (number of reads aligned at a particular position).

- The second dimension is the width (corresponding to base positions along the reference genome).

- The third dimension contains different channels representing additional features (e.g., base information, quality scores, mapping quality, strand information).

- This tensor then becomes the input to the CNN, which then the CNN interprets the tensor’s patterns to classify positions in the genome as variant or non-variant.