How to set up Data Manager (old) - stonezhong/DataManager GitHub Wiki

- Background

- Home Environment Brief

- Before you set up Data Manager

- Step 1: create a docker container

- Step 2: config ssh

- Step 3: Initialize Mordor

- Step 4: Build

- Step 5: Deploy

- Step 6: Target initialization after first deployment

- Step 7: build and deploy data applications

- Step 8: (optional) config nginx for reverse proxy

- Step 9: register Data Applications

Background

This article captures the steps I took to set up Data Manager at my home. It can also serve as a reference for anyone who wants to install Data Manager.

Home Environment Brief

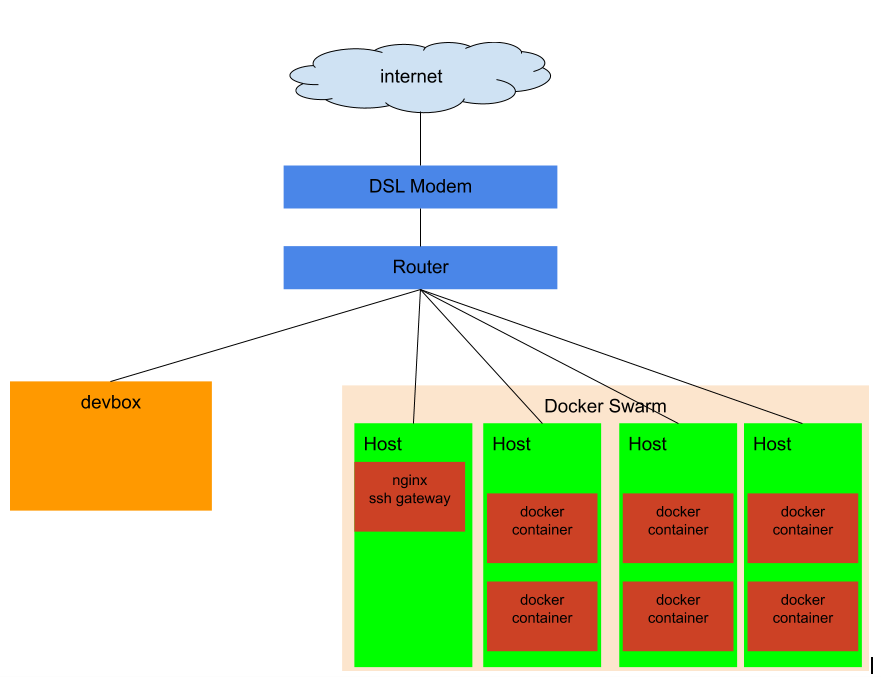

Highlights:

- All my test software is installed in a Docker swarm environment.

- A container "gw" acts as an "ssh jumper" (jump host), so you can ssh to containers inside the Docker swarm overlay network.

- nginx is installed in "gw", so services inside the Docker swarm overlay network can be exposed through it.

- I can configure the router for port forwarding to expose services at home.

- My DSL modem has a static IP address, which has been registered with the following domain names:

  - demo.dmbricks.com
  - demo2.dmbricks.com

Spark Cluster

I have an Apache Spark cluster installed. Here are the highlights:

- The Livy service is exposed at 10.0.0.18:60008. Here is the nginx config for it:

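# This server block goes inside nginx's stream { } context (TCP proxying), which is why proxy_pass has no http:// scheme

server {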

# hadoop Livy API

listen 60008;

proxy_pass spnode1:8998;

}

- spnode1 is a node in the Spark cluster that acts as a bridge.
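If you want to confirm that the Livy endpoint is reachable through gw before going further, here is a minimal Python sketch that calls Livy's REST `GET /sessions` route. It assumes plain HTTP on 10.0.0.18:60008 as configured above. `fetch_sessions` and `summarize_sessions` are hypothetical helper names, not part of Data Manager.

```python
import json
import urllib.request

LIVY_URL = "http://10.0.0.18:60008"  # nginx on gw forwards this port to spnode1:8998

def fetch_sessions(base_url=LIVY_URL, timeout=10):
    """Call Livy's GET /sessions and return the decoded JSON body."""
    url = base_url.rstrip("/") + "/sessions"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))

def summarize_sessions(payload):
    """Return (total, [(session id, state), ...]) from a /sessions response."""
    sessions = payload.get("sessions", [])
    total = payload.get("total", len(sessions))
    return total, [(s.get("id"), s.get("state")) for s in sessions]

# Usage sketch (from the devbox):
#   print(summarize_sessions(fetch_sessions()))
```

A connection error here usually points at the nginx stream config on gw or at the router's port forwarding, rather than at Livy itself.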

Before you setup Data Manager

- You MUST have a devbox with python3 and node installed

- I am using Ubuntu 18.04 as my devbox

- I have Python 3.6.9

- I have node v10.16.3

- You MUST have a MySQL instance set up. Data Manager and Airflow use your MySQL instance to store their metadata.

- You need a MySQL client. I am using MySQL Workbench.
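Here is a quick way to check the devbox prerequisites. The minimum versions are assumptions. This page only lists the versions I use (Python 3.6.9, node v10.16.3), not the versions that are required, so adjust `min_python` and `min_node` as needed. `check_devbox` is a hypothetical helper.

```python
import re
import subprocess
import sys

def parse_node_version(text):
    """Turn output such as 'v10.16.3' into a (major, minor, patch) tuple."""
    match = re.match(r"v?(\d+)\.(\d+)\.(\d+)", text.strip())
    if match is None:
        raise ValueError("unrecognized node version: %r" % text)
    return tuple(int(part) for part in match.groups())

def check_devbox(min_python=(3, 6), min_node=(10, 0, 0)):
    """Return a list of problems; an empty list means the devbox looks OK."""
    problems = []
    if sys.version_info[:2] < min_python:
        problems.append("python %d.%d is too old" % sys.version_info[:2])
    try:
        # universal_newlines instead of text= keeps this runnable on Python 3.6
        out = subprocess.run(["node", "--version"], stdout=subprocess.PIPE,
                             universal_newlines=True, check=True).stdout
        if parse_node_version(out) < min_node:
            problems.append("node %s is too old" % out.strip())
    except (OSError, subprocess.CalledProcessError, ValueError) as exc:
        problems.append("cannot determine node version: %s" % exc)
    return problems

# Usage sketch:
#   print(check_devbox() or "devbox OK")
```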

Step 1: create a docker container

# lab04 is my docker host machine name

# home is a docker swarm overlay network

ssh lab04

docker run \

--privileged -d \

-h dmdemo \

--name dmdemo \

--network home \

-v /mnt/DATA_DISK/docker/containers/dmdemo:/mnt/DATA_DISK \

stonezhong/centos_python3

Now let's update the OS:

docker exec -it dmdemo bash

yum update

^D

docker stop dmdemo

docker start dmdemo

Install some yum packages:

docker exec -it dmdemo bash

yum install tmux mysql-devel graphviz

Then, set the root password:

docker exec -it dmdemo bash

passwd

New password: <Enter your root password here>

CTRL-D

Update ~/.ssh/config on dmdemo so that you can ssh to the Apache Spark bridge host (spnode1) without a password. Here is what I have:

Host spnode1

HostName spnode1

User root

IdentityFile ~/.ssh/home

Verify it by running ssh spnode1 and make sure you can connect to spnode1 from dmdemo.

Step 2: config ssh

The goal is to be able to ssh from your devbox to the target machine (here, dmdemo) without entering a password.

Since the devbox has no direct access to the Docker swarm network, it has to go through a gateway. I have the following settings in my ~/.ssh/config file:

Host gw

HostName 10.0.0.18

Port 60000

User root

IdentityFile /mnt/DATA_DISK/ssh/keys/local/home

Host dmdemo

User root

ProxyCommand ssh gw -W dmdemo:22

Then run ssh dmdemo. You will be prompted for a password.

Now let's copy the ssh key to it:

ssh-copy-id -i /mnt/DATA_DISK/ssh/keys/local/home dmdemo

Then update ~/.ssh/config and change the dmdemo entry to:

Host dmdemo

User root

ProxyCommand ssh gw -W dmdemo:22

IdentityFile /mnt/DATA_DISK/ssh/keys/local/home

You should now be able to ssh to dmdemo as root without a password.
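To check passwordless access non-interactively, you can run ssh with the `BatchMode=yes` option, which makes ssh fail instead of prompting for a password. Here is a minimal Python sketch. The helper names are hypothetical, and the 10-second connect timeout is an arbitrary choice.

```python
import subprocess

def ssh_check_cmd(host, timeout=10):
    """Build an ssh command that fails instead of prompting for a password."""
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=%d" % timeout, host, "true"]

def can_ssh_without_password(host, run=subprocess.run):
    """Return True if `ssh <host> true` succeeds using only keys and ~/.ssh/config."""
    try:
        result = run(ssh_check_cmd(host),
                     stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    except OSError:  # the ssh client itself is not installed
        return False
    return result.returncode == 0

# Usage sketch:
#   can_ssh_without_password("dmdemo")   # on the devbox, goes through gw via ProxyCommand
#   can_ssh_without_password("spnode1")  # inside dmdemo, checks the entry from Step 1
```

The `run` parameter exists only so that the check can be exercised without a real ssh server.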

Step 3: Initialize Mordor

We use Mordor as our deployment tool. On the devbox, first create a virtual environment:

mkdir -p ~/.venvs/mordor

python3 -m venv ~/.venvs/mordor

source ~/.venvs/mordor/bin/activate

pip install pip setuptools --upgrade

pip install wheel

pip install mordor

# in the future, you can enter this virtual environment by "source ~/.venvs/mordor/bin/activate"

Mordor requires you to have the following directory structure on your devbox:

~/.mordor

|

+-- config.json

|

+-- configs

|

+-- dm/prod

Here is my ~/.mordor/config.json file. Note that /home/stonezhong/DATA_DISK/projects/DataManager is the directory where I checked out the source code from the Git repo.

{

"hosts": {

"dmdemo": {

"ssh_host" : "dmdemo",

"env_home" : "/mnt/DATA_DISK/mordor",

"virtualenv": "/usr/bin/virtualenv",

"python3" : "/usr/bin/python3"

}

},

"applications": {

"dm_prod": {

"stage" : "prod",

"name" : "dm",

"home_dir" : "/home/stonezhong/DATA_DISK/projects/DataManager/server",

"deploy_to" : [ "dmdemo" ],

"use_python3" : true,

"config" : {

"db.json": "copy",

"django.json": "copy",

"scheduler.json": "copy",

"logging.json": "copy",

"dc_config.json": "copy"

}

}

}

}

Now, run mordor -a init-host --host-name dmdemo to initialize the host dmdemo for mordor.
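Before you run init-host or deploy, it can help to sanity-check config.json. The sketch below is hypothetical. Based on the dm/prod layout above, it assumes that Mordor looks for an application's config files under configs/<name>/<stage>. It flags `deploy_to` hosts that are not defined under "hosts", and config files that do not exist yet. The config files are only created in Step 5, so expect them to be reported as missing until then.

```python
import json
import os

def check_mordor_config(config, configs_root):
    """Return a list of problems found in a parsed ~/.mordor/config.json."""
    problems = []
    hosts = config.get("hosts", {})
    for app_id, app in config.get("applications", {}).items():
        for host in app.get("deploy_to", []):
            if host not in hosts:
                problems.append("%s: deploy_to host %r is not under 'hosts'" % (app_id, host))
        # Assumption: config files live in configs/<name>/<stage>, e.g. configs/dm/prod
        config_dir = os.path.join(configs_root, app.get("name", ""), app.get("stage", ""))
        for filename in app.get("config", {}):
            path = os.path.join(config_dir, filename)
            if not os.path.isfile(path):
                problems.append("%s: missing %s" % (app_id, path))
    return problems

# Usage sketch:
#   root = os.path.expanduser("~/.mordor")
#   with open(os.path.join(root, "config.json")) as f:
#       print(check_mordor_config(json.load(f), os.path.join(root, "configs")))
```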

Step 4: Build

First, check out the source code:

git clone https://github.com/stonezhong/DataManager.git

Then, let's create a python virtual environment:

mkdir -p ~/.venvs/dmbuild

python3 -m venv ~/.venvs/dmbuild

source ~/.venvs/dmbuild/bin/activate

pip install pip setuptools --upgrade

pip install wheel

pip install libsass==0.20.1

To build, run the following from the root of your DataManager checkout:

source ~/.venvs/dmbuild/bin/activate

cd server

DM_STAGE=beta ./build.sh

Step 5: Deploy

You can run the following:

source ~/.venvs/mordor/bin/activate

mordor -a stage -p dm --stage prod --update-venv T

For subsequent deployments you can normally run mordor -a stage -p dm --stage prod --update-venv F instead, unless you change the package dependencies.

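For the deployment to succeed, you need the following files in the ~/.mordor/configs/dm/prod directory. Change their content to suit your environment (in particular, use your own database password and SECRET_KEY). Note that config.json above also lists dc_config.json, whose content is not shown on this page.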

db.json

{

"server": "mysql1",

"username": "stonezhong",

"password": "xyz",

"db_name": "dm-prod"

}

django.json

{

"log_config": {

"version": 1,

"disable_existing_loggers": false,

"formatters": {

"standard": {

"format": "%(asctime)s - %(name)s - %(levelname)s - %(message)s"

}

},

"handlers": {

"fileHandler": {

"class": "logging.handlers.TimedRotatingFileHandler",

"level": "DEBUG",

"formatter": "standard",

"filename": "{{ log_dir }}/web.log",

"interval": 1,

"when": "midnight",

"backupCount": 7

}

},

"loggers": {

"": {

"handlers": ["fileHandler"],

"level": "DEBUG",

"propagate": true

},

"django": {

"handlers": ["fileHandler"],

"level" : "DEBUG",

"propagate": true

}

}

},

"SECRET_KEY": "zoz#m&pm!t#0e4+%es_x!2n6(af6sh+@pt2gr03#n!=1%on*^g",

"ALLOW_ANONYMOUS_READ": true,

"airflow": {

"base_url": "http://demo2.dmbricks.com/airflow"

}

}
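The SECRET_KEY shown above is public, so generate your own for a real deployment. Django itself provides `django.core.management.utils.get_random_secret_key()`. The sketch below uses only the standard-library `secrets` module so that it runs without Django installed. It uses the same 50-character length and character set as the example key.

```python
import secrets
import string

# Same character set as the example key above: lowercase letters, digits, punctuation
ALPHABET = string.ascii_lowercase + string.digits + "!@#$%^&*(-_=+)"

def new_secret_key(length=50):
    """Generate a random Django SECRET_KEY from a cryptographically secure RNG."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))

# Usage sketch: print(new_secret_key()) and paste the result into django.json as "SECRET_KEY"
```

None of these characters need escaping inside a JSON string, so the output can be pasted into django.json as-is.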

logging.json

{

"version": 1,

"disable_existing_loggers": true,

"formatters": {

"standard": {

"format": "%(asctime)s - %(name)s - %(levelname)s - %(message)s"

}

},

"handlers": {

"consoleHandler": {

"class": "logging.StreamHandler",

"level": "DEBUG",

"formatter": "standard",

"stream": "ext://sys.stdout"

},

"fileHandler": {

"class": "logging.handlers.TimedRotatingFileHandler",

"level": "DEBUG",

"formatter": "standard",

"filename": "{{ log_dir }}/{{ filename }}",

"interval": 1,

"when": "midnight"

}

},

"loggers": {

"": {

"handlers": ["fileHandler", "consoleHandler"],

"level": "DEBUG",

"propagate": true

}

}

}

scheduler.json

{

"log_config": {

"version": 1,

"disable_existing_loggers": true,

"formatters": {

"standard": {

"format": "%(asctime)s - %(name)s - %(levelname)s - %(message)s"

}

},

"handlers": {

"consoleHandler": {

"class": "logging.StreamHandler",

"level": "DEBUG",

"formatter": "standard",

"stream": "ext://sys.stdout"

},

"fileHandler": {

"class": "logging.handlers.TimedRotatingFileHandler",

"level": "DEBUG",

"formatter": "standard",

"filename": "{{ log_dir }}/scheduler.log",

"interval": 1,

"when": "midnight"

}

},

"loggers": {

"": {

"handlers": ["fileHandler", "consoleHandler"],

"level": "DEBUG",

"propagate": true

},

"oci": {

"propagate": false

},

"urllib3": {

"propagate": false

}

}

}

}
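A malformed logging section usually only shows up when the web server or scheduler starts. One way to catch it earlier is to render the `{{ log_dir }}` and `{{ filename }}` placeholders yourself and pass the result to `logging.config.dictConfig`. In this sketch, plain string substitution is an assumption. It stands in for however Mordor or Data Manager actually renders these templates. The helper names are hypothetical.

```python
import json
import logging.config
import tempfile

def render(template, **values):
    """Replace '{{ name }}' placeholders; values are JSON-escaped so the text stays valid JSON."""
    for name, value in values.items():
        template = template.replace("{{ %s }}" % name, json.dumps(value)[1:-1])
    return template

def check_logging_template(template, key=None, log_dir=None, filename="check.log"):
    """Render a logging template and load it with logging.config.dictConfig."""
    if log_dir is None:
        log_dir = tempfile.mkdtemp()  # throwaway directory for the log files the handlers open
    config = json.loads(render(template, log_dir=log_dir, filename=filename))
    if key is not None:  # django.json and scheduler.json nest it under "log_config"
        config = config[key]
    logging.config.dictConfig(config)  # raises ValueError if the config is invalid
    return config

# Usage sketch, against the files above:
#   import os
#   path = os.path.expanduser("~/.mordor/configs/dm/prod/scheduler.json")
#   with open(path) as f:
#       check_logging_template(f.read(), key="log_config")
```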

Step 6: Target initialization after first deployment

Step 6.1: Initialize django database

First, create the database for Data Manager using a MySQL client (I am using MySQL Workbench):

CREATE SCHEMA `dm-prod` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_bin;
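The schema name must match "db_name" in db.json. Instead of typing the statement by hand, a small sketch can generate it from that file. The backticks matter here: MySQL does not accept an unquoted identifier that contains a hyphen, such as dm-prod. The helper names are hypothetical.

```python
import json

def quote_identifier(name):
    """Quote a MySQL identifier with backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"

def create_schema_sql(db_config):
    """Build the CREATE SCHEMA statement from the parsed db.json."""
    return ("CREATE SCHEMA %s DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_bin;"
            % quote_identifier(db_config["db_name"]))

# Usage sketch:
#   with open(os.path.expanduser("~/.mordor/configs/dm/prod/db.json")) as f:
#       print(create_schema_sql(json.load(f)))
```

For the db.json shown in Step 5, this produces the same statement as above.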

Now ssh to dmdemo and run the following:

eae dm

python manage.py migrate

python manage.py makemigrations main

python manage.py migrate main

Then create admin user for data manager:

python manage.py createsuperuser --email <your-email-address> --username stonezhong

Step 6.2: Start data manager server and scheduler

# Start the web server, e.g. in a tmux session

eae dm

python manage.py runserver 0.0.0.0:8888

# Start the scheduler, e.g. in another tmux session

eae dm

./scheduler.py

Step 6.3: Setup airflow

- First, install Airflow on the target by following the instructions in How to setup airflow.

Step 6.4: Test your setup

ssh -L 8888:localhost:8888 dmdemo

# then open a browser and access http://localhost:8888/explorer/
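If you prefer to check from a script while the tunnel is open, here is a minimal sketch that uses only the standard library. `check_url` is a hypothetical helper.

```python
import urllib.error
import urllib.request

def check_url(url, timeout=5):
    """Return the HTTP status code for url, or None if nothing answered."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as exc:  # the server answered with a 4xx/5xx status
        return exc.code
    except OSError:  # URLError (connection refused, DNS failure) or a socket timeout
        return None

# Usage sketch, while `ssh -L 8888:localhost:8888 dmdemo` is running:
#   print(check_url("http://localhost:8888/explorer/"))
```

A `None` result means nothing is listening on the forwarded port. In that case, check that runserver is still running in its tmux session on dmdemo.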

Step 7: build and deploy data applications

See How to build and deploy data applications for details.

Step 8: (optional) config nginx for reverse proxy

The goal is to make Data Manager accessible from the internet at http://demo2.dmbricks.com/. You also need port forwarding on the router.

http {

...

server {

listen 80;

server_name demo2.dmbricks.com;

location / {

proxy_pass http://dmdemo:8888;

}

location /airflow {

proxy_pass http://dmdemo:8080;

}

}

}

You also need to update airflow.cfg so that Airflow knows its base_url has changed. Then restart the Airflow scheduler and the Airflow web UI:

[webserver]

base_url = http://demo2.dmbricks.com/airflow
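To double-check that base_url in airflow.cfg matches the /airflow nginx location, you can read it with `configparser`. This sketch uses `RawConfigParser` because airflow.cfg contains `%`-style log format strings. The ~/airflow/airflow.cfg path is only Airflow's default AIRFLOW_HOME and may differ on your target. The "airflow.base_url" entry in django.json should hold the same URL.

```python
import configparser
from urllib.parse import urlparse

def airflow_base_url(cfg_text):
    """Read [webserver] base_url without interpolation."""
    parser = configparser.RawConfigParser(strict=False)
    parser.read_string(cfg_text)
    return parser.get("webserver", "base_url")

def matches_nginx_prefix(base_url, location="/airflow"):
    """Return True if the path of base_url equals the nginx location that proxies to Airflow."""
    return urlparse(base_url).path.rstrip("/") == location.rstrip("/")

# Usage sketch (the path is an assumption):
#   with open(os.path.expanduser("~/airflow/airflow.cfg")) as f:
#       url = airflow_base_url(f.read())
#   print(url, matches_nginx_prefix(url))
```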

Step 9: register Data Applications

- You must register the "Execute SQL" app, since the system requires it.

- You can register the "Import Trading Data" app if you want to play around with it.

Steps for creating "Execute SQL" app:

- First, log in to http://demo2.dmbricks.com/ with the username you created

- Click the "Applications" menu

- Click the "Create" button

- Set name to "Execute SQL"

- Set team to "admins"

- Set description to "System Application for Executing SQL statements"

- Set location to "hdfs:///etl-prod/apps/execute_sql/1.0.0.0"

- Click the "Save Changes" button

- Then, in your MySQL client, find the new row in the "main_application" table and set "sys_app_id" to 1
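You can also make the sys_app_id change from Python instead of the MySQL client. This sketch is hypothetical. It assumes the row is matched by its "name" column, and that you use a DB-API driver with the "format" paramstyle, such as mysqlclient (MySQLdb). The connect call appears only in comments.

```python
def sys_app_update(app_name, sys_app_id=1):
    """Return (sql, params) that marks an application row as a system app.

    Assumption: the row is found by its "name" column; the %s placeholders
    follow the DB-API "format" paramstyle used by MySQL drivers.
    """
    sql = "UPDATE main_application SET sys_app_id = %s WHERE name = %s"
    return sql, (sys_app_id, app_name)

# Usage sketch (assumes mysqlclient is installed; db is the parsed db.json from Step 5):
#   import MySQLdb
#   conn = MySQLdb.connect(host=db["server"], user=db["username"],
#                          passwd=db["password"], db=db["db_name"])
#   cur = conn.cursor()
#   cur.execute(*sys_app_update("Execute SQL"))
#   conn.commit()
```

Passing the values as parameters rather than formatting them into the string lets the driver handle quoting of the app name.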

Steps for creating "Import Trading Data" app:

- First, log in to http://demo2.dmbricks.com/ with the username you created

- Click the "Applications" menu

- Click the "Create" button

- Set name to "Import Trading Data"

- Set team to "tradings"

- Set description to "Generate fake trading data daily."

- Set location to "hdfs:///etl-prod/apps/generate_trading_samples/1.0.0.0"

- Click the "Save Changes" button