3.3.3.2.Qwiklab: Introduction to BigQuery - sj50179/Google-Data-Analytics-Professional-Certificate GitHub Wiki

Overview

SQL (Structured Query Language) is a standard language for data operations that allows you to ask questions and get insights from structured datasets. It's commonly used in database management and allows you to perform tasks like transaction record writing into relational databases and petabyte-scale data analysis.

In this lab, you will learn fundamental SQL querying keywords, which you will run in the BigQuery console on a public dataset that contains information on London bikeshares.

Objectives

In this lab, you will learn how to:

- Distinguish databases from tables and projects.

- Use the

SELECT,FROM, andWHEREkeywords to construct simple queries. - Identify the different components and hierarchies within the BigQuery console.

- Load databases and tables into BigQuery.

- Execute simple queries on tables.

The Basics of SQL

Databases and Tables

As mentioned earlier, SQL allows you to get information from "structured datasets". Structured datasets have clear rules and formatting and often times are organized into tables, or data that's formatted in rows and columns.

An example of unstructured data would be an image file. Unstructured data is inoperable with SQL and cannot be stored in BigQuery datasets or tables (at least natively.) To work with image data (for instance), you would use a service like Cloud Vision, perhaps through its API directly.

The following is an example of a structured dataset—a simple table:

| User | Price | Shipped |

|---|---|---|

| Sean | $35.00 | Yes |

| Rocky | $50.00 | No |

If you've had experience with Google Sheets, then the above should look quite similar. As we see, the table has columns for User, Price, and Shipped and two rows that are composed of filled in column values.

A Database is essentially a collection of one or more tables. SQL is a structured database management tool, but quite often (and in this lab) you will be running queries on one or a few tables joined together—not on whole databases.

SELECT and FROM

SQL is phonetic by nature and before running a query, it's always helpful to first figure out what question you want to ask your data (unless you're just exploring for fun.)

SQL has predefined keywords which you use to translate your question into the pseudo-english SQL syntax so you can get the database engine to return the answer you want.

The most essential keywords are SELECT and FROM:

- Use

SELECTto specify what fields you want to pull from your dataset. - Use

FROMto specify what table or tables we want to pull our data from.

An example may help understanding. Assume that we have the following table example_table, which has columns USER, PRICE, and SHIPPED:

And let's say that we want to just pull the data that's found in the USER column. We can do this by running the following query that uses SELECT and FROM:

SELECT USER FROM example_table

content_copy

If we executed the above command, we would select all the names from the USER column that are found in example_table.

You can also select multiple columns with the SQL SELECT keyword. Say that you want to pull the data that's found in the USER and SHIPPED columns. To do this, modify the previous query by adding another column value to our SELECT query (making sure it's separated by a comma!):

SELECT USER, SHIPPED FROM example_table

content_copy

Running the above retrieves the USER and the SHIPPED data from memory:

And just like that you've covered two fundamental SQL keywords! Now to make things a bit more interesting.

WHERE

The WHERE keyword is another SQL command that filters tables for specific column values. Say that you want to pull the names from example_table whose packages were shipped. You can supplement the query with a WHERE, like the following:

SELECT USER FROM example_table WHERE SHIPPED='YES'

content_copy

Running the above returns all USERs whose packages have been SHIPPED to from memory:

Now that you have a baseline understanding of SQL's core keywords, apply what you've learned by running these types of queries in the BigQuery console.

Exploring the BigQuery Console

The BigQuery paradigm

BigQuery is a fully-managed petabyte-scale data warehouse that runs on the Google Cloud. Data analysts and data scientists can quickly query and filter large datasets, aggregate results, and perform complex operations without having to worry about setting up and managing servers. It comes in the form of a command line tool (preinstalled in cloudshell) or a web console—both ready for managing and querying data housed in Google Cloud projects.

In this lab, you use the web console to run SQL queries.

Open BigQuery Console

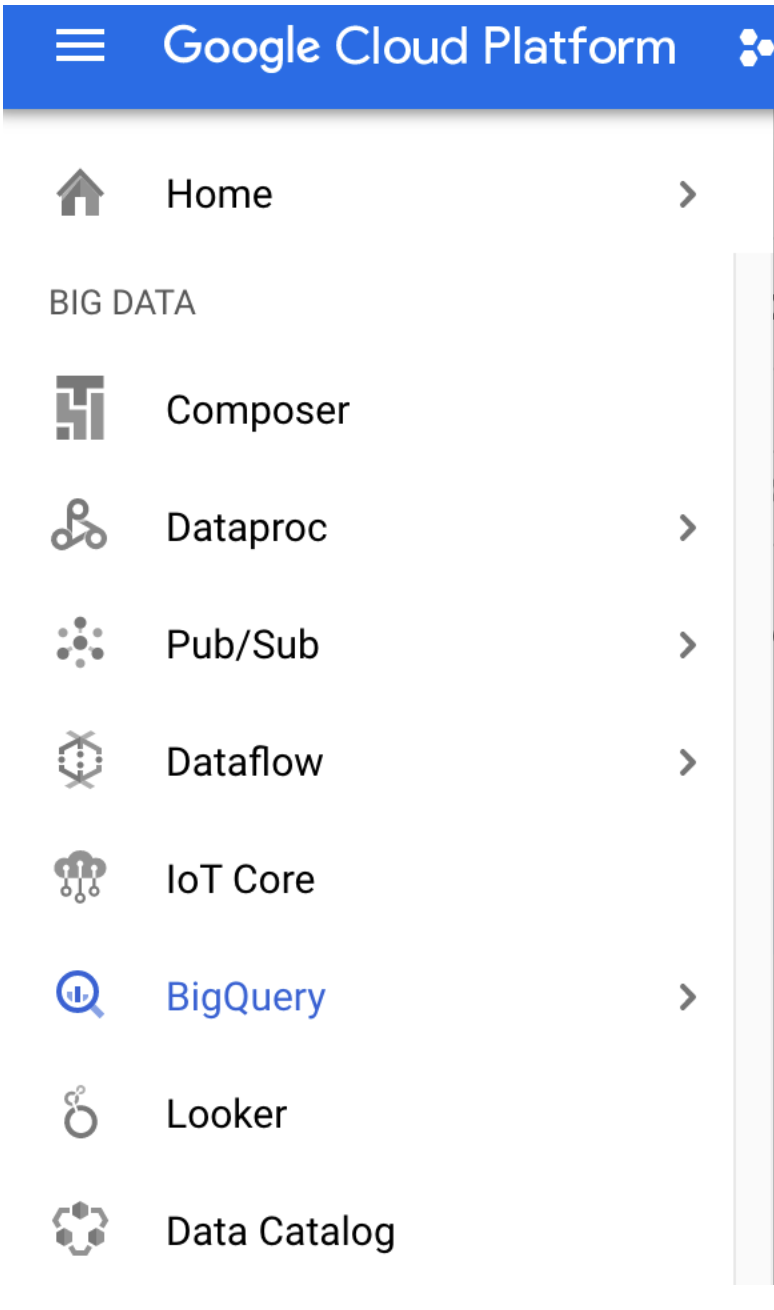

In the Google Cloud Console, select Navigation menu > BigQuery:

The Welcome to BigQuery in the Cloud Console message box opens. This message box provides a link to the quickstart guide and the release notes.

Click Done.

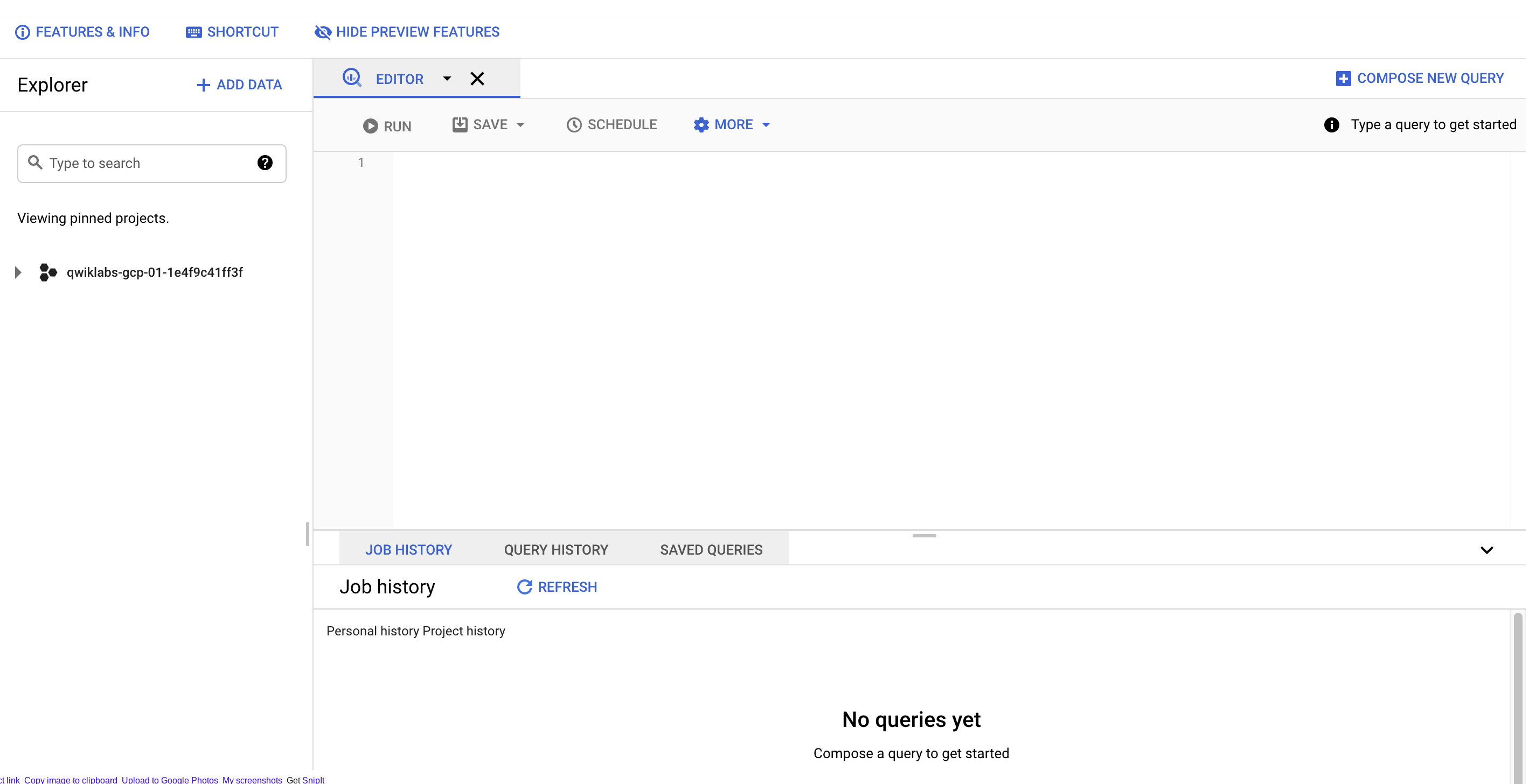

The BigQuery console opens.

Take a moment to note some important features of the UI. The right-hand side of the console houses the "Query editor". This is where you write and run SQL commands like the examples we covered earlier. Below that is "Query history", which is a list of queries you ran previously.

The below pane of the console contains self-explanatory query history, saved queries, and job history. You can also find the Explorer tab in the left pane.

The highest level of resources contain Google Cloud projects, which are just like the temporary Google Cloud projects you sign in to and use with each Qwiklab. As you can see in your console and in the last screenshot, we only have our Qwiklabs project housed in the Resources tab. If you try clicking on the arrow next the project name, nothing will show up.

This is because your project doesn't contain any datasets or tables, you have nothing that can be queried. Earlier you learned datasets contain tables. When you add data to your project, note that in BigQuery, projects contain datasets, and datasets contain tables. Now that you better understand the project → dataset → table paradigm and the intricacies of the console, you can load up some queryable data.

Uploading queryable data

In this section you pull in some public data into your project so you can practice running SQL commands in BigQuery.

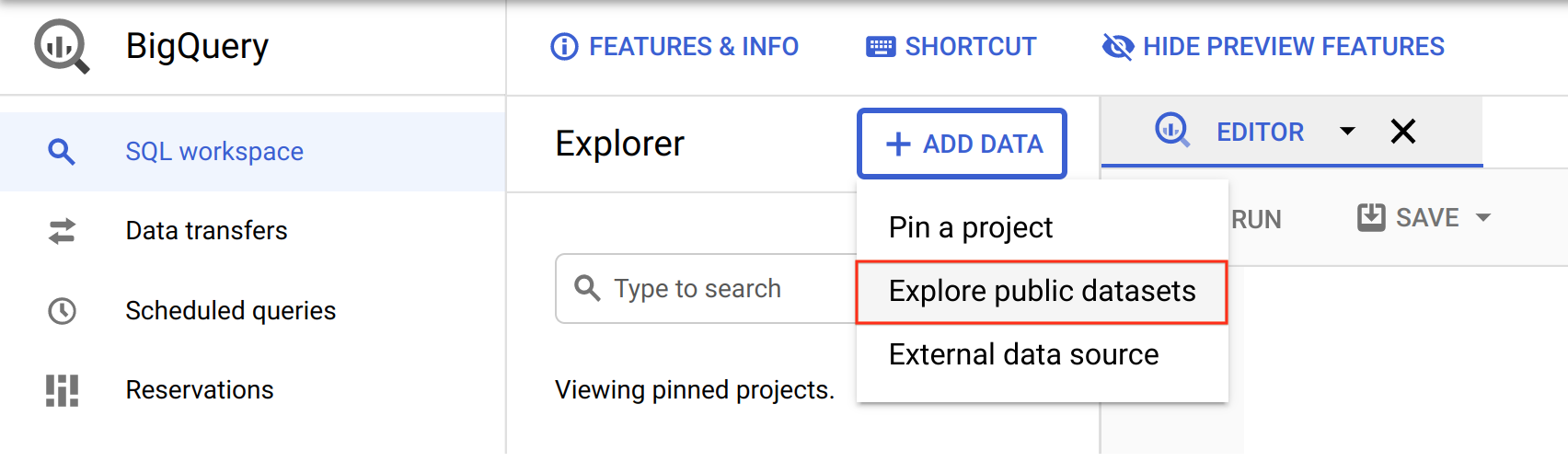

Click on the + ADD DATA link then select Explore public datasets:

In the search bar, enter "london", then select the London Bicycle Hires tile, then View Dataset.

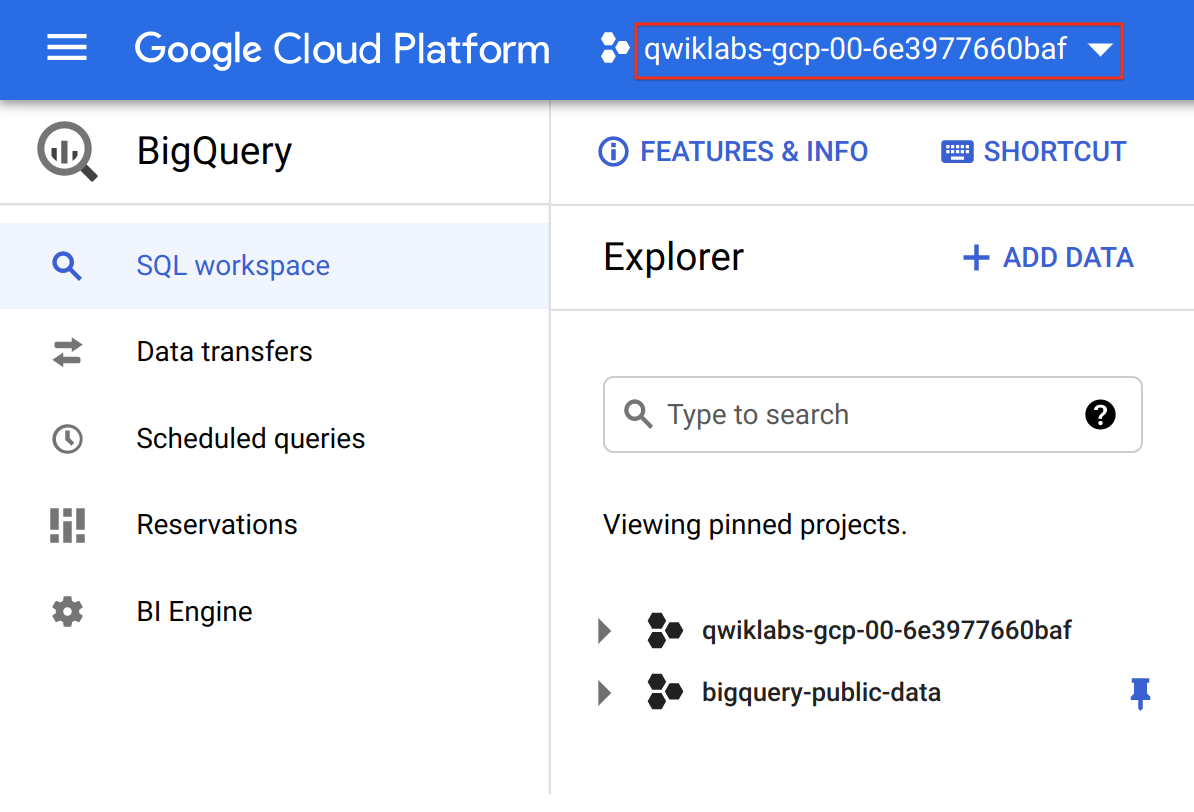

A new tab will open, and you will now have a new project called bigquery-public-data added to the Resources panel:

It's important to note that you are still working out of your lab project in this new tab. All you did was pull a publicly accessible project that contains datasets and tables into BigQuery for analysis — you didn't switch over to that project. All of your jobs and services are still tied to your Qwiklabs account. You can see this for yourself by inspecting the project field near the top of the console:

Click on bigquery-public-data > london_bicycles > cycle_hire. You now have data that follows the BigQuery paradigm:

- Google Cloud Project →

bigquery-public-data - Dataset →

london_bicycles - Table →

cycle_hire

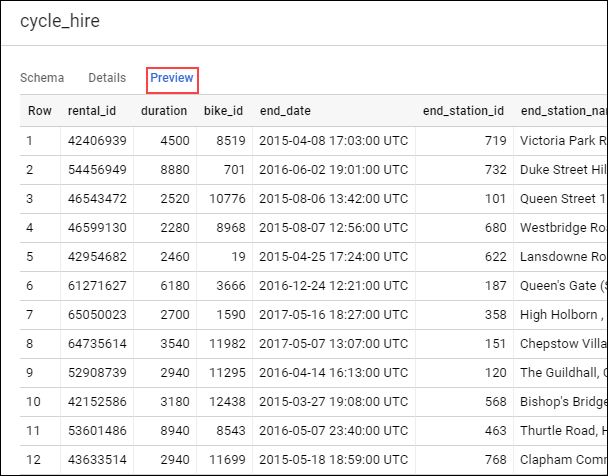

Now that you are in the cycle_hire table, in the center of the console click the Preview tab. Your page should resemble the following:

Inspect the columns and values populated in the rows. You are now ready to run some SQL queries on the cycle_hire table.

Running SELECT, FROM, and WHERE in BigQuery

You now have a basic understanding of SQL querying keywords and the BigQuery data paradigm and some data to work with. Run some SQL commands using this service.

If you look at the bottom right corner of the console, you will notice that there are 24,369,201 rows of data, or individual bikeshare trips taken in London between 2015 and 2017 (not a small amount by any means!)

Now take note of the seventh column key: end_station_name, which specifies the end destination of bikeshare rides. Before we get too deep, let's first run a simple query to isolate the end_station_name column. Copy and paste the following command in to the Query editor:

SELECT end_station_name FROM `bigquery-public-data.london_bicycles.cycle_hire`;

content_copy

Then click Run.

After ~20 seconds, you should be returned with 24369201 rows that contain the single column you queried for: end_station_name.

Why don't you find out how many bike trips were 20 minutes or longer?

Click COMPOSE NEW QUERY to clear the Query editor, then run the following query that utilizes the WHERE keyword:

SELECT * FROM `bigquery-public-data.london_bicycles.cycle_hire` WHERE duration>=1200;

content_copy

This query may take a minute or so to run.

SELECT * returns all column values from the table. Duration is measured in seconds, which is why you used the value 1200 (60 * 20).

If you look in the bottom right corner you see that 7,334,890 rows were returned. As a fraction of the total (7334890/24369201), this means that ~30% of London bikeshare rides lasted 20 minutes or longer (they're in it for the long haul!)