Build aarch64 Packages on your PC, with User Mode QEMU and binfmt_misc - sakaki-/gentoo-on-rpi-64bit GitHub Wiki

Compile resource-hungry aarch64 builds from a chroot on your PC, using QEMU's user-mode emulation, via Linux's binfmt_misc.

Although most Gentoo packages can straightforwardly be built from source on an RPi3/4 (particularly if you have distcc backing, as described elsewhere in this wiki), some are more problematic. For example, at the time of writing, dev-lang/rust cannot exploit distcc (nor will it cross-emerge), and tops out at about 3GiB of swap when emerged natively on an RPi3. This is very inefficient, and can lead to build times measured (literally) in days.

But there is another way! Using the binfmt_misc framework, you can have your PC's Linux kernel vector through a nominated "handler" (interpreter or emulator) whenever it is asked to invoke an alien userland binary whose initial bytes match a given pattern. As such, if we register a (statically compiled for x86-64) user-mode QEMU aarch64 emulator in this capacity, for files matching the aarch64 ELF header "magic", we can temporarily mount our RPi system's rootfs ('sysroot') on the PC, chroot into it, and then invoke emerge operations from there.

The technique may feel slightly surreal the first time you try it, but it works, and can be very useful. Because the chroot-ed system is not using QEMU in full system-emulation-mode (but rather, in user-mode, where only individual userland binaries are dynamically translated on demand, and the PC's kernel still ultimately handles all system calls), you can use all the memory and CPU threads available on your PC - so no more 1GiB RAM limit (or swapping)! Furthermore, on a sufficiently powerful x86-64 host, the improved parallelism available (for all "guest" compiler types, even rustc etc.) easily outweighs the loss in per-thread performance (due to binary translation) when compared to native RPi3 (or even RPi4) execution.

In what follows, I'll describe how to set this up when using my gentoo-on-rpi-64bit image's rootfs, and a Gentoo Linux PC. It isn't hard to do ^-^

Note for advanced users: while this user-mode QEMU / binfmt_misc / chroot approach can be used as a pure alternative to distcc on a sufficiently powerful PC host, the two approaches actually complement each other well. That's because:

- QEMU can add PC muscle behind tricky compiles (such as those using e.g. rustc) which do not distribute, but
- for those packages that do use distcc (in non-pump mode), QEMU can also perform client header pre-processing more rapidly (in general) than the RPi3 can natively, and
- the distcc x86-64 cross-compiler (server) running natively on the PC host (even if it is servicing requests from its own client in the QEMU chroot) will run more efficiently than an aarch64 compiler translated by QEMU.

The instructions which follow may easily be adapted for other target sysroot / host distribution combinations, but doing so is out of scope for this brief article.

Note that I also have prebuilt x86-64 versions of the static qemu-aarch64 and qemu-aarch64-wrapper binaries referred to in this section available for download on GitHub (here and here); you may prefer to use these to save time (or if using a non-Gentoo PC host). Use at your own risk! The wrapper is GPL-3 licensed; for the QEMU licenses, please see here.

First, you'll need to build a statically-linked x86-64 QEMU user-mode emulator for aarch64.

To do this, add the following line to /etc/portage/make.conf on your x86-64 Gentoo PC:

QEMU_USER_TARGETS="aarch64"

If you already have a QEMU_USER_TARGETS specified, simply add aarch64 to the existing list instead (separated by a space), if it is not already present.

Then, create the file /etc/portage/package.use/qemu, and add to it:

# build QEMU's user targets as static binaries
app-emulation/qemu static-user
# requirements of qemu (caused by static-user)
dev-libs/glib static-libs
sys-apps/attr static-libs
sys-libs/zlib static-libs
dev-libs/libpcre static-libs

That done, emerge QEMU:

root@gentoo-pc ~ # emerge --ask --verbose app-emulation/qemu

When complete, an aarch64 user-mode emulator should be present at /usr/bin/qemu-aarch64:

root@gentoo-pc ~ # file /usr/bin/qemu-aarch64
/usr/bin/qemu-aarch64: ELF 64-bit LSB executable, x86-64, version 1 (GNU/Linux), statically linked, for GNU/Linux 3.2.0, stripped

The output you see may of course differ slightly, depending on the current versions of software installed on your PC, but the important thing to note is that this is an x86-64 binary, and is statically linked (requires no dynamic libraries at run-time to function).

We'll not have the kernel call this QEMU application directly, however. Instead, we'll use a wrapper, to allow us to pass additional arguments to it when invoked - in this case, to stipulate the type of CPU to emulate (viz.: a Cortex A53 for RPi3 B/B+; if you prefer to emulate an RPi4, use cortex-a72 instead).

To do so, create a file called qemu-aarch64-wrapper.c, and put in it:

/*
 * Call QEMU binary with additional "-cpu cortex-a53" argument.
 *
 * Copyright (c) 2018 sakaki
 * License: GPL v3.0+
 *
 * Based on code from the Gentoo Embedded Handbook
 * ("General/Compiling_with_qemu_user_chroot")
 */

#include <string.h>
#include <unistd.h>

int main(int argc, char **argv, char **envp) {
    /* argv[0], "-cpu", model, then the original argv[1..argc-1], then NULL */
    char *newargv[argc + 3];

    newargv[0] = argv[0];
    newargv[1] = "-cpu";
    newargv[2] = "cortex-a53";   /* use "cortex-a72" to emulate an RPi4 */
    memcpy(&newargv[3], &argv[1], sizeof(*argv) * (argc - 1));
    newargv[argc + 2] = NULL;

    /* execve only returns on failure (giving a nonzero exit status) */
    return execve("/usr/local/bin/qemu-aarch64", newargv, envp);
}

Note that this expects the 'real' QEMU to live at /usr/local/bin/qemu-aarch64; we will copy it there (within the chroot rootfs) shortly.

Compile and link it (stripped):

root@gentoo-pc ~ # gcc -static -O3 -s -o qemu-aarch64-wrapper qemu-aarch64-wrapper.c
root@gentoo-pc ~ # file qemu-aarch64-wrapper
qemu-aarch64-wrapper: ELF 64-bit LSB executable, x86-64, version 1 (GNU/Linux), statically linked, for GNU/Linux 3.2.0, stripped

Again, your output may differ slightly, but the important point is that this too is an x86-64 static executable. We'll place it at /usr/local/bin/qemu-aarch64-wrapper within the chroot rootfs in a moment.

To check if your current kernel has the necessary binfmt_misc support configured already, issue:

root@gentoo-pc ~ # modprobe configs &>/dev/null
root@gentoo-pc ~ # zgrep CONFIG_BINFMT_MISC /proc/config.gz

If that returns CONFIG_BINFMT_MISC=y (preferred) or CONFIG_BINFMT_MISC=m, then you already have the necessary option selected, and should jump to the next section below now.

Otherwise, you'll need to configure the option, rebuild your kernel, and then restart.

If you use my buildkernel script on your PC, just issue:

root@gentoo-pc ~ # buildkernel --menuconfig

Or, if you use genkernel, instead issue:

root@gentoo-pc ~ # genkernel <your normal genkernel options> --menuconfig

Or, if you prefer to build your kernels manually, ensure that you have a current version of the kernel source tree in /usr/src/linux, and then issue:

root@gentoo-pc ~ # cd /usr/src/linux

root@gentoo-pc /usr/src/linux # make distclean

root@gentoo-pc /usr/src/linux # zcat /proc/config.gz > .config

root@gentoo-pc /usr/src/linux # make olddefconfig

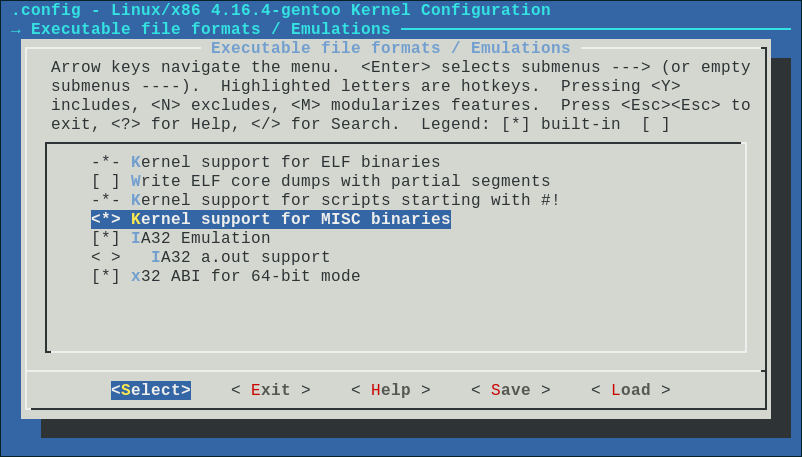

root@gentoo-pc /usr/src/linux # make menuconfigOnce the configuration tool starts, navigate to the Executable file formats / Emulations category, enter it, then select the Kernel support for MISC binaries option (by pressing y; doing so sets CONFIG_BINFMT_MISC=y in the kernel configuration file):

I have some brief instructions for using the kernel configuration tool, here.

Your output will likely differ, depending on kernel version etc. Note that you can also elect to modularize this option if desired, but I recommend building it in.

With that done, exit the tool by hitting Esc four times, then press y when prompted "Do you wish to save your new configuration?".

The tool will then exit, and, if you are using either the buildkernel or genkernel scripts, the kernel should automatically build and install. However, if working manually, you'll need to kick this off yourself:

root@gentoo-pc /usr/src/linux # make
root@gentoo-pc /usr/src/linux # make modules_install
root@gentoo-pc /usr/src/linux # make install
root@gentoo-pc /usr/src/linux # cd
root@gentoo-pc ~ #

For further notes on building custom kernels, please see the Gentoo Handbook.

Once the new kernel has been built and installed successfully, reboot to start using it.

That done, you are in a position to follow along with the rest of the tutorial, so continue reading immediately below.

Mounting your gentoo-on-rpi-64bit Image's rootfs and bootfs

Next, we need to mount the root and boot filing systems ('rootfs' and 'bootfs') of your RPi4/3's gentoo-on-rpi-64bit image on your PC.

If you are currently running the image on your RPi, shut this down and, when ready, disconnect power. Then take the microSD card from the RPi, and insert it into your PC.

You may need an adaptor to do this, depending on what slots are available on your PC, just as when you wrote the original project image. Users booting their RPi3 from a USB stick, or USB-adapted SSD, should of course just substitute that device for the microSD card in these instructions.

Locate the device path of the image (you can use the lsblk tool to help you). Then, create a mountpoint, and mount the image's root partition filing system ('sysroot') on your PC; this lives in the second partition of the image, so issue:

root@gentoo-pc ~ # mkdir -pv /mnt/piroot
root@gentoo-pc ~ # mount -v -t ext4 /dev/XXX2 /mnt/piroot

NB: substitute your actual image sysroot path for /dev/XXX2 in the above command. For example, on your PC, if say the microSD card was registered as /dev/sdc, the correct path would be /dev/sdc2; if instead it was registered as /dev/mmcblk0, the correct path would actually be /dev/mmcblk0p2, and so on.

Once mounted, you should be able to look at the files on the image sysroot on your PC:

root@gentoo-pc ~ # ls /mnt/piroot
bin dev firmware lib lost+found mnt proc run sys usr
boot etc home lib64 media opt root sbin tmp var

The actual layout you see may vary slightly, of course, depending on which version of the image you have, changes you have made yourself etc.

Next, because some operations will assume you have the boot filing system ('bootfs') mounted at /boot within this rootfs, let's also set that up now. Issue:

root@gentoo-pc ~ # mount -v -t vfat /dev/XXX1 /mnt/piroot/boot

NB: substitute your actual (image) bootfs path for /dev/XXX1 in the above command; this will be the same as for your rootfs above, but with a concluding "1" instead of "2". So, if for example your rootfs is on /dev/sdc2, you would use /dev/sdc1 here; if /dev/sdb2, use /dev/sdb1; if /dev/mmcblk0p2, use /dev/mmcblk0p1, and so on.

Then, if your mounted rootfs is on a slow device like a microSD card, it makes sense to leverage the host PC's storage for Portage's $TMPDIR in the chroot (by default, (/mnt/piroot)/var/tmp/portage), since this is where all the build action will happen, so we want it as fast as possible (if your RPi's image rootfs is on e.g. a fast SSD instead, you can safely skip this step).

Now, while it is possible to simply bind-mount your host PC's /var/tmp/portage directory over /mnt/piroot/var/tmp/portage to achieve this goal, that can cause collisions to occur, should you try to simultaneously emerge the same package on the host and chroot. As such, it is better to create a dedicated work directory on the host PC, and mount that.

Here you have two options; choose whichever better suits your situation:

Option 1: if your host PC has only modest amounts of RAM available, create the working directory on disk at an appropriate location, and then bind-mount this. So, for example, issue:

root@gentoo-pc ~ # [[ -d /var/tmp/pi-portage ]] || (mkdir /var/tmp/pi-portage; chown portage:portage /var/tmp/pi-portage; chmod 775 /var/tmp/pi-portage)
root@gentoo-pc ~ # mount -v --rbind /var/tmp/pi-portage /mnt/piroot/var/tmp/portage

Option 2: if your PC has copious RAM available (> 64GiB, say), it makes sense to create the working directory as a tmpfs in memory. So, for example, following this guide you could issue:

root@gentoo-pc ~ # mount -v -t tmpfs -o size=16G,mode=775,uid=portage,gid=portage,nr_inodes=0 tmpfs /mnt/piroot/var/tmp/portage

Now that we have the image rootfs mounted, we can prepare it for use in a binfmt_misc chroot by copying in the static QEMU and wrapper that we prepared earlier. Issue:

root@gentoo-pc ~ # cp -av /usr/bin/qemu-aarch64 /mnt/piroot/usr/local/bin/qemu-aarch64
root@gentoo-pc ~ # cp -av qemu-aarch64-wrapper /mnt/piroot/usr/local/bin/qemu-aarch64-wrapper

Of course, if you chose to download my prebuilt copy of these binaries instead, modify the above source paths as appropriate.

This preparation step need only be done once (for any given target image rootfs).

Note that it is fine to leave these binaries present, even when the image is booted 'natively' on an RPi3 (they won't be runnable in such a situation, but will do no harm).

Next, we need to define a binfmt_misc matching rule, which will instruct the PC's kernel to vector to our (/mnt/piroot)/usr/local/bin/qemu-aarch64-wrapper handler (and so on to (/mnt/piroot)/usr/local/bin/qemu-aarch64 -cpu cortex-a53) whenever an attempt is made to execute an aarch64 ELF binary.

To do so, create the file /etc/binfmt.d/qemu-aarch64.conf (the binfmt service only reads files in this directory that end in .conf), and put in it:

# emulate aarch64 ELF binaries using a static QEMU, with -cpu cortex-a53

:aarch64:M::\x7fELF\x02\x01\x01\x00\x00\x00\x00\x00\x00\x00\x00\x00\x02\x00\xb7:\xff\xff\xff\xff\xff\xff\xff\xfc\xff\xff\xff\xff\xff\xff\xff\xff\xfe\xff\xff:/usr/local/bin/qemu-aarch64-wrapper:

This may look complicated, but the details are actually straightforward. The fields in the file are colon separated. The first field just specifies a name for the rule, in this case 'aarch64' (creating a corresponding entry at /proc/sys/fs/binfmt_misc/aarch64), and the second, 'M', indicates that we are matching on some 'magic' (i.e., identifier) bytes at the start of the file. The third field is blank, implying a zero offset to said magic, and the next field contains the pattern to match. Then comes a mask, which is ANDed with the candidate file header bytes before matching, then the full path to the handler to invoke in case of a match, and lastly some flags (here, empty).

Note that the eighth byte of the mask field has been set to 0xfc, to allow running of gcc etc. (which has OSABI_GNU, not OSABI_SYSV, specified in its ELF header; see e.g. Debian bug #799120).

You can verify for yourself that this will work. Dump the start of the /bin/ls binary on your PC:

root@gentoo-pc ~ # file /bin/ls
/bin/ls: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 3.2.0, stripped
root@gentoo-pc ~ # hexdump --canonical /bin/ls | head -n 2
00000000 7f 45 4c 46 02 01 01 00 00 00 00 00 00 00 00 00 |.ELF............|
00000010 03 00 3e 00 01 00 00 00 80 54 00 00 00 00 00 00 |..>......T......|

And do the same for the /mnt/piroot/bin/ls binary, on the mounted rootfs:

root@gentoo-pc ~ # file /mnt/piroot/bin/ls
/mnt/piroot/bin/ls: ELF 64-bit LSB shared object, ARM aarch64, version 1 (SYSV), dynamically linked, interpreter /lib/ld-linux-aarch64.so.1, for GNU/Linux 3.7.0, stripped
root@gentoo-pc ~ # hexdump --canonical /mnt/piroot/bin/ls | head -n 2
00000000 7f 45 4c 46 02 01 01 00 00 00 00 00 00 00 00 00 |.ELF............|
00000010 03 00 b7 00 01 00 00 00 1c 5d 00 00 00 00 00 00 |.........]......|

As you can see, the first 19 bytes of the x86-64 ls binary are 7f454c4602010100000000000000000003003e. Masked with fffffffffffffffcfffffffffffffffffeffff, these give 7f454c4602010100000000000000000002003e. This does not match the target magic 7f454c460201010000000000000000000200b7 ("ELF" = 454c46). By contrast, the first 19 bytes of the aarch64 ls binary are 7f454c460201010000000000000000000300b7, which when masked give 7f454c460201010000000000000000000200b7. This does match.

Note that this rule will cause all attempted execution of aarch64 binaries on your PC to vector to the qemu-aarch64-wrapper handler; if you are emulating multiple different aarch64 processors, this may not be what you want. For most users, however, a direct vector like this is the most straightforward approach. Note also that although the RPi3 and RPi4 use different processors, they are both ARMv8-A architecture systems.

Save the file, and then restart the binfmt service to pick it up. This service should already be installed (and activated) on your PC, whether you are running OpenRC or systemd.

OpenRC users should issue:

root@gentoo-pc ~ # rc-service binfmt restart

Whereas, systemd users should issue:

root@gentoo-pc ~ # systemctl restart systemd-binfmt

Again, this only needs to be done once. The changes you have made will persist (on your PC) even if you reboot.

Next, check that the service has restarted correctly.

OpenRC users should issue:

root@gentoo-pc ~ # rc-service binfmt status

Whereas, systemd users should issue:

root@gentoo-pc ~ # systemctl status systemd-binfmt

to perform this check.

NB: if your kernel has binfmt_misc modularized (CONFIG_BINFMT_MISC=m; see earlier), rather than built in, you may need to manually modprobe it here (and add it to your on-boot autoloaded module set). Most modern systems will autoload the module, however, and in any event, if you have it built in instead (CONFIG_BINFMT_MISC=y, recommended) the problem will not arise.

If that was OK, you should now be able to see (on either OpenRC or systemd) that your handler has been registered:

root@gentoo-pc ~ # cat /proc/sys/fs/binfmt_misc/aarch64
enabled
interpreter /usr/local/bin/qemu-aarch64-wrapper
flags:
offset 0
magic 7f454c460201010000000000000000000200b7
mask fffffffffffffffcfffffffffffffffffeffff

Remember, the above path, /usr/local/bin/qemu-aarch64-wrapper, will resolve once we chroot into the mounted rootfs.

We are now ready to prepare, and then enter, the chroot itself!

Our first chroot preparation task is to ensure that the host system's special filesystems (viz.: /dev, /proc, and /sys) are available inside it. To do so, we'll bind-mount them (for more details, please see here and here):

root@gentoo-pc ~ # mount -v --rbind /dev /mnt/piroot/dev
root@gentoo-pc ~ # mount -v --make-rslave /mnt/piroot/dev
root@gentoo-pc ~ # mount -v -t proc /proc /mnt/piroot/proc
root@gentoo-pc ~ # mount -v --rbind /sys /mnt/piroot/sys
root@gentoo-pc ~ # mount -v --make-rslave /mnt/piroot/sys

We don't bind-mount /tmp here, since our primary goal is to set up a build system, not one in which we can e.g. run aarch64 X applications on the host machine's X server. You can of course add this if required; be sure to copy in an appropriate .Xauthority file if you do (see e.g. these notes).

Next, to ensure DNS works as expected, we'll copy the host system's /etc/resolv.conf file into the mounted rootfs (retaining the original so it can be restored later):

root@gentoo-pc ~ # mv -v /mnt/piroot/etc/resolv.conf{,.orig}
root@gentoo-pc ~ # cp -v -L /etc/resolv.conf /mnt/piroot/etc/

Next, check that /dev/shm on your host PC is an actual tmpfs mount, and not a symlink (if the latter, it will become invalid after the chroot):

root@gentoo-pc ~ # test -L /dev/shm && echo "FIXME" || echo "OK"

If the above test returned "OK", your system is already fine, so skip ahead to the next step now.

However, if it returned "FIXME", then issue the following to make /dev/shm a proper tmpfs mount:

root@gentoo-pc ~ # rm -v /dev/shm && mkdir -v /dev/shm
root@gentoo-pc ~ # mount -v -t tmpfs -o nosuid,nodev,noexec shm /dev/shm
root@gentoo-pc ~ # chmod -v 1777 /dev/shm

Now we are ready to enter the chroot! Issue:

root@gentoo-pc ~ # chroot /mnt/piroot /bin/bash --login
root@gentoo-pc / #

We're in, but the console prompt is a bit confusing! As everything we do (at this prompt) is running inside a chroot-ed aarch64 system, we should modify it for clarity. Issue:

root@gentoo-pc / # export PS1="${PS1//\\h/pi64-chroot}"
root@pi64-chroot / # cd /root
root@pi64-chroot ~ #

Now that the chroot is set up, you can easily open one or more additional shell prompts 'inside' it. To do so (at your option), just start up another root terminal window on your PC, and therein issue:

root@gentoo-pc ~ # chroot /mnt/piroot /bin/bash --login
root@gentoo-pc / # export PS1="${PS1//\\h/pi64-chroot}"
root@pi64-chroot / # cd /root
root@pi64-chroot ~ #

There is no need to repeat any of the mount steps etc. when doing this.

Within the chroot terminal(s), the path /mnt/piroot on the 'host' will appear as the "true" root, / (hence the name of the command, change root). Paths on the host that haven't been bind-mounted, such as '/bin', will be inaccessible: /bin inside the chroot refers to the path /mnt/piroot/bin outside. For the avoidance of doubt, if you open another terminal on your PC's desktop, that will of course be (initially at least) outside of the chroot, with a 'normal' view of the filesystem.

Have a look around your chroot 'jail':

root@pi64-chroot ~ # ls /
bin dev firmware lib lost+found mnt proc run sys usr
boot etc home lib64 media opt root sbin tmp var

That was an aarch64 ls you just ran (courtesy of QEMU and the binfmt_misc machinery), but the kernel is still that of your PC (as this is a user-mode emulation only; note however that the 'machine' component output from uname --all now shows as aarch64):

root@pi64-chroot ~ # file $(which ls)
/bin/ls: ELF 64-bit LSB shared object, ARM aarch64, version 1 (SYSV), dynamically linked, interpreter /lib/ld-linux-aarch64.so.1, for GNU/Linux 3.7.0, stripped
root@pi64-chroot ~ # uname --all
Linux gentoo-pc 4.16.3-gentoo #4 SMP Sun Apr 22 13:54:47 BST 2018 aarch64 GNU/Linux

Your output will of course differ somewhat (different kernel version etc.), but the take-home point is: you just ran an aarch64 program on your x86-64 PC system.

Indeed, this has been happening behind the scenes from the moment you launched /bin/bash in the chroot ^-^.

Nevertheless, you can do much more than that! As we are chroot-ed inside a full Gentoo system (in this terminal window), we can leverage your PC's (hopefully!) larger memory and parallel processing capability, allowing us to emerge packages, such as dev-lang/rust, whose build requirements (swap etc.) are prohibitive when running 'natively' on an RPi3 (or even RPi4, for packages such as Chromium).

Another advantage is potentially faster media access speeds, particularly if your PC has USB 3 access to your image's rootfs (microSD card, USB stick or SSD).

So let's try that out next!

You can perform (almost) any standard emerge or ebuild operation within the chroot (some exceptions are described in a later section).

Note: if using modern versions of Portage, you may need to turn off pid-sandbox in FEATURES to allow emerge to work correctly in a chroot environment. See e.g. this thread for further details.

For example, suppose we wanted to build the package dev-lang/rust-1.25.0 (and any dependencies), using all available (PC) threads where possible, and inhibiting the specified MAKEOPTS value from being subsequently 'clamped' too low during the build by protective package.bashrc entries in the custom profile.

To do so, issue (e.g.):

root@pi64-chroot ~ # export MAKEOPTS="-j$(($(nproc)+1)) -l$(nproc)"
root@pi64-chroot ~ # export SUPPRESS_BASHRC_MAKEOPTS=1
root@pi64-chroot ~ # emerge --ask --verbose ~dev-lang/rust-1.25.0

This will attempt to build the most recent revision of rust-1.25.0, together with any dependencies (such as e.g. dev-util/cargo). For further background on the Portage package management system, please see my notes here.

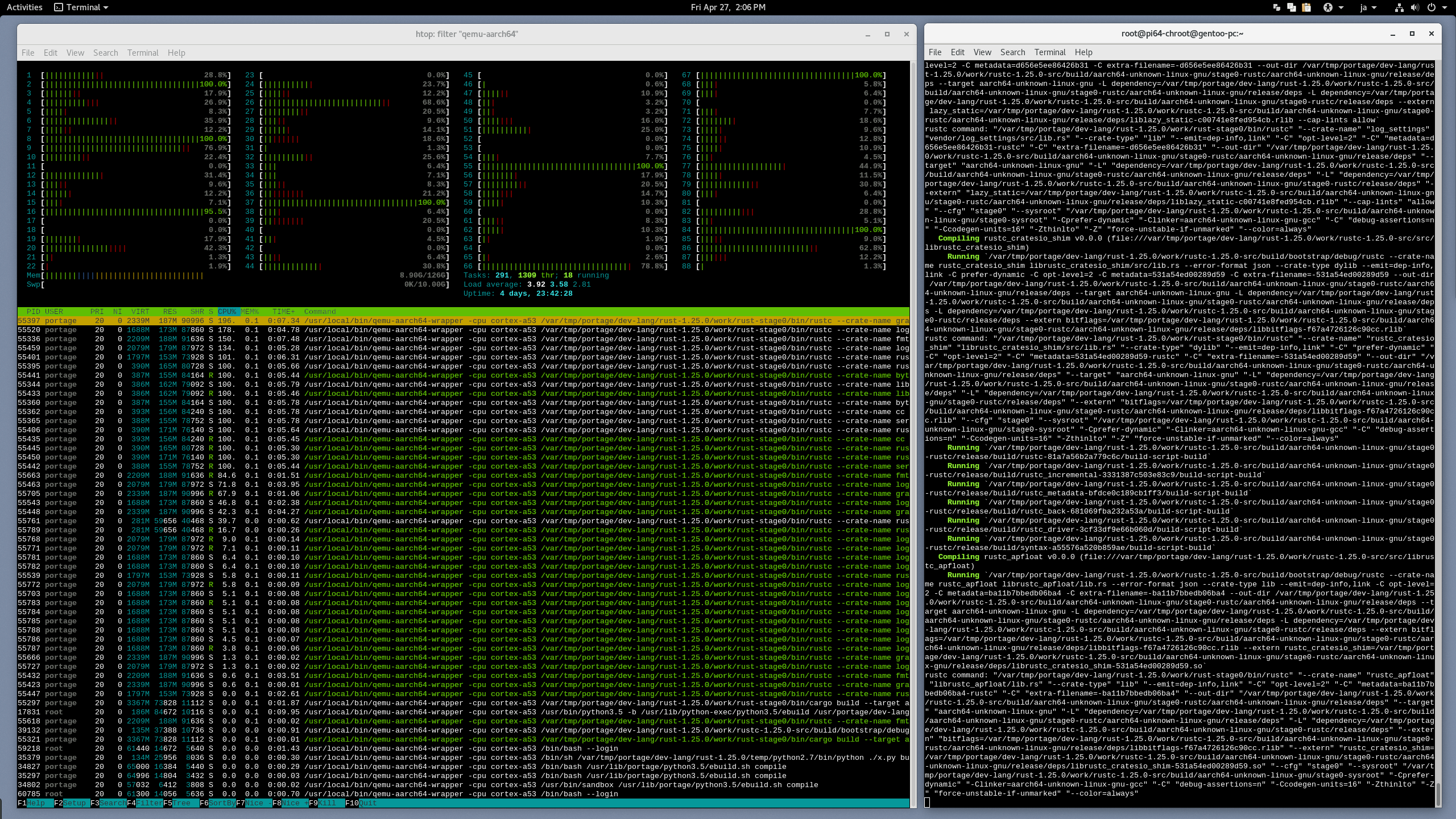

Confirm when prompted, and off it goes! Here's a screenshot of just such a (highly parallel) binfmt_misc chroot build of an aarch64 rust-1.25.0 in action, on the author's PC workstation:

While the process is running, you can monitor the spawned tasks using a tool such as htop (which allows you to e.g. easily apply a command filter, such as "qemu-aarch64", by pressing F4), as shown above.

For most packages, an emerge like this will run successfully to completion (if that applies to you, then just skip ahead to the next section, now).

However, sometimes you will encounter errors (or lock-ups) due to parallelism, and these can be dealt with successfully simply by killing the emerge if necessary (using Ctrl-c), and then restarting.

Note, however, that after you have stopped the emerge (or it has failed out by itself), but prior to restarting it, you should first clean up any null object files and lock 'dotfiles', which may have been left from the previous run. To do this for our example package (dev-lang/rust-1.25.0), you would issue:

root@pi64-chroot ~ # find /var/tmp/portage/dev-lang/rust-1.25.0 -empty -name '*.o' -delete
root@pi64-chroot ~ # find /var/tmp/portage/dev-lang/rust-1.25.0 -name '.*lock*' -delete

Then, restart the build. You need to use the lower-level ebuild command (rather than emerge) to do this: it should try to pick up where it left off. Suppose, for example, that all dependencies of rust-1.25.0 had built successfully, but that the rust build itself had frozen. Then, after stopping it and performing the clean-up as just described, you could restart the build manually by issuing:

root@pi64-chroot ~ # ebuild /usr/portage/dev-lang/rust/rust-1.25.0.ebuild merge

NB: do not use the clean directive to ebuild here: otherwise the whole process will start from the beginning, and all build progress will be lost.

Hopefully your build will now run to completion correctly.

Congratulations, you should now have the target aarch64 package (in the case of this example, dev-lang/rust-1.25.0) installed on the image rootfs!

Since our example app is non-graphical, you can even try it out immediately, in the chroot; issue:

root@pi64-chroot ~ # rustc --version
rustc 1.25.0-dev

Furthermore, because the gentoo-on-rpi-64bit image defaults to having the buildpkg FEATURES directive set, a binary package will also automatically have been created in $PKGDIR (which is /usr/portage/packages, unless you have customized it), as a by-product of the emerge, and through this you can efficiently install your new package on other RPi3 units (running the same OS).

In the case of our example, the created binary package is /usr/portage/packages/dev-lang/rust-1.25.0.tbz2. If you copy this to the same location on another gentoo-on-rpi-64bit RPi, and then on that machine run:

pi64 ~ # emaint binhost --fix

to pick it up, you can then simply issue (e.g.):

pi64 ~ # emerge --ask --verbose --usepkg ~dev-lang/rust-1.25.0

And you should see (unless you have e.g. different USE flags set for this package on the two images) that Portage will offer to install the binary package, which is much faster than building locally.

There are of course many more sophisticated ways of dealing with binary packages in Gentoo: please see this article for further details.

Although the vast majority of packages will build successfully when using a user-mode QEMU binfmt_misc chroot as described here, not all will.

If your package build involves javac (e.g., you are trying to emerge dev-java/icedtea) or invokes some of the more exotic syscalls (e.g. dev-lang/go), the build may fail in the chroot. In that case, simply retry it with the image booted natively on an RPi4 / RPi3 (or under a full system-mode QEMU emulation).

Note that you may also see a number of QEMU errors emitted during regular builds, of the form "qemu: Unsupported syscall: nnn". This occurs because QEMU has to map the aarch64 Linux kernel call numbers (and arguments) into a form comprehensible by your PC's x86-64 kernel - and the mapping code, while pretty good, is not complete. You can see a lookup table for these system calls here. The two most common unsupported syscalls encountered when emerging are:

- 277: seccomp: this is called by the scanelf program during Portage QA checks (and a few other places). It may safely be ignored, as scanelf still produces valid output even when seccomp is unavailable. To suppress the message, you can re-emerge app-misc/pax-utils (which supplies scanelf) with the -seccomp USE flag (thanks to deagol for this tip).
- 285: copy_file_range: this call is emulated by modern glibc where not available, and as it only became available with Linux 4.5, programs should have alternative mechanisms in place anyway if invoking it fails. Therefore, this error may also generally safely be ignored.

Some of these issues may have been resolved with the 3.0.0 release of app-emulation/qemu; this remains to be checked.

When you're finished working with the chroot (and have no builds running therein), you can exit from it, remembering to restore the original /etc/resolv.conf:

root@pi64-chroot ~ # rm /etc/resolv.conf
root@pi64-chroot ~ # mv -v /etc/resolv.conf{.orig,}
root@pi64-chroot ~ # exit
root@gentoo-pc ~ #

You're now back natively in the 'outer' system within this terminal.

If you elected to enter the chroot from one or more additional shell prompts earlier, be sure to exit from each of these also, before continuing.

Then, dismount the special mounts we set up. First, if you chose to set up working space on the host earlier, dismount that:

root@gentoo-pc ~ # umount -v /mnt/piroot/var/tmp/portage

Then (all users) dismount the remaining mounts:

root@gentoo-pc ~ # umount -lv /mnt/piroot/{proc,sys,dev,boot,}
root@gentoo-pc ~ # sync

That's ell-vee, the '-l' being the short form of --lazy, without which you have to follow a very strict order to get dismounting to work correctly.

Once this completes, you can safely remove your gentoo-on-rpi-64bit microSD card (or other media) from your PC, insert it into your (powered off) RPi3 / RPi4, and boot it. Your newly built package(s) should now be usable!

To re-enter the chroot again with the same image, simply follow the process from "Mounting your gentoo-on-rpi-64bit Image's rootfs and bootfs" above, but omit the sections marked "(One-Off)" (viz.: "Preparing the Mounted rootfs (One-Off)" and "Adding a binfmt_misc Matching Rule (One-Off)").

Have fun ^-^

You may also find the following Gentoo Wiki articles useful (I did!):

Wiki content license:
