ReLU - rugbyprof/5443-Data-Mining GitHub Wiki

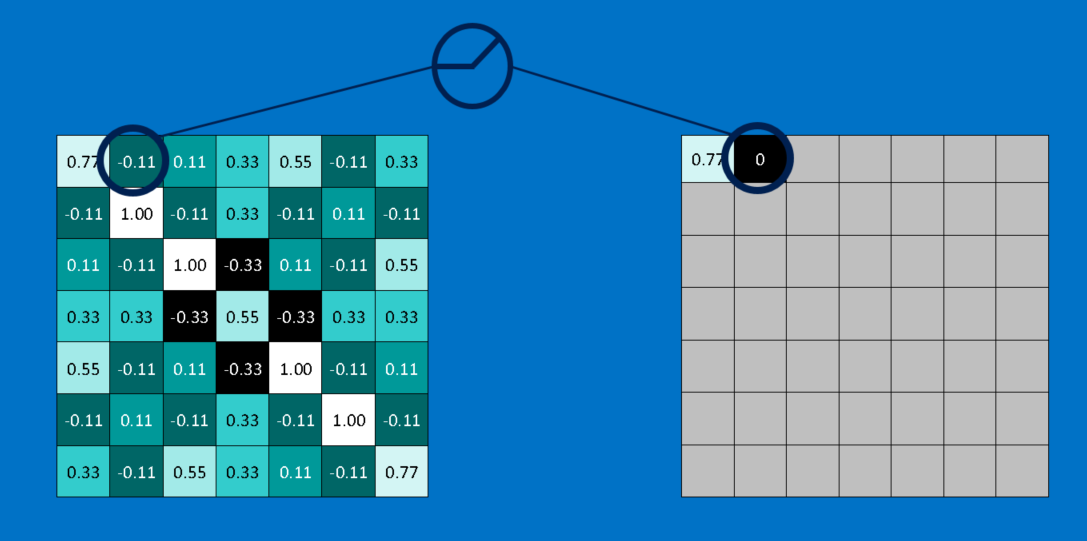

The Rectified Linear Unit (ReLU) is a non-linear operation. Its math is very simple: wherever a negative number occurs, swap it out for a 0. The rectifier is the activation function f(x) = max(0, x). This helps the CNN stay mathematically healthy by keeping learned values from getting stuck near 0 or blowing up toward infinity. It’s the axle grease of CNNs: not particularly glamorous, but without it they don’t get very far. ReLU is used instead of a linear activation function to add non-linearity to the network. It is also popular because it can be computed more efficiently than more conventional activation functions such as the sigmoid and the hyperbolic tangent.
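As a minimal sketch of the rule above, the snippet below applies f(x) = max(0, x) to a few sample values in plain Python. The names `relu` and `relu_layer` and the sample inputs are made up for illustration; in practice a deep learning library would supply its own vectorized version.

```python
def relu(x: float) -> float:
    """Return x if it is positive, otherwise 0."""
    return max(0.0, x)


def relu_layer(values):
    """Apply ReLU element-wise to a list of pre-activation values."""
    return [relu(v) for v in values]


# Hypothetical pre-activation values, chosen only for illustration.
pre_activations = [-2.0, -0.5, 0.0, 1.5, 3.0]
activated = relu_layer(pre_activations)
print(activated)  # [0.0, 0.0, 0.0, 1.5, 3.0]
```

Every negative value becomes 0 and every positive value passes through unchanged, which is all the function does.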

It is one of the activation functions used in neural networks.

References:

Source: https://github.com/Kulbear/deep-learning-nano-foundation/wiki/ReLU-and-Softmax-Activation-Functions#rectified-linear-units

Source: http://brohrer.github.io/how_convolutional_neural_networks_work.html

Most recent deep learning networks use rectified linear units (ReLUs) for the hidden layers. A rectified linear unit outputs 0 if the input is less than 0, and passes the input through unchanged otherwise. That is, if the input is greater than 0, the output is equal to the input. ReLUs' machinery is more like a real neuron in your body. One way ReLUs improve neural networks is by speeding up training. The ReLU function is f(x) = max(0, x).

Its limitation is that it should only be used within the hidden layers of a neural network model. Another problem with ReLU is that some units can be fragile during training and can die: a weight update can push a neuron into a state where it never activates on any data point again. Put simply, ReLU can result in dead neurons. A modification called Leaky ReLU was introduced to fix the problem of dying neurons. It gives negative inputs a small slope to keep the updates alive. (https://towardsdatascience.com/activation-functions-and-its-types-which-is-better-a9a5310cc8f)
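The difference is easiest to see in the gradients. The sketch below compares the derivative of ReLU with that of Leaky ReLU for a negative input, in plain Python. The function names and the slope `alpha = 0.01` are assumptions for illustration; the source does not specify a value for the small slope.

```python
def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: pass positive inputs through, scale the rest by alpha.

    alpha = 0.01 is an assumed default, not a value taken from the source.
    """
    return x if x > 0 else alpha * x


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    """Derivative of Leaky ReLU: 1 for positive inputs, alpha otherwise."""
    return 1.0 if x > 0 else alpha


x = -2.0  # a negative pre-activation, as a "dead" neuron would see
print(relu_grad(x))        # 0.0  -> no gradient flows back, so no weight update
print(leaky_relu(x))       # -0.02
print(leaky_relu_grad(x))  # 0.01 -> a small gradient still flows back
```

With plain ReLU, a neuron whose input is negative for every data point receives a zero gradient and stops updating. With the leaky variant the gradient is small but non-zero, so the neuron still has a chance to recover.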