Performance and benchmarking - rosepearson/GeoFabrics GitHub Wiki

The Python library Dask is used to support parallel processing during the generation of DEMs from LiDAR. It is not currently applied to the offshore interpolation of bathymetry values.

Dask requires two parameters: the number of CPU cores to use, and the chunk_size (in pixels) allocated to each worker. More details on these parameters, and on how to encode them in the instruction file, can be found on the instruction file contents page.
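To make the two parameters concrete, here is a minimal sketch of the chunking idea. It is not GeoFabrics code and does not use Dask. Instead it uses the standard library's `ThreadPoolExecutor` as a stand-in scheduler. The helper names `make_tiles`, `process_raster` and `tile_area` are hypothetical, and the sketch assumes square chunk_size × chunk_size tiles, with smaller tiles at the raster edges.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, List, Tuple

# (row_start, row_stop, col_start, col_stop) in pixels
Tile = Tuple[int, int, int, int]


def make_tiles(n_rows: int, n_cols: int, chunk_size: int) -> List[Tile]:
    """Split an n_rows x n_cols raster into chunk_size x chunk_size tiles.

    Edge tiles are smaller when the raster is not a multiple of chunk_size.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    tiles = []
    for r in range(0, n_rows, chunk_size):
        for c in range(0, n_cols, chunk_size):
            tiles.append((r, min(r + chunk_size, n_rows), c, min(c + chunk_size, n_cols)))
    return tiles


def process_raster(
    n_rows: int,
    n_cols: int,
    chunk_size: int,
    number_of_cores: int,
    process_tile: Callable[[Tile], Any],
) -> List[Any]:
    """Hand each tile to a pool of number_of_cores workers (hypothetical helper)."""
    tiles = make_tiles(n_rows, n_cols, chunk_size)
    with ThreadPoolExecutor(max_workers=number_of_cores) as pool:
        return list(pool.map(process_tile, tiles))


def tile_area(tile: Tile) -> int:
    """Toy per-tile work: the number of pixels in the tile."""
    r0, r1, c0, c1 = tile
    return (r1 - r0) * (c1 - c0)


areas = process_raster(1000, 1500, chunk_size=512, number_of_cores=4, process_tile=tile_area)
print(len(areas), sum(areas))  # 6 tiles covering all 1500000 pixels
```

Generally, a smaller chunk_size produces more tiles, which spreads work more evenly across cores but adds per-task overhead, while a larger chunk_size needs more memory per worker. That trade-off is what benchmarking is meant to measure for a given dataset.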

Benchmarking

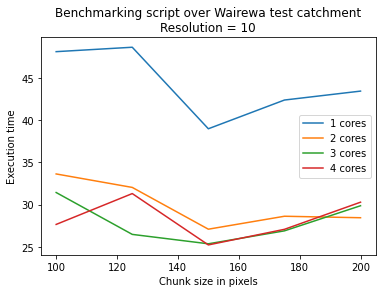

As mentioned in the introduction, a benchmarking.py script is provided under the src folder. Its purpose is to help GeoFabrics users select the best chunk_size and number_of_cores for a dataset they would like to process.

benchmarking.py expects a single argument, instructions, which can be provided either as an argument to the main function or as the command-line option --instructions "path/to/instructions.json". As covered in detail in instruction file contents, the instructions must also include a benchmarking section containing delete_dems, numbers_of_cores, chunk_sizes, and title.
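The sketch below illustrates the interface described above: a `--instructions` command-line option and a check that the four benchmarking keys are present. It is hypothetical and is not the contents of benchmarking.py. It assumes the benchmarking section sits at the top level of the instructions JSON, and the example values are placeholders. Check instruction file contents for the actual layout and value types.

```python
import argparse
import json
from typing import Any, Dict, List, Optional

# Key names taken from the benchmarking section described above.
REQUIRED_KEYS = ("delete_dems", "numbers_of_cores", "chunk_sizes", "title")


def read_instructions(path: str) -> Dict[str, Any]:
    """Load an instructions JSON file from disk."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def load_benchmarking_section(instructions: Dict[str, Any]) -> Dict[str, Any]:
    """Return the benchmarking section, checking that the expected keys exist.

    Assumption: the section is a top-level "benchmarking" key.
    """
    section = instructions.get("benchmarking")
    if section is None:
        raise KeyError("instructions have no 'benchmarking' section")
    missing = [key for key in REQUIRED_KEYS if key not in section]
    if missing:
        raise KeyError(f"benchmarking section is missing: {missing}")
    return section


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the --instructions option (hypothetical CLI sketch)."""
    parser = argparse.ArgumentParser(description="Benchmarking CLI sketch")
    parser.add_argument("--instructions", required=True, help="path/to/instructions.json")
    return parser.parse_args(argv)


# Placeholder values, for illustration only.
example = {
    "benchmarking": {
        "delete_dems": True,
        "numbers_of_cores": [1, 2, 4],
        "chunk_sizes": [200, 400, 800],
        "title": "example benchmark",
    }
}
section = load_benchmarking_section(example)
args = parse_args(["--instructions", "path/to/instructions.json"])
print(args.instructions, section["numbers_of_cores"], section["chunk_sizes"])
```

In a real run, `read_instructions(args.instructions)` would replace the inline `example` dictionary.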

At the end of its execution, benchmarking.py produces a plot showing the execution time of each run against chunk size for a given number of cores.
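One way to build the data behind such a plot is to time every combination of numbers_of_cores and chunk_sizes. This is a minimal sketch, not the script's implementation. `run_once` is a hypothetical callable standing in for one DEM generation run, and `fake_run` is a toy workload included only so the example executes.

```python
import time
from typing import Callable, Dict, Iterable, List, Tuple

# number_of_cores -> [(chunk_size, seconds), ...]
Timings = Dict[int, List[Tuple[int, float]]]


def time_grid(
    numbers_of_cores: Iterable[int],
    chunk_sizes: List[int],
    run_once: Callable[[int, int], None],
) -> Timings:
    """Time run_once for every (number_of_cores, chunk_size) pair."""
    timings: Timings = {}
    for cores in numbers_of_cores:
        timings[cores] = []
        for chunk_size in chunk_sizes:
            start = time.perf_counter()
            run_once(cores, chunk_size)
            timings[cores].append((chunk_size, time.perf_counter() - start))
    return timings


def best_setting(timings: Timings) -> Tuple[int, int, float]:
    """Return (number_of_cores, chunk_size, seconds) for the fastest run."""
    return min(
        ((cores, chunk, seconds) for cores, runs in timings.items() for chunk, seconds in runs),
        key=lambda item: item[2],
    )


def fake_run(cores: int, chunk_size: int) -> None:
    """Hypothetical stand-in for a DEM generation run: a small CPU-bound loop."""
    sum(range(chunk_size * 1000 // cores))


timings = time_grid([1, 2, 4], [200, 400, 800], fake_run)
cores, chunk_size, seconds = best_setting(timings)
print(f"fastest: {cores} cores, chunk_size {chunk_size} ({seconds:.4f} s)")
```

Each `timings[cores]` list holds the (chunk size, seconds) points for one line of the plot, so it could be passed to a plotting library such as matplotlib. Taking the minimum is a simple selection rule; repeating each run and averaging helps smooth out timing noise.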