Attention is all you need - notitiam/ML-paper-notes GitHub Wiki


All images are from Andrew Ng's deeplearning.ai Course 5: Sequence Models.


Existing language models (RNN + encoder-decoder)

- Problems:
  - The encoder compresses the whole input into a single fixed-length vector
  - The network forgets earlier information when sequences are too long



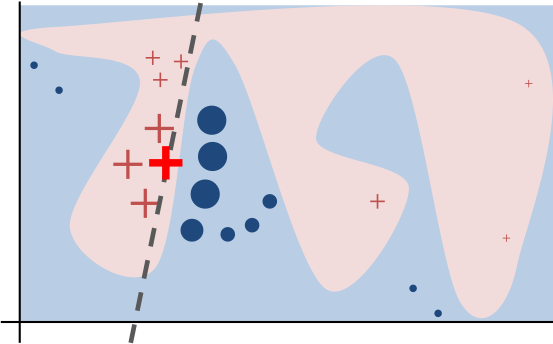

Intuition of attention

- Attention model (encoder-decoder attention)
  - Look at a small window of the input sequence, plus the previous output, to generate the next output


  - `w<t,t'>`: weight given to input position `t'` when generating output `t`; the weights are produced by a small neural network whose inputs are the previous output and the encoder activations

  - Other types of attention are used for non-NLP applications
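
A minimal sketch of how the weights `w<t,t'>` could be computed, assuming (as in the course) that the "previous output" is the decoder's previous hidden state, that the small network is a single `tanh` hidden layer (additive, Bahdanau-style scoring), and that a softmax normalises the scores so the weights over all `t'` sum to 1. The function names, sizes and random parameters are hypothetical; in a real model the parameters are learned together with the encoder and decoder.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax: the returned weights are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def score(prev_state, activation, W, v):
    """Hypothetical small network: e<t,t'> = v . tanh(W [prev_state; activation])."""
    x = prev_state + activation  # list concatenation of the two inputs
    hidden = [math.tanh(sum(w_ij * x_j for w_ij, x_j in zip(row, x))) for row in W]
    return sum(v_i * h_i for v_i, h_i in zip(v, hidden))

def attend(prev_state, activations, W, v):
    """Return the weights w<t,t'> over every input position t' and the context vector."""
    scores = [score(prev_state, a, W, v) for a in activations]
    weights = softmax(scores)
    dim = len(activations[0])
    # Context = weighted average of the encoder activations.
    context = [sum(w * a[k] for w, a in zip(weights, activations)) for k in range(dim)]
    return weights, context

random.seed(0)
state_dim, act_dim, hidden_dim = 3, 4, 5
# Random stand-ins for learned parameters.
W = [[random.gauss(0, 1) for _ in range(state_dim + act_dim)] for _ in range(hidden_dim)]
v = [random.gauss(0, 1) for _ in range(hidden_dim)]
prev_state = [0.1, -0.2, 0.3]  # previous decoder state (toy values)
activations = [[random.gauss(0, 1) for _ in range(act_dim)] for _ in range(6)]  # one per input word

weights, context = attend(prev_state, activations, W, v)
print([round(w, 3) for w in weights])
```

Because the context is a weighted average, each output step can concentrate on a different part of the input instead of relying on one fixed vector.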


Transformer model

- From 'Attention is all you need' https://arxiv.org/abs/1706.03762

- Google Brain

- No recurrence (no RNN in the encoder or decoder), only attention plus feed-forward layers

- Feed-forward architecture
  - input => activation (hidden state)
  - activation + previous output => output

- Multi-head attention
  - For each input word, compute `h` different attentions (`h = 8` in the Transformer)
    - Allows focusing on different parts of the sentence


- Positional encoding added to the input and output embeddings
  - Encodes position explicitly, since there is no recurrence to capture word order

- Masked attention in the decoder
  - Prevents attending to future output positions during training

- Attention in encoder as well as decoder
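
A toy sketch of the multi-head attention bullet in plain Python, assuming the scaled dot-product attention from the paper, softmax(QK^T / sqrt(d_k)) V, where each head has its own query/key/value projections and the head outputs are concatenated and projected back to `d_model`. The random matrices stand in for learned weights, and `d_model = 16` is a hypothetical small size (the paper uses `d_model = 512` with `h = 8`, so each head works in 64 dimensions).

```python
import math
import random

def matmul(A, B):
    """Multiply an (n x m) matrix by an (m x p) matrix; matrices are lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, computed one query row at a time."""
    d_k = len(K[0])
    weights = [softmax([sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]) for q in Q]
    return matmul(weights, V), weights

def random_matrix(rows, cols, rng):
    """Hypothetical stand-in for a learned weight matrix."""
    return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(X, h, rng):
    """Self-attention over the rows of X (one row per word) using h heads."""
    d_model = len(X[0])
    assert d_model % h == 0, "d_model must be divisible by h"
    d_k = d_model // h
    head_outputs, head_weights = [], []
    for _ in range(h):
        # Each head projects the words into its own d_k-dimensional query/key/value space.
        W_q, W_k, W_v = (random_matrix(d_model, d_k, rng) for _ in range(3))
        out, weights = scaled_dot_product_attention(matmul(X, W_q), matmul(X, W_k), matmul(X, W_v))
        head_outputs.append(out)
        head_weights.append(weights)
    # Concatenate the h head outputs for each word, then project back to d_model.
    concat = [sum((out[i] for out in head_outputs), []) for i in range(len(X))]
    W_o = random_matrix(d_model, d_model, rng)
    return matmul(concat, W_o), head_weights

rng = random.Random(0)
X = random_matrix(5, 16, rng)  # 5 words, d_model = 16 (toy embeddings)
Y, head_weights = multi_head_attention(X, h=8, rng=rng)
print(len(Y), len(Y[0]), len(head_weights))  # 5 16 8
```

Each of the 8 entries in `head_weights` is a separate 5 x 5 attention pattern, which is what lets different heads focus on different parts of the sentence.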
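
For the positional encoding bullet: the paper uses fixed sinusoids, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)), added element-wise to the embeddings. A minimal sketch of that formula; the function names and toy sizes are hypothetical.

```python
import math

def positional_encoding(num_positions, d_model):
    """Even dimensions use sin, odd dimensions use cos; wavelengths grow geometrically with i."""
    pe = []
    for pos in range(num_positions):
        row = []
        for j in range(d_model):
            i = j // 2  # index of the (sin, cos) dimension pair
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def add_positional_encoding(embeddings):
    """Add the encoding element-wise to each embedding (both have size d_model)."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, enc)] for emb, enc in zip(embeddings, pe)]

pe = positional_encoding(4, 8)
print([round(x, 3) for x in pe[0]])  # → [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]

embeddings = [[1.0] * 8 for _ in range(3)]  # 3 toy word embeddings
with_pos = add_positional_encoding(embeddings)
print(with_pos[0])  # → [1.0, 2.0, 1.0, 2.0, 1.0, 2.0, 1.0, 2.0]
```

Every position gets a distinct vector, so identical words at different positions end up with different inputs even though nothing is recurrent.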
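
For the masked decoder attention bullet, a minimal sketch assuming the approach described in the paper: scores for illegal (future) positions are blocked before the softmax, which is equivalent to setting them to minus infinity, so position `i` gives zero weight to every position `j > i`. The score values are made up for illustration.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j, i.e. only when j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, mask):
    """Softmax over allowed positions only; blocked positions get weight exactly 0."""
    out = []
    for row, allowed in zip(scores, mask):
        m = max(s for s, ok in zip(row, allowed) if ok)  # the diagonal is always allowed
        exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(row, allowed)]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

scores = [[1.0, 2.0, 3.0],
          [0.5, 0.5, 9.0],
          [0.0, 0.0, 0.0]]
weights = masked_softmax(scores, causal_mask(3))
for row in weights:
    print([round(w, 3) for w in row])
# [1.0, 0.0, 0.0]
# [0.5, 0.5, 0.0]
# [0.333, 0.333, 0.333]
```

Note that the large score 9.0 in the second row has no effect: it belongs to a future position, so the mask removes it before normalisation.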
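
For the "attention in encoder as well as decoder" bullet, a simplified sketch of the three places attention appears in the Transformer: self-attention in the encoder, masked self-attention in the decoder, and encoder-decoder attention, where the decoder's queries attend over the encoder output (this is how "activation + previous output => output" is realised). To stay short it assumes no learned projections, a single head and no residual or feed-forward layers; the toy vectors are made up.

```python
import math

def attention(Q, K, V, mask=None):
    """Scaled dot-product attention; mask[i][j] == False blocks query i from key j."""
    d_k = len(K[0])
    out, all_weights = [], []
    for i, q in enumerate(Q):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        allowed = mask[i] if mask else [True] * len(K)
        m = max(s for s, ok in zip(scores, allowed) if ok)
        exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, allowed)]
        total = sum(exps)
        w = [e / total for e in exps]
        all_weights.append(w)
        # Output for query i = weighted average of the value vectors.
        out.append([sum(wj * v[c] for wj, v in zip(w, V)) for c in range(len(V[0]))])
    return out, all_weights

enc_in = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 source-word vectors (toy values)
dec_in = [[0.5, 0.5], [1.0, -1.0]]             # 2 target-word vectors generated so far

# 1. Encoder self-attention: every source word attends to every source word.
enc_out, enc_w = attention(enc_in, enc_in, enc_in)
# 2. Decoder masked self-attention: target word i only sees target words 0..i.
mask = [[j <= i for j in range(len(dec_in))] for i in range(len(dec_in))]
dec_self, dec_w = attention(dec_in, dec_in, dec_in, mask)
# 3. Encoder-decoder attention: queries from the decoder, keys/values from the encoder output.
cross, cross_w = attention(dec_self, enc_out, enc_out)

print(len(enc_w), len(enc_w[0]))      # 3 3
print(len(dec_w), len(dec_w[0]))      # 2 2
print(len(cross_w), len(cross_w[0]))  # 2 3
```

The shapes show the difference: self-attention weights are square, while encoder-decoder weights have one row per target word and one column per source word.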
