Machine Reading Comprehension - newlife-js/Wiki GitHub Wiki

by Prof. Minjoon Seo (서민준), KAIST

Machine Reading Comprehension(MRC)

The task of understanding a given passage and inferring the answer to a given question

Types of MRC Datasets

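- Extractive Answer Datasets: the answer to the question always exists as a segment (span) of the given passage (i.e., the answer words appear verbatim in the passage)

- Descriptive/Narrative Answer Datasets: the answer is not a span extracted from the passage but a free-form sentence generated after reading the question

- Multiple-choice Datasets: the answer is chosen from several answer candidates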

Challenges

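- The question and the passage use different words but have the same meaning (paraphrasing)

- The answer does not exist in the passage (unanswerable questions)

- Multi-hop reasoning: the answer can only be found by collecting supporting facts from multiple documents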

※ KorQuAD: a Korean dataset for question answering / machine reading comprehension

Extraction-based MRC

Metric

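- Exact Match (EM) score: 1 if the prediction matches the ground truth (GT) character for character, otherwise 0

- F1 score: the F1 of the token overlap between the prediction and the GT (precision = overlapping tokens / predicted tokens, recall = overlapping tokens / GT tokens)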
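A minimal sketch of both metrics in Python. The `normalize` helper is a simplification of our own (lowercasing plus whitespace splitting). The official SQuAD evaluation script also strips punctuation and English articles, so its scores can differ slightly.

```python
from collections import Counter

def normalize(text: str) -> list:
    # Simplified normalization (assumption): lowercase + whitespace tokenization
    return text.lower().split()

def exact_match(prediction: str, ground_truth: str) -> int:
    # 1 if the normalized strings are identical, else 0
    return int(normalize(prediction) == normalize(ground_truth))

def f1_score(prediction: str, ground_truth: str) -> float:
    pred_tokens = normalize(prediction)
    gt_tokens = normalize(ground_truth)
    # Multiset intersection counts each shared token at most min(count_pred, count_gt) times
    overlap = sum((Counter(pred_tokens) & Counter(gt_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gt_tokens)
    return 2 * precision * recall / (precision + recall)
```

For example, "the Eiffel Tower" vs. "Eiffel Tower" gets EM = 0 but F1 = 0.8 (precision 2/3, recall 1). This is why F1 gives partial credit for near-miss spans.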

Preprocessing

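- Concatenate the context and the question into a single input

- Tokenization + special tokens ([CLS], [SEP], [UNK], [PAD], etc.)

- Attention mask: 1 for positions that hold actual input tokens, 0 for positions to ignore such as [PAD] (the question and context segments are distinguished separately, by token type IDs)

- Represent the answer position at the token level (start and end token indices)

- Build the output layer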
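The preprocessing steps above can be sketched without a real tokenizer. This toy version rests on a few assumptions:

- A whitespace tokenizer (`tokenize_with_offsets`, hypothetical) stands in for WordPiece.
- The question comes before the context, as in common BERT QA setups.
- Truncation and sliding windows are not handled.

It builds the token sequence and the attention mask, and maps the character-level answer span to start/end token indices.

```python
import re

def tokenize_with_offsets(text):
    # Hypothetical whitespace tokenizer: returns (token, char_start, char_end) triples
    return [(m.group(), m.start(), m.end()) for m in re.finditer(r"\S+", text)]

def build_features(question, context, answer_start, answer_text, max_len=16):
    q_tokens = [tok for tok, _, _ in tokenize_with_offsets(question)]
    c_spans = tokenize_with_offsets(context)
    # Layout: [CLS] question [SEP] context [SEP]
    tokens = ["[CLS]"] + q_tokens + ["[SEP]"]
    context_offset = len(tokens)  # index of the first context token
    tokens += [tok for tok, _, _ in c_spans] + ["[SEP]"]
    pad = max_len - len(tokens)
    if pad < 0:
        raise ValueError("sequence too long; truncation is not handled in this sketch")
    # Attention mask: 1 for real tokens, 0 for [PAD]
    attention_mask = [1] * len(tokens) + [0] * pad
    tokens += ["[PAD]"] * pad
    # Map the character-level answer span to token indices
    answer_end = answer_start + len(answer_text)
    start_pos = end_pos = None
    for i, (_, s, e) in enumerate(c_spans):
        if start_pos is None and s <= answer_start < e:
            start_pos = context_offset + i
        if s < answer_end <= e:
            end_pos = context_offset + i
    return tokens, attention_mask, start_pos, end_pos
```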

Pre-training & Fine-tuning

Pre-training: build contextualized embeddings with BERT

Fine-tuning: train a classifier that outputs, for each token, the probability that it is the start token / end token of the answer
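The fine-tuning head can be sketched in NumPy. The sketch assumes the encoder's contextualized embeddings are already available as a `(seq_len, d)` array. `weight` is a hypothetical `(d, 2)` linear layer (bias omitted) that turns each token embedding into a start logit and an end logit. The loss is the cross-entropy of the gold start and end positions, averaged.

```python
import numpy as np

def log_softmax(x):
    # Numerically stable log-softmax over a 1-D array of logits
    x = x - x.max()
    return x - np.log(np.exp(x).sum())

def span_head_loss(hidden, weight, start_pos, end_pos):
    # hidden: (seq_len, d) contextualized embeddings (assumed given)
    # weight: (d, 2) hypothetical linear layer -> (start, end) logits per token
    logits = hidden @ weight                      # (seq_len, 2)
    start_logits, end_logits = logits[:, 0], logits[:, 1]
    # Cross-entropy of the gold start/end positions, averaged
    start_loss = -log_softmax(start_logits)[start_pos]
    end_loss = -log_softmax(end_logits)[end_pos]
    return (start_loss + end_loss) / 2, start_logits, end_logits
```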

Post-processing

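- Remove impossible answers (the end position comes before the start position, a position falls outside the context, etc.)

- Output the prediction with the highest score (logit), e.g. the sum of the start and end logits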
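A brute-force sketch of this post-processing, assuming the span score is start logit + end logit. `context_start`/`context_end` (the inclusive token range of the context) and `max_answer_len` are hypothetical parameters. Spans that end before they start, or that fall outside the context, are never enumerated, so they are removed implicitly.

```python
def best_span(start_logits, end_logits, context_start, context_end, max_answer_len=30):
    # Returns (score, start, end) of the highest-scoring valid span
    best = None
    for s in range(context_start, context_end + 1):
        # end >= start, end inside the context, span length <= max_answer_len
        for e in range(s, min(s + max_answer_len, context_end + 1)):
            score = start_logits[s] + end_logits[e]
            if best is None or score > best[0]:
                best = (score, s, e)
    return best
```

Restricting the search to the context range matters here. For example, high logits on [CLS] at index 0 would otherwise win.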

Passage Retrieval

Finding the documents (passages) that contain the answer to a question

Overview

Embed the query and the passages, rank the passages by similarity, and select the highest-ranked passage

Sparse Embedding

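Embedding into vectors in which most entries are 0

- Bag-of-Words: 1 if the word is present, otherwise 0 (n-grams are sometimes used as well)

The dimensionality grows as the vocabulary grows, and grows further as n in the n-gram increases

- TF-IDF (Term Frequency - Inverse Document Frequency): weights each term by how often it appears and by how much information it carries

TF: number of occurrences of the term / number of words in the document (or just the raw count)

IDF: log(number of documents / number of documents containing the term)

The similarity between a query and a document can be computed as the inner product of their TF-IDF vectors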

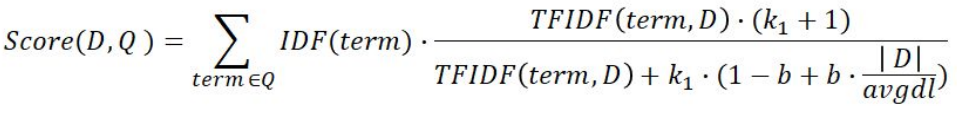
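A small pure-Python sketch of TF-IDF retrieval. It assumes whitespace tokenization and TF = count / document length. Sparse vectors are stored as `{term: weight}` dicts so that only the non-zero entries are kept. Query terms that never occur in the corpus are dropped, since their IDF is undefined.

```python
import math
from collections import Counter

def tf_idf_vectors(docs):
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log(n_docs / c) for t, c in df.items()}
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        vectors.append({t: (c / len(toks)) * idf[t] for t, c in counts.items()})
    return vectors, idf

def query_vector(query, idf):
    toks = query.lower().split()
    counts = Counter(toks)
    return {t: (c / len(toks)) * idf[t] for t, c in counts.items() if t in idf}

def dot(u, v):
    # Inner product of two sparse {term: weight} vectors
    return sum(w * v.get(t, 0.0) for t, w in u.items())
```

A term that occurs in every document gets IDF = log(1) = 0, so it contributes nothing to the score.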

※ BM25: a TF-IDF-based scoring function that also takes document length into account
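One common form of BM25 (Okapi BM25) can be sketched as follows:

- The defaults `k1=1.5` and `b=0.75` are typical choices, not values from the lecture.
- The IDF uses a `+1` variant that keeps it non-negative.
- `k1` saturates the effect of repeated terms.
- `b` controls how strongly documents longer than average are penalized.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.5, b=0.75):
    tokenized = [d.lower().split() for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n          # average document length
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # IDF variant with +1 inside the log (assumption; several variants exist)
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1)
            f = tf[term]
            # Term-frequency saturation plus document-length normalization
            score += idf * f * (k1 + 1) / (f + k1 * (1 - b + b * len(toks) / avgdl))
        scores.append(score)
    return scores
```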


Limitations

Very high dimensionality

Inefficient, since most elements are 0

Cannot capture similarity between terms (e.g., synonyms)

Dense Embedding

Low-dimensional, dense vectors

No dimension corresponds to a specific term, and most elements are non-zero

Can represent similarity between words

Dense Encoder

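Usually a pretrained BERT encoder is used

The whole passage is represented as a single vector (the output at the CLS token) and compared with the vector obtained from the question

Relevant passages (positive samples) should be pulled closer, while irrelevant passages (negative samples) should be pushed farther away

■ Objective function: negative log-likelihood loss of the positive passage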
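The objective can be sketched for a single question, assuming the question and passage embeddings (e.g. CLS outputs) have already been computed. Similarity is the inner product. The loss is the negative log of the softmax probability that the positive passage receives among the positive plus the negatives. `dense_nll_loss` is a hypothetical helper name.

```python
import numpy as np

def dense_nll_loss(q, positive, negatives):
    # q: (d,) question embedding (e.g. the [CLS] output of a question encoder)
    # positive: (d,) embedding of the relevant passage
    # negatives: (k, d) embeddings of irrelevant passages
    passages = np.vstack([positive[None, :], negatives])  # row 0 is the positive
    scores = passages @ q                                 # inner-product similarity, (k + 1,)
    scores = scores - scores.max()                        # numerical stability
    log_probs = scores - np.log(np.exp(scores).sum())     # log-softmax over passages
    return -log_probs[0]                                  # NLL of the positive passage
```

Minimizing this loss raises the positive passage's score relative to the negatives. This is exactly the "pull positives closer, push negatives away" behavior described above.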

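MIPS (Maximum Inner Product Search)

Finding the vectors with the largest inner product (i.e., the highest similarity) with the query

Computing the inner product against every passage embedding (brute force) is very inefficient for a large corpus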
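For reference, exact (brute-force) MIPS is a single matrix-vector product followed by a sort. Its cost grows linearly with the number of passages, which is what the compression and pruning techniques try to reduce. `brute_force_mips` is a hypothetical helper.

```python
import numpy as np

def brute_force_mips(query, passages, k=2):
    # Exact search: inner product with every passage embedding, O(N * d)
    scores = passages @ query
    top = np.argsort(-scores)[:k]   # indices of the k largest inner products
    return top, scores[top]
```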

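- Compression: Scalar Quantization (SQ)

Compress vectors to save memory (4-byte float -> 1-byte unsigned integer)

- Pruning: Inverted File (IVF)

Reduce the search space to speed up search (clustering + inverted file)

Divide the entire vector space into k clusters and search only within the clusters closest to the query

Inverted file: for each cluster, its location (centroid) and the list of vectors that belong to it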
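A sketch of 8-bit scalar quantization, assuming per-dimension min/max ranges. This is one simple variant; FAISS's scalar quantizers offer several. Each float32 value is mapped to one of 256 levels stored as `uint8`. That cuts memory by 4x at the cost of a reconstruction error of at most half a quantization step per dimension. The function names are hypothetical.

```python
import numpy as np

def scalar_quantize(x):
    # x: (n, d) float32 vectors; one [lo, hi] range per dimension
    lo = x.min(axis=0)
    hi = x.max(axis=0)
    scale = np.where(hi > lo, (hi - lo) / 255.0, 1.0)   # width of one quantization step
    codes = np.round((x - lo) / scale).astype(np.uint8)  # 4-byte float -> 1-byte code
    return codes, lo, scale

def dequantize(codes, lo, scale):
    # Approximate reconstruction used when computing inner products
    return codes.astype(np.float32) * scale + lo
```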

※ FAISS: a library for efficient similarity search developed by Facebook
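The inverted-file (IVF) pruning step can be sketched in NumPy, assuming plain k-means for the clustering. `build_ivf` and `ivf_search` are hypothetical helpers, not the FAISS API:

- `build_ivf` builds the inverted lists, mapping each cluster id to the ids of its vectors.
- `ivf_search` scores only the vectors in the `n_probe` clusters whose centroids have the largest inner product with the query.

Results are approximate whenever `n_probe` is smaller than the number of clusters.

```python
import numpy as np

def build_ivf(vectors, n_clusters=4, n_iter=10, seed=0):
    # Coarse clustering with plain k-means (L2 distance)
    rng = np.random.default_rng(seed)
    centroids = vectors[rng.choice(len(vectors), n_clusters, replace=False)].copy()
    for _ in range(n_iter):
        dists = ((vectors[:, None, :] - centroids[None, :, :]) ** 2).sum(-1)
        assign = dists.argmin(axis=1)
        for c in range(n_clusters):
            members = vectors[assign == c]
            if len(members):  # keep the old centroid if a cluster becomes empty
                centroids[c] = members.mean(axis=0)
    dists = ((vectors[:, None, :] - centroids[None, :, :]) ** 2).sum(-1)
    assign = dists.argmin(axis=1)
    # Inverted file: cluster id -> ids of the vectors stored in that cluster
    inverted_lists = {c: np.flatnonzero(assign == c) for c in range(n_clusters)}
    return centroids, inverted_lists

def ivf_search(query, vectors, centroids, inverted_lists, n_probe=1, k=1):
    # Visit only the n_probe clusters whose centroids best match the query
    probe = np.argsort(-(centroids @ query))[:n_probe]
    candidates = np.concatenate([inverted_lists[int(c)] for c in probe])
    # Exact inner products, but only for vectors in the probed clusters
    scores = vectors[candidates] @ query
    order = np.argsort(-scores)[:k]
    return candidates[order], scores[order]
```

Raising `n_probe` trades speed for recall. With `n_probe` equal to the number of clusters, the search degenerates to brute force.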

Open-Domain Question Answering(ODQA)

Open-domain: answering a question when no supporting evidence (passage) is given along with it

The task of finding information on the web or Wikipedia in order to answer

Retriever-Reader Approach

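Retriever: searches the database for relevant documents

Reader: finds the answer to the question in the retrieved documents

- Knowledge source: a corpus of unstructured documents (e.g., Wikipedia)

- Distant supervision: building MRC training data from a dataset that contains only question-answer pairs (e.g., by finding passages that contain the answer)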
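A simplified sketch of distant supervision. It assumes that any passage containing the exact answer string is a usable, if noisy, training example. `distant_supervision` is a hypothetical helper. Real pipelines typically retrieve candidate passages first and filter them more carefully.

```python
def distant_supervision(question, answer, passages):
    # Turn a (question, answer) pair into extractive MRC examples
    examples = []
    for passage in passages:
        start = passage.find(answer)  # character offset of the first match
        if start != -1:
            examples.append({
                "question": question,
                "context": passage,
                "answer_start": start,
                "answer_text": answer,
            })
    return examples
```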

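Inference

The retriever outputs the 5 documents most relevant to the question

The reader reads the 5 documents and predicts an answer from each

The answer with the highest score is used as the final answer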
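The inference procedure can be sketched with the retriever and the reader passed in as functions. `retrieve_scores` and `read` are hypothetical interfaces; any sparse or dense retriever and any extractive reader could fill them. Taking the maximum of reader scores across passages assumes those scores are comparable between passages.

```python
from typing import Callable, Optional, Sequence, Tuple

def retrieve_and_read(
    question: str,
    corpus: Sequence[str],
    retrieve_scores: Callable[[str, Sequence[str]], Sequence[float]],
    read: Callable[[str, str], Tuple[str, float]],
    top_k: int = 5,
) -> Tuple[Optional[str], float]:
    # 1) Retriever: score every document and keep the top_k most relevant ones
    scores = retrieve_scores(question, corpus)
    top_ids = sorted(range(len(corpus)), key=lambda i: scores[i], reverse=True)[:top_k]
    # 2) Reader: predict an answer (with a score) from each retrieved document
    best_answer: Optional[str] = None
    best_score = float("-inf")
    for i in top_ids:
        answer, score = read(question, corpus[i])
        # 3) Keep the answer with the highest reader score
        if score > best_score:
            best_answer, best_score = answer, score
    return best_answer, best_score
```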

Reducing Bias

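What if the training data contains only examples like SQuAD, where the context always contains the answer...

What if the topics of the training data differ greatly from those of the test data...

- Train negative examples: show incorrect examples during training as well

Sample negatives randomly from the corpus, or pick more confusing (harder) negative samples

- Add a [no answer] bias: append one extra token to the input sequence, and output "no answer" when the start/end probabilities point to that token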
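A sketch of the [no answer] decision at inference time, assuming position 0 holds the extra no-answer token. BERT-based SQuAD 2.0 setups commonly reuse [CLS] for this role. The model abstains (returns `None`) when the no-answer score beats the best real span. `predict_with_no_answer` and `max_answer_len` are hypothetical.

```python
def predict_with_no_answer(start_logits, end_logits, max_answer_len=30):
    # Score of the span that starts and ends at the [no answer] token (index 0)
    null_score = start_logits[0] + end_logits[0]
    best_score, best_span = float("-inf"), None
    n = len(start_logits)
    for s in range(1, n):
        for e in range(s, min(s + max_answer_len, n)):
            score = start_logits[s] + end_logits[e]
            if score > best_score:
                best_score, best_span = score, (s, e)
    # Abstain if no real span scores higher than the [no answer] token
    if best_span is None or null_score > best_score:
        return None
    return best_span
```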

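Annotation Bias

Bias introduced at the dataset creation stage

Because the person writing the question already knows the answer, the question and the evidence paragraph tend to share many words

The distribution of the training data itself is biased (popular wiki pages, articles, etc.)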

Closed-book QA

Assumes that a language model pre-trained on a large knowledge source has memorized that knowledge

Generates the answer directly, without a search step

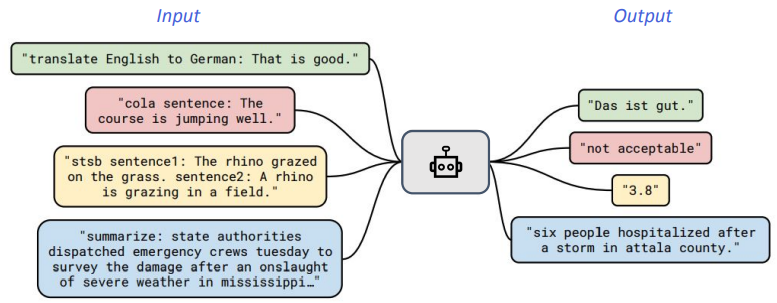

T5(Text-to-Text Format)

The task definition is included in the input (as text)

Fine-tuning T5 on QA turned out to work well
