Interpretable Machine Learning - newlife-js/Wiki GitHub Wiki

by Christoph Molnar (translation: TooTouch)

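- Why machine learning models are not trusted: a single evaluation metric such as accuracy is an incomplete description of real-world problems

- Interpretability matters more for problems that cannot be solved by the prediction alone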

Intrinsic: interpretable because of a simple structure, as in a shallow decision tree or a sparse linear model

Post hoc: an interpretation method is applied after the model is trained (e.g., Permutation Feature Importance)

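Global, holistic interpretability: interpreting the model as a whole, e.g., through its hyperplane (anything beyond three dimensions is outside what humans can picture)

Global interpretability on a modular level: interpreting single parts of the model, such as individual weights (the weights of a linear model)

Local interpretability for a single prediction: explaining the prediction made for one instance x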

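Application level: evaluated by domain experts who are the actual end users

Human level: a simplified application-level evaluation carried out by laypeople rather than experts

Function level: used when the model class has already been evaluated at the human level (e.g., shorter trees are more explainable)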

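- The contribution of each feature to a prediction is explained by the product of its weight and its feature value

- Not appropriate when there is a lot of nonlinearity or many interactions

- Linearity makes explanations more general and simpler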
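The weight-times-feature-value decomposition described above can be sketched as follows. This is a minimal illustration, not a fitted model: the intercept, weights, and feature values are hypothetical numbers chosen for the example.

```python
# Hypothetical weights and feature values, chosen only for illustration.
intercept = 2.0
weights = {"temperature": 0.5, "humidity": -0.2, "windspeed": -0.1}
x = {"temperature": 20.0, "humidity": 60.0, "windspeed": 10.0}

# Effect of feature j on this prediction: weight_j * x_j.
effects = {name: weights[name] * x[name] for name in weights}

# The prediction is the intercept plus the sum of all effects.
prediction = intercept + sum(effects.values())

# List the features by the size of their effect on this prediction.
for name, effect in sorted(effects.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:12s} effect = {effect:+.2f}")
print(f"prediction = {prediction:.2f}")
```

Because the model is additive, the effects sum exactly to the prediction (minus the intercept), which is what makes this kind of explanation simple.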

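■ With too many features (e.g., more features than instances), a standard linear model cannot be fit -> apply sparsity

- Select features manually using expert knowledge

- Select features whose correlation with the target exceeds a threshold (assumes the features are independent of each other)

- Forward Selection: start with a single feature and add, one at a time, the feature that yields the best model

- Backward Selection: start with a model containing all features and remove, one at a time, the feature whose removal yields the best model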

- Applies the logistic function to a linear combination of the features

- Interpreted through odds ratios rather than the weights themselves
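A short sketch of why odds ratios are used for interpretation: increasing a feature by one unit multiplies the odds by exp(weight), whatever the other feature values are. The intercept and weights below are hypothetical, not taken from any fitted model.

```python
import math

def predict_proba(x, weights, intercept):
    """Logistic function applied to the linear combination of the features."""
    z = intercept + sum(w * v for w, v in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))

def odds(p):
    """Odds of the positive class for probability p."""
    return p / (1.0 - p)

# Hypothetical fitted parameters.
intercept, weights = -1.0, [0.8, -0.5]

x = [1.0, 2.0]
x_plus = [2.0, 2.0]  # same instance with feature 0 increased by one unit

ratio = odds(predict_proba(x_plus, weights, intercept)) / odds(predict_proba(x, weights, intercept))

# The odds ratio equals exp(weight of feature 0).
print(ratio, math.exp(weights[0]))
```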

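GLM: a linear model extended to handle all types of outcomes (non-Gaussian distributions, outcomes that cannot be negative, etc.)

Connects the linear model (the weighted sum of the features) to the expected value of the outcome through a (possibly nonlinear) link function

GAM: models the outcome as a sum of arbitrary functions applied to each feature, rather than a weighted sum (to handle nonlinearity)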

- Builds a tree by splitting on features in the direction that reduces impurity

- The impurity decrease achieved by the splits is used as the feature importance
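The impurity decrease of a single split can be sketched like this. The notes do not say which impurity measure is meant; the sketch assumes Gini impurity for a classification tree, and the toy data are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def impurity_decrease(values, labels, threshold):
    """Impurity of the parent node minus the size-weighted impurity of the children."""
    left = [y for v, y in zip(values, labels) if v <= threshold]
    right = [y for v, y in zip(values, labels) if v > threshold]
    n = len(labels)
    children = len(left) / n * gini(left) + len(right) / n * gini(right)
    return gini(labels) - children

# Toy data (hypothetical): one numeric feature and a binary label.
values = [1, 2, 3, 4, 5, 6]
labels = ["a", "a", "a", "b", "b", "b"]

# Try every candidate threshold and keep the one with the largest decrease.
best = max(sorted(set(values))[:-1], key=lambda t: impurity_decrease(values, labels, t))
print(best, impurity_decrease(values, labels, best))
```

Summing these decreases over all splits that use a feature gives the impurity-based importance of that feature.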

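- Prediction with an If-Then structure

■ support: percentage of observations to which the rule's condition applies (its coverage)

■ accuracy: proportion of those observations for which the rule predicts the correct class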

- Discretize continuous features by choosing appropriate intervals

- Build a cross table between each feature and the outcome, and select the feature with the smallest error
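The cross-table step above can be sketched as a small OneR-style learner. The sketch assumes the features are already categorical (the discretization step is skipped), and the function name `one_r` and the toy rows are hypothetical.

```python
from collections import Counter, defaultdict

def one_r(rows, target):
    """Return (best_feature, rule, errors); rule maps a feature value to a predicted class."""
    best = None
    features = [k for k in rows[0] if k != target]
    for feature in features:
        # Cross table: for each feature value, count the classes.
        table = defaultdict(Counter)
        for row in rows:
            table[row[feature]][row[target]] += 1
        # Predict the most frequent class for each feature value.
        rule = {value: counts.most_common(1)[0][0] for value, counts in table.items()}
        errors = sum(row[target] != rule[row[feature]] for row in rows)
        if best is None or errors < best[2]:
            best = (feature, rule, errors)
    return best

# Hypothetical, already categorical toy data.
rows = [
    {"outlook": "sunny", "windy": "no", "play": "no"},
    {"outlook": "sunny", "windy": "yes", "play": "no"},
    {"outlook": "rainy", "windy": "no", "play": "yes"},
    {"outlook": "rainy", "windy": "yes", "play": "yes"},
    {"outlook": "cloudy", "windy": "no", "play": "yes"},
    {"outlook": "cloudy", "windy": "yes", "play": "no"},
]
feature, rule, errors = one_r(rows, "play")
print(feature, rule, errors)
```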

- Learn rule 1, remove the data points covered by rule 1, then learn rule 2 on the remaining data, and so on.

- Pre-mine frequent patterns from the data

- Learn a decision list from a selection of the pre-mined rules

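- Learns a sparse linear model that includes automatically detected interaction effects in the form of decision rules

- New features that capture interactions are generated automatically from decision trees

- Generate as many rules as possible, e.g., with an ensemble (the predictions in the nodes are discarded; only the split conditions are used)

- Fit a sparse linear model on the generated rules together with the original features to obtain weight estimates

- Feature importance of a linear term: the absolute value of its Lasso weight multiplied by the standard deviation of the term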

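- Separates the explanation from the learning of the model, so that the explanation is not tied to a particular type of model

- Motivation: using only interpretable models costs too much predictive performance

- Model flexibility (works with any model), Explanation flexibility (not limited to a particular form of explanation), Representation flexibility (a different feature representation can be used for each model being explained)

■ Example-based Explanation: selects particular instances of the dataset to explain the model (model-agnostic methods instead create summaries of features)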

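Methods that describe the average behavior of a machine learning model

Shows the marginal effect that one or two features have on the predicted outcome

S is the set of features of interest (one or two), and C is the set of the other features used in the machine learning model (assumed to be uncorrelated with the features in S)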

The PDP plots the average prediction over the training set as a function of the value of S

The more the PDP deviates from its average (i.e., the less flat the curve), the more important the feature
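A minimal sketch of the partial dependence computation and the flatness-based importance described above. The black box `model`, the data, and the grids are hypothetical; using the standard deviation of the curve as the flatness measure is one common choice and an assumption of this sketch.

```python
import statistics

def partial_dependence(model, data, feature_idx, grid):
    """For each grid value, overwrite one feature in every row and average the predictions."""
    curve = []
    for value in grid:
        preds = []
        for row in data:
            modified = list(row)
            modified[feature_idx] = value
            preds.append(model(modified))
        curve.append(sum(preds) / len(preds))
    return curve

# Hypothetical black box: nonlinear in feature 0, ignores feature 2.
def model(x):
    return x[0] ** 2 + 3.0 * x[1]

data = [[0.0, 1.0, 5.0], [1.0, 2.0, 6.0], [2.0, 3.0, 7.0]]

pd_feature0 = partial_dependence(model, data, 0, [0.0, 1.0, 2.0])
pd_feature2 = partial_dependence(model, data, 2, [5.0, 6.0, 7.0])

# Flatness-based importance: standard deviation of the curve (zero for a flat curve).
print(pd_feature0, statistics.stdev(pd_feature0))
print(pd_feature2, statistics.stdev(pd_feature2))
```

The curve for feature 2 is flat because the model never uses it, so its importance under this measure is zero.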

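Describes how features influence the prediction of a machine learning model on average.

Faster than the PDP and unbiased (it accounts for correlation between features).

- Divide the grid into windows (intervals), average the differences in prediction within each window, and accumulate these averages along the grid.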
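The window-average-accumulate procedure can be sketched for a single feature as follows. This is a simplified first-order ALE under stated assumptions: the interval edges are given by hand rather than taken from quantiles, the centering uses the count-weighted average of window midpoints, and `model` and the data are hypothetical.

```python
def ale_curve(model, data, feature_idx, edges):
    """First-order ALE of one feature on the given interval edges, centered to mean zero."""
    local_effects, counts = [], []
    for k, (lo, hi) in enumerate(zip(edges[:-1], edges[1:])):
        # Instances whose feature value falls into this window
        # (the first window also includes its lower edge).
        in_window = [row for row in data
                     if (lo < row[feature_idx] <= hi) or (k == 0 and row[feature_idx] == lo)]
        diffs = []
        for row in in_window:
            upper, lower = list(row), list(row)
            upper[feature_idx], lower[feature_idx] = hi, lo
            diffs.append(model(upper) - model(lower))
        local_effects.append(sum(diffs) / len(diffs) if diffs else 0.0)
        counts.append(len(in_window))
    # Accumulate the averaged differences along the grid.
    accumulated = [0.0]
    for effect in local_effects:
        accumulated.append(accumulated[-1] + effect)
    # Center the curve so that the count-weighted mean effect is zero.
    midpoints = [(a + b) / 2 for a, b in zip(accumulated[:-1], accumulated[1:])]
    mean_effect = sum(m * c for m, c in zip(midpoints, counts)) / sum(counts)
    return [a - mean_effect for a in accumulated]

# Hypothetical black box and data in which the two features are correlated.
def model(x):
    return 2.0 * x[0] + x[1]

data = [[0.5, 1.0], [1.5, 2.0], [2.5, 3.0], [0.0, 0.5]]
ale = ale_curve(model, data, 0, edges=[0.0, 1.0, 2.0, 3.0])
print(ale)
```

Only instances that actually lie in a window are used for that window, which is why the method avoids the unrealistic feature combinations a PDP can create when features are correlated.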

Used to measure interactions between features

■ H-statistic: a statistic that measures the interaction between two features, or between one feature and all other features, using partial dependence
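For reference, the two variants of the H-statistic can be written as below, following Friedman and Popescu's definition. The notation is an assumption of this note: $PD$ denotes a centered partial dependence function, $\hat{f}$ the model, and $n$ the number of instances.

```latex
% Interaction between two features j and k
H^2_{jk} = \frac{\sum_{i=1}^{n}\left[PD_{jk}(x_j^{(i)}, x_k^{(i)}) - PD_j(x_j^{(i)}) - PD_k(x_k^{(i)})\right]^2}
                {\sum_{i=1}^{n} PD_{jk}^2(x_j^{(i)}, x_k^{(i)})}

% Interaction between feature j and all other features
H^2_{j} = \frac{\sum_{i=1}^{n}\left[\hat{f}(x^{(i)}) - PD_j(x_j^{(i)}) - PD_{-j}(x_{-j}^{(i)})\right]^2}
               {\sum_{i=1}^{n} \hat{f}^2(x^{(i)})}
```

If there is no interaction, the joint partial dependence is the sum of the individual ones and the numerator is zero.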

Expresses a high-dimensional function as a sum of individual feature effects and interaction effects.

■ (Generalized) Functional ANOVA ■ ALE ■ Statistical Regression Models

Uses the increase in prediction error that results from permuting the values of a feature

- Train the model on the training data, then apply the permutation to the test data to derive the feature importance

Disadvantages: cannot be applied to unlabeled data; with correlated features the result can be biased by unrealistic data instances; adding a correlated feature can decrease the importance of the associated feature
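Permutation feature importance as described above can be sketched in a few lines. The sketch assumes mean squared error as the error measure and reports the difference (not the ratio) between permuted and baseline error; the fitted `model` and the test data are hypothetical.

```python
import random

def mean_squared_error(model, X, y):
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)

def permutation_importance(model, X, y, feature_idx, n_repeats=10, seed=0):
    """Average increase in error after shuffling one feature column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    increases = []
    for _ in range(n_repeats):
        column = [row[feature_idx] for row in X]
        rng.shuffle(column)  # breaks the link between this feature and the target
        X_perm = [row[:feature_idx] + [v] + row[feature_idx + 1:]
                  for row, v in zip(X, column)]
        increases.append(mean_squared_error(model, X_perm, y) - baseline)
    return sum(increases) / n_repeats

# Hypothetical fitted model that uses feature 0 and ignores feature 1.
def model(x):
    return 3.0 * x[0]

rng = random.Random(1)
X_test = [[rng.uniform(0, 1), rng.uniform(0, 1)] for _ in range(200)]
y_test = [3.0 * row[0] for row in X_test]

print(permutation_importance(model, X_test, y_test, 0))  # clearly positive
print(permutation_importance(model, X_test, y_test, 1))  # zero: the model ignores this feature
```

The labels `y_test` are required to compute the error, which is why the method cannot be applied to unlabeled data.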

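An interpretable model trained to approximate the predictions of a black box model

- Train an interpretable model such as a linear model or decision tree, using the dataset of the black box model as X and the black box model's predictions as y

- Measure how closely the surrogate model's predictions match those of the black box model with R^2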
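The surrogate recipe and its R^2 check can be sketched as follows. The black box is a hypothetical function, and the surrogate is a one-feature least-squares line, chosen only to keep the sketch dependency-free.

```python
def r_squared(black_box_preds, surrogate_preds):
    """Share of the variance of the black box predictions captured by the surrogate."""
    mean_bb = sum(black_box_preds) / len(black_box_preds)
    sse = sum((s - b) ** 2 for s, b in zip(surrogate_preds, black_box_preds))
    sst = sum((b - mean_bb) ** 2 for b in black_box_preds)
    return 1.0 - sse / sst

def fit_line(xs, ys):
    """Ordinary least squares for a single feature; returns (intercept, slope)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return my - slope * mx, slope

# Hypothetical black box with a mild nonlinearity.
def black_box(x):
    return 2.0 * x + 0.1 * x ** 2

xs = [i / 10 for i in range(50)]
y_hat = [black_box(x) for x in xs]        # predictions of the black box become the target

intercept, slope = fit_line(xs, y_hat)    # train the surrogate on (X, y_hat)
surrogate_preds = [intercept + slope * x for x in xs]

r2 = r_squared(y_hat, surrogate_preds)
print(r2)  # close to 1: the line mimics the black box well on this data
```

Note that the R^2 here compares the surrogate with the black box, not with the true outcome, so it measures fidelity rather than predictive accuracy.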

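Prototype: a data instance that is representative of all the data

Criticism: a data instance that is not well represented by the prototypes

They help in understanding the data distribution, building an interpretable model, or interpreting a black box model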

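■ MMD-critic: compares the distribution of the prototypes with the distribution of the actual data and selects the prototypes that minimize the discrepancy

- Choose the number of prototypes and criticisms

- Find the prototypes with greedy search

- Find the criticisms with greedy search

- A kernel function is used to estimate the data densities, and a witness function built on it measures how the two distributions differ at a given point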
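For reference, the two quantities behind these steps can be written as below. The notation is an assumption of this note: $x_1, \dots, x_n$ are the data points, $z_1, \dots, z_m$ the prototypes, and $k$ the kernel function.

```latex
% Squared maximum mean discrepancy between prototypes and data (minimized when choosing prototypes)
MMD^2 = \frac{1}{m^2}\sum_{i,j=1}^{m} k(z_i, z_j)
      - \frac{2}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n} k(z_i, x_j)
      + \frac{1}{n^2}\sum_{i,j=1}^{n} k(x_i, x_j)

% Witness function: positive where prototypes under-represent the data, negative where they over-represent it
witness(x) = \frac{1}{n}\sum_{i=1}^{n} k(x, x_i) - \frac{1}{m}\sum_{j=1}^{m} k(x, z_j)
```

Criticisms are the points where the absolute value of the witness function is large.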

Methods that explain individual predictions

Draws one line per instance showing how that instance's prediction changes as one feature changes

The PDP can be viewed as the average of the ICE curves.

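- Centered ICE plot: differences between the curves may be due only to different starting points, so the curves are anchored at a common starting point.

- Derivative ICE plot: makes it easier to see the direction of change and the feature ranges in which changes occur.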
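ICE curves, their centered variant, and the relation to the PDP can be sketched as follows. The black box `model`, the data, and the grid are hypothetical, and the curves are anchored at the first grid point, which is one possible choice of anchor.

```python
def ice_curves(model, data, feature_idx, grid):
    """One curve per instance: predictions while only the chosen feature is varied."""
    curves = []
    for row in data:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature_idx] = value
            curve.append(model(modified))
        curves.append(curve)
    return curves

def center(curves):
    """Centered ICE: subtract each curve's value at the first grid point."""
    return [[v - curve[0] for v in curve] for curve in curves]

# Hypothetical black box with an interaction between feature 0 and feature 1.
def model(x):
    return x[0] * x[1] + 1.0

data = [[0.0, 1.0], [0.0, 2.0], [0.0, -1.0]]
grid = [0.0, 1.0, 2.0]

curves = ice_curves(model, data, 0, grid)
# The PDP is the pointwise mean of the ICE curves.
pdp = [sum(column) / len(column) for column in zip(*curves)]

print(center(curves))
print(pdp)
```

The third instance has a falling curve while the others rise. The averaged PDP hides this heterogeneity, which is the main reason to look at ICE curves.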

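Trains a local surrogate model to explain an individual prediction

- Build a new dataset (perturbed samples around the instance of interest) and train an interpretable model to predict the black box model's outputs on it

- Give larger weights to new samples that are closer to the instance of interest

- The focus is only on a good local approximation; a good global approximation is not required

- Minimize the loss (the difference from the black box model's prediction) while constraining model complexity

- The kernel width, which determines the size of the neighborhood, must be tuned carefully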
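The steps above can be sketched for a single numeric feature. This is a simplified illustration, not the LIME package: the function name `lime_1d`, the exponential kernel, the Gaussian perturbations, and the black box are all assumptions of the sketch, and the interpretable model is just a weighted least-squares line.

```python
import math
import random

def lime_1d(black_box, x0, kernel_width=0.5, n_samples=1000, seed=0):
    """Fit a locally weighted line around x0; returns (intercept, slope)."""
    rng = random.Random(seed)
    # 1) Perturb the instance of interest and query the black box.
    xs = [x0 + rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    ys = [black_box(x) for x in xs]
    # 2) Weight samples by proximity to x0 (exponential kernel on the distance).
    ws = [math.exp(-((x - x0) ** 2) / kernel_width ** 2) for x in xs]
    # 3) Weighted least squares for a straight line (the interpretable model).
    sw = sum(ws)
    mx = sum(w * x for w, x in zip(ws, xs)) / sw
    my = sum(w * y for w, y in zip(ws, ys)) / sw
    slope = (sum(w * (x - mx) * (y - my) for w, x, y in zip(ws, xs, ys))
             / sum(w * (x - mx) ** 2 for w, x in zip(ws, xs)))
    return my - slope * mx, slope

# Hypothetical black box: globally nonlinear, locally almost linear.
def black_box(x):
    return x ** 2

intercept, slope = lime_1d(black_box, x0=1.0)
print(slope)  # near the local derivative 2 * x0 = 2
```

Changing `kernel_width` changes which samples count as local, and with it the explanation. This is the tuning difficulty noted in the last bullet above.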

Question) Does this rest on the assumption that the original model also predicts well on the perturbed samples?

How is the instance of interest chosen?

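Advantages: applicable regardless of the type of black box model; explanations are short and intuitive; works for tabular, text, and image data; fidelity can be measured; well-supported packages exist

Disadvantages: choosing the kernel width that determines the neighborhood size is hard; sampling from a Gaussian distribution has limits (e.g., correlated features); model complexity has to be fixed in advance; instability of the explanations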

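Describes the smallest change to the feature values that changes the prediction to a predefined output (e.g., Y -> N / 90 -> 100)

- Keeping the change in feature values small matters, but so does changing as few features as possible

- Sometimes it is desirable to generate multiple counterfactual instances

- The generated counterfactual instances should be plausible (realistic)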

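■ Generating Counterfactual Explanations

(1) Minimizing Loss by Wachter:

- Difference between the desired outcome and the prediction for the counterfactual

- Distance between the actual point and the counterfactual point

- Disadvantages: does not favor changing only a few features; categorical features are hard to handle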
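For reference, the two terms listed for Wachter's approach combine into the following loss. The notation is an assumption of this note: $x$ is the actual instance, $x'$ the counterfactual, $y'$ the desired outcome, $\hat{f}$ the model, $\lambda$ the trade-off parameter, and $MAD_j$ the median absolute deviation of feature $j$.

```latex
L(x, x', y', \lambda) = \lambda \cdot \left(\hat{f}(x') - y'\right)^2 + d(x, x')

d(x, x') = \sum_{j=1}^{p} \frac{|x_j - x'_j|}{MAD_j}
```

Because $d$ only sums scaled absolute differences, changing ten features slightly costs the same as changing one feature a lot, which is why the loss does not by itself prefer sparse changes.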

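(2) Minimizing 4 Losses by Dandl:

- Difference between the desired outcome and the prediction for the counterfactual

- Distance between the actual point and the counterfactual point (Gower distance)

- Number of changed features

- Whether the counterfactual has likely feature values/combinations

Advantages: the interpretation is clear; it can either create new counterfactuals or pick, from the existing dataset, the feature changes that alter the outcome; it requires access only to the prediction function, not to the data or the model (not sure what this means...); easy to implement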

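Finds a decision rule over certain features that anchors an individual prediction, so that changes in the other features do not affect it

- Searches for a condition that meets a given precision threshold while covering a sufficiently large part of the input space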

■ Finding Anchors

- Candidate Generation

- Best Candidate Identification

- Candidate Precision Validation

- Modified Beam Search

Inspired by game theory: for a single instance (the game), shows how much each feature value (a player) contributes to the gain (the single prediction minus the average prediction)

Shapley value: the average of a feature value's marginal contributions across all possible coalitions
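The definition can be made concrete with an exact computation for a tiny model. This sketch enumerates all feature orderings, which is only feasible for a handful of features; features outside the coalition are filled in from a background dataset and the prediction is averaged over it. The model, the instance, and the background rows are hypothetical.

```python
from itertools import permutations

def shapley_values(model, x, background):
    """Exact Shapley values for instance x by enumerating all feature orderings."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take their values from x,
        # the others are taken from each background row in turn.
        preds = []
        for row in background:
            mixed = [x[j] if j in coalition else row[j] for j in range(n)]
            preds.append(model(mixed))
        return sum(preds) / len(preds)

    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        coalition = set()
        for j in order:
            before = value(coalition)
            coalition.add(j)
            phi[j] += value(coalition) - before  # marginal contribution of feature j
    return [p / len(orderings) for p in phi]

# Hypothetical model with an interaction term.
def model(x):
    return 2.0 * x[0] + x[1] * x[2]

background = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [2.0, 0.0, 1.0]]
x = [3.0, 2.0, 2.0]

phi = shapley_values(model, x, background)
average_prediction = sum(model(row) for row in background) / len(background)
print(phi, sum(phi), model(x) - average_prediction)
```

The values sum to the prediction minus the average prediction, which is the gain being distributed among the feature values.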

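Disadvantages: high computational cost; easy to misinterpret (the value is the contribution of a feature value to the difference between the prediction and the average prediction, not the change in prediction when the feature is removed); does not produce a prediction model; with correlated features, unrealistic data instances may be included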

kernel-based estimation approach for Shapley values

■ KernelSHAP

estimates for an instance x the contributions of each feature value to the prediction

■ TreeSHAP

SHAP for tree-based models

- Uses the conditional expectation instead of the marginal expectation

- Lower computational complexity than KernelSHAP: O(TLD^2) versus O(TL2^M) (T: number of trees, L: maximum number of leaves, D: maximum depth, M: number of features)

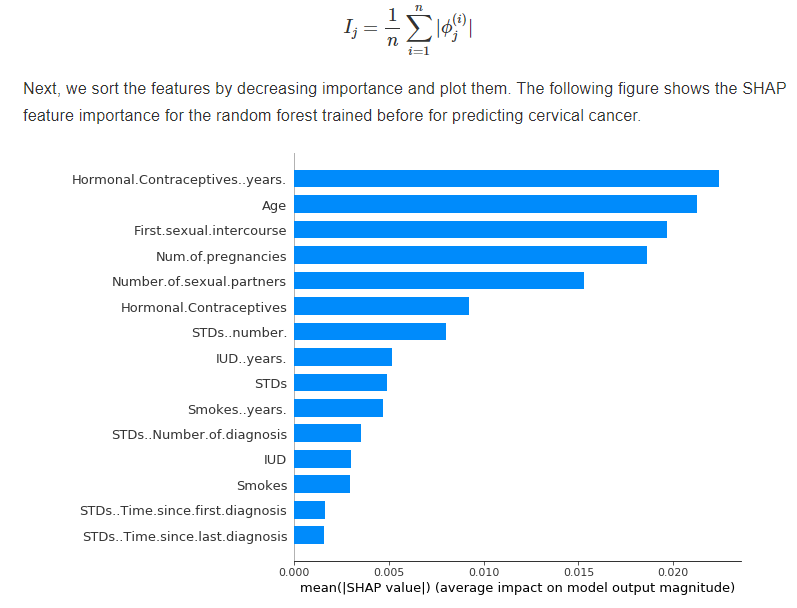

Example) Cervical Cancer

Reference) Interpreting SHAP graphs

Advantages: solid theoretical foundation; contrastive explanations; connects LIME and Shapley values; fast implementation for tree-based models (which helps with the computations needed for global model interpretations)

Disadvantages: KernelSHAP is slow; KernelSHAP ignores feature dependence; TreeSHAP can produce unintuitive feature attributions; can be misinterpreted

How these differ from the interpretation methods above

- They can uncover the features learned in the hidden layers

- The gradient can be used for interpretation

■ Feature Visualization

finding the input that maximizes the activation of that unit(individual neurons, channel, entire layers)

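- Can be thought of as an optimization problem: find the image that maximizes the activation of a unit

- The image can be searched for in the existing data or generated from scratch

- For tabular data, the problem becomes finding the combination of feature values that maximizes the activation of a unit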

■ Network Dissection

Reference: Network Dissection

A method for quantifying the interpretability of CNN units

Assumption: units of a neural network (like convolutional channels) learn disentangled concepts

- Requires the Broden dataset (Broadly and Densely Labeled Dataset), in which concepts are labeled at the pixel level

- Find the top activated areas in each image and build an activation mask from them.

- Find the concept that overlaps most with that activation mask.
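The mask-matching step can be sketched with the intersection over union (IoU) score that Network Dissection uses to compare a unit's activation mask with pixel-level concept labels. The activation map, the concept masks, and the fixed threshold of 0.5 are hypothetical; the original method derives the threshold from a top quantile of the unit's activations over the whole dataset.

```python
def iou(activation_mask, concept_mask):
    """Intersection over union of two binary masks given as nested lists of 0/1."""
    intersection = union = 0
    for act_row, con_row in zip(activation_mask, concept_mask):
        for a, c in zip(act_row, con_row):
            intersection += a and c
            union += a or c
    return intersection / union if union else 0.0

def threshold_mask(activation_map, threshold):
    """Keep only the positions whose activation exceeds the threshold."""
    return [[1 if v > threshold else 0 for v in row] for row in activation_map]

# Hypothetical activation map of one unit and pixel-level concept labels.
activation_map = [[0.1, 0.9, 0.8],
                  [0.0, 0.7, 0.2],
                  [0.0, 0.1, 0.0]]
concepts = {
    "sky":   [[0, 1, 1], [0, 1, 1], [0, 0, 0]],
    "grass": [[0, 0, 0], [0, 0, 0], [1, 1, 1]],
}

mask = threshold_mask(activation_map, threshold=0.5)
scores = {name: iou(mask, concept_mask) for name, concept_mask in concepts.items()}
best_concept = max(scores, key=scores.get)
print(best_concept, scores)
```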

Advantages: gives unique insight; automatically links units to concepts; can be communicated in a non-technical way; can detect concepts beyond the classes

Methods that highlight the pixels relevant to a classification (sensitivity map, saliency map, pixel attribution map, etc.)

- Vanilla Gradient

- DeconvNet

- Grad-CAM(Gradient-weighted Class Activation Map)

- Guided Grad-CAM

- SmoothGrad

Advantages: explanations are visual; faster to compute than model-agnostic methods; many methods to choose from

Detects concepts embedded in the latent space learned by a neural network

■ TCAV (Testing with Concept Activation Vectors)

Describes the relationship between a concept and a class (e.g., how stripes influence the zebra class)

CAV: numerical representation that generalizes a concept in the activation space of a NN layer

■ Other methods: ACE, CBM, CW

Samples with small perturbations that deceive the model

(apparently intended to find the model's vulnerabilities)

- Fast gradient sign method

- 1-pixel attack

- Adversarial patch

- Black box attack
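As an illustration of the first method in the list, the fast gradient sign method moves every input component by a small step epsilon in the direction that increases the loss. The sketch below uses a logistic regression classifier so that the gradient can be written by hand; the weights, the input, and epsilon are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(x, y, weights, bias, epsilon):
    """x' = x + epsilon * sign(gradient of the loss with respect to x)."""
    p = sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)
    # For logistic regression with cross-entropy loss, dLoss/dx_j = (p - y) * w_j.
    grad = [(p - y) * w for w in weights]
    sign = [(g > 0) - (g < 0) for g in grad]
    return [v + epsilon * s for v, s in zip(x, sign)]

def score(x, weights, bias):
    """Linear score; the predicted class is 1 when the score is positive."""
    return sum(w * v for w, v in zip(weights, x)) + bias

# Hypothetical classifier parameters and a correctly classified input of class 1.
weights, bias = [2.0, -1.0], 0.0
x, y = [0.3, 0.2], 1

x_adv = fgsm(x, y, weights, bias, epsilon=0.25)
score_before = score(x, weights, bias)
score_after = score(x_adv, weights, bias)
print(x_adv, score_before, score_after)
```

In this toy case no component moves by more than epsilon, yet the sign of the score flips, so the predicted class changes.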

Finding the instances that change the model's parameters or predictions

(helps to debug or explain the model, e.g., when there are problematic instances or measurement errors)

- How much the model changes when the instance is removed (deletion diagnostics) or when its weight in the loss is slightly increased (influence functions)

- Not the same as an outlier (an instance far from the rest of the dataset), although an outlier can be an influential instance

■ Deletion Diagnostics: remove an instance and observe the change in parameters or predictions

- DFBETA: quantifies the change in the parameters

- Cook's distance: quantifies the change in the predictions
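Both diagnostics can be sketched by refitting a model once per removed instance. The sketch assumes a one-feature least-squares line as the model and uses the usual linear-regression form of Cook's distance (squared change in predictions divided by the number of parameters times the mean squared error); the data are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single feature; returns (intercept, slope)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return my - slope * mx, slope

def deletion_diagnostics(xs, ys):
    """Refit without each instance; return (DFBETA of the slope, Cook's distance) per instance."""
    n, p = len(xs), 2                       # p: number of parameters (intercept and slope)
    b0, b1 = fit_line(xs, ys)
    full = [b0 + b1 * x for x in xs]
    mse = sum((y - f) ** 2 for y, f in zip(ys, full)) / (n - p)
    results = []
    for i in range(n):
        xs_i, ys_i = xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:]
        c0, c1 = fit_line(xs_i, ys_i)       # model retrained without instance i
        reduced = [c0 + c1 * x for x in xs]
        dfbeta_slope = b1 - c1
        cooks = sum((f - r) ** 2 for f, r in zip(full, reduced)) / (p * mse)
        results.append((dfbeta_slope, cooks))
    return results

# Hypothetical data: the last point does not follow the trend of the others.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 10.0]
ys = [1.1, 1.9, 3.2, 3.9, 5.1, 2.0]

results = deletion_diagnostics(xs, ys)
most_influential = max(range(len(xs)), key=lambda i: results[i][1])
print(most_influential, results[most_influential])
```

Retraining once per instance is what makes deletion diagnostics expensive for large models.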

■ Influence functions: observe the change when an instance's weight in the loss (ε) is slightly increased

A way to approximate the change without retraining
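For reference, the upweighting idea can be written as below, following the formulation of Koh and Liang. The notation is an assumption of this note: $z$ is the training instance being upweighted, $L$ the loss, $\hat{\theta}$ the fitted parameters, and $H_{\hat{\theta}}$ the Hessian of the average training loss at $\hat{\theta}$.

```latex
% Parameters after upweighting instance z by a small epsilon
\hat{\theta}_{\epsilon, z} = \arg\min_{\theta} \; \frac{1}{n}\sum_{i=1}^{n} L(z_i, \theta) + \epsilon L(z, \theta)

% Influence of z on the parameters: first-order approximation, no retraining needed
I_{up,params}(z) = \left.\frac{d\hat{\theta}_{\epsilon, z}}{d\epsilon}\right|_{\epsilon = 0}
                 = -H_{\hat{\theta}}^{-1} \nabla_{\theta} L(z, \hat{\theta})
```

Removing an instance corresponds to $\epsilon = -\frac{1}{n}$, so the parameter change from a deletion can be approximated as $-\frac{1}{n} I_{up,params}(z)$.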