HetGNN - lshhhhh/deep-learning-study GitHub Wiki

Code: https://github.com/chuxuzhang/KDD2019_HetGNN

Paper: http://www.shichuan.org/hin/time/2019.KDD%202019%20Heterogeneous%20Graph%20Neural%20Network.pdf

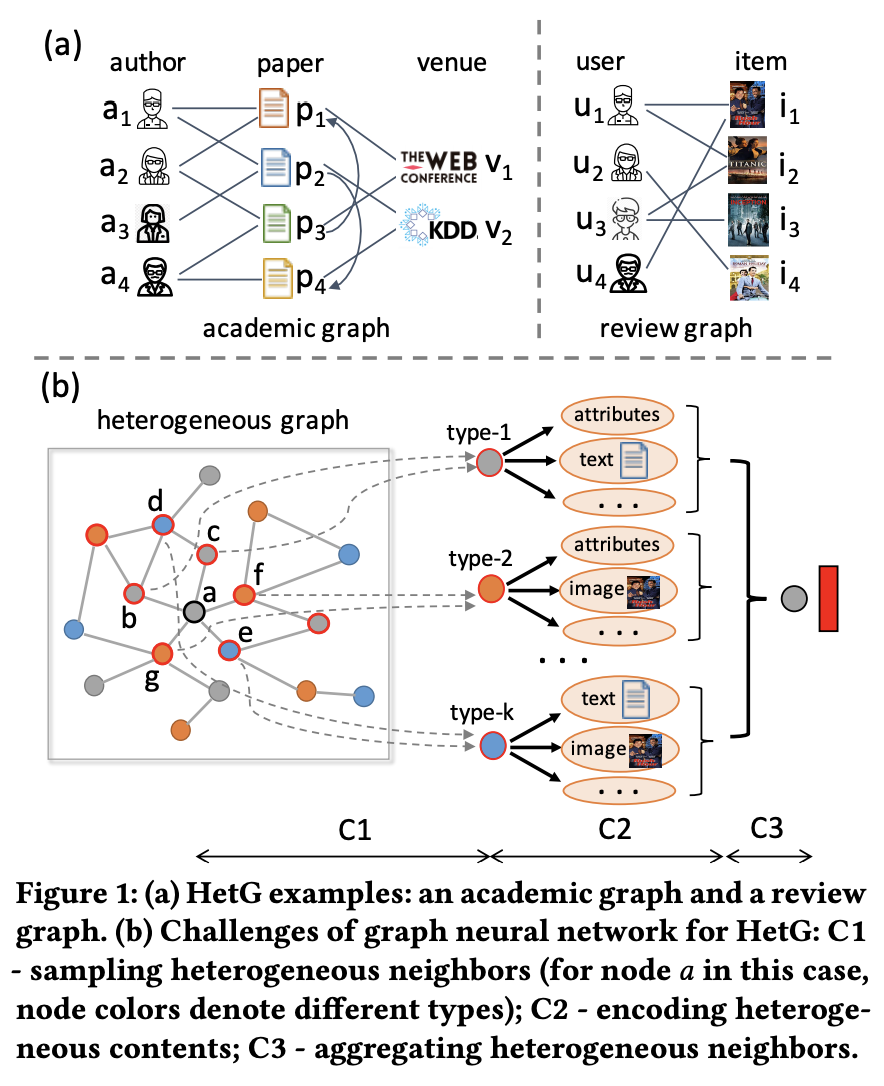

- A node may not be connected to nodes of every type. The number of neighbors also varies widely from node to node.

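-> This leads to three challenges (C1 to C3 in Fig. 1(b)):

- C1: how to sample heterogeneous neighbors that are strongly correlated with a node when generating its embedding in a HetG

- C2: how to design a node content encoder that handles the content heterogeneity of different nodes

- C3: how to aggregate the feature information of heterogeneous neighbors while accounting for the influence of different node types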

$G = (V, E, O_V, R_E)$

- $O_V$, $R_E$: the set of object (node) types of $V$ and the set of relation types of $E$, respectively
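A minimal sketch of how $G = (V, E, O_V, R_E)$ might be held in memory, assuming undirected edges and naming each relation type after its sorted endpoint types (e.g. "author-paper"). The class `HetGraph` and its methods are hypothetical and not taken from the HetGNN repository.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class HetGraph:
    """Sketch of G = (V, E, O_V, R_E) with undirected edges between typed nodes."""
    node_type: dict = field(default_factory=dict)                       # V: node id -> type in O_V
    adj: defaultdict = field(default_factory=lambda: defaultdict(set))  # E as adjacency sets

    def add_node(self, v, t):
        self.node_type[v] = t

    def add_edge(self, u, w):
        # Undirected edge; both endpoints are expected to be added as typed nodes first.
        self.adj[u].add(w)
        self.adj[w].add(u)

    @property
    def object_types(self):
        # O_V: the set of node (object) types
        return set(self.node_type.values())

    @property
    def relation_types(self):
        # R_E: one relation type per pair of endpoint types, e.g. "author-paper" (assumption)
        return {
            "-".join(sorted((self.node_type[u], self.node_type[w])))
            for u, nbrs in self.adj.items()
            for w in nbrs
        }
```

For an author-paper-venue toy graph, `object_types` would be `{"author", "paper", "venue"}` and `relation_types` would be `{"author-paper", "paper-venue"}`.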

Given a C-HetG $G = (V, E, O_V, R_E)$ with node content set $C$, the goal is to design a model $\mathcal{F}_\Theta$ with parameters $\Theta$ that learns $d$-dimensional embeddings $\mathcal{E} \in \mathbb{R}^{|V| \times d}$ ($d \ll |V|$) encoding both the heterogeneous structural closeness among the nodes and their heterogeneous unstructured contents.

As outlined above, HetGNN consists of four parts:

(1) sampling heterogeneous neighbors;

(2) encoding node heterogeneous contents;

(3) aggregating heterogeneous neighbors;

(4) formulating the objective and designing the model training procedure.

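The key idea of GNNs such as GraphSAGE and GAT is to aggregate the features of immediate neighbors, but applying this directly to a HetG raises several problems:

- It is hard to capture feature information directly from neighbors of different types that are not directly connected (e.g., author and venue in Fig. 1(a)).

- Neighbor set sizes vary (some authors write many papers, others only a few). -> The embedding of a hub node can be degraded by weakly correlated neighbors, while a cold-start node has too little neighbor information for a good embedding.

- Aggregating heterogeneous neighbors with different content features requires different feature transformations.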

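To address these problems, HetGNN proposes a heterogeneous neighbor sampling strategy based on random walk with restart (RWR).

- Step-1: fixed-length RWR sampling

  - Start a random walk from node v. At each step the walk either moves to a neighbor of the current node or returns to v with probability p. The walk runs until it has collected a fixed number of nodes, denoted RWR(v).

  - The number of nodes of each type in RWR(v) is capped, so that neighbors of every type are sampled for v.

  -> Nodes of all types can be collected.

- Step-2: grouping neighbors by type

  - For each node type t, select the top k_t nodes in RWR(v) by frequency of appearance.

  -> This gives a fixed-size neighbor set with a meaningful order (by frequency) and makes type-based aggregation possible.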
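The two steps can be sketched in Python as follows. This is a simplified illustration, not the repository's code: `rwr_neighbors`, `walk_len`, `type_caps` and `top_k` are hypothetical names, and the walk records a visited node only while its type is still under its cap.

```python
import random
from collections import Counter


def rwr_neighbors(adj, node_type, v, p=0.5, walk_len=100,
                  type_caps=None, top_k=None, seed=None, max_steps=100_000):
    """Sample type-grouped neighbors of v with random walk with restart (sketch).

    adj: node -> list of neighbors; node_type: node -> type.
    Step-1: walk from v, jumping back to v with probability p, until walk_len
            nodes are collected; at most type_caps[t] nodes of type t are kept.
    Step-2: group the collected nodes by type and keep the top_k[t] most frequent.
    """
    rng = random.Random(seed)
    type_caps = type_caps or {}
    top_k = top_k or {}
    collected = []            # RWR(v); a node may appear several times
    per_type = Counter()      # number of nodes collected so far, per type
    cur = v
    for _ in range(max_steps):  # guard against walks that can never fill up
        if len(collected) >= walk_len:
            break
        if rng.random() < p or not adj.get(cur):
            cur = v           # restart at the start node
            continue
        cur = rng.choice(adj[cur])
        t = node_type[cur]
        if cur != v and per_type[t] < type_caps.get(t, walk_len):
            collected.append(cur)
            per_type[t] += 1

    # Step-2: frequency-ordered, fixed-size neighbor list for every node type
    groups = {}
    for t in sorted(set(node_type.values())):
        counts = Counter(n for n in collected if node_type[n] == t)
        groups[t] = [n for n, _ in counts.most_common(top_k.get(t, len(counts)))]
    return groups
```

For example, `rwr_neighbors(adj, node_type, "a0", top_k={"author": 1, "paper": 2, "venue": 1}, seed=0)` returns one frequency-ordered list per node type; the caps in `type_caps` play the role of the per-type limits in Step-1.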

Fig. 2(b): encoding heterogeneous contents

- Heterogeneous contents of node v: $C_v$

- The i-th content in $C_v$: $x_i \in \mathbb{R}^{d_f \times 1}$ ($d_f$: content feature dimension)

- $x_i$ can be pre-trained with a different technique for each content type, for example:

  - attribute: one-hot vector, text: Par2Vec, image: CNN


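- Instead of concatenating these vectors directly or linearly transforming them into one unified vector, HetGNN passes them (after a feature transformation) through a bi-LSTM and then mean-pools the hidden states. Advantages:

  - Concise structure with relatively low complexity (few parameters) -> the model is relatively easy to implement and tune.

  - Fuses heterogeneous content information -> strong expressive capability.

  - Flexible to add extra content features -> the model is easy to extend.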
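A NumPy sketch of the content encoder, assuming the content vectors of $C_v$ are fed as a fixed-order sequence: each $x_i$ goes through a shared linear layer (standing in for the feature transformation), then a bi-LSTM, and the concatenated forward/backward hidden states are mean-pooled into the content embedding $f_1(v)$. Weights are random and untrained; `ContentEncoder` and `lstm_forward` are hypothetical names.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_forward(xs, W, U, b):
    """Single-layer LSTM over a list of vectors; returns the hidden state at every step."""
    hidden = U.shape[1]
    h, c = np.zeros(hidden), np.zeros(hidden)
    states = []
    for x in xs:
        i, f, o, g = np.split(W @ x + U @ h + b, 4)   # stacked gate pre-activations
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)   # forget part of the old state, add candidate
        h = sigmoid(o) * np.tanh(c)
        states.append(h)
    return states


class ContentEncoder:
    """Linear transform -> bi-LSTM -> mean pooling over one node's content vectors."""

    def __init__(self, d_f, d, seed=0):
        assert d % 2 == 0, "d is split evenly between the two LSTM directions"
        rng = np.random.default_rng(seed)
        hidden = d // 2

        def lstm_params():
            return (rng.normal(scale=0.1, size=(4 * hidden, d)),       # input weights W
                    rng.normal(scale=0.1, size=(4 * hidden, hidden)),  # recurrent weights U
                    np.zeros(4 * hidden))                              # bias b

        self.fc = rng.normal(scale=0.1, size=(d, d_f))  # shared linear feature transformer
        self.fwd = lstm_params()
        self.bwd = lstm_params()

    def __call__(self, contents):
        """contents: list of pre-trained vectors x_i, each of shape (d_f,). Returns f_1(v) of shape (d,)."""
        xs = [self.fc @ np.asarray(x, dtype=float) for x in contents]
        fwd = lstm_forward(xs, *self.fwd)
        bwd = lstm_forward(xs[::-1], *self.bwd)[::-1]   # re-align backward states with positions
        states = [np.concatenate([hf, hb]) for hf, hb in zip(fwd, bwd)]
        return np.mean(states, axis=0)                  # mean pooling over the |C_v| contents
```

Adding a new content type only appends one more vector to the input list, which is the flexibility the notes mention; the parameter count does not depend on $|C_v|$.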

Fig. 2(c): aggregating neighbors of the same type

- Neighbors of the same type are aggregated together. As an example aggregation function, the paper uses a bi-LSTM followed by mean pooling.
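A simplified sketch of type-based aggregation: the sampled neighbors are grouped by type, and each group is reduced to one vector $f_2^t(v)$ from its members' content embeddings $f_1$. To keep it short, this swaps the paper's bi-LSTM -> mean pooling (which, as far as we can tell, uses a separate bi-LSTM for each neighbor type) for plain mean pooling. `aggregate_by_type` and the zero vector for an empty group are assumptions.

```python
def aggregate_by_type(groups, content_emb, dim):
    """Reduce each type's sampled neighbors to one vector f_2^t(v) (mean-pooling stand-in).

    groups: node type -> list of sampled neighbor ids (e.g. the Step-2 output).
    content_emb: node id -> content embedding f_1 as a list of floats of length dim.
    """
    aggregated = {}
    for t, nbrs in groups.items():
        if not nbrs:
            aggregated[t] = [0.0] * dim   # no neighbor of this type was sampled (assumption)
            continue
        # element-wise mean over this type's neighbors only, so types are never mixed
        aggregated[t] = [sum(content_emb[n][j] for n in nbrs) / len(nbrs)
                         for j in range(dim)]
    return aggregated
```

Keeping one output per type is what lets the next step weigh neighbor types against each other.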

Fig. 2(d): combining the type-based embeddings

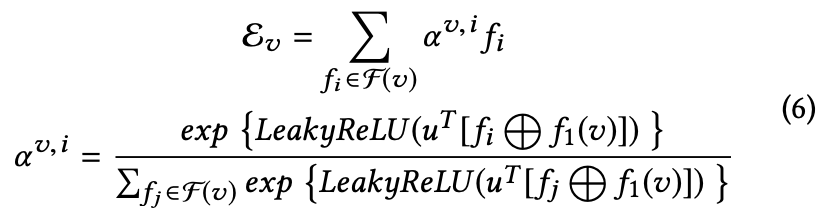

- Node v now has $|O_V|$ type-based neighbor embeddings (one per neighbor type). These are combined with v's own content embedding using attention.
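The attention step can be sketched as follows, assuming the form we recall from the paper. Let $f_1(v)$ be v's own content embedding and $f_2^t(v)$ the aggregated embedding of its type-$t$ neighbors, and let $\mathcal{F}(v) = \{f_1(v)\} \cup \{f_2^t(v) : t \in O_V\}$. Each candidate $f_i$ gets the score $\mathrm{LeakyReLU}(u^\top [f_i ; f_1(v)])$, the scores are softmax-normalized into weights $\alpha^{v,i}$, and $\mathcal{E}_v = \sum_i \alpha^{v,i} f_i$. Here `u` is passed in rather than learned, and the LeakyReLU slope of 0.01 is an assumption.

```python
import math


def leaky_relu(x, slope=0.01):
    return x if x > 0 else slope * x


def combine_types(f1, type_embs, u):
    """Attention over F(v) = {f_1(v)} ∪ {f_2^t(v)}; returns (E_v, attention weights).

    f1: v's own content embedding (length d); type_embs: type -> f_2^t(v) (length d);
    u: attention parameter vector (length 2d).
    """
    candidates = [f1] + list(type_embs.values())
    # score_i = LeakyReLU(u . [f_i ; f_1(v)]); f + f1 concatenates the two lists
    scores = [leaky_relu(sum(a * b for a, b in zip(u, f + f1))) for f in candidates]
    m = max(scores)                        # shift by the max for a numerically stable softmax
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    e_v = [sum(a * f[j] for a, f in zip(alphas, candidates)) for j in range(len(f1))]
    return e_v, alphas
```

With `u` set to all zeros every score is 0, so the weights are uniform and $\mathcal{E}_v$ is the plain average of the candidates.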

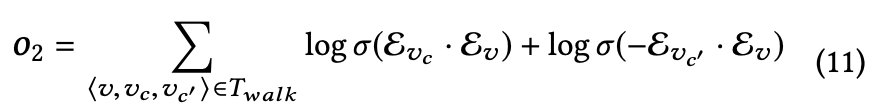

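Taking the logarithm, using negative sampling with sample size M = 1, and rearranging gives the final objective:

$$o = \sum_{\langle v, v_c, v_{c'} \rangle \in T_{walk}} \log \sigma(\mathcal{E}_{v_c} \cdot \mathcal{E}_v) + \log \sigma(-\mathcal{E}_{v_{c'}} \cdot \mathcal{E}_v)$$

where $T_{walk}$ is the set of triplets collected from random walks, $v_c$ is a context node of $v$, and $v_{c'}$ is a negative node of the same type as $v_c$.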
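A plain-Python sketch of the M = 1 negative-sampling objective: for each triplet $\langle v, v_c, v_{c'} \rangle$ it adds $\log \sigma(\mathcal{E}_{v_c} \cdot \mathcal{E}_v) + \log \sigma(-\mathcal{E}_{v_{c'}} \cdot \mathcal{E}_v)$, the quantity training maximizes. `walk_triplets` is a hypothetical helper: it takes context nodes within a window on each walk and draws the negative $v_{c'}$ uniformly from nodes of the same type as $v_c$, which is an assumption about the noise distribution.

```python
import math
import random


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def walk_triplets(walks, node_type, window=2, seed=0):
    """Hypothetical helper: <v, v_c, v_c'> triplets from random walks.

    v_c is any node within `window` steps of v on a walk; the negative v_c' is drawn
    uniformly from nodes of v_c's type (it may occasionally coincide with v_c).
    """
    rng = random.Random(seed)
    by_type = {}
    for n, t in node_type.items():
        by_type.setdefault(t, []).append(n)
    triplets = []
    for walk in walks:
        for i, v in enumerate(walk):
            for j in range(max(0, i - window), min(len(walk), i + window + 1)):
                if j != i:
                    vc = walk[j]
                    triplets.append((v, vc, rng.choice(by_type[node_type[vc]])))
    return triplets


def objective(emb, triplets):
    """Sum of log σ(E_vc · E_v) + log σ(-E_vc' · E_v) over triplets; to be maximized."""
    return sum(log_sigmoid(dot(emb[vc], emb[v])) + log_sigmoid(-dot(emb[vn], emb[v]))
               for v, vc, vn in triplets)
```

In a real training loop `emb` would be the HetGNN output $\mathcal{E}$, and the negated objective would serve as the loss for gradient descent.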

- Academic graph

  - Nodes: author, paper, venue

  - Side info: paper -> title, abstract, authors, references, year, venue

- Review graph

  - Nodes: user, review, item

  - Side info: item -> title, description, genre, price, picture

- a_p_list_train.txt: each author - paper neighbor list

- p_a_list_train.txt: each paper - author neighbor list

- p_p_citation_list.txt: each paper - paper citation neighbor list

- v_p_list_train.txt: each venue - paper neighbor list

- p_v.txt: each paper - venue

- p_title_embed.txt: pre-trained paper title embedding

- p_abstract_embed.txt: pre-trained paper abstract embedding

- node_net_embedding.txt: pre-trained node embedding by network embedding

- het_neigh_train.txt: generated neighbor set of each node by random walk with restart

- het_random_walk.txt: generated random walks as node sequences (corpus) for model training
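A loader sketch for the neighbor-list files, assuming each line has the form `node_id:n1,n2,...` (an assumption about the file layout, not checked against the repository). `group_by_prefix` is likewise hypothetical: it assumes node ids in files such as het_neigh_train.txt carry a one-letter type prefix like `a`, `p` or `v`.

```python
from collections import defaultdict


def parse_neighbor_lines(lines):
    """Parse 'node_id:n1,n2,...' lines into {node_id: [n1, n2, ...]} (assumed format)."""
    neighbors = {}
    for line in lines:
        line = line.strip()
        if ":" not in line:
            continue                              # skip blank or malformed lines
        node, rest = line.split(":", 1)
        neighbors[node] = [n for n in rest.split(",") if n]
    return neighbors


def load_neighbor_file(path):
    """Read one neighbor-list file, e.g. a_p_list_train.txt."""
    with open(path, encoding="utf-8") as f:
        return parse_neighbor_lines(f)


def group_by_prefix(ids):
    """Group ids such as 'a12', 'p3', 'v0' by their (assumed) one-letter type prefix."""
    groups = defaultdict(list)
    for node in ids:
        groups[node[0]].append(node)
    return dict(groups)
```

If the actual files use a different separator, only `parse_neighbor_lines` needs to change.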