Extensions Generation - linwownil/stable-diffusion-webui GitHub Wiki

https://github.com/dfaker/SD-latent-mirroring

Applies mirroring and flips to the latent image to produce anything from subtly balanced compositions to perfect reflections.
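As a rough illustration of the idea, and not the extension's actual code, mirroring a latent can be done by blending it with a flipped copy of itself. The sketch below works on a single latent channel stored as nested Python lists. The names `mirror_blend`, `flip_horizontal` and `flip_vertical` and the `alpha` parameter are hypothetical.

```python
from typing import List

# One latent channel as rows of values (hypothetical layout for illustration).
Latent = List[List[float]]


def flip_horizontal(latent: Latent) -> Latent:
    """Mirror the channel left-to-right."""
    return [list(reversed(row)) for row in latent]


def flip_vertical(latent: Latent) -> Latent:
    """Mirror the channel top-to-bottom."""
    return [list(row) for row in reversed(latent)]


def mirror_blend(latent: Latent, alpha: float, vertical: bool = False) -> Latent:
    """Blend a latent with its mirror image (hypothetical helper).

    alpha = 0.0 leaves the latent unchanged, alpha = 0.5 gives a perfectly
    symmetric result, and values in between nudge the composition toward balance.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    mirrored = flip_vertical(latent) if vertical else flip_horizontal(latent)
    return [
        [(1.0 - alpha) * a + alpha * b for a, b in zip(row, mrow)]
        for row, mrow in zip(latent, mirrored)
    ]
```

For example, `mirror_blend([[1.0, 3.0]], 0.5)` returns `[[2.0, 2.0]]`, a row that reads the same in both directions. Applying a blend like this to the latent during denoising, rather than to the finished image, lets the sampler smooth over the seam.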

https://github.com/yownas/seed_travel

Small script for AUTOMATIC1111/stable-diffusion-webui to create images that exist between seeds.
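One common way to get images "between" two seeds is to interpolate between their initial noise tensors. Spherical linear interpolation (slerp) is often used for this so that the intermediate noise keeps roughly the norm of real Gaussian noise. The sketch below assumes that approach and is not taken from seed_travel. `seed_noise`, `slerp` and `travel` are hypothetical names, and Python's `random` module stands in for the sampler's noise generator.

```python
import math
import random
from typing import List


def seed_noise(seed: int, size: int) -> List[float]:
    """Deterministic Gaussian noise for a seed (stand-in for the latent noise)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def slerp(t: float, a: List[float], b: List[float]) -> List[float]:
    """Spherical linear interpolation between vectors a and b at fraction t."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_omega = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    omega = math.acos(cos_omega)
    if omega < 1e-6:  # nearly parallel vectors: fall back to linear interpolation
        return [(1.0 - t) * x + t * y for x, y in zip(a, b)]
    s = math.sin(omega)
    wa = math.sin((1.0 - t) * omega) / s
    wb = math.sin(t * omega) / s
    return [wa * x + wb * y for x, y in zip(a, b)]


def travel(seed_a: int, seed_b: int, steps: int, size: int = 16) -> List[List[float]]:
    """Noise vectors for `steps` frames from seed_a to seed_b, endpoints included."""
    if steps < 2:
        raise ValueError("need at least 2 steps")
    a, b = seed_noise(seed_a, size), seed_noise(seed_b, size)
    return [slerp(i / (steps - 1), a, b) for i in range(steps)]
```

Each vector returned by `travel` would serve as the starting noise for one frame. The first and last frames reproduce the two original seeds exactly.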


https://github.com/dustysys/ddetailer

An object detection and auto-mask extension for Stable Diffusion web UI.

https://github.com/klimaleksus/stable-diffusion-webui-conditioning-highres-fix

An extension that rewrites the inpainting conditioning mask strength value relative to the denoising strength at runtime. This is useful for inpainting models such as sd-v1-5-inpainting.ckpt.

https://github.com/Extraltodeus/multi-subject-render

A depth-aware extension that helps create multiple complex subjects in a single image. It generates a background, then multiple foreground subjects, cuts out their backgrounds after a depth analysis, pastes them onto the background, and finally runs img2img for a clean finish.

https://github.com/Extraltodeus/depthmap2mask

Create masks for img2img based on a depth estimation made by MiDaS.
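The basic idea of turning a depth estimate into an img2img mask can be sketched as normalizing the depth map and thresholding it. This is an illustrative assumption, not depthmap2mask's code. It assumes larger values mean closer to the camera, as with MiDaS's relative inverse depth, and it uses a hypothetical convention where 255 marks the area to repaint. `depth_to_mask` and `threshold` are hypothetical names.

```python
from typing import List


def depth_to_mask(depth: List[List[float]], threshold: float = 0.5) -> List[List[int]]:
    """Turn a relative depth map into a binary img2img mask (hypothetical helper).

    Assumes larger values are closer to the camera. Pixels whose normalized
    value is >= threshold become 255 (masked), all others 0.
    """
    flat = [v for row in depth for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a perfectly flat map
    return [[255 if (v - lo) / span >= threshold else 0 for v in row] for row in depth]
```

For example, `depth_to_mask([[0.0, 10.0], [5.0, 2.0]])` masks the two nearest pixels and returns `[[0, 255], [255, 0]]`. Inverting the mask instead would protect the foreground and repaint the background.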

https://github.com/AlUlkesh/sd_save_intermediate_images

Implements saving intermediate images, with more advanced features.

https://github.com/OedoSoldier/enhanced-img2img

An extension that adds support for batched and improved inpainting. See the readme for more details.

https://github.com/kohya-ss/sd-webui-additional-networks

Allows the Web UI to use networks (LoRA) trained with kohya-ss's scripts to generate images.

https://github.com/antis0007/sd-webui-multiple-hypernetworks

Extension that allows the use of multiple hypernetworks at once.

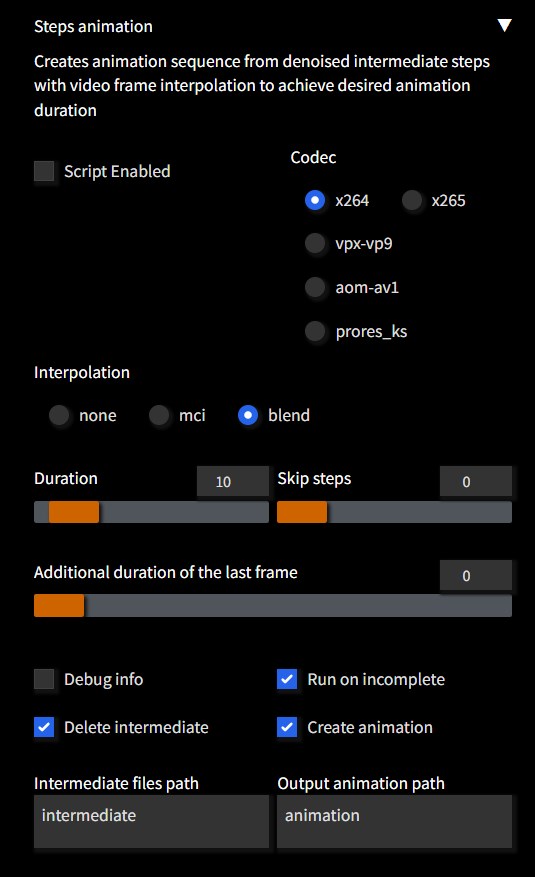

https://github.com/vladmandic/sd-extension-steps-animation

Extension to create an animation sequence from the denoised intermediate steps.

Registers a script in the txt2img and img2img tabs.

Creating an animation has minimal impact on overall performance because it does not require separate runs. The only overhead is saving each intermediate step as an image, plus a few seconds to create the movie file.

Supports color and motion interpolation to produce an animation of the desired duration from any number of intermediate steps.

Resulting movie files are typically very small (~1 MB on average) due to optimized codec settings.
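To see how a fixed number of intermediate steps can fill an animation of any requested length, consider the simplest form of color interpolation: a linear crossfade between consecutive step images, resampled to `duration * fps` frames. This is a sketch under that assumption, not the extension's implementation, which may rely on a video encoder's own interpolation. `crossfade_frames` is a hypothetical name, and images are flattened lists of pixel values.

```python
from typing import List

Image = List[float]  # flattened pixel values (hypothetical representation)


def crossfade_frames(steps: List[Image], duration_s: float, fps: int) -> List[Image]:
    """Resample step images into duration_s * fps frames by linear blending."""
    if not steps:
        raise ValueError("need at least one step image")
    n_frames = max(2, round(duration_s * fps))
    k = len(steps)
    if k == 1:
        return [list(steps[0]) for _ in range(n_frames)]
    frames = []
    for i in range(n_frames):
        pos = i * (k - 1) / (n_frames - 1)  # fractional index into the steps
        lo = min(int(pos), k - 2)           # earlier step of the pair being blended
        w = pos - lo                        # weight of the later step
        frames.append([(1.0 - w) * a + w * b for a, b in zip(steps[lo], steps[lo + 1])])
    return frames
```

With two steps `[[0.0], [10.0]]`, a 1-second clip at 5 fps yields pixel values 0.0, 2.5, 5.0, 7.5 and 10.0. The first and last frames always match the first and last steps.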

https://github.com/Klace/stable-diffusion-webui-instruct-pix2pix

Adds a tab for doing img2img editing with the instruct-pix2pix model.

https://github.com/klimaleksus/stable-diffusion-webui-anti-burn

Smooths generated images by skipping the last few steps and averaging together some of the images before them.
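The procedure described above can be sketched in a few lines: drop the final step images, then take the per-pixel mean of a window of the images just before them. This is an illustrative sketch, not the extension's code. `anti_burn`, `skip_last` and `average` are hypothetical names, and images are flattened lists of pixel values.

```python
from typing import List, Sequence

Image = List[float]  # flattened pixel values (hypothetical representation)


def anti_burn(step_images: Sequence[Image], skip_last: int, average: int) -> Image:
    """Drop the final `skip_last` step images, then average the `average` before them."""
    if skip_last < 0 or average < 1:
        raise ValueError("skip_last must be >= 0 and average >= 1")
    kept = step_images[: len(step_images) - skip_last]
    if len(kept) < average:
        raise ValueError("not enough steps left to average")
    window = kept[-average:]
    # Per-pixel mean across the selected step images.
    return [sum(px) / average for px in zip(*window)]
```

For example, with step images `[[0.0], [2.0], [4.0], [100.0]]`, skipping the last step and averaging the three before it gives `[2.0]`. The "burned" final value never reaches the output.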