Spark - jjin-choi/study_note GitHub Wiki

§ Why Distributed Computing?

- Big data

- Volume : Since the size of our data is growing, we need larger data stores to store that data, and we need ways to run computation across those larger datasets.

- Velocity : As we have more and more mechanisms that can produce data, it is arriving in data pipelines at a faster and faster rate.

- Variety : Data comes in many forms, ranging from numeric and textual data to images and video streams.

- Veracity : How much do we trust the data that we do have? Some data arriving in our systems may have missing values or otherwise be inaccurate, as is often the case with user-generated data.

- Apache Spark : Original Story

- Fast, general-purpose system

- distributes computation across a cluster of machines

- Spark Architecture

- One driver : Optimizes queries and delegates tasks to the executors

- One or many executors : Perform the actual queries. More executors are not always faster

- Slot and task :

- Slot : a unit of parallelism

- Task : a unit of work that processes one partition of the data and runs in one slot
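A minimal sketch of how slots bound parallelism, assuming each executor core is one slot (Spark's default when one task uses one CPU) and that every task takes the same time. The cluster sizes and the helper names `total_slots` and `waves` are hypothetical. Under these assumptions, tasks run in "waves" of at most one task per slot.

```python
import math


def total_slots(num_executors: int, cores_per_executor: int) -> int:
    """Treat each executor core as one slot that runs one task at a time."""
    return num_executors * cores_per_executor


def waves(num_tasks: int, slots: int) -> int:
    """Rounds of execution needed if every task takes the same time (simplifying assumption)."""
    return math.ceil(num_tasks / slots)


slots = total_slots(num_executors=4, cores_per_executor=2)  # hypothetical cluster: 8 slots
for tasks in (8, 9, 20):
    print(tasks, "tasks ->", waves(tasks, slots), "wave(s)")

# Adding a fifth executor (10 slots) does not help 8 tasks: they already fit in one wave.
print(waves(8, total_slots(5, 2)))
```

This also illustrates why more executors are not always faster: once every task already has a slot, extra slots sit idle.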

- Parallelism and Scalability

- Amdahl's Law of linear scalability : The amount of acceleration we would see from parallelizing a task is a function of what portion of the task can be completed in parallel.
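The standard form of Amdahl's Law gives the speedup on n workers when a fraction p of the task can run in parallel as S(n) = 1 / ((1 - p) + p / n). A short sketch (the function name `amdahl_speedup` is just for illustration) shows how the serial fraction caps the benefit of adding workers:

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Theoretical speedup S(n) = 1 / ((1 - p) + p / n).

    p: fraction of the task that can run in parallel (0 <= p <= 1)
    n: number of parallel workers (n >= 1)
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    return 1.0 / ((1.0 - p) + p / n)


# With 90% of the work parallelizable, the speedup approaches but never exceeds about 10x.
for p in (0.5, 0.9, 0.99):
    print(p, [round(amdahl_speedup(p, n), 2) for n in (1, 8, 64, 1024)])
```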

- When and where to use?

- Scale out : if you have too much data to process on a single machine.

- Speed up : even if your data can fit on a single machine, you might benefit from speeding up your query by adding more computing resources.

§ Spark DataFrames

- Learning Objective : Be able to explain the difference between the RDD and DataFrame APIs within Spark.

- Spark 1.3 introduced the DataFrame API, which provides more functionality and optimizations on top of the RDD API.

- RDD (Resilient Distributed Datasets) :

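- Resilient : Fault-tolerant

- Fault tolerance is achieved through a DAG (Directed Acyclic Graph).

- The DAG is the series of transformations applied to the data, and you cannot change any of the transformations that came before you in this graph (it is acyclic: you never loop back to alter an earlier transformation). If a partition is lost, it can be recomputed by replaying these transformations.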

- Distributed dataset component : The data is distributed and stored across multiple nodes in your cluster.

- Computed across multiple nodes

- Results are aggregated by the driver
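A minimal sketch of these RDD ideas in plain Python, not Spark's API: every name (`compute_partition`, `lineage`, `source_partitions`) is hypothetical. It models a dataset as source partitions plus a lineage (a simple chain standing in for the DAG), and it assumes the source partitions can always be re-read, as Spark re-reads from durable storage. A lost partition result is rebuilt by replaying the lineage, and a "driver" step aggregates the results.

```python
from functools import reduce
from typing import Callable, Dict, List

# Hypothetical toy model: a transformation maps one partition to a new partition.
Transform = Callable[[List[int]], List[int]]


def compute_partition(source: List[int], lineage: List[Transform]) -> List[int]:
    """Replay every transformation in the lineage, in order, on one source partition."""
    return reduce(lambda data, step: step(data), lineage, source)


# Source data split into three partitions (assumed re-readable from durable storage).
source_partitions: List[List[int]] = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

# The lineage, here a simple chain: map, then filter.
lineage: List[Transform] = [
    lambda xs: [x * 10 for x in xs],            # map: multiply by 10
    lambda xs: [x for x in xs if x % 20 == 0],  # filter: keep multiples of 20
]

# Each "executor" computes one partition.
results: Dict[int, List[int]] = {
    i: compute_partition(part, lineage) for i, part in enumerate(source_partitions)
}

# Simulate losing the node that held partition 1, then rebuild it from the lineage.
del results[1]
results[1] = compute_partition(source_partitions[1], lineage)

# The "driver" aggregates the per-partition results.
total = sum(sum(part) for part in results.values())
print(total)
```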

- DataFrame API : Inherits most of the RDD properties (resilient + distributed), plus:

- Metadata : Number of columns, data types. That is, besides storing the data and knowing which transformations to apply via the DAG, a DataFrame also knows metadata about the number of columns in your dataset and their data types.

- In other words, you can think of it as similar to an Excel sheet or a CSV file, where a data type (column type) can be specified at the top of each column.

- Spark is not a database. It is a compute engine that can read from databases.

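- Data in Spark is ephemeral : Spark holds it only temporarily while computing, and the data itself stays in the underlying storage. So even if the Spark cluster goes down, you do not lose the data.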

- Think of it this way : if one of your friends goes out for lunch and leaves your Spark cluster, you haven't lost the data that friend was responsible for.

- A DataFrame is neither a SQL table nor an Excel or CSV file. It is an abstraction on top of these underlying data sources.

- Catalyst (Spark's query optimizer) analogy : When you use the DataFrame API, you specify what you want to be done, not how you want it to be done.

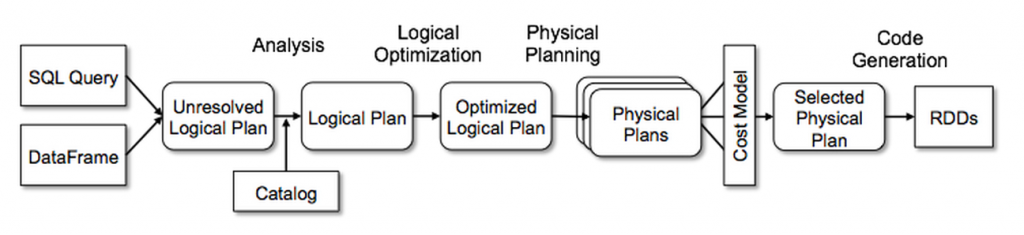

- Spark DataFrame Execution

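- The query starts as an unresolved logical plan, before its table and column names are looked up in the data catalog.

- Catalyst then resolves them against the catalog and creates a logical plan.

- The logical plan is then optimized, physical plans are generated, one is selected using a cost model, and the selected plan is executed as RDD operations.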


- Link: Spark DataFrame Execution
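To make "unresolved plan, then resolution against a catalog, then optimization" concrete, here is a toy sketch in plain Python. The classes `Select` and `Filter`, the `CATALOG` dict, and the single rewrite rule are all hypothetical and are not Catalyst's real API. The user only lists the steps they want (the "what"); `resolve` checks every name against the catalog, and `optimize` applies one rule in the spirit of predicate pushdown, running filters before projections.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass(frozen=True)
class Select:
    columns: Tuple[str, ...]  # columns to keep


@dataclass(frozen=True)
class Filter:
    column: str
    min_value: float  # keep rows where row[column] > min_value


Step = Union[Select, Filter]

# Toy "data catalog": table name -> column name -> type.
CATALOG: Dict[str, Dict[str, str]] = {
    "sales": {"region": "string", "amount": "double", "note": "string"},
}


def resolve(table: str, steps: List[Step]) -> List[Step]:
    """Analysis: check that the table and every referenced column exist."""
    if table not in CATALOG:
        raise ValueError(f"Table not found: {table}")
    available = set(CATALOG[table])
    for step in steps:
        needed = step.columns if isinstance(step, Select) else (step.column,)
        missing = [c for c in needed if c not in available]
        if missing:
            raise ValueError(f"Cannot resolve column(s): {missing}")
        if isinstance(step, Select):
            available = set(step.columns)  # later steps only see the selected columns
    return list(steps)


def optimize(steps: List[Step]) -> List[Step]:
    """Move filters ahead of projections. Safe here because resolve() already
    proved each filtered column exists in the source table."""
    filters = [s for s in steps if isinstance(s, Filter)]
    selects = [s for s in steps if isinstance(s, Select)]
    return filters + selects


def execute(rows: List[dict], steps: List[Step]) -> List[dict]:
    for step in steps:
        if isinstance(step, Filter):
            rows = [r for r in rows if r[step.column] > step.min_value]
        else:
            rows = [{c: r[c] for c in step.columns} for r in rows]
    return rows


sales = [
    {"region": "EU", "amount": 120.0, "note": "a"},
    {"region": "US", "amount": 80.0, "note": "b"},
    {"region": "EU", "amount": 40.0, "note": "c"},
]
user_steps: List[Step] = [Select(("region", "amount")), Filter("amount", 50.0)]
logical = resolve("sales", user_steps)
optimized = optimize(logical)
print(optimized)
print(execute(sales, optimized))
```

Both plans return the same rows; the optimized one simply drops rows before projecting them.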

- Besides Catalyst, Project Tungsten also contributes to why DataFrames are more efficient than RDDs.

§ Databricks Environment

- Databricks Environment : a unified analytics platform that enables data science and engineering teams to run all analytics in one place.

- This includes running reports, powering dashboards, and running extract-transform-load (ETL) jobs, where new data is cleaned and inserted into databases. On Databricks you can also run machine learning and streaming jobs.

- Most important to us in this course is the hosted notebook environment. This means that we can interact with our data in real time by running cells of code hosted on a Databricks server.

- This code will actually be executing against a Spark cluster.

- Spark can be tricky to set up, since it involves networking together different machines.

- Databricks is going to manage the installation and setup for us so that we can focus on doing our analytics in SQL.

- In practice, though, Spark is about scaling computation across many machines, and Community Edition only gives us a small cluster.

- So Community Edition allows us to prototype code but not quite unleash the full power of distributed computation.

- navigate to Databricks.com

§ Pandas UDF for PySpark

Pandas UDFs fall into three types depending on the form of their input and output.

| Name | Input | Output |
|---|---|---|
| Scalar UDFs | pandas.Series | pandas.Series |
| Grouped Map UDFs | pandas.DataFrame | pandas.DataFrame |
| Grouped Aggregate UDFs | pandas.Series | scalar |
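To make the input/output shapes in the table concrete, here is a stdlib-only simulation. It is not the PySpark API: a list of floats stands in for `pandas.Series`, a list of row dicts stands in for `pandas.DataFrame`, and every helper name is hypothetical. In real PySpark (2.3/2.4) these types are declared with `pandas_udf` and `PandasUDFType.SCALAR`, `GROUPED_MAP` or `GROUPED_AGG`, and Spark passes each call an Arrow batch or a whole group. The `plus_one` and `subtract_mean` functions loosely follow the examples in the Databricks vectorized UDF blog post.

```python
from collections import defaultdict
from statistics import mean
from typing import Callable, Dict, List

Series = List[float]           # hypothetical stand-in for pandas.Series
Frame = List[Dict[str, float]]  # hypothetical stand-in for pandas.DataFrame (list of rows)


def run_scalar(udf: Callable[[Series], Series], column: Series, batch_size: int) -> Series:
    """Scalar: called once per batch; Series in -> Series of the same length out."""
    out: Series = []
    for start in range(0, len(column), batch_size):
        batch = column[start:start + batch_size]
        result = udf(batch)
        if len(result) != len(batch):
            raise ValueError("a scalar UDF must return one value per input row")
        out.extend(result)
    return out


def split_by_key(rows: Frame, key: str) -> Dict[float, Frame]:
    groups: Dict[float, Frame] = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    return groups


def run_grouped_map(udf: Callable[[Frame], Frame], rows: Frame, key: str) -> Frame:
    """Grouped map: called once per group; DataFrame in -> DataFrame out."""
    out: Frame = []
    for group in split_by_key(rows, key).values():
        out.extend(udf(group))
    return out


def run_grouped_agg(udf: Callable[[Series], float], rows: Frame, key: str, col: str) -> Dict[float, float]:
    """Grouped aggregate: called once per group; Series in -> a single scalar out."""
    return {k: udf([r[col] for r in g]) for k, g in split_by_key(rows, key).items()}


rows: Frame = [{"id": 1, "v": 1.0}, {"id": 1, "v": 2.0},
               {"id": 2, "v": 3.0}, {"id": 2, "v": 5.0}, {"id": 2, "v": 10.0}]


def plus_one(s: Series) -> Series:
    return [x + 1 for x in s]


def subtract_mean(group: Frame) -> Frame:
    m = mean(r["v"] for r in group)
    return [{"id": r["id"], "v": r["v"] - m} for r in group]


print(run_scalar(plus_one, [r["v"] for r in rows], batch_size=2))
print(run_grouped_map(subtract_mean, rows, "id"))
print(run_grouped_agg(mean, rows, "id", "v"))
```

The key contrast with a row-at-a-time UDF is the call granularity: each function is invoked once per batch or per group, not once per row.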

- References : https://chioni.github.io/posts/pandasudf/ , https://databricks.com/blog/2017/10/30/introducing-vectorized-udfs-for-pyspark.html

- ImportError: PyArrow >= 0.15.1 must be installed; however, it was not found.

- Fix : Install PyArrow.
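A small sketch for checking the requirement from that error message before using Pandas UDFs, using only the standard library (`importlib.metadata`, Python 3.8+). The helper names are hypothetical, and the version parser is deliberately simple: it keeps only the leading numeric part of the version string. Note that this checks only the local Python environment; Pandas UDFs run on the executors, so PyArrow also has to be available on every node of the cluster.

```python
import re
from importlib import metadata
from typing import Optional, Tuple

MIN_PYARROW: Tuple[int, ...] = (0, 15, 1)  # minimum quoted in the ImportError above


def parse_version(text: str) -> Tuple[int, ...]:
    """Keep the leading numeric part, e.g. '0.15.1' -> (0, 15, 1), '14.0.2.dev3' -> (14, 0, 2)."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    return tuple(int(p) for p in match.group(0).split(".")) if match else ()


def installed_pyarrow_version() -> Optional[str]:
    """Return the installed pyarrow version string, or None if it is not installed."""
    try:
        return metadata.version("pyarrow")
    except metadata.PackageNotFoundError:
        return None


def is_new_enough(version: Optional[str], minimum: Tuple[int, ...] = MIN_PYARROW) -> bool:
    return version is not None and parse_version(version) >= minimum


version = installed_pyarrow_version()
if is_new_enough(version):
    print(f"pyarrow {version} found")
else:
    print("pyarrow missing or older than 0.15.1; install it, e.g. with `pip install pyarrow`")
```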

- Spark tuning : https://gritmind.blog/2020/10/16/spark_tune/

