Ensemble Methods - jaeaehkim/trading_system_beta GitHub Wiki

Motivation

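- Data Structures, Labeling, and Sample Weights cover three questions: how to process raw financial data so that it keeps its information while becoming closer to IID, giving an ML model a sound basis to learn from (Data Structures); how to build good labels on that basis (Labeling); and how to handle the overlap problem that appears just before the data is fed to the model (Sample Weights). The last topic in particular is about producing good-quality bootstrap samples for the weak models used in bagging.

- This page talks about models directly, but about Ensemble Methods rather than individual models. In the modeling part, understanding ensembles matters more than any single model. For Random Forest, taken here as one example, it is important to understand the various sklearn parameters well enough to apply them flexibly, because a solid grasp of the fundamentals keeps the work on the right track when extending to other models.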

The Three Sources of Errors

Mathematical Expression of ML Model


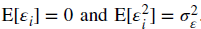


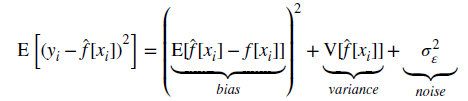

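- The goal is to minimize the left-hand side of the equation.

- f(x): the prediction of the ideal model, f'(x): the prediction of the model actually fitted, y: the actual value

- f'(x) - f(x): the modeling error (bias), V[f'(x)]: the sensitivity of the prediction to the training data (variance)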
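The two images are not reproduced in this text. Assuming they show the standard bias-variance decomposition, the relation described by the bullets above can be written as follows, with \hat{f} standing for f' and \varepsilon_i for the noise (these symbol names are an assumption, not taken from the images):

```latex
y_i = f(x_i) + \varepsilon_i, \qquad
\mathbb{E}[\varepsilon_i] = 0, \qquad
\mathbb{V}[\varepsilon_i] = \sigma_\varepsilon^2

\mathbb{E}\!\left[\left(y_i - \hat{f}(x_i)\right)^2\right]
  = \underbrace{\left(\mathbb{E}\!\left[\hat{f}(x_i) - f(x_i)\right]\right)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{V}\!\left[\hat{f}(x_i)\right]}_{\text{variance}}
  + \underbrace{\sigma_\varepsilon^2}_{\text{noise}}
```

The left-hand side is the expected squared prediction error; the three terms on the right are the three error sources discussed next.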


The errors of a machine learning model come from three main sources. Knowing these sources precisely matters because a good model can be built by making their reduction the objective.

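- Bias

- Bias is the average difference between the estimated values and the actual values. (How bias shows up depends on how the data population is defined.) High bias leads to underfitting.

- Variance

- In the formula, this term is the sensitivity to changes in the training data. In other words, the stability of the training data is very important for training an ML model. (Efforts toward this: Data Structures, Labeling, [Sample Weights])

- When variance is low the model captures general patterns, but when variance is high the model mistakes noise for signal and overfits.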

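- Noise

- Error that cannot be reduced any further, caused by unforeseen variables or measurement errors

- (*) "Cannot be reduced any further" means the error comes from something other than the variables x_i used in the formula above.

- (*) In most complex problems the market is sometimes moved by variables outside the data (variables) we hold, so this kind of error is unavoidable and has to be acknowledged.

- (*) For this reason, conservative risk management for extreme situations is essential.

- (*) AI helps automate through quantification; it does not solve everything.

- (*) It is very important to distinguish what can be solved from what cannot.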
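To make the bias and variance terms concrete, here is a minimal sketch that estimates them empirically by refitting a model on many freshly drawn training sets. The target function (a sine curve), the noise level, and the helper name `bias_variance` are all illustrative assumptions, not part of the original notes.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def bias_variance(max_depth, n_trials=100, n_train=100, noise_sd=0.5, seed=0):
    """Estimate squared bias and variance of a regression tree (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    x_test = np.linspace(0.0, 1.0, 50).reshape(-1, 1)
    f_test = np.sin(2 * np.pi * x_test).ravel()  # assumed "ideal" f(x)
    preds = np.empty((n_trials, len(x_test)))
    for t in range(n_trials):
        # Draw a new training set each trial: y = f(x) + noise
        x = rng.uniform(0.0, 1.0, size=(n_train, 1))
        y = np.sin(2 * np.pi * x).ravel() + rng.normal(0.0, noise_sd, n_train)
        model = DecisionTreeRegressor(max_depth=max_depth, random_state=0).fit(x, y)
        preds[t] = model.predict(x_test)
    bias_sq = np.mean((preds.mean(axis=0) - f_test) ** 2)  # (E[f'(x)] - f(x))^2
    variance = np.mean(preds.var(axis=0))                  # V[f'(x)]
    return bias_sq, variance


shallow = bias_variance(max_depth=1)    # very simple model
deep = bias_variance(max_depth=None)    # fully grown tree
print("depth=1    bias^2=%.3f variance=%.3f" % shallow)
print("depth=None bias^2=%.3f variance=%.3f" % deep)
```

In this setup the depth-1 tree should show the larger bias (underfitting) and the fully grown tree the larger variance (overfitting), which is the trade-off the list above describes.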


The core principle of ensemble methods is to build many weak models and aggregate them, reducing bias or variance and thereby improving performance.

Bootstrap Aggregation

- Bagging is short for Bootstrap Aggregation. Several samples are drawn from the given data (the source) by bootstrapping, a model is fitted to each sample, and the fitted models are aggregated to produce the final prediction.

- The models can be fitted in parallel, and the final prediction is by default a simple average. Bagging is effective when the base estimators have at least some predictive power (accuracy p > 0.5).

- Within the structure of the prediction error, bagging raises accuracy by lowering the variance term. In other words, it helps prevent overfitting.
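The procedure above can be sketched by hand in a few lines. This is a minimal illustration on synthetic data from `make_classification`, with an arbitrary choice of 50 trees; it is not the wiki author's code, and in practice `sklearn.ensemble.BaggingClassifier` does the same job.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data, purely for illustration
X, y = make_classification(n_samples=600, n_features=10, n_informative=5, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.5, random_state=0)

rng = np.random.default_rng(0)
n_models = 50
probas = []
for _ in range(n_models):
    # Bootstrap: draw len(X_tr) row indices with replacement
    idx = rng.integers(0, len(X_tr), size=len(X_tr))
    tree = DecisionTreeClassifier(random_state=0).fit(X_tr[idx], y_tr[idx])
    probas.append(tree.predict_proba(X_te)[:, 1])

# Aggregation: simple average of the individual predictions
bagged_pred = (np.mean(probas, axis=0) >= 0.5).astype(int)
single_pred = (probas[0] >= 0.5).astype(int)

bagged_acc = float(np.mean(bagged_pred == y_te))
single_acc = float(np.mean(single_pred == y_te))
print(f"single tree accuracy: {single_acc:.3f}")
print(f"bagged accuracy:      {bagged_acc:.3f}")
```

Each loop iteration is independent of the others, which is why the fitting step can be parallelized.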

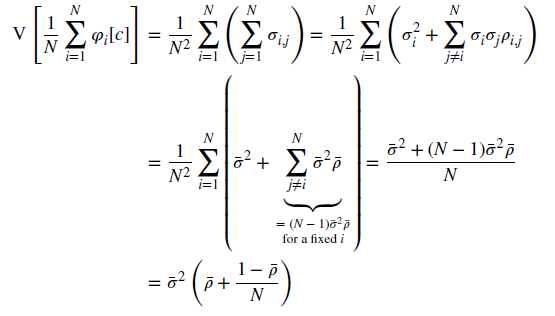

Variance Reduction & Improved Accuracy

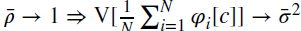

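- phi_i(c): the prediction of the i-th base estimator (the formula gives the variance of the bagged prediction, i.e. of the average of the phi_i(c)), N: number of bagged estimators, variance_bar: average variance of a single estimator's prediction, rho_bar: average correlation between the predictions of single estimators

- Link between the formulas: Var[(1/N) * sum_i phi_i(c)] = (1/N^2) * sum_i sum_j sigma_ij, where sigma_ij is the covariance between the predictions of estimators i and j. This simplifies to variance_bar * (rho_bar + (1 - rho_bar) / N).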

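Conclusion

- The formula shows that bagging reduces the error only when the average correlation between the base estimators is below 1; as rho_bar approaches 1, the variance reduction disappears.

- In practice, of course, several issues remain.

- ex1) What is the "correct" correlation, and how should it be quantified? a) The exact value cannot be known; the best available approach is to set up a hypothesis and use a mathematical expression that captures a meaningful correlation. The correlation also keeps changing with the length of the data, so a correlation model that accounts for this stochastic behavior would be the best option.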
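A short numeric check of the variance formula, as a sketch. It assumes the simplified form variance_bar * (rho_bar + (1 - rho_bar) / N) and simulates equicorrelated, unit-variance predictions with a one-factor model; the function names are hypothetical.

```python
import numpy as np


def bagged_variance(avg_variance, avg_corr, n_estimators):
    """Variance of the averaged prediction: variance_bar * (rho_bar + (1 - rho_bar) / N)."""
    return avg_variance * (avg_corr + (1.0 - avg_corr) / n_estimators)


def simulate_bagged_variance(avg_corr, n_estimators, n_draws=200_000, seed=0):
    """Monte Carlo check with unit-variance predictions sharing one common factor."""
    rng = np.random.default_rng(seed)
    common = rng.standard_normal((n_draws, 1))            # shared component
    own = rng.standard_normal((n_draws, n_estimators))    # estimator-specific component
    preds = np.sqrt(avg_corr) * common + np.sqrt(1.0 - avg_corr) * own
    return float(preds.mean(axis=1).var())


theory = bagged_variance(1.0, 0.3, 10)
simulated = simulate_bagged_variance(0.3, 10)
print(f"theory={theory:.4f} simulated={simulated:.4f}")

# With rho_bar = 1 adding estimators does not help at all
print(bagged_variance(1.0, 1.0, 1000))
```

With rho_bar = 0.3 and N = 10 the bagged variance is 0.37 of a single estimator's variance, and no value of N can push it below rho_bar = 0.3, which is why lowering the correlation between estimators matters as much as adding more of them.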

Observation Redundancy

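- 1) Observation redundancy

- As noted earlier, observation redundancy makes the bootstrap samples likely to be virtually identical to one another. The average correlation between estimators then converges to 1, so even though many models are built, the variance of the bagged model is not reduced and bagging loses its effect.

- See: Sample Weights

- 2) Weakened out-of-sample (OOS) reliability

- Because training uses random sampling with replacement, the training set ends up containing many samples that are very similar to the out-of-bag (OOB) samples, so the OOB set becomes a test set that is effectively the same as the training set. One way to limit this is to split the data into k blocks and sample within those blocks.

- For models on time-series data it is better to ignore OOB scores. The earlier steps made the data closer to IID, but perfectly IID data does not exist. In that setting OOB evaluation effectively lets the model train on future data, which inflates the measured performance.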
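One way to read the "k blocks" idea is to evaluate the bagged model on contiguous, unshuffled folds instead of relying on the OOB score. The sketch below uses `KFold(shuffle=False)` on synthetic data and is only an illustration of that reading; it does not purge or embargo observations around the block boundaries, which overlapping financial labels would also need.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import KFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for a time-ordered feature matrix
X, y = make_classification(n_samples=500, n_features=8, random_state=0)

# OOB scoring is switched off; accuracy is measured on held-out blocks instead
bag = BaggingClassifier(
    DecisionTreeClassifier(random_state=0),
    n_estimators=50,
    oob_score=False,
    random_state=0,
)

# shuffle=False keeps each test fold a contiguous block of rows
cv = KFold(n_splits=5, shuffle=False)
blocks = [test_idx for _, test_idx in cv.split(X)]
scores = cross_val_score(bag, X, y, cv=cv)
print("block accuracies:", np.round(scores, 3))
```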

Random Forest

Concept

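- Decision trees are known to overfit easily, and if each tree is overfitted, building a plain forest from them increases the variance of the bagged model.

- The first priority is to keep each individual tree from overfitting; the 'Random' Forest is then used to lower the variance of the aggregated model.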

- Details & Reference

vs Bagging

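- Similarity: like bagging, Random Forest trains each individual estimator (base model) independently on a bootstrap sample.

- Difference: Random Forest adds a second level of randomness.

- Bagging keeps the feature set fixed and injects randomness only through the bootstrap. Random Forest additionally samples features at random: N of the M features are drawn and only those are considered when growing the trees on the bootstrap samples, after which the results are aggregated. In the standard algorithm (and in sklearn, via max_features) this draw is repeated at every node split rather than once per tree. So, unlike bagging, Random Forest has two sources of randomness (feature sampling + bootstrap sampling of the data).

- In older versions of sklearn's Random Forest the bootstrap sample size had to equal the size of the training set (bagging has no such restriction). Since scikit-learn 0.22, RandomForestClassifier also accepts max_samples.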

Advantage

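- Like bagging, Random Forest can reduce the variance of the predictions while limiting overfitting as far as possible.

- As with bagging, the closer the correlation between individual estimators is to 1, the less variance reduction is obtained.

- Feature importance can be computed. See: Feature Importance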

Tips for avoiding Overfitting

- Set Random Forest's max_features to a low value. This lowers the correlation between trees.

- Early stopping: set the regularization parameter min_weight_fraction_leaf to a sufficiently large value (e.g. 5%)

- Bagging + DecisionTree with max_samples=avgU (average uniqueness; see Sample Weights)

(a) clf=DecisionTreeClassifier(criterion='entropy',max_features='auto',class_weight='balanced')

(b) bc=BaggingClassifier(base_estimator=clf,n_estimators=1000,max_samples=avgU,max_features=1.)

- Bagging + RandomForest with max_samples=avgU (average uniqueness; see Sample Weights)

(a) clf=RandomForestClassifier(n_estimators=1,criterion='entropy',bootstrap=False,class_weight='balanced_subsample')

(b) bc=BaggingClassifier(base_estimator=clf,n_estimators=1000,max_samples=avgU,max_features=1.)

- Bagging + RandomForest with Sequential Bootstrap (see Sequential Bootstrap Code)

- Implement this as an additional option on the RandomForest side and use it

- class_weight='balanced_subsample': see RandomForest Hyper Params

- PCA on the features + RandomForest

- If PCA is applied to reduce the number of features, the depth each individual decision tree needs may decrease, which can lower the correlation between the individual estimators and so help reduce variance.
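The configurations listed above can be run as follows on recent scikit-learn versions. This is a sketch under a few assumptions: `avgU = 0.5` is a made-up placeholder for the average uniqueness computed elsewhere, the data is synthetic, and `n_estimators` is cut to 100 to keep it quick. Two API details differ from the snippets above: `max_features='auto'` has been removed from newer releases (`'sqrt'` is the equivalent for classifiers), and the `base_estimator` keyword was renamed `estimator`, so the base model is passed positionally here to work with either name.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier, RandomForestClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=400, n_features=10, n_informative=5, random_state=0)
avgU = 0.5  # hypothetical average uniqueness; compute it from your own labels

# (1) Bagged decision trees, each bootstrap sample limited to a fraction avgU of the rows
tree = DecisionTreeClassifier(criterion="entropy", max_features="sqrt",
                              class_weight="balanced")
bag_tree = BaggingClassifier(tree, n_estimators=100, max_samples=avgU,
                             max_features=1.0, random_state=0)

# (2) Bagged single-tree random forests, with the forest's own bootstrap disabled
rf_one = RandomForestClassifier(n_estimators=1, criterion="entropy", bootstrap=False,
                                class_weight="balanced_subsample")
bag_rf = BaggingClassifier(rf_one, n_estimators=100, max_samples=avgU,
                           max_features=1.0, random_state=0)

# (3) Plain random forest with low max_features and early stopping via leaf weight
rf = RandomForestClassifier(n_estimators=100, criterion="entropy", max_features="sqrt",
                            min_weight_fraction_leaf=0.05,
                            class_weight="balanced_subsample", random_state=0)

scores = {}
for name, model in [("bag_tree", bag_tree), ("bag_rf", bag_rf), ("rf", rf)]:
    scores[name] = model.fit(X, y).score(X, y)  # in-sample accuracy, for a smoke test only
    print(name, round(scores[name], 3))
```

The in-sample scores printed here say nothing about generalization; they only confirm that the three setups fit and predict.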

Boosting

Concept

- Boosting started from the question of whether weak estimators can be combined to achieve higher accuracy. (Bagging, by contrast, yields little improvement when all of its estimators are weak.)

- Details & Reference

- AdaBoost: a detailed explanation of AdaBoost

- Kearns & Valiant, 1989: the first proposal of the idea behind boosting

- How to Develop an AdaBoost Ensemble in Python

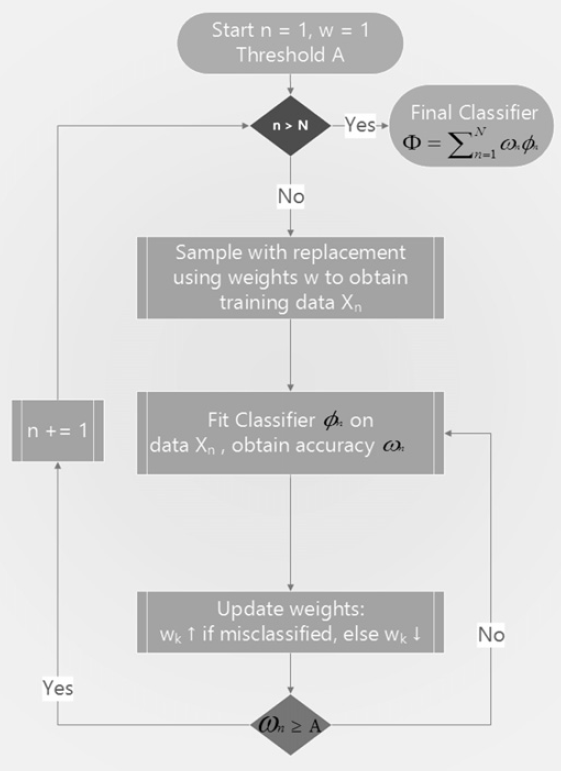

Decision Flow

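- Draw with replacement according to some sampling probability distribution to generate 'one' training set (the initial distribution is uniform)

- Fit one estimator on that training set

- If the estimator exceeds the threshold on the performance metric, keep it; otherwise discard it

- Then give more weight to the observations whose labels were predicted incorrectly and less weight to those predicted correctly

- Repeat until N estimators have been produced

- The ensemble prediction is the weighted average of the N models, with the weight vector determined by the performance (e.g. accuracy) of each estimator

- Many boosting algorithms share this basic flow; the best known is AdaBoost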
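The decision flow above can be sketched as a small loop. This is an illustrative, AdaBoost-style implementation rather than any library's exact algorithm: the weak learner is a depth-1 decision tree, the acceptance metric is assumed to be weighted accuracy with a 0.5 threshold, and the names `boost_fit` and `boost_predict` are hypothetical. Labels are assumed to be 0/1.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def boost_fit(X, y, n_estimators=20, threshold=0.5, max_tries=200, seed=0):
    rng = np.random.default_rng(seed)
    n = len(X)
    weights = np.full(n, 1.0 / n)  # initial sampling distribution is uniform
    models, alphas = [], []
    for _ in range(max_tries):
        if len(models) == n_estimators:
            break
        # 1) draw one training set with replacement from the current distribution
        idx = rng.choice(n, size=n, replace=True, p=weights)
        # 2) fit a single weak estimator on it
        stump = DecisionTreeClassifier(max_depth=1, random_state=0).fit(X[idx], y[idx])
        miss = stump.predict(X) != y
        err = float(np.sum(weights * miss))  # weighted error on the full data
        # 3) keep the estimator only if it beats the acceptance threshold
        if 1.0 - err <= threshold:
            continue
        err = min(max(err, 1e-10), 1.0 - 1e-10)
        alpha = 0.5 * np.log((1.0 - err) / err)  # better estimators get a larger vote
        # 4) raise the weight of misclassified rows, lower the weight of correct ones
        weights = weights * np.exp(np.where(miss, alpha, -alpha))
        weights /= weights.sum()
        models.append(stump)
        alphas.append(alpha)
    return models, np.array(alphas)


def boost_predict(models, alphas, X):
    # 6) weighted vote of the kept estimators (labels mapped from 0/1 to -1/+1)
    votes = np.zeros(len(X))
    for model, alpha in zip(models, alphas):
        votes += alpha * (2 * model.predict(X) - 1)
    return (votes > 0).astype(int)


X, y = make_classification(n_samples=500, n_features=10, n_informative=5, random_state=0)
models, alphas = boost_fit(X, y)
train_acc = float(np.mean(boost_predict(models, alphas, X) == y))
print(f"kept {len(models)} estimators, training accuracy {train_acc:.3f}")
```

Because each round depends on the weights produced by the previous one, the loop cannot be parallelized the way bagging can.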

Bagging vs Boosting

Comparison

- How the estimators are built: Parallel (Bag) vs Sequential (Boost)

- Whether estimators are screened (kept or discarded): No (Bag) vs Yes (Boost)

- Whether the sampling probability distribution changes: No (Bag) vs Yes (Boost)

- How the ensemble prediction is averaged: Equal weights (Bag) vs Performance weights (Boost)

In Finance

- Boosting reduces both bias and variance, but it contains several elements that push the model to overfit the given data

- In financial data the problem is overfitting rather than underfitting, so bagging is generally preferred

Bagging For Scalability

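- With SVM, training becomes very slow once the training data reaches around one million observations (kernel SVM training time grows faster than linearly with the number of observations).

- For that cost in speed, it offers no guarantee of a global optimum and no protection against overfitting

- Using SVM as the individual estimator, constraining it so that it does not overfit, and then bagging addresses the speed problem and the overfitting problem together

- Bagging: the bagging algorithm can be run in parallel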

- Scalability Example

- Logistic + Bagging (or RandomForest style)

- SVM + Bagging (or RandomForest style)

- Random Forest + Bagging

- Boosting + Bagging (or RandomForest style)

- Linear SVC + Bagging (or RandomForest style)

- ref : Combining Models (Bagging style + Base Estimator)
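As a sketch of the "SVM + Bagging" entry above: each SVC is trained on a small random fraction of the rows with a capped iteration count, and the fitted SVCs are then averaged. The sample fraction, `max_iter`, and the synthetic data are illustrative assumptions; `N_JOBS` is kept at 1 here and can be raised (for example to -1) to fit the estimators in parallel.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.svm import SVC

X, y = make_classification(n_samples=2000, n_features=10, random_state=0)

N_JOBS = 1  # raise to -1 to use all cores

# Early-stopping style limits on each individual SVC (values are assumptions)
svc = SVC(kernel="rbf", C=1.0, max_iter=10_000, tol=1e-3)

# Each of the 20 SVCs is fitted on 10% of the rows, so no single fit sees the full data
bag_svm = BaggingClassifier(svc, n_estimators=20, max_samples=0.1,
                            n_jobs=N_JOBS, random_state=0)
bag_svm.fit(X, y)
train_acc = bag_svm.score(X, y)
print(f"bagged SVC training accuracy: {train_acc:.3f}")
```

The same pattern applies to the other entries in the list by swapping the base estimator, for example `LogisticRegression` or `LinearSVC`.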