Motivation - itzjac/cpplearning GitHub Wiki

Why more material on C/C++ programming? What's the importance of low level programming? Aren't programming tools up to speed? Didn't programming tools and IDEs evolve all this time?

CPUs are faster, memory is cheaper, and Moore's law has held up over the years on the hardware side. How long until software quality catches up with Moore's law?

Are software quality, speed and performance relevant to the big software companies?

Does code get old? What to do with legacy code and how to get the best out of it?

If you want to reinvent the wheel, you had better build a better wheel!

Introducing people to programming is a difficult task, and getting them to embrace the more important facts is even harder. The software industry (video games are no exception) needs to evolve and grow with better processes, better practices, and better programmers. There is no doubt the game industry is full of talented people creating amazing stuff, but it is also true that top talented developers are a minority.

By sharing my own personal experiences and real work stories, I demonstrate the state of the art of the broken things as well as the state of the art of high performance tools. The broken things will always be a place for opportunities: opportunities to improve and to make things different, easier, faster, more flexible. Maybe I get you excited enough to fix some of the broken things. As for the high performance tools, I use exactly the same workflow for this wiki and the code repo. By gaining access to the latter, you get a fresh development environment for free. Obviously, the solutions to every code problem are not included!

When relevant, the experiences listed here will contain either an engine version or a year as reference. Newer versions, patches, or hardware upgrades might have solved them since.

One of the greatest feelings of being an engineer is the freedom you gain by deeply understanding a particular topic, then translating that knowledge into functional and practical artifacts that you share with society. They might not be perfect; ideally they get the job done fast and can adapt to changes. Having confidence in yourself and your knowledge is an important step in your journey, one that might lead to having an impact on society. It is a confidence that has zero dependency on technology bubbles, working tools or job positions. It's a level of security: you know what you are doing and how to hand over your work. Maybe open source projects are the best examples. The engineering role has nothing to do with credentials, graduate status or academic trajectory. I find its definition closer to a general attitude, that curiosity that makes you go beyond the average understanding. Some people (perhaps the majority) just aren't as curious. If curiosity is not a mainstream talent, we've got a myriad of problems that translate into work and opportunities for us! The demand for great engineers keeps growing; unfortunately, they are scarce.

Maybe carrying your engineering work over to society is not on your list of interests. That's also fine, the engineering practice can exist just for fun! An engineer's curiosity can perfectly exist as a purely ludic experience, with no purpose at all. Ironically, reaching that level of deep specialization will naturally attract others and have an unintended impact around you.

Society has so much work for engineers; there is an engineer deficit worldwide. As newer technology evolves, new areas open up and so does the need for improvements. I am sure that during our lifetimes the demand for great engineers will keep growing.

One of the goals of the material presented here is to help you reach bleeding edge technology, master all the basic skills and have the motivation to keep training and improving yourself.

In the future, opening your personal Pandora's box (those personal tricks that you kept secret and earned over many years) will feel like a natural practice, and you will have the intuition to know what to expose and how, without undermining yourself. Be warned: keep your eyes open and your guard up, because there are many tech people out there waiting for the great programmer(s) to do it all and do it for free.

Hoping I can keep your hunger for knowledge growing.

Different compilers lead to different results in performance and executable size. Some compilers give you access to the latest language features, others do not. When learning to program, it can be very useful to create a hybrid environment that gives you immediate results, comparing compiler output and behavior side by side. No IDE out there is going to give you that access, but with the methods taught in this wiki you will be able to create your own building and coding pipelines from scratch: very hybrid environments with cross-platform capabilities, tool agnostic.

In the chapter All About Windows Compilers, we get familiar with the most useful methods for accessing the tools on Windows, while you learn the importance of the native scripting language (Windows batch files) and of having a hybrid, highly customizable work environment. It introduces many new terms to warm you up for the next chapters, which are fully hands-on code. The knowledge in this chapter extends naturally to any operating system, but you want to start learning it on Windows if games are your thing.
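As a small illustration of comparing compilers side by side, the sketch below reports which compiler built it, relying only on predefined macros. The function names (`compiler_name`, `language_standard`, `pointer_bits`) are hypothetical and are not part of this wiki's code base; treat it as a starting point rather than the wiki's actual method.

```cpp
#include <string>

// Hypothetical helper: identifies the compiler through its predefined macros.
// __clang__ is checked first because clang also defines __GNUC__, and
// clang-cl also defines _MSC_VER.
std::string compiler_name() {
#if defined(__clang__)
    return "clang";
#elif defined(__GNUC__)
    return "gcc";
#elif defined(_MSC_VER)
    return "msvc";
#else
    return "unknown";
#endif
}

// Hypothetical helper: the language standard the compiler claims to implement.
// MSVC reports the real value in _MSVC_LANG unless /Zc:__cplusplus is set.
long language_standard() {
#if defined(_MSVC_LANG)
    return _MSVC_LANG;
#else
    return __cplusplus;
#endif
}

// Hypothetical helper: pointer width of the target, 32 or 64 on common
// desktop platforms.
unsigned pointer_bits() {
    return static_cast<unsigned>(sizeof(void*) * 8);
}
```

Building the same file with each compiler in your environment and printing these three values from `main` is a quick check that the toolchain you think you are calling is the one actually in use.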

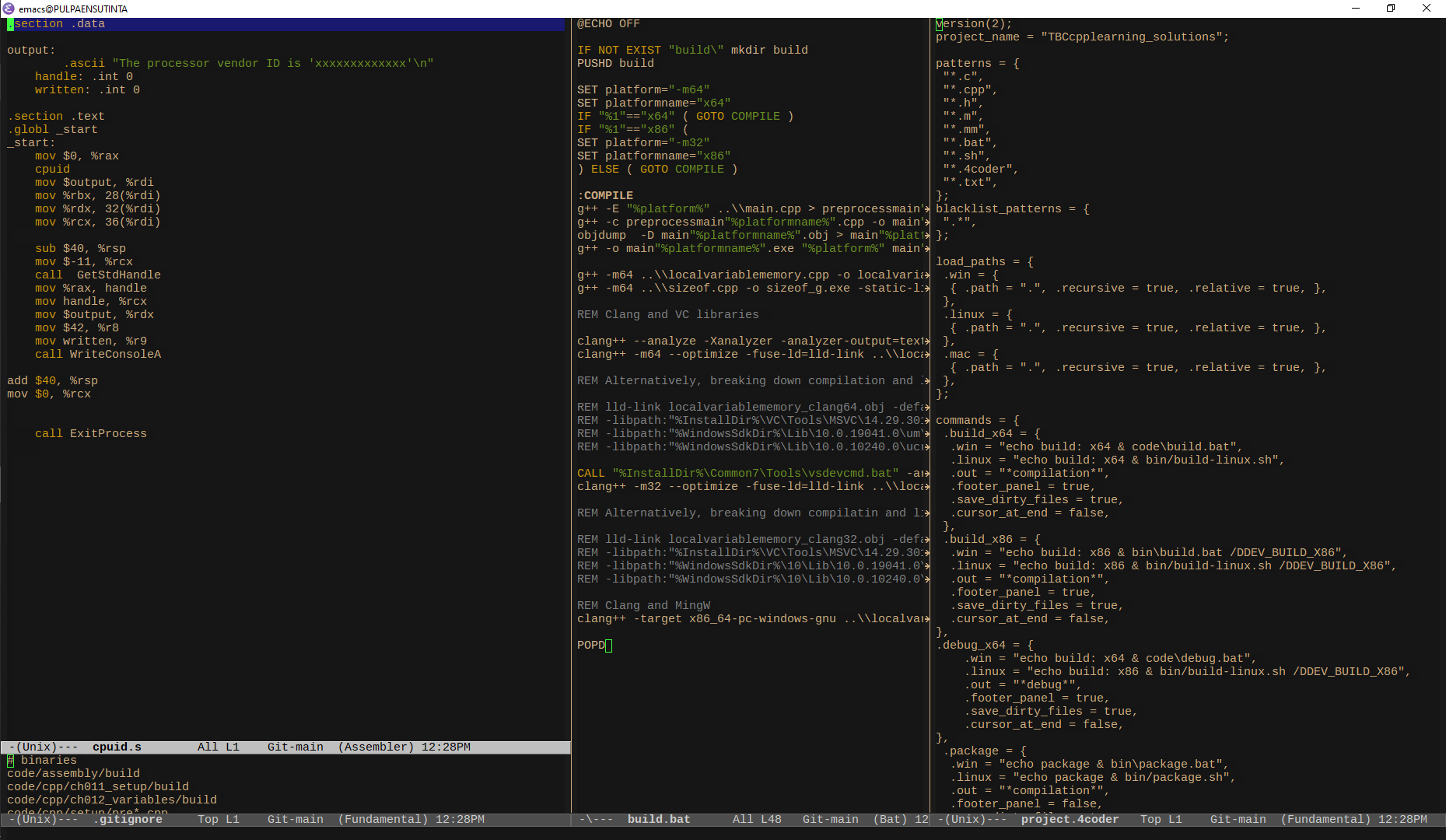

At the end of the chapter we will have a single development environment with a flavor of your preference, capable of using multiple compilers/assemblers (Visual Studio C, mingw64, mingw86, LLVM/clang, ML, ML64) and multiple platform targets, with support for additional programming languages such as Rust, a C/C++ static code analyzer, and asm syntax. I guarantee you, that is not possible with any IDE out there. Even though I haven't played with it myself, I dare say it will be 100% possible to include JAI as a supported programming language. And much more: it comes down to your own imagination and preference.

As you learn, you will move on to new projects and new teams, upgrade your hardware, or switch to a newer machine. Most likely you will want to preserve your projects and forget about them, keeping them as toolsets to look back on in the future. In the best case you will want them to compile and run without much effort; after all, you left everything working last time.

Relying on the standard (usually the largest) SDK/IDE environments will result in a disaster. Simply combine Visual Studio with a newer OS version and the dirty porting work will be handed over to you; imagine skipping over 10 or 20 years of those upgrades. The same is true for almost any other major software suite.

Code from academic books, and even amazing old projects like Wolfenstein 3D, won't run out of the box in your latest OS environment. Whatever software versions they used back then are too old at this point, or incompatible, or nonexistent. Legacy projects tend to be run either by installing virtual machine environments (DOSBox, VMPlayer with an old OS, VM VirtualBox), which must contain specific software, or by spending time porting them to the latest versions.

Things are getting better now, I won't lie to you. IDEs like CodeBlocks, Xcode, and Visual Studio have tried hard to make the transition from a previous version to a newer one smoother. If you come from 5 or more versions behind, though, things are going to get spiky.

Carmack also mentioned a "brute force" method to preserve legacy code and projects. Extreme legacy code preservation: keep everything safe on a clean PC, with a drive containing the specific OS, the specific SDKs/IDEs, and the exact code at the time the project was released. Lock that PC and hard drive away and keep them safe. Oh well.

Bottom line: maintaining legacy code is a huge pain.

Over the years I learned a way out: relying on smaller, standalone software tools that are usually well maintained and therefore long lasting. Emacs, vim, and nano are great examples. Newer alternatives like 4coder aim to become part of the list. I am personally jumping on the 4coder boat by actively promoting and using it. GNU projects, free and open source, have demonstrated for at least the past 30 years that they stay loyal to their values (and how would they not): following standards, being truly multiplatform, and not breaking much with newer versions. Overall, they stay consistent with the user and with their interfaces across different OSes.

On the other side is proprietary software. Every new incarnation of a product may ignore standards, remove tools and add new ones, offer variable multiplatform support, change the UI, and deprecate features (for example, the Microsoft C/C++ compiler does not accept inline assembly in x64 applications; only x86 targets can use it), creating the ultimate vXXX new real standard all over again. Worst of all is the tendency toward a slower experience: longer load times and slower response when using the tools (debugger, compiler), making your work miserable, with tools not doing what they were supposed to do. Just look at the number of bugs and complaints in the MSDN forums.
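The inline assembly restriction mentioned above is usually handled with a preprocessor guard. Here is a minimal sketch, assuming the Microsoft-style `__asm` block is only wanted on 32-bit x86 builds; `add_one` is a hypothetical function used purely for illustration.

```cpp
// Hypothetical helper: increments a value, using inline assembly only where
// the Microsoft compiler accepts it (32-bit x86 targets, _M_IX86 defined).
int add_one(int value) {
#if defined(_MSC_VER) && !defined(__clang__) && defined(_M_IX86)
    __asm {
        mov eax, value   // load the parameter
        inc eax          // add one
        mov value, eax   // store the result back
    }
    return value;
#else
    // x64, ARM, or a non-Microsoft compiler: plain C++ fallback.
    return value + 1;
#endif
}
```

On x64 targets the usual replacements for inline assembly are compiler intrinsics or a separate assembly file built with ML64, which fits the multi-assembler environment described in this wiki.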

Huge companies work side by side with other huge companies, making arbitrary decisions about how things will move on, and synchronizing their efforts to keep transitions seamless across their future upgrades. Take Visual Studio and Epic as an example: IDE upgrades, engine upgrades. And you never know what will happen in the next version: radical changes ranging from usability to licenses and programming languages, or dropping support for an OS or platform. It is a battleground and you had better be ready.

While you won't be able to code your own Unreal Engine alone (at least from a pragmatic standpoint), you want the tools at your disposal to solve many of the problems described above. You want to keep your own projects isolated from that chaos, and you also want to be able to integrate them without shooting yourself in the foot.

This is particularly important when you are learning. You don't want to create 100 Visual Studio solutions/projects to run and execute your code, then start over when porting it to Linux or OSX. You don't want to introduce the clutter from the tools. You want to get the job done in the sanest and cleanest possible way.

In this material, I invite you to follow a long-lasting approach. The material provides you with full Emacs and 4coder development environments that you can extend and customize at will. The promise is that, as the years pass by, you will be able to quickly look back at your code, the base code of this wiki, and other people's code, then compile and run it with the latest versions available at the time, including that brand new OS of your preference.

Getting your game out the door is challenging. For game logic, relying on technology like Blueprints (BPs for short) won't help you do that, and the larger the project, the worse it gets. The previous major Unreal version, 3, didn't need to store binary data for game logic: the Kismet visual scripting language was pure text, so diffing and merging were far easier. BPs were an evolution of Kismet, and across the BP code base you can still read Kismet classes. I leave it to you to find out why Epic decided to move from text in Unreal 3 to binaries in Unreal 4. The newest version, Unreal 5, still preserves the binary approach, and it is likely here to stay.

Blueprints have both a dark and a bright side. Obviously, introducing a binary file format that you don't fully understand into your pipeline sounds like a dangerous practice. In fact, larger projects tend to minimize BP dependency; scaling with pure text files (cpp source code or another language) is a safe bet.

Blueprints can be good at:

- Fast prototyping

- Small and medium-sized projects

- Single player experiences

- A visual scripting language, accessible to almost anybody

- Moderate usage

Blueprints are bad at:

- Scaling

- Avoiding cyclic dependencies

- Generating useful crash reports

- Catching up with everything in your code

- Extending multiplayer and network capabilities

As a general strategy, keep BPs as small as possible and as isolated as possible. The real power of UE is in its code base. By mastering a few of its systems, you will soon be implementing your own modules and relying less on BPs.

Let's say you want to expose an array of bools to BPs through code, where each one serves as a toggle for a certain feature in your actor/component/object. You don't want users to change the size of the array; you only want to list a fixed number of bools and no more. The EditFixedSize property specifier will get the job done. Let's add 5 of them.

Header file declaration

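    USTRUCT(BlueprintType)
    struct FAnnoyingData
    {
        GENERATED_BODY() // required by the Unreal Header Tool for reflected structs

        // EditFixedSize: users can toggle the values but cannot resize the array.
        UPROPERTY(EditAnywhere, EditFixedSize, BlueprintReadOnly)
        TArray<bool> AnnoyingArray;
    };

    // Member of the owning actor/component class. The UPROPERTY line is an
    // assumption: it is what makes the member visible in the BP editor.
    UPROPERTY(EditAnywhere, BlueprintReadOnly)
    FAnnoyingData AnnoyMe;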

cpp file constructor

    AnnoyMe.AnnoyingArray.Add(false);
    AnnoyMe.AnnoyingArray.Add(false);
    AnnoyMe.AnnoyingArray.Add(false);
    AnnoyMe.AnnoyingArray.Add(false);
    AnnoyMe.AnnoyingArray.Add(false);

As a result, the user only gets to toggle the bool values, and the default values are initialized to false. Opening the BP shows the expected array access.

All dandy. Days or weeks later you have to add a sixth element to the array. A single line of code:

    AnnoyMe.AnnoyingArray.Add(false);

You compile and open your BP: only 5 elements listed!

Maybe one of these will help:

- rebuild/delete binaries

- rebuild/delete intermediate folders

- recreate the BP, copy all values... too much work

No luck. A 1-minute task is turning into 10 or 30 minutes.

Thinking like an Epic engineer helps. How did we screw that up?

I tried this: remove AnnoyingArray from the struct by commenting it out, and comment out all references to it in the cpp file.

    USTRUCT(BlueprintType)
    struct FAnnoyingData
    {
        GENERATED_BODY() // required by the Unreal Header Tool for reflected structs

        // UPROPERTY(EditAnywhere, EditFixedSize, BlueprintReadOnly) NOTE(dagon): Go away
        // TArray<bool> AnnoyingArray;
    };

Recompile and verify that no array is present in the BP: the new binaries just dumped AnnoyingArray. Good! Time to redo: uncomment the array above and recompile. Voila! 6 elements now :)

The reason: injecting those new array elements into the BP is not fully supported. Even worse, it is not reliable: sometimes it works, sometimes it won't. Our development environments and AAA engines are full of episodes like this, wasting your time.

Now, you say, fix that yourself. Sure, when you are in the middle of implementing or fixing an important feature for your game, with maybe one other colleague and you, and that's all? Sorry, ain't nobody got time for that.

Ultimately:

- Maybe one day you will get time to fix it yourself, create your own engine fork and make everyone else happy.

- Maybe you are lucky: you report your bug to Epic and get the problem fixed in the next version. Or maybe someone at Epic is kind enough to send you the patch in a message, and you recompile the entire engine to include the fix.

Wouldn't we be in a better world if problems like these weren't as frequent? Software quality is not among the top interests of big companies. This is not to blame them, but someone has to address the problem or, at the very least, try hard not to be the person who causes it.