HOWTO: Run the CloudBench orchestrator outside of the cloud (or with multiple tenant networks) - ibmcb/cbtool GitHub Wiki


Problem: During the deployment of a Virtual Application (VApp), the CB orchestrator node will briefly ssh into the VMs, as explained in detail here.

- This might become a problem when one aims to run the CB orchestrator node outside of the Cloud. If VMs do not have an IP address that is accessible to connections initiated from the outside, then the VApp deployment cannot be completed.

- Please note that this is an issue only for the VApp's initial deployment. Once deployed, VMs within an intra-Cloud (i.e., "tenant") network can still push/pull data to/from CB's Object Store, Metric Store, and Log Store (short explanation).

- An obvious question would be: why doesn't CB make use of Cloud-init? The answer: historically, Cloud-init was not supported by every cloud (we are willing to concede that this is no longer the case in 2017), and we wanted to keep CB compatible with as many clouds as possible. An additional point to consider: if we adopted Cloud-init, with some mechanism to notify the orchestrator when the Virtual Application deployment scripts have finished executing on the VMs (probably through Pub/Sub), new and more complex failure modes would have to be taken into account.

- Please note that the above problem can be restated as: how do we deploy Virtual Applications in multiple networks, other than the one currently occupied by the CB orchestrator?
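To check whether a given VM is affected, one can try to open a TCP connection to the VM's SSH port from the orchestrator host. The sketch below is a hypothetical helper (`ssh_port_reachable` is not part of CB). It assumes SSH listens on the default port 22 and checks reachability only, not authentication.

```python
import socket


def ssh_port_reachable(host: str, port: int = 22, timeout: float = 3.0) -> bool:
    """Return True if this machine can open a TCP connection to host:port.

    Hypothetical helper (not part of CB): it only checks that a connection
    initiated from here is accepted; it does not log in over SSH.
    """
    try:
        # create_connection resolves the name and tries each returned address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, unreachable networks, DNS failures and timeouts
        # are all OSError subclasses.
        return False


# Example (placeholder tenant-network address of a VM):
# ssh_port_reachable("10.0.0.5")
```

Running this on the orchestrator against a VM's tenant address typically returns False when that address sits behind a tenant router. That is the situation the options below address.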


Here are five potential solutions to the problem. Each solution comes with a (very brief) list of pros and cons, a diagram, and the CB attributes that need to be set in your private configuration file.

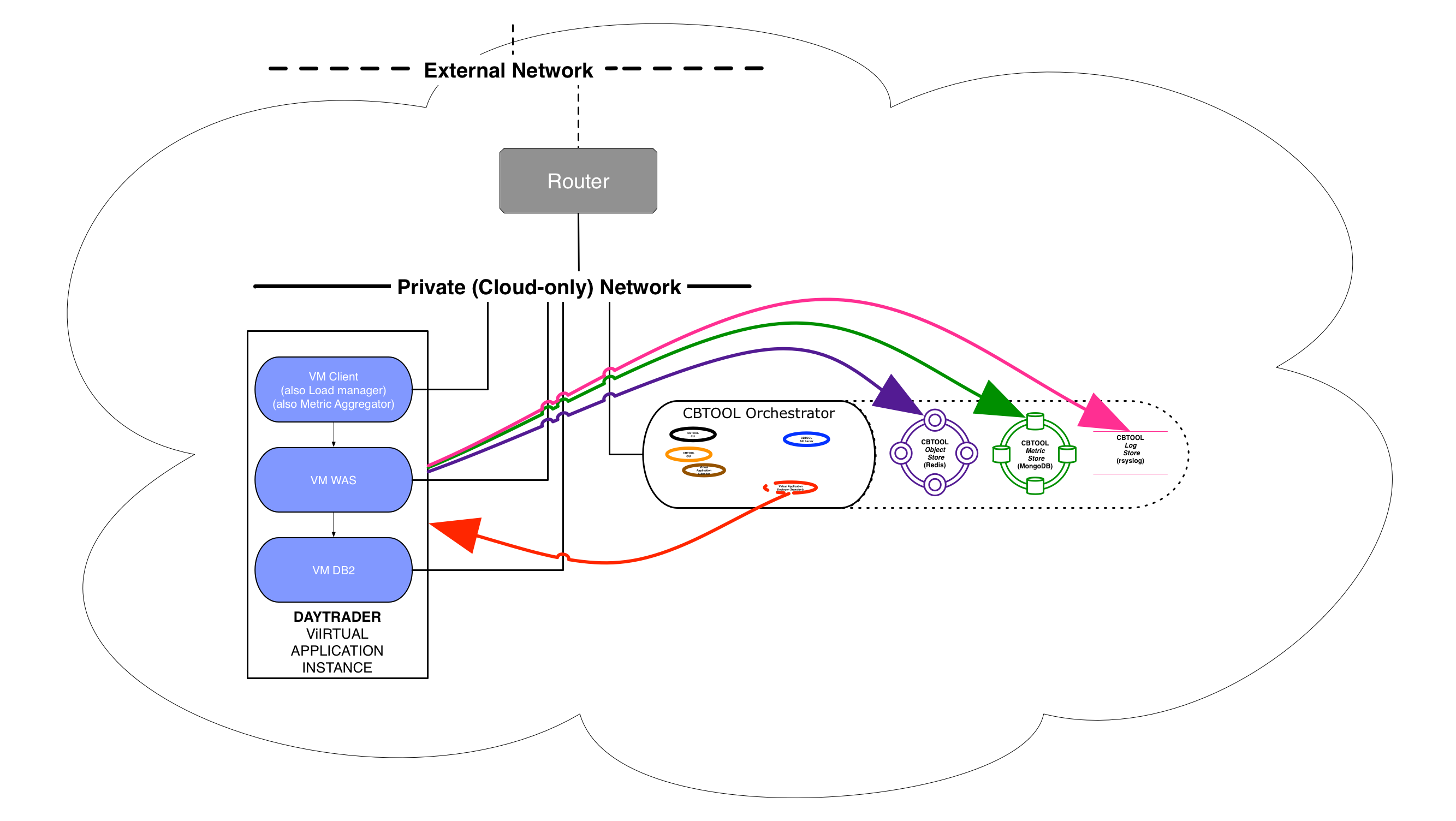

- Option 0: Sidestep the problem by running the CB Orchestrator directly in the Cloud:

- Comment: In a small cloud, the CB Orchestrator might impact the results due to its own resource requirements.

- Pros: Simple and robust setup, probably the highest network performance.

- Cons: Does not really solve the problem of deploying workloads in multiple networks.

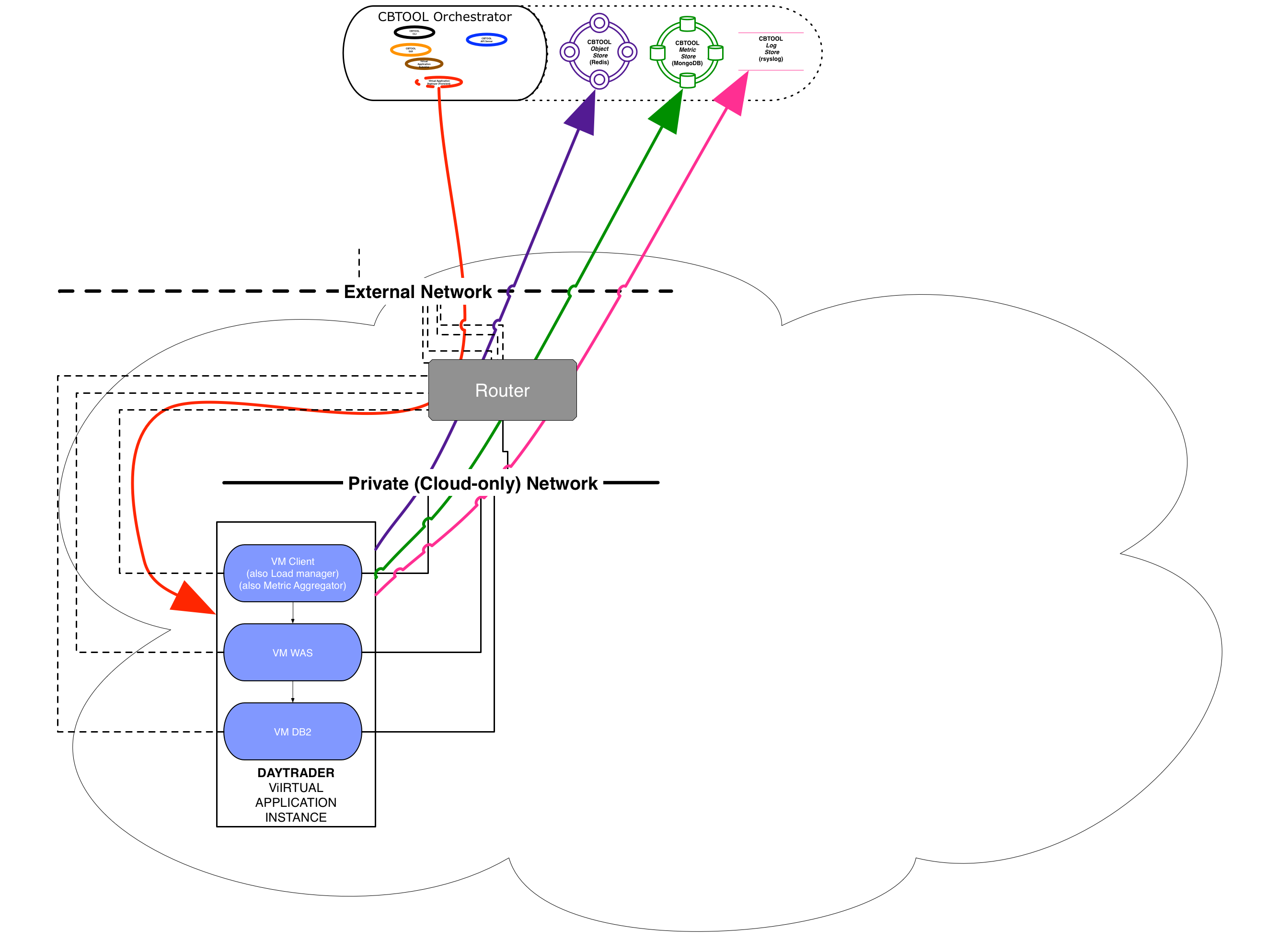

- Option 1: Flat or Provider Network

- Comment: Just have the VMs connected to networks that, albeit different, are reachable through one or more routers.

- Pros: Simple and robust setup.

- Cons: Each VM requires an IP address in the external network. Typically, no more than a few dozen (up to a few hundred) addresses would be available in a private cloud (this is entirely different for a public cloud, where each VM has both a public and a private address).

- Configuration: In case of a Flat or Provider Network:

```
[VM_DEFAULTS]
PROV_NETNAME = <flat network name>
RUN_NETNAME = <flat network name>
```

In case of Floating IPs (OpenStack-specific):

```
[VM_DEFAULTS]
USE_FLOATING_IP = $True
```
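If the private configuration file is generated by a script, the two Option 1 variants can be rendered as shown here. This is a minimal sketch using Python's standard `configparser`. `option1_fragment` is a hypothetical helper, and it assumes only the `[VM_DEFAULTS]` attributes listed above need to be written.

```python
import configparser
import io
from typing import Optional


def option1_fragment(flat_network: Optional[str] = None, floating_ip: bool = False) -> str:
    """Render the [VM_DEFAULTS] fragment for Option 1 (hypothetical helper, not part of CB).

    Pass either the name of a flat/provider network, which is then used for both
    provisioning (PROV_NETNAME) and run-time (RUN_NETNAME) traffic, or
    floating_ip=True for the OpenStack floating-IP variant, but not both.
    """
    if (flat_network is not None) == floating_ip:
        raise ValueError("choose either a flat network name or floating IPs")
    # interpolation=None: write values verbatim (no '%' expansion).
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.optionxform = str  # keep CB's upper-case attribute names as written
    if flat_network is not None:
        cfg["VM_DEFAULTS"] = {"PROV_NETNAME": flat_network, "RUN_NETNAME": flat_network}
    else:
        cfg["VM_DEFAULTS"] = {"USE_FLOATING_IP": "$True"}
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(option1_fragment(flat_network="public"))  # "public" is a placeholder name
```

Setting `optionxform = str` matters here because `configparser` lowercases option names by default.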


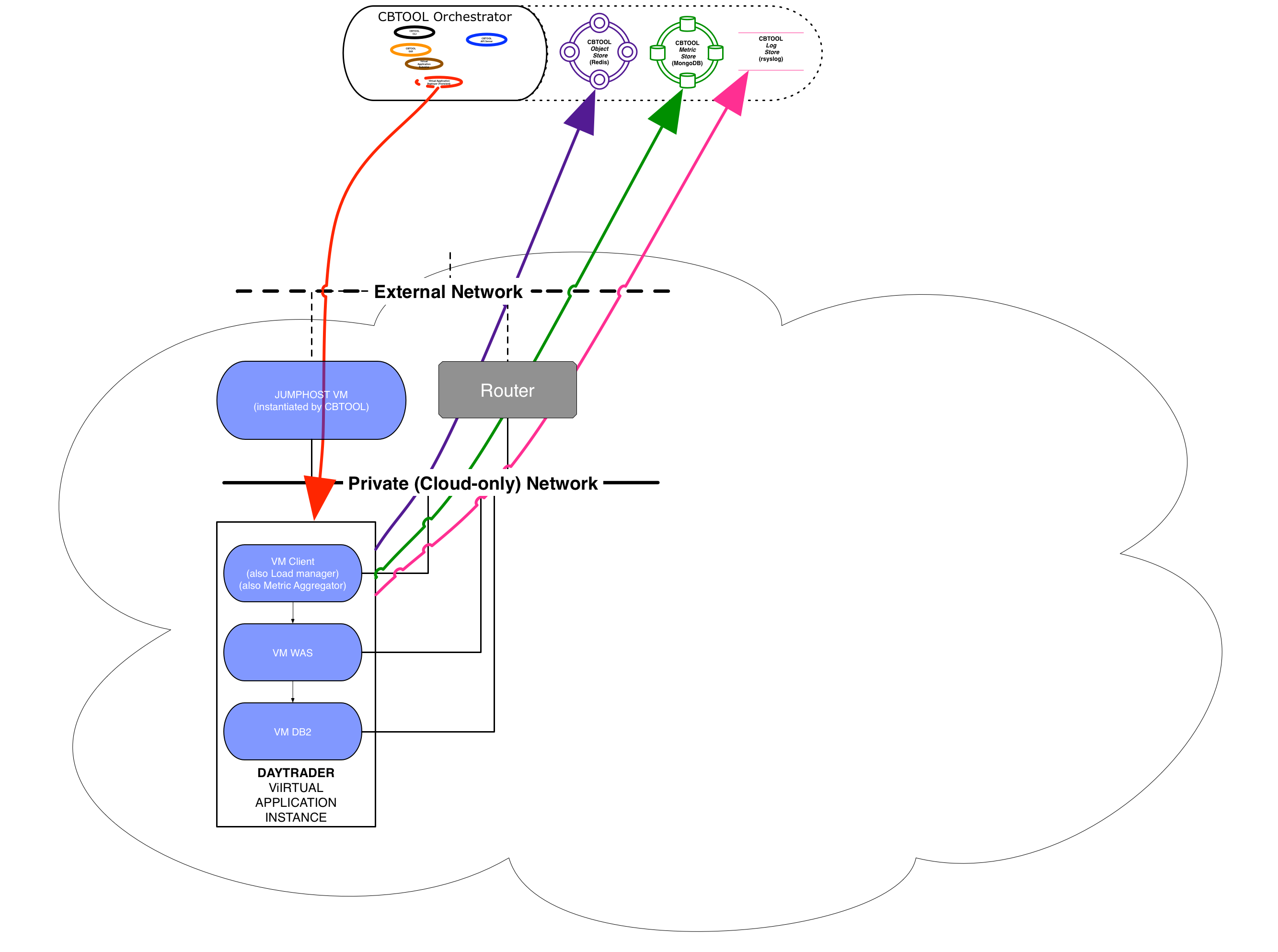

- Option 2: Jumphost (Jumpbox)

- Comment: CB can automatically create a Jumphost/Jumpbox, in the form of a "tiny" VM that is connected to all networks on the cloud.

- Pros: Simple (but not particularly robust) setup.

- Cons: The Jumphost might become a bottleneck, depending on the number of simultaneous deployments.

- Configuration:

```
[VM_DEFAULTS]
USE_JUMPHOST = $True
```

Note: This will automatically set CREATE_JUMPHOST = $True.
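Conceptually, the Jumphost relays the orchestrator's SSH sessions into tenant networks. The same hop can be reproduced by hand, for example to debug a deployment, with OpenSSH's `-J` (ProxyJump) option. The sketch assumes a login user named `cbuser` and an SSH key that both hosts accept. `ssh_via_jumphost` is hypothetical and not necessarily how CB itself connects.

```python
import shlex
from typing import List


def ssh_via_jumphost(vm_ip: str, jumphost: str, user: str = "cbuser",
                     command: str = "uptime") -> List[str]:
    """Build an OpenSSH command that reaches a tenant-network VM through a jumphost.

    Hypothetical helper: user and command are placeholders, and the SSH key is
    assumed to be available to both hops (e.g., via ssh-agent or ~/.ssh/config).
    """
    return [
        "ssh",
        # -J (ProxyJump, OpenSSH 7.3+) first connects to the jumphost, which then
        # forwards the TCP connection to the VM's tenant-network address.
        "-J", f"{user}@{jumphost}",
        f"{user}@{vm_ip}",
        command,
    ]


if __name__ == "__main__":
    # 203.0.113.10: jumphost's externally reachable address; 10.0.0.5: VM's
    # tenant-network address (both placeholders).
    print(shlex.join(ssh_via_jumphost("10.0.0.5", "203.0.113.10")))
    # prints: ssh -J cbuser@203.0.113.10 cbuser@10.0.0.5 uptime
```

Every session funnels through the single jumphost. This is why it can become a bottleneck when many VApps are deployed simultaneously.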


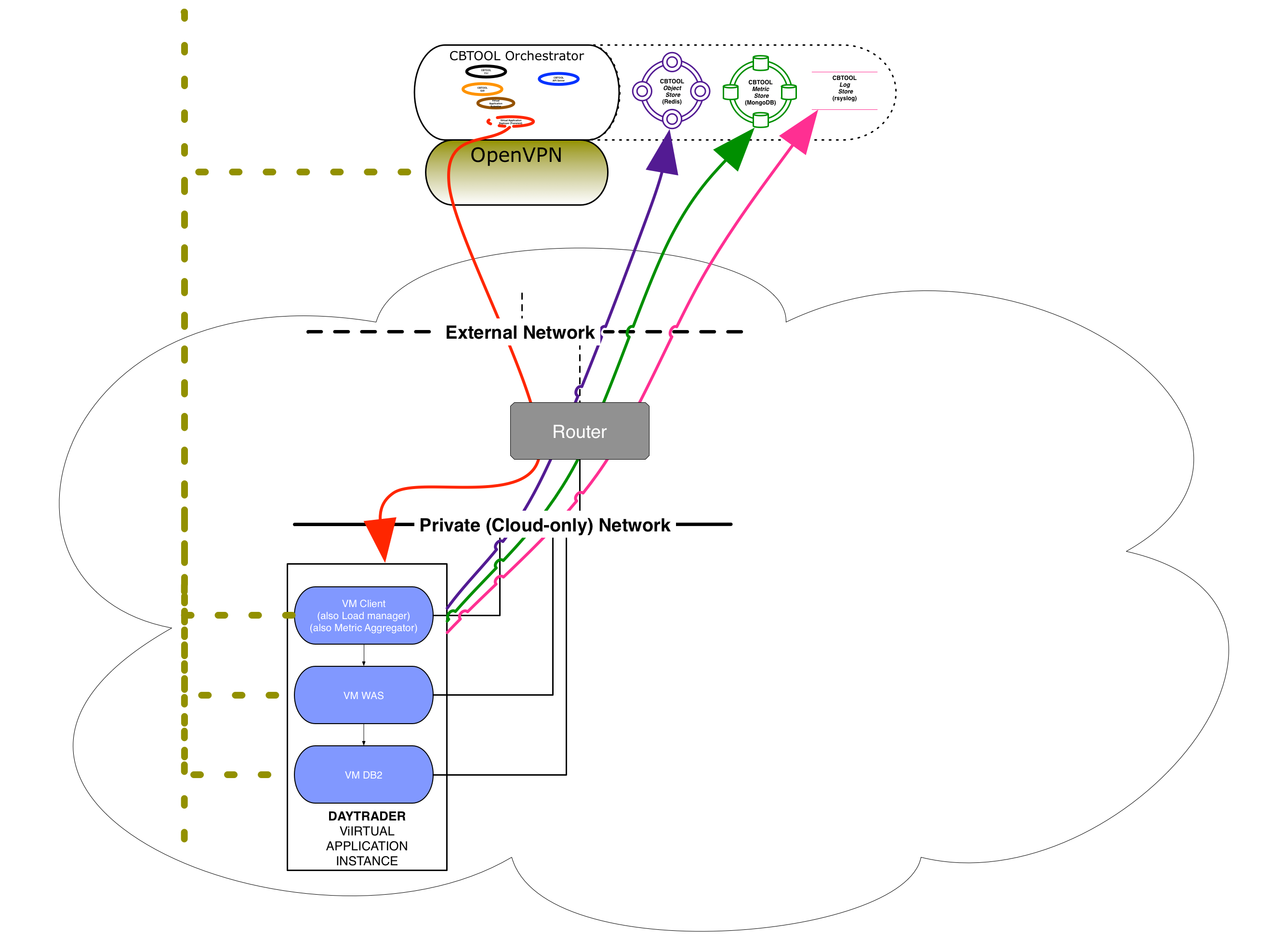

- Option 3: CB's VPN server

- Comment: CB can automatically start an OpenVPN server, and then use cloud-init to have the VMs automatically connect to the VPN network and publish their VPN-reachable IP address.

- Pros: Single IP address space for multiple networks.

- Cons: Complex setup. Relies on VMs publishing messages back to the orchestrator in a timely fashion.

- Configuration (detailed instructions):

```
[VM_DEFAULTS]
USE_VPN_IP = $True
VPN_ONLY = $True
USERDATA = $True
```

Note 1: This will automatically set START_SERVER = $True in the Global Object [VPN].

Note 2: With this configuration, the Orchestrator will reach into the attached VMs through the VPN network, but the VMs will establish communication back to the Orchestrator (e.g., Object Store, Metric Store) through the cloud's L3 network (e.g., OpenStack Neutron L3 agents).

or

```
[VM_DEFAULTS]
USE_VPN_IP = $True
VPN_ONLY = $False
USERDATA = $True
```

Note 1: This will automatically set START_SERVER = $True in the Global Object [VPN].

Note 2: With this configuration, ALL communication between the VMs and the Orchestrator will be performed through the VPN network.
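With the VPN options, each VM has to determine which of its addresses is reachable over the VPN before publishing it. One common approach, assumed here and not necessarily what CB's userdata scripts do, is to ask the kernel which source address it would use to reach the orchestrator's VPN-side address. Connecting a UDP socket selects a route without sending any packet. The helper name and the `10.8.0.1` address are placeholders, and only IPv4 is handled.

```python
import socket


def local_ip_towards(destination_ip: str, probe_port: int = 9) -> str:
    """Return the local source address the kernel would use to reach destination_ip.

    Hypothetical helper (IPv4 only): connecting a UDP socket only selects a
    route and a source address; no packet is sent.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.connect((destination_ip, probe_port))
        return sock.getsockname()[0]


if __name__ == "__main__":
    # Placeholder: the orchestrator's address on the VPN (e.g., the OpenVPN
    # server's tunnel IP). With the VPN up, the route goes through the tunnel
    # interface, so the result is this VM's VPN address.
    orchestrator_vpn_ip = "10.8.0.1"
    try:
        print("VPN-reachable address:", local_ip_towards(orchestrator_vpn_ip))
    except OSError as exc:  # e.g., no route yet because the VPN is not up
        print("no route to", orchestrator_vpn_ip, "-", exc)
```

If the VM publishes its address before the tunnel is up, it advertises the wrong interface (or none). This is one concrete form of the "timely fashion" risk listed under Cons.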


- Additional option (OpenStack-specific; many thanks to Joe Talerico for coming up with this elegant solution): make the Orchestrator part of the Neutron network.

- On the CloudBench host, install ovs and neutron-openvswitch-agent. For the configuration files, simply copy over the Neutron configuration files from one of the compute nodes.

- Start the ovs and neutron-openvswitch-agent services.

- On the OpenStack Controller, where `hostid` is the hostname of the CB orchestrator and `netid` is the network to attach to (in my example, `private`):

```
neutron port-create --name rook --binding:host_id=$hostid $netid
```

- On the CB orchestrator, where `iface-id` is the port ID reported by Neutron, `attached-mac` is the MAC address Neutron generated for that port, and `port` is a name of your choice (the MAC and ID below are from my example; substitute the ones Neutron reported):

```
port=cbport  # whatever you want to name it
ovs-vsctl -- --may-exist add-port br-int $port \
  -- set Interface $port type=internal \
  -- set Interface $port external-ids:iface-status=active \
  -- set Interface $port external-ids:attached-mac=fa:16:3e:5c:7f:91 \
  -- set Interface $port external-ids:iface-id=61463840-f3a3-4b1f-9102-6d2c7d8524bf
```

- Change the MAC address of the cbport interface to match the MAC that Neutron generated for that port.

- Run dhclient on the cbport interface.
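For scripting, the orchestrator-side steps above can be collected in one place. The sketch below only builds the command lines and does not run them. `orchestrator_port_commands` is a hypothetical helper, and using iproute2's `ip link set dev ... address` for the MAC change is an assumption about how to perform that step.

```python
import shlex
from typing import List


def orchestrator_port_commands(port: str, iface_id: str, mac: str) -> List[List[str]]:
    """Build (but do not run) the orchestrator-side commands as argv lists.

    Hypothetical helper: iface_id and mac must be the port ID and MAC address
    reported by `neutron port-create`; port is any local name (e.g., cbport).
    """
    return [
        # Plug an internal OVS port into br-int and tag it with the Neutron
        # port's identity, so the local neutron-openvswitch-agent can wire it
        # into the tenant network.
        ["ovs-vsctl", "--", "--may-exist", "add-port", "br-int", port,
         "--", "set", "Interface", port, "type=internal",
         "--", "set", "Interface", port, "external-ids:iface-status=active",
         "--", "set", "Interface", port, f"external-ids:attached-mac={mac}",
         "--", "set", "Interface", port, f"external-ids:iface-id={iface_id}"],
        # Give the interface the MAC that Neutron generated (assumes iproute2).
        ["ip", "link", "set", "dev", port, "address", mac],
        # Request the port's fixed IP from Neutron's DHCP service.
        ["dhclient", port],
    ]


if __name__ == "__main__":
    # Example values taken from the ovs-vsctl command above.
    for argv in orchestrator_port_commands(
        "cbport", "61463840-f3a3-4b1f-9102-6d2c7d8524bf", "fa:16:3e:5c:7f:91"
    ):
        print(shlex.join(argv))
```

Each printed line can be run in a root shell on the orchestrator. Alternatively, the lists can be passed to `subprocess.run(argv, check=True)` so that a failing step stops the sequence.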
