Hypershift: Power worker nodes via Agent based installation

$ hypershift install

Make sure the default storage class uses `WaitForFirstConsumer` as its VOLUMEBINDINGMODE. This is required because some of the pods deployed by the operator use multiple PVs, which can cause node affinity issues if the cluster contains nodes in multiple zones.

# In this example the default storage class does not use the `WaitForFirstConsumer` VOLUMEBINDINGMODE, so create a new storage class and set it as the default

$ oc get sc -A

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

ibmc-vpc-block-10iops-tier (default) vpc.block.csi.ibm.io Delete Immediate true 10d

ibmc-vpc-block-10iops-tier-us-east-1 vpc.block.csi.ibm.io Delete WaitForFirstConsumer true 5d11h

# e.g.: copied the first sc, changed its VOLUMEBINDINGMODE, and made the copy the default after creation (the 3rd entry below)
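The copy-and-modify step described in the comment above could be scripted roughly as follows. This is a minimal sketch, not necessarily the procedure the author used: the helper name `make_wffc_copy` and its `sed` patterns are assumptions, and they presume that the manifest exported by `oc get sc <name> -o yaml` keeps `name:` at two-space indentation under `metadata`.

```shell
# Hypothetical helper: read a StorageClass manifest on stdin and emit a copy
# named $1 whose volumeBindingMode is WaitForFirstConsumer. Server-generated
# fields are dropped so the result can be passed to `oc create`.
make_wffc_copy() {
  new_name="$1"
  sed -e "s/^  name: .*/  name: ${new_name}/" \
      -e 's/^volumeBindingMode: .*/volumeBindingMode: WaitForFirstConsumer/' \
      -e '/^  uid: /d' \
      -e '/^  resourceVersion: /d' \
      -e '/^  creationTimestamp: /d'
}

# Example usage against a live cluster (commented out so that sourcing this
# snippet has no side effects):
#   oc get sc ibmc-vpc-block-10iops-tier -o yaml \
#     | make_wffc_copy ibmc-vpc-block-10iops-tier-wait-for-first-consumer \
#     | oc create -f -
#   # Unset the default flag on the original class (standard Kubernetes annotation).
#   oc patch storageclass ibmc-vpc-block-10iops-tier \
#     -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}'
```

Because the original class is the default, the exported manifest already carries the default-class annotation, so the copy is created as a default and only the original needs to be patched.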

$ oc get sc -A

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

ibmc-vpc-block-10iops-tier vpc.block.csi.ibm.io Delete Immediate true 10d

ibmc-vpc-block-10iops-tier-us-east-1 vpc.block.csi.ibm.io Delete WaitForFirstConsumer true 5d11h

ibmc-vpc-block-10iops-tier-wait-for-first-consumer (default) vpc.block.csi.ibm.io Delete WaitForFirstConsumer true 25s

The assisted-service-operator requires the BMH (BareMetalHost) CRD. If the OpenShift cluster does not already contain it, install the CRD using the following instructions.

$ oc get crd baremetalhosts.metal3.io

NAME CREATED AT

baremetalhosts.metal3.io 2023-01-27T14:01:07Z

# Install the following CRD if the above command does not return any entry

# IBM Cloud's ROKS solution doesn't have this CRD, hence this step is mandatory if your management cluster is on ROKS
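The check described above (apply the CRD only when `oc get crd` finds nothing) can be wrapped in a small helper. This is a sketch under assumptions: the function name `ensure_bmh_crd` and the variable `BMH_CRD_URL` are hypothetical, while the URL itself is the one used by the install command in this guide.

```shell
# URL taken from the CRD install command in this guide.
BMH_CRD_URL="https://raw.githubusercontent.com/openshift/assisted-service/master/hack/crds/metal3.io_baremetalhosts.yaml"

# Hypothetical helper: apply the BareMetalHost CRD only when it is missing.
ensure_bmh_crd() {
  if oc get crd baremetalhosts.metal3.io >/dev/null 2>&1; then
    echo "baremetalhosts.metal3.io already present"
  else
    oc apply -f "${BMH_CRD_URL}"
  fi
}
```

Calling `ensure_bmh_crd` on a management cluster that already has the CRD only prints a message; on a cluster without it, such as ROKS, it runs the same `oc apply` as the manual step.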

$ oc apply -f https://raw.githubusercontent.com/openshift/assisted-service/master/hack/crds/metal3.io_baremetalhosts.yaml

# Install the tasty tool

$ go install github.com/karmab/tasty@latest

$ tasty install assisted-service-operator -w

$ tasty install hive-operator -w

The following patch changes the assisted installer agent container images to point to ppc64le images.

$ oc get csv -n assisted-installer

NAME DISPLAY VERSION REPLACES PHASE

assisted-service-operator.v0.5.55 Infrastructure Operator for Red Hat OpenShift 0.5.55 assisted-service-operator.v0.5.54 Succeeded

$ oc edit csv assisted-service-operator.v0.5.55 -n assisted-installer

change

- name: AGENT_IMAGE
  value: quay.io/edge-infrastructure/assisted-installer-agent@sha256:d1bdcf41b8dec2f913253d3afb692e10fdfce5b75a7914c2d7795591db08cda3
- name: CONTROLLER_IMAGE
  value: quay.io/edge-infrastructure/assisted-installer-controller@sha256:cb2964e84e4857b17f0ea6eff152e78e9e03ac618523937d6058b9f89b74f9a8
- name: INSTALLER_IMAGE
  value: quay.io/edge-infrastructure/assisted-installer@sha256:83842a74e410f3005af9d1f5649504bb28b1f1cf64cbf680c67ef3c323160ee2

to

- name: AGENT_IMAGE
  value: quay.io/powercloud/assisted-installer-agent:latest
- name: CONTROLLER_IMAGE
  value: quay.io/powercloud/assisted-installer-controller:latest
- name: INSTALLER_IMAGE
  value: quay.io/powercloud/assisted-installer:latest

# This storage class is created in the previous step, update with the appropriate one.

export STORAGE_CLASS="ibmc-vpc-block-10iops-tier-wait-for-first-consumer"

export DB_VOLUME_SIZE="10Gi"

export FS_VOLUME_SIZE="10Gi"

export OCP_VERSION="4.12.0"

export OCP_MAJMIN=${OCP_VERSION%.*}

export ARCH="ppc64le"

export OCP_RELEASE_VERSION=$(curl -s https://mirror.openshift.com/pub/openshift-v4/${ARCH}/clients/ocp/${OCP_VERSION}/release.txt | awk '/machine-os / { print $2 }')

export ISO_URL="https://mirror.openshift.com/pub/openshift-v4/${ARCH}/dependencies/rhcos/${OCP_MAJMIN}/${OCP_VERSION}/rhcos-${OCP_VERSION}-${ARCH}-live.${ARCH}.iso"

export ROOT_FS_URL="https://mirror.openshift.com/pub/openshift-v4/${ARCH}/dependencies/rhcos/${OCP_MAJMIN}/${OCP_VERSION}/rhcos-${OCP_VERSION}-${ARCH}-live-rootfs.${ARCH}.img"
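The exports above derive the major.minor version with the parameter expansion `${OCP_VERSION%.*}`, which strips the shortest trailing `.<patch>` suffix, and then assemble the mirror URLs from it. The same derivation is sketched below as a function so it can be tried with other versions or architectures. The name `rhcos_urls` is hypothetical, and the sketch assumes the mirror keeps the directory layout shown in this guide.

```shell
# Hypothetical helper: print the live ISO URL and the rootfs URL for a given
# OpenShift version and CPU architecture, using the same mirror layout as the
# exports in this guide.
rhcos_urls() {
  version="$1"            # e.g. 4.12.0
  arch="$2"               # e.g. ppc64le
  majmin="${version%.*}"  # drop the trailing ".<patch>": 4.12.0 -> 4.12
  base="https://mirror.openshift.com/pub/openshift-v4/${arch}/dependencies/rhcos/${majmin}/${version}"
  echo "${base}/rhcos-${version}-${arch}-live.${arch}.iso"
  echo "${base}/rhcos-${version}-${arch}-live-rootfs.${arch}.img"
}

# Example: rhcos_urls 4.12.0 ppc64le
```

Before applying the AgentServiceConfig it may be worth confirming that both URLs resolve, for example with `curl -fsI <url>`.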

envsubst <<"EOF" | oc apply -f -
apiVersion: agent-install.openshift.io/v1beta1
kind: AgentServiceConfig
metadata:
  name: agent
spec:
  databaseStorage:
    storageClassName: ${STORAGE_CLASS}
    accessModes:
    - ReadWriteOnce
    resources:
      requests:
        storage: ${DB_VOLUME_SIZE}
  filesystemStorage:
    storageClassName: ${STORAGE_CLASS}
    accessModes:
    - ReadWriteOnce
    resources:
      requests:
        storage: ${FS_VOLUME_SIZE}
  osImages:
    - openshiftVersion: "${OCP_VERSION}"
      version: "${OCP_RELEASE_VERSION}"
      url: "${ISO_URL}"
      rootFSUrl: "${ROOT_FS_URL}"
      cpuArchitecture: "${ARCH}"
EOF

Run the create cluster command with the render option and dump the content to the cluster-agent.yaml file

#!/usr/bin/env bash

export CLUSTERS_NAMESPACE="clusters"

export HOSTED_CLUSTER_NAME="example"

export HOSTED_CONTROL_PLANE_NAMESPACE="${CLUSTERS_NAMESPACE}-${HOSTED_CLUSTER_NAME}"

# domain managed via IBM CIS

export BASEDOMAIN="hypershift-ppc64le.com"

export PULL_SECRET_FILE=${HOME}/.hypershift/pull_secret.txt

export OCP_RELEASE=4.12.0-multi

export MACHINE_CIDR=192.168.122.0/24

# Typically the namespace is created by the hypershift-operator

# but agent cluster creation generates a capi-provider role that

# needs the namespace to already exist

oc create ns ${HOSTED_CONTROL_PLANE_NAMESPACE}

hypershift create cluster agent \
    --name=${HOSTED_CLUSTER_NAME} \
    --pull-secret="${PULL_SECRET_FILE}" \
    --agent-namespace=${HOSTED_CONTROL_PLANE_NAMESPACE} \
    --base-domain=${BASEDOMAIN} \
    --api-server-address=api.${HOSTED_CLUSTER_NAME}.${BASEDOMAIN} \
    --ssh-key ${HOME}/.ssh/id_rsa.pub \
    --release-image=quay.io/openshift-release-dev/ocp-release:${OCP_RELEASE} \
    --render > cluster-agent.yaml

Change the servicePublishingStrategy to LoadBalancer and Route, because the ROKS cluster is deployed in a cloud environment where its nodes are on a private network and cannot be reached directly by the workers.

Note: If the management cluster is on the same network as the workers, and the workers can reach the nodes in the management cluster, no changes are needed.

- service: APIServer
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: NodePort
- service: OAuthServer
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: NodePort
- service: OIDC
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: None
- service: Konnectivity
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: NodePort
- service: Ignition
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: NodePort
- service: OVNSbDb
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: NodePort

to

- service: APIServer
  servicePublishingStrategy:
    type: LoadBalancer
- service: OAuthServer
  servicePublishingStrategy:
    type: Route
- service: OIDC
  servicePublishingStrategy:
    type: None
- service: Konnectivity
  servicePublishingStrategy:
    type: Route
- service: Ignition
  servicePublishingStrategy:
    type: Route
- service: OVNSbDb
  servicePublishingStrategy:
    type: Route

Note:

Nightly builds require ImageContentSourcePolicy to pull certain images on the hosted cluster. Please add imageContentSources under HostedCluster spec:

imageContentSources:
- mirrors:
  - brew.registry.redhat.io
  source: registry.redhat.io
- mirrors:
  - brew.registry.redhat.io
  source: registry.stage.redhat.io
- mirrors:
  - brew.registry.redhat.io
  source: registry-proxy.engineering.redhat.com

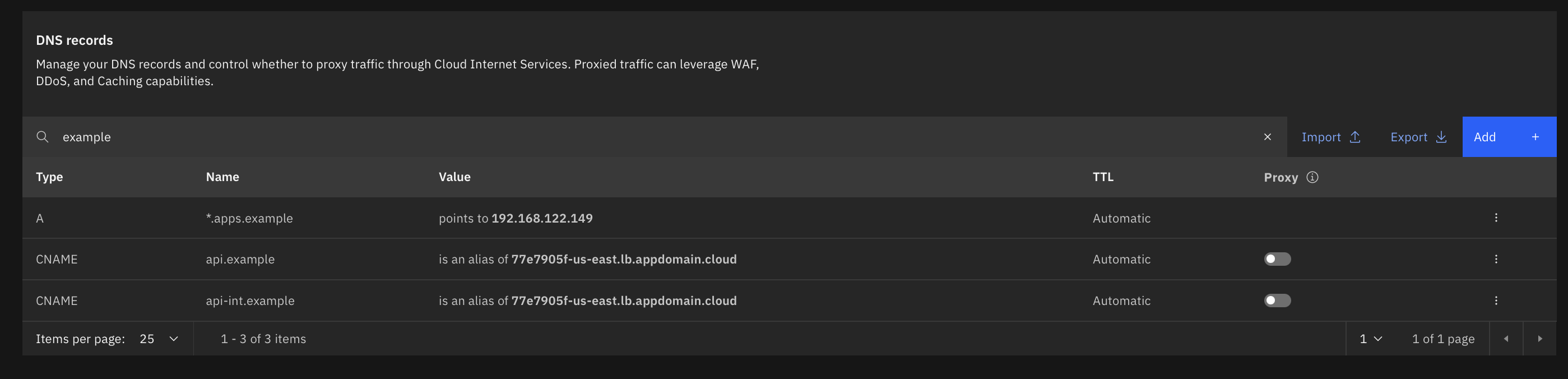

$ oc apply -f cluster-agent.yaml

Once the hosted cluster pods are deployed, list the Kubernetes API server service and create the DNS entries (for this use case, the entries are created in IBM Cloud CIS).

$ oc get svc kube-apiserver -n clusters-example

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-apiserver LoadBalancer 172.21.75.9 77e7905f-us-east.lb.appdomain.cloud 6443:32729/TCP 6h50m
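To avoid copying the EXTERNAL-IP column by hand, the load balancer hostname can be read from the service status with a JSONPath query. The sketch below assumes the load balancer reports a hostname (as in the output above) rather than an IP address; the function name `apiserver_lb_hostname` is hypothetical, and its default namespace is the one used in this guide.

```shell
# Hypothetical helper: print the external hostname of the hosted cluster's
# kube-apiserver LoadBalancer service. The namespace defaults to the hosted
# control plane namespace used in this guide.
apiserver_lb_hostname() {
  ns="${1:-clusters-example}"
  oc get svc kube-apiserver -n "${ns}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Example: LB_HOST=$(apiserver_lb_hostname clusters-example)
```

The printed value is the target for the DNS entry of the API server address passed to `hypershift create cluster agent` (`api.example.hypershift-ppc64le.com` in this guide).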

Note: Add the `*.apps.example` entry later in this doc, once the worker IP address is available.

export SSH_PUB_KEY=$(cat $HOME/.ssh/id_rsa.pub)

export CLUSTERS_NAMESPACE="clusters"

export HOSTED_CLUSTER_NAME="example"

export HOSTED_CONTROL_PLANE_NAMESPACE="${CLUSTERS_NAMESPACE}-${HOSTED_CLUSTER_NAME}"

export ARCH="ppc64le"

envsubst <<"EOF" | oc apply -f -
apiVersion: agent-install.openshift.io/v1beta1
kind: InfraEnv
metadata:
  name: ${HOSTED_CLUSTER_NAME}
  namespace: ${HOSTED_CONTROL_PLANE_NAMESPACE}
spec:
  cpuArchitecture: $ARCH
  pullSecretRef:
    name: pull-secret
  sshAuthorizedKey: ${SSH_PUB_KEY}
EOF

Download the ISO from the link below:

$ oc -n ${HOSTED_CONTROL_PLANE_NAMESPACE} get InfraEnv ${HOSTED_CLUSTER_NAME} -ojsonpath="{.status.isoDownloadURL}"

There are different ways of creating machines, such as PowerVM and KVM. To keep this guide simple, the KVM hypervisor is used.

$ virt-install --name agent-ibmcloud-1 --memory 16384 --vcpus 4 --disk size=120 --cdrom /var/lib/libvirt/images/a2a04275-2ca0-4b82-81fa-72126076f6fa-discovery.iso --os-variant rhl8.0 --graphics none --console pty,target_type=serial

$ oc get agents -n ${HOSTED_CONTROL_PLANE_NAMESPACE} -o=wide

NAMESPACE NAME CLUSTER APPROVED ROLE STAGE HOSTNAME REQUESTED HOSTNAME

clusters-example 5e65c2be-ea88-3796-533f-4a4b5c2e420b false auto-assign 52-54-00-ee-66-ff

$ oc -n ${HOSTED_CONTROL_PLANE_NAMESPACE} patch agent 5e65c2be-ea88-3796-533f-4a4b5c2e420b -p '{"spec":{"installation_disk_id":"/dev/vda","approved":true,"hostname":"worker-0.hypershift-ppc64le.com"}}' --type merge

$ oc -n clusters scale nodepool example --replicas 1

$ oc get agents -A -o=wide

NAMESPACE NAME CLUSTER APPROVED ROLE STAGE HOSTNAME REQUESTED HOSTNAME

clusters-example 5e65c2be-ea88-3796-533f-4a4b5c2e420b example true worker Done 52-54-00-ee-66-ff worker-0.hypershift-ppc64le.com

$ oc -n clusters-example get agent -o jsonpath='{range .items[*]}BMH: {@.metadata.labels.agent-install\.openshift\.io/bmh} Agent: {@.metadata.name} State: {@.status.debugInfo.state}{"\n"}{end}'

BMH: Agent: 5e65c2be-ea88-3796-533f-4a4b5c2e420b State: added-to-existing-cluster

$ oc get clusters -A -o=wide

NAMESPACE NAME PHASE AGE VERSION

clusters-example example-znwfk Provisioned 6h43m

$ oc get machines -o=wide -A

NAMESPACE NAME CLUSTER NODENAME PROVIDERID PHASE AGE VERSION

clusters-example example-656c864db8-8zgzv example-znwfk worker-0.hypershift-ppc64le.com agent://5e65c2be-ea88-3796-533f-4a4b5c2e420b Running 6h35m 4.12.0

$ oc get agentmachines -A

NAMESPACE NAME AGE

clusters-example example-zm2kf 6h35m

$ hypershift create kubeconfig --namespace clusters --name example > example.kubeconfig

$ export KUBECONFIG=example.kubeconfig

$ oc get nodes -o=wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

worker-0.hypershift-ppc64le.com Ready worker 19m v1.25.4+77bec7a 192.168.122.149 <none> Red Hat Enterprise Linux CoreOS 412.86.202301061548-0 (Ootpa) 4.18.0-372.40.1.el8_6.ppc64le cri-o://1.25.1-5.rhaos4.12.git6005903.el8

$ oc get clusterversion

NAME VERSION AVAILABLE PROGRESSING SINCE STATUS

version False True 6h57m Unable to apply 4.12.0: some cluster operators are not available

$ oc get clusteroperators

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

console 4.12.0 True False False 7m33s

csi-snapshot-controller

dns 4.12.0 True False False 21m

image-registry 4.12.0 True False False 21m

ingress 4.12.0 True False False 6h55m

insights 4.12.0 True False False 29m

kube-apiserver 4.12.0 True False False 6h57m

kube-controller-manager 4.12.0 True False False 6h57m

kube-scheduler 4.12.0 True False False 6h57m

kube-storage-version-migrator 4.12.0 True False False 21m

monitoring 4.12.0 True False False 18m

network 4.12.0 True False False 29m

node-tuning 4.12.0 True False False 32m

openshift-apiserver 4.12.0 True False False 6h57m

openshift-controller-manager 4.12.0 True False False 6h57m

openshift-samples 4.12.0 True False False 20m

operator-lifecycle-manager 4.12.0 True False False 6h56m

operator-lifecycle-manager-catalog 4.12.0 True False False 6h57m

operator-lifecycle-manager-packageserver 4.12.0 True False False 6h57m

service-ca 4.12.0 True False False 29m

storage

Please refer to the hypershift doc for detailed instructions.
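When `oc get clusterversion` reports that some cluster operators are not available, as in the output above, it can help to list only the operators that are not yet ready. The sketch below is an assumption-labelled helper rather than part of the original procedure: the function name `unavailable_operators` is hypothetical, and it relies on the standard `Available` condition reported by each ClusterOperator.

```shell
# Hypothetical helper: list cluster operators whose Available condition is
# not "True", including operators that have not reported any status yet.
# Run it with KUBECONFIG pointing at the hosted cluster.
unavailable_operators() {
  oc get clusteroperators \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.conditions[?(@.type=="Available")].status}{"\n"}{end}' \
    | awk -F '\t' '$2 != "True" { print $1 }'
}

# Example: re-check every 30 seconds until nothing is listed.
#   while [ -n "$(unavailable_operators)" ]; do sleep 30; done
```

Querying the condition through JSONPath avoids parsing the column layout, which shifts when an operator row has an empty VERSION field.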