Agent based Hosted Cluster on PowerVM using MCE with Assisted Service and Hypershift - hypershift-on-power/hack GitHub Wiki

- This document describes the steps to create an agent-based hosted cluster on PowerVM using HyperShift.

- It uses the MultiCluster Engine (MCE) operator from OperatorHub to install the necessary operators, such as assisted-service and HyperShift, along with the other required custom resources.

- Install the MCE operator

- Create AgentServiceConfig

- Create Hosted Control Plane

- Create InfraEnv

- Add agents

- Scale Node Pool

- Confirm cluster is working fine

- Install the latest version of the MultiCluster Engine operator from OperatorHub by following this link.

- If you are sure that the MCE operator currently available in OperatorHub is the latest, install it; otherwise, follow the next instructions to get the latest version of MCE.

- The latest version can be made available by creating a stage catalog source. This requires adding the brew.registry.redhat.io credentials to the cluster pull secret and adding the brew mirror information to /etc/containers/registries.conf on the worker nodes.

- Instructions to update the global pull secret can be found here.

- Once the pull secret is updated, replace the worker nodes with a new set of nodes; the new nodes pick up the updated pull secret.

- Update /etc/containers/registries.conf with the content below on all the worker nodes.

[[registry]]
location = "registry.stage.redhat.io"
insecure = false
blocked = false
mirror-by-digest-only = true
prefix = ""

[[registry.mirror]]
location = "brew.registry.redhat.io"
insecure = false

[[registry]]
location = "registry.redhat.io/multicluster-engine"
insecure = false
blocked = false
mirror-by-digest-only = true
prefix = ""

[[registry.mirror]]
location = "brew.registry.redhat.io/multicluster-engine"
insecure = false

- Reboot the worker nodes.

- Create the stage catalog source:

apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: redhat-operators-stage
  namespace: openshift-marketplace
spec:
  sourceType: grpc
  publisher: redhat
  displayName: Red Hat Operators v4.13 Stage
  image: quay.io/openshift-release-dev/ocp-release-nightly:iib-int-index-art-operators-4.13

- Once the catalog source is created, the latest MCE operator is available to install from OperatorHub.
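The two mirror stanzas added to registries.conf above follow the same pattern, so they can be generated instead of hand-typed on every node. A minimal sketch, assuming a POSIX shell; mirror_stanza and render_brew_mirrors are hypothetical helper names, and copying the result onto the worker nodes is left to your own tooling:

```bash
# Hypothetical helper (not part of any tool): print one registries.conf
# stanza that mirrors SRC to MIRROR, with the same settings as above.
mirror_stanza() {
  local src="$1" mirror="$2"
  cat <<EOF
[[registry]]
location = "${src}"
insecure = false
blocked = false
mirror-by-digest-only = true
prefix = ""

[[registry.mirror]]
location = "${mirror}"
insecure = false

EOF
}

# Print both stanzas used in this guide, in the same order as above.
render_brew_mirrors() {
  mirror_stanza "registry.stage.redhat.io" "brew.registry.redhat.io"
  mirror_stanza "registry.redhat.io/multicluster-engine" \
    "brew.registry.redhat.io/multicluster-engine"
}

# Example:
#   render_brew_mirrors > brew-mirrors.conf
# then append brew-mirrors.conf to /etc/containers/registries.conf on each
# worker, after checking that these locations are not already defined there.
```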

- Once the MCE operator is installed, create an instance of MultiClusterEngine. It can be created from the console while installing the MCE operator.

- Enable the hypershift-preview component in the MultiClusterEngine instance (set enabled: true for the hypershift-preview entry under spec.overrides.components) to install the HyperShift operator and the HostedCluster CRDs.

$ oc edit mce

- The assisted-service operator requires the BareMetalHost (BMH) CRD. If your OpenShift cluster does not already contain it, install it as follows.

# IBM Cloud's ROKS does not include this CRD, so this step is mandatory if you use ROKS as the management cluster.

$ oc apply -f https://raw.githubusercontent.com/openshift/assisted-service/master/hack/crds/metal3.io_baremetalhosts.yaml

- List the ClusterImageSet resources to check whether the release you want to use already has a matching ClusterImageSet.

# List the ClusterImageSets available in the cluster

$ oc get ClusterImageSet

- If there is no ClusterImageSet for the OCP release you want to use, create one as shown below.

# Create a ClusterImageSet for OCP 4.13.0-multi

cat <<EOF | oc create -f -
apiVersion: hive.openshift.io/v1
kind: ClusterImageSet
metadata:
  name: img4.13.0-multi-appsub
spec:
  releaseImage: quay.io/openshift-release-dev/ocp-release:4.13.0-ec.3-multi
EOF

Next, create an AgentServiceConfig custom resource. It tells the operator how much storage the various components, such as the database and the filesystem, need, and which OpenShift versions to maintain. Here is an example for OCP 4.12.0; substitute the correct values for your environment.

export DB_VOLUME_SIZE="10Gi"

export FS_VOLUME_SIZE="10Gi"

export OCP_VERSION="4.12.0"

export OCP_MAJMIN=${OCP_VERSION%.*}

export ARCH="ppc64le"

export OCP_RELEASE_VERSION=$(curl -s https://mirror.openshift.com/pub/openshift-v4/${ARCH}/clients/ocp/${OCP_VERSION}/release.txt | awk '/machine-os / { print $2 }')

export ISO_URL="https://mirror.openshift.com/pub/openshift-v4/${ARCH}/dependencies/rhcos/${OCP_MAJMIN}/${OCP_VERSION}/rhcos-${OCP_VERSION}-${ARCH}-live.${ARCH}.iso"

export ROOT_FS_URL="https://mirror.openshift.com/pub/openshift-v4/${ARCH}/dependencies/rhcos/${OCP_MAJMIN}/${OCP_VERSION}/rhcos-${OCP_VERSION}-${ARCH}-live-rootfs.${ARCH}.img"

envsubst <<"EOF" | oc apply -f -

apiVersion: agent-install.openshift.io/v1beta1
kind: AgentServiceConfig
metadata:
  name: agent
spec:
  databaseStorage:
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: ${DB_VOLUME_SIZE}
  filesystemStorage:
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: ${FS_VOLUME_SIZE}
  osImages:
    - openshiftVersion: "${OCP_VERSION}"
      version: "${OCP_RELEASE_VERSION}"
      url: "${ISO_URL}"
      rootFSUrl: "${ROOT_FS_URL}"
      cpuArchitecture: "${ARCH}"
EOF

- If the stage catalog with the brew mirror was used to install the latest MCE operator, the AgentServiceConfig also needs the brew mirror information. To add it, follow these steps:

- Create a ConfigMap in the namespace where MCE is installed, usually multicluster-engine.

cat <<EOF | oc create -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: mirror-config
  namespace: multicluster-engine # please verify that this namespace is where MCE is installed.
  labels:
    app: assisted-service
data:
  registries.conf: |
    unqualified-search-registries = ["registry.access.redhat.com", "docker.io"]

    [[registry]]
    location = "registry.stage.redhat.io"
    insecure = false
    blocked = false
    mirror-by-digest-only = true
    prefix = ""

    [[registry.mirror]]
    location = "brew.registry.redhat.io"
    insecure = false

    [[registry]]
    location = "registry.redhat.io/multicluster-engine"
    insecure = false
    blocked = false
    mirror-by-digest-only = true
    prefix = ""

    [[registry.mirror]]
    location = "brew.registry.redhat.io/multicluster-engine"
    insecure = false
EOF

- Reference the ConfigMap from the AgentServiceConfig created earlier by running

$ oc edit AgentServiceConfig agent

and adding the field below under spec:

spec:
  mirrorRegistryRef:
    name: mirror-config

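The ISO and root filesystem URLs in the AgentServiceConfig example are derived from OCP_VERSION and ARCH, and a wrong value may only surface later, when assisted-service fails to fetch the image. A minimal sketch that reproduces the same string derivation so the URLs can be checked before applying; the function names are hypothetical and nothing here talks to the cluster:

```bash
# Hypothetical helpers mirroring the variable derivation in the
# AgentServiceConfig example; they only build strings.

# 4.12.0 -> 4.12 (same as ${OCP_VERSION%.*} in the example)
ocp_majmin() {
  printf '%s\n' "${1%.*}"
}

# Directory on mirror.openshift.com for a given version ($1) and arch ($2).
rhcos_base_url() {
  printf 'https://mirror.openshift.com/pub/openshift-v4/%s/dependencies/rhcos/%s/%s\n' \
    "$2" "$(ocp_majmin "$1")" "$1"
}

rhcos_iso_url() {
  printf '%s/rhcos-%s-%s-live.%s.iso\n' "$(rhcos_base_url "$1" "$2")" "$1" "$2" "$2"
}

rhcos_rootfs_url() {
  printf '%s/rhcos-%s-%s-live-rootfs.%s.img\n' "$(rhcos_base_url "$1" "$2")" "$1" "$2" "$2"
}

# Read release.txt on stdin and print the RHCOS build, using the same awk
# expression as the example.
machine_os_version() {
  awk '/machine-os / { print $2 }'
}

# Example pre-checks before applying the AgentServiceConfig:
#   curl -sfI "$(rhcos_iso_url 4.12.0 ppc64le)" >/dev/null && echo "ISO URL reachable"
#   curl -s https://mirror.openshift.com/pub/openshift-v4/ppc64le/clients/ocp/4.12.0/release.txt | machine_os_version
```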

- Build the hypershift binary

git clone https://github.com/openshift/hypershift.git

cd hypershift

make build

- Create the agent cluster

When you create a hosted cluster with the Agent platform, HyperShift installs the Agent CAPI provider in the Hosted Control Plane (HCP) namespace.
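The script below reads the pull secret from PULL_SECRET_FILE, and its comment asks that brew.registry.redhat.io credentials be present when the brew mirror is used. A quick pre-flight check, sketched as a plain text search for the quoted registry key (so a heuristic, not a JSON parse); pull_secret_has_registry is a hypothetical helper name:

```bash
# Hypothetical pre-flight check: succeed only if the pull secret file is
# readable and contains the registry as a quoted key, e.g. "brew.registry.redhat.io".
# Matching the quotes avoids treating "brew.registry.redhat.io" as a hit for
# "registry.redhat.io".
pull_secret_has_registry() {
  local file="$1" registry="$2"
  [ -r "$file" ] || return 1
  grep -qF "\"${registry}\"" "$file"
}

# Example:
#   pull_secret_has_registry "${HOME}/.hypershift/pull_secret.txt" brew.registry.redhat.io \
#     || echo "brew.registry.redhat.io auth missing from the pull secret" >&2
```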

#!/usr/bin/env bash

export CLUSTERS_NAMESPACE="clusters"

export HOSTED_CLUSTER_NAME="example"

export HOSTED_CONTROL_PLANE_NAMESPACE="${CLUSTERS_NAMESPACE}-${HOSTED_CLUSTER_NAME}"

# domain managed via IBM CIS

# Please add correct domain as per your IBM CIS instance

# export BASEDOMAIN="<CIS_DOMAIN>"

export BASEDOMAIN="hypershift-ppc64le.com"

# Please make sure auth info for `brew.registry.redhat.io` exists if it's used as mirror registry to install MCE operator.

export PULL_SECRET_FILE=${HOME}/.hypershift/pull_secret.txt

export OCP_RELEASE=4.12.0-multi

export MACHINE_CIDR=192.168.122.0/24

# Typically the namespace is created by the hypershift-operator

# but agent cluster creation generates a capi-provider role that

# needs the namespace to already exist

oc create ns ${HOSTED_CONTROL_PLANE_NAMESPACE}

bin/hypershift create cluster agent \

--name=${HOSTED_CLUSTER_NAME} \

--pull-secret="${PULL_SECRET_FILE}" \

--agent-namespace=${HOSTED_CONTROL_PLANE_NAMESPACE} \

--base-domain=${BASEDOMAIN} \

--api-server-address=api.${HOSTED_CLUSTER_NAME}.${BASEDOMAIN} \

--ssh-key ${HOME}/.ssh/id_rsa.pub \

--release-image=quay.io/openshift-release-dev/ocp-release:${OCP_RELEASE} --render > cluster-agent.yaml

- Modify the rendered file

Change the servicePublishingStrategy to LoadBalancer and Route, because the ROKS cluster is deployed in a cloud environment where its nodes are on a private network and cannot be reached by the workers directly.

Note: If the management cluster is on the same network as the workers, and the workers can reach the nodes of the management cluster, no changes are needed.

- service: APIServer
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: NodePort
- service: OAuthServer
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: NodePort
- service: OIDC
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: None
- service: Konnectivity
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: NodePort
- service: Ignition
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: NodePort
- service: OVNSbDb
  servicePublishingStrategy:
    nodePort:
      address: api.example.hypershift-ppc64le.com
    type: NodePort

to:

- service: APIServer
  servicePublishingStrategy:
    type: LoadBalancer
- service: OAuthServer
  servicePublishingStrategy:
    type: Route
- service: OIDC
  servicePublishingStrategy:
    type: None
- service: Konnectivity
  servicePublishingStrategy:
    type: Route
- service: Ignition
  servicePublishingStrategy:
    type: Route
- service: OVNSbDb
  servicePublishingStrategy:
    type: Route

- Create it
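Before applying the file, a quick check that no service in cluster-agent.yaml is still published as NodePort can catch a missed edit. A minimal awk sketch; nodeport_services is a hypothetical helper, and it assumes the layout shown above, where each "- service: <Name>" line is followed by its servicePublishingStrategy "type:" line:

```bash
# Hypothetical check: print the services in a rendered manifest whose
# servicePublishingStrategy type is still NodePort. After each
# "- service:" line, only the first following "type:" line is considered.
nodeport_services() {
  awk '
    /^[ \t]*- service:/ { svc = $3 }
    /^[ \t]*type:/ && svc != "" {
      if ($2 == "NodePort") print svc
      svc = ""
    }
  ' "$1"
}

# Example: only apply when nothing is left on NodePort.
#   [ -z "$(nodeport_services cluster-agent.yaml)" ] && oc apply -f cluster-agent.yaml
```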

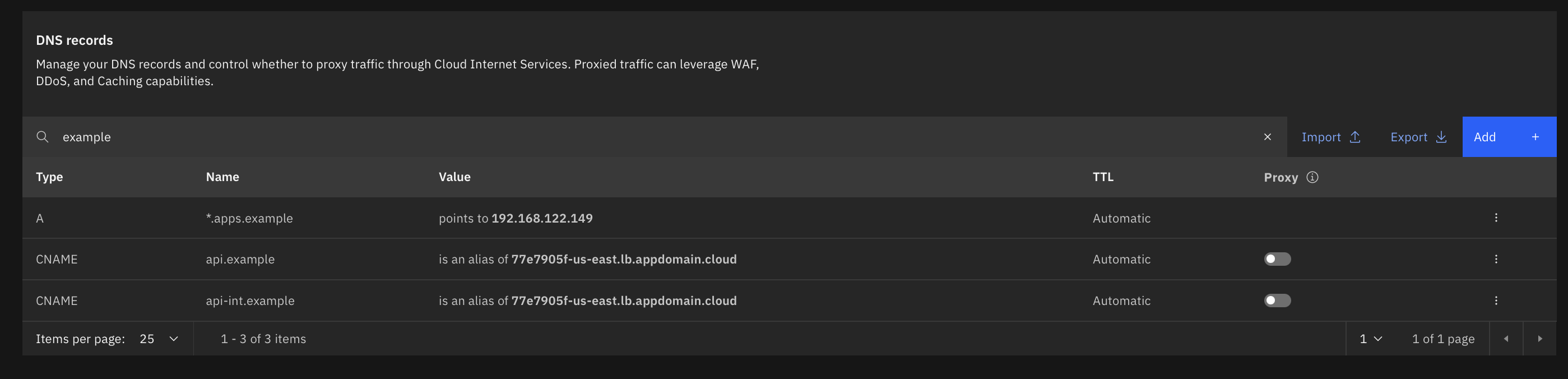

$ oc apply -f cluster-agent.yaml

- Update the DNS

Once the hosted cluster pods are deployed, list the Kubernetes API server service and create the DNS entries (in this use case, the entries are created in IBM Cloud CIS).

$ oc get svc kube-apiserver -n ${HOSTED_CONTROL_PLANE_NAMESPACE}

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-apiserver LoadBalancer 172.21.75.9 77e7905f-us-east.lb.appdomain.cloud 6443:32729/TCP 6h50m

Note: Add the *.apps.example entry later in the doc, once the worker IP address is known.
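The records to create can be derived from the output above. A minimal sketch with hypothetical helper names; it assumes the EXTERNAL-IP column holds a load balancer hostname, as in the sample output, so it prints a CNAME (an IP address there would call for an A record instead), and it uses the same api.<cluster>.<domain> name passed to --api-server-address:

```bash
# Hypothetical helper: read `oc get svc kube-apiserver` output on stdin and
# print the API record as "<name> CNAME <load-balancer-hostname>".
api_dns_record() {
  local cluster="$1" domain="$2"
  awk -v name="api.${cluster}.${domain}" \
    '$1 == "kube-apiserver" { printf "%s CNAME %s\n", name, $4 }'
}

# Hypothetical helper: wildcard ingress record, to add once a worker IP is known.
apps_dns_record() {
  local cluster="$1" domain="$2" worker_ip="$3"
  printf '*.apps.%s.%s A %s\n' "$cluster" "$domain" "$worker_ip"
}

# Example:
#   oc get svc kube-apiserver -n "${HOSTED_CONTROL_PLANE_NAMESPACE}" \
#     | api_dns_record "${HOSTED_CLUSTER_NAME}" "${BASEDOMAIN}"
```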

An InfraEnv is an environment that hosts booting the live ISO can join as Agents. In this case, the Agents are created in the same namespace as our HostedControlPlane.

export SSH_PUB_KEY=$(cat $HOME/.ssh/id_rsa.pub)

export CLUSTERS_NAMESPACE="clusters"

export HOSTED_CLUSTER_NAME="example"

export HOSTED_CONTROL_PLANE_NAMESPACE="${CLUSTERS_NAMESPACE}-${HOSTED_CLUSTER_NAME}"

export ARCH="ppc64le"

envsubst <<"EOF" | oc apply -f -

apiVersion: agent-install.openshift.io/v1beta1
kind: InfraEnv
metadata:
  name: ${HOSTED_CLUSTER_NAME}
  namespace: ${HOSTED_CONTROL_PLANE_NAMESPACE}
spec:
  cpuArchitecture: $ARCH
  pullSecretRef:
    name: pull-secret
  sshAuthorizedKey: ${SSH_PUB_KEY}
EOF

- Once the InfraEnv is created, assisted-service generates a minimal ISO that can be used to bring up the worker nodes. Get the ISO download URL with the command below.
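The isoDownloadURL status field is filled in once assisted-service has generated the image, so the command below may print nothing if it runs right after the InfraEnv is created. A minimal retry sketch; wait_for_output is a hypothetical helper, and the attempt count and delay in the example are arbitrary:

```bash
# Hypothetical retry helper: run a command until it prints something, up to
# ATTEMPTS times with DELAY seconds between tries.
# Prints the first non-empty output and returns 0, otherwise returns 1.
wait_for_output() {
  local attempts="$1" delay="$2" i=1 out
  shift 2
  while [ "$i" -le "$attempts" ]; do
    out="$("$@" 2>/dev/null)"
    if [ -n "$out" ]; then
      printf '%s\n' "$out"
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example: wait up to about 5 minutes, then download the ISO (file name is arbitrary).
#   DISCOVERY_ISO_URL=$(wait_for_output 30 10 oc -n "${HOSTED_CONTROL_PLANE_NAMESPACE}" \
#     get InfraEnv "${HOSTED_CLUSTER_NAME}" -ojsonpath="{.status.isoDownloadURL}")
#   curl -fLo discovery.iso "${DISCOVERY_ISO_URL}"
```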

$ oc -n ${HOSTED_CONTROL_PLANE_NAMESPACE} get InfraEnv ${HOSTED_CLUSTER_NAME} -ojsonpath="{.status.isoDownloadURL}"

If you want to configure static IP addresses for the workers, create an NMStateConfig resource and reference it from the InfraEnv through a label.

Make sure the correct MAC address is used to map the network interface that gets the static IP address. The interface name in the config is overridden by the interface name present in the VM.

apiVersion: agent-install.openshift.io/v1beta1
kind: NMStateConfig
metadata:
  name: static-ip-test-nmstate-config
  namespace: clusters-static-ip-test
  labels:
    infraenv: static-ip-test-ppc64le
spec:
  config:
    interfaces:
      - name: eth0
        type: ethernet
        state: up
        mac-address: fa:16:3e:f0:41:4b
        ipv4:
          enabled: true
          address:
            - ip: 9.114.97.133
              prefix-length: 24
          dhcp: false
    dns-resolver:
      config:
        server:
          - 9.3.1.200
    routes:
      config:
        - destination: 0.0.0.0/0
          next-hop-address: 9.114.96.1
          next-hop-interface: eth0
          table-id: 254
  interfaces:
    - name: "eth0"
      macAddress: "fa:16:3e:f0:41:4b"

Add the label mapping below to the InfraEnv spec to use the NMStateConfig created above.

nmStateConfigLabelSelector:
  matchLabels:
    infraenv: static-ip-test-ppc64le
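When several workers need static addresses, one NMStateConfig per worker is typically created, each with its own MAC and IP but the same infraenv label, so that the selector above picks all of them up. A minimal sketch that templates the manifest above; nmstate_config and its positional arguments are hypothetical, and the interface name stays eth0 as in the example:

```bash
# Hypothetical template (not a HyperShift or assisted-service tool): print an
# NMStateConfig with the same shape as the example above for one worker.
# Arguments: name namespace infraenv-label mac ip prefix-length dns-server gateway
nmstate_config() {
  local name="$1" namespace="$2" label="$3" mac="$4"
  local ip="$5" prefix="$6" dns="$7" gateway="$8"
  cat <<EOF
apiVersion: agent-install.openshift.io/v1beta1
kind: NMStateConfig
metadata:
  name: ${name}
  namespace: ${namespace}
  labels:
    infraenv: ${label}
spec:
  config:
    interfaces:
      - name: eth0
        type: ethernet
        state: up
        mac-address: ${mac}
        ipv4:
          enabled: true
          address:
            - ip: ${ip}
              prefix-length: ${prefix}
          dhcp: false
    dns-resolver:
      config:
        server:
          - ${dns}
    routes:
      config:
        - destination: 0.0.0.0/0
          next-hop-address: ${gateway}
          next-hop-interface: eth0
          table-id: 254
  interfaces:
    - name: "eth0"
      macAddress: "${mac}"
EOF
}

# Example (values taken from the manifest above):
#   nmstate_config static-ip-test-nmstate-config clusters-static-ip-test \
#     static-ip-test-ppc64le fa:16:3e:f0:41:4b 9.114.97.133 24 9.3.1.200 \
#     9.114.96.1 | oc apply -f -
```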

- Create a VM on the PowerVM hypervisor with the downloaded ISO as the boot medium.

- After some time, you will see the agents:

$ oc get agents -n ${HOSTED_CONTROL_PLANE_NAMESPACE} -o=wide

NAMESPACE NAME CLUSTER APPROVED ROLE STAGE HOSTNAME REQUESTED HOSTNAME

clusters-example 5e65c2be-ea88-3796-533f-4a4b5c2e420b false auto-assign 52-54-00-ee-66-ff

- Once you see the agents, approve them.

$ oc -n ${HOSTED_CONTROL_PLANE_NAMESPACE} patch agent 5e65c2be-ea88-3796-533f-4a4b5c2e420b -p '{"spec":{"approved":true}}' --type merge

We now have the HostedControlPlane running and the Agents ready to join the HostedCluster. Before we join the Agents, let's access the HostedCluster.

First, we need to generate the kubeconfig:

$ hypershift create kubeconfig --namespace clusters --name example > example.kubeconfig

If we access the cluster, we will see that it has no nodes yet and that the ClusterVersion is still trying to reconcile the OCP release:

$ oc --kubeconfig example.kubeconfig get clusterversion,nodes

NAME VERSION AVAILABLE PROGRESSING SINCE STATUS

clusterversion.config.openshift.io/version False True 8m6s Unable to apply 4.12.0: some cluster operators have not yet rolled out

To get the cluster into a running state, we need to add some nodes to it.

We add nodes to our HostedCluster by scaling the NodePool object. In this case, we start by scaling the NodePool to one node. Scaling as shown below binds one of the agents that are ready to be bound to the cluster, without you choosing which one.

$ oc -n clusters scale nodepool example --replicas 1

If you want to bind a specific agent, add a label selector to the NodePool and then scale it with the command above.

spec:
  platform:
    agent:
      agentLabelSelector:
        matchLabels:
          inventory.agent-install.openshift.io/cpu-architecture: ppc64le

Make sure the agent you want to bind carries the inventory.agent-install.openshift.io/cpu-architecture: ppc64le label.

By default, agents are populated with the cpu-architecture label.
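The same selector can be set without opening an editor, using a merge patch on the NodePool. A minimal sketch; set_nodepool_agent_selector is a hypothetical helper, and it assumes the selector lives at spec.platform.agent.agentLabelSelector of the NodePool. Selecting on the architecture label matches every ppc64le agent, so pinning one particular agent would need a label unique to it (the example.com/pool key below is purely illustrative):

```bash
# Hypothetical helper: set spec.platform.agent.agentLabelSelector on a NodePool
# with a merge patch so that only Agents labelled KEY=VALUE are bound to it.
# Arguments: namespace nodepool label-key label-value
set_nodepool_agent_selector() {
  local ns="$1" nodepool="$2" key="$3" value="$4" patch
  patch=$(printf '{"spec":{"platform":{"agent":{"agentLabelSelector":{"matchLabels":{"%s":"%s"}}}}}}' "$key" "$value")
  oc -n "$ns" patch nodepool "$nodepool" --type merge -p "$patch"
}

# Example: restrict the NodePool to ppc64le agents, then scale it.
#   set_nodepool_agent_selector clusters example \
#     inventory.agent-install.openshift.io/cpu-architecture ppc64le
#   oc -n clusters scale nodepool example --replicas 1
# To pin one agent, label it first with an illustrative, unique key:
#   oc -n "${HOSTED_CONTROL_PLANE_NAMESPACE}" label agent <agent-name> example.com/pool=power
```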

- Once the agent is approved and the NodePool is scaled, the agent goes through the installation process, including a couple of reboots. After quite some time, the agent reaches the Done stage, which means it has been successfully added to the hosted cluster and you can start using it.

- The agents go through different states before finally joining the hosted cluster as OpenShift Container Platform nodes. The states pass from binding to discovering to insufficient to installing to installing-in-progress to added-to-existing-cluster. The outputs of the commands below show the resources in a good state.

$ oc get agents -A -o=wide

NAMESPACE NAME CLUSTER APPROVED ROLE STAGE HOSTNAME REQUESTED HOSTNAME

clusters-example 5e65c2be-ea88-3796-533f-4a4b5c2e420b example true worker Done 52-54-00-ee-66-ff worker-0.hypershift-ppc64le.com

- Status of the agents
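Installation takes a while and includes reboots, so instead of re-running the command below by hand, the same state field can be polled. A minimal sketch; wait_for_agent_state is a hypothetical helper reading .status.debugInfo.state, and the attempt count and delay in the example are arbitrary:

```bash
# Hypothetical polling loop: wait until an Agent reports the wanted
# .status.debugInfo.state. Progress goes to stderr.
# Arguments: namespace agent-name wanted-state attempts delay-seconds
wait_for_agent_state() {
  local ns="$1" agent="$2" want="$3" attempts="$4" delay="$5" i=1 state
  while [ "$i" -le "$attempts" ]; do
    state="$(oc -n "$ns" get agent "$agent" -o jsonpath='{.status.debugInfo.state}' 2>/dev/null)"
    echo "attempt ${i}/${attempts}: ${agent} is in state '${state}'" >&2
    if [ "$state" = "$want" ]; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example: poll every 60 seconds for up to an hour.
#   wait_for_agent_state "${HOSTED_CONTROL_PLANE_NAMESPACE}" \
#     5e65c2be-ea88-3796-533f-4a4b5c2e420b added-to-existing-cluster 60 60
```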

$ oc -n ${HOSTED_CONTROL_PLANE_NAMESPACE} get agent -o jsonpath='{range .items[*]}BMH: {@.metadata.labels.agent-install\.openshift\.io/bmh} Agent: {@.metadata.name} State: {@.status.debugInfo.state}{"\n"}{end}'

BMH: Agent: 5e65c2be-ea88-3796-533f-4a4b5c2e420b State: added-to-existing-cluster

- HyperShift resources

$ oc get clusters -A -o=wide

NAMESPACE NAME PHASE AGE VERSION

clusters-example example-znwfk Provisioned 6h43m

$ oc get machines -o=wide -A

NAMESPACE NAME CLUSTER NODENAME PROVIDERID PHASE AGE VERSION

clusters-example example-656c864db8-8zgzv example-znwfk worker-0.hypershift-ppc64le.com agent://5e65c2be-ea88-3796-533f-4a4b5c2e420b Running 6h35m 4.12.0

$ oc get agentmachines -A

NAMESPACE NAME AGE

clusters-example example-zm2kf 6h35m

- Access the hosted cluster via kubeconfig

$ hypershift create kubeconfig --namespace clusters --name example > example.kubeconfig

$ export KUBECONFIG=example.kubeconfig

$ oc get nodes -o=wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

worker-0.hypershift-ppc64le.com Ready worker 19m v1.25.4+77bec7a 192.168.122.149 <none> Red Hat Enterprise Linux CoreOS 412.86.202301061548-0 (Ootpa) 4.18.0-372.40.1.el8_6.ppc64le cri-o://1.25.1-5.rhaos4.12.git6005903.el8

$ oc get clusterversion

NAME VERSION AVAILABLE PROGRESSING SINCE STATUS

version False True 6h57m Unable to apply 4.12.0: some cluster operators are not available
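The two things checked above are node readiness and ClusterVersion availability; in this output the ClusterVersion is still progressing. A minimal sketch for scripting the node part of that check; ready_nodes is a hypothetical helper that reads the plain oc get nodes table and counts a STATUS of exactly Ready:

```bash
# Hypothetical helper: read `oc get nodes` output on stdin and print
# "<ready>/<total>". Only a STATUS of exactly "Ready" counts, so a node shown
# as "Ready,SchedulingDisabled" is not counted as ready.
ready_nodes() {
  awk 'NR > 1 { total++; if ($2 == "Ready") ready++ }
       END { printf "%d/%d\n", ready, total }'
}

# Example:
#   oc --kubeconfig example.kubeconfig get nodes | ready_nodes
# The ClusterVersion can be waited on separately, for example:
#   oc --kubeconfig example.kubeconfig wait clusterversion/version \
#     --for=condition=Available --timeout=30m
```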