DevOps ‐ Complete CICD Pipeline With AWS CodePipeline - git-muhammad-sadique-i987/projects GitHub Wiki

Welcome to this 6-part DevOps challenge.

Over the next 6 parts, you'll build a complete CI/CD pipeline that takes code from commit to production, gaining practical DevOps skills that are in high demand across the tech and cloud industry.

By the end of this challenge, you'll have:

- Built a complete CI/CD pipeline using AWS tools
- Automated the testing and deployment of a real application

Credits

This project's structure and learning methodology come from NextWork.org - an exceptional platform for DevOps practitioners who believe in learning through building. Their project-based AWS/Kubernetes track provided the foundation for this implementation.

- Building a CI/CD pipeline in 6 parts
  - What to Expect
  - Roadmap (Overall Project)
  - What is DevOps?
  - What is CI/CD?

- Part-1. Set Up a Web App in the Cloud
  - What You'll Need
  - Key Concepts
  - Roadmap (Part-1)
  - Step-1 Launching an EC2 instance
  - Step-2 Set up VS Code
  - Step-3 SSH connection to EC2 instance
  - Step-4 Install Apache Maven on EC2 Instance
  - Step-5 Install Amazon Corretto 8 (Java 8)
  - Step-6 Create the Application
  - Step-7 Connect VS Code with your EC2 Instance
  - Summary (Part-1)

Part-2 Connect a GitHub Repo with AWS

-

What You'll Need

-

Key Concepts

-

Before We Start

-

Roadmap (Part-2)

-

Step-1 Install Git

-

Step-2 Set up GitHub

-

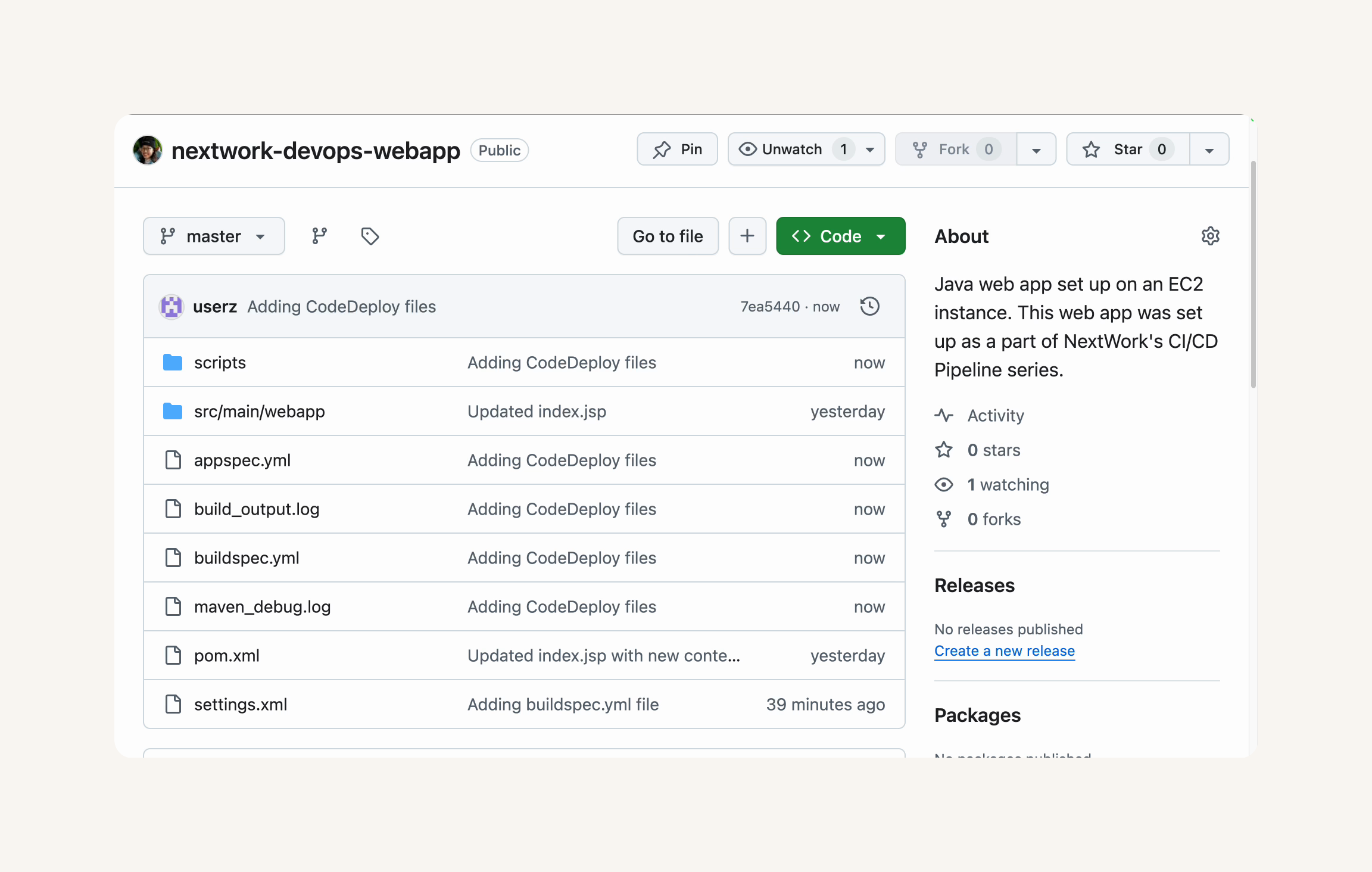

Step-3 Commit and Push Your Changes to GithHub

-

Summary (Part-2)

-

-

Part-3 Secure Packages with CodeArtifact

-

What You'll Need

-

Key Concepts

-

Before We Start

-

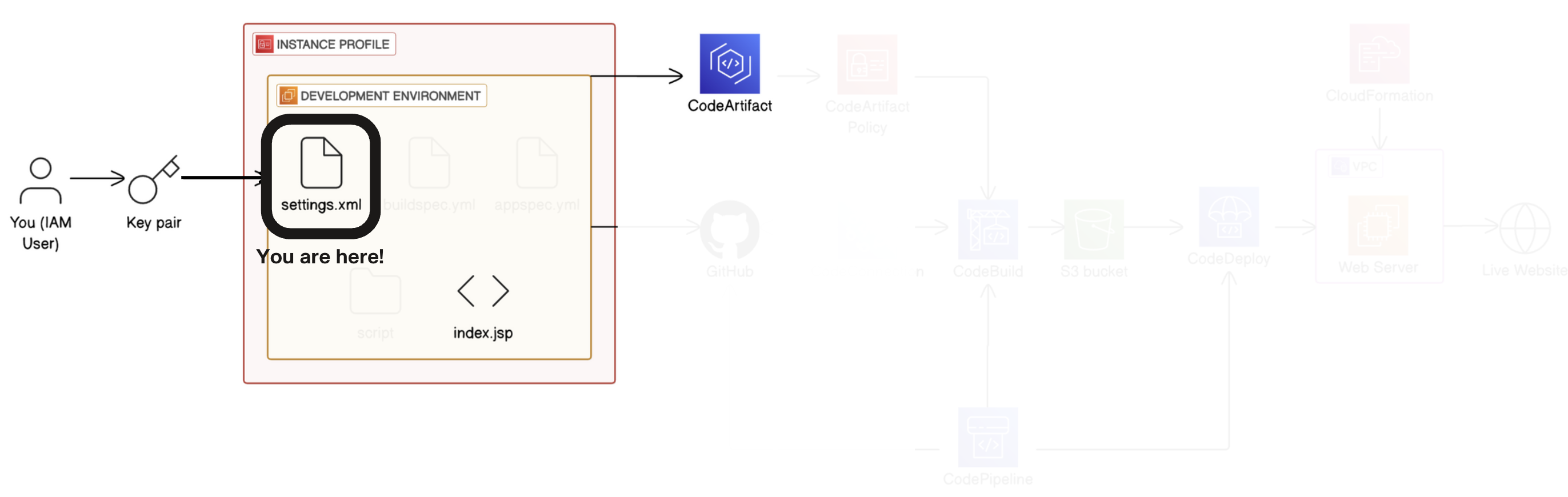

Roadmap (Part-3)

-

Step-1 Set up CodeArtifact

-

Step-2 Configure your EC2 instance for CodeArtifact

-

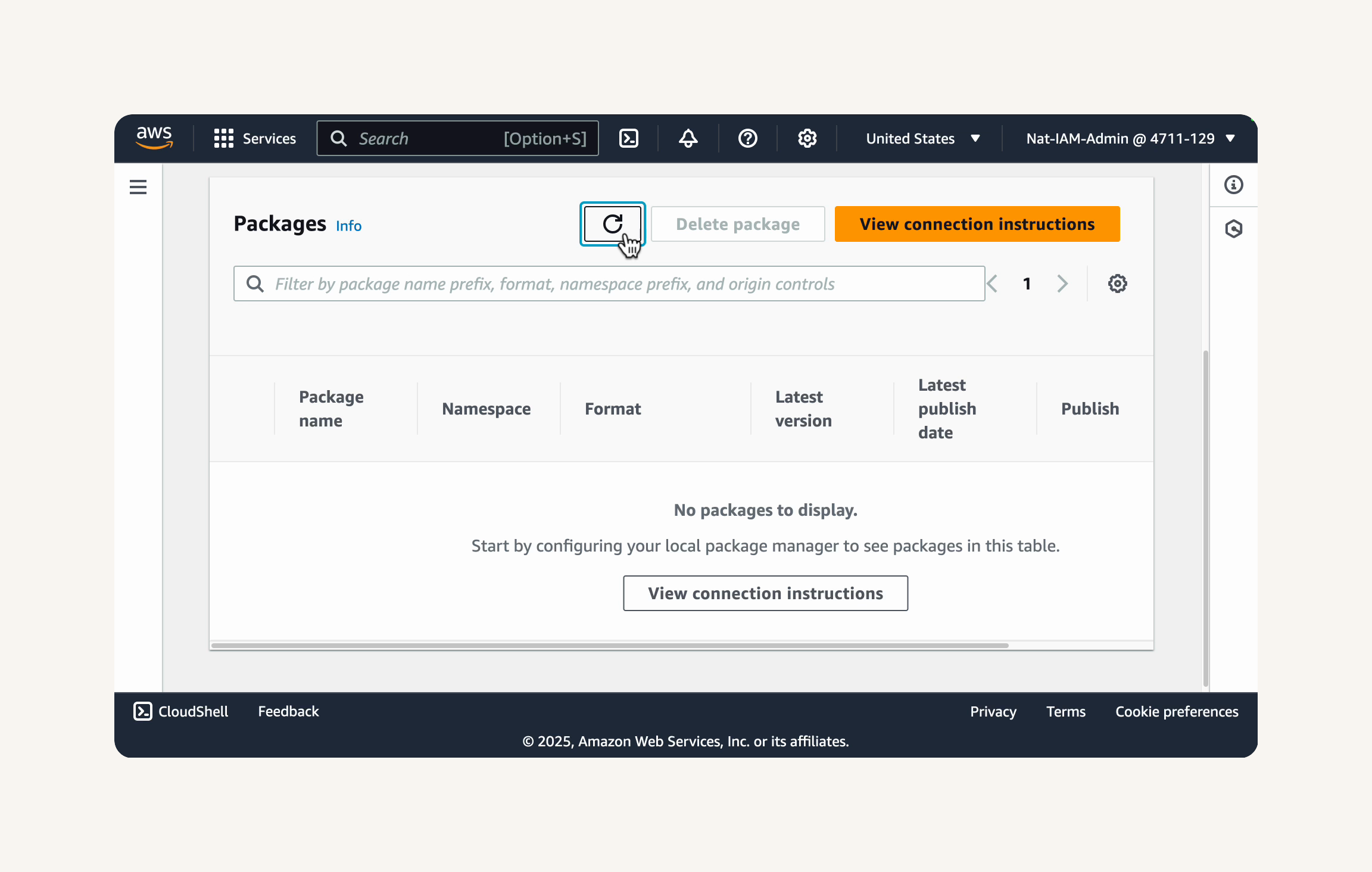

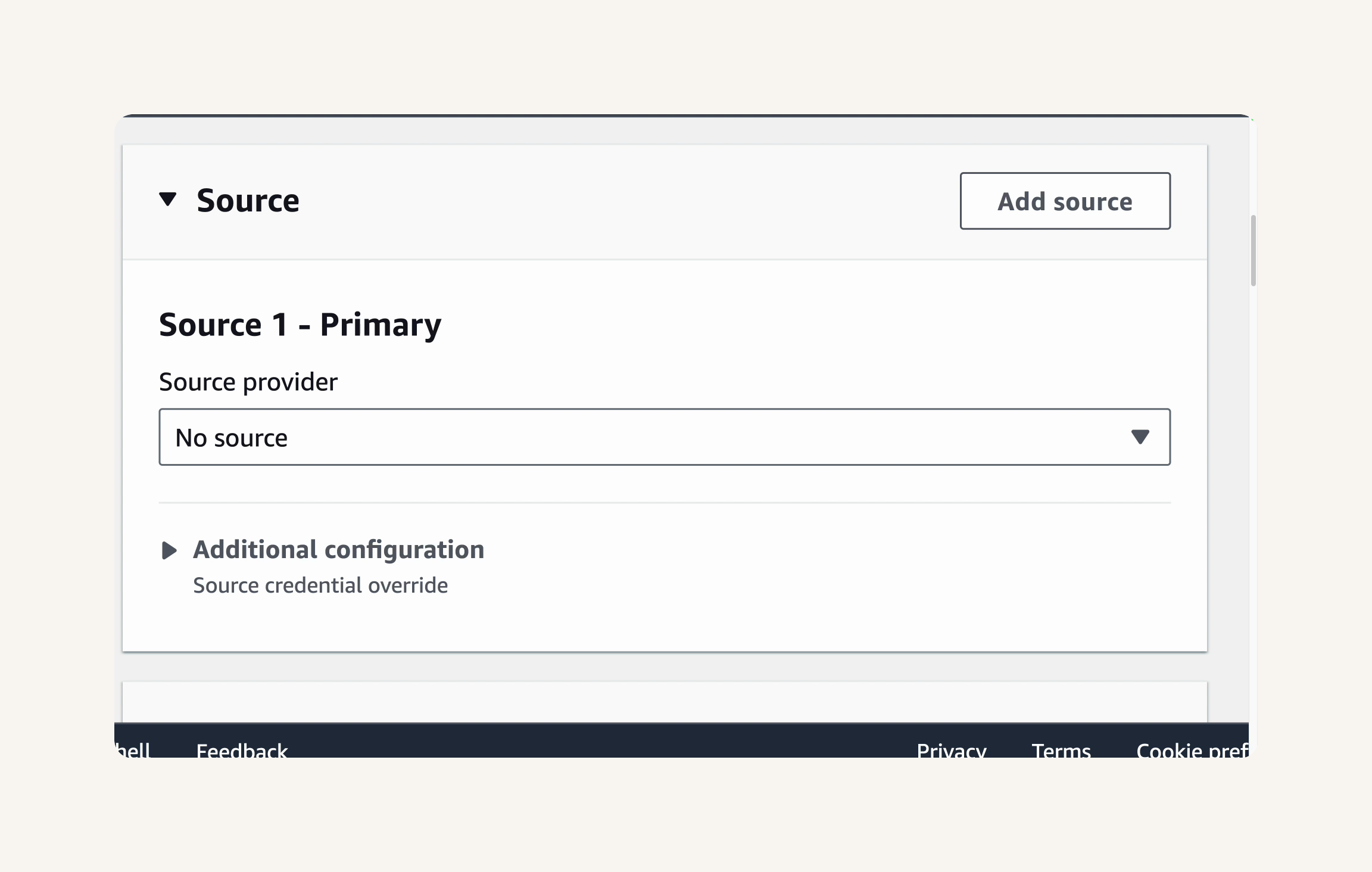

Step-3 Verify Maven is using CodeArtifact

-

Step-4 (Optional for Experts) Publish a package to CodeArtifact

-

Summary (Part-3)

-

-

Part-4 Continuous Integration with CodeBuild

-

What You'll Need

-

Key Concepts

-

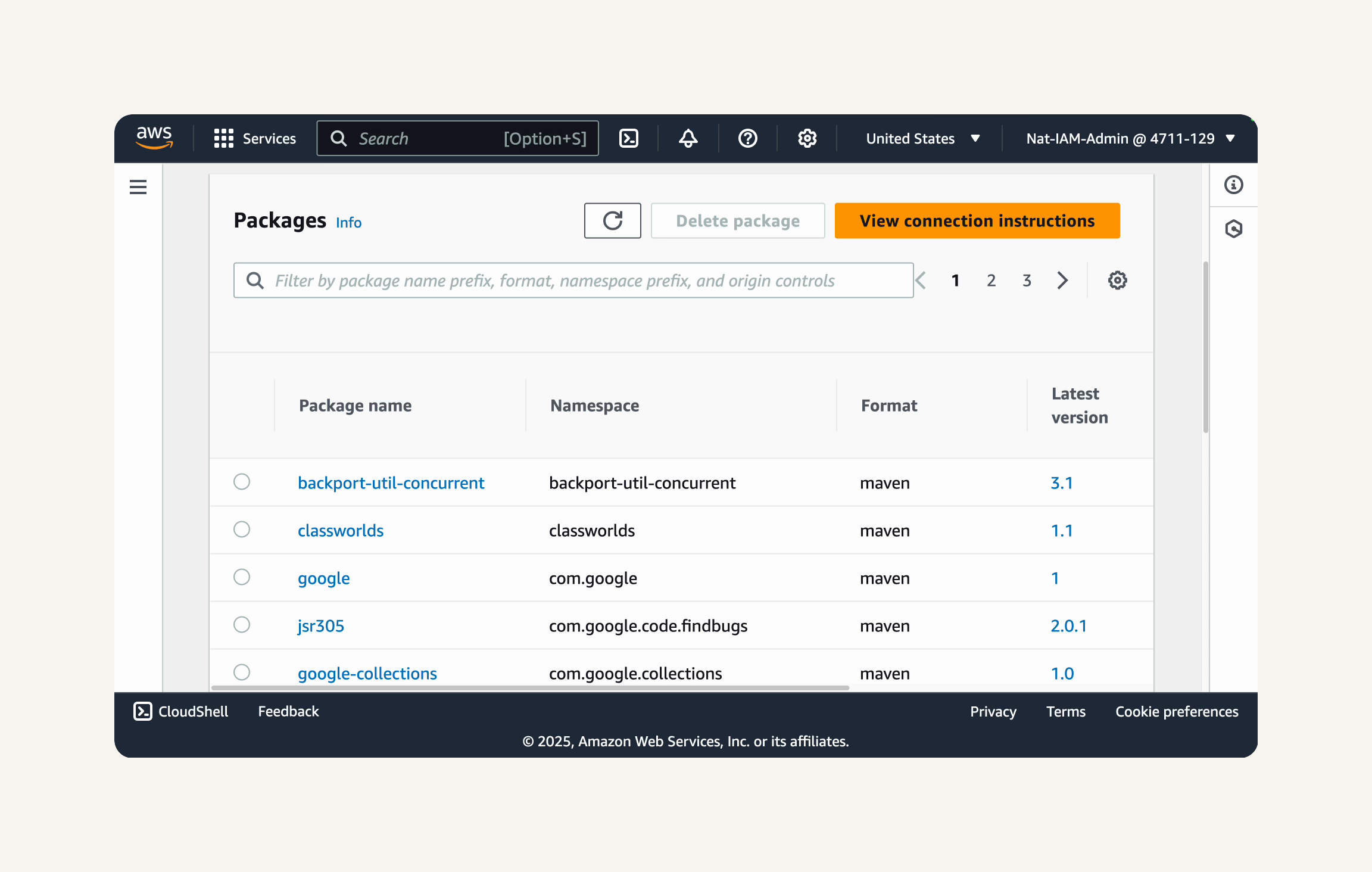

Before We Start

-

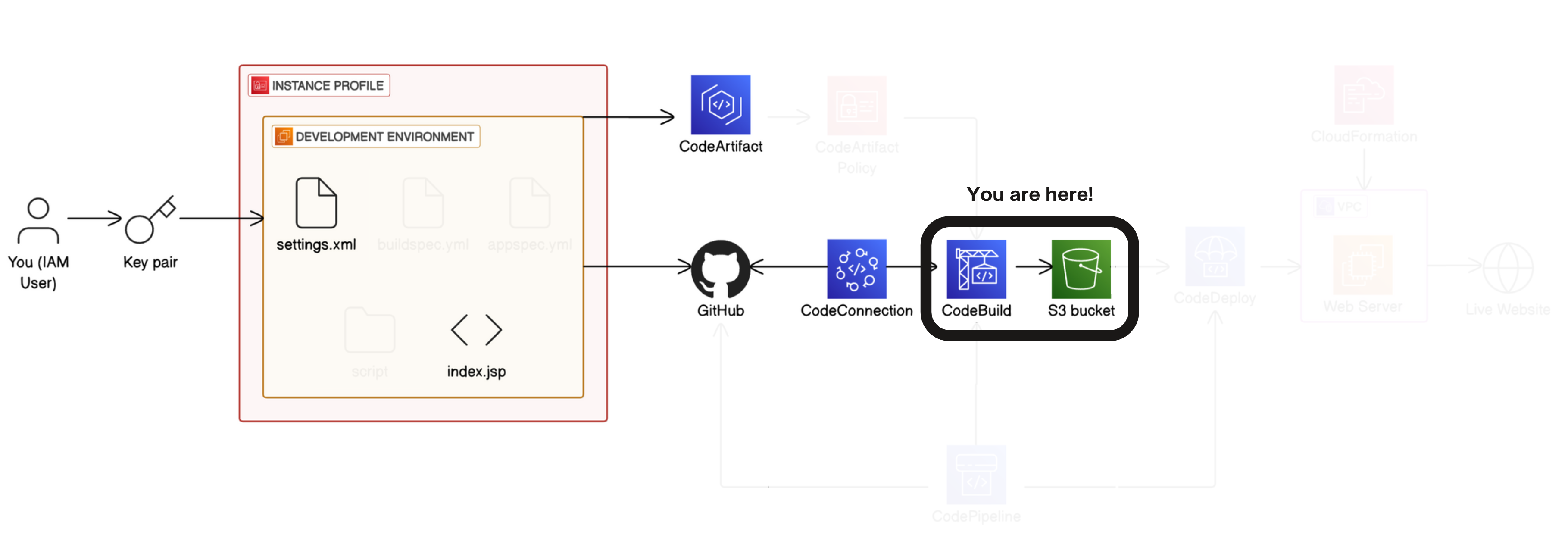

Roadmap (Part-4)

-

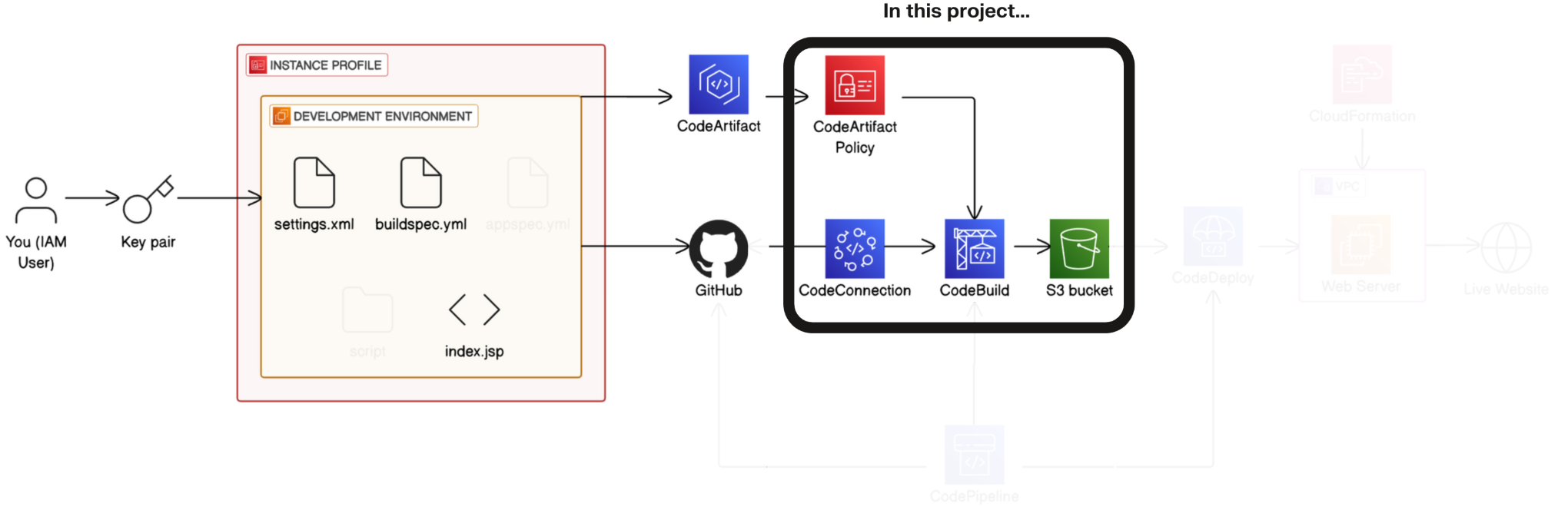

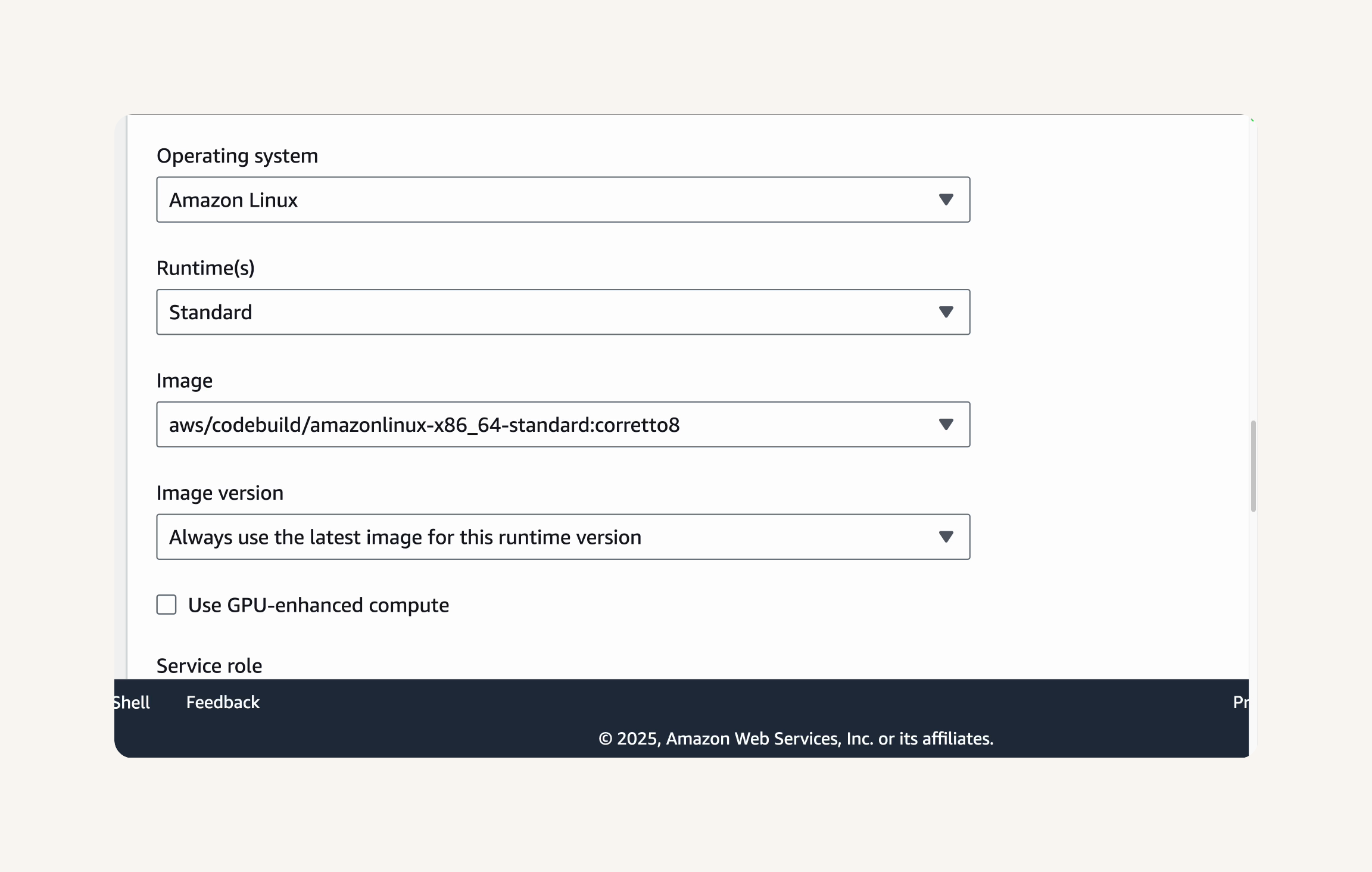

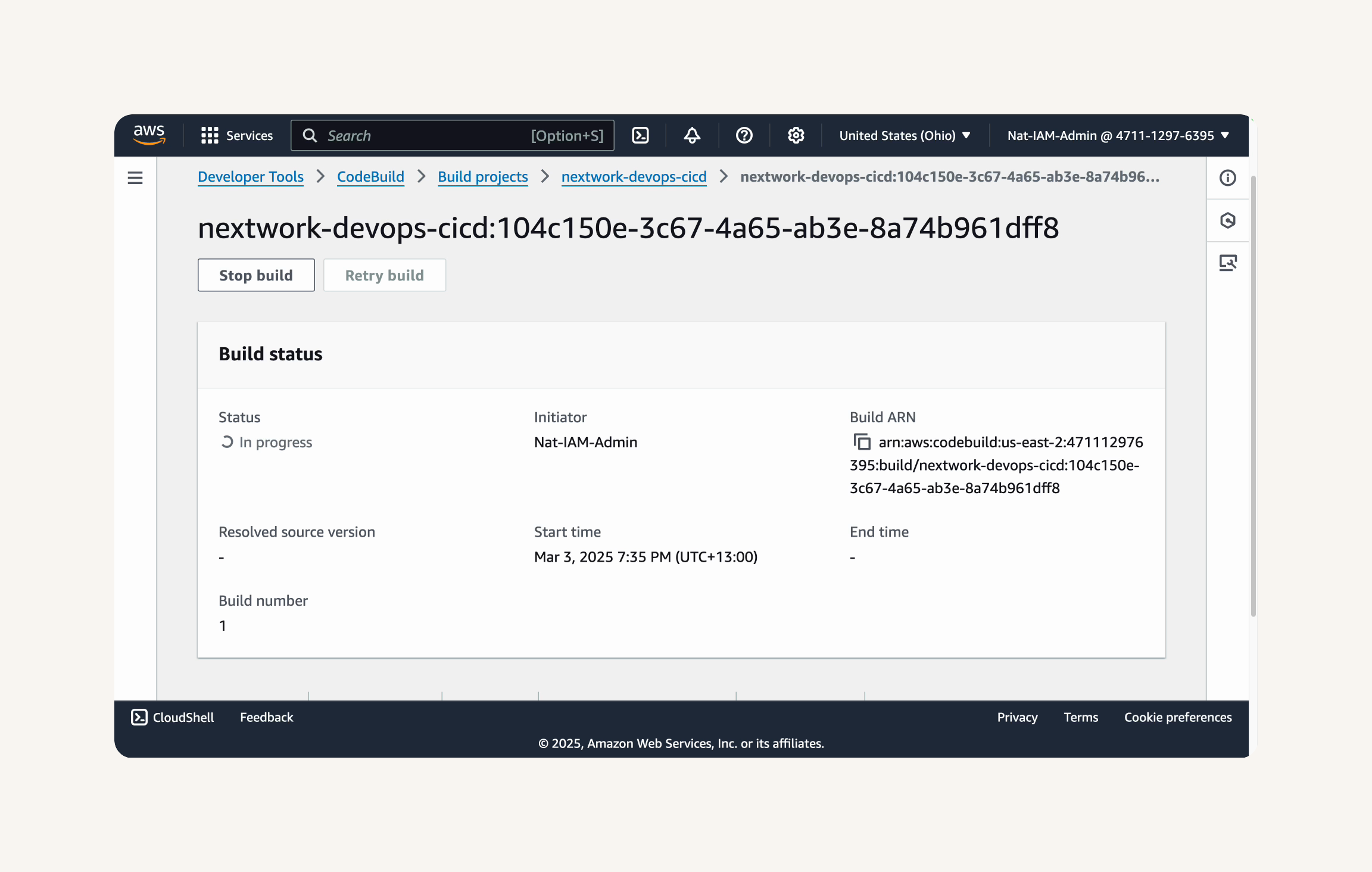

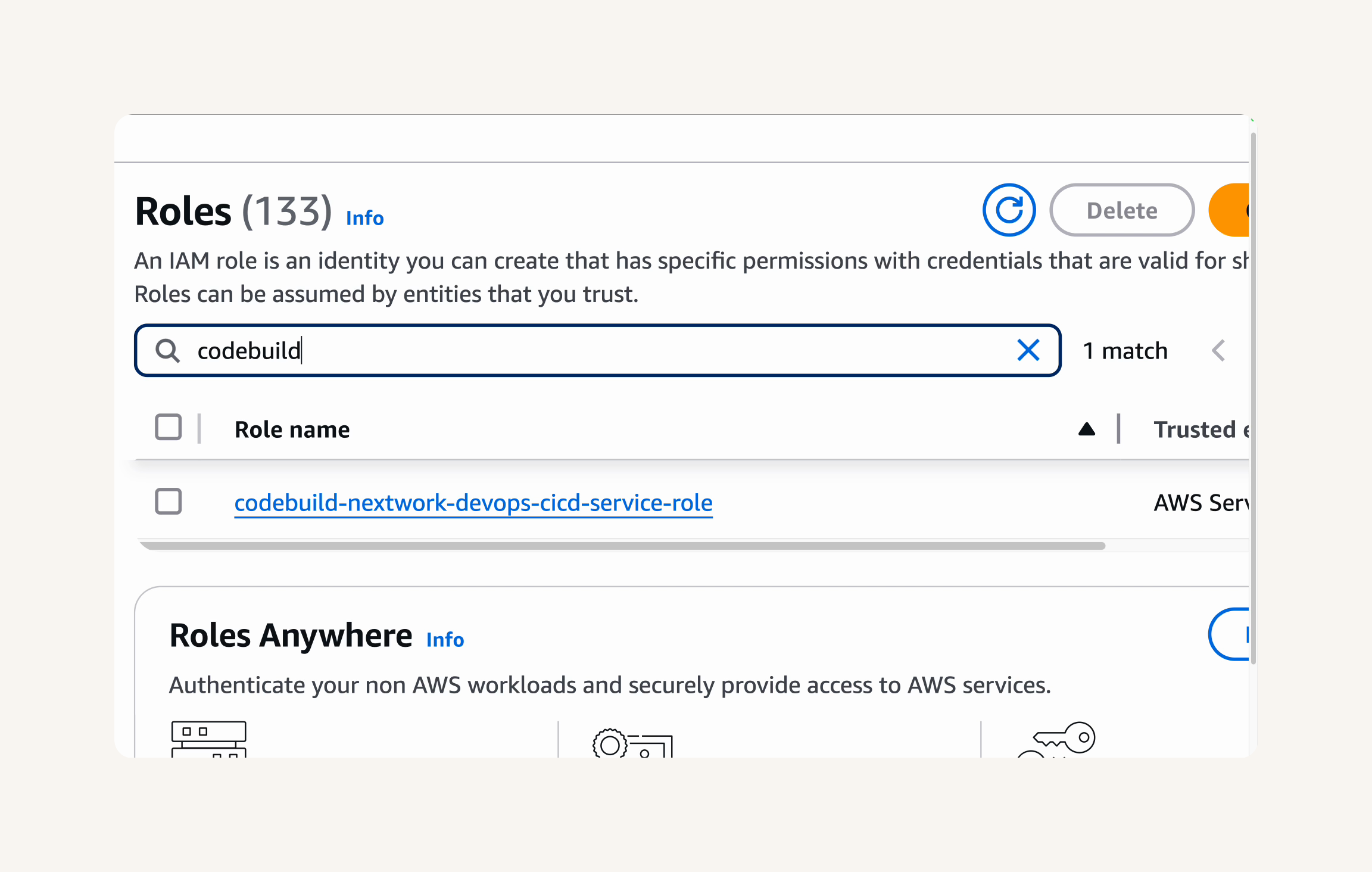

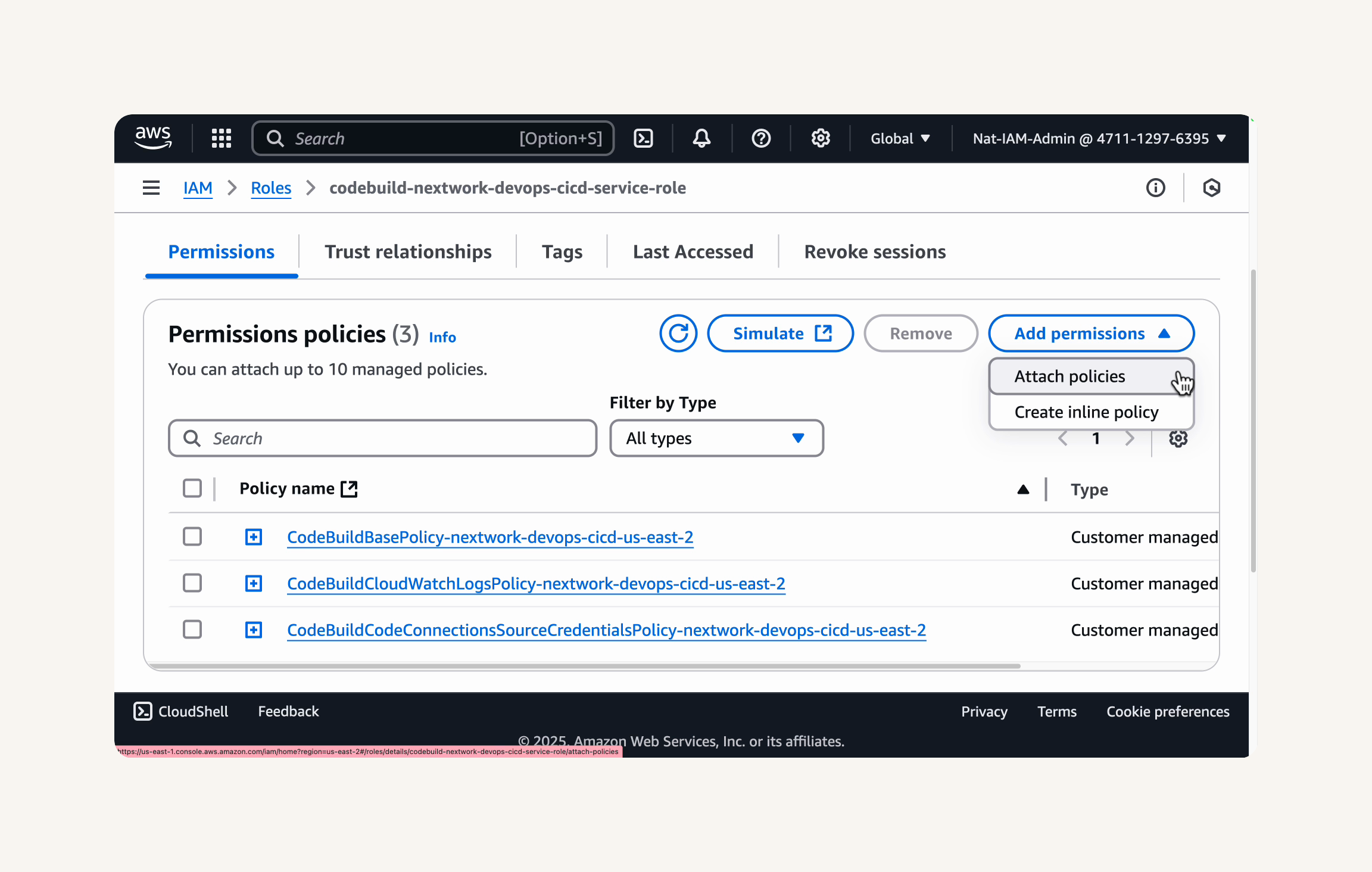

Step-1 Set up a CodeBuild project

-

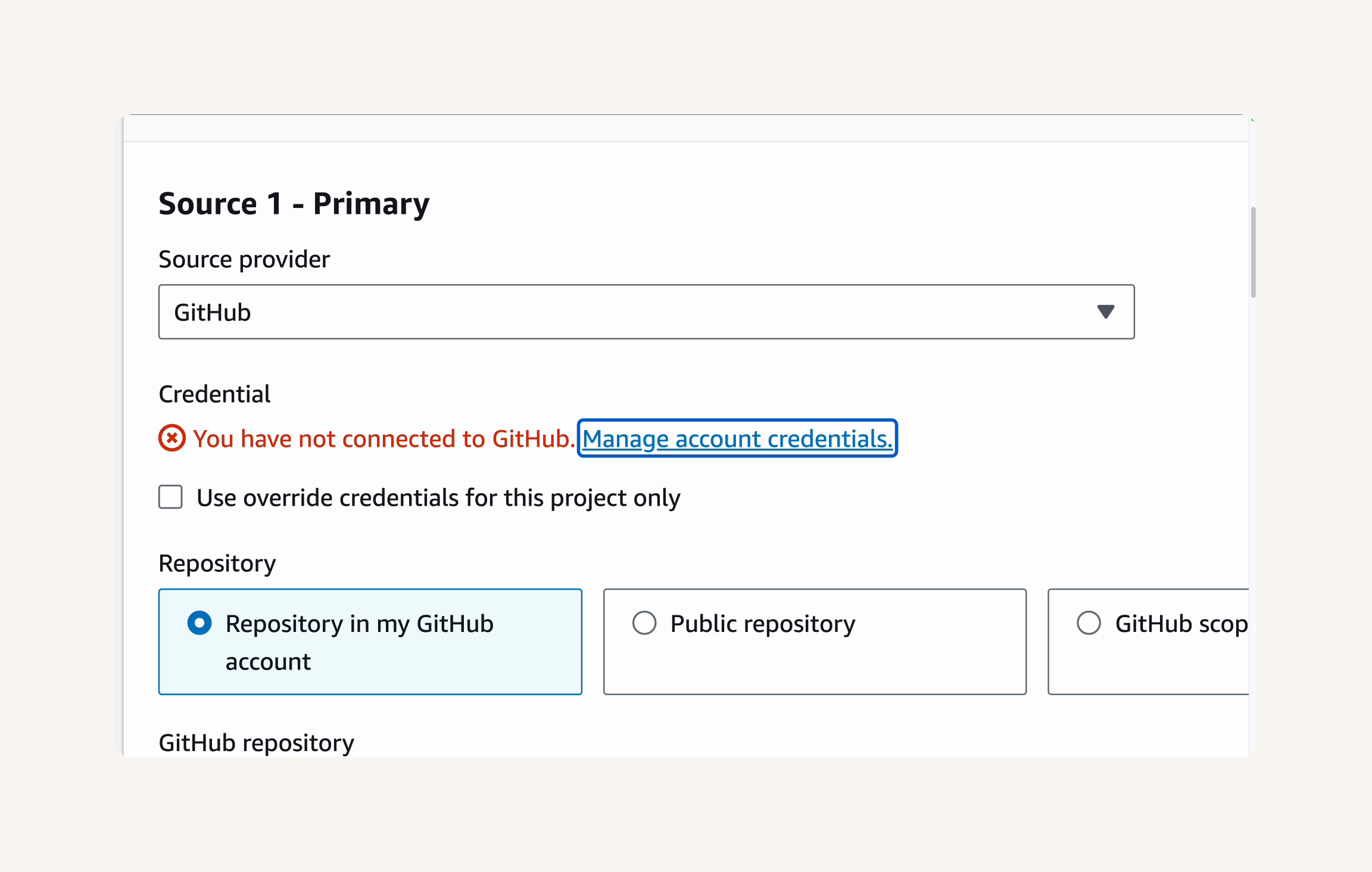

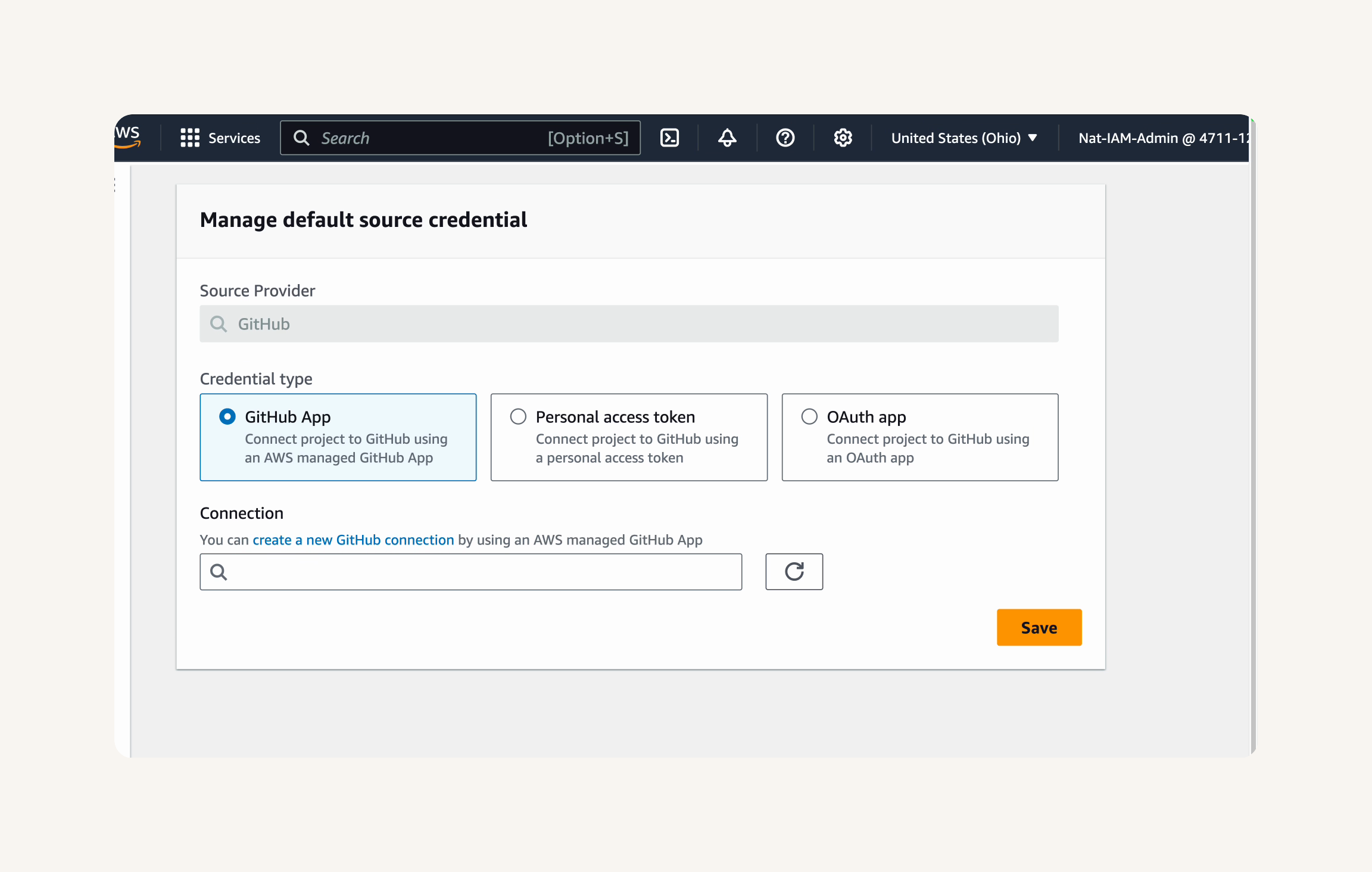

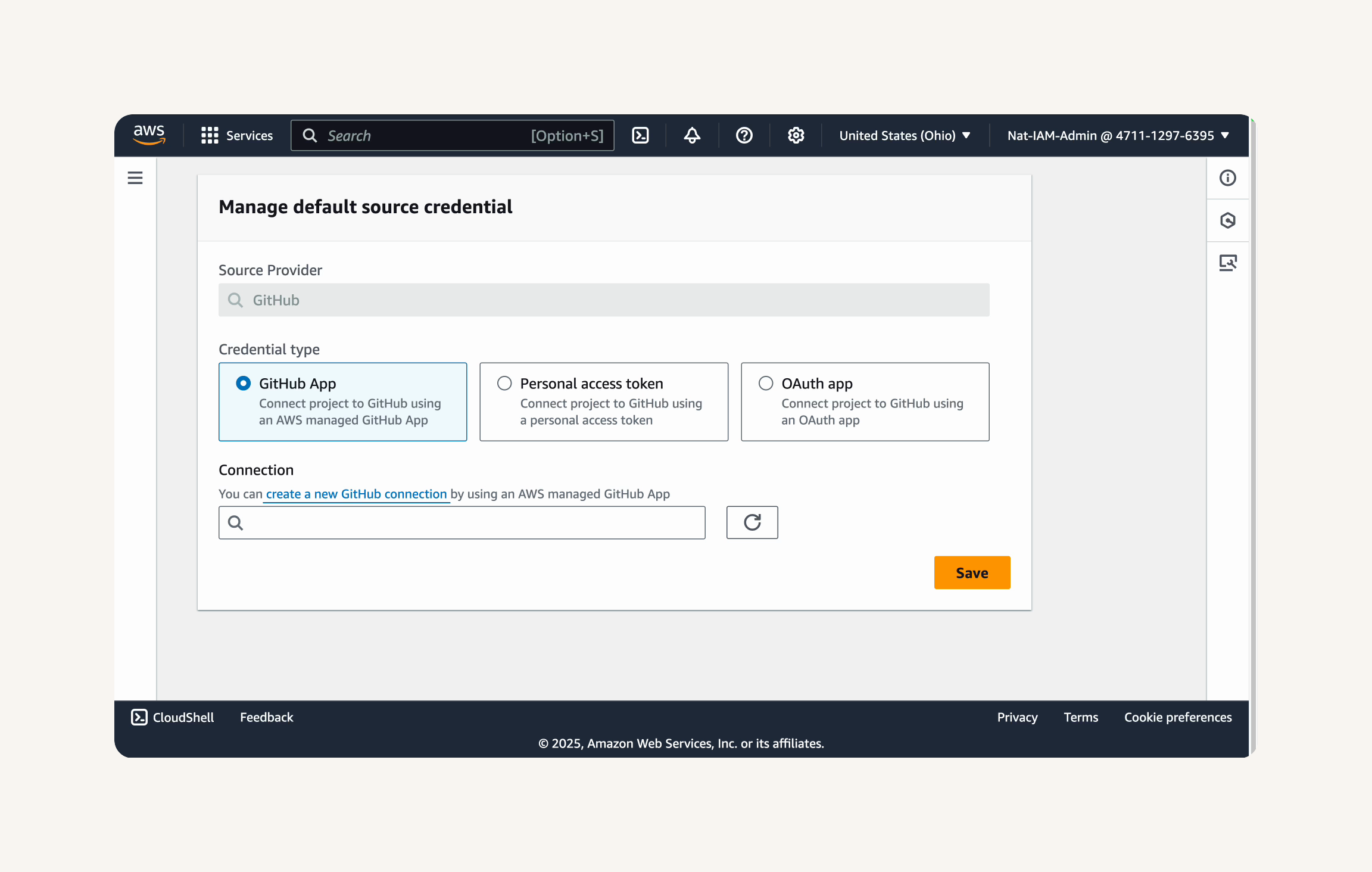

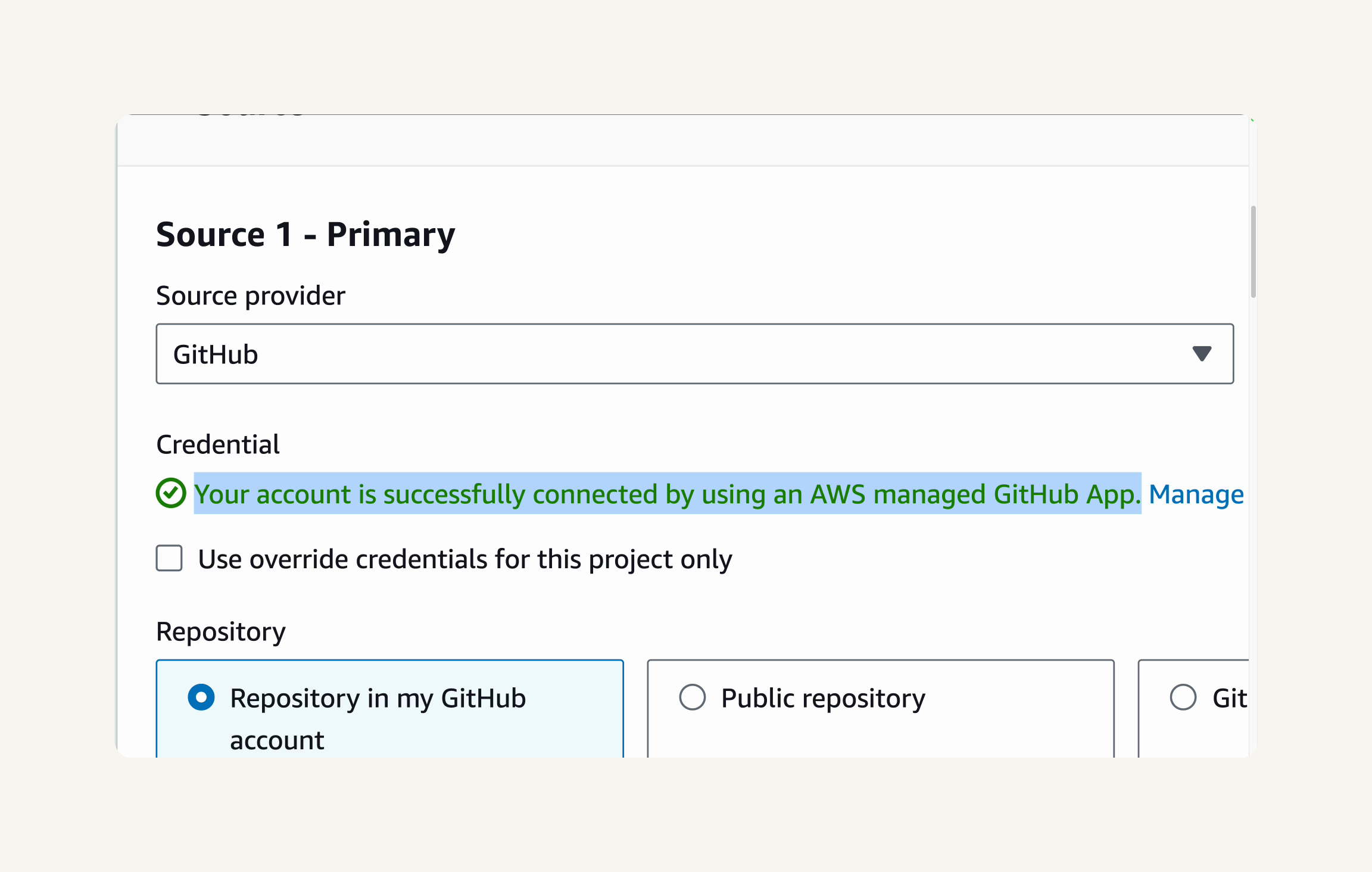

Step-2 Connect CodeBuild to your GitHub repo

-

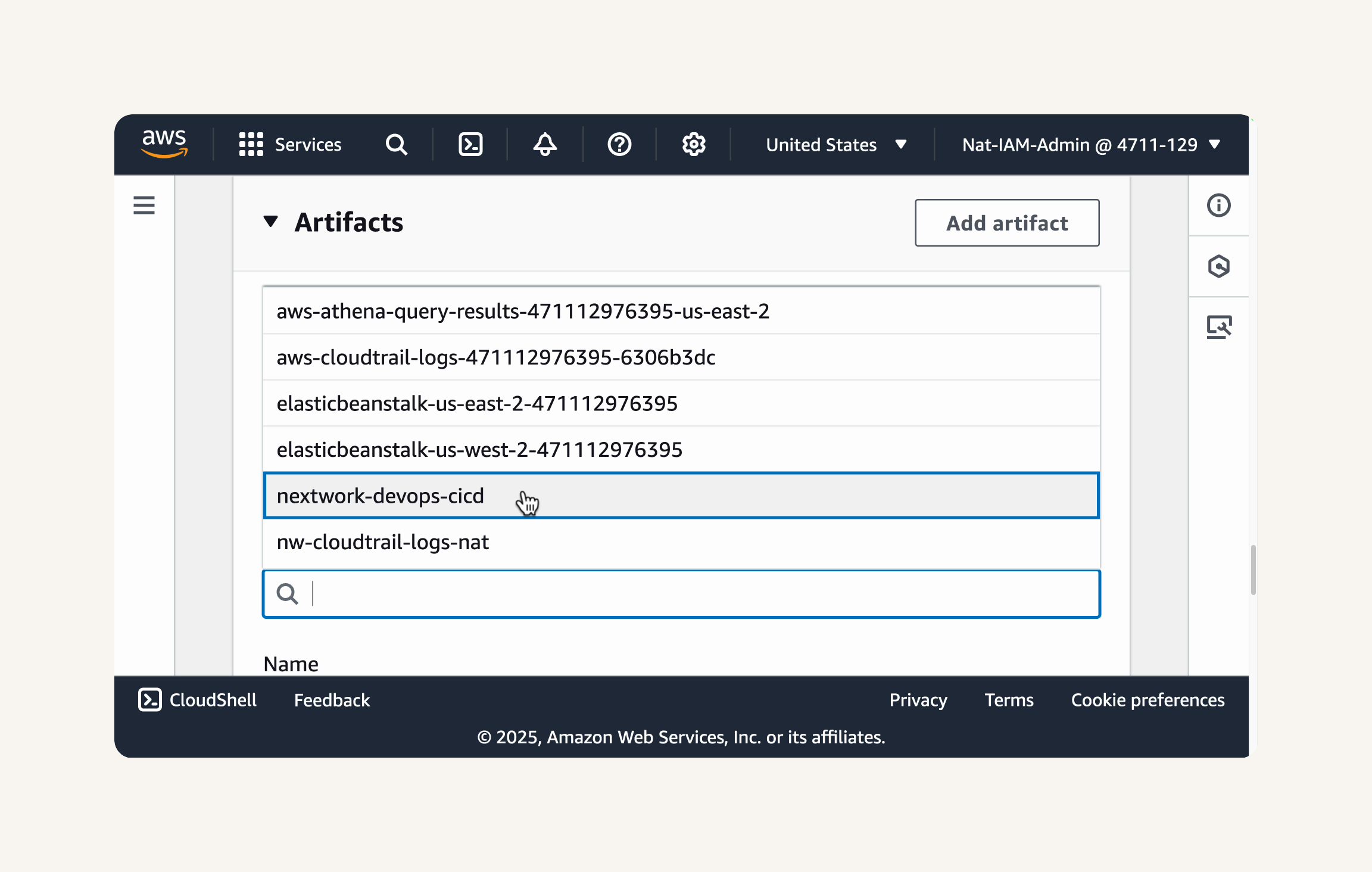

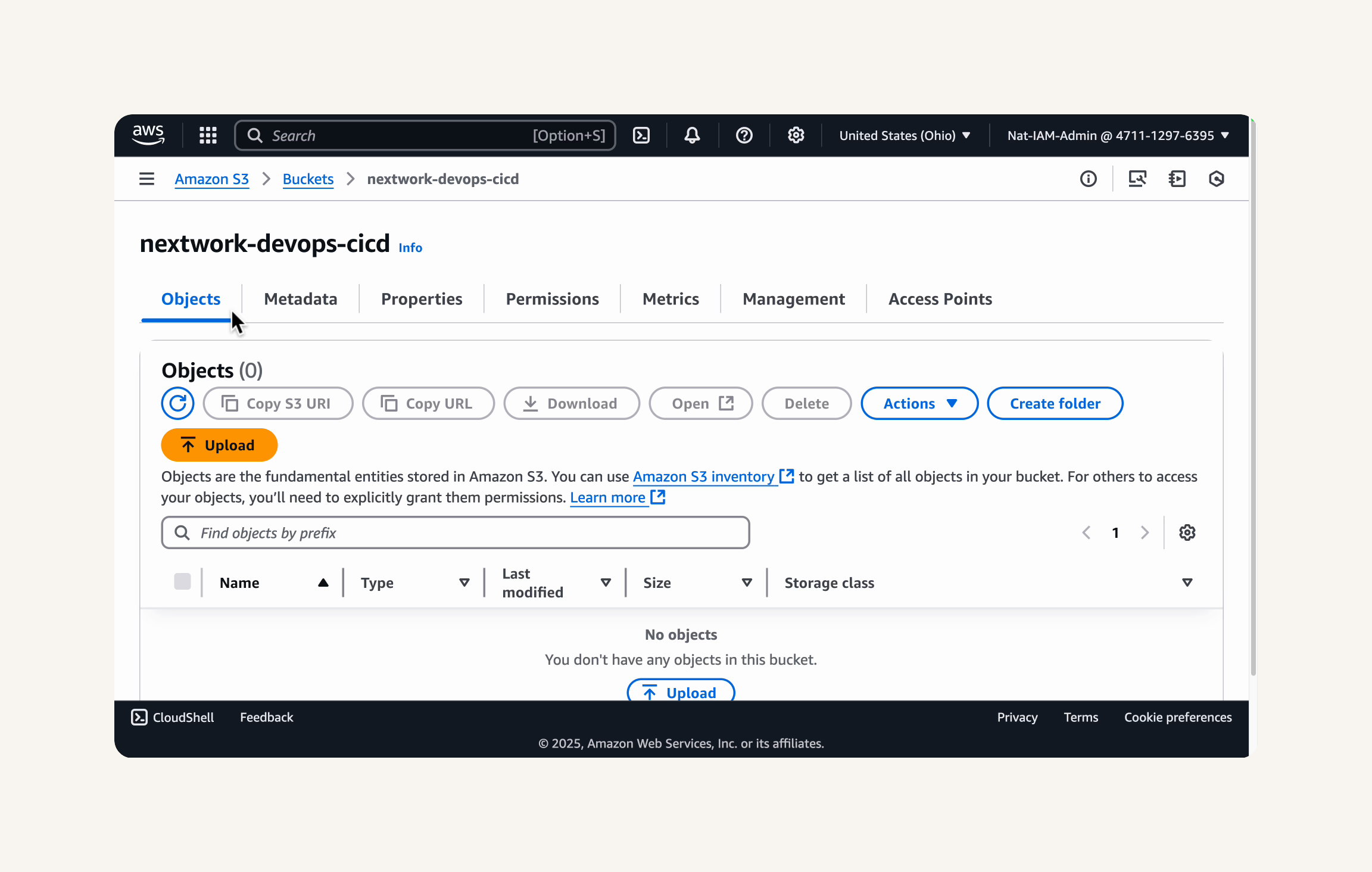

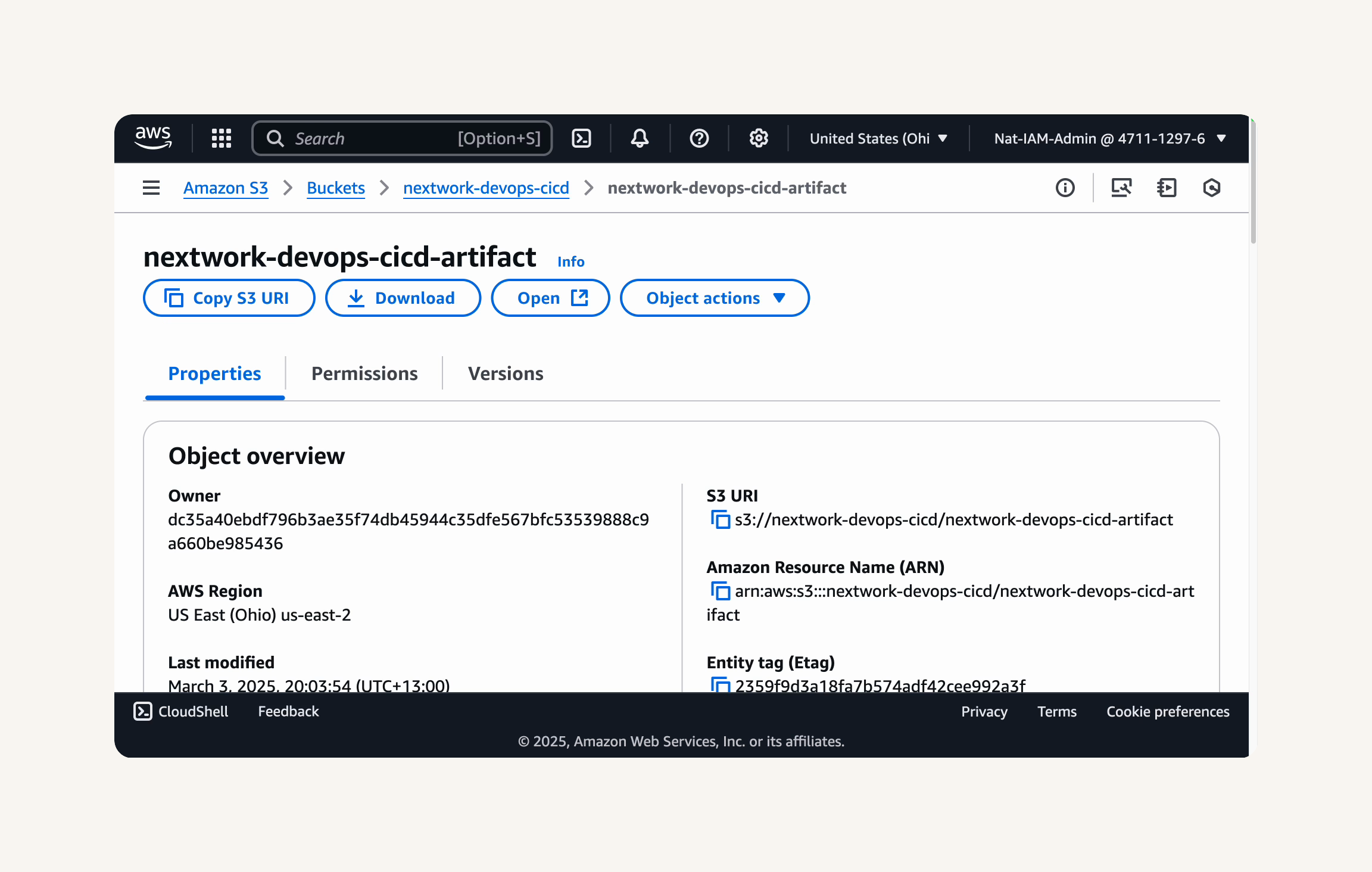

Step-3 Set up an S3 bucket for build artifacts

-

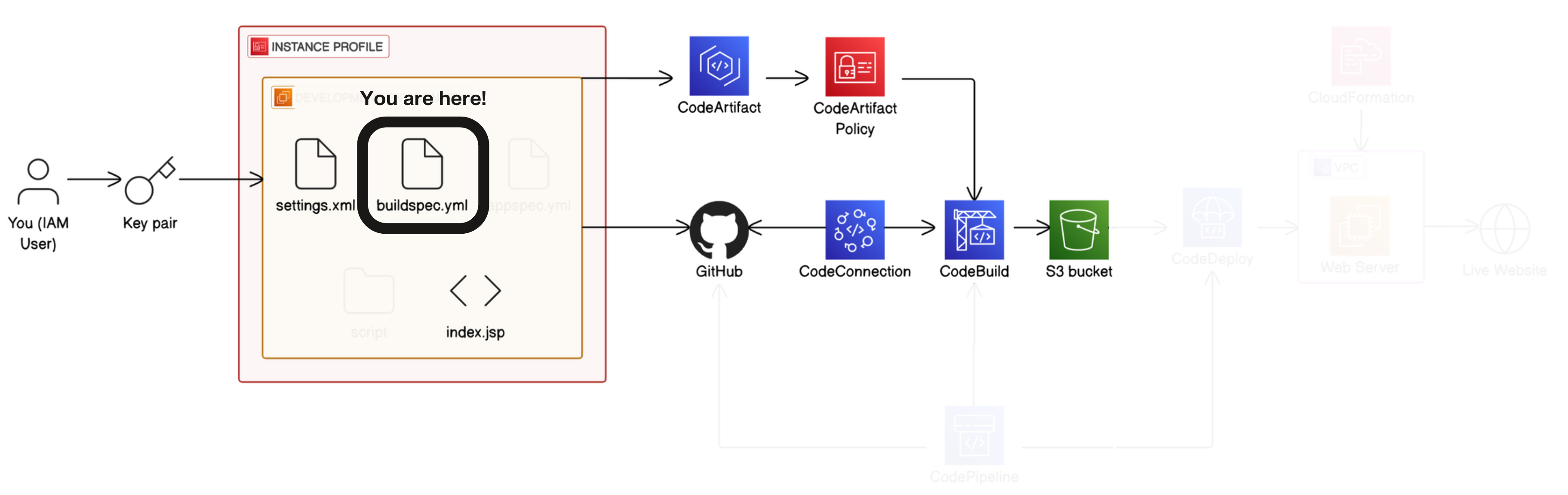

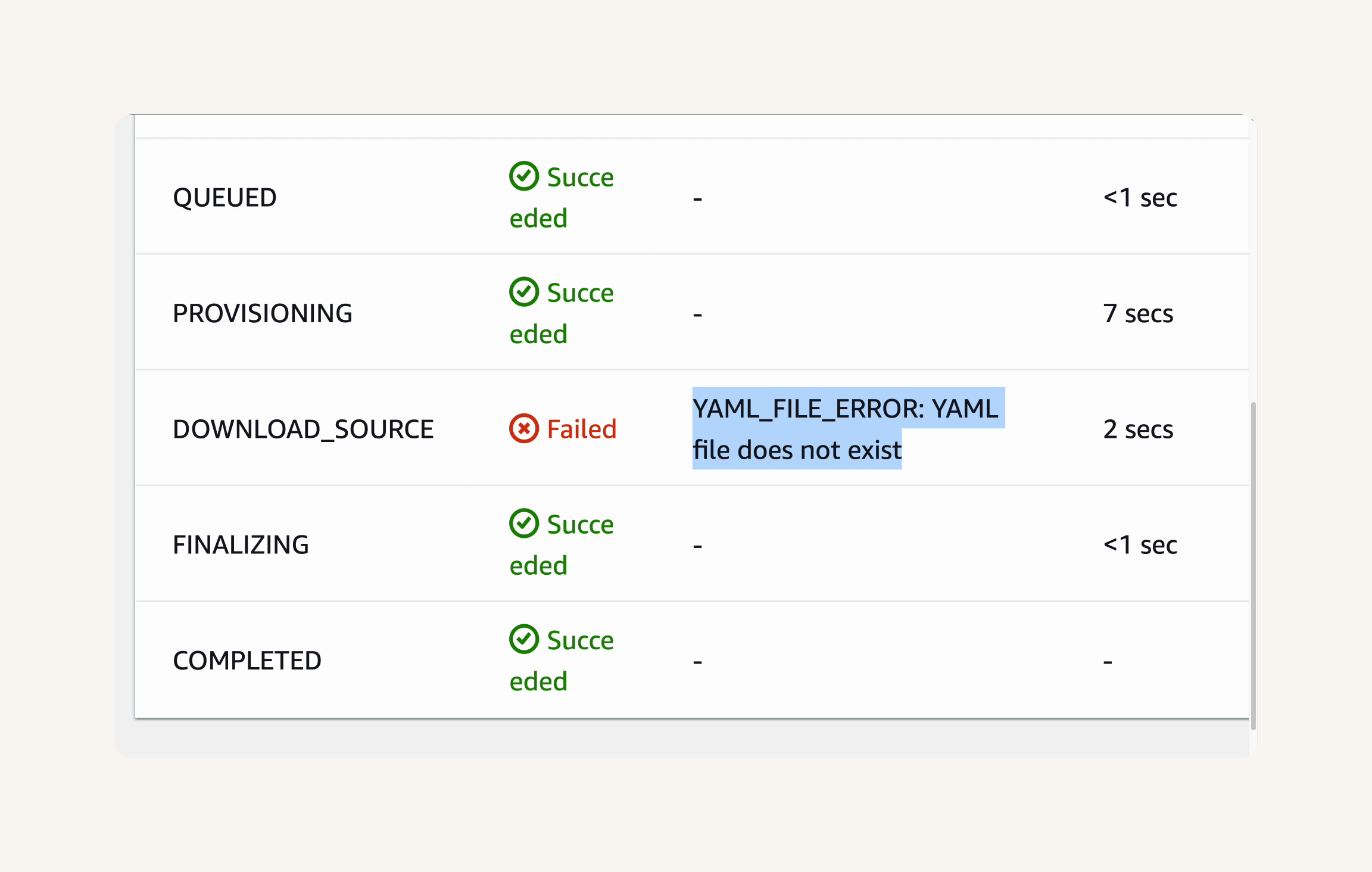

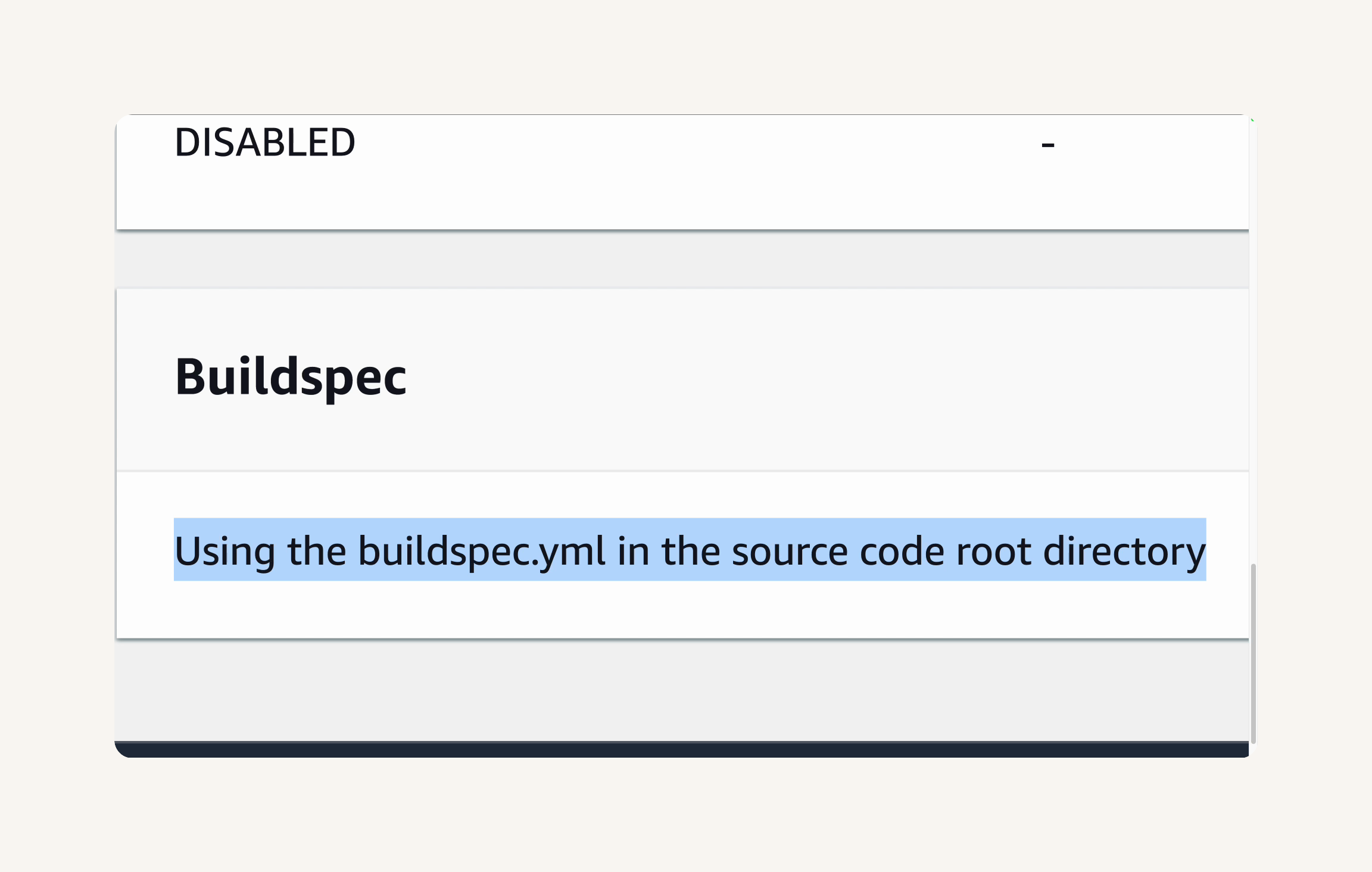

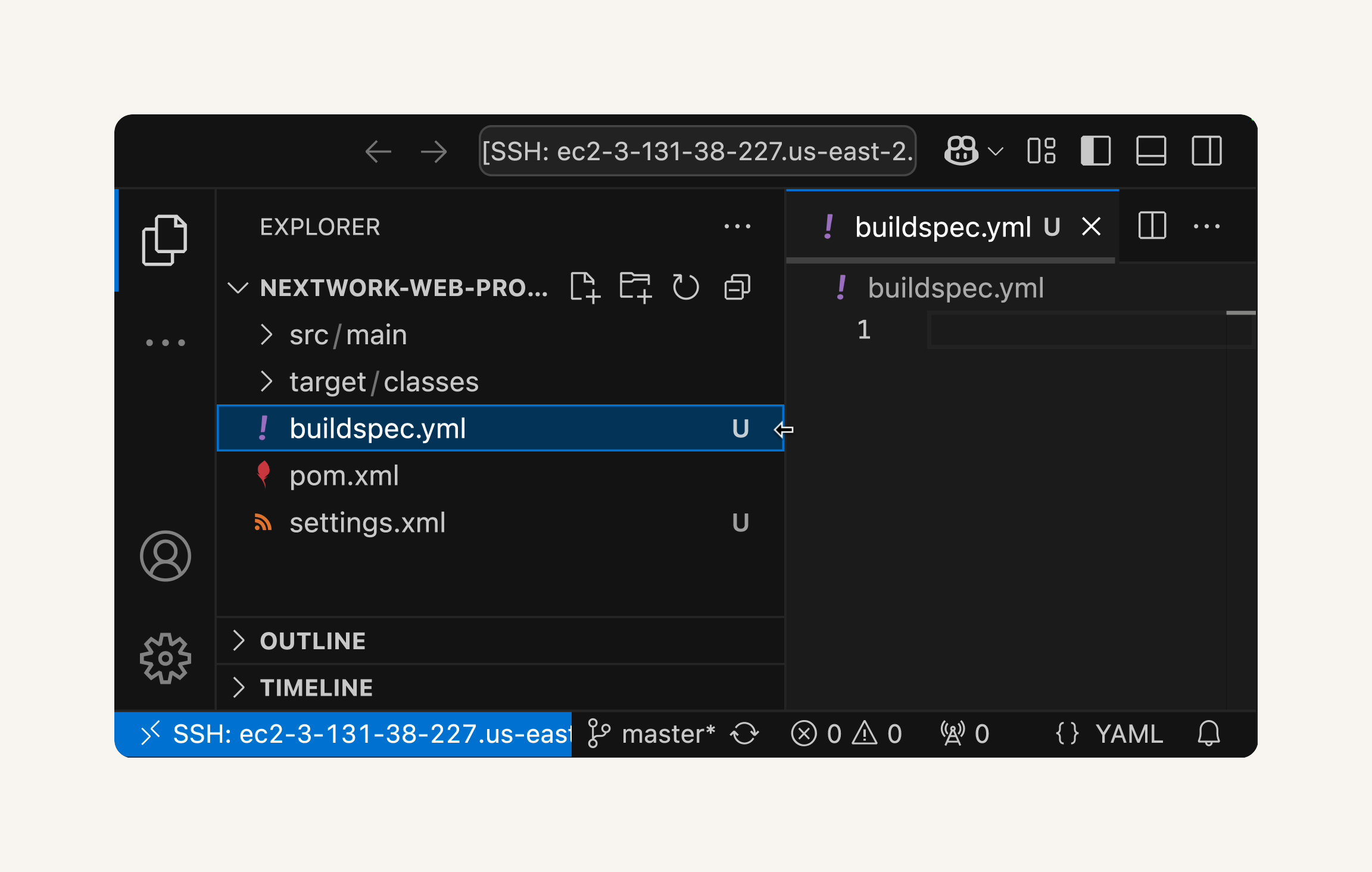

Step-4 Configure buildspec.yml

-

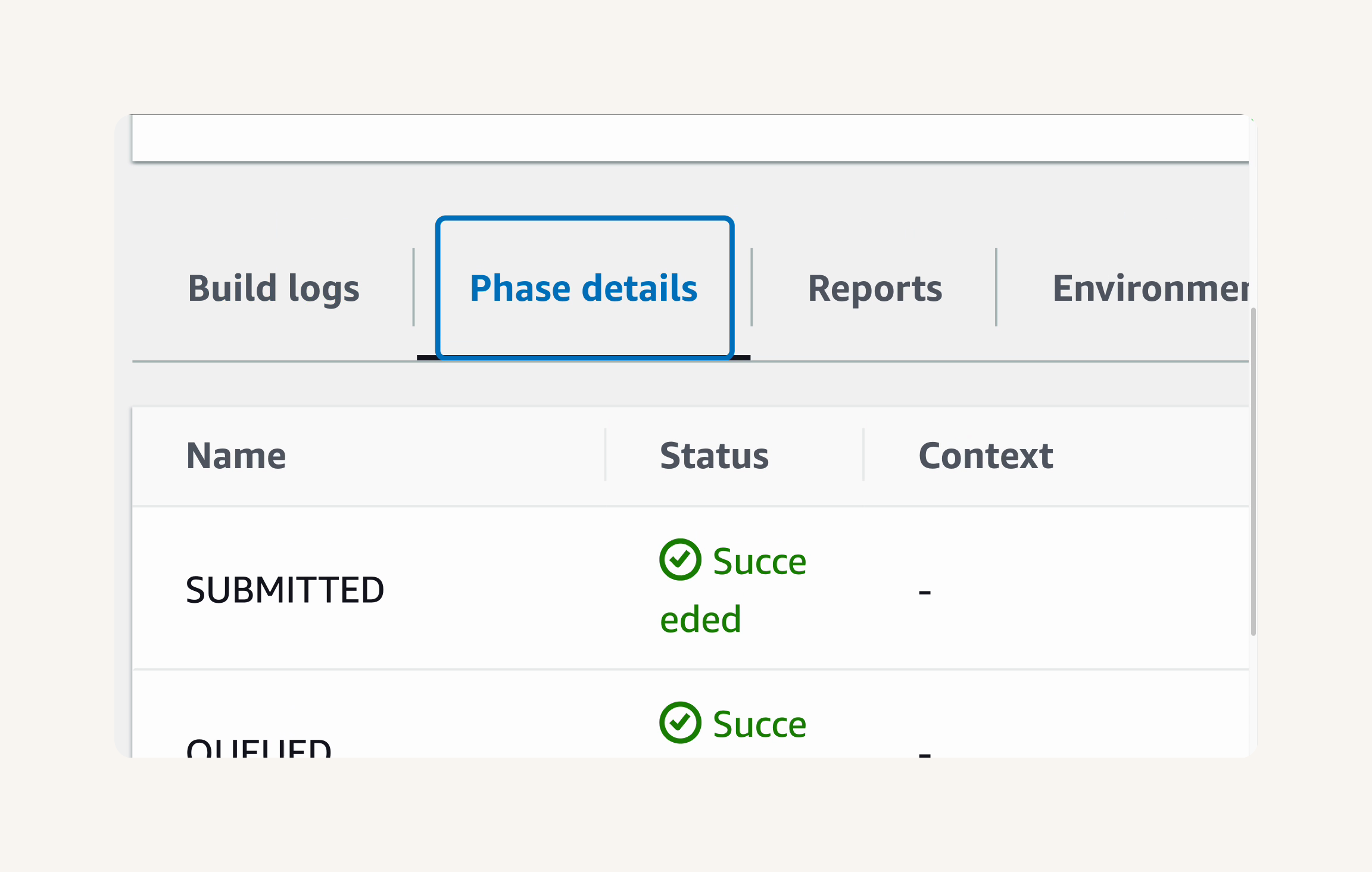

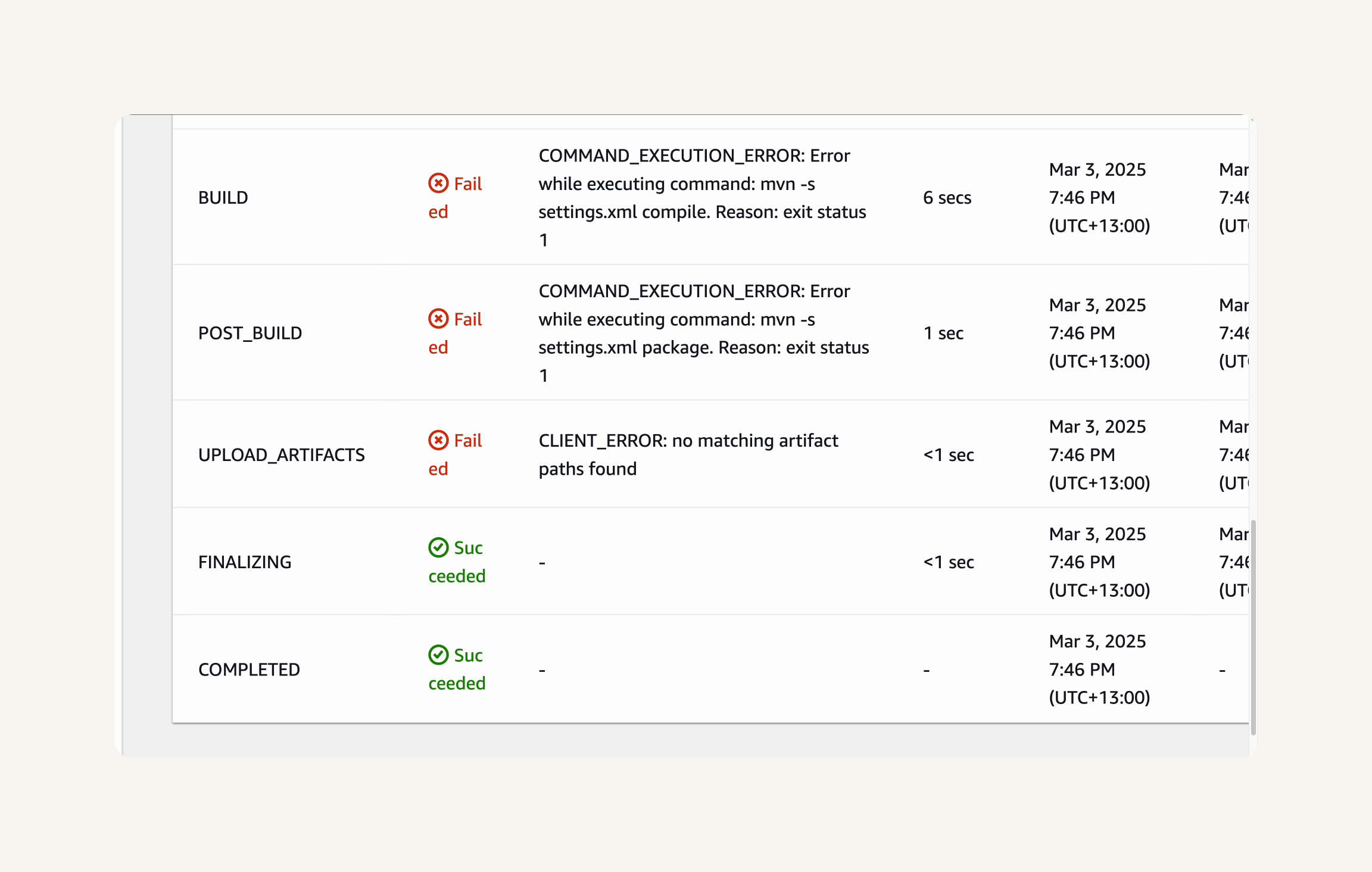

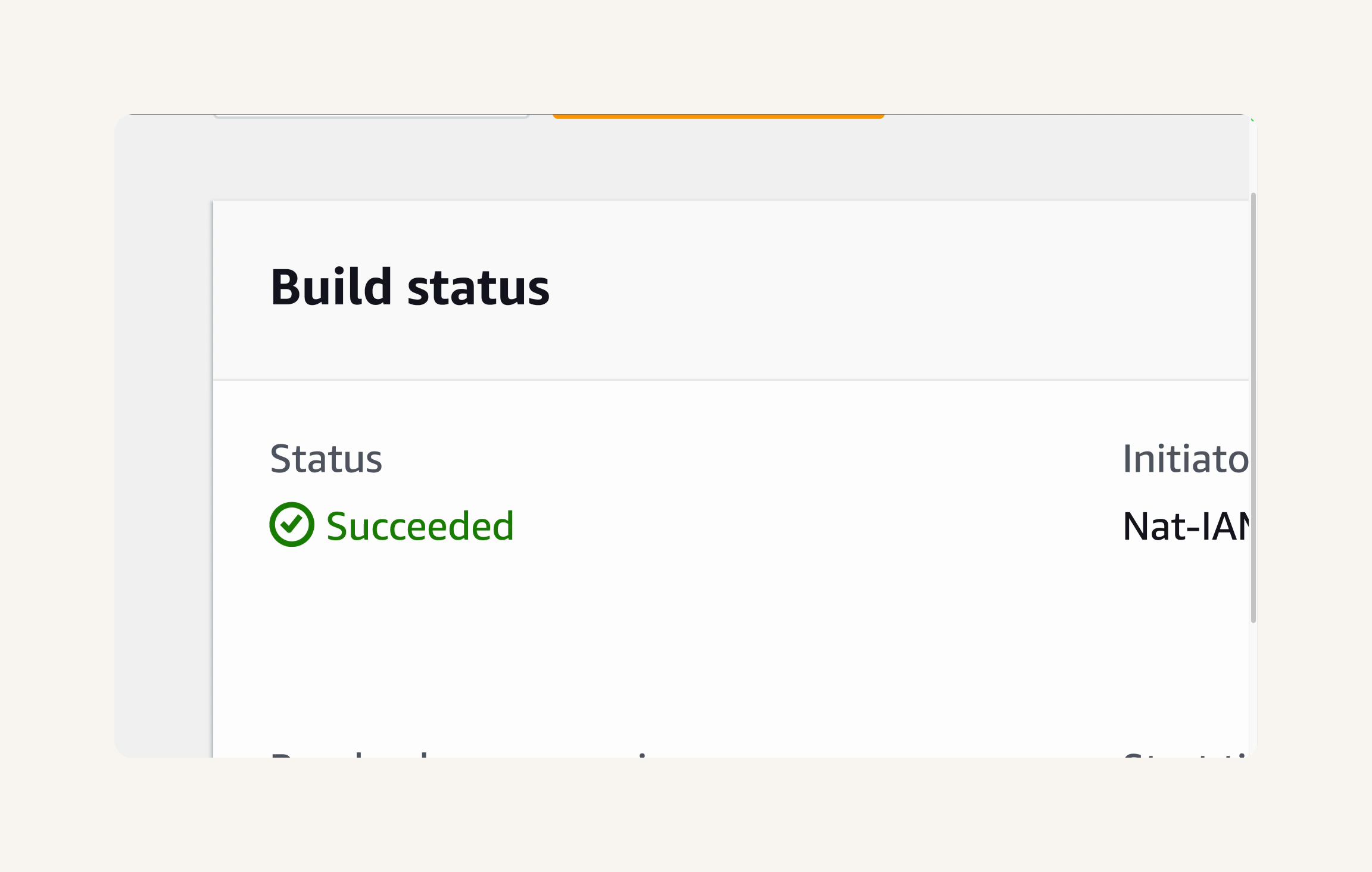

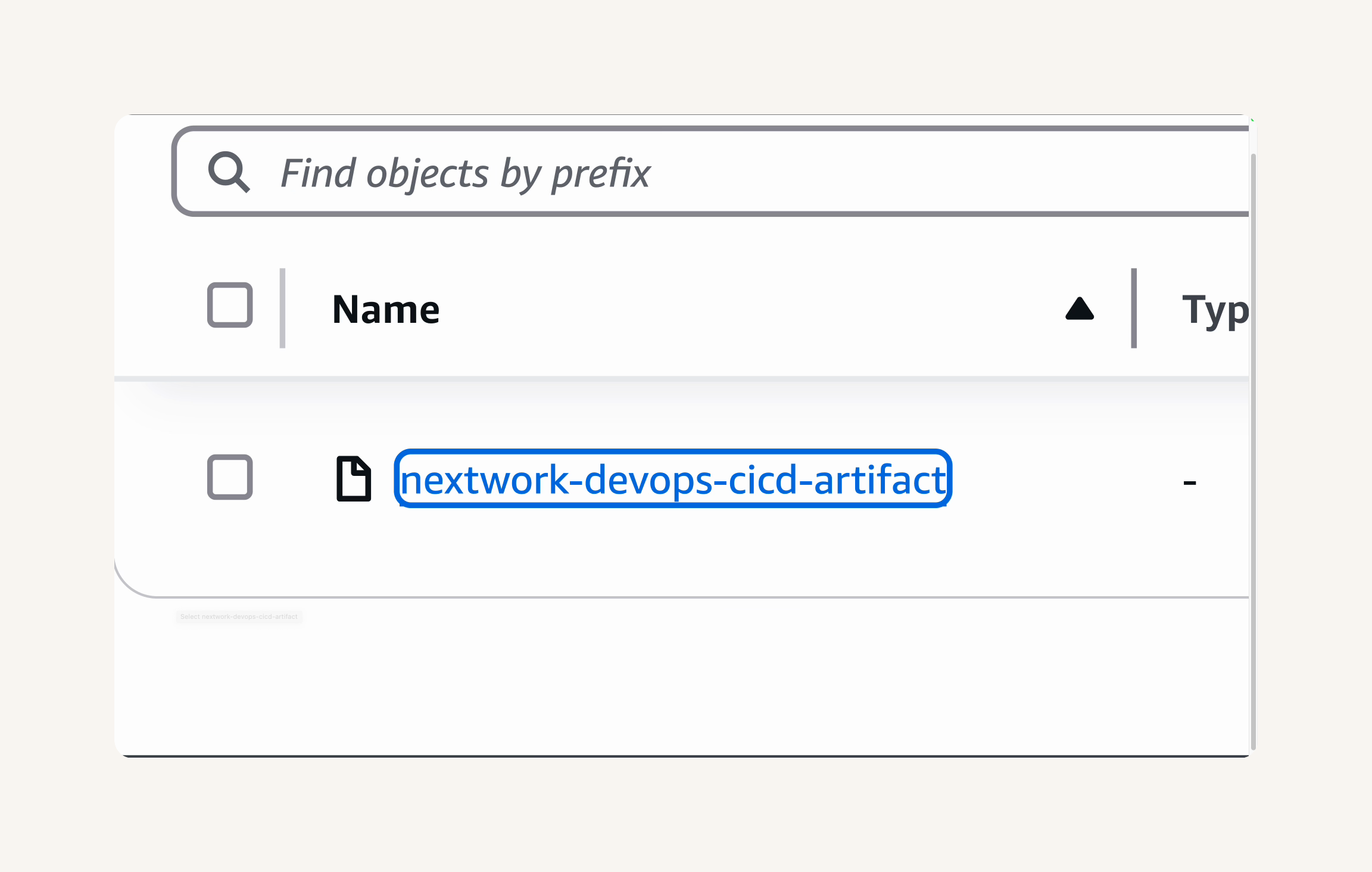

Step-5 Verify Successful Build and Artifacts

-

Summary (Part-4)

-

-

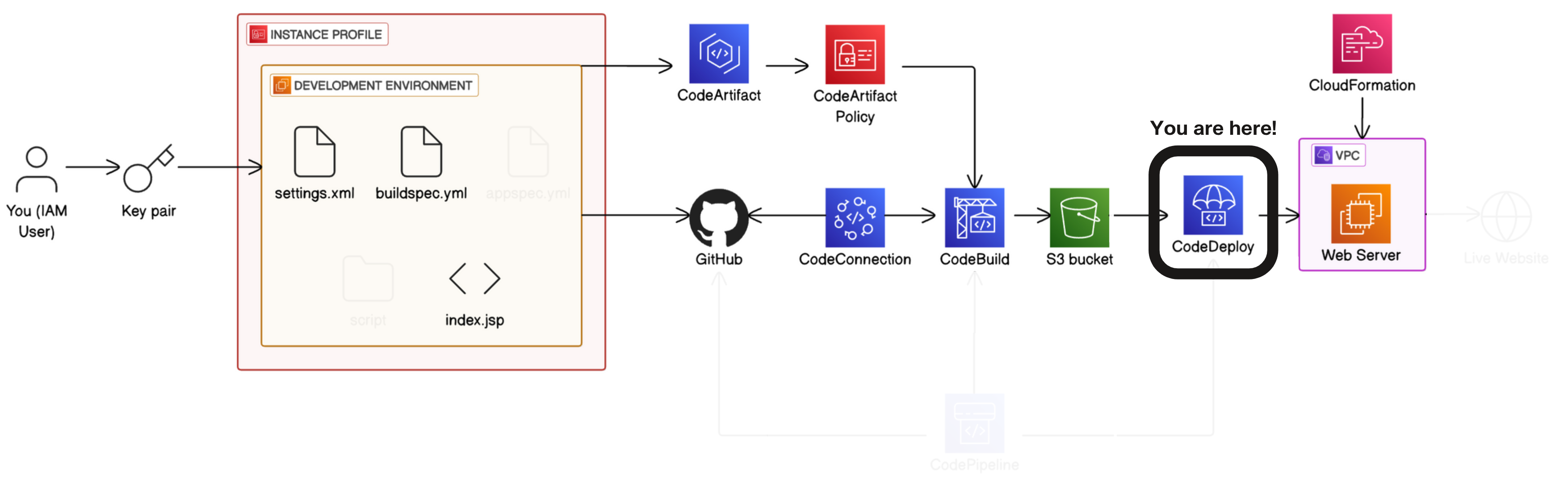

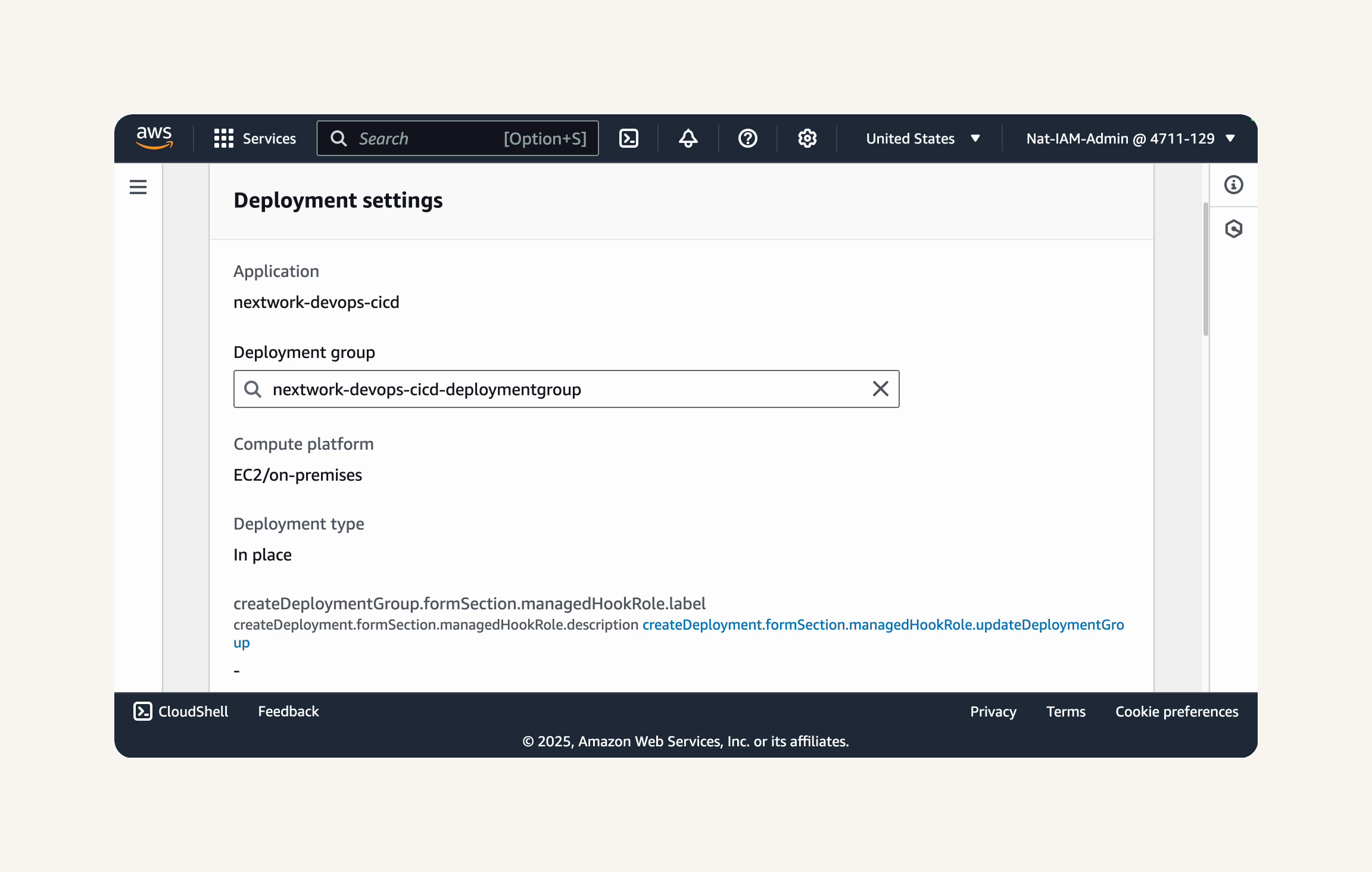

Part-5 Deploy a Web App with CodeDeploy

-

What You'll Need

-

Key Concepts

-

Before We Start

-

Roadmap (Part-5)

-

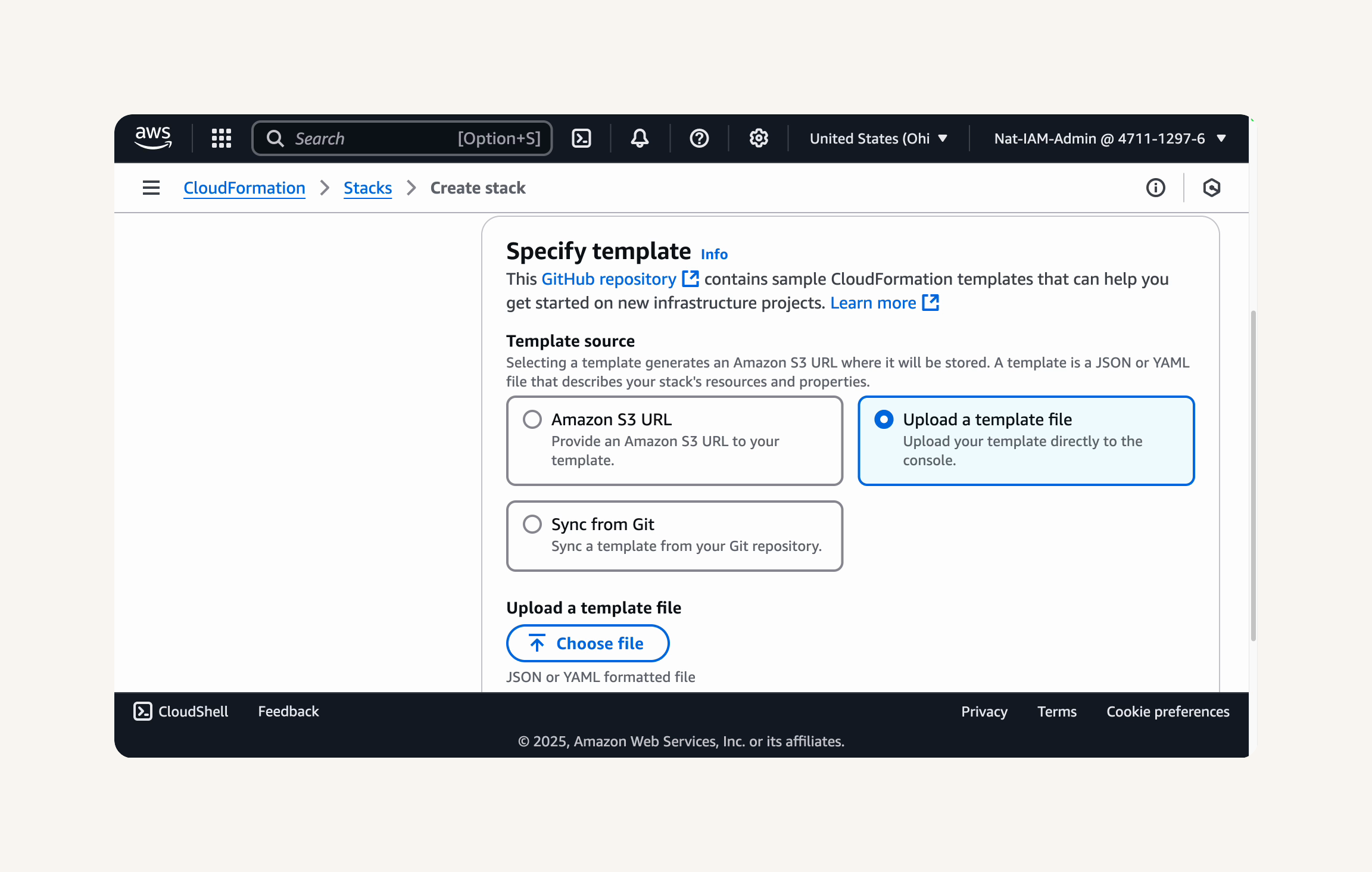

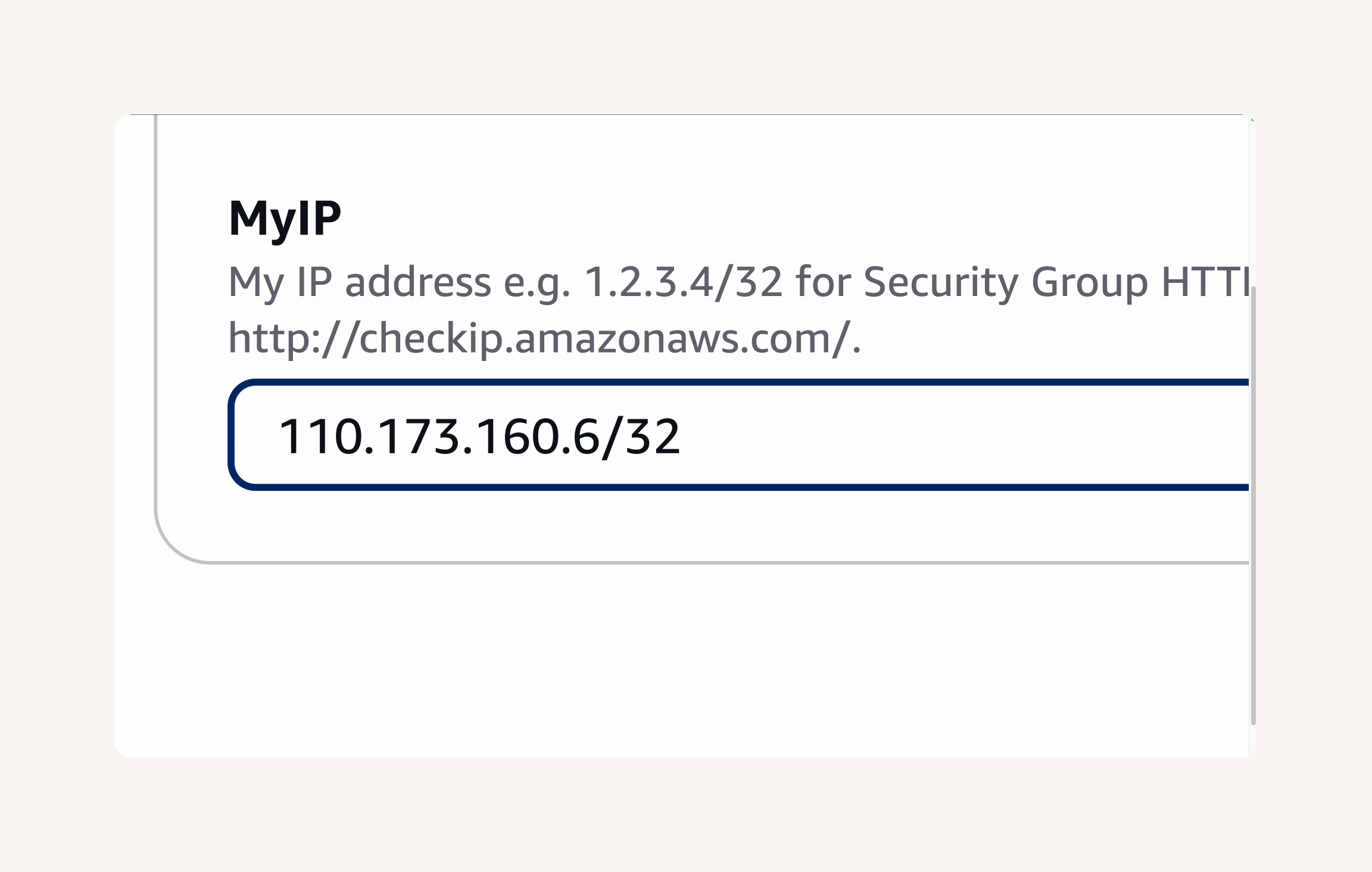

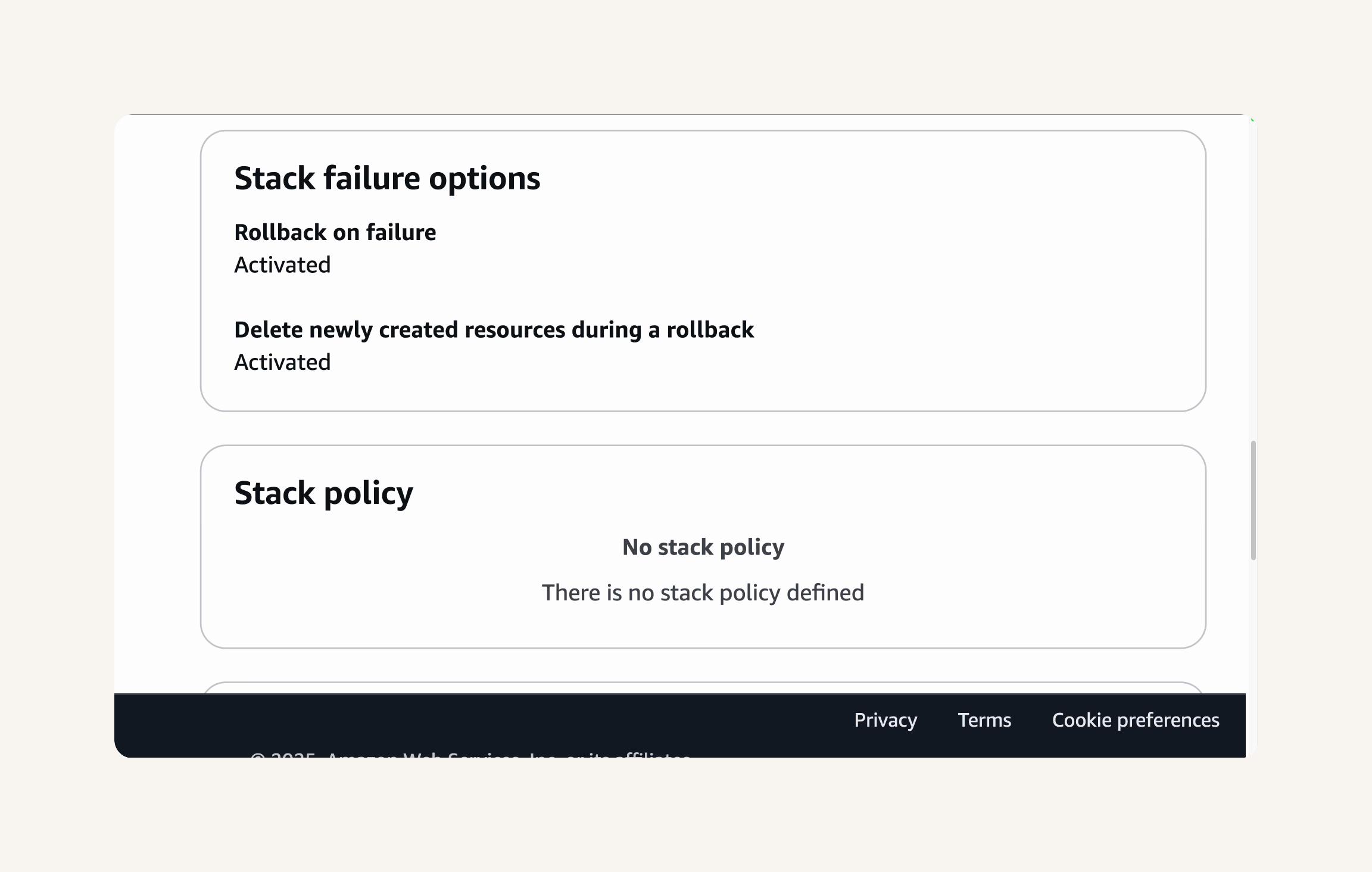

Step-1 Set up deployment architecture with CloudFormation

-

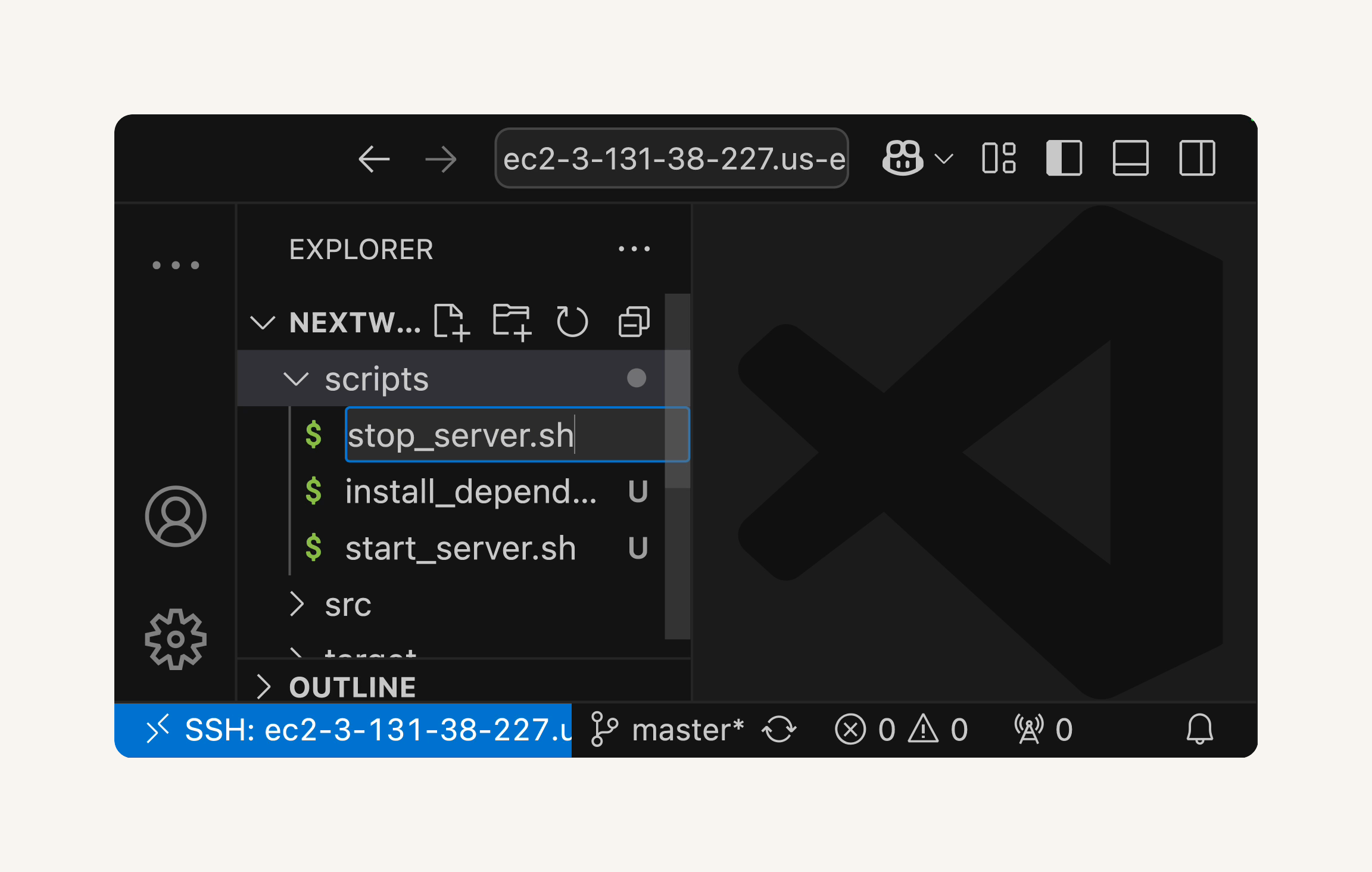

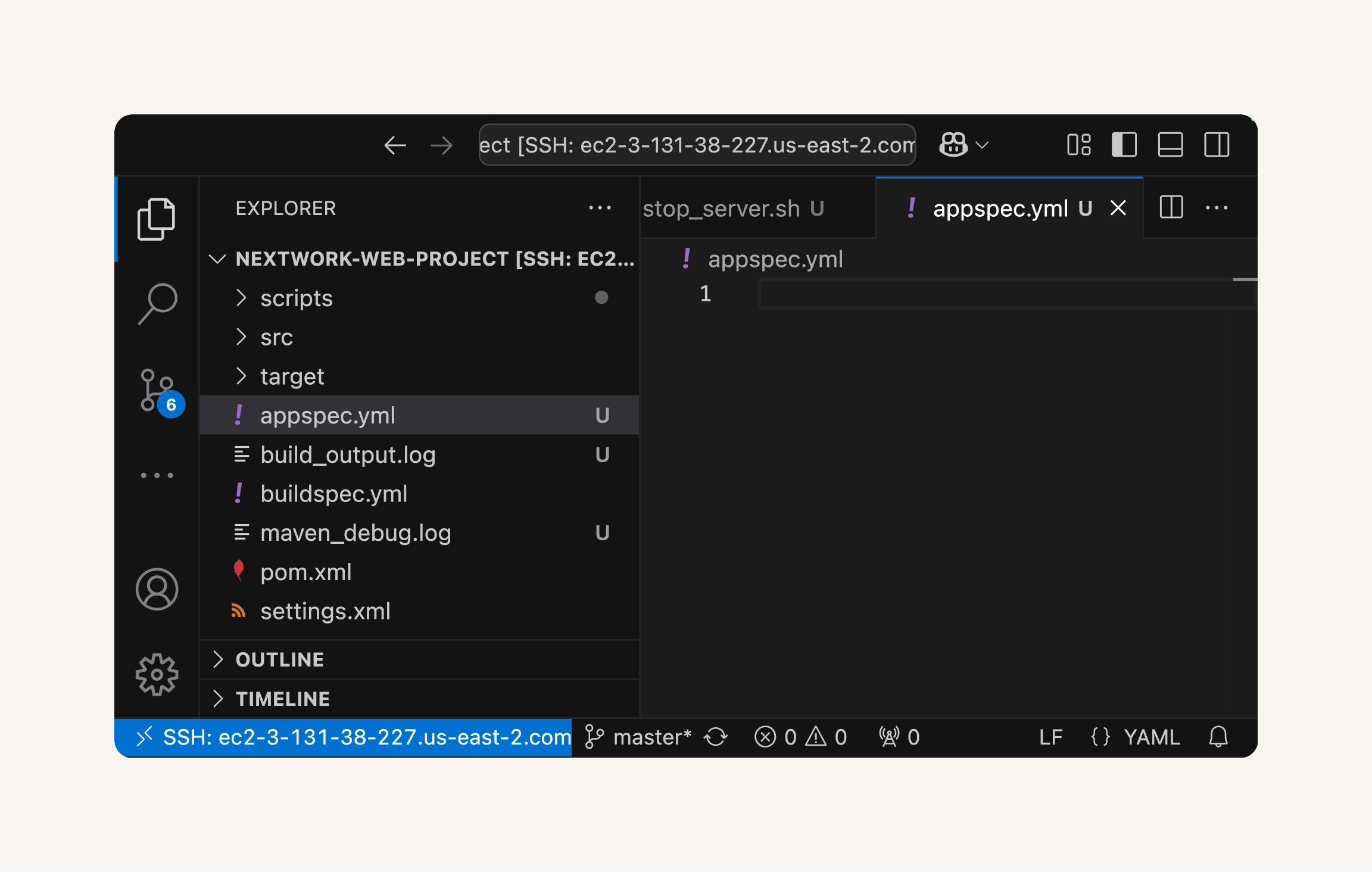

Step-2 Prepare deployment scripts and appspec.yml

-

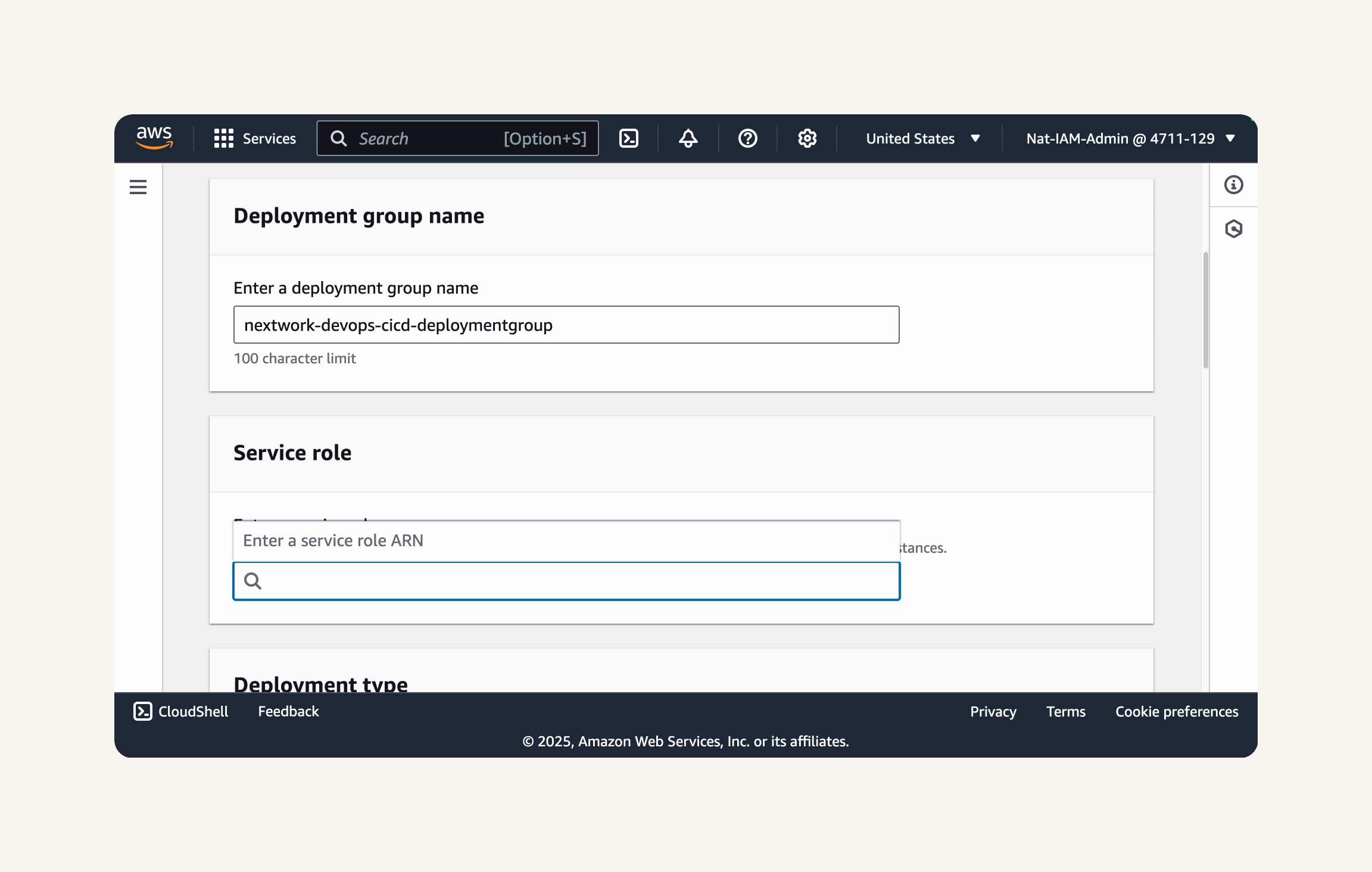

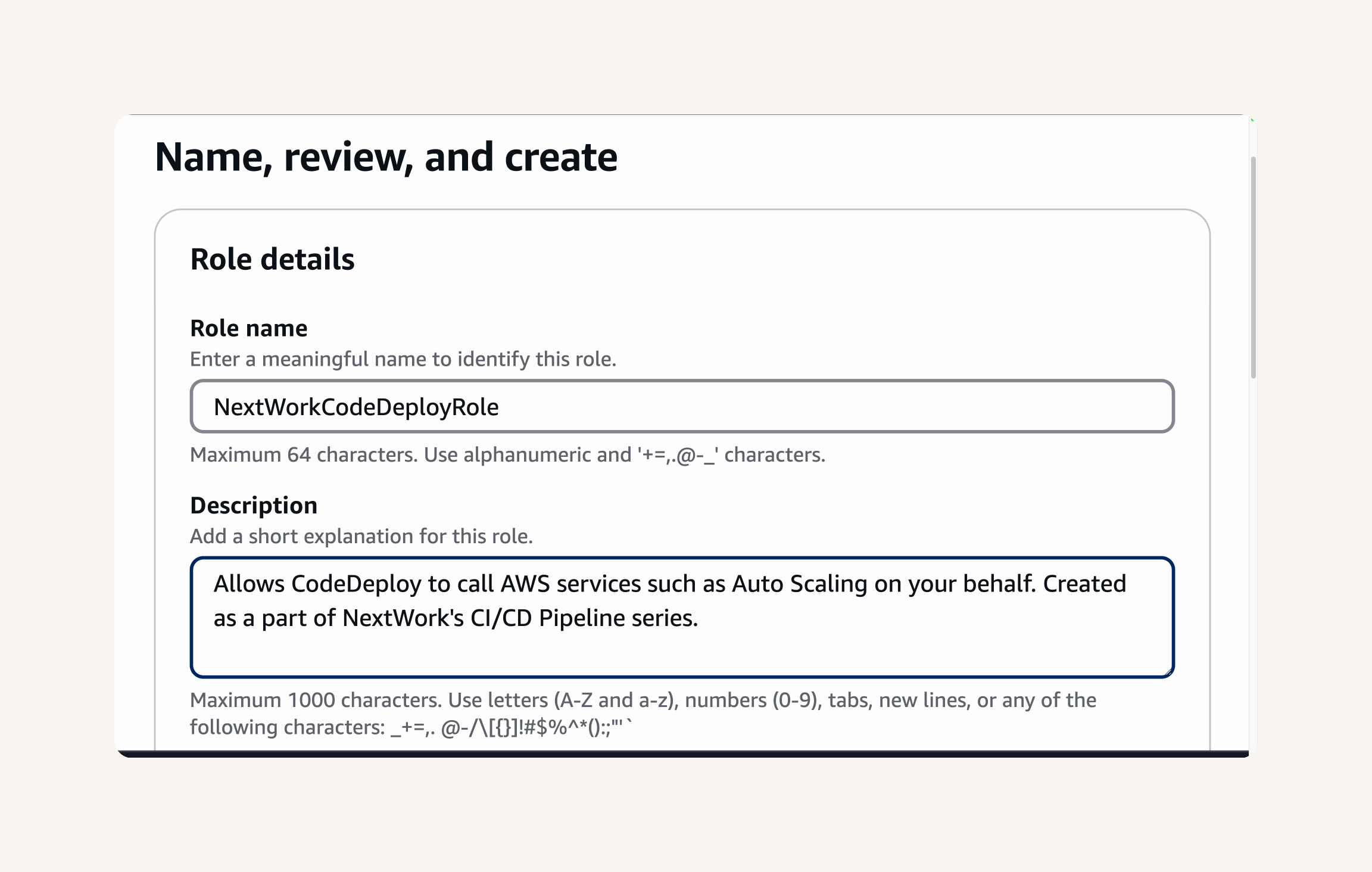

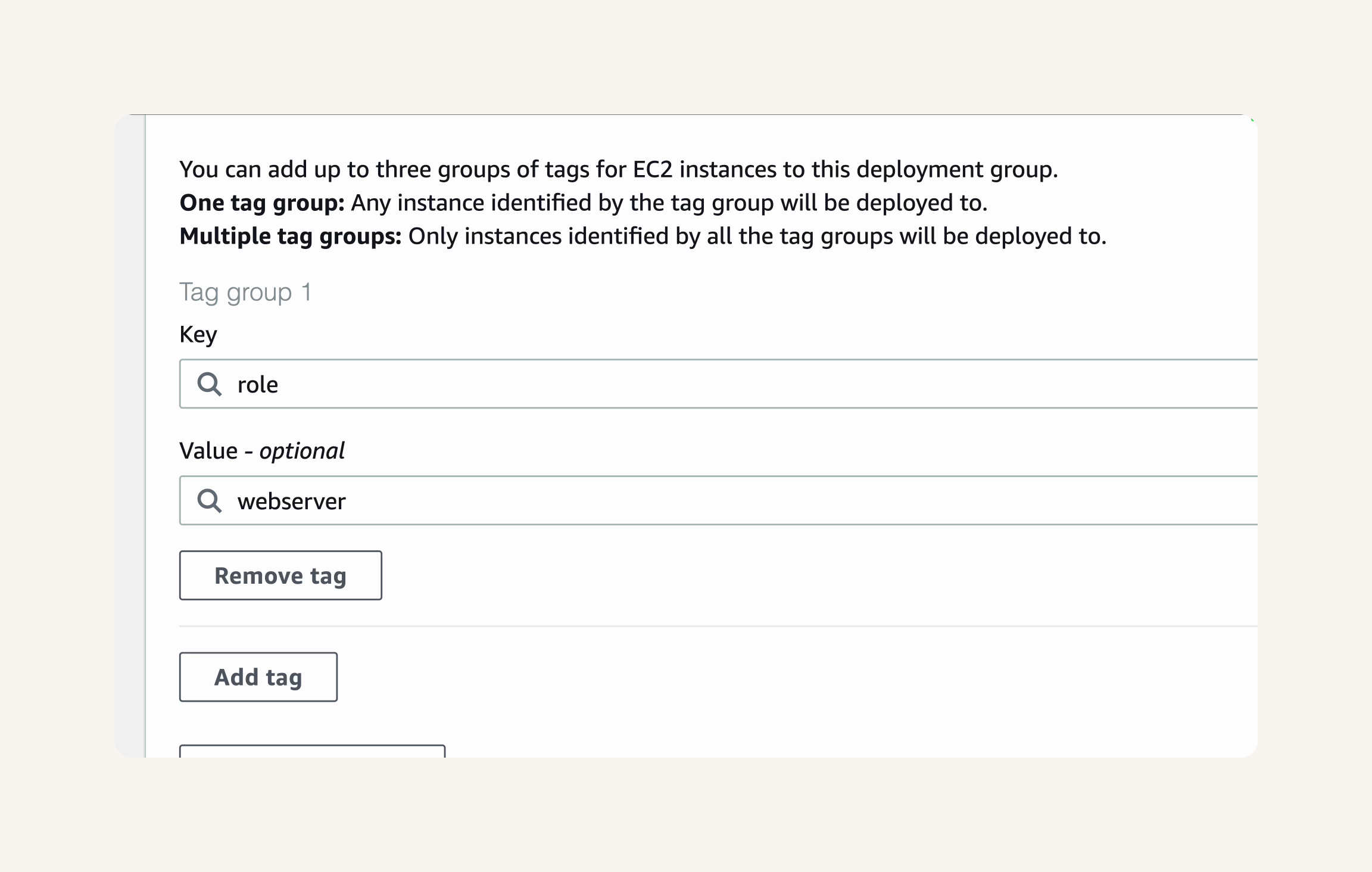

Step-3 Set up a CodeDeploy application and deployment group

-

Step-4 Deploy your web app with CodeDeploy

-

Step-5 (Optional for Experts) Roll back a deployment!

-

Summary (Part-5)

-

-

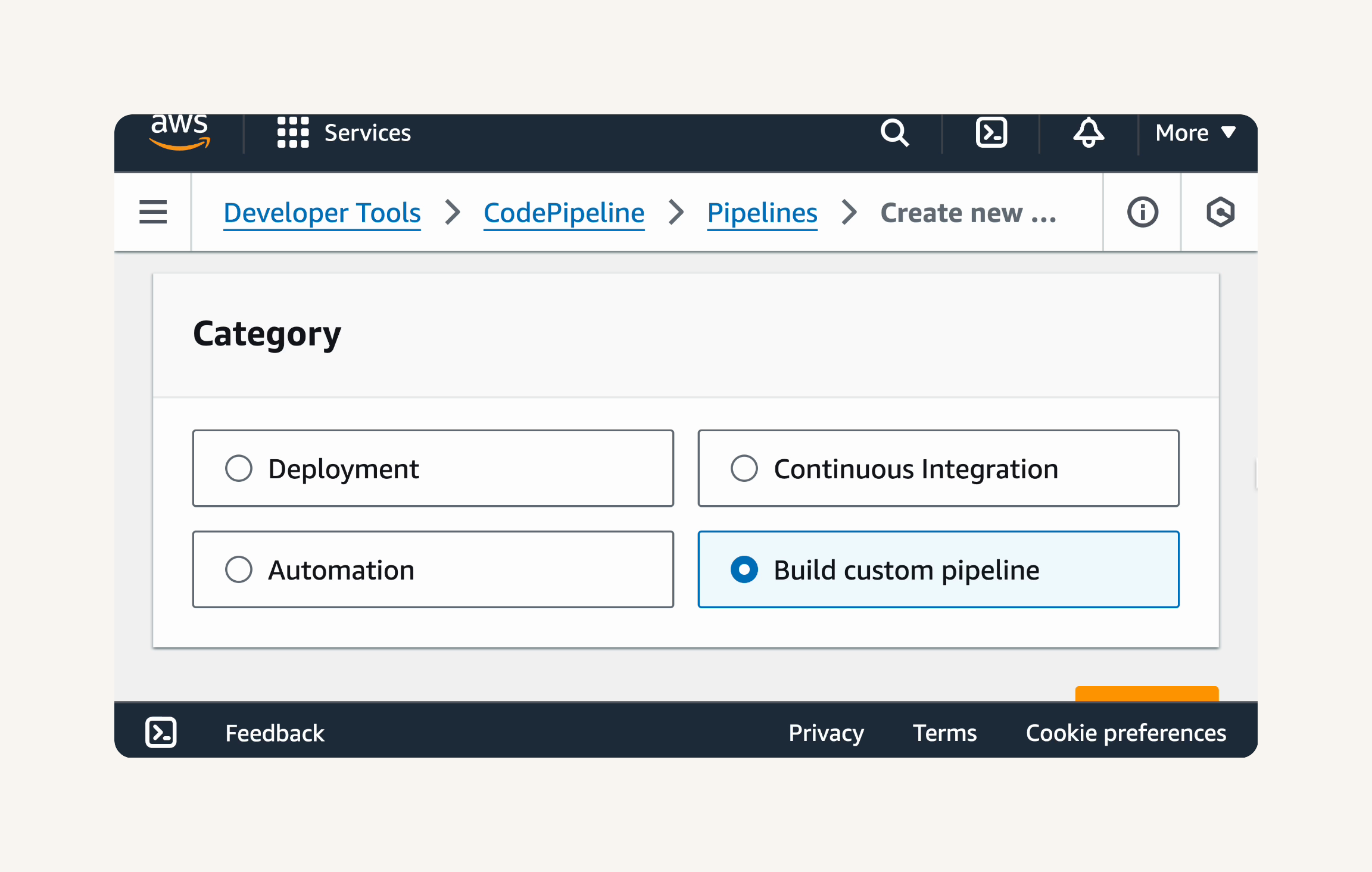

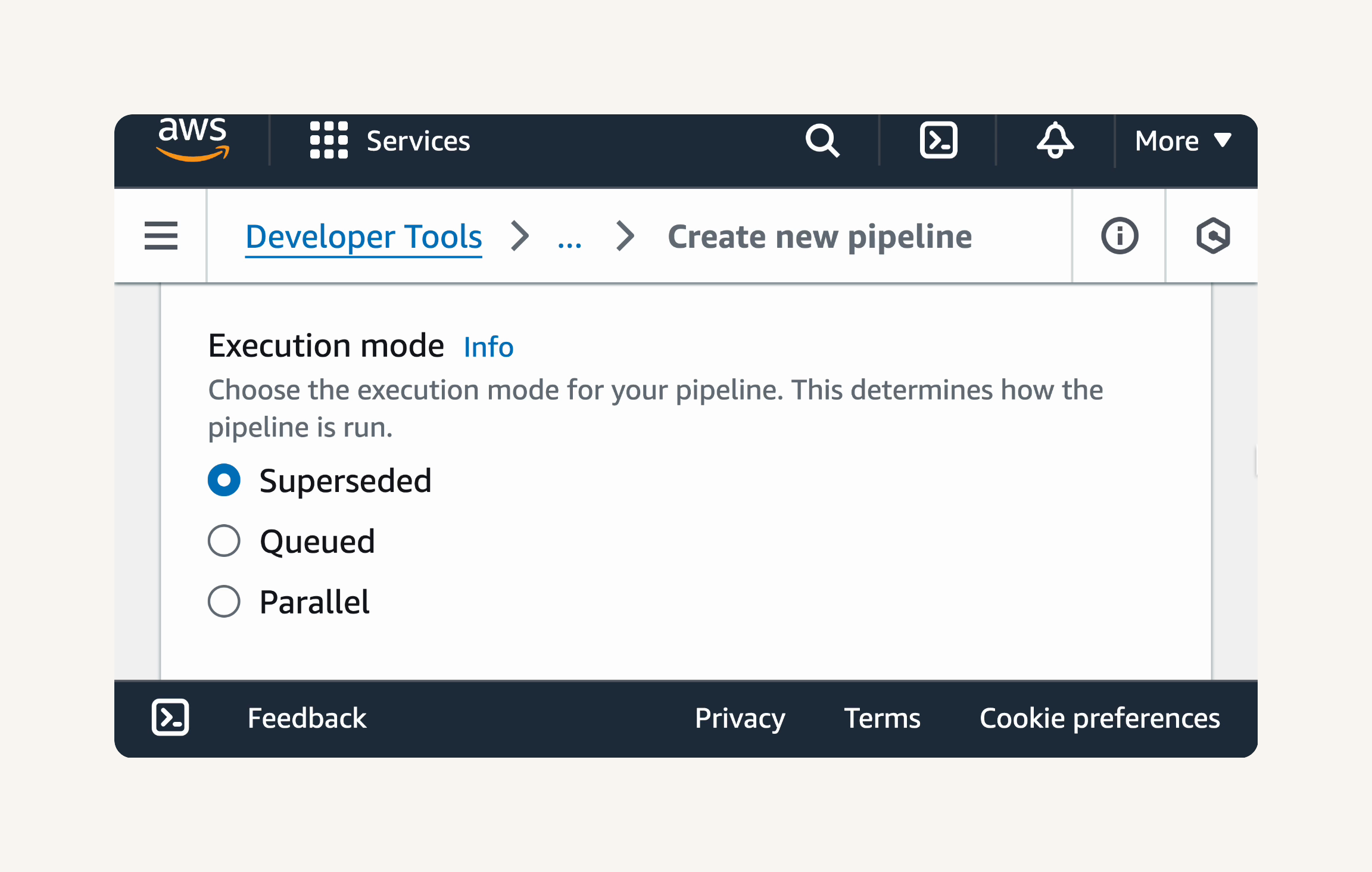

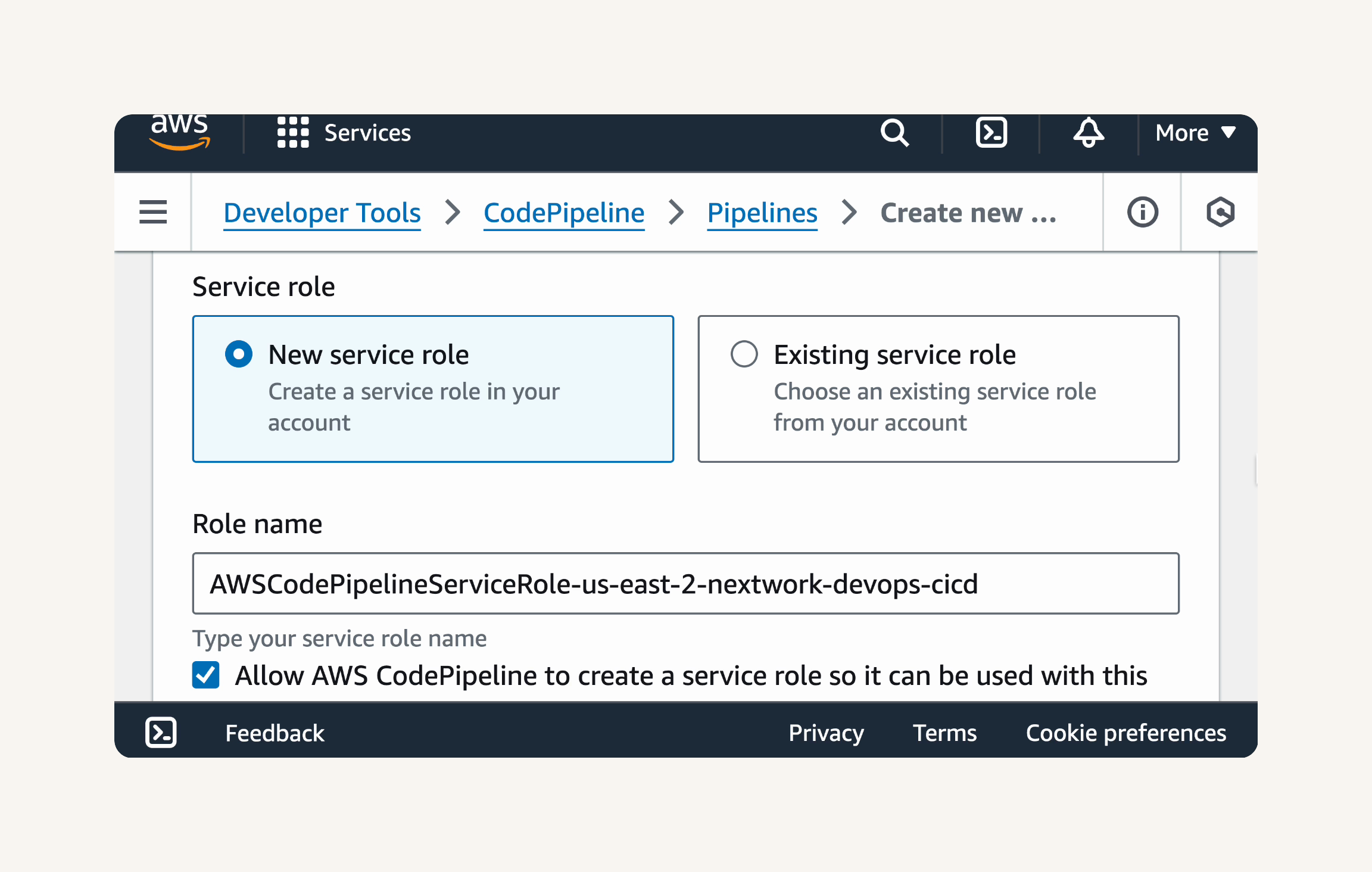

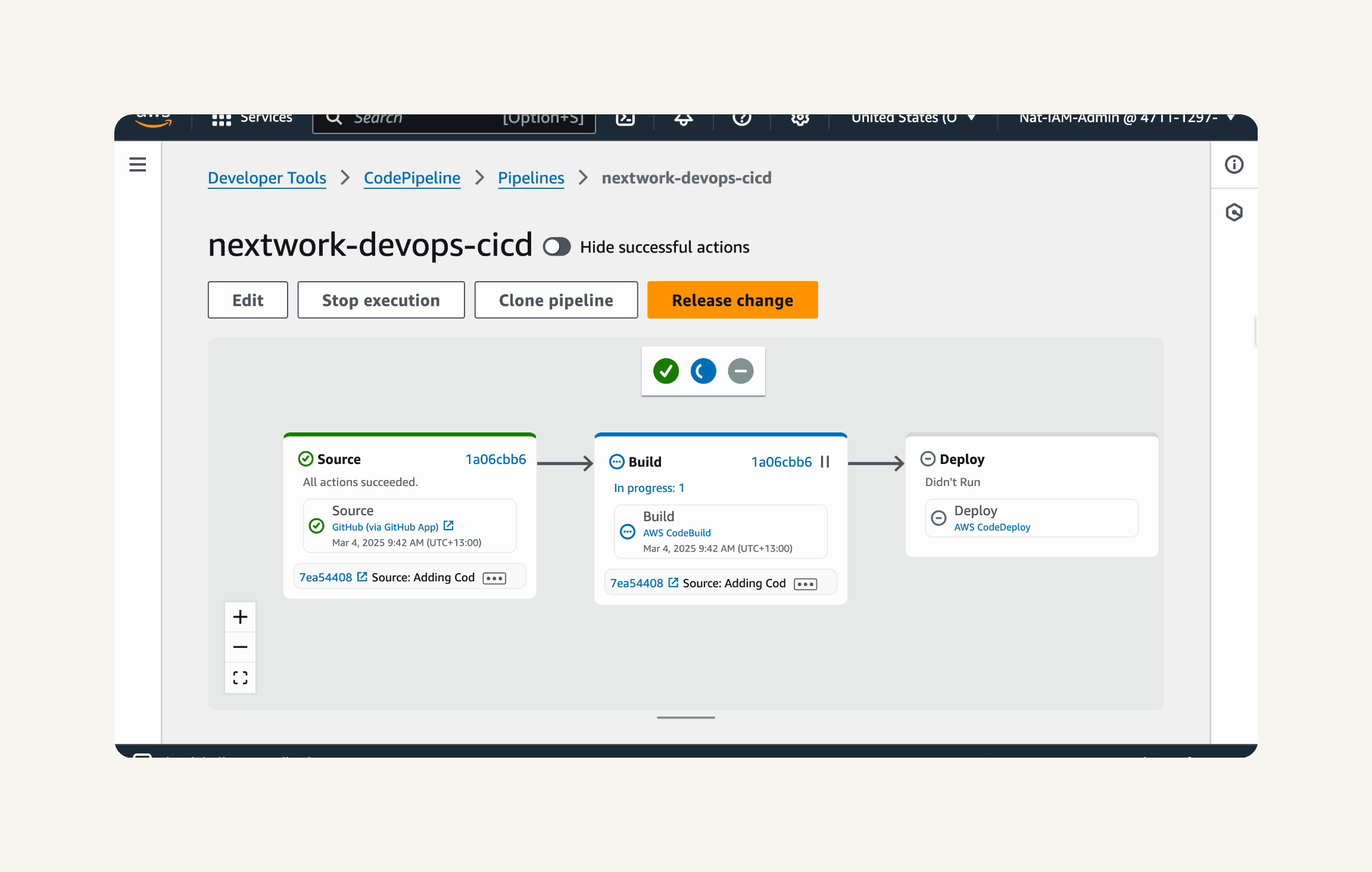

Part-6 Build a CI/CD Pipeline with AWS

-

What You'll Need

-

Key Concepts

-

Before We Start

-

Roadmap (Part-6)

-

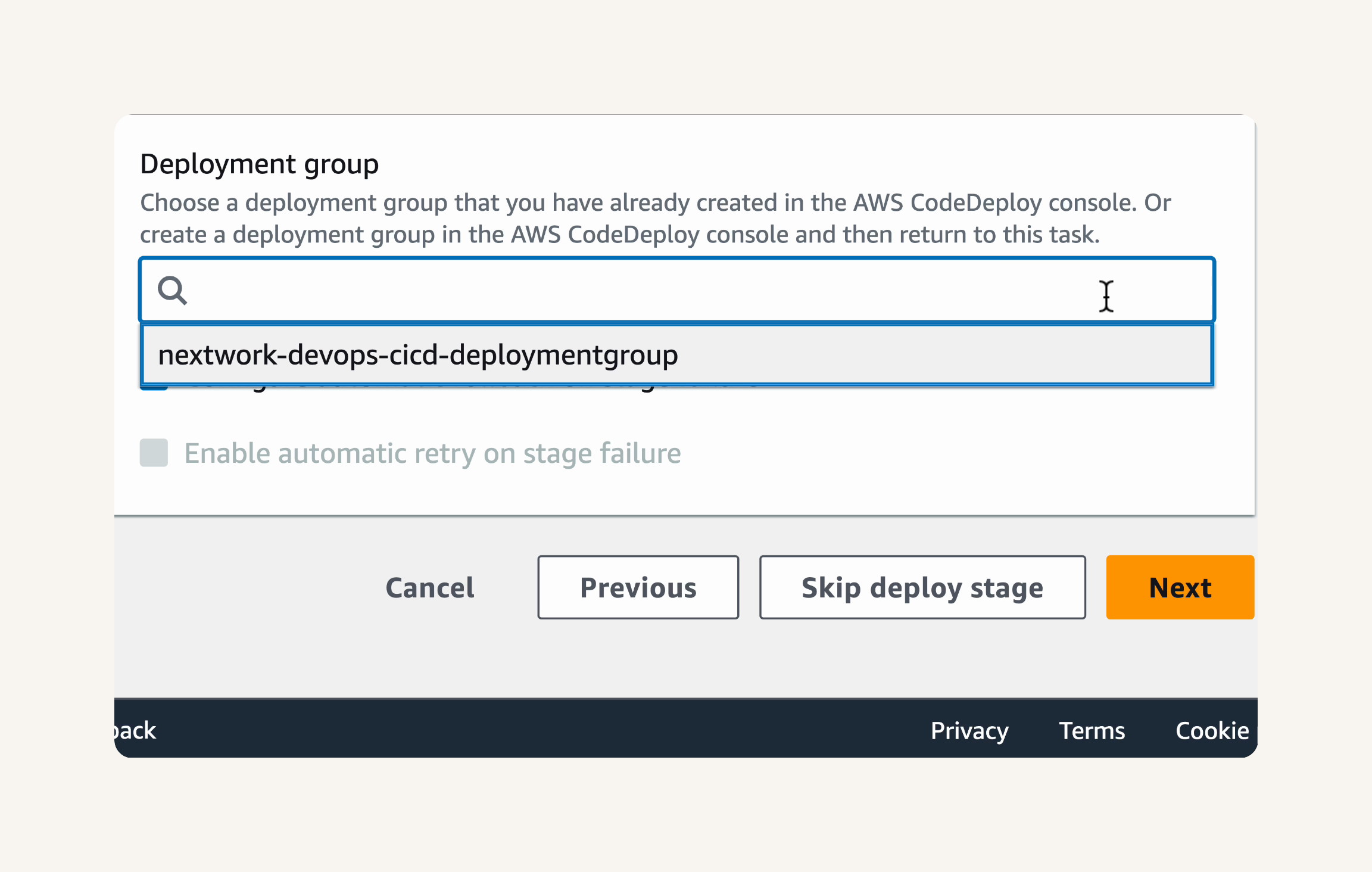

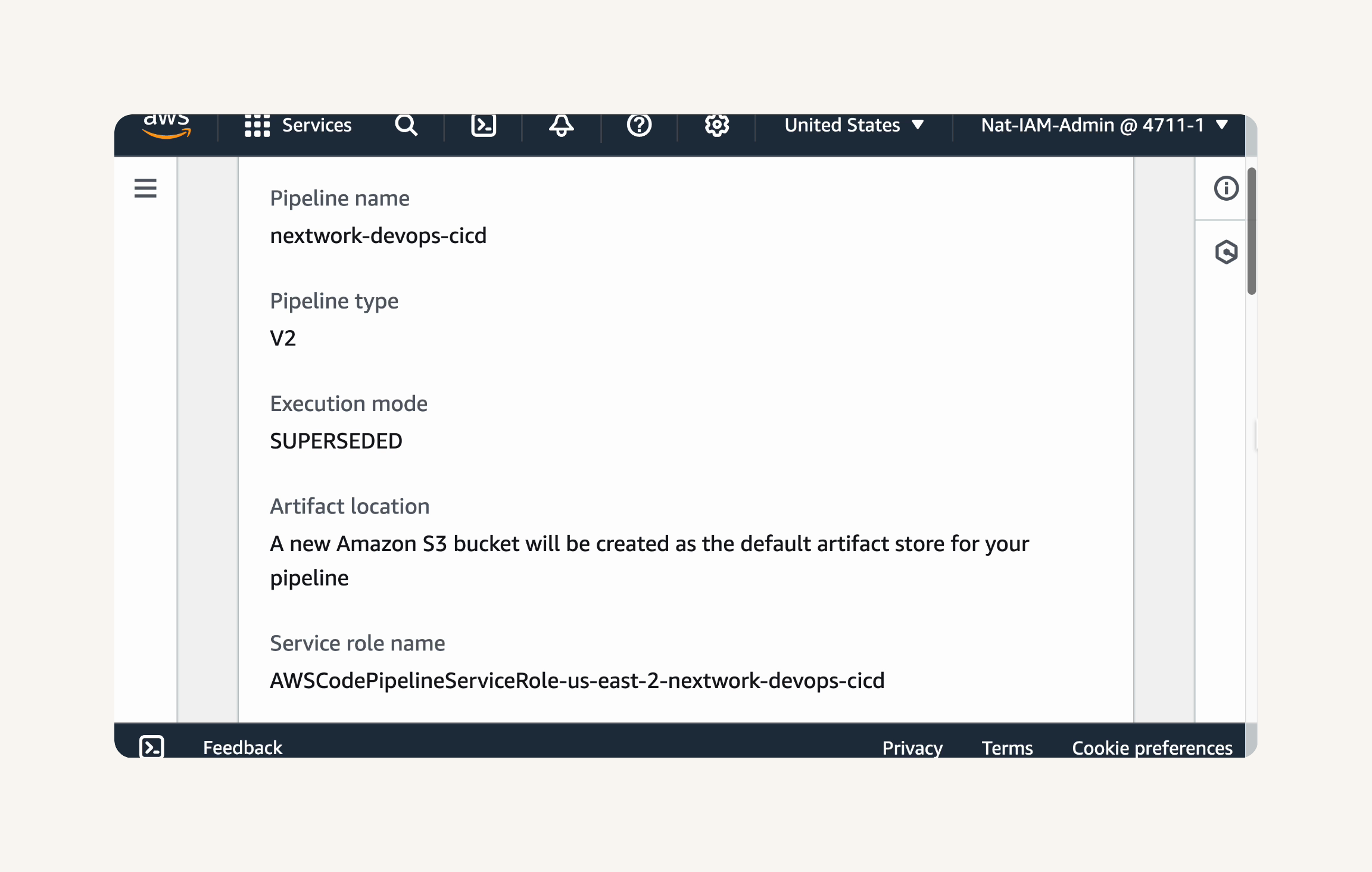

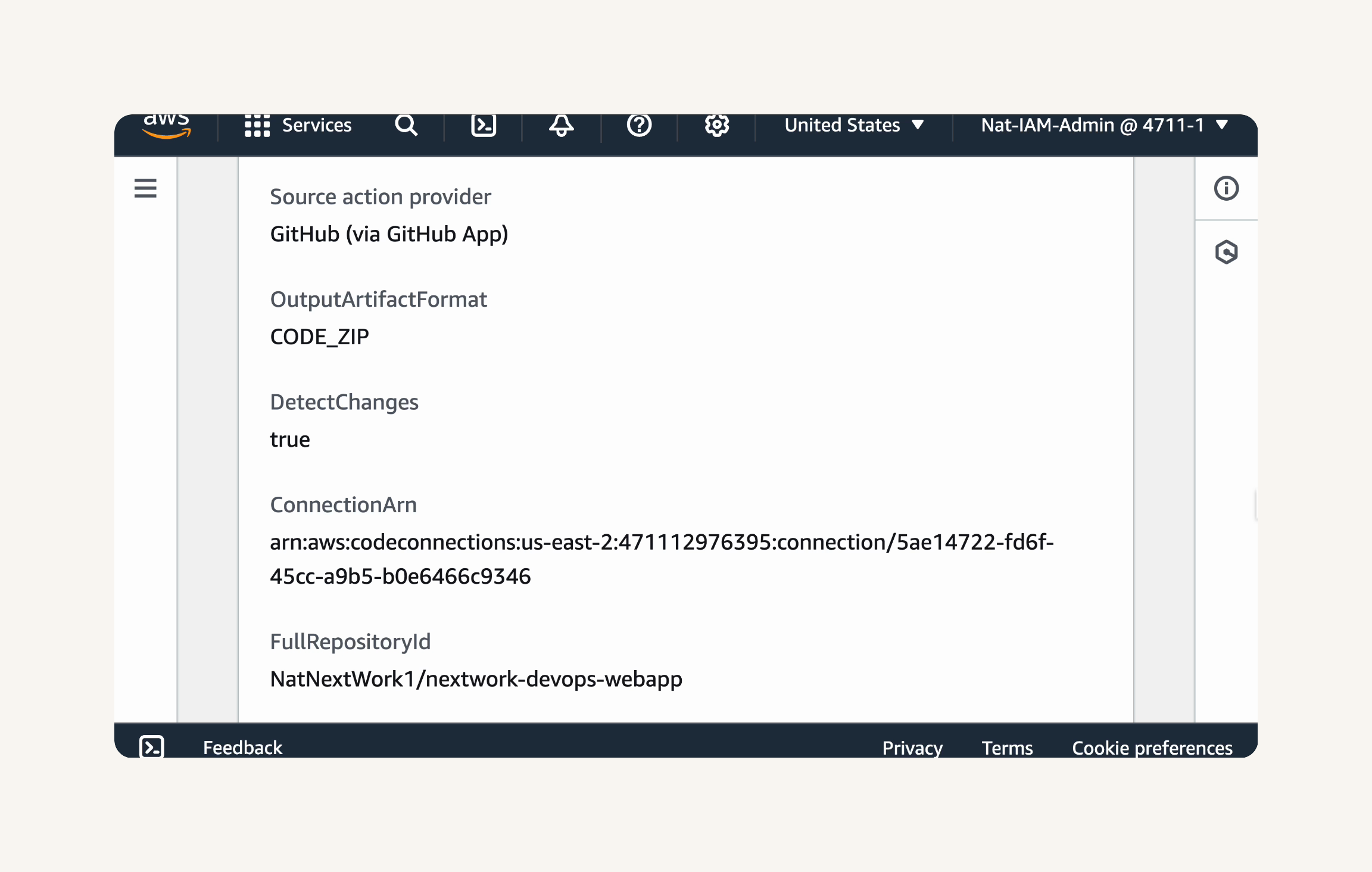

Step-1 Configuring the Source, Build and Deploy Stages

-

Summary (Part-6)

-

-

Clean Up Your Resources

What to Expect

This is a 100% hands-on challenge. You'll be working in the AWS console from day one.

Don't worry if you're new to AWS or DevOps. We've designed this challenge to be beginner-friendly:

- 🤝 Step-by-step instructions guide you through each project.
- 🎥 Live demos of every project on YouTube.
- 📖 Clear explanations of DevOps concepts as we go.
- 📝 Line-by-line explanations of any code (no coding experience required).
- 🔍 Troubleshooting tips to help you solve common errors.
- 🔥 A supportive community to answer your questions and help you out.

Roadmap (Overall Project)

- Set Up a Web App in the Cloud
- Connect a GitHub Repo with AWS
- Secure Packages with CodeArtifact
- Continuous Integration with CodeBuild
- Deploy a Web App with CodeDeploy
- Build a CI/CD Pipeline with AWS

Here are the tools and technologies used in this project:

- AWS (Amazon Web Services)
- Amazon EC2
- VS Code (Visual Studio Code)
- Maven (Apache Maven)
- Java (Amazon Corretto 8)
- Git
- GitHub
- AWS CodeArtifact
- AWS CodeBuild
- AWS CodeDeploy
- AWS CodePipeline
- Amazon S3
- AWS IAM
- AWS CloudFormation

Helpful background knowledge for this challenge:

- AWS Cloud - basic knowledge

- Version control

- Software development lifecycle

- Networking - basic knowledge

- Linux bash scripting

What is DevOps?

DevOps is all about getting teams to build and deploy code faster without sacrificing reliability. Think of it as a blend of automating tasks, making sure apps run consistently everywhere, and smart monitoring to catch issues early.

Before DevOps, development and operations teams worked separately with minimal communication. Developers would build software in their own environment, then hand it off to operations to deploy it to users.

But operations couldn't immediately deploy the code. They needed to carefully test it first, make sure it wouldn't break existing systems, and prepare the infrastructure - all without knowing how the code was written.

This created delays, misunderstandings, and frustration on both sides: developers wanted to ship features quickly, while operations wanted to make sure the code was stable before it was deployed.

DevOps emerged to address this conflict. It is a set of practices that combines development (writing code) and operations (deploying and maintaining code) to shorten development cycles and deliver reliable software.

💡 If DevOps is a set of practices, what is a DevOps engineer?

DevOps engineers implement DevOps practices in their organization. They:

- Build automated pipelines (e.g. using AWS CodePipeline, Jenkins, GitHub Actions) that test, package, and deploy software, so releases become faster and more reliable.
- Containerize applications with tools like Docker and run them at scale with orchestrators like Kubernetes.
- Turn cloud infrastructure into code (e.g. AWS CloudFormation, Terraform), so teams can create and manage resources more efficiently than by configuring them manually.

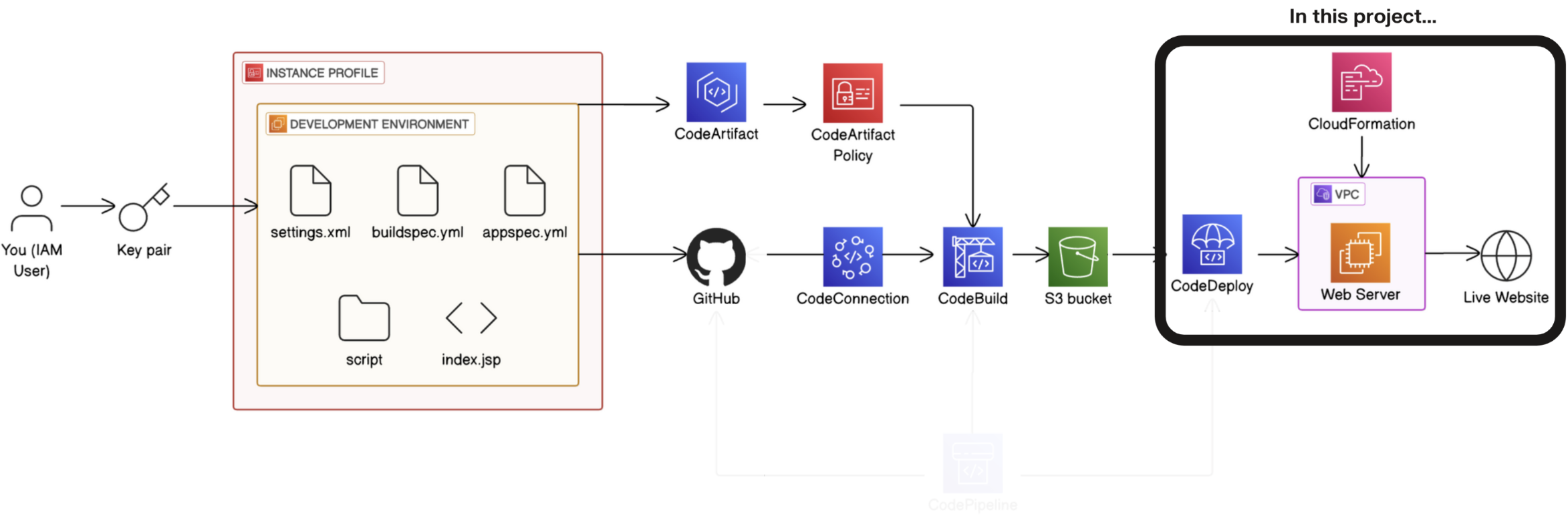

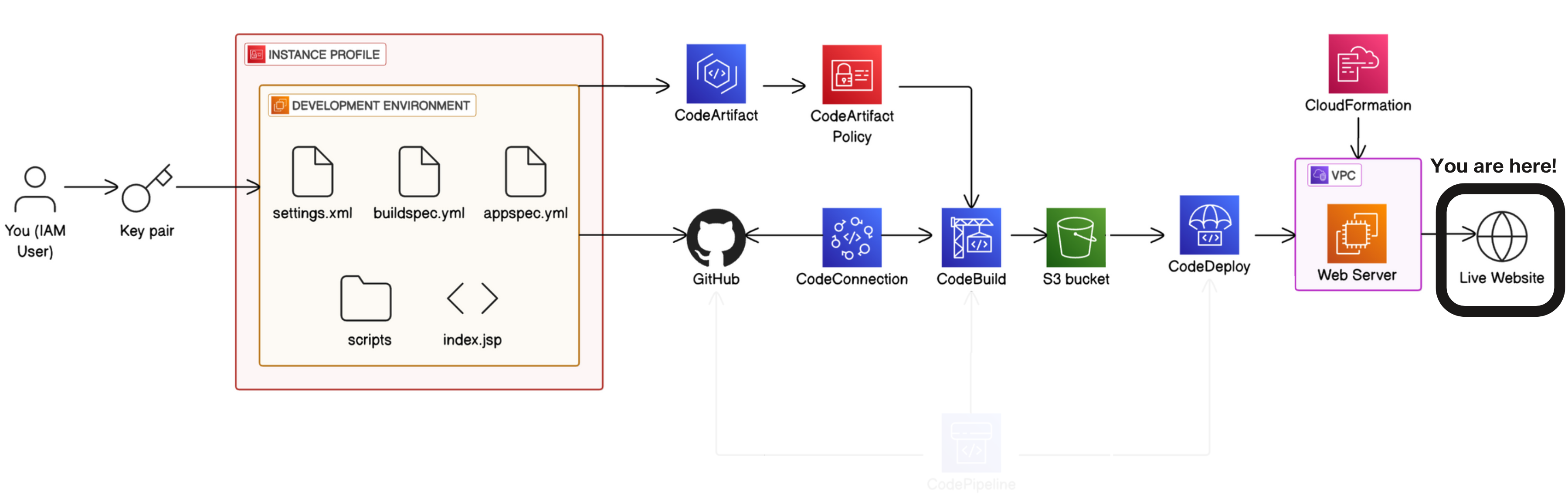

What is CI/CD?

At the heart of DevOps is CI/CD - Continuous Integration and Continuous Delivery/Deployment.

CI/CD helps teams shorten the development life cycle by releasing small, frequent code changes, each of which passes a series of automated tests before it is deployed.

The goal of CI/CD is to make the flow from code changes to a live application as efficient and reliable as possible. To do this, automations are set up across the software development and deployment process.
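The following minimal shell sketch makes the CI/CD idea concrete. The stage functions (`build_stage`, `test_stage`, `deploy_stage`) and `run_pipeline` are hypothetical placeholders, not part of any AWS tool. They only illustrate the core rule: each stage runs only if the previous one succeeded, so a failing test stops a bad change from reaching users.

```bash
# Hypothetical stage functions: placeholders for real build, test and deploy work.
build_stage()  { echo "build: ok"; }
test_stage()   { echo "test: ok"; }
deploy_stage() { echo "deploy: ok"; }

# Run the named stages in order and stop at the first one that fails,
# which is the basic contract of a CI/CD pipeline.
run_pipeline() {
  local stage
  for stage in "$@"; do
    if ! "$stage"; then
      echo "pipeline stopped: $stage failed" >&2
      return 1
    fi
  done
  echo "pipeline succeeded"
}

# Usage:
# run_pipeline build_stage test_stage deploy_stage
```

In the pipeline you'll build in Part-6, AWS CodePipeline roughly plays the role of `run_pipeline`, while CodeBuild and CodeDeploy do the work inside the stages.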

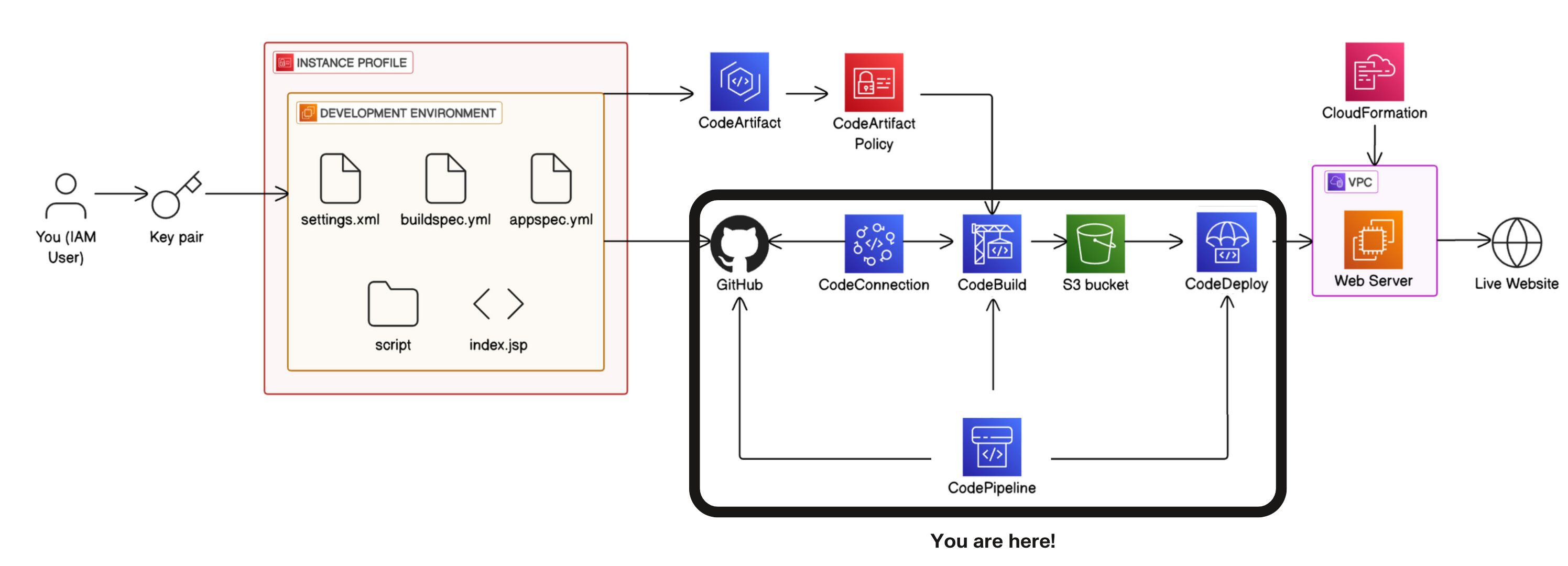


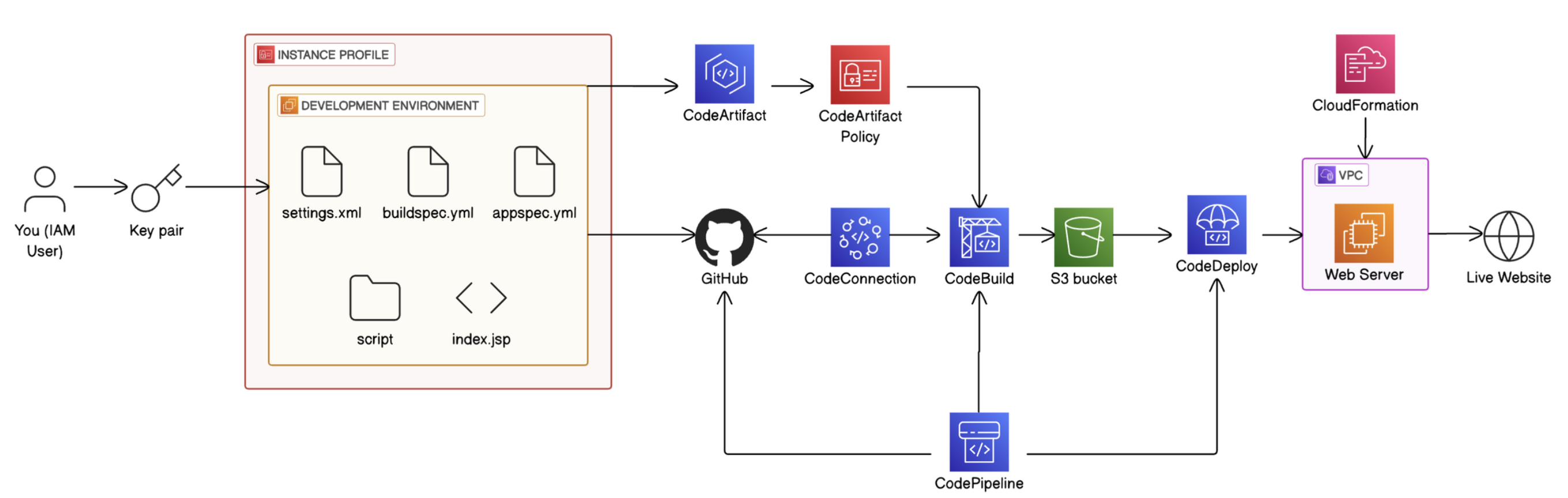

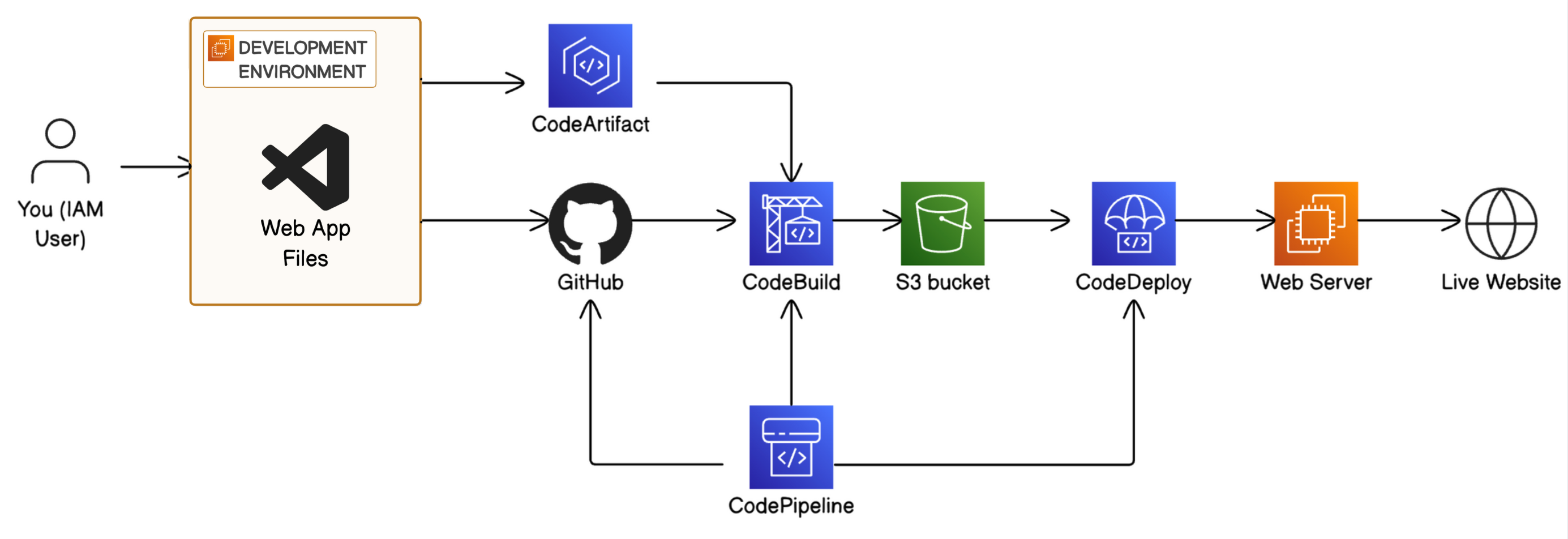

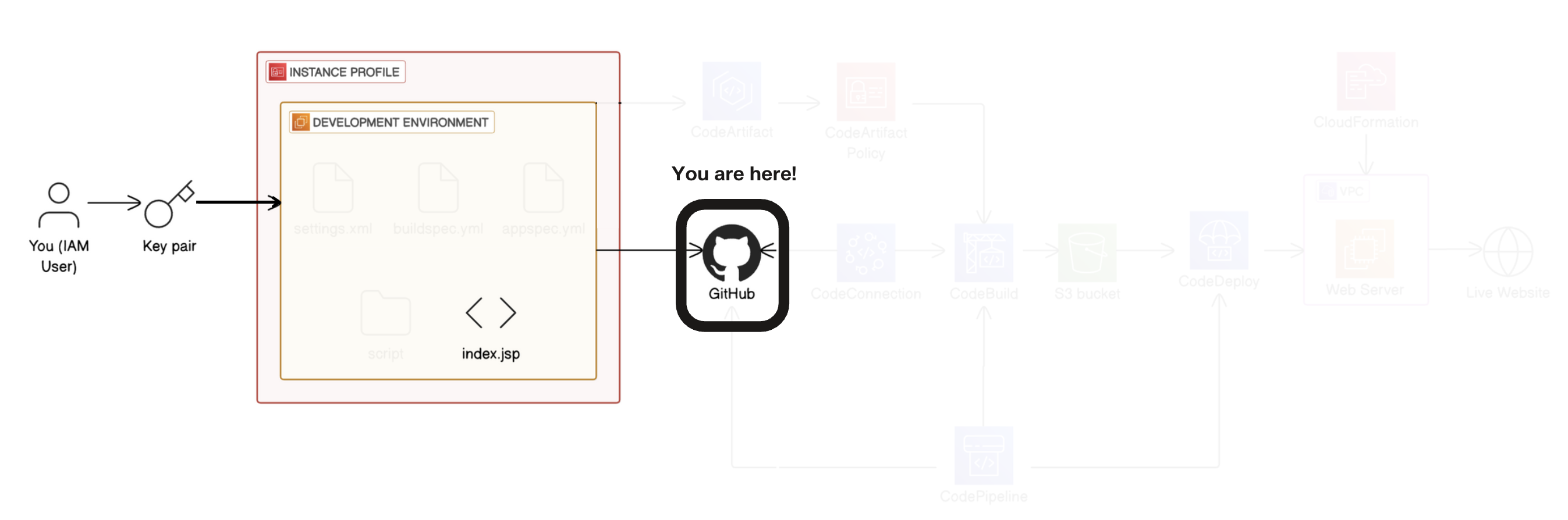

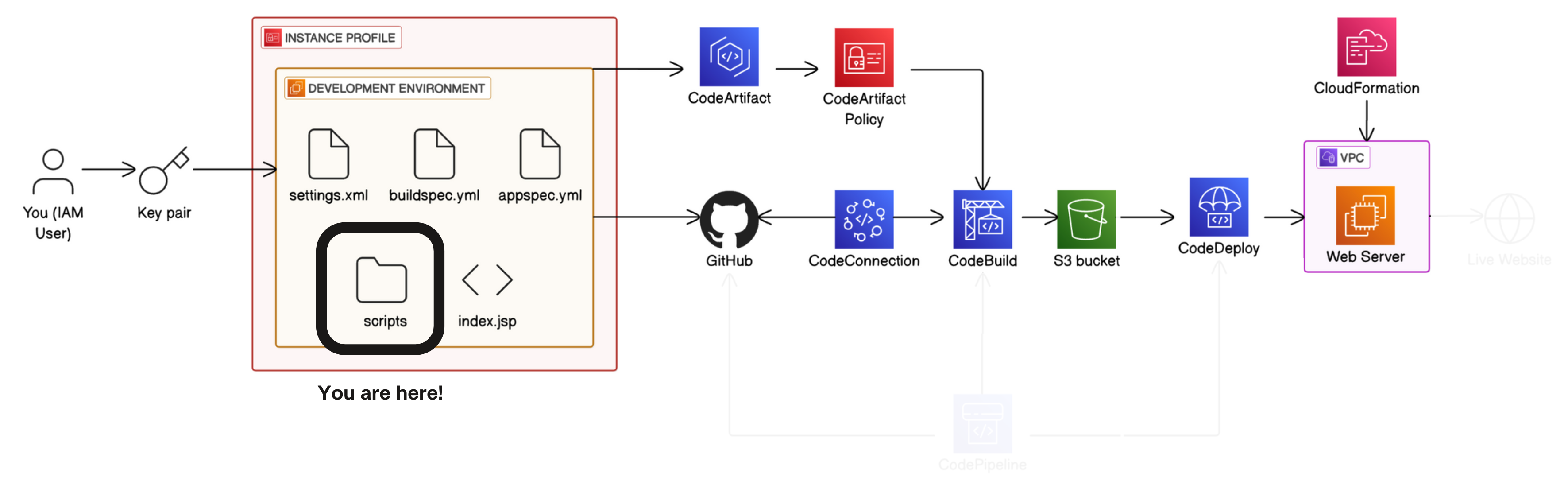

In project 1 of this challenge, you'll start by setting up a Development environment i.e. the test environment where developers write code.

-

In project 2, you'll connect your development environment to GitHub i.e. the repository storing different versions of the code.

-

In project 3, you'll store dependencies in CodeArtifact i.e. the pantry for pre-built code your app needs to function.

-

In project 4, you'll build your code with CodeBuild i.e. the process that prepares code for deployment.

💡 CI (continuous integration) means the build process starts automatically - whenever developers update the code in GitHub (i.e. a new code is added), CodeBuild will automatically build the updated code.

- In project 5, you'll use CodeDeploy to deploy your code to a Web server/Live application i.e. servers that deliver code to users.

💡 CD (continuous delivery) means deployment happens automatically whenever a new build is successful.

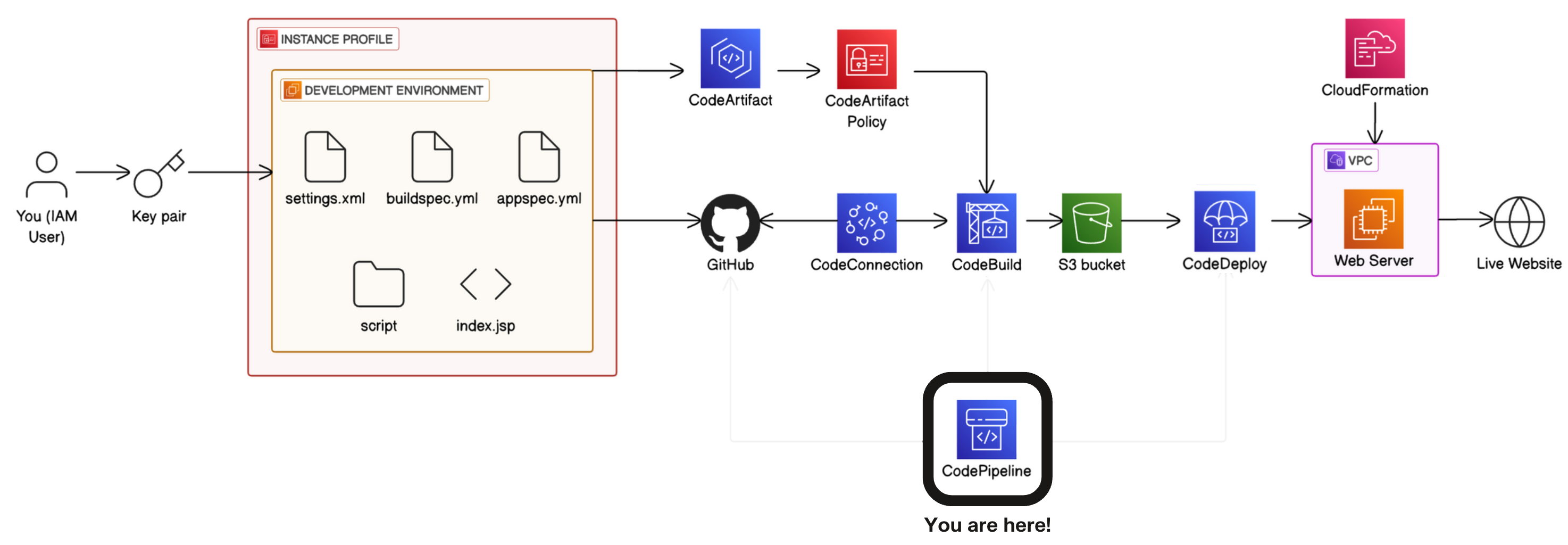

- Finally, in project 6, you'll set up the CI/CD pipeline with CodePipeline i.e. the automated workflow that makes sure code is automatically flowing from one stage to the next.

# Part-1. Set Up a Web App in the Cloud

Welcome to Part-1 of the 6-part DevOps Challenge!

Today, you'll learn the basics of building a web app using AWS and a code editor called VS Code. This will lay the foundation for your DevOps work for the rest of this challenge.

- An AWS account - Create one here!

Get ready to:

- 💻 Launch an EC2 instance.
- 🔌 Use VS Code to set up a remote SSH connection to your EC2 instance.
- ☕️ Install Maven and Java and generate a basic web app.
- 💎 Edit code without VS Code - you'll see why IDEs are so well loved after this!

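I started this project by launching an EC2 instance, because it will be the cloud development environment for our web app. EC2 (Elastic Compute Cloud) gives you virtual servers in the cloud: each instance provides compute (CPU and RAM) and, combined with storage, works like a virtual machine you can log into.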

- Switch your Region to the one closest to you.

- Head to Amazon EC2 in your AWS Management Console.

- In your EC2 console, select Instances from the left hand navigation panel.

- Choose Launch instances.

- Let's set up our EC2 instance.
- In Name, enter the value `nextwork-devops-siddique`.
- Choose Amazon Linux 2023 AMI under Amazon Machine Image (AMI).
- Leave t2.micro under Instance type.
- Under Key pair (login), choose Create a new key pair.

The key pair type specifies the algorithm for generating cryptographic keys.

We use RSA (Rivest-Shamir-Adleman), a widely trusted and supported algorithm known for its strength in digital signatures and encryption.

After setting up my key pair, AWS automatically downloaded my private key in .pem (Privacy Enhanced Mail) format, a widely used text format for cryptographic keys that SSH clients such as OpenSSH can use to connect to EC2 instances.

- Use `nextwork-keypair` as your key pair's name.
- Keep the Key pair type as RSA, and the Private key file format as .pem.
- Select Create key pair.
- A new file will automatically download to our local computer - nice! This is our private key.
- Before we lose track of our .pem file, let's organize it on our computer.
- Head to our local computer's desktop.
- Create a new folder on your desktop called DevOps.
- Move your .pem file from your Downloads folder into your DevOps folder.

SSH (Secure Shell) is a protocol that ensures only authorized users can access a remote server. When you connect to your EC2 instance, SSH verifies that you hold the private key matching the public key AWS placed on the instance.

After authorization, SSH establishes a secure, encrypted connection between you and the EC2 instance, protecting all transferred data. This makes SSH ideal for working with virtual servers.

- Back to our EC2 instance setup, head to the Network settings section.
- For Allow SSH traffic from, select the dropdown and choose My IP. This makes sure only connections from my IP address can reach my EC2 instance over SSH.
- Double check that the IP address under My IP is correct - you can check your Public IP by clicking here.
- If your IP address is different from what's under My IP, select Custom from the dropdown instead. Enter your IP and make sure to add /32 to the end, e.g. 192.140.151.176/32.
- Leave the default values for the remaining sections.
- Choose Launch Instance.
- The EC2 instance has been launched successfully.
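The `/32` suffix is CIDR notation for "exactly this one address". The minimal sketch below builds that value in a terminal. `to_cidr32` is a hypothetical helper that checks its argument looks like an IPv4 address and appends `/32`. The commented-out line shows one way to look up your public IP using AWS's `checkip.amazonaws.com` endpoint, which assumes `curl` is installed.

```bash
# Hypothetical helper: turn a dotted IPv4 address into a single-host CIDR block.
to_cidr32() {
  local ip="$1" old_ifs octet
  # Only digits and dots are allowed, with no leading or trailing dot.
  case "$ip" in
    "" | *[!0-9.]* | .* | *.) return 1 ;;
  esac
  # Split on dots into the function's positional parameters.
  old_ifs=$IFS
  IFS=.
  set -- $ip
  IFS=$old_ifs
  [ "$#" -eq 4 ] || return 1
  for octet in "$@"; do
    # Each octet must be non-empty and no larger than 255.
    { [ -n "$octet" ] && [ "$octet" -le 255 ]; } || return 1
  done
  printf '%s/32\n' "$ip"
}

# Example: look up your current public IP first (needs internet access).
# to_cidr32 "$(curl -s https://checkip.amazonaws.com)"
```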

VS Code is a free, lightweight, and powerful source code editor designed and maintained by Microsoft. It's widely used for writing and editing code, offering features like syntax highlighting, auto-indentation, and debugging tools.

To install VS Code:

- Head to the Visual Studio Code website.

- Install VS Code by following the installation instructions for your OS e.g. Linux, Mac, Windows.

💡 How can I decide which setting/chip option I should pick?

If you're unsure of which chip/settings option to pick for your device:

- Mac: Select the Apple icon from the top left hand corner of your computer's menu bar. Select About this Mac, and note whether your Chip says Apple or Intel.

- Windows: Click the Start button and search for System Information. Note whether your System Type says x64-based or ARM-based PC.

- Linux: Open a terminal and run uname -m. Note whether the output says x86_64 or aarch64/arm64.

- Once downloaded, you might need to unzip a zip file to access VS Code.

- Open VS Code on your local computer (you'll find it in your Downloads folder).

- If a popup asks you to confirm opening VS Code, select Open.

- Welcome to VS Code!

- Select Terminal from the top menu bar.

- Select New Terminal from the dropdown.
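On macOS or Linux, you can also do the chip check from the tip above in this new terminal. The following is a small sketch. `arch_label` is a hypothetical helper that maps the output of `uname -m` to the two download choices, x64 or ARM64.

```bash
# Hypothetical helper: map `uname -m` output to a download choice.
arch_label() {
  case "$1" in
    x86_64 | amd64)  echo "x64" ;;
    aarch64 | arm64) echo "arm64" ;;
    *)               echo "unknown: $1"; return 1 ;;
  esac
}

# Usage on your own machine:
# arch_label "$(uname -m)"
```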

A terminal lets you control your computer with text commands instead of clicks, for example creating a folder by typing a command rather than right-clicking. It's like texting instructions to your operating system.

All computers have a terminal: Windows uses Command Prompt or PowerShell, while macOS and Linux use Terminal.

For this project, I first ran:

- `cd "C:\users\siddique\7 Days DevOps challenge"` to navigate to the DevOps folder (the quotes are needed because the path contains spaces).
- I also set my private key's permissions by running (macOS/Linux):

`chmod 400 nextwork-keypair.pem`

- On Windows, use the following commands instead to set permissions on the .pem private key file (replace USERNAME with your Windows username):

`icacls "nextwork-keypair.pem" /reset`

`icacls "nextwork-keypair.pem" /grant:r "USERNAME:R"`

`icacls "nextwork-keypair.pem" /inheritance:r`

💡 What is icacls?

Icacls (which stands for Integrity Control Access Control Lists) is a Windows tool that lets you decide who can open or change the files on your system. In these icacls commands, you're using:

- /reset to replace any custom permissions on the file with the default permissions inherited from its folder
- /grant:r "USERNAME:R" to give the current user (that's you!) read access to your private key, replacing any permissions previously granted to that user
- /inheritance:r to remove the permissions the file inherits from its folder, so only your read access remains and later changes to the DevOps folder's permissions won't affect this file.
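On macOS or Linux, you can confirm that `chmod 400` took effect before you try to connect. The following is a minimal sketch. `lock_key` is a hypothetical helper. It reads the mode with GNU `stat -c` (Linux) and falls back to BSD `stat -f` (macOS).

```bash
# Hypothetical helper: make a private key readable only by its owner, then verify.
# OpenSSH ignores keys that other users can access and prints an
# "UNPROTECTED PRIVATE KEY FILE!" warning instead of connecting.
lock_key() {
  local key="$1" mode
  chmod 400 "$key" || return 1
  # GNU stat (Linux) takes -c; BSD stat (macOS) takes -f.
  mode=$(stat -c '%a' "$key" 2>/dev/null || stat -f '%Lp' "$key")
  [ "$mode" = "400" ]
}

# Usage, from the folder that holds your key:
# lock_key nextwork-keypair.pem && echo "key permissions OK"
```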

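To connect to my EC2 instance, I ran the command:

`ssh -i "C:\users\siddique\7 Days DevOps challenge\nextwork-keypair.pem" ec2-user@MyEC2IP`

Replace MyEC2IP with your instance's public IPv4 DNS (or public IP address). The quotes around the key path are needed because it contains spaces.

💡 What does this command do?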

ssh starts a secure shell connection to your EC2 instance.

-i specifies the identity file (your .pem file) you're using to authenticate a connection.

ec2-user@[YOUR PUBLIC IPV4 DNS] specifies the username (ec2-user) and the address of the EC2 instance (Public DNS) to connect to.

An EC2 instance's public IPv4 DNS is a hostname that resolves to the instance's public IP address.

Apache Maven helps developers build and organize Java projects, and acts as a package manager by automatically downloading needed dependencies.

We're using Maven because it kick-starts web projects with archetypes, i.e. templates that set up project foundations, like web apps.

Later, we'll use Maven to quickly set up the web app structure, letting us start developing the app sooner.

- Install Apache Maven using the commands below. We can copy and paste all of these lines into the terminal together, no need to run them line by line.

`wget https://archive.apache.org/dist/maven/maven-3/3.5.2/binaries/apache-maven-3.5.2-bin.tar.gz`

`sudo tar -xzf apache-maven-3.5.2-bin.tar.gz -C /opt`

`echo 'export PATH=/opt/apache-maven-3.5.2/bin:$PATH' >> ~/.bashrc`

`source ~/.bashrc`

💡 What do these commands do?

The first command downloads the Apache Maven 3.5.2 binary archive (a .tar.gz file). The second command extracts the downloaded package into the /opt folder. The last two commands add Maven's bin folder to your PATH in ~/.bashrc and reload that file, so that you can run Maven commands from any directory after this! The single quotes stop $PATH from being expanded when the line is written, so ~/.bashrc extends whatever your PATH is each time a new shell starts.
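Running the `echo ... >> ~/.bashrc` line more than once appends duplicate `export` lines. The following is a minimal sketch of an idempotent alternative. `add_line_once` is a hypothetical helper that appends a line to a file only if that exact line isn't already there.

```bash
# Hypothetical helper: append a line to a file only if it is not already present.
add_line_once() {
  local line="$1" file="$2"
  touch "$file"
  # -q quiet, -x match the whole line, -F treat the line as a fixed string.
  grep -qxF "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (single quotes keep $PATH unexpanded until .bashrc runs):
# add_line_once 'export PATH=/opt/apache-maven-3.5.2/bin:$PATH' ~/.bashrc
```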

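- Alternatively, you can install Apache Maven with the package manager by running the following command:

`sudo yum install maven -y`

- To verify that Maven is installed correctly, run the following command next:

`mvn -v`

If you installed Maven from the archive and this check complains about JAVA_HOME, that's expected for now: Maven needs Java, which we install next.

Now I am going to install Java 8, or more specifically, Amazon Corretto 8.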

Java is a popular programming language used to build different types of applications, from mobile apps to large enterprise systems.

Maven, which we just downloaded, is a tool that NEEDS Java to operate. So if we don't install Java, we won't be able to use Maven to generate/build our web app today.

To install Amazon Corretto:

- Run the following commands:

`sudo dnf install -y java-1.8.0-amazon-corretto-devel`

`export JAVA_HOME=/usr/lib/jvm/java-1.8.0-amazon-corretto.x86_64`

`export PATH=/usr/lib/jvm/java-1.8.0-amazon-corretto.x86_64/jre/bin/:$PATH`

💡 What do these commands do?

The first command installs Amazon Corretto 8, Amazon's distribution of Java 8. The second command sets JAVA_HOME, which tells tools like Maven where Java is installed on your EC2 instance. The last command is a time saver: it puts Java's bin folder at the front of your PATH, so you can run Java commands from anywhere without typing out the full location each time. Both export commands only last for the current terminal session; to keep them, add the same lines to ~/.bashrc as you did for Maven. (These paths assume an x86_64 instance such as t2.micro.)

- To verify that Java is installed correctly, run the following command next:

`java -version`

💡 Important

If the command above doesn't report openjdk version "1.8" (i.e. Java 8), run the following command, which lets you choose the correct Java version:

`sudo alternatives --config java`
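`java -version` prints its version to standard error, in a line such as `openjdk version "1.8.0_402"`. Java 8 uses the legacy `1.8` numbering, while newer releases start with the major number, e.g. `17.0.10`. The following is a minimal sketch that lets a script check for Java 8. `java_major` is a hypothetical helper that extracts the major version from such a string.

```bash
# Hypothetical helper: print the major Java version from a version string.
# "1.8.0_402" -> 8 (legacy 1.x scheme), "17.0.10" -> 17 (modern scheme).
java_major() {
  local ver="$1" major
  major=${ver%%.*}
  if [ "$major" = "1" ]; then
    # Legacy scheme: the major version is the second field.
    ver=${ver#1.}
    major=${ver%%.*}
  fi
  printf '%s\n' "$major"
}

# Usage on the EC2 instance (java -version writes to stderr, hence 2>&1):
# ver=$(java -version 2>&1 | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
# [ "$(java_major "$ver")" = "8" ] || sudo alternatives --config java
```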

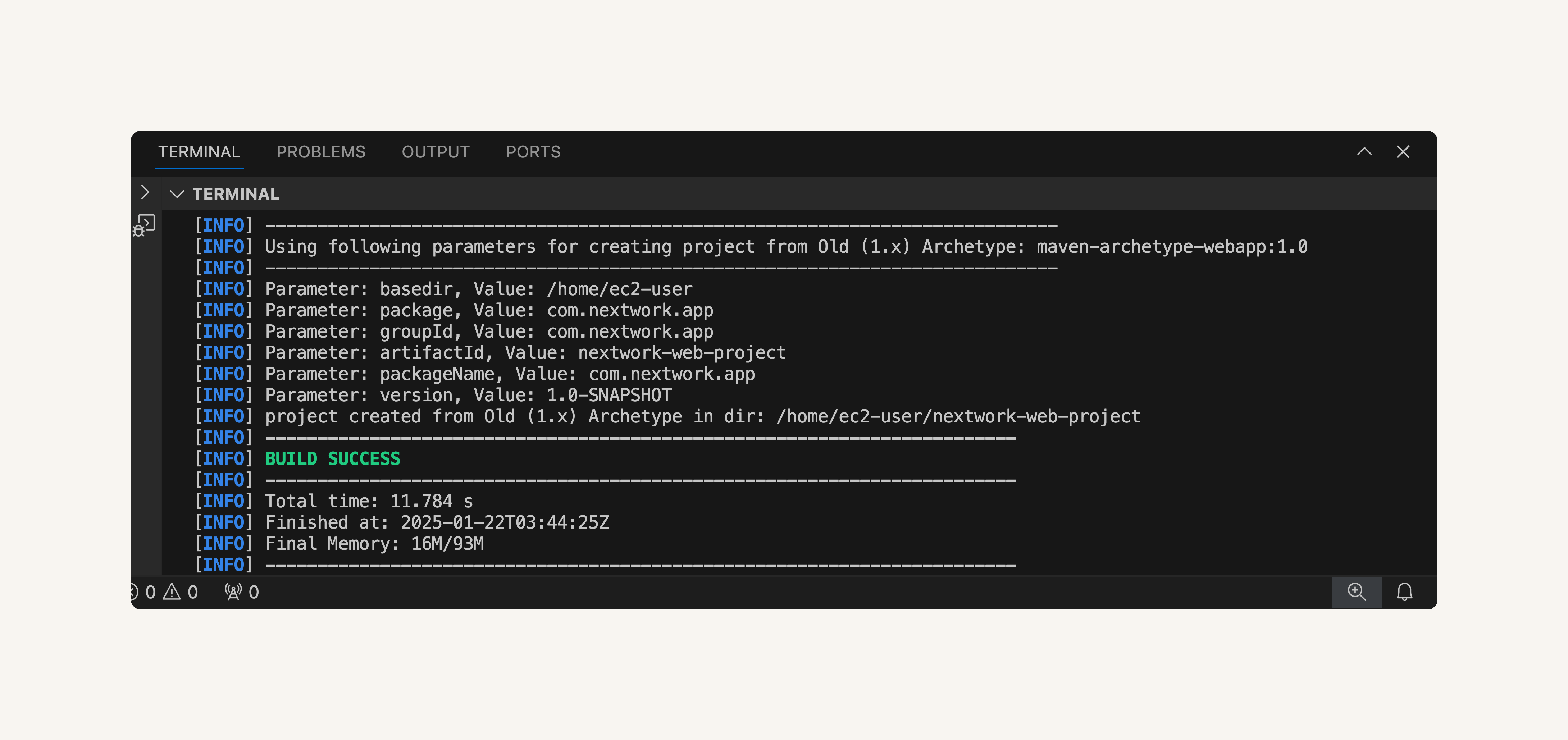

I generated a Java web app using the command:

`mvn archetype:generate -DgroupId=com.nextwork.app -DartifactId=nextwork-web-project -DarchetypeArtifactId=maven-archetype-webapp -DinteractiveMode=false`

💡 Break down these commands for me... What is mvn?

When you run mvn commands, you're asking Maven to perform tasks (like creating a new project or building an existing one). The mvn archetype:generate command specifically tells Maven to create a new project from a template (which Maven calls an archetype). This command sets up a basic structure for your project, so you don't have to start from scratch.

💡 Extra for Experts: Some of the details we've specified in this command are...

`-DgroupId=com.nextwork.app` sets the project's group ID, which by convention is a reversed domain name identifying who owns the project.

`-DartifactId=nextwork-web-project` names your project (and the folder Maven creates for it).

`-DarchetypeArtifactId=maven-archetype-webapp` specifies that you're creating a web application.

`-DinteractiveMode=false` runs the command without pausing for user input, so Maven generates the project without waiting for your confirmation.

- Watch out for a BUILD SUCCESS message in your terminal once your application is all set up.

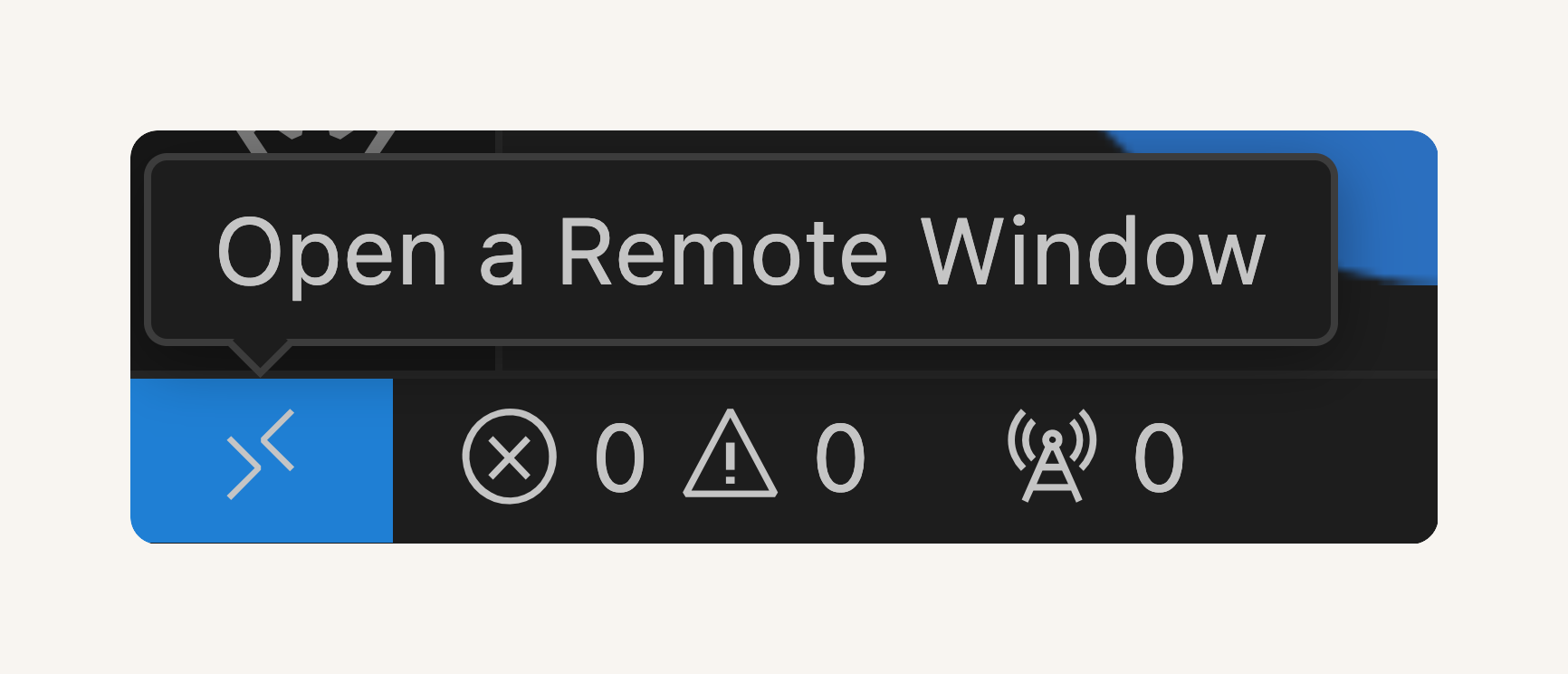

The Remote - SSH extension in VS Code lets us connect directly via SSH to another computer securely over the internet. This lets us use VS Code to work on files or run programs on that server as if we were doing it on our own computer, which will come in handy when we edit the web app in our EC2 instance!

In this step, we're going to:

- Install an extension in VS Code.

- Use the extension to set up a connection between VS Code and your EC2 instance.

- Explore and edit your Java web app's files using VS Code.

💡 Wait...aren't I already connected to my EC2 instance with SSH?

Yes, connecting with SSH in the terminal lets you send text commands to your EC2 instance, but you don't get all the benefits of having an IDE like VS Code.

When you connect VS Code itself to your EC2 instance (not just your terminal), you unlock VS Code’s IDE features (like file navigation and code editing) directly on your EC2 instance. This will make it so much easier for you to edit and manage your web app for the rest of this DevOps series.

- Click on the Extensions icon at the side of your VS Code window.

- In the search bar, type Remote - SSH and click Install for the extension.

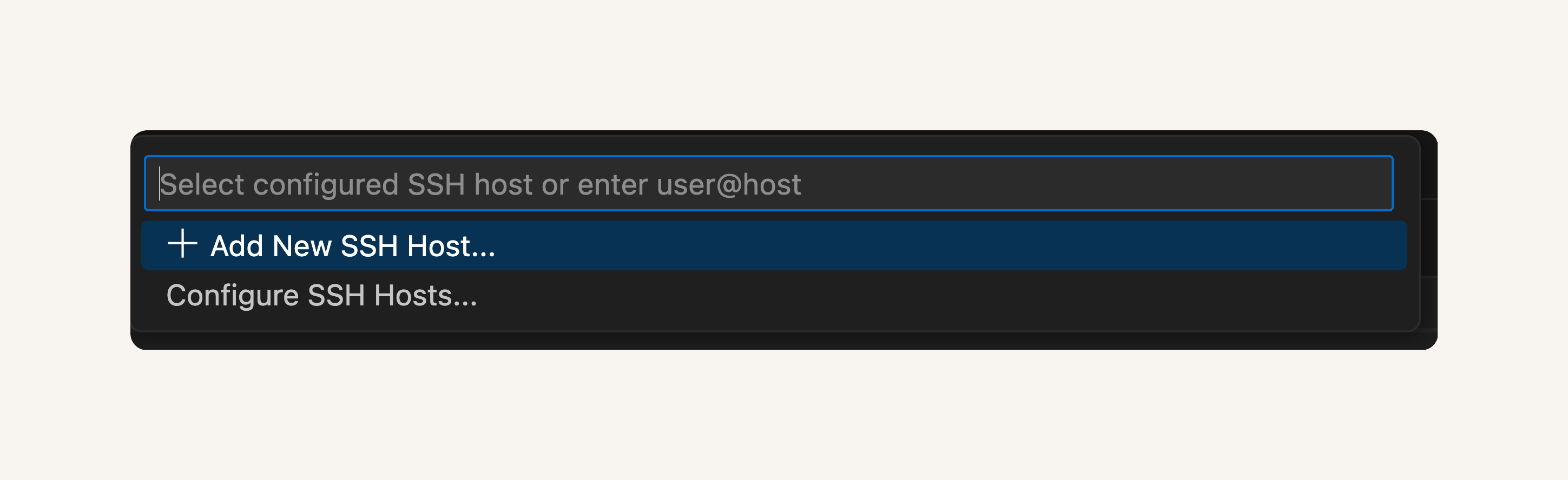

- Click on the double arrow icon at the bottom left corner of your VS Code window. This button is a shortcut to use Remote - SSH.

- Select Connect to Host...

- Select + Add New SSH Host

💡 What is an SSH Host?

An SSH Host is the computer or server you're connecting to using SSH. It's the target location where you want to run commands or manage files; in our case, the SSH Host is the EC2 instance we created.

- Enter the SSH command you used to connect to your EC2 instance: `ssh -i [PATH TO YOUR .PEM FILE] ec2-user@[YOUR PUBLIC IPV4 DNS]`
  - Replace **[PATH TO YOUR .PEM FILE]** with the actual path to your private key file (e.g., ~/Desktop/DevOps/nextwork-keypair.pem). Delete the square brackets!
  - Replace **[YOUR PUBLIC IPV4 DNS]** with the Public IPv4 DNS shown in your instance's details in the EC2 console. Delete the square brackets!

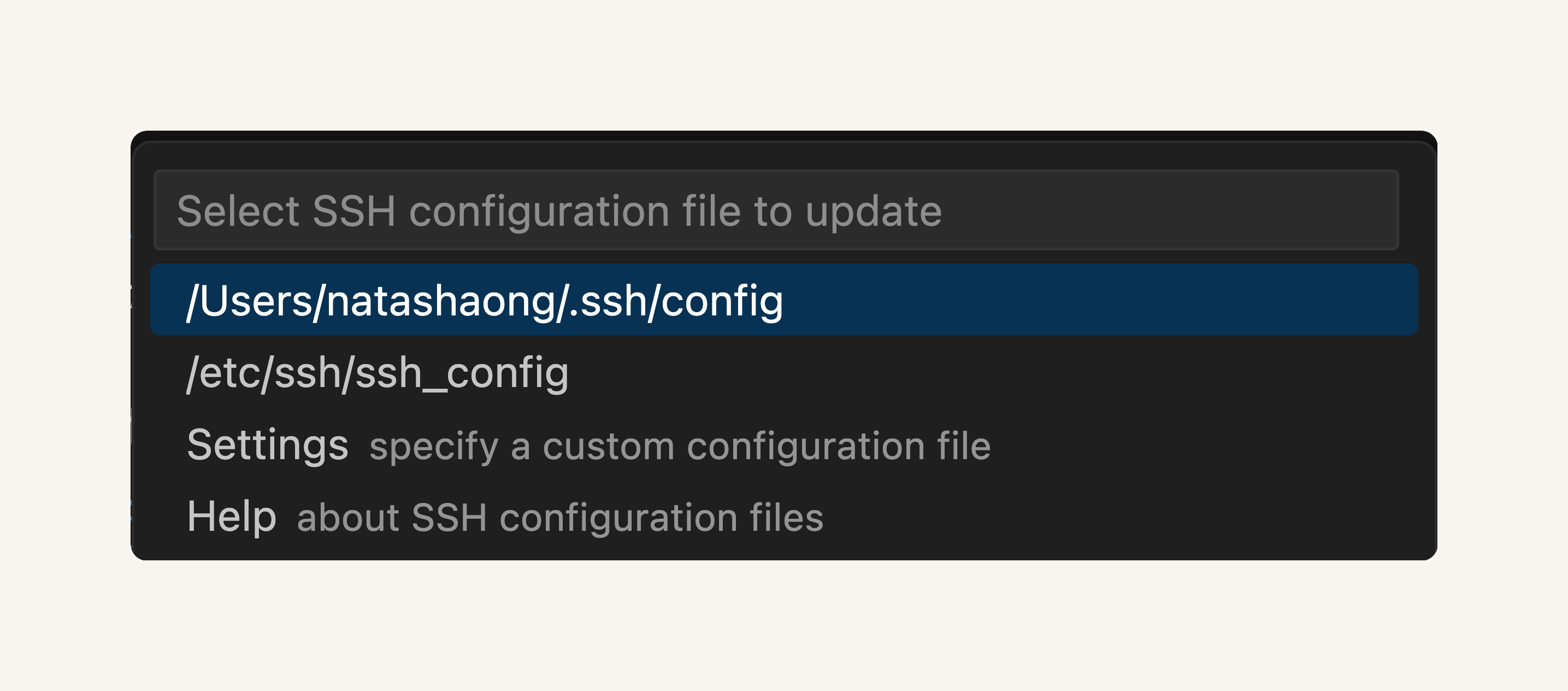

- Select the configuration file at the top of your window. It should look similar to /Users/username/.ssh/config (on Windows, C:\Users\username\.ssh\config).

- A Host added! popup will confirm that you've set up your SSH Host

- Select the blue Open Config button on that popup.

- Confirm that all the details in your configuration file look correct:

- Host should match up with your EC2 instance's IPv4 DNS.

- IdentityFile should match up to nextwork-keypair.pem's location in your local computer.

- User should say ec2-user

- Now you’re ready to connect VS Code with your EC2 instance!
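You may need to write this entry by hand, for example after your instance's public DNS changes. The following minimal sketch prints an equivalent ~/.ssh/config entry. `ssh_host_entry` is a hypothetical helper, and the exact layout the Remote - SSH extension writes may differ slightly. `Host`, `HostName`, `User` and `IdentityFile` are standard OpenSSH config keywords.

```bash
# Hypothetical helper: print an ~/.ssh/config entry for an EC2 instance.
# Arguments: <public IPv4 DNS> <path to .pem key>
ssh_host_entry() {
  local host="$1" key="$2"
  printf 'Host %s\n' "$host"
  printf '  HostName %s\n' "$host"
  printf '  User ec2-user\n'
  # ssh_config accepts double-quoted values, which protects paths with spaces.
  printf '  IdentityFile "%s"\n' "$key"
}

# Usage (placeholders; substitute your own DNS name and key path):
# ssh_host_entry "[YOUR PUBLIC IPV4 DNS]" ~/Desktop/DevOps/nextwork-keypair.pem >> ~/.ssh/config
```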

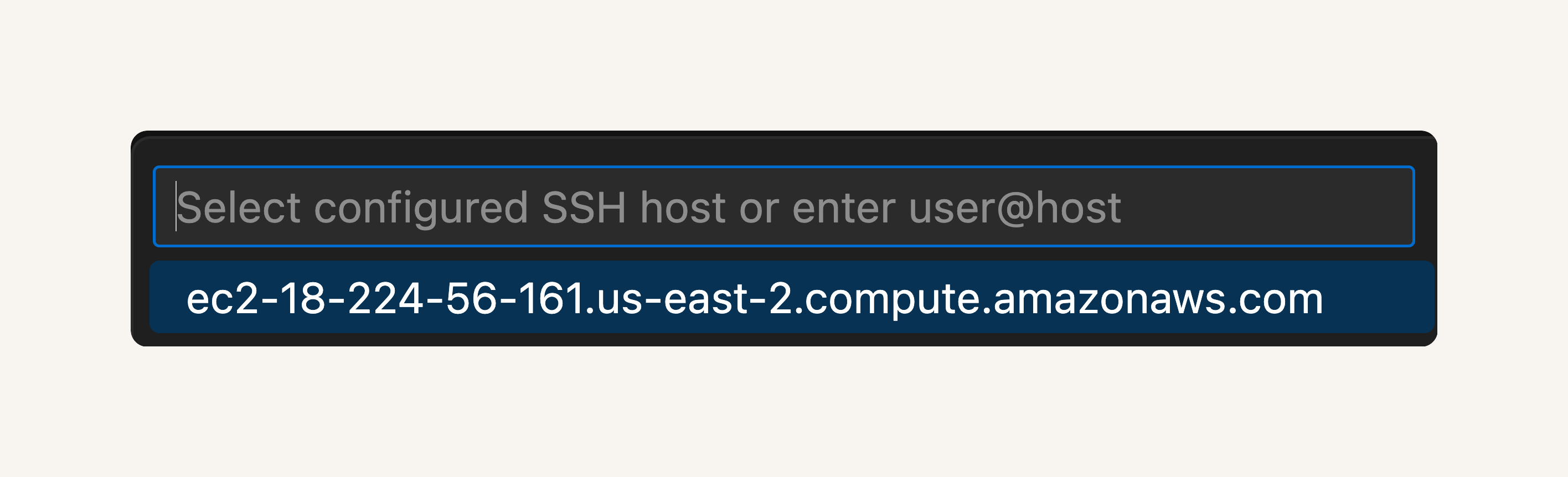

- Click on the double arrow button on the bottom left corner and select Connect to Host again.

- You should now see your EC2 instance listed at the top.

- Select the EC2 instance and off we gooooooooooo to a new VS Code window ✈️
- Check the bottom left hand corner of your new VS Code window: it should show your EC2 instance's IPv4 DNS.

Nice work - we've connected VS Code with your EC2 instance! 🥳

Now let's open up your web app's files.

- From VS Code's left hand navigation bar, select the Explorer icon.

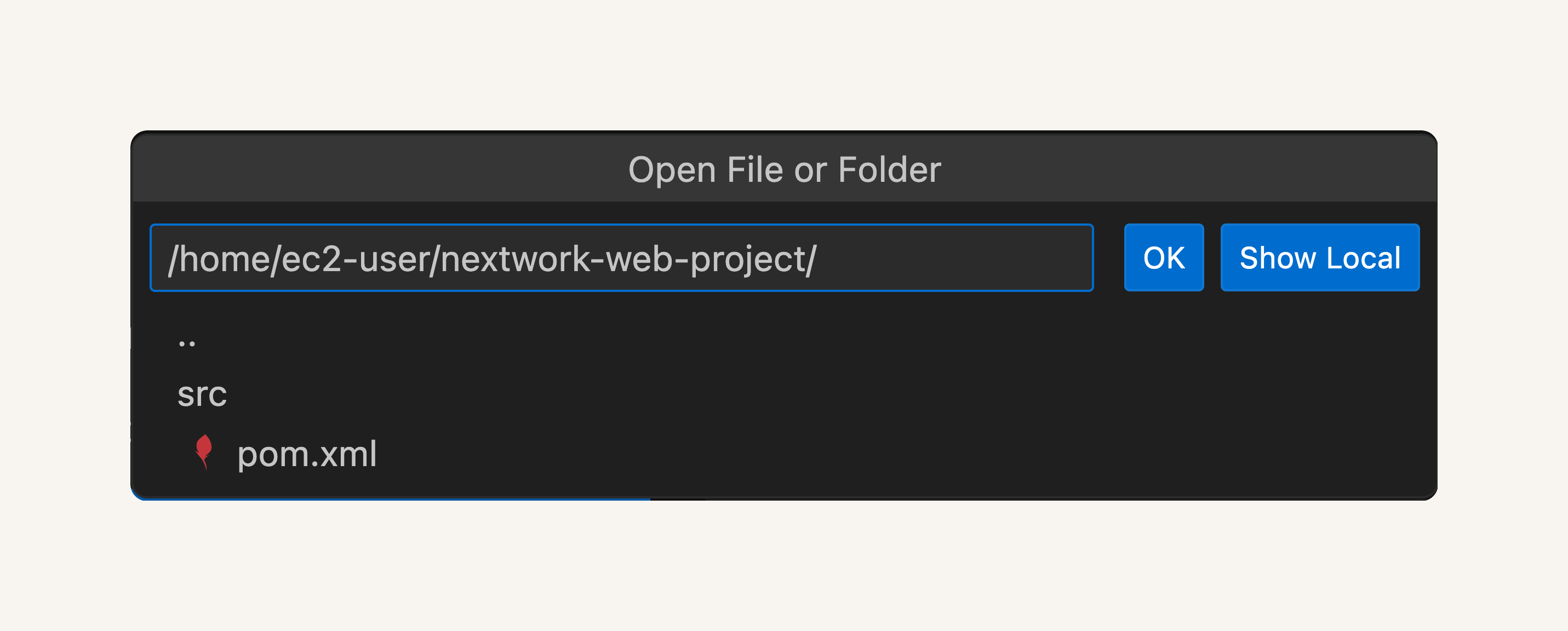

- Select Open folder.

- At the top of your VS Code window, you should see a drop down of different file and folder names. Ooooo, this is VS Code asking you which specific file/folder you'd like to open!

- Enter /home/ec2-user/nextwork-web-project.

- Press OK.

- VS Code might show you a popup asking if you trust the authors of the files in this folder. If you see this popup, select Yes, I trust the authors.

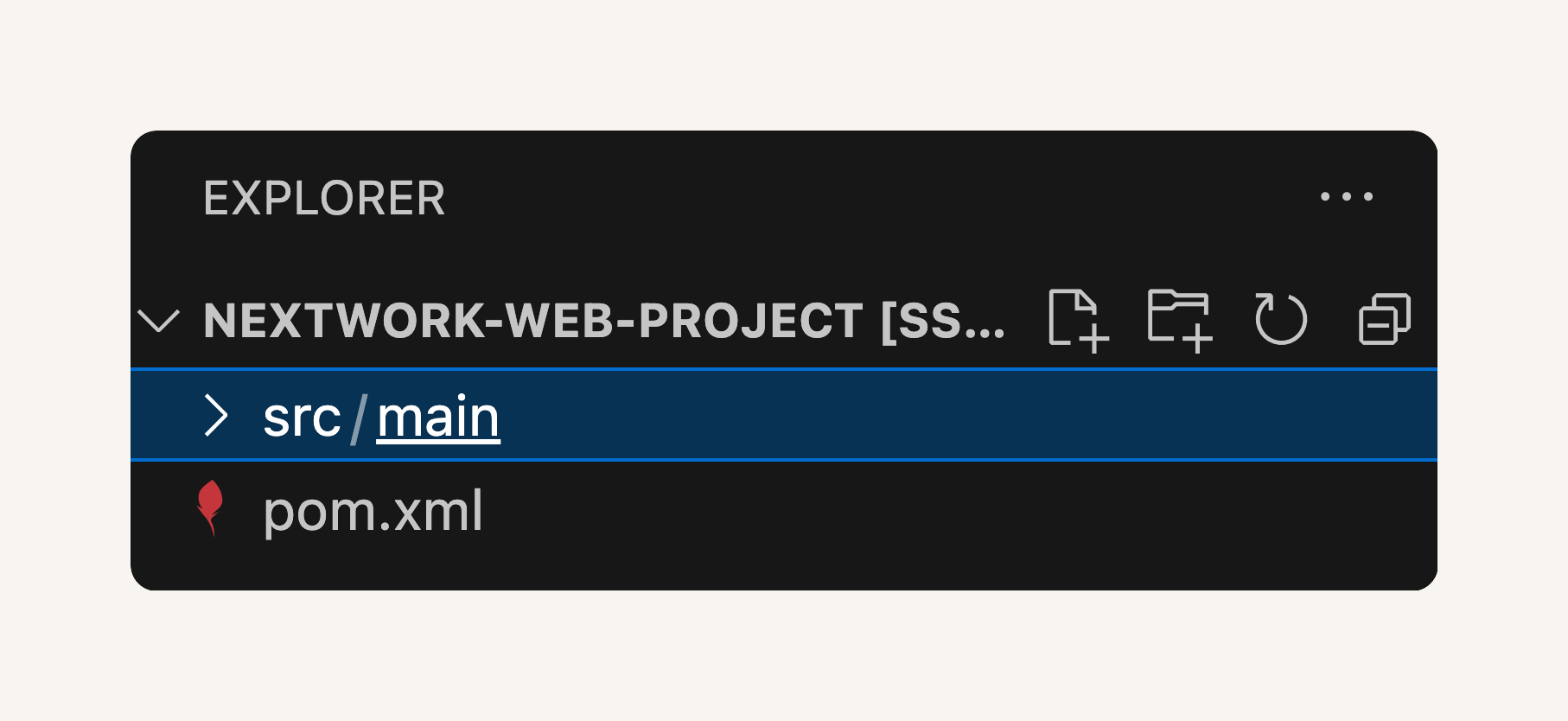

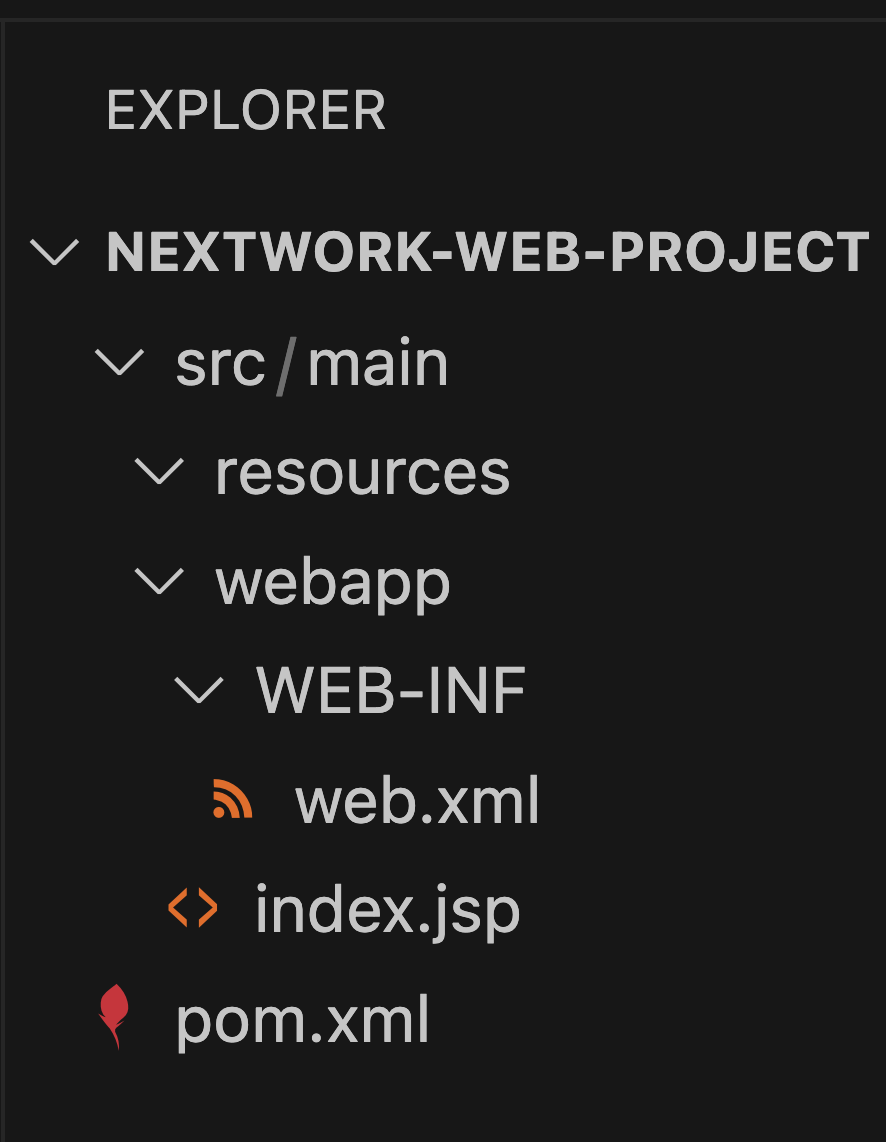

- Check your VS Code window's file explorer again - a folder called nextwork-web-project is here!

💡 What are all these files and subfolders?

All the files and subfolders you see under nextwork-web-project are parts of a web app! You can start working right away on the content you want to display on your web app, since Maven has taken care of the basic structuring and setup. Let's get to know some of these web app files/folders:

The src (source) folder holds all the source code files that define how your web app looks and works.

Inside src, the main folder is further divided into webapp, which holds the web app's files (e.g. HTML, CSS, JavaScript, and JSP files), and resources, which holds the configuration files a web app might need (e.g. connection settings for a database).

pom.xml is a Maven Project Object Model file. It stores information and configuration details that Maven will use to build the project. We'll use pom.xml later in this project series!
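You can also confirm from the terminal that Maven generated these files. The following is a minimal sketch. `check_webapp_layout` is a hypothetical helper, and the sketch assumes the paths the `maven-archetype-webapp` template creates: `pom.xml` at the root, plus `index.jsp` and `WEB-INF/web.xml` under `src/main/webapp`.

```bash
# Hypothetical helper: confirm the key files of a Maven web app project exist.
check_webapp_layout() {
  local dir="$1" rel missing=0
  for rel in pom.xml src/main/webapp/index.jsp src/main/webapp/WEB-INF/web.xml; do
    if [ ! -f "$dir/$rel" ]; then
      echo "missing: $rel" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on the EC2 instance:
# check_webapp_layout ~/nextwork-web-project && echo "layout looks right"
```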

- Exploring done! So how can VS Code help you edit your application files? Let's find out.

- From your file explorer, click into index.jsp.

💡 What is index.jsp? What's the difference from HTML?

index.jsp is a file used in Java web apps. It's similar to an HTML file because it contains markup to display web pages. However, index.jsp can also include Java code, which lets it generate dynamic content.

This means content can change depending on things like user input or data from a database. Social media apps are great examples of dynamic web apps, because the content you see is always changing, updating and personalised to you. HTML files are static and can't include Java code. That's why it's so important to install Java on your EC2 instance - so you can run the Java code in your web app!

- Welcome to the editor view of index.jsp. Now we're really using VS Code's IDE abilities: editing code is much easier here than in the terminal.
- Let's try modifying index.jsp by changing the placeholder code to the code snippet below. Don't forget to replace {YOUR NAME} in the following code with your name:

`<html>`

`<body>`

`<h2>Hello {YOUR NAME}!</h2>`

`<p>This is my NextWork web application working!</p>`

`</body>`

`</html>`

- Save the changes you've made to index.jsp by pressing Command/Ctrl + S on your keyboard.

💡 How will I know I've saved my work?

Look at the name of the open index.jsp tab at the top of your editor 🔍 The dot next to the name should go away once your changes are saved.

We've just learnt how to set up a web app on an EC2 instance AND connect it with VS Code, one of the most popular and practical IDEs out there.

We'll be building on the foundation we've set up here over the rest of the DevOps series. More exciting learnings are ahead!

# Part-2 Connect a GitHub Repo with AWS

Welcome to Part-2 of the 6-part DevOps Challenge!

Today, you'll learn how to store your web application's code in a Git repository.

🙋‍♀️ I'm a complete beginner - what does a Git repository mean?

No worries if you're new to repositories: you'll learn what they mean and why they're important as you go through this project.

In short, Git repositories are essential in a CI/CD pipeline because they store your code safely and track every change your team makes to it, and hosting a repository in the cloud (e.g. on GitHub) makes that history available to your whole team. Using a shared repository makes it much easier for engineering teams to hand off code, collaborate and update each other on changes they've made.

- An AWS account - Create one here!

If you've been following along since Part-1 of this project, you can proceed. However, if at any point you deleted your resources, make sure to go back and set up everything needed for this project, starting from Part-1. Set Up a Web App in the Cloud up to this part of the project. Good luck!

Get ready to:

- 🐱 Set up Git and GitHub.

- 🤝 Connect your web app project to a GitHub repo.

- 🪄 Make changes to your web app code - and watch your GitHub repo update too.

- 💎 Set up a README file for your repo.

Now that your development environment is ready, the next step is to set up Git on your EC2 instance.

In this step, you're going to:

- Install Git on your EC2 instance.

💡 What is Git?

Git is like a time machine and filing system for your code. It tracks every change you make, which lets you go back to an earlier version of your work if something breaks. You can also see who made specific changes and when they were made, which makes teamwork/collaboration a lot easier.

Extra for Experts: Git is often called a version control system since it tracks your changes by taking snapshots of what your files look like at specific moments, and each snapshot is considered a 'version'.

- In the EC2 instance's terminal, install Git:

`sudo dnf update -y`

`sudo dnf install git -y`

💡 What do these commands do?

`sudo dnf update -y` tells your EC2 instance to find the latest updates for the software packages it has installed (e.g. the Java package) and install them straight away.

`sudo dnf install git -y` installs Git on your EC2 instance.

It's best practice to update your existing software before installing new software, in case there are compatibility issues between new and old packages.

Extra for Experts: `-y` is a shortcut for "yes", meaning you're giving your EC2 instance your approval in advance for any time the system might ask questions like "should I proceed with the installation?"

- Verify the installation:

`git --version`

Git is installed woohoo! Next up, we'll set you up with GitHub.

In this step, you're going to:

- Set up a GitHub account.

- Create a GitHub repository.

If you already have a GitHub account:

- Sign in to GitHub.

- Scroll to the heading 🗂️ Set up a new repository in this step.

If you don't have a GitHub account:

- Head to GitHub's signup page.

💡 What is GitHub?

GitHub is a place for engineers to store and share their code and projects online. It's called GitHub because it uses Git to manage your projects' version history.

- Log into your GitHub account once you've verified your email.

💡 What is the difference between Git & GitHub?

If Git is the tool for tracking changes, think of GitHub as a storage space for the different versions of your project that Git tracks. Since GitHub is a cloud service, it also lets you access your work from anywhere and collaborate with other developers over the internet.

💡 Why would I use GitHub? Isn't the code in my EC2 instance already in the cloud?

Even though your code is on a cloud server like EC2, GitHub helps you use Git and see your file changes in a more user-friendly way. It's just like how using an IDE (VS Code) makes editing code easy. GitHub is also especially useful in situations where you're working in teams and need to contribute your updates and reviews to a shared code base.

🗂️ Set up a new repository

Nice, you're ready to set up a new repository on GitHub!

💡 What is a repository?

To store your code using Git, you create repositories (aka 'repos'), which are folders that contain all your project files and their entire version history. Hosting a repo in the cloud, like on GitHub, means you can also collaborate with other engineers and access your work from anywhere.

- After signing in to GitHub, click on the + icon next to your GitHub profile icon at the top right hand corner.

- Select New repository.

- Select Create repository.

- This loads up a new page where you can create a repository.

- Under Owner, click on the Choose an owner dropdown and select your GitHub username.

- Under Repository name, enter `nextwork-web-project`.
- For the Description, enter `Java web app set up on an EC2 instance.`
- Choose your visibility settings. We'd recommend selecting Public to make your repository available for the world to see.
- Select the checkbox next to Add a README file.

💡 What is a README?

A README is a piece of text that introduces and explains your project, like what the project does, how to set it up, and how to use it. Having a README is super common practice in software development (not just on GitHub) and helps anyone get up to speed on your project's purpose and function quickly.

Select Create repository.

So we now have a place in the cloud that will store our code and track the changes we make.

But... this storage folder (your GitHub repo) still doesn't know where your web app files are.

Let's connect our GitHub repo with the web app project stored on our EC2 instance.

In this step, you're going to:

- Set up a local git repo in your web app folder.

- Connect your local repo with your GitHub repo.

- Check that you are in the right folder by running this command in your terminal:

pwd

💡 What does pwd do?

pwd stands for print working directory, and this command asks your server "where am I right now?" The terminal will show you the exact location of the directory (folder) you're in.

- If you're not in nextwork-web-project, use cd to navigate your terminal into your web app project.

- Now let's tell Git that we'd like to track changes made inside this project folder.

`git init`

💡 What does git init do?

To start using Git for your project, you need to create a local repository on your machine (here, your EC2 instance). When you run `git init` inside a directory (e.g. nextwork-web-project), it sets up the directory as a local Git repository, which means changes can now be tracked for version control.

💡 What's a local repository?

The local repository is where you use Git directly on your own EC2 instance. The edits you make in your local repo are only visible to you and aren't shared with anyone else yet. This is different from the GitHub repository, which is the remote/cloud version of your repo that others can see.

💡 WOAH! I got a bunch of yellow text when I ran this command

This yellow text is just Git giving you a heads-up that it named your main branch master, and suggesting that you can choose a different name like 'main' or 'development' if you want.

💡 What is a main branch?

You can think of Git branches as parallel versions or 'alternate universes' of the same project. For example, if you wanted to test a change to your code, you could set up a new branch that lets you diverge from the original/main version of your code (called master), so you can experiment with new features or test bug fixes safely.

We won't create new branches in this project, and we'll save all new changes directly to master. However, it's best practice to make changes in a separate branch and then merge them into master when they're ready.
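If you'd like to skip that hint and name your first branch `main`, the minimal sketch below shows one way to do it. It assumes Git 2.28 or later, which added `git init -b`. It also runs in a throwaway directory from `mktemp`, so your real project is untouched. This project sticks with master, so treat it as optional.

```bash
#!/usr/bin/env bash
# Throwaway directory, so this sketch never touches nextwork-web-project.
DEMO_DIR="$(mktemp -d)"

# -b (--initial-branch) names the first branch; it needs Git 2.28 or later.
git init -q -b main "$DEMO_DIR"

# HEAD already points at the new branch, even before the first commit.
BRANCH="$(git -C "$DEMO_DIR" symbolic-ref --short HEAD)"
echo "Default branch: $BRANCH"
```

To make `main` the default for every new repository, `git config --global init.defaultBranch main` does the same job (also Git 2.28+). If you switch, push with `git push -u origin main` instead of master.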

- Head back to your GitHub repository's page.

- In the blue Quick setup section of the page, copy your repository's HTTPS URL.

Now let's connect your local project folder with your GitHub repo!

- Run this command. Don't forget to replace [YOUR GITHUB REPO LINK] with the link you've just copied.

git remote add origin [YOUR GITHUB REPO LINK]

#Example

git remote add origin https://github.com/git-muhammad-sadique-i987/nextwork-web-project.git

💡 What does 'remote add origin' mean?

Your local and GitHub repositories aren't automatically linked, so you need to connect the two so that updates made in your local repo can also be reflected in your GitHub repo.

When you run `git remote add origin`, you're telling Git where your GitHub repository is located. Think of origin as a bookmark for your GitHub project's URL, so you don't have to type it out every time you want to send your changes there.
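To see what the origin bookmark actually stores, here's a small sketch in a throwaway repository. The GitHub URL is a hypothetical placeholder. `git remote get-url` simply reads back whatever URL was saved under that name.

```bash
#!/usr/bin/env bash
DEMO_DIR="$(mktemp -d)"
git init -q "$DEMO_DIR"

# Hypothetical URL: in your project, use the HTTPS URL you copied from GitHub.
REPO_URL="https://github.com/your-username/nextwork-web-project.git"

# Save the URL under the short name "origin".
git -C "$DEMO_DIR" remote add origin "$REPO_URL"

# Read the bookmark back; "remote -v" lists the fetch and push URLs.
ORIGIN_URL="$(git -C "$DEMO_DIR" remote get-url origin)"
git -C "$DEMO_DIR" remote -v
```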

Next, we'll save our changes and push them into GitHub.

- Run this command in your terminal:

git add .

💡 What does this command do?

`git add .` stages all files in nextwork-web-project (the '.' stands for the current directory and everything inside it) to be saved in the next version of your project.

💡 What does staging mean?

When you stage changes, you're telling Git to gather all your modified files for a final review before you commit them. This is handy because you get to see all your edits in one spot, which makes it much easier to check for mistakes or unwanted changes before you commit.
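A quick way to watch staging happen is `git status --short`. The sketch below runs it in a throwaway repository with a stand-in index.jsp, which is a hypothetical file and not your real one. Untracked paths are marked `??`, and newly staged files are marked `A`.

```bash
#!/usr/bin/env bash
DEMO_DIR="$(mktemp -d)"
git init -q "$DEMO_DIR"

# Hypothetical stand-in for the web app's index.jsp.
mkdir -p "$DEMO_DIR/src/main/webapp"
echo "<h2>Hello</h2>" > "$DEMO_DIR/src/main/webapp/index.jsp"

# Before staging: "??" marks untracked paths.
BEFORE="$(git -C "$DEMO_DIR" status --short)"

# Stage everything in the current directory (".").
git -C "$DEMO_DIR" add .

# After staging: "A" marks a file newly added to the staging area.
AFTER="$(git -C "$DEMO_DIR" status --short)"
echo "before: $BEFORE"
echo "after:  $AFTER"
```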

- Run this command next in your terminal:

git commit -m "Updated index.jsp with new content"

💡 What does this command do?

`git commit -m "Updated index.jsp with new content"` saves the staged changes as a snapshot in your project's history. This means your project's version control history has just saved your latest changes as a new version.

The `-m` flag lets you leave a message describing what the commit is about, making it easier to review what changed in this version.
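This sketch commits a stand-in file in a throwaway repository and reads the `-m` message back with `git log`. The name and email passed with `-c` are hypothetical. They apply to that single command only, so the sketch works even before you've configured your own Git identity.

```bash
#!/usr/bin/env bash
DEMO_DIR="$(mktemp -d)"
git init -q "$DEMO_DIR"
echo "<p>demo</p>" > "$DEMO_DIR/index.jsp"
git -C "$DEMO_DIR" add .

# -c sets a one-off (hypothetical) identity for this command only.
git -C "$DEMO_DIR" -c user.name="Demo User" -c user.email="demo@example.com" \
  commit -q -m "Updated index.jsp with new content"

# %s prints the commit's subject line, i.e. the -m message.
SUBJECT="$(git -C "$DEMO_DIR" log -1 --format=%s)"
git -C "$DEMO_DIR" log --oneline
```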

- Finally, run this command:

git push -u origin master # Replace master with main if your production branch is called `main`

💡 What does this command do?

`git push -u origin master` uploads (i.e. 'pushes') your committed changes to origin, which you've bookmarked as your GitHub repo. 'master' tells Git that these updates should be pushed to the master branch of your GitHub repo.

By using `-u`, you're also setting an upstream for your local branch, which tells Git to remember origin's master branch as the default destination. Next time, you can simply run `git push` without needing to specify origin and master.
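You can try `-u` without GitHub or a token by pushing to a local bare repository that stands in for origin. The sketch below makes that substitution, and its paths, file and identity are hypothetical. In the last step, `@{u}` is Git's shorthand for the current branch's upstream.

```bash
#!/usr/bin/env bash
# A local bare repository plays the role of GitHub, so no network or token is needed.
WORK="$(mktemp -d)"
git init -q --bare "$WORK/remote.git"
git init -q -b master "$WORK/project"
cd "$WORK/project" || exit 1

echo "<p>demo</p>" > index.jsp
git add .
git -c user.name="Demo User" -c user.email="demo@example.com" commit -q -m "First commit"

git remote add origin "$WORK/remote.git"
# -u records origin/master as the upstream of the local master branch.
git push -q -u origin master

# Which remote branch will a plain "git push" use from now on?
UPSTREAM="$(git rev-parse --abbrev-ref --symbolic-full-name '@{u}')"
echo "upstream: $UPSTREAM"
```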

- Ah, we're so close, but Git can't push your work to the GitHub repository yet. It's now asking for a username!

💡 Why is Git asking for my username?

GitHub needs to confirm that you're allowed to push changes to the remote repository (origin) that your local repo is connected to. To do this, Git asks for your GitHub credentials so it can authenticate your identity.

- Enter your GitHub username, and press Enter on your keyboard.

- Next, enter your password. You'll notice that as you type this out, nothing shows on your terminal. This is totally expected - your terminal is hiding your input for your privacy. Press Enter on your keyboard when you've typed out your password, even if you don't see it printed out in your terminal.

- Hmmmm, now Git is letting us know that it can't actually accept our password.

💡 What does this mean?

GitHub phased out password authentication for connecting to repositories over HTTPS, because passwords carry too many security risks and can get intercepted over the internet 🤺 You need to use a personal access token instead, which is a more secure method for logging in and interacting with your repos.

💡 What is a token?

A token in GitHub is a unique string of characters that looks like a random password. For example, a GitHub token might look like ghp_xHJNmL16GHSZSV88hjP5bQ24PRTg2s3Xk9ll. As you can imagine, tokens are great for security because they're unique and would be very hard to guess.

Now that we know passwords won't work for authentication, we'll have to find a replacement.

Let's generate an authentication token on GitHub!

In this step, you're going to:

- Set up a token on GitHub.

- Use the generated token to access your GitHub repo from your local repo.

- Find the Generate new token (classic) option in your GitHub settings (Settings > Developer settings > Personal access tokens > Tokens (classic)).

- Give your token a descriptive note, like Generated for EC2 Instance Access. This is a part of NextWork's 6 Parts DevOps Challenge.

- Lower the token expiration limit to 7 days.

💡 What is a token expiration limit?

A token expiration limit is how long your personal access token would work for. After this time period, the token expires and no longer grants access, so you'll need to generate a new token.

- Select the checkbox next to repo.

💡 What do all these scopes mean?

We use scopes to decide what kind of permissions your token will grant. Each scope you pick gives the token the ability to do even more things with your GitHub account. In our case, we picked the repo scope, which means the token can even access and control private repositories in your account.

- Select Generate token at the bottom of the page. Nice, a new token (a long string of random characters) is generated!

💡 I also see a banner at the top of the screen... what does this mean?

You might see a banner at the top of the page that says "Some of the scopes you've selected are included in other scopes. Only the minimum set of necessary scopes has been saved."

This simply means that `repo` is an overarching scope covering a broad range of permissions, so the smaller scopes checked under `repo` overlap with it.

- Make sure to copy your token now and keep it somewhere safe. You won't be able to see your token again once you close this tab.

- Switch back to your VS Code terminal.

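- Run `git push -u origin master` again, which will trigger Git to ask for your GitHub username and password. Enter your username as before. When Git asks for your password, paste the token you just copied instead.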

💡 What does the message in the terminal mean?

This message appears when you successfully push changes to a GitHub repository - nice work. It shows the progress of transferring objects (like files and commits), how many objects were processed, and tells you that your local branch is now tracking the remote branch after the push.

- Head to your GitHub repository in your web browser.

- Refresh the page, and you’ll see your web app files in the repository, along with the commit message you wrote.

When you add, commit and push your changes, you might notice the terminal automatically sets two other things - your name and email address - before it asks for your GitHub username.

💡 Why does my terminal need my name and email?

Git needs author information for commits to track who made what change. If you don't set it manually, Git uses the system's default username, which might not accurately represent your identity in your project's version history.

- Run `git log` to see your history of commits, which also shows each commit author's name.

- Hmmm EC2 Default User isn't really your name, and the EC2 instance's IPv4 DNS is not your email. Let's configure your local Git identity so Git isn't using these default values.

- Run these commands in your terminal to manually set your name and email. Don't forget to replace "Your Name" with your name (keep the quote marks), and [YOUR EMAIL] with your email address.

git config --global user.name "Your Name"

git config --global user.email "[YOUR EMAIL]"

Nice work! You've set up your local Git identity, which means Git can associate your changes in the local repo with your name and email.

This setup is best practice for keeping a clear history of who made which changes ✅
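To double-check the identity that ends up on your commits, this sketch sets a name and email for a single throwaway repository. It leaves out `--global`, so the values live only in that repo's `.git/config`. It then reads the author back with `git log`. The email address is a hypothetical placeholder.

```bash
#!/usr/bin/env bash
DEMO_DIR="$(mktemp -d)"
git init -q "$DEMO_DIR"

# Without --global, these settings apply to this repository only.
git -C "$DEMO_DIR" config user.name "Your Name"
git -C "$DEMO_DIR" config user.email "you@example.com"   # hypothetical address

echo "demo" > "$DEMO_DIR/README.md"
git -C "$DEMO_DIR" add .
git -C "$DEMO_DIR" commit -q -m "Check author"

# %an and %ae print the author name and email recorded on the commit.
AUTHOR="$(git -C "$DEMO_DIR" log -1 --format='%an <%ae>')"
echo "$AUTHOR"
```

On your EC2 instance, `git config --show-origin user.name` shows which config file a value comes from. This is handy if Git still reports EC2 Default User.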

Great success with getting your GitHub connection all set up.

So we've learnt how to link your EC2 instance's files with a cloud repo, now let's see what happens when you make new changes to your web app files.

In this step, we'll edit index.jsp again using VS Code, and run commands that push those changes to your GitHub repository too.

In this step, you're going to:

- Make changes to your web app.

- Commit and push those changes.

- Keep your GitHub page open, and switch back to your EC2 instance's VS Code window.

- Find index.jsp in your file navigator on the left hand panel.

- Find the line that says This is my NextWork web application working! and add this line below:

<p>If you see this line in Github, that means your latest changes are getting pushed to your cloud repo :o</p>

- Save your changes by pressing Command/Ctrl + S on your keyboard while keeping your index.jsp editor open.

- Head back to your GitHub tab and click into the src/main/webapp folders to find index.jsp.

- Click into index.jsp - have there been any updates to index.jsp in your GitHub repo?

💡 Hmmm it's still looking the same...

You won't see your changes in GitHub yet, because saving changes in your VS Code environment only updates your local repository. Remember that the local repository in VS Code is separate from your GitHub repository in the cloud.

To make your changes visible in GitHub, you need to run commands that send (push) them from your local repository to your origin.

- Head back to your VS Code window.

- In the terminal, let's stage our changes:

git add .

- Ready to see what changes are staged? Run `git diff --staged` next.

💡 What does this command do?

`git diff --staged` shows you the exact changes that have been staged, compared to the last commit. Now you get to review the modifications you're about to save into your local repo's version history!

💡 Extra for Experts: Did you know you can view these changes using VS Code too?

- Select the Source Control icon on the side of your VS Code window.

- Under the Staged Changes heading, select index.jsp.

- You'll see your change in a new window that highlights the new line you've added to index.jsp!

- Nice, these changes are what we want to save and send to GitHub. Let's do that with these commands:

git commit -m "Add new line to index.jsp"

git push

🙋♀️ Help! My terminal isn't letting me enter commands!

This doesn't apply to everyone, but your terminal might stay stuck on the previous step, which showed you your staged changes. Enter q into the terminal to quit this view and return to running commands.

Extra for Experts: Interesting, why would my terminal stay stuck?

When you run certain Git commands like git diff or git log, Git sends long output to a pager (this is the default behavior on most Unix-like systems, including your Amazon Linux EC2 instance). The pager lets you scroll through information that can't fit in a single terminal window. The terminal looks "stuck" because it's waiting for you to finish reviewing the output, and you can't enter new commands until you quit the pager view.
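If you'd rather not deal with the pager at all, Git's `--no-pager` option prints output straight to the terminal. The sketch below recreates a staged index.jsp edit in a throwaway repository, using hypothetical file contents and identity. It then shows the diff without a pager, plus `--name-only` for a one-line summary.

```bash
#!/usr/bin/env bash
DEMO_DIR="$(mktemp -d)"
git init -q "$DEMO_DIR"
printf '<p>line one</p>\n' > "$DEMO_DIR/index.jsp"
git -C "$DEMO_DIR" add .
git -C "$DEMO_DIR" -c user.name="Demo User" -c user.email="demo@example.com" \
  commit -q -m "Initial"

# Add a line and stage it, like the index.jsp edit in this step.
printf '<p>new line</p>\n' >> "$DEMO_DIR/index.jsp"
git -C "$DEMO_DIR" add .

# --no-pager prints directly to the terminal, so there is nothing to quit with q.
git --no-pager -C "$DEMO_DIR" diff --staged

# --name-only lists just the files that have staged changes.
STAGED="$(git -C "$DEMO_DIR" diff --staged --name-only)"
echo "Staged files: $STAGED"
```

Setting the environment variable `GIT_PAGER=cat` for a single command has the same effect.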

- You might need to enter your username and token again to complete your push.

💡 I have to enter my username and password AGAIN?!

This is because we set up the connection to our GitHub repo using its HTTPS URL. Using HTTPS is straightforward compared to other options like SSH, but by default Git doesn't save your HTTPS credentials, for security reasons. That's why Git asks for your GitHub credentials 👏 every time 👏 you pull from or push to your GitHub repo.

Top tip: run `git config --global credential.helper store` before your next push. Git will then save your username and token the next time you enter them, so you won't be asked again. Keep in mind that the store helper saves them unencrypted in a plain-text file (~/.git-credentials) on your EC2 instance.
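If you'd rather not keep your token on disk, Git also ships a `cache` helper that holds credentials in memory for a limited time. This sketch sets the helper on a throwaway repository, so your global settings stay as they are. The one-hour timeout is just an example value.

```bash
#!/usr/bin/env bash
DEMO_DIR="$(mktemp -d)"
git init -q "$DEMO_DIR"

# "cache" keeps credentials in memory for --timeout seconds (here, 1 hour)
# instead of writing them to ~/.git-credentials like "store" does.
git -C "$DEMO_DIR" config credential.helper 'cache --timeout=3600'

# Read back the repository-level setting.
HELPER="$(git -C "$DEMO_DIR" config --local --get credential.helper)"
echo "credential.helper = $HELPER"
```

Once the timeout expires (or the instance reboots), Git prompts for your username and token again. Add `--global` to apply the helper to every repository on the instance.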

- Head back to your GitHub tab - do you see your changes now?

In this optional section, we'll add a shiny cherry on top of your GitHub repository: a README file.

- Set up a README.md file in your project directory.

💡 What is a README file?

A README is a document that introduces and explains your project, like what the project does, how to set it up, and how to use it.

Having a README is super common practice in software development (not just on GitHub).

When recruiters or potential collaborators look at your project, a well-organized README can really boost their impression of your work and showcase your documentation skills!

- Write your README using the following template:

# Java Web App Deployment with AWS CI/CD

Welcome to this project combining Java web app development and AWS CI/CD tools!

<br>

## Table of Contents

- [Introduction](#introduction)

- [Technologies](#technologies)

- [Setup](#setup)

- [Contact](#contact)

- [Conclusion](#conclusion)

<br>

## Introduction

This project is used for an introduction to creating and deploying a Java-based web app using AWS, especially their CI/CD tools.

The deployment pipeline I'm building around the Java web app in this repository is invisible to the end-user, but makes a big impact by automating the software release processes.

<br>

## Technologies

Here’s what I’m using for this project:

- **Amazon EC2**: I'm developing my web app on Amazon EC2 virtual servers, so that software development and deployment happens entirely on the cloud.

- **VS Code**: For my IDE, I chose Visual Studio Code. It connects directly to my development EC2 instance, making it easy to edit code and manage files in the cloud.

- **GitHub**: All my web app code is stored and versioned in this GitHub repository.

- **[COMING SOON] AWS CodeArtifact**: Once it's rolled out, CodeArtifact will store my artifacts and dependencies, which is great for high availability and speeding up my project's build process.

- **[COMING SOON] AWS CodeBuild**: Once it's rolled out, CodeBuild will take over my build process. It'll compile the source code, run tests, and produce ready-to-deploy software packages automatically.

- **[COMING SOON] AWS CodeDeploy**: Once it's rolled out, CodeDeploy will automate my deployment process across EC2 instances.

- **[COMING SOON] AWS CodePipeline**: Once it's rolled out, CodePipeline will automate the entire process from GitHub to CodeDeploy, integrating build, test, and deployment steps into one efficient workflow.

<br>

## Setup

To get this project up and running on your local machine, follow these steps:

1. Clone the repository:

```bash

git clone https://github.com/yourusername/nextwork-web-project.git

```

2. Navigate to the project directory:

```bash

cd nextwork-web-project

```

3. Install dependencies:

```bash

mvn install

```

<br>

## Contact

If you have any questions or comments about the NextWork Web Project, please contact:

Your Name - [Your Email](mailto:YOUR_EMAIL)

<br>

## Conclusion

Thank you for exploring this project! I'll continue to build this pipeline and apply my learnings to future projects.

A big shoutout to **[NextWork](https://learn.nextwork.org/app)** for their project guide and support. [You can get started with this DevOps series project too by clicking here.](https://learn.nextwork.org/projects/aws-devops-vscode?track=high)

💡 Woah! Is this... code?

Nope! Instead of code, this is Markdown, a lightweight markup language for formatting text that you'll display on a webpage. With Markdown, you can make words bold, create headers, add links, and use bullet points, all with simple symbols added to your text.

It's useful for creating documents like README files that need to look clean and easy to read without complex software, making it a favorite for writing on platforms like GitHub.

💡 What do the different symbols mean?

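Here's a quick Markdown cheatsheet!

Headers

- `#` for a top-level heading, `##` for a second-level heading, `###` for a third-level heading, and so on

Text styling

- `**text**` to make text bold

- `*text*` to make text italic

- `~~text~~` to strike through text

Lists

- `-` or `*` for unordered bullet lists

- `1.`, `2.`, etc. for ordered lists

Links

- `[link text](URL)` to add a link

Images

- `![alt text](image URL)` to embed an image

Code

- Put text between single backticks for inline code, like `this`

- Use triple backticks ``` for multi-line code blocks

Blockquotes

- Use `>` for block quotes

> Like this one!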

- Complete this README file:

  - In the `## Contact` section, replace Your Name with your name.

  - Replace Your Email, and the email placeholder in the `mailto:` link, with your email address.

- Make this README file your own by adding a few extra details. Here are a few ideas on what you could add:

  - `## Introduction`: Add two bullet points on why you're doing this project or how it fits into your career or personal growth goals.

  - `## Technologies`: Add any challenges you've faced and the solutions you've found while using these tools.

  - `## Setup`: Add troubleshooting tips for common setup issues.

  - `## Contact`: Add a link to your LinkedIn profile or professional website, and add your professional profile photo.

- Push the README into your GitHub repo.

- You should see a shiny new README file displayed in your GitHub repository.

We have learnt how to:

- Set up a GitHub repository: You created a new repository on GitHub to securely store and manage the source code for your Java web app.

- ☁️ Configure Git and a local repository: You established your Git identity with your name and email. You also initialized a local repo with your GitHub repo as the remote origin.

- 🫸 Make your first commit and push: You added all your files to the staging area, committed them, and pushed these changes to the master branch of your GitHub repository, making your code available in the cloud.

- 💎 Set up a README file for your repo: You gave your GitHub repo the ultimate cherry on top, an informative and welcoming README file that introduces your project and offers tips on how to use the code.

Next up, we need to find a way to store our web app's packages and dependencies, which are pieces of code your web app relies on in order to work. This is where AWS CodeArtifact comes into play 👀

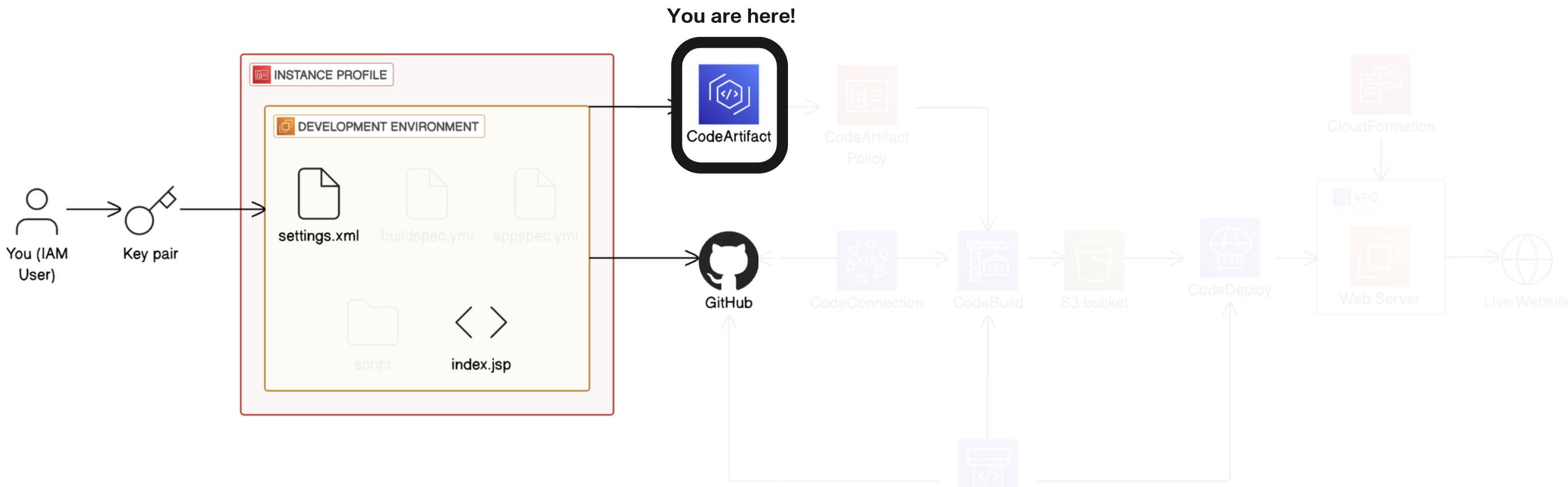

Welcome to Part-3 of the 6 Parts DevOps Challenge!

Today, we're working with AWS CodeArtifact to secure your web app's packages.

- An AWS account - Create one here!

If you've been following along since Part-1 of this project, you can continue. However, if you deleted your resources at any point, go back to Part-1 (Set Up a Web App in the Cloud) and set up everything this project needs, up to this part. Good luck!

💡 Why am I learning about AWS CodeArtifact?

When building apps, you don't create everything from scratch. Instead, you often use pre-made "packages" (chunks of code) that other developers have already created. It's just like making pasta with pre-made pasta sauce, instead of making everything from scratch.

CodeArtifact is an artifact repository, which means you use it to store all of your app's packages in one place. It's an important part of a CI/CD pipeline because it makes sure an engineering team is always using the same, verified versions of packages when building and deploying the app, which reduces errors and security risks!

Get ready to:

- 🗂️ Set up CodeArtifact as a repository for your project's dependencies.

- 🛡️ Use IAM roles and policies to give your web app access to CodeArtifact.

- ✅ Verify your web app's connection to CodeArtifact!

- 💎 Become a package uploader - create and add your own packages to your CodeArtifact repository!

Now, let's set up AWS CodeArtifact, a fully managed artifact repository service. We'll use it to store and manage our project's dependencies, ensuring secure and reliable access to Java packages.

This is important because CodeArtifact provides a centralized, secure, and scalable way to manage dependencies for our Java projects, improving build consistency and security.

In this step, you're going to:

- Create and configure a new CodeArtifact repository.

- In the AWS Management Console, head to CodeArtifact.

- In the CodeArtifact console, in the left-hand menu, click on Repositories.

- Click the Create repository button to start creating a new repository.

💡 What is AWS CodeArtifact?

CodeArtifact is a secure, central place to store all your software packages. When you're building an application, you typically use dozens of external packages or libraries: things other developers have created that you don't want to build from scratch.

An artifact repository gives you a consistent, reliable place to store and retrieve these components. This gives you three big benefits:

- 1️⃣ Security: Everyone in a team retrieves packages from a secure repository (CodeArtifact), instead of downloading from unsafe sources on the internet (hello, security risks)!

- 2️⃣ Reliability: If public package websites go down, you have backups in your CodeArtifact repository.

- 3️⃣ Control: Your team can easily share and use the same versions of packages, instead of everyone working with a different version of the same package.

- Name your new CodeArtifact repository nextwork-devops-cicd.

- In the Repository description - optional field, enter:

This repository stores packages related to a Java web app created as a part of NextWork's CI/CD Pipeline series.

Next, configure Maven Central as an upstream repository for your CodeArtifact repository:

- Under Public upstream repositories - optional, select the checkbox next to maven-central-store.

💡 What are upstream repositories?

Upstream repositories are like backup libraries that your primary repository can access when it doesn't have what you need. Without an upstream repository, any package that isn't already stored in your CodeArtifact repository would be missing, and your build would fail!

When your application looks for a package that isn't in your CodeArtifact repository, CodeArtifact checks its upstream repositories (like Maven Central in our case) to find it.

Once found, CodeArtifact stores a copy in your repository for future use. This gives you three major benefits that you'll appreciate as your projects grow:

- Speed - After the first download from Maven Central, fetching packages directly from CodeArtifact speeds up your builds.

- Reliability - If Maven Central becomes unavailable, your builds keep working because you've got copies in your own repository.

- Control - You can audit which external packages are being used in your organization and even block problematic ones if needed.

💡 What is Maven Central?

Maven Central is essentially the App Store of the Java world: it's the most popular public repository where developers publish and share Java libraries. When you're building Java applications, chances are you'll need packages from Maven Central. It contains virtually every popular open-source Java library out there, from database connectors to testing frameworks and UI components.

By connecting our CodeArtifact repository to Maven Central, we're setting up a system where we get the best of both worlds: access to all these public libraries, but with the added benefits of caching, control, and consistency that come with our private CodeArtifact repository.

- Set up nextwork as your CodeArtifact domain.

💡 What is a CodeArtifact domain?

A CodeArtifact domain is like a folder that holds multiple repositories belonging to the same project or organization. We like using domains because they give you a single place to manage permissions and security settings that apply to all repositories inside it. This is much more convenient than setting up permissions for each repository separately, especially in large companies where many teams need access to different repositories.

With domains, you can ensure consistent security controls across all your package repositories in an efficient way.

- Under Domain selection, choose This AWS account.

- Under Domain name, enter nextwork.

- Click Next to proceed.

- Now we're on the last page! Let's review and create.

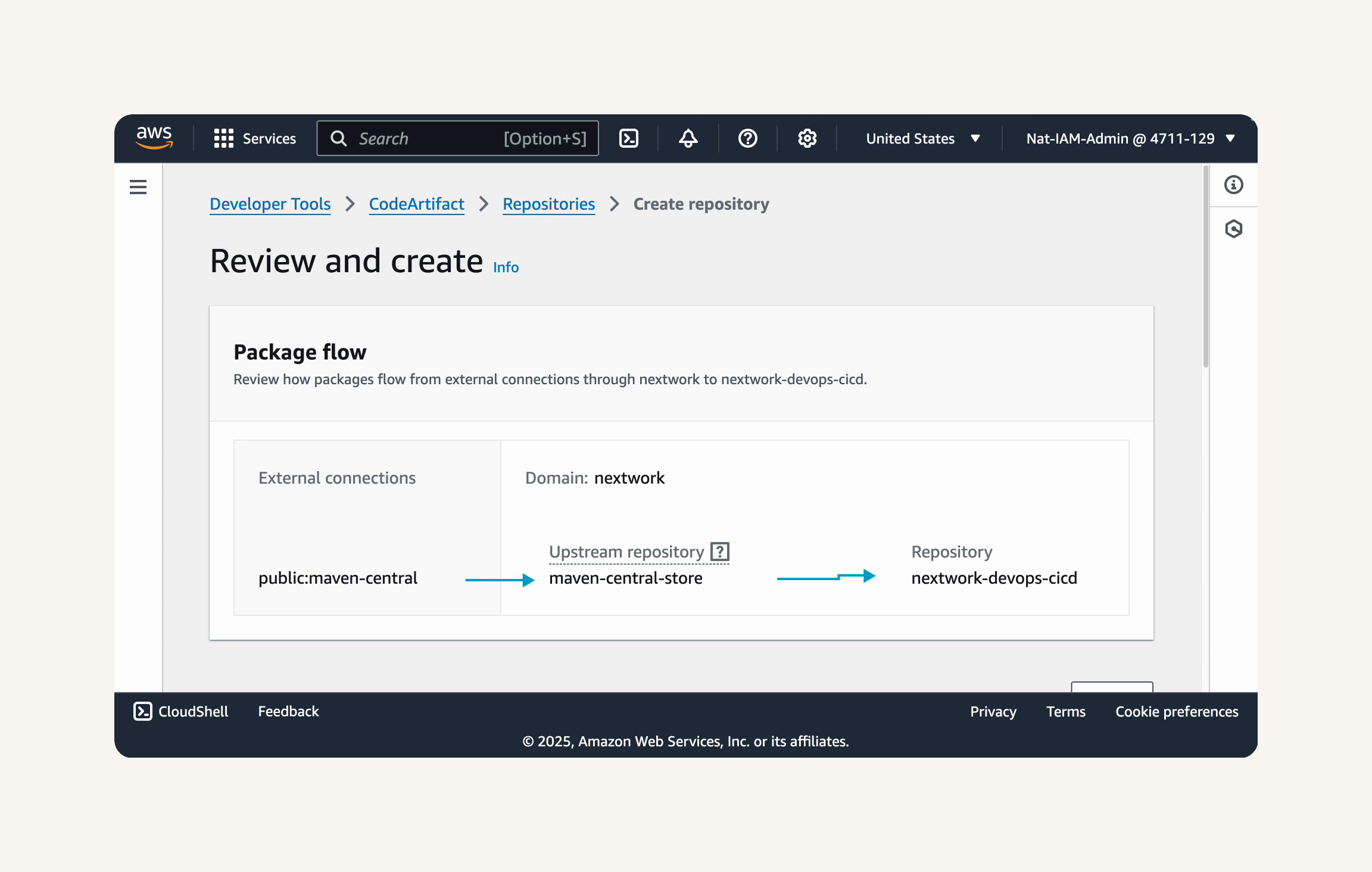

Review the details of your repository configuration, including the package flow at the top.

💡 What is this package flow diagram?

The package flow diagram shows you exactly how dependencies will travel to your application. When your project needs a dependency, it first looks in your CodeArtifact repository. If the package is already there, great! It uses that version. If not, CodeArtifact automatically reaches out to Maven Central to fetch it.

This is important to understand because it affects how quickly your builds run and how resilient they are to network issues. The first time you request a package, it might take a moment longer as CodeArtifact fetches it from Maven Central. But every build afterwards will be faster because the package is now cached in your repository. It's like the difference between ordering groceries for delivery and already having them in your fridge!

- Finish creating the repository.

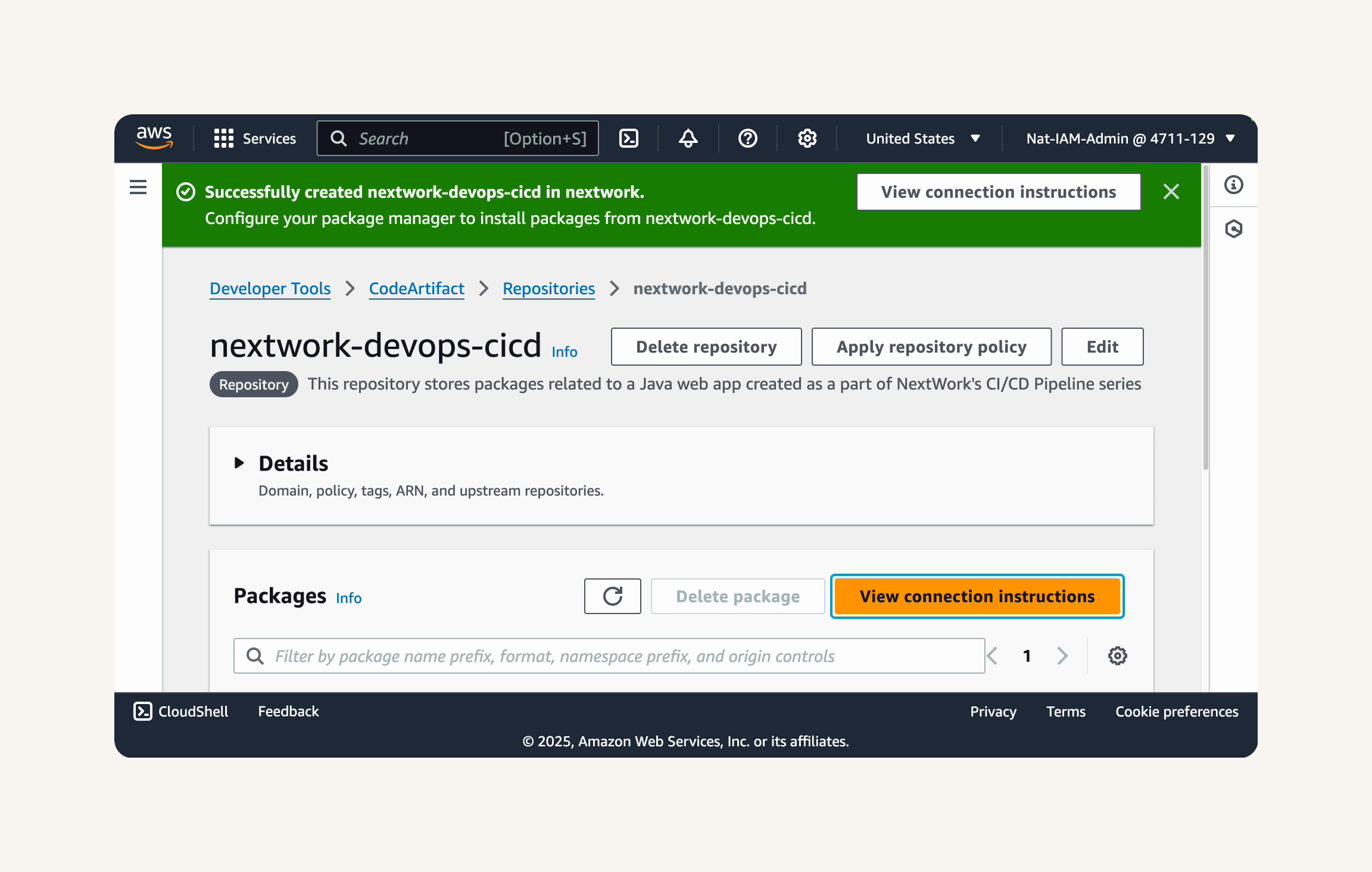

- You'll be taken to the repository's details page.

- You should see a success message at the top of the page, telling us that the CodeArtifact repository nextwork-devops-cicd has been successfully created.

Great job! You've set up your AWS CodeArtifact repository.

In the next step, we'll start connecting our web app's package manager, Maven, to this repository.

For Maven to start working with CodeArtifact, we need to create an IAM role that grants our EC2 instance the permissions it needs to access CodeArtifact.

Otherwise, Maven can try all it wants to store and retrieve packages from CodeArtifact, but your EC2 instance simply won't be allowed to! And going one layer deeper, IAM roles are made up of policies, so we need to create a policy first before setting up the role.

In this step, you're going to:

- Try connecting Maven with CodeArtifact (error!)

- Create a new IAM policy.

- Set up the policy to grant an EC2 instance access to CodeArtifact.

- On your newly created repository's page, click the View connection instructions button at the top right corner.

- In the Connection instructions page, we're configuring how Maven will connect to your CodeArtifact repository.

- For Operating system, select Mac and Linux.

- For Package manager client, select mvn (Maven).

💡 How did we know to choose Mac & Linux for operating system, mvn as package manager?

Even if you're doing this project on a Windows computer, don't forget that the EC2 instance we're using was launched with Amazon Linux 2023 as its AMI!

`mvn` is the command for Maven, the tool we installed to manage the build process for our Java web app. Maven is also our package manager, i.e. the tool that helps us install, update and manage the external packages our web app uses.

- 🚨 Double check that you're using Mac and Linux as the operating system. Even if you're doing this project on a Windows computer, Mac and Linux is the right choice, because your EC2 instance is an Amazon Linux 2023 instance!

- Make sure that Configuration method is set to Pull from your repository.

- Nice! The menu will now show you the steps and commands needed to connect Maven to your CodeArtifact repository.

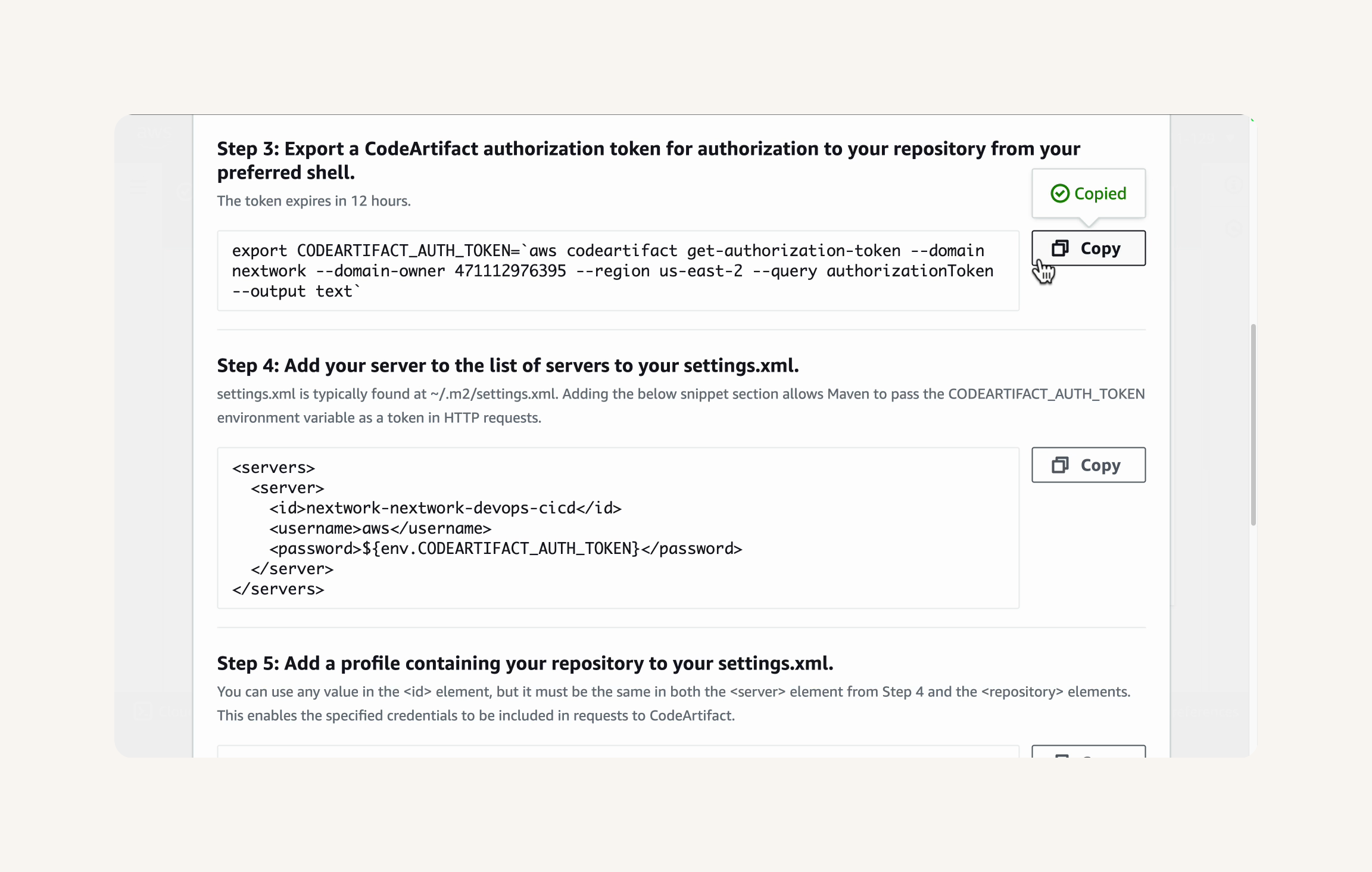

- In the Connection instructions dialog, find Step 3: Export a CodeArtifact authorization token....

💡 What is this step for?

😳 "Export a CodeArtifact authorization token for authorization to your repository from your preferred shell" sounds a little technical!

It actually just means you need to run the command in Step 3 to give your terminal a temporary password. That password will grant your development tools (i.e. Maven) access to your repositories in CodeArtifact.

Maven uses this token whenever it needs to fetch something from your CodeArtifact repository.
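To make Step 3 less mysterious, here's a rough sketch of what the console's commands set up. The account ID and Region are hypothetical placeholders, so copy the exact command from your own connection instructions rather than this one. The endpoint URL follows the format CodeArtifact uses for Maven repositories. The token request only runs if the AWS CLI is installed and can find credentials, which, as the next step shows, it can't yet.

```bash
#!/usr/bin/env bash
# Hypothetical values: use the ones shown in your own connection instructions.
DOMAIN="nextwork"
DOMAIN_OWNER="123456789012"   # your 12-digit AWS account ID
REGION="us-east-1"            # the Region you created the repository in
REPO="nextwork-devops-cicd"

# Maven endpoint of the repository, i.e. the URL Maven is configured to download from.
CODEARTIFACT_URL="https://${DOMAIN}-${DOMAIN_OWNER}.d.codeartifact.${REGION}.amazonaws.com/maven/${REPO}/"
echo "Repository endpoint: $CODEARTIFACT_URL"

# Request a temporary token only if the AWS CLI exists and has working credentials.
if command -v aws >/dev/null 2>&1 && aws sts get-caller-identity >/dev/null 2>&1; then
  CODEARTIFACT_AUTH_TOKEN="$(aws codeartifact get-authorization-token \
    --domain "$DOMAIN" --domain-owner "$DOMAIN_OWNER" --region "$REGION" \
    --query authorizationToken --output text)"
  export CODEARTIFACT_AUTH_TOKEN
  echo "Token exported (${#CODEARTIFACT_AUTH_TOKEN} characters)."
else
  echo "AWS CLI or credentials not available; skipping the token request."
fi
```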

- Copy the entire command in Step 3.

-

Go back to your VS Code terminal, which is connected to your EC2 instance.

-

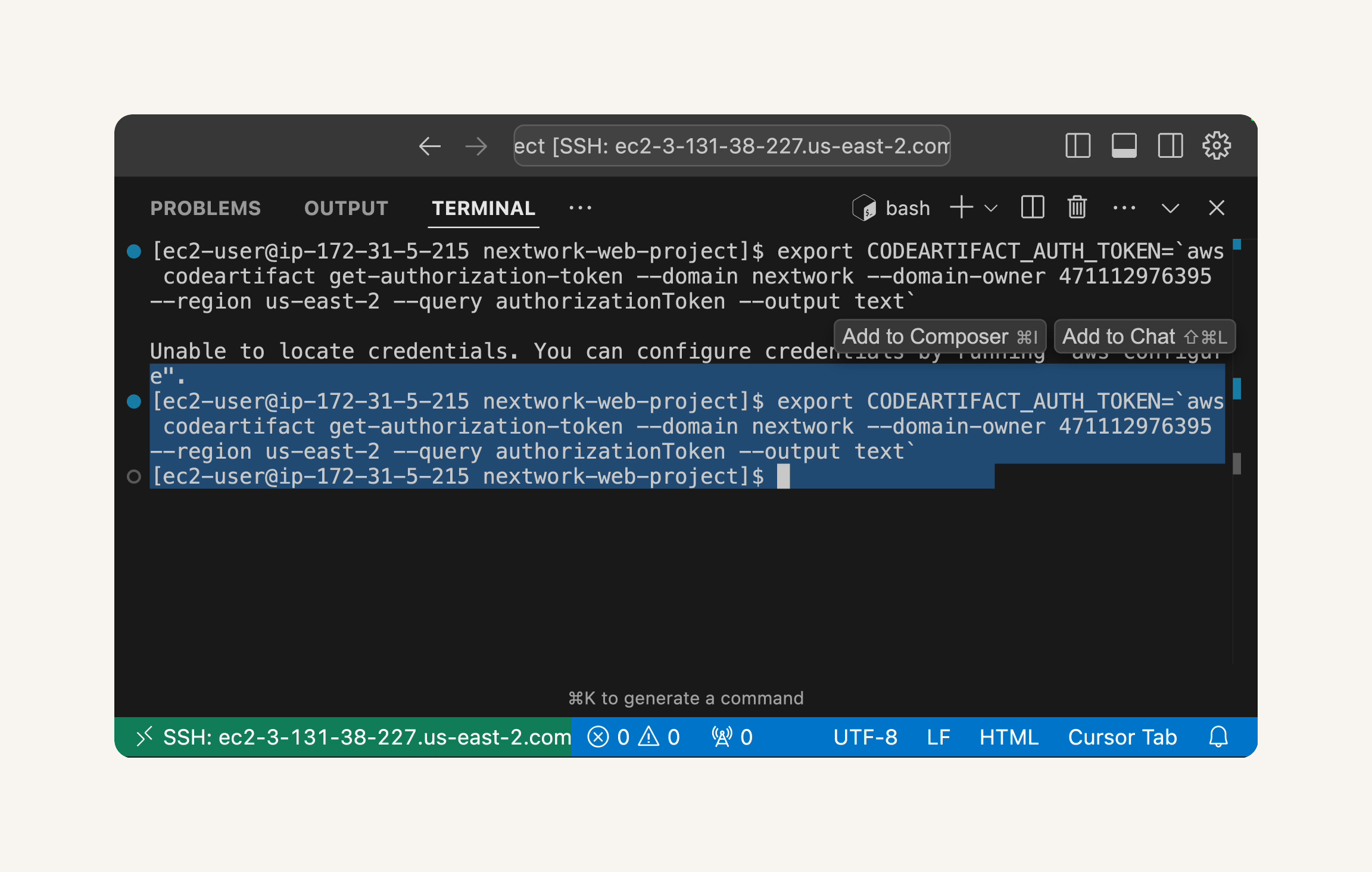

Paste the copied command into the terminal and press Enter to run it.

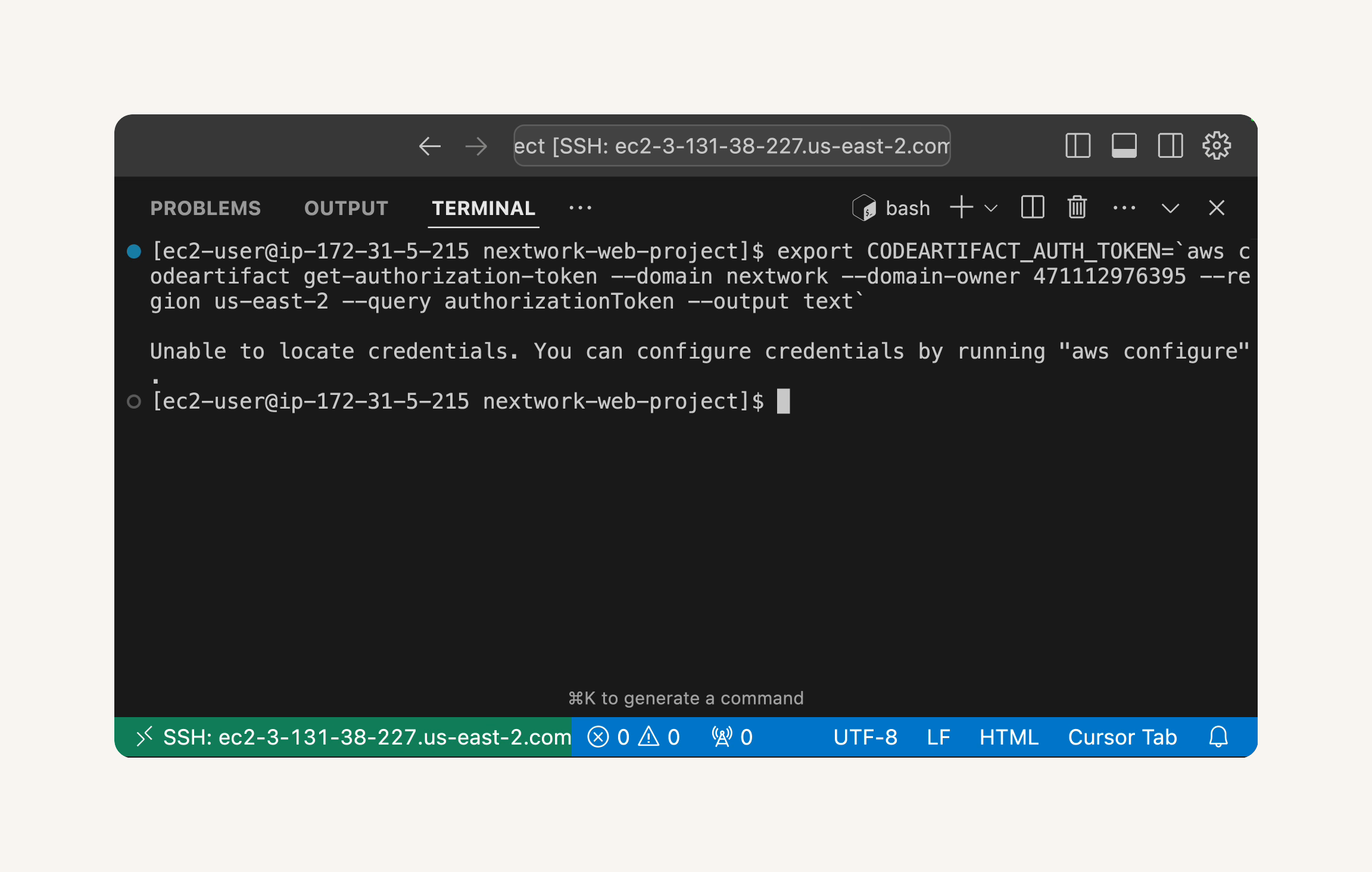

- Looks like we got an error!

💡 Why did we get this error?

That `Unable to locate credentials` error is actually a good security feature in action! Your EC2 instance is essentially saying, "I don't know who you are, so I can't let you access CodeArtifact."

This happens because, by default, your EC2 instance has no AWS credentials, so the AWS CLI on it can't call your other AWS services (including CodeArtifact). This is intentional: AWS follows the "principle of least privilege," meaning resources only get the minimum permissions they need to function.
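If you want to confirm what's going on, this sketch asks the EC2 instance metadata service (IMDSv2) whether an IAM role is attached to the instance. It assumes `curl` is installed, which it is on Amazon Linux 2023. If you run it off EC2, or on an instance with no role attached, the requests fail and the script reports that no role was found.

```bash
#!/usr/bin/env bash
# 169.254.169.254 is the EC2 instance metadata endpoint; it only answers on EC2.
IMDS="http://169.254.169.254/latest"

# IMDSv2: request a short-lived session token first (-f: no output on HTTP errors).
TOKEN="$(curl -sf --max-time 2 -X PUT "$IMDS/api/token" \
  -H "X-aws-ec2-metadata-token-ttl-seconds: 60")"

ROLE=""
if [ -n "$TOKEN" ]; then
  # Returns the attached role's name, or an HTTP 404 when there is no role.
  ROLE="$(curl -sf --max-time 2 -H "X-aws-ec2-metadata-token: $TOKEN" \
    "$IMDS/meta-data/iam/security-credentials/")"
fi

if [ -n "$ROLE" ]; then
  STATUS="IAM role attached: $ROLE"
else
  STATUS="No IAM role found (or not running on EC2)"
fi
echo "$STATUS"
```

Once you've attached a role later in this part, running this again should print the role name. At that point, `aws sts get-caller-identity` should also succeed instead of failing with `Unable to locate credentials`.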

- In the AWS Management Console, head to the IAM console.

💡 What is IAM?

IAM stands for Identity and Access Management. You'll use AWS IAM to manage the access level that other users and services have to your resources.

- In the IAM console, in the left-hand menu, click on Policies.

- Click the Create policy button to start creating a new IAM policy.

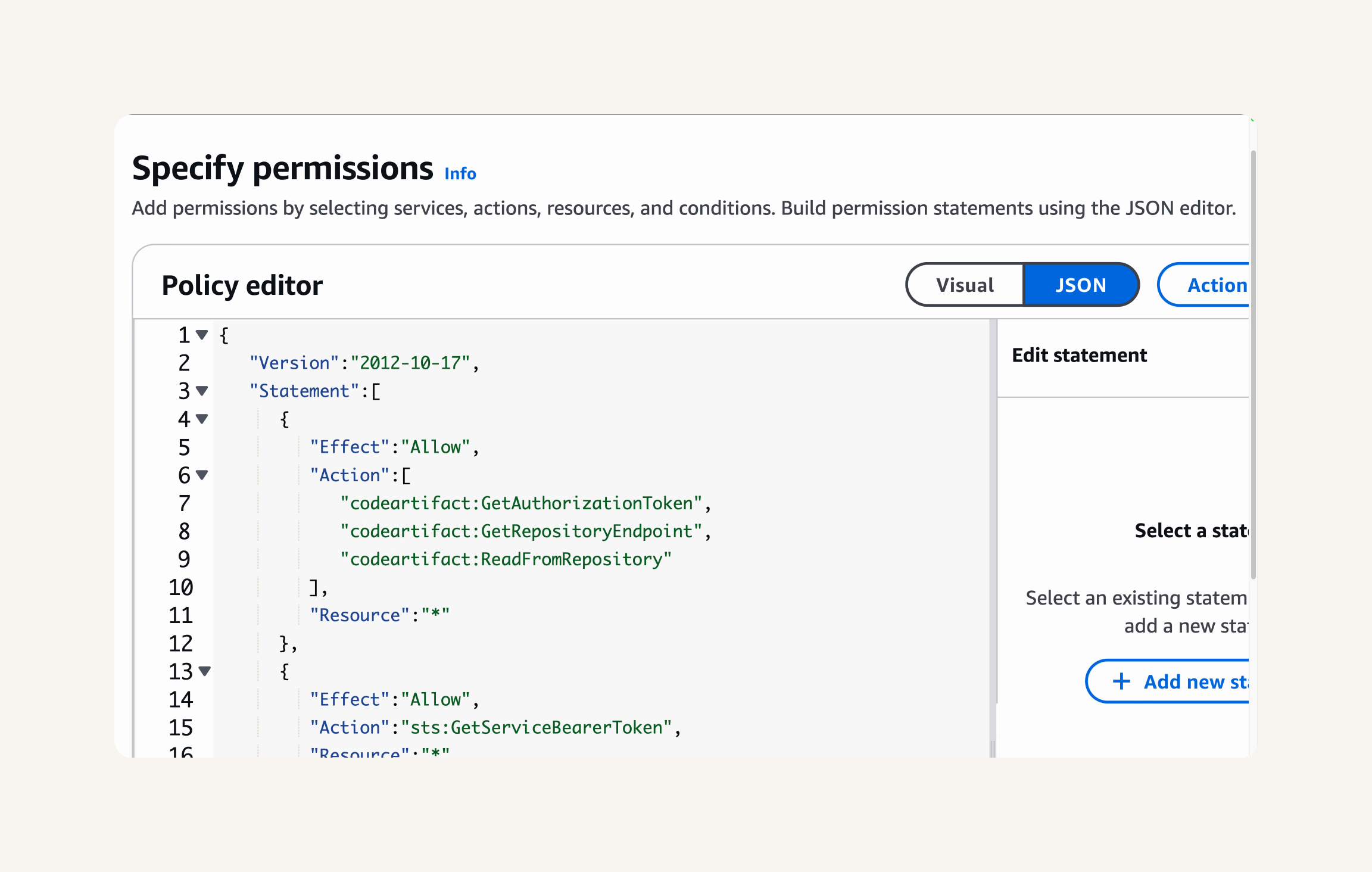

- On the Create policy page, select the JSON tab.

- Replace the default content in the text editor with the following JSON policy document. Copy and paste the entire JSON code block:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Action": [
              "codeartifact:GetAuthorizationToken",
              "codeartifact:GetRepositoryEndpoint",
              "codeartifact:ReadFromRepository"
            ],
            "Resource": "*"
          },
          {
            "Effect": "Allow",
            "Action": "sts:GetServiceBearerToken",
            "Resource": "*",
            "Condition": {
              "StringEquals": {
                "sts:AWSServiceName": "codeartifact.amazonaws.com"
              }
            }
          }
        ]
      }

💡 What's in this policy?

This JSON document is like a security rulebook. It's written in a specific format that AWS understands, with two main parts:

The first part (the three codeartifact actions) gives permission to get authorization tokens, look up repository endpoints, and read packages from repositories.

The second part (`sts:GetServiceBearerToken`) is required for CodeArtifact to issue its authorization token, and the Condition limits this permission to the CodeArtifact service only.

`"Resource": "*"` means these permissions apply to all relevant resources. The policy still follows the spirit of the "principle of least privilege": it only grants read access, not permission to publish or delete packages, which strengthens your security posture.

- After pasting the JSON policy document, click the Next button at the bottom right.

- On the Review policy page, in the Policy name field, enter codeartifact-nextwork-consumer-policy.

- In the Description - optional field, add a description like:

Provides permissions to read from CodeArtifact. Created as a part of NextWork CICD Pipeline series.

- Review the Summary of your policy to ensure the permissions and details are correct.

- Click the Create policy button to create the IAM policy.

- After clicking Create policy, you should see a success message at the top of the IAM Policies page, telling us that the policy codeartifact-nextwork-consumer-policy has been successfully created.

Well done! You've created an IAM policy that will allow EC2 instances to access CodeArtifact.
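If you prefer the terminal to the console, this sketch saves the same policy to a file and checks that the JSON parses. The check uses Python's built-in `json.tool` module and assumes `python3` is installed. The sketch calls `aws iam create-policy` only if you opt in with `CREATE_POLICY=1`, an environment variable made up for this example. Running the AWS command also needs credentials with IAM permissions, which your EC2 instance doesn't have at this point.

```bash
#!/usr/bin/env bash
POLICY_FILE="$(mktemp -d)/codeartifact-consumer-policy.json"

# Same policy document as in the console step, saved for the AWS CLI.
cat > "$POLICY_FILE" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "codeartifact:GetAuthorizationToken",
        "codeartifact:GetRepositoryEndpoint",
        "codeartifact:ReadFromRepository"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": "sts:GetServiceBearerToken",
      "Resource": "*",
      "Condition": {
        "StringEquals": { "sts:AWSServiceName": "codeartifact.amazonaws.com" }
      }
    }
  ]
}
EOF

# Catch copy-paste mistakes (missing commas or brackets) before AWS does.
python3 -m json.tool "$POLICY_FILE" >/dev/null && echo "Policy JSON is valid."

# Optional (hypothetical opt-in variable): create the policy from the CLI.
if [ "${CREATE_POLICY:-0}" = "1" ]; then
  aws iam create-policy \
    --policy-name codeartifact-nextwork-consumer-policy \
    --policy-document "file://$POLICY_FILE" \
    --description "Provides permissions to read from CodeArtifact."
fi
```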

Now that we've created the IAM policy for CodeArtifact access, let's attach it to an IAM role and then associate that role with our EC2 instance. This will grant our EC2 instance the permissions it needs to securely access CodeArtifact. Finally, we'll verify the connection to CodeArtifact from our EC2 instance.

This is important because attaching the IAM role to our EC2 instance is what actually grants the instance the permissions defined in the policy, enabling secure access to CodeArtifact.

In this step, you're going to:

- Create a new IAM role for EC2 that has your new policy attached.

- Attach the IAM role to your EC2 instance.

- Re-run the export token command, this time seeing a successful response 🙏

- In the IAM console, in the left-hand menu, click on Roles.

💡 What's the difference between a policy and a role?

Think of a policy as the actual list of permissions. It's a document that specifies exactly which actions are allowed or denied on which AWS resources, for example "allow reading from this S3 bucket" or "allow publishing to CodeArtifact."

A role is the container that holds these policies and can be assumed by users, applications, or AWS services. You attach policies to roles, then assign the role to whoever needs those permissions.

This separation is powerful because:

- You can attach the same policy to multiple roles
- A role can have multiple policies attached
- You can modify a policy once and affect all roles using it
- Roles are assumed temporarily (the credentials they hand out expire), while policies are the lasting definitions of what is allowed

It's like the difference between writing down rules (policies) and creating a job position (role) that follows those rules. The position can be filled by different people or services, but the rules remain consistent.
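The "job position" side of this analogy also has a document of its own: the role's trust policy. The trust policy says who may assume the role, and the attached policies say what the role may do. The sketch below writes the standard trust policy that lets EC2 assume a role to a file with a hypothetical name. The comments then list AWS CLI calls that roughly mirror the console steps that follow. `<ACCOUNT_ID>` and `<INSTANCE_ID>` are placeholders. The console creates the instance profile for you, so the commented calls are only there to show what happens behind the scenes.

```bash
# Sketch: the trust policy that lets the EC2 service assume a role.
# It controls *who* can use the role; attached policies control *what* it may do.
cat > ec2-trust-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF

# Rough CLI equivalent of the console steps below (not run here).
# <ACCOUNT_ID> and <INSTANCE_ID> are placeholders.
# aws iam create-role --role-name EC2-instance-nextwork-cicd \
#   --assume-role-policy-document file://ec2-trust-policy.json
# aws iam attach-role-policy --role-name EC2-instance-nextwork-cicd \
#   --policy-arn arn:aws:iam::<ACCOUNT_ID>:policy/codeartifact-nextwork-consumer-policy
# (EC2 uses a role through an instance profile; the console creates one with the same name.)
# aws iam create-instance-profile --instance-profile-name EC2-instance-nextwork-cicd
# aws iam add-role-to-instance-profile --instance-profile-name EC2-instance-nextwork-cicd \
#   --role-name EC2-instance-nextwork-cicd
# aws ec2 associate-iam-instance-profile --instance-id <INSTANCE_ID> \
#   --iam-instance-profile Name=EC2-instance-nextwork-cicd
```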

- Click the Create role button to start creating a new IAM role.

- For Select entity type, choose AWS service.

- Under Choose a use case, select EC2 from the list of services.

- Click Next to proceed to the Add permissions step.

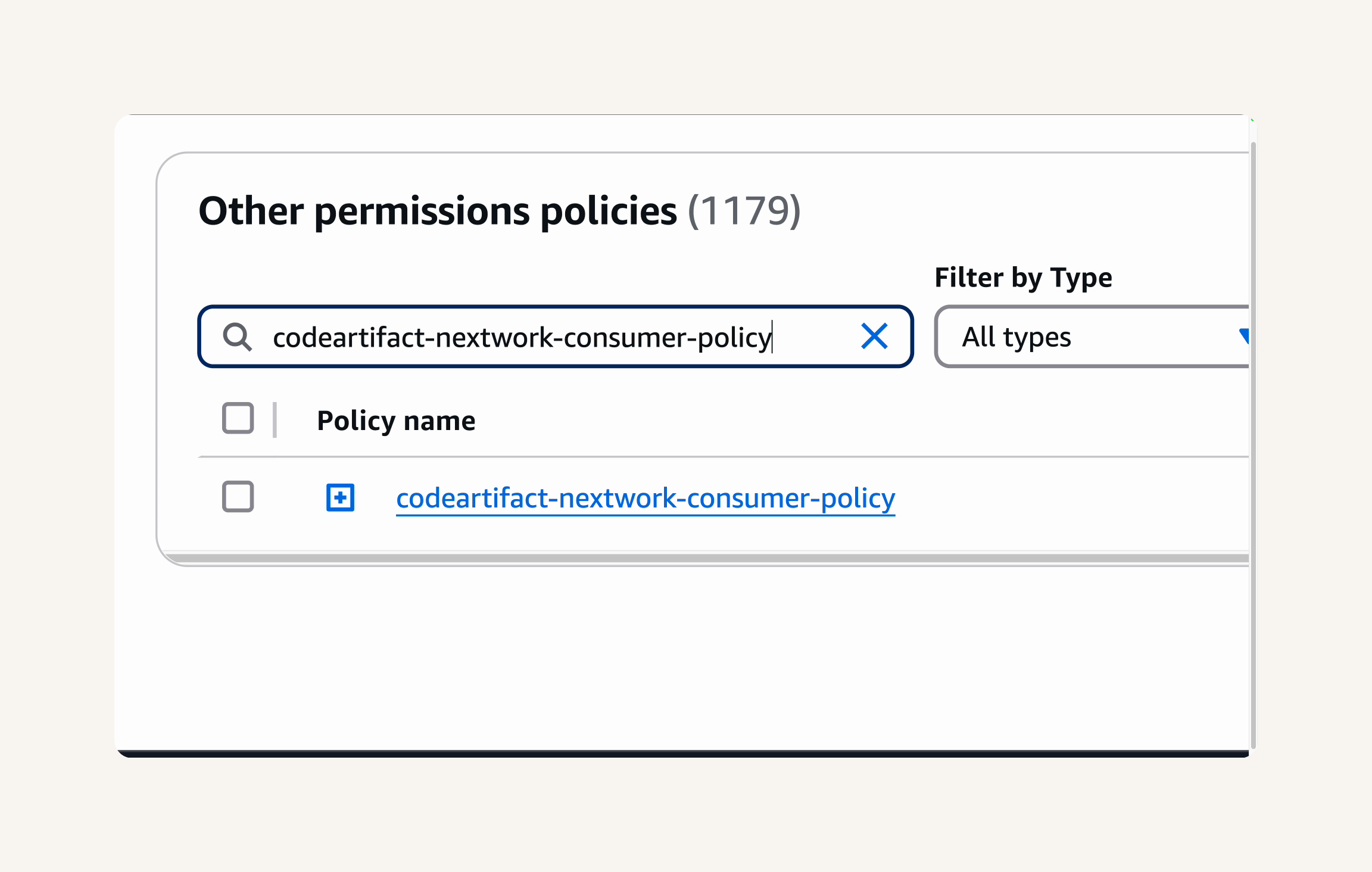

- In the Add permissions step, in the Filter policies search box, type `codeartifact-nextwork-consumer-policy`.

- Select the checkbox next to the `codeartifact-nextwork-consumer-policy` that you created in the previous step.

- Click Next to head to the Name, review, and create step.

- In the Name, review, and create step:

  - In the Role name field, enter `EC2-instance-nextwork-cicd`.

  - In the Description - optional field, enter:

    `Allows EC2 instances to access services related to the NextWork CI/CD pipeline series.`

- Next, on the review page, click the Create role button to create the IAM role.

- After clicking Create role, you should see a success message at the top of the IAM Roles page, telling us that the IAM role `EC2-instance-nextwork-cicd` has been successfully created. Your new role will be listed in the roles table.

Now, we need to associate this IAM role with your EC2 instance.

- Head back to the EC2 console.

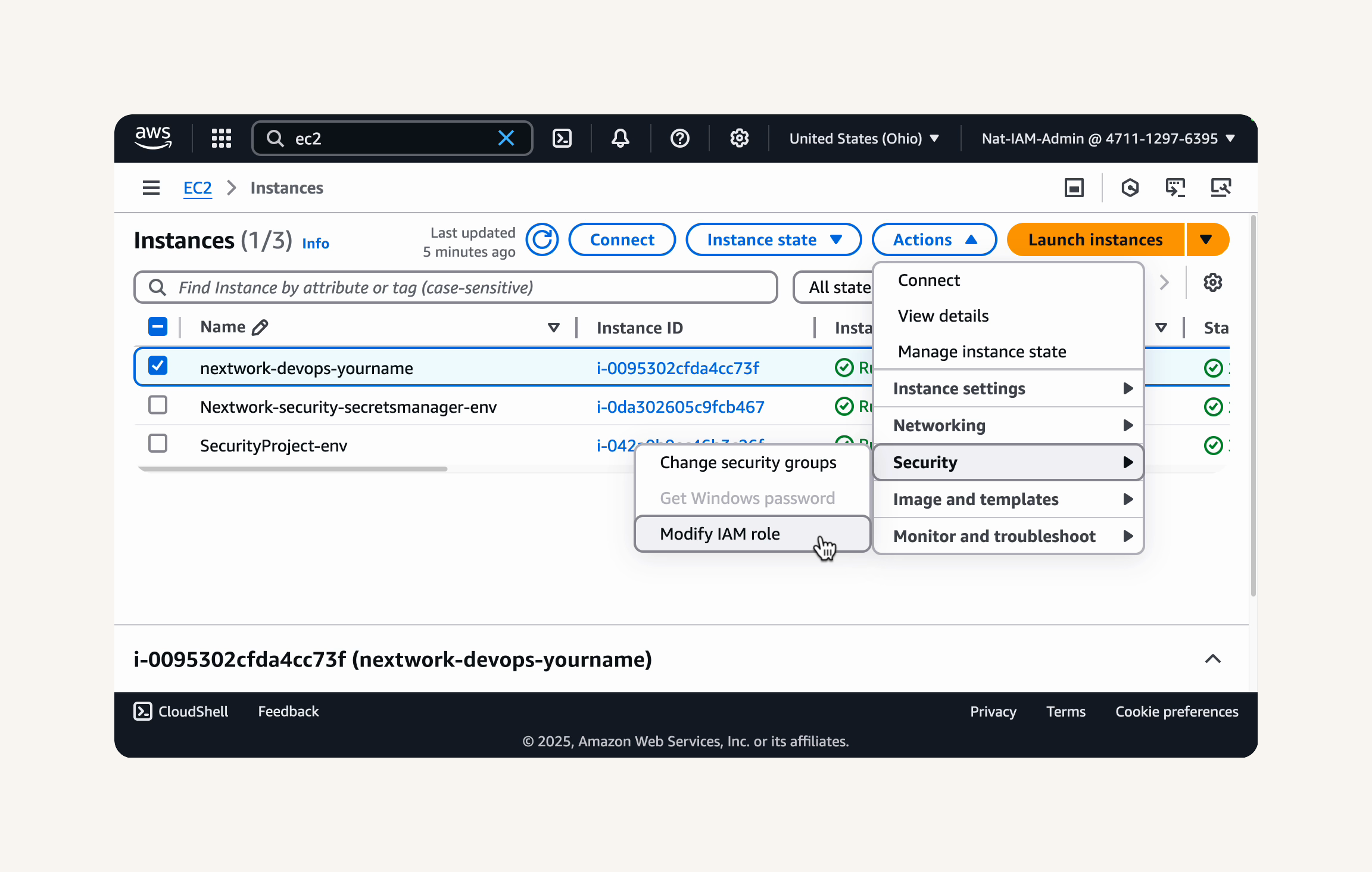

- Head to the Instances tab.

- Select your running EC2 instance (`nextwork-devops-yourname`).

- Click on Actions in the menu bar, then select Security, and then Modify IAM role.

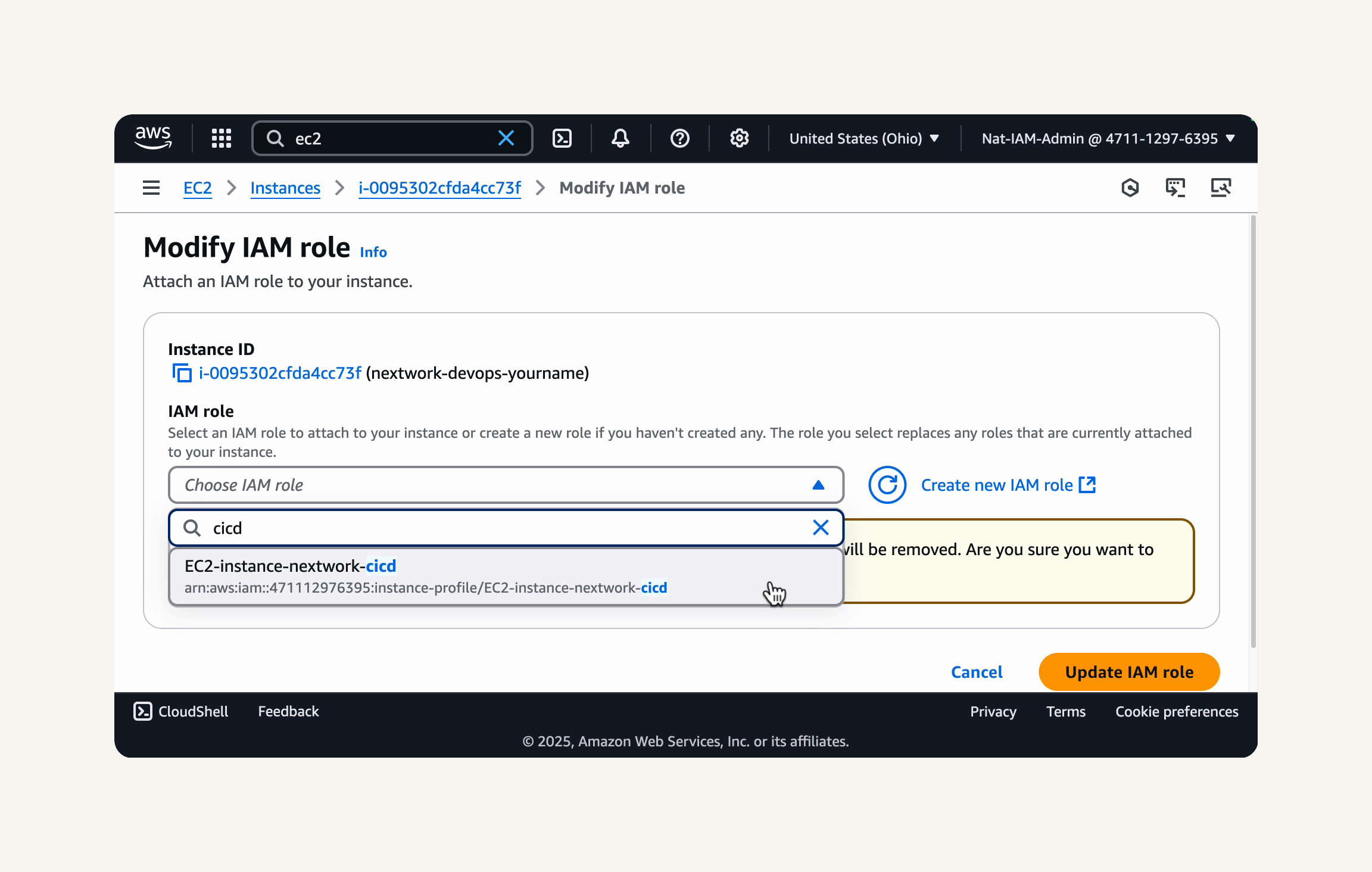

- In the Modify IAM role dialog box, select Refresh to load the IAM roles available for your EC2 instance.

- Under IAM role, select the IAM role you just created, `EC2-instance-nextwork-cicd`, from the dropdown menu.

- Select Update IAM role to attach the role to your EC2 instance.

- After attaching the IAM role, you should see a green banner at the top of the EC2 Instances dashboard confirming that the IAM role was successfully modified for your instance.

💡 Extra for Experts: How do IAM roles work with EC2 instances?

When you attach an IAM role to an EC2 instance, AWS automatically provides and rotates temporary security credentials for that instance. Tools running on the instance, such as the AWS CLI command that fetches our CodeArtifact token, can then use these temporary credentials to make AWS API calls without you having to manage credentials yourself.

That's why attaching a role is considered a best practice compared to using hardcoded credentials. (Technically, you attach an instance profile that wraps the role, and the IAM console created one with the same name for you.) Hardcoded credentials would mean running commands such as `aws configure` in your EC2 terminal to store permanent access keys on the instance.
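To watch these temporary credentials from inside the instance, you can query the instance metadata service (IMDSv2). This sketch wraps the `curl` calls in a function with a hypothetical name, and it only works when run on the EC2 instance itself. It prints the attached role's name and then only the non-secret lines of the credentials document, including `Expiration`, which shows when AWS will rotate them. The line filtering assumes the documented one-key-per-line JSON layout.

```bash
# Sketch: inspect the temporary credentials EC2 provides for the attached role.
# Only works on the EC2 instance itself; 169.254.169.254 is the instance metadata endpoint.
show_instance_role_credentials() {
  local imds="http://169.254.169.254/latest"
  local token role

  # IMDSv2: request a session token first (TTL of 21600 seconds = 6 hours).
  token=$(curl -fsS --max-time 2 -X PUT "$imds/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 21600") || return 1

  # This path lists the name of the role attached to the instance (404 if none).
  role=$(curl -fsS --max-time 2 -H "X-aws-ec2-metadata-token: $token" \
    "$imds/meta-data/iam/security-credentials/") || return 1
  echo "Attached role: $role"

  # This path returns JSON with AccessKeyId, SecretAccessKey, Token and Expiration.
  # Only the non-secret lines are printed, assuming one key per line.
  curl -fsS --max-time 2 -H "X-aws-ec2-metadata-token: $token" \
    "$imds/meta-data/iam/security-credentials/$role" |
    grep -E '"(Code|LastUpdated|Expiration)"'
}
```

If you run `show_instance_role_credentials` a few hours apart, the `Expiration` value should move forward as the credentials are rotated.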

Now that your EC2 instance has the necessary IAM role attached, let's re-run the command to export the CodeArtifact authorization token.

This time, your EC2 instance should be able to retrieve the token, since it has the necessary permissions from the IAM role.

- Head back to your VS Code terminal connected to your EC2 instance.

- Re-run the same export token command from Step 3.

💡 Recap: What is a CodeArtifact authorization token?

The authorization token is like a temporary ID badge that lets your build tools access CodeArtifact. When you run that token command, AWS checks your identity and issues this digital badge, which is valid for 12 hours by default. Your build tools (like Maven) then present this token whenever they need to grab something from your CodeArtifact repository.

Why use temporary tokens instead of permanent credentials? It's all about security! If a token is ever compromised, it expires within hours instead of giving permanent access. Plus, you don't have to worry about storing sensitive credentials in your build configuration files. The system handles the authentication process behind the scenes, making your life easier while keeping your repositories secure. Just remember that you'll need to refresh this token if you come back to the project after more than 12 hours!

- This command will retrieve a temporary authorization token for CodeArtifact and store it in an environment variable named `CODEARTIFACT_AUTH_TOKEN`.
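Because the token expires, it can help to keep the export command in a small function that you re-run whenever you return to the project. The function has to be defined in your current shell (pasted in or sourced) so that its `export` affects that session. This is a sketch. The function name and the variables `CA_DOMAIN`, `CA_DOMAIN_OWNER` (your AWS account ID) and `CA_REGION` are hypothetical, and their values should come from your own CodeArtifact connection instructions. `--duration-seconds 43200` just requests the 12-hour default explicitly (12 × 3600 = 43,200 seconds).

```bash
# Sketch: re-issue the CodeArtifact token and export it for Maven.
# CA_DOMAIN, CA_DOMAIN_OWNER and CA_REGION are hypothetical variable names;
# fill them in from your own CodeArtifact connection instructions.
refresh_codeartifact_token() {
  if [ -z "${CA_DOMAIN:-}" ] || [ -z "${CA_DOMAIN_OWNER:-}" ] || [ -z "${CA_REGION:-}" ]; then
    echo "Set CA_DOMAIN, CA_DOMAIN_OWNER and CA_REGION first" >&2
    return 1
  fi

  local token
  # 43200 seconds = 12 hours, the default and maximum token lifetime.
  token=$(aws codeartifact get-authorization-token \
    --domain "$CA_DOMAIN" \
    --domain-owner "$CA_DOMAIN_OWNER" \
    --region "$CA_REGION" \
    --duration-seconds 43200 \
    --query authorizationToken \
    --output text) || return 1

  export CODEARTIFACT_AUTH_TOKEN="$token"
  # Print only the length, never the token itself.
  echo "Token refreshed (${#CODEARTIFACT_AUTH_TOKEN} characters)"
}
```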

🙋♀️ Still getting "Unable to locate credentials" after attaching the IAM role?

This happens sometimes! There can be a delay before your EC2 instance recognizes its new IAM role. Let's get this fixed:

- Wait 2-3 minutes for the IAM role to fully propagate to your EC2 instance.
- Double-check in the EC2 console that the IAM role `EC2-instance-nextwork-cicd` is showing as attached to your instance.
- Review your IAM policy to make sure it has the correct permissions and no syntax errors.
- If nothing else works, you can try rebooting your EC2 instance as a last resort, but only do this if you're comfortable with the restart process.
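A quick way to check which identity the AWS CLI is actually using is `aws sts get-caller-identity`. On an instance with the role attached, its `Arn` should look like `arn:aws:sts::<ACCOUNT_ID>:assumed-role/EC2-instance-nextwork-cicd/<INSTANCE_ID>`. If you see a `user/` ARN instead, keys stored earlier with `aws configure` are taking precedence over the instance role. The helper below, with the hypothetical name `role_from_arn`, extracts the role name from that ARN so you can compare it with the role you expect. It only recognises the standard `aws` partition.

```bash
# Sketch: extract the role name from an assumed-role ARN.
# Prints the role name, or fails if the ARN is not an assumed-role ARN
# (for example, an IAM user ARN from keys stored by `aws configure`).
role_from_arn() {
  case "$1" in
    arn:aws:sts::*:assumed-role/*/*)
      # Field 2 of the "/"-separated ARN is the role name.
      printf '%s\n' "$1" | cut -d/ -f2
      ;;
    *)
      return 1
      ;;
  esac
}

# Usage on the instance (requires the AWS CLI and a working identity):
#   role_from_arn "$(aws sts get-caller-identity --query Arn --output text)"
```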

Fantastic! You've successfully attached the IAM policy and role to your EC2 instance. Your instance now has the permissions to access your CodeArtifact repository securely. Let's verify the connection in the next step.

Let's make sure everything is set up correctly by verifying the connection to our CodeArtifact repository from our EC2 instance. We'll configure Maven to use CodeArtifact and then try to compile our web app, which should now download dependencies from CodeArtifact.

In this step, you're going to:

- Finish setting up the connection between Maven and CodeArtifact.

- Compile your Maven project using the `settings.xml` file.

- See your CodeArtifact repository automatically store your project's dependencies!

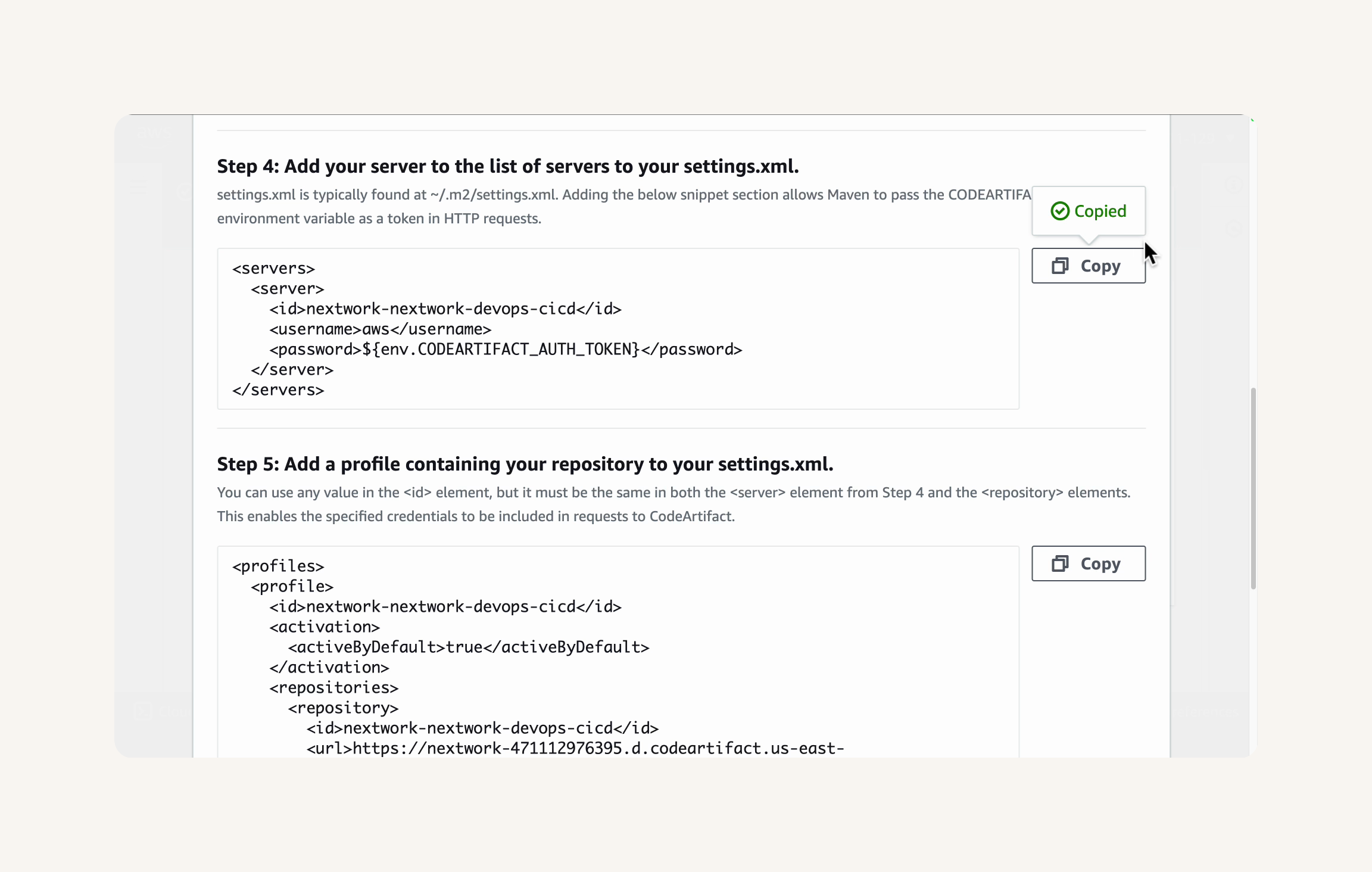

- You might notice that we still have a few steps left in CodeArtifact's connection settings panel!

💡 What is the code in Steps 4, 5, and 6 saying?

The code snippets define repository URLs, authentication details, and other settings so that Maven knows how to connect with CodeArtifact to fetch and store your project's dependencies. Let's break each section down:

The servers section is where you store your access details for the repositories you're connecting to your web app project. In this example, you've added a reference to your authentication token (read from the `CODEARTIFACT_AUTH_TOKEN` environment variable) so Maven can access your CodeArtifact repository.

The profiles section is where you write a rulebook on when Maven should use which repository. We only have one package repository in this project, so our profiles section is more straightforward than in projects that pull from multiple repositories! Our profiles section tells Maven to go to the `nextwork-packages` repository to find the tools and packages needed to build your Java web app.

The mirrors section tells Maven to send requests meant for other repositories to a different location instead. The location we set is... our CodeArtifact repository again. The asterisk in the `<mirrorOf>*</mirrorOf>` line means this applies to every repository request, including requests that would normally go to Maven Central. Maven will redirect all of them to the same CodeArtifact repository, since it's our only package repository. It might seem unnecessary now, but mirrors are useful in more complex setups, and routing everything through one place is a good habit to set up from the start!

- We'll use the code in each step in a minute, but first we'll have to set up a special file called `settings.xml`.

-

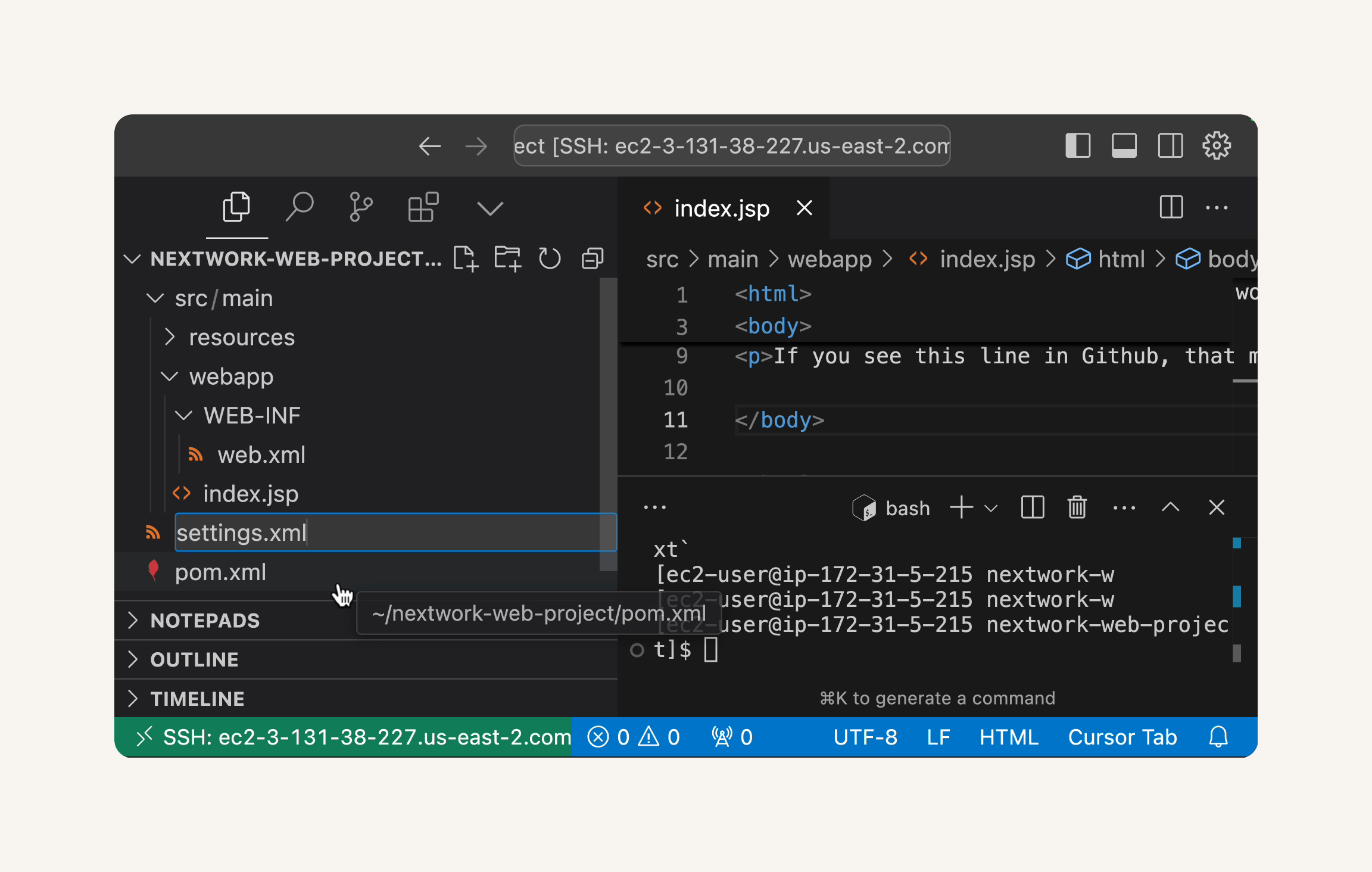

In VS Code, in your left hand file explorer, head to the root directory of your

nextwork-web-project. -

Create a new file at the root of your

nextwork-web-projectdirectory.

- Name the new file

settings.xml.

💡 What is settings.xml?

`settings.xml` is like a settings page for Maven: it stores all the settings we saw in Steps 4-6 of the connection window. Maven normally reads it from `~/.m2/settings.xml`, where it applies to all your projects. In this project we keep it at the project root and point Maven at it explicitly with `-s settings.xml`. In our case, we need a `settings.xml` file to tell Maven where to find the dependencies and how to get access to the right repositories (e.g. the ones in CodeArtifact).

💡 Extra for Experts: What's XML?

XML is a markup language that lets you structure data so that programs (like Maven) can read it. It's similar to how HTML is a markup language that lets you structure content for a web browser to display as a web page.

You might also notice `pom.xml`, which is a file that was automatically created in your repository's root directory when you set up your web app for the first time.

pom.xml tells Maven the ingredients list (i.e. dependencies) for your web app and how to put them together to build the app. Then, once Maven knows what dependencies to look for, settings.xml tells Maven where to find the dependencies and how to get access to the right repositories (e.g. the ones in CodeArtifact).

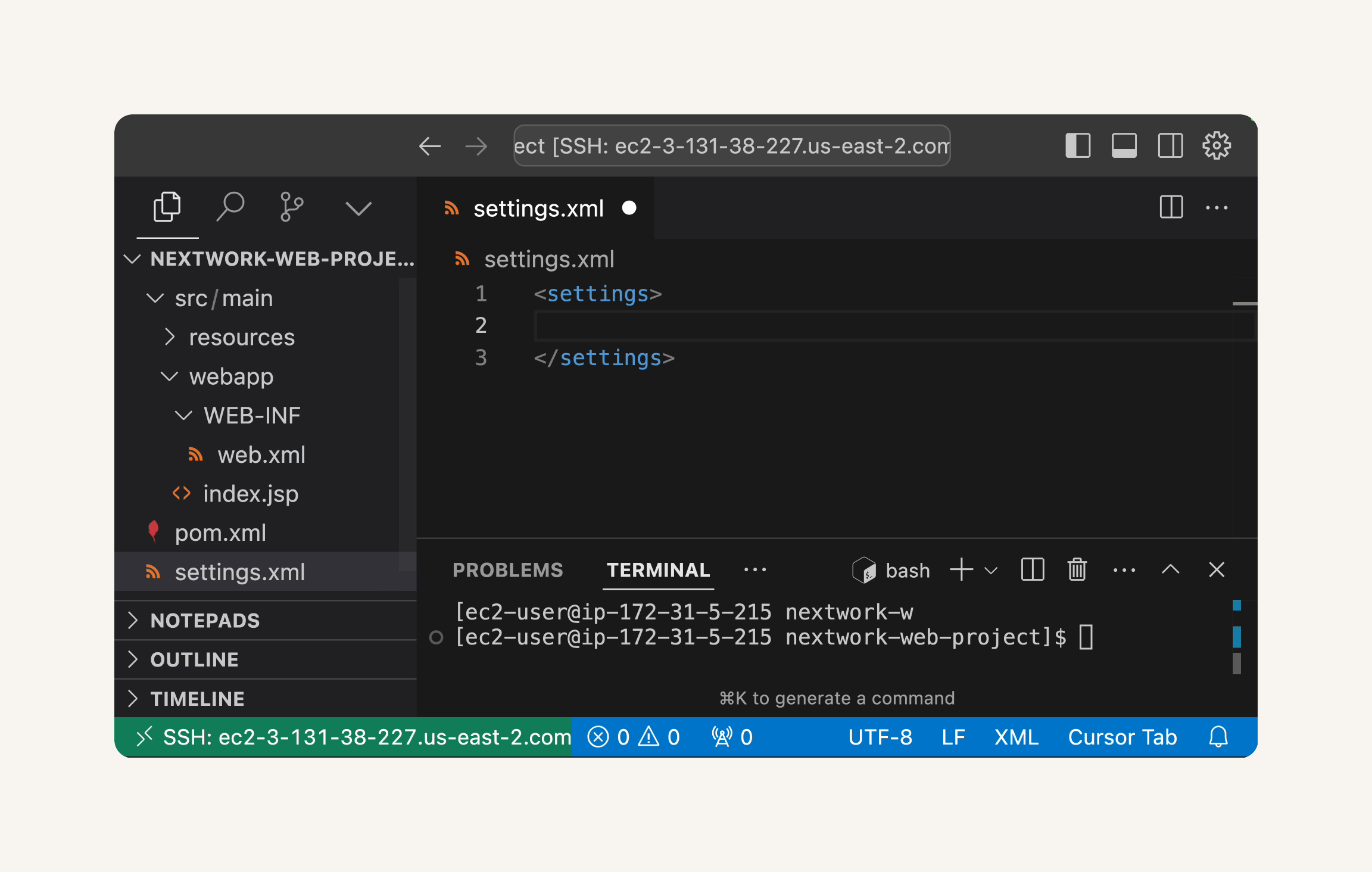

- Open the settings.xml file. If you created a new file, it will be empty.

- In your `settings.xml` file, add an opening `<settings>` tag and a closing `</settings>` tag if they're not already there.

- Go back to the CodeArtifact connection settings panel.

- From the Connection instructions dialog, copy the XML code snippet from Step 4: Add your server to the list of servers in your settings.xml.

- Paste the code in the `settings.xml` file, in between the `<settings>` tags.

- Let's copy the XML code snippet from Step 5: Add a profile containing your repository to your settings.xml.

- Paste the code snippet you copied right underneath the `<servers>` section. Make sure the `<profiles>` tags are also nested inside the `<settings>` tags.

- Finally, paste the XML code snippet from Step 6: (Optional) Set a mirror in your settings.xml... right underneath the `<profiles>` section.

- Save the `settings.xml` file.
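Once the three snippets are pasted, the file should have roughly the shape below. This sketch generates it from the shell. `CA_DOMAIN`, `CA_DOMAIN_OWNER`, `CA_REGION` and `CA_REPO` are hypothetical variables with placeholder defaults, and `111122223333` is an example account ID. The `<id>` and `<url>` values in your own connection instructions take precedence over anything shown here. Note that running it overwrites any existing `settings.xml` in the current directory.

```bash
# Sketch: write a settings.xml with the server, profile and mirror sections.
# Placeholder defaults are hypothetical; replace them with the values
# from your own CodeArtifact connection instructions.
CA_DOMAIN="${CA_DOMAIN:-nextwork}"
CA_DOMAIN_OWNER="${CA_DOMAIN_OWNER:-111122223333}"
CA_REGION="${CA_REGION:-us-east-2}"
CA_REPO="${CA_REPO:-nextwork-packages}"

REPO_ID="${CA_DOMAIN}-${CA_REPO}"
REPO_URL="https://${CA_DOMAIN}-${CA_DOMAIN_OWNER}.d.codeartifact.${CA_REGION}.amazonaws.com/maven/${CA_REPO}/"

# The heredoc is unquoted so REPO_ID and REPO_URL expand; the env.CODEARTIFACT_AUTH_TOKEN
# reference is escaped so Maven, not the shell, resolves the token at build time.
cat > settings.xml <<EOF
<settings>
  <servers>
    <server>
      <id>${REPO_ID}</id>
      <username>aws</username>
      <password>\${env.CODEARTIFACT_AUTH_TOKEN}</password>
    </server>
  </servers>
  <profiles>
    <profile>
      <id>${REPO_ID}</id>
      <activation>
        <activeByDefault>true</activeByDefault>
      </activation>
      <repositories>
        <repository>
          <id>${REPO_ID}</id>
          <url>${REPO_URL}</url>
        </repository>
      </repositories>
    </profile>
  </profiles>
  <mirrors>
    <mirror>
      <id>${REPO_ID}</id>
      <name>${REPO_ID}</name>
      <url>${REPO_URL}</url>
      <mirrorOf>*</mirrorOf>
    </mirror>
  </mirrors>
</settings>
EOF
```

The same id is reused in the server, repository and mirror entries. Maven attaches a `<server>` entry's credentials to requests for the repository or mirror that has the matching id.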

💡 Recap: What is settings.xml?

The `settings.xml` file is Maven's control center: it's where you tell Maven how to behave. When we add CodeArtifact information to this file, we're essentially telling Maven: "Hey Maven, whenever you need to download a dependency, look in this CodeArtifact repository, and here's how to authenticate yourself."

By configuring settings.xml properly, we're creating a seamless connection between Maven and CodeArtifact. Your builds will automatically authenticate and pull dependencies from the right place without you having to think about it again. It's one of those "set it up once, benefit forever" kinds of configurations that make a developer's life much easier.

- In your VS Code terminal, run `pwd` to check that you're in the root directory of your `nextwork-web-project`.

- If you're not at the root directory, run `cd nextwork-web-project` to get there!

- Next, we'll compile your project.

💡 What does compiling mean?

Compiling is like translating your project's code into a language that computers can understand and run. When you compile your project, you're making sure everything is correctly set up and ready to turn into a working app.

- Run the Maven compile command, which uses the `settings.xml` file we just configured:

  `mvn -s settings.xml compile`

- Press Enter to execute the command.
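The `pwd` check and the compile command can be combined into a small pre-flight function that stops early with a clear message instead of failing inside Maven. This is a sketch with a hypothetical function name. It only checks things this guide has set up: a `pom.xml` and a `settings.xml` in the current directory, and a non-empty `CODEARTIFACT_AUTH_TOKEN`. If all three are present, it runs the same `mvn -s settings.xml compile` command.

```bash
# Sketch: pre-flight checks before compiling against CodeArtifact.
compile_with_codeartifact() {
  # pom.xml marks the project root; settings.xml holds the CodeArtifact config.
  if [ ! -f pom.xml ]; then
    echo "No pom.xml in $(pwd); cd into nextwork-web-project first" >&2
    return 1
  fi
  if [ ! -f settings.xml ]; then
    echo "No settings.xml in $(pwd)" >&2
    return 1
  fi
  # settings.xml reads the token from this variable via ${env.CODEARTIFACT_AUTH_TOKEN}.
  if [ -z "${CODEARTIFACT_AUTH_TOKEN:-}" ]; then
    echo "CODEARTIFACT_AUTH_TOKEN is empty; re-run the export token command" >&2
    return 1
  fi
  mvn -s settings.xml compile
}
```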

🙋♀️ Getting a "401 Unauthorized" error when running Maven?

This is a common authentication issue with CodeArtifact. Let's troubleshoot it together! Try these steps:

- Double-check that your connection window is using the macOS and Linux instructions. You should not be using the Windows instructions, even if you're doing this project on a Windows computer.
- Verify your token exists by running `echo $CODEARTIFACT_AUTH_TOKEN`; you should see a long string of characters. If it's empty, or more than 12 hours have passed since you exported it, re-run the export token command in the same terminal session.
- Double-check your `settings.xml` file against the CodeArtifact connection instructions: make sure server IDs, repository URLs, and profile settings are all correct.
- Check that your IAM role and policy are properly set up and attached to your EC2 instance.
- If all else fails, try clearing your Maven cache with `rm -rf ~/.m2/repository` and run `mvn -s settings.xml compile` again.
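Several of these checks can be scripted. The sketch below, with a hypothetical function name, reports whether the token is set without printing it. That is safer than `echo`-ing a credential into your scrollback or a screenshot. It also confirms that `settings.xml` reads the token from the environment. Its id check is only a heuristic that warns when the file contains more than one distinct `<id>` value. It does not replace comparing the file against your connection instructions.

```bash
# Sketch: quick checks for a CodeArtifact 401 from Maven.
check_codeartifact_setup() {
  local status=0

  # Report the token length instead of printing the secret itself.
  if [ -n "${CODEARTIFACT_AUTH_TOKEN:-}" ]; then
    echo "Token is set (${#CODEARTIFACT_AUTH_TOKEN} characters)"
  else
    echo "Token is NOT set: re-run the export token command" >&2
    status=1
  fi

  if [ ! -f settings.xml ]; then
    echo "settings.xml not found in $(pwd)" >&2
    return 1
  fi

  # The server password must reference the environment variable.
  if grep -qF '${env.CODEARTIFACT_AUTH_TOKEN}' settings.xml; then
    echo "settings.xml reads the token from the environment"
  else
    echo "settings.xml does not reference \${env.CODEARTIFACT_AUTH_TOKEN}" >&2
    status=1
  fi

  # Heuristic: the console snippets reuse one id, and the <server> id must
  # match the repository/mirror id for Maven to send the credentials.
  local ids
  ids=$(grep -o '<id>[^<]*</id>' settings.xml | sort -u | wc -l)
  if [ "$ids" -gt 1 ]; then
    echo "Found $ids different <id> values; check they match your connection instructions" >&2
  fi

  return "$status"
}
```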

- As Maven compiles your project, observe the terminal output.

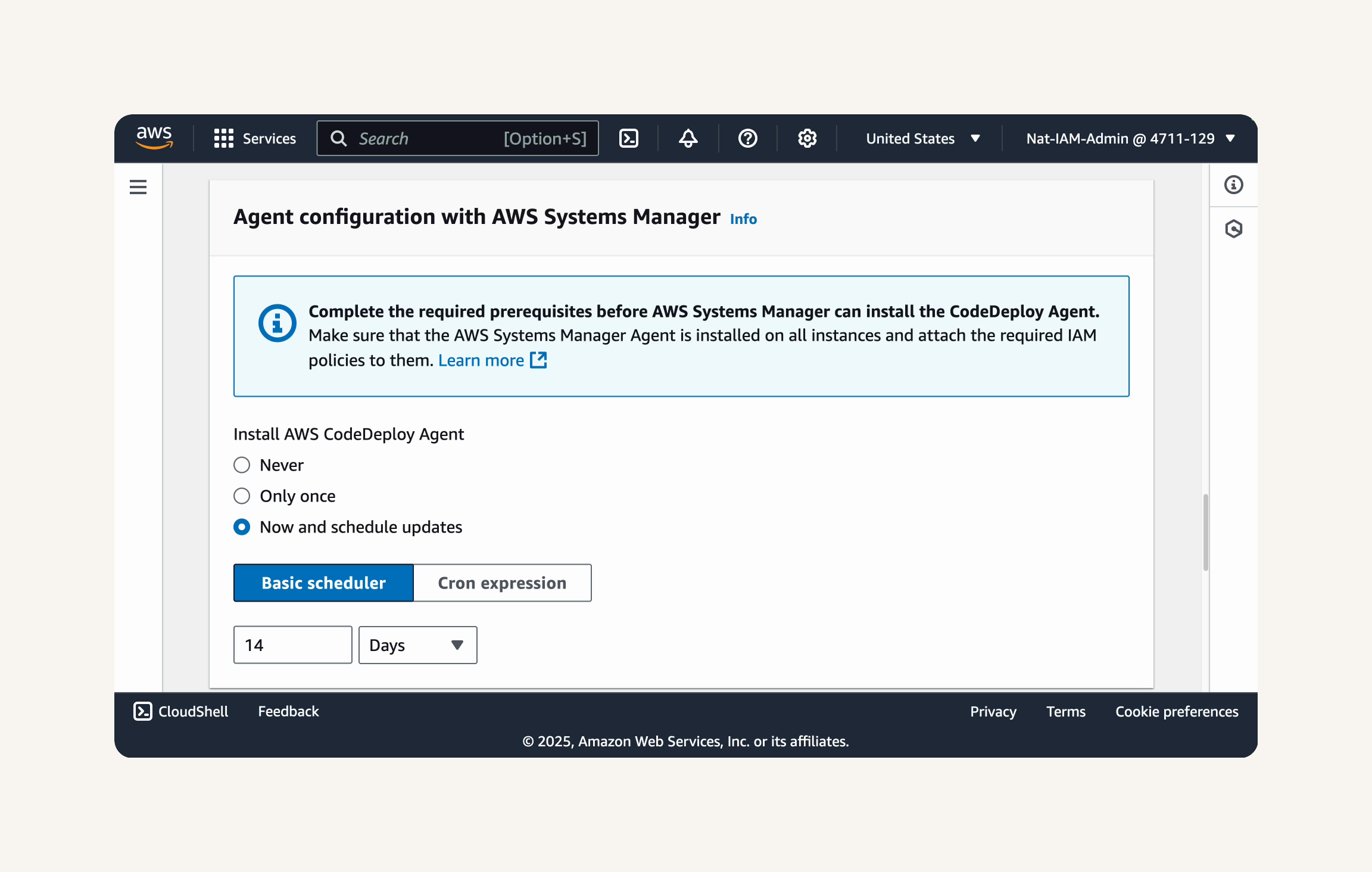

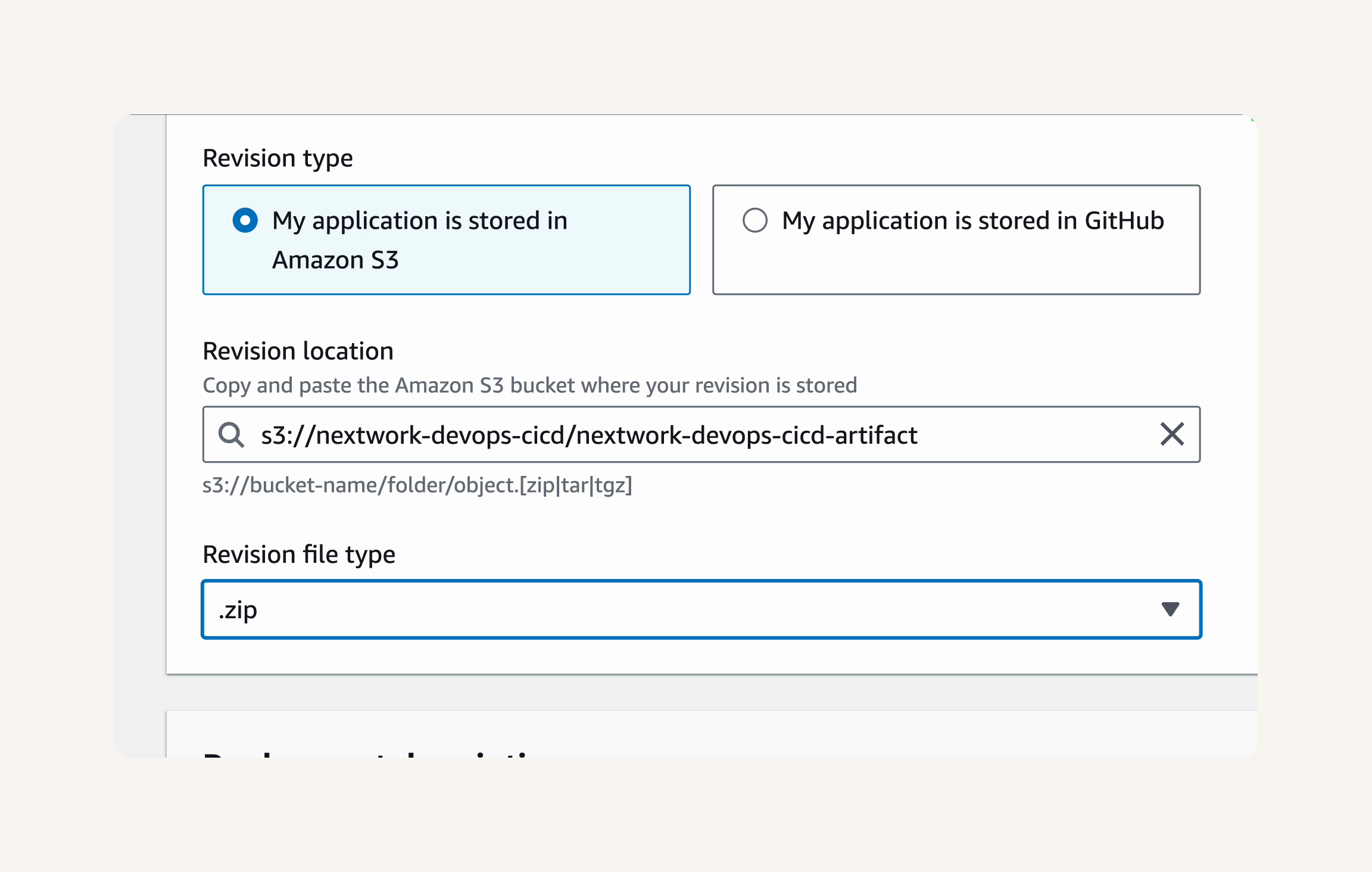

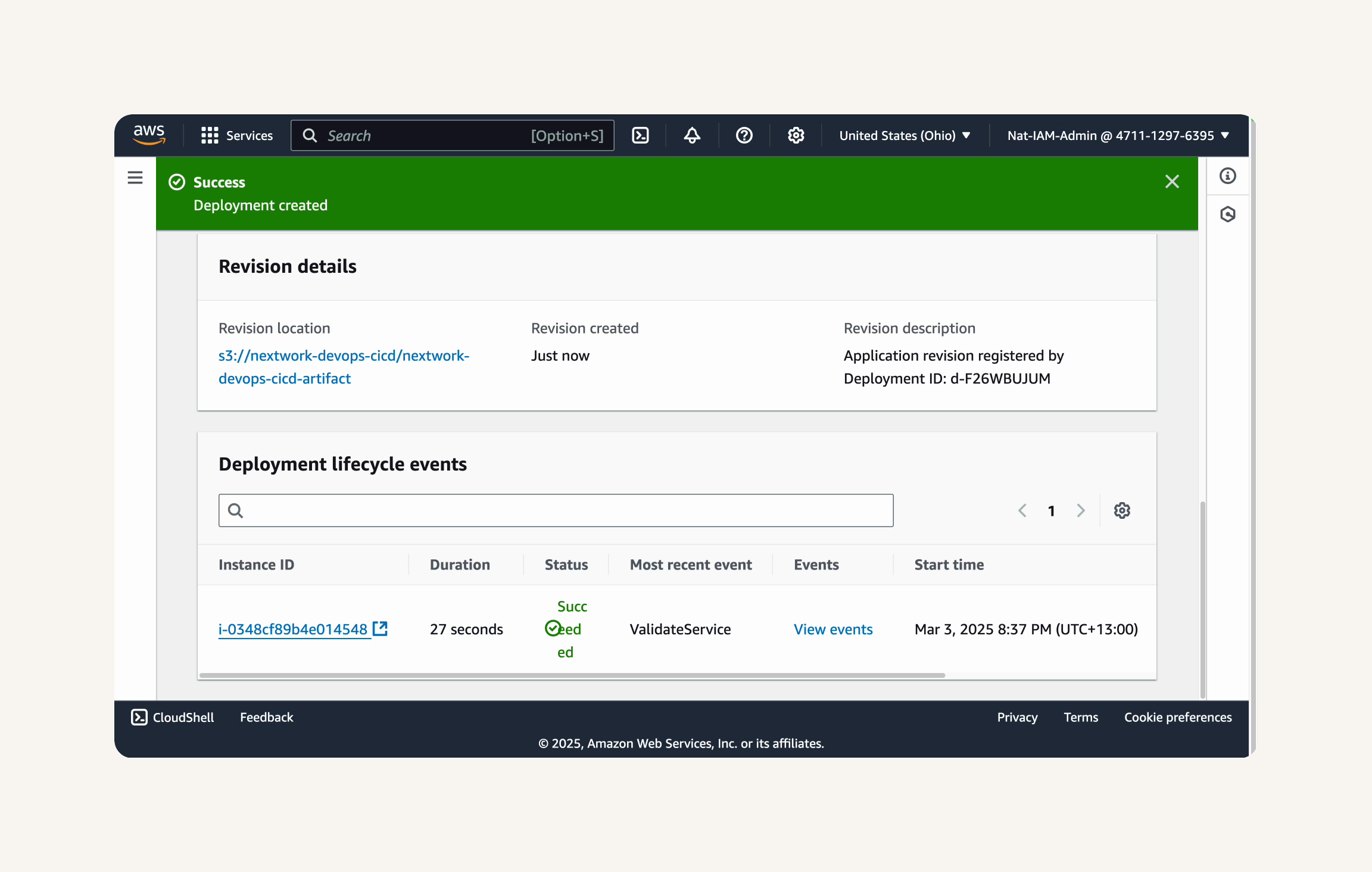

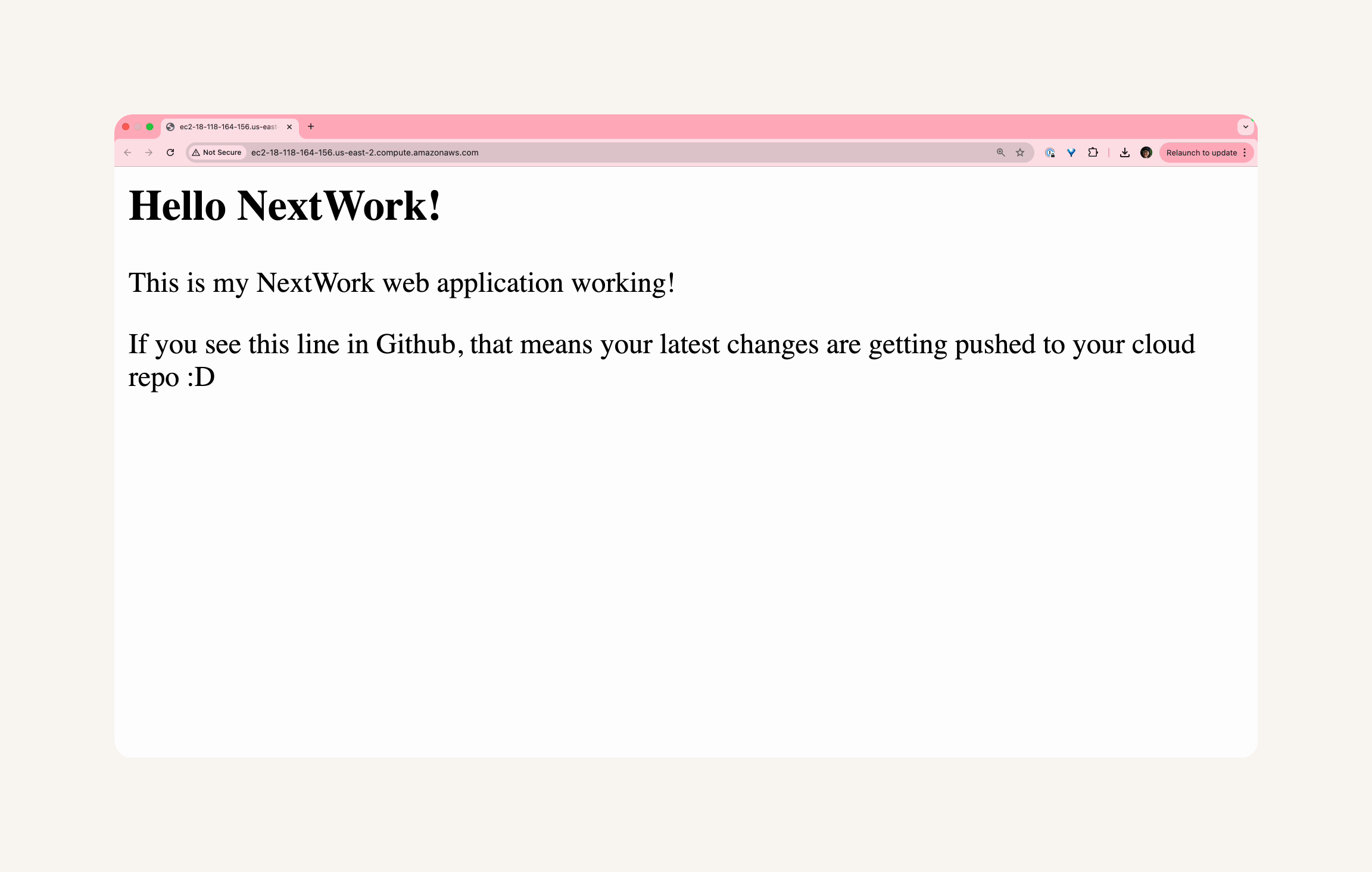

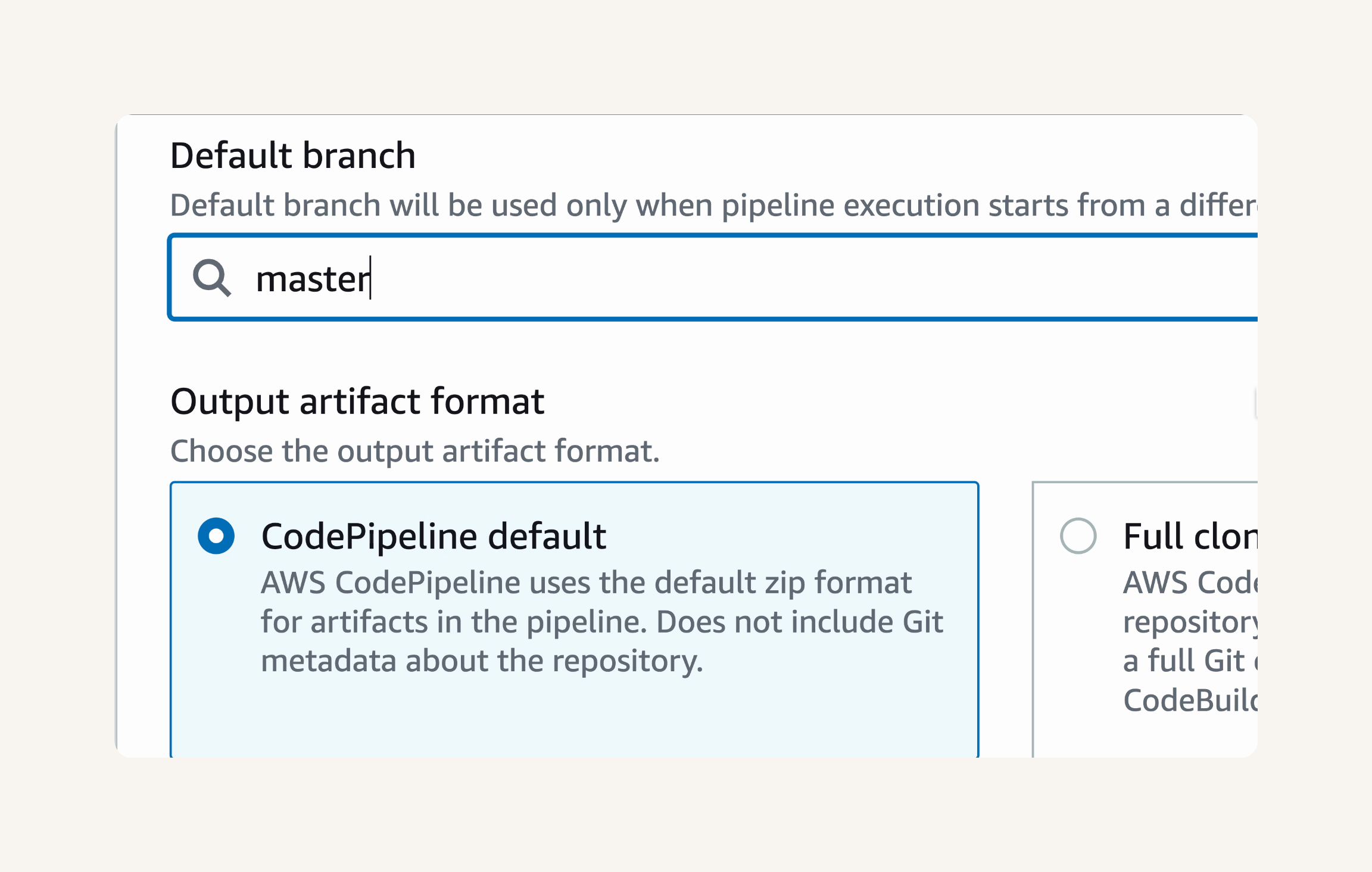

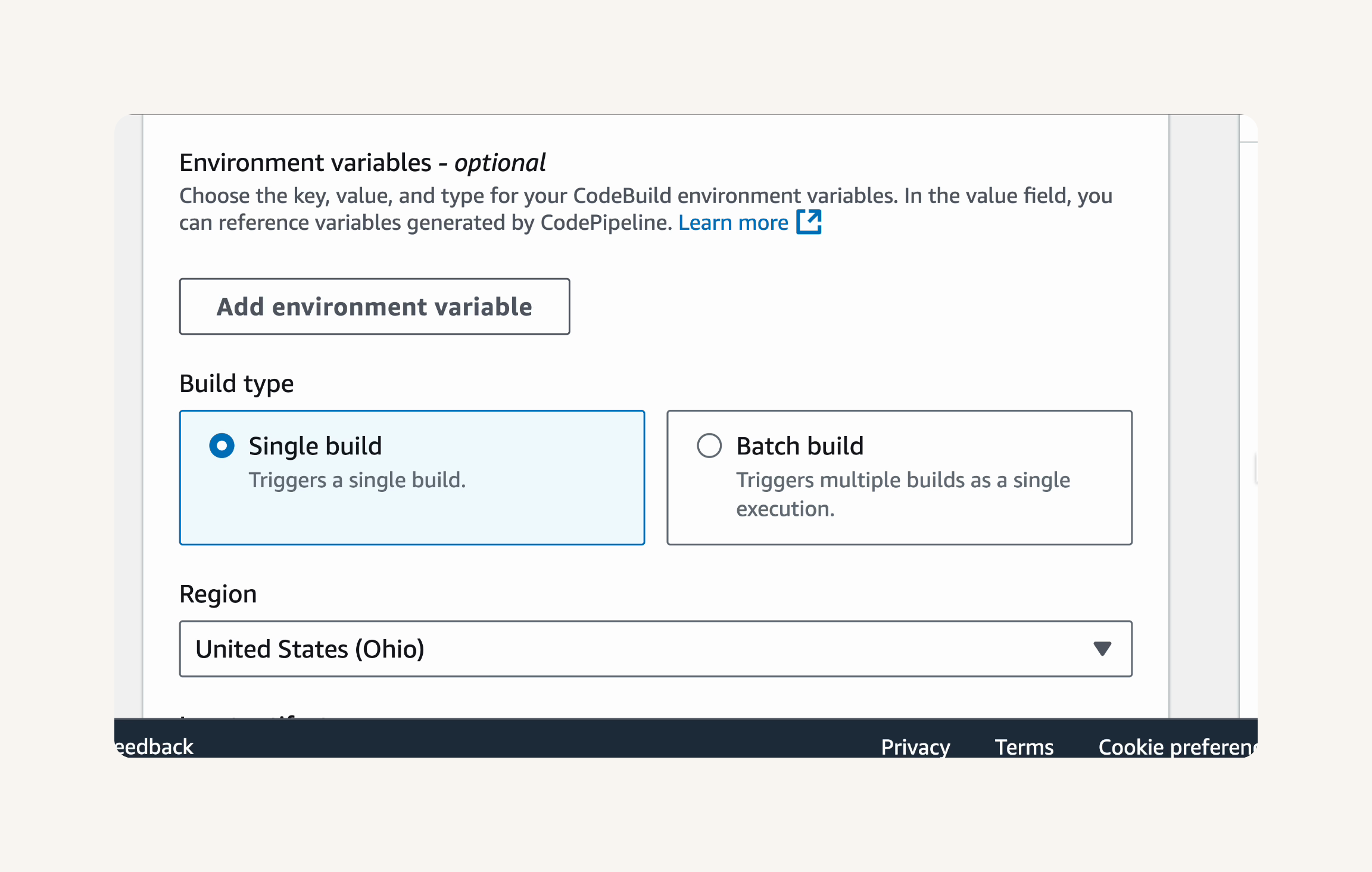

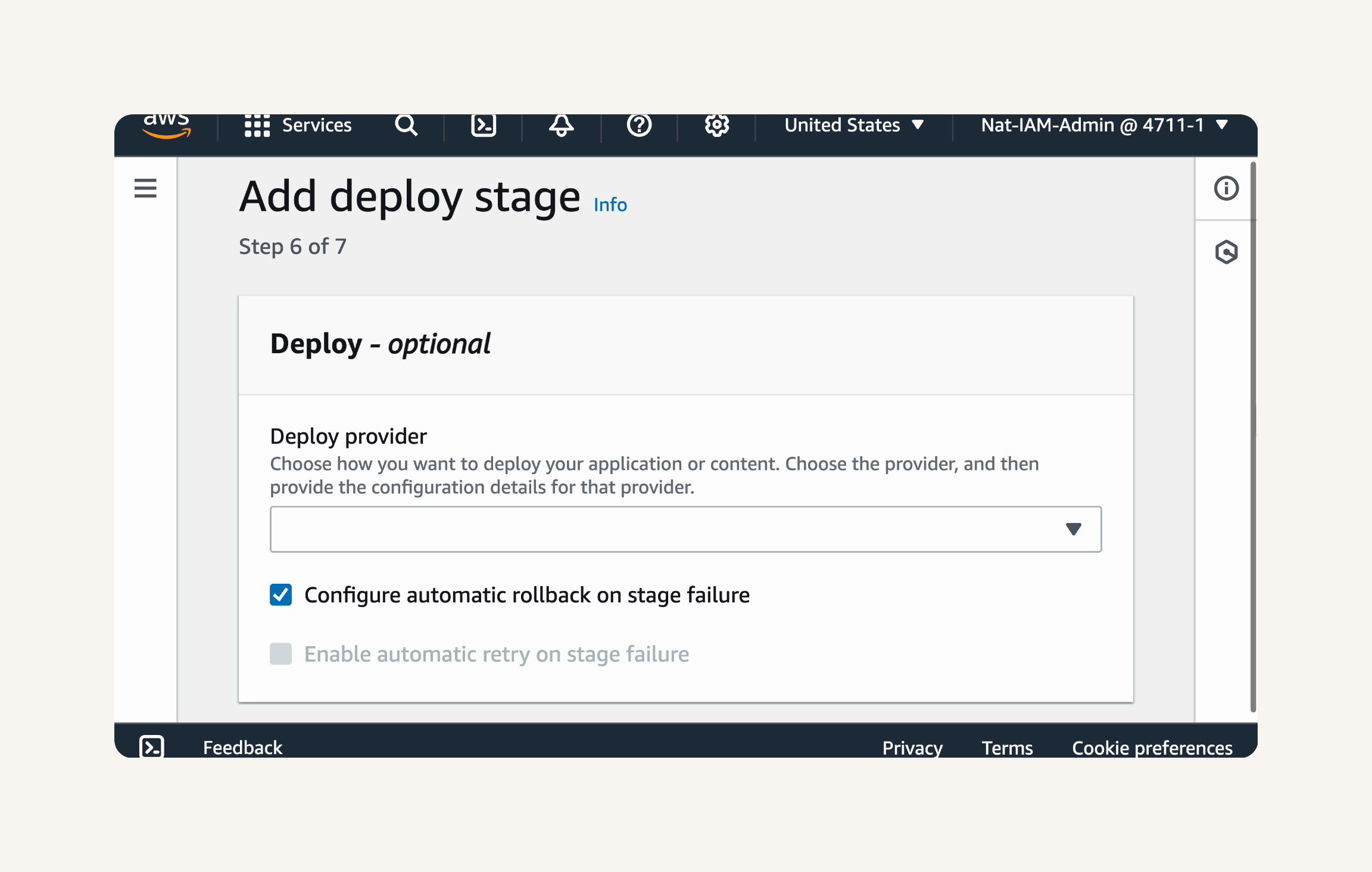

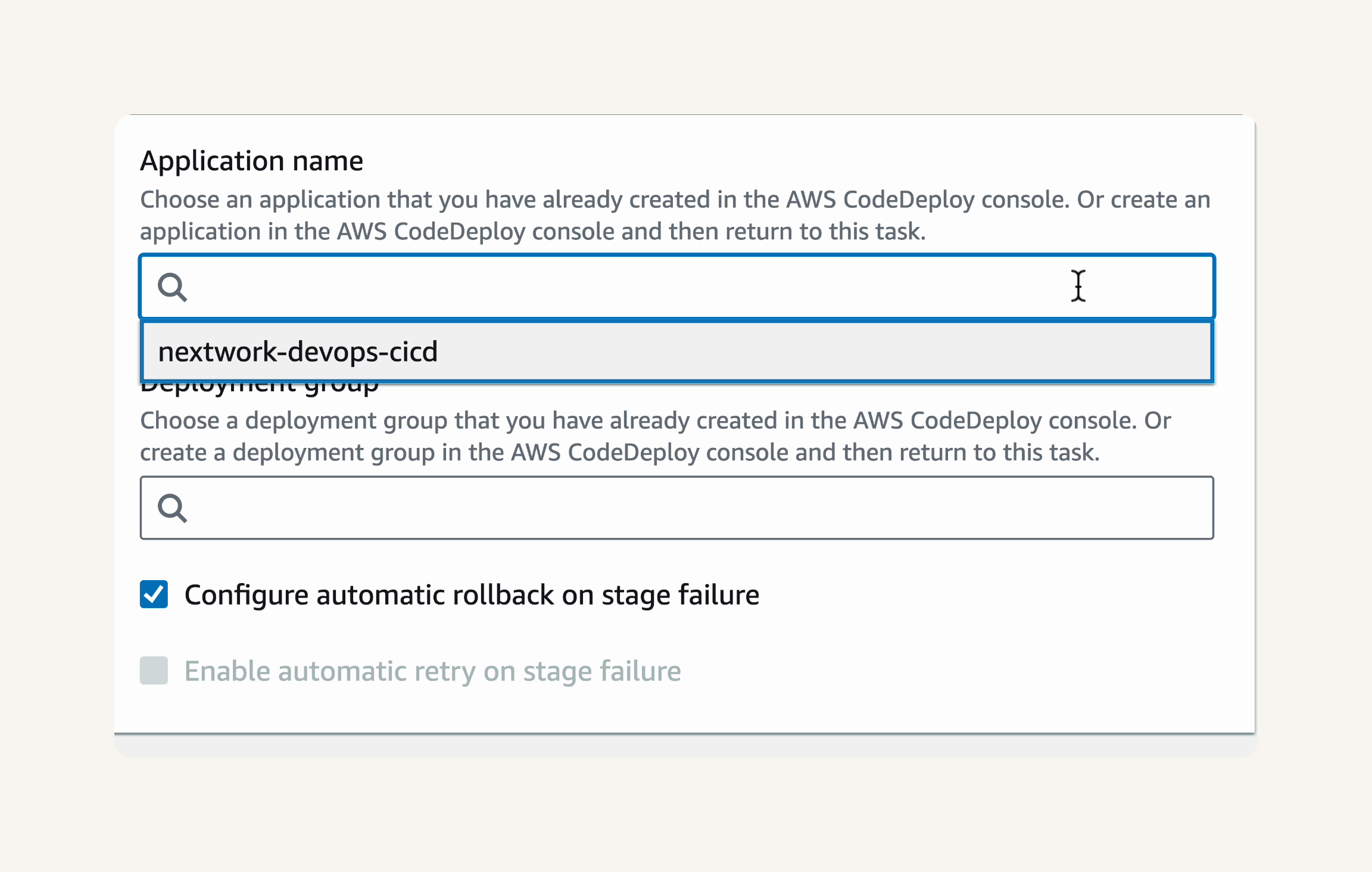

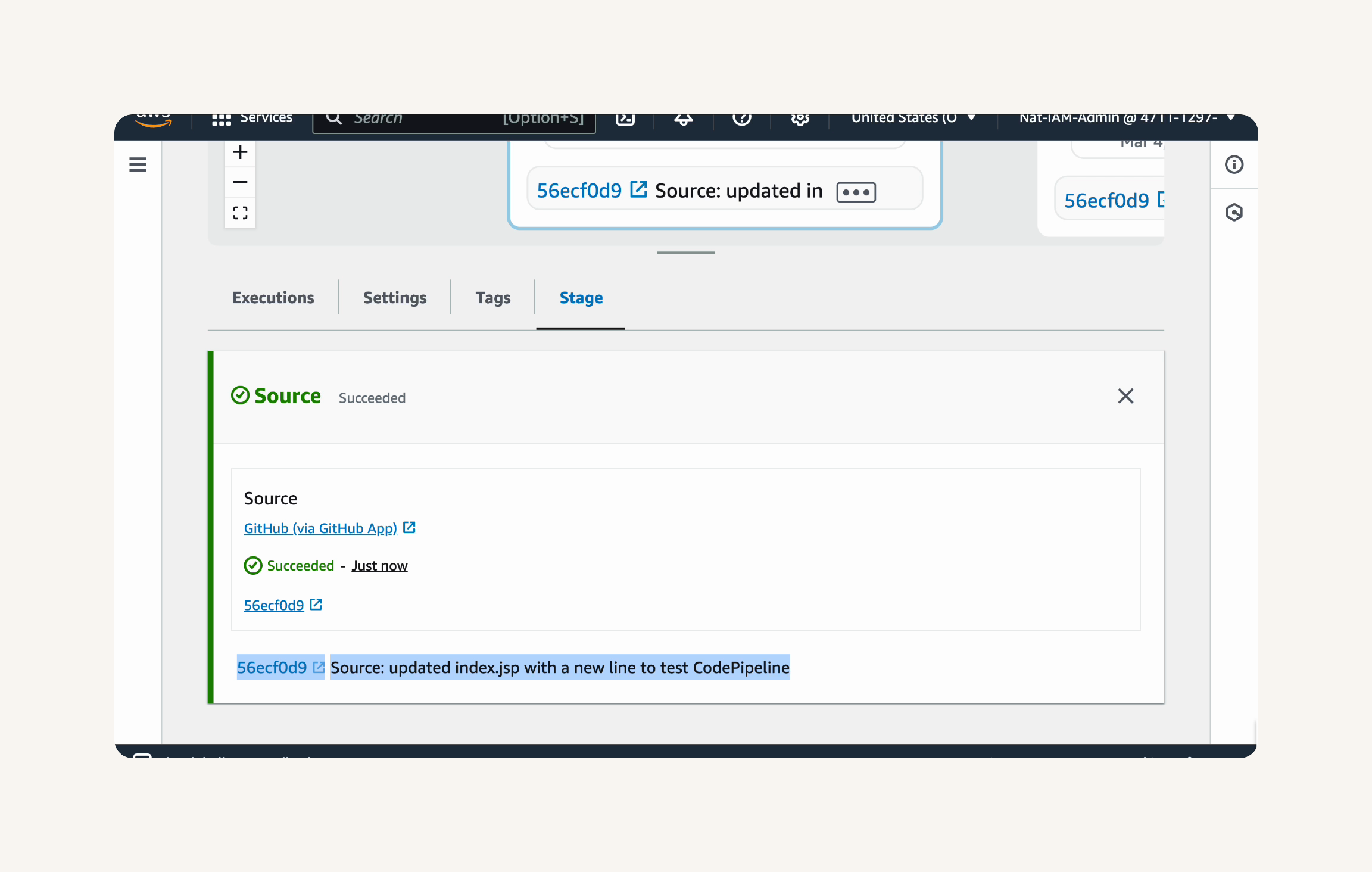

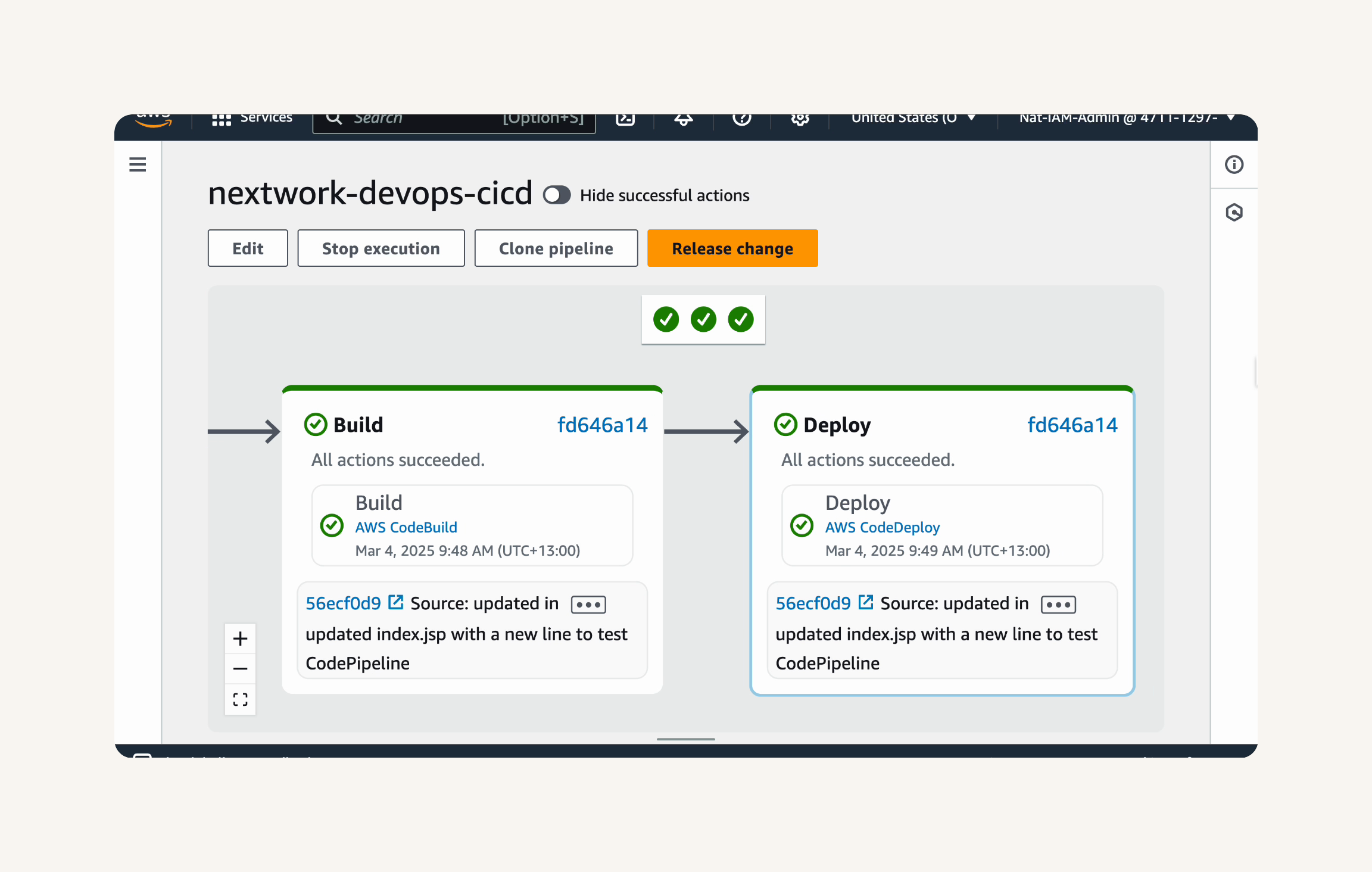

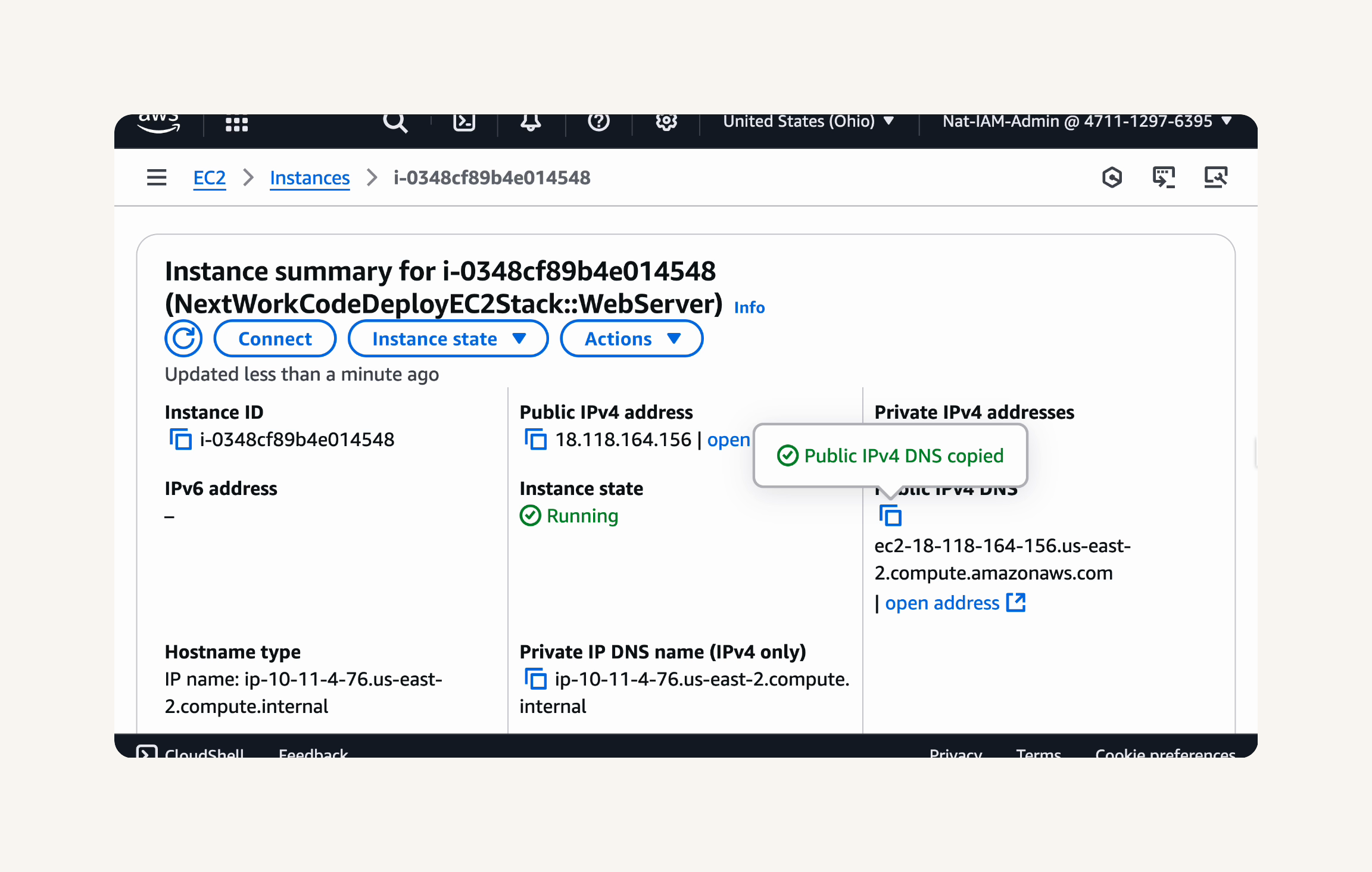

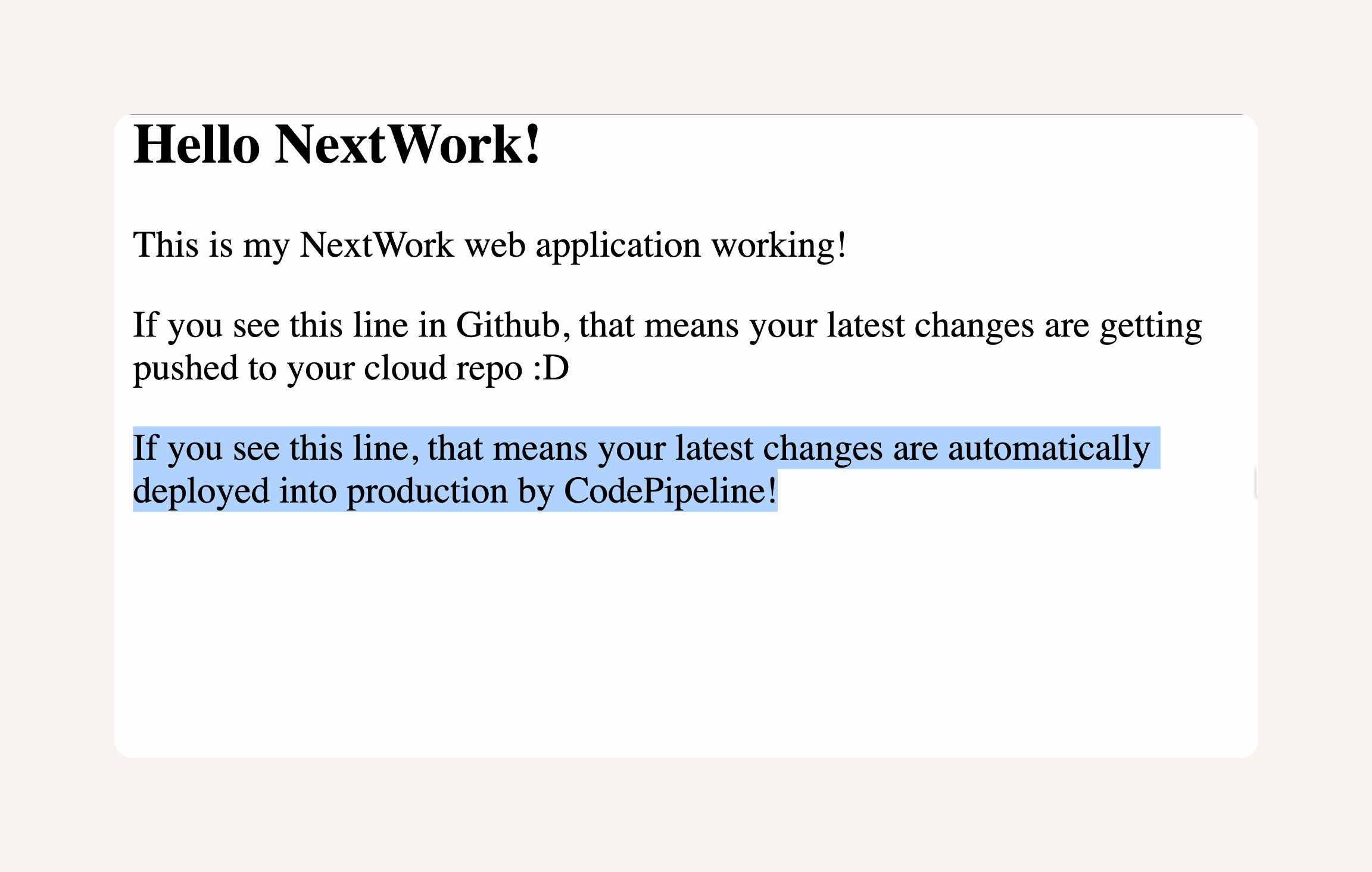

You should see messages like