Install Elasticsearch, Logstash and Kibana (ELK stack) - dryshliak/hadoop GitHub Wiki

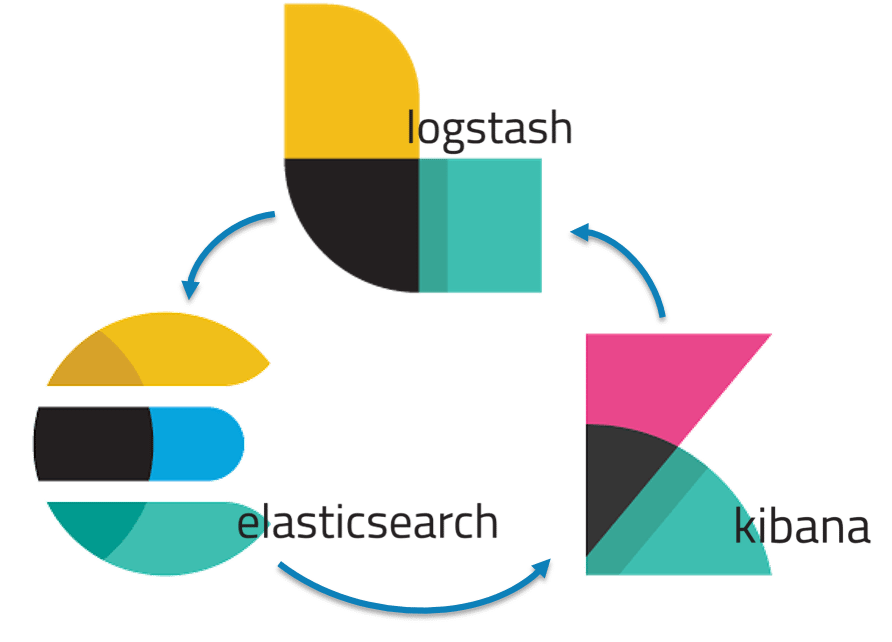

The ELK stack is a powerful collection of three open source tools: Elasticsearch, Logstash, and Kibana.

Elasticsearch is a NoSQL database based on the Lucene search engine and exposed through RESTful APIs. It is a highly flexible, distributed search and analytics engine. Through horizontal scalability it offers simple deployment, high reliability, and easy management. It supports advanced queries for detailed analysis and stores all data centrally so that documents can be searched quickly.

Logstash is the data collection pipeline tool. It is the first component of the ELK stack: it collects data inputs and feeds them to Elasticsearch. It can collect various types of data from different sources at once and make them available immediately for further use.

Kibana is a data visualization tool. It visualizes Elasticsearch documents and gives developers immediate insight into them. Kibana dashboards provide interactive diagrams, geospatial data, timelines, and graphs for visualizing complex Elasticsearch queries. With Kibana you can create and save custom graphs to suit your specific needs.

- Virtual machine for the ELK stack with 4 GB of RAM and 20 GB of disk space

- Ubuntu 16.04

- Java 8

- SSH access

- Install VirtualBox: https://www.virtualbox.org/wiki/Downloads

- Prepare three instances. The appropriate Ubuntu version is available at http://releases.ubuntu.com/16.04/ubuntu-16.04.6-server-amd64.iso

- While preparing each instance, add a second adapter, "Host-only Adapter", in the Network settings. The guest may fail to recognize the second adapter; to resolve this, please read this article

- Choose "OpenSSH server" to have SSH access to the instance

- On all instances, set up the hosts file with FQDN names so that local DNS names resolve on each node (as explained here), and remove the line that maps 127.0.1.1 to the node name
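The hosts file change described above could be sketched as follows. This is only an illustration: `rewrite_hosts` is a hypothetical helper, and the host names and the second and third IP addresses are assumptions chosen to match a VirtualBox host-only network, not values from this guide.

```shell
#!/usr/bin/env bash
# Sketch: print a hosts file without the 127.0.1.1 alias and with FQDN entries.
# The host names and IP addresses below are hypothetical; adjust them to your nodes.

rewrite_hosts() {
  local src="$1"
  # Drop the line that starts with 127.0.1.1, keep everything else
  grep -v '^127\.0\.1\.1[[:space:]]' "$src"
  # Append one "IP FQDN short-name" line per node
  cat <<'EOF'
192.168.56.101 elk1.example.local elk1
192.168.56.102 elk2.example.local elk2
192.168.56.103 elk3.example.local elk3
EOF
}

# Usage (review the result before replacing the real file):
#   rewrite_hosts /etc/hosts > /tmp/hosts.new
#   sudo cp /tmp/hosts.new /etc/hosts
```

Writing to a temporary file first leaves the live /etc/hosts untouched until the result has been checked.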

- Disable the firewall

sudo service ufw stop && sudo ufw disable

- Before installing any applications or software, make sure the package lists from all repositories and PPAs are up to date; if they are not, update them with this command:

sudo apt-get update && sudo apt-get dist-upgrade -y

- Install OpenJDK Java. (You may want to install Oracle Java; however, there is no strict requirement in the official documentation.)

sudo apt-get install openjdk-8-jdk -y

Let's install the latest version of Elasticsearch (for now it is 6.6.0).

- Download and install the public signing key and save the repository definition to /etc/apt/sources.list.d/elastic-6.x.list:

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

echo "deb https://artifacts.elastic.co/packages/6.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-6.x.list

- Install Elasticsearch.

sudo apt-get update && sudo apt-get install elasticsearch

- Start the Elasticsearch service.

sudo systemctl enable elasticsearch

sudo systemctl start elasticsearch

- Configuring the Elasticsearch service.

Now you have a cluster that consists of one node (master and data, by default). The Elasticsearch service listens on port 9200 (HTTP) for client queries and on port 9300 (TCP) for internal node communication. You can check its status by running the following command:

curl -X GET "localhost:9200/_cluster/health?pretty"

You should see similar output:

If you want to dive deep into Elasticsearch, you need to spin up a 3-node cluster and configure it.
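For scripted checks, the health status can be pulled out of the JSON returned by the health request. The sketch below assumes the response contains a `"status"` field holding `green`, `yellow`, or `red`; `health_status` is a hypothetical helper name, and the extraction uses `sed` only, so no extra tools are needed.

```shell
#!/usr/bin/env bash
# Sketch: extract the "status" value from cluster health JSON read on stdin.
# health_status is a hypothetical helper, not part of Elasticsearch.

health_status() {
  # Print only the captured value of the "status" field
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Usage against the local node:
#   curl -s "localhost:9200/_cluster/health?pretty" | health_status
```

On a single-node cluster the status is often `yellow` once indices with replicas exist, because replica shards cannot be allocated on the same node as their primaries.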

- Let's install the latest version of Kibana (for now it is 6.6.0). Since we have already added the repository for ELK, we can proceed with the installation:

sudo apt-get install kibana

sudo systemctl enable kibana

sudo systemctl start kibana

Now Kibana is running on localhost on port 5601.

- To be able to browse it from your host machine or from elsewhere, add the following parameter to /etc/kibana/kibana.yml and restart the service:

sudo bash -c "echo \"server.host: 0.0.0.0\" >> /etc/kibana/kibana.yml"

sudo systemctl restart kibana

After that, your Kibana service becomes accessible from outside. You can check it by entering the Kibana URL in a browser; the URL consists of the IP address of the virtual machine and port 5601 (e.g. http://192.168.56.101:5601).
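Appending with `>>` adds a new `server.host` line on every run, so repeating the step leaves duplicates in kibana.yml. A minimal sketch of a repeatable variant follows; `set_kibana_host` is a hypothetical helper, and it assumes the file ends with a newline and that an active setting starts at the beginning of a line.

```shell
#!/usr/bin/env bash
# Sketch: set server.host in a Kibana config file without adding duplicate lines.
# set_kibana_host is a hypothetical helper, not part of Kibana.

set_kibana_host() {
  local file="$1" host="$2" tmp
  if grep -q '^server\.host:' "$file"; then
    # An active setting exists: rewrite it, keeping the original file in place
    tmp=$(mktemp)
    sed "s|^server\.host:.*|server.host: \"${host}\"|" "$file" > "$tmp"
    cat "$tmp" > "$file"
    rm -f "$tmp"
  else
    # No active setting (commented-out defaults do not count): append one
    printf 'server.host: "%s"\n' "$host" >> "$file"
  fi
}

# Usage (run as root, then restart the service):
#   set_kibana_host /etc/kibana/kibana.yml 0.0.0.0
#   systemctl restart kibana
```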

The Kibana home page should look like the one below:

- Connecting Kibana to Elasticsearch.

By default, Kibana tries to connect to Elasticsearch on localhost:9200, so in our case no changes are needed. If you have an Elasticsearch cluster running on multiple nodes, set up a coordinating node, which is neither a master nor a data node, and point Kibana to that node.

- Let's install the latest version of Logstash (for now it is 6.8.1). Since we have already added the repository for ELK and installed Java, we can proceed with the installation:

sudo apt-get install logstash

sudo systemctl enable logstash

sudo systemctl start logstash

- Configuring Logstash to collect system logs

Logstash can be configured to collect logs directly, but according to best practices it is not recommended to install Logstash on the server that produces the logs: Logstash is a heavy Java application that consumes a lot of memory and could affect the server's performance. In that case, it is better to use Filebeat as a log shipper from the log-producing server to Logstash. In this installation we will use only Logstash to simplify our vanilla architecture. We need to add a Logstash configuration file for parsing log files on the local machine (let's use the auth logs).

sudo chmod 644 /var/log/auth.log

sudo bash -c 'cat <<EOF> /etc/logstash/conf.d/logstash.conf

input {

file {

path => "/var/log/auth.log"

start_position => "beginning"

type => "auth"

}

}

filter {

if [type] == "auth" {

grok {

match => { "message" => "%{SYSLOGBASE} %{GREEDYDATA:message}" }

overwrite => [ "message" ]

}

}

}

output {

elasticsearch {

hosts => ["localhost:9200"]

index => "logstash-index"

}

}

EOF

'

sudo systemctl restart logstash

After this, you should be able to configure the default index pattern using logstash-index in the Kibana web UI and see the collected log entries there.
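Before creating the index pattern, it can help to confirm that Logstash has actually created the index; this may take a little while after the restart, since the index appears only once the first event is written. The sketch below assumes the index names are supplied one per line; `index_exists` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: check whether an index name appears in a list of index names.
# index_exists is a hypothetical helper; it reads one index name per line on stdin.

index_exists() {
  local name="$1"
  # Strip padding whitespace, then look for an exact, literal match
  tr -d ' \t\r' | grep -qxF -- "$name"
}

# Usage against the local node (h=index limits the output to the name column):
#   curl -s 'localhost:9200/_cat/indices?h=index' | index_exists logstash-index \
#     && echo "index found" || echo "index not found yet"
```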