Kubernetes and Docker - dennisholee/notes GitHub Wiki

Src: https://dzone.com/articles/docker-containers-and-kubernetes-an-architectural

Src: https://vocon-it.com/2018/12/03/how-to-create-a-kubernetes-cluster-with-kubeadm-kubernetes-series-3/

- Install Docker https://docs.docker.com/install/linux/docker-ce/ubuntu/

- Install Kubernetes Admin (kubeadm) https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

- Setup cluster https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

Copy the script below to docker-install.sh and run it with sudo

#!/usr/bin/bash

# Remove previously installed Docker packages

yum remove docker docker-common docker-selinux docker-engine-selinux docker-engine docker-ce

# Install prerequisite packages

yum install -y yum-utils device-mapper-persistent-data lvm2 http://mirror.centos.org/centos/7/extras/x86_64/Packages/container-selinux-2.95-2.el7_6.noarch.rpm

# Add docker community edition repo

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# Install docker community edition package

yum install -y docker-ce

# Enable docker service

systemctl enable docker.service

# To execute:

# chmod u+x docker-install.sh

# sudo ./docker-install.sh

Install kubernetes

Src: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

# Set SELinux in permissive mode (effectively disabling it)

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable --now kubelet

Src: https://www.cyberciti.biz/faq/install-use-setup-docker-on-rhel7-centos7-linux/

Note: Install container-selinux to resolve this error:

Error: Package: containerd.io-1.2.6-3.3.el7.x86_64 (docker-ce-stable)

Requires: container-selinux >= 2:2.74

Error: Package: 3:docker-ce-18.09.7-3.el7.x86_64 (docker-ce-stable)

Requires: container-selinux >= 2.9

- Containers use two features of the Linux kernel:

- namespaces

- cgroups
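Both features can be seen on a plain Linux host, even without Docker. A minimal sketch, assuming Linux with /proc mounted; the helper names `list_namespaces` and `show_cgroups` are made up for illustration:

```shell
#!/usr/bin/env bash
# Assumes Linux with /proc mounted. Helper names are hypothetical.

# Namespaces: each entry under /proc/<pid>/ns links to one namespace
# (net, pid, mnt, uts, ipc, ...) that the process belongs to.
list_namespaces() {
  local pid="${1:-self}" ns
  for ns in /proc/"$pid"/ns/*; do
    printf '%s -> %s\n' "${ns##*/}" "$(readlink "$ns")"
  done
}

# cgroups: /proc/<pid>/cgroup shows the control groups that account for
# and limit the process's resources (CPU, memory, ...).
show_cgroups() {
  cat "/proc/${1:-self}/cgroup"
}

list_namespaces
show_cgroups
```

Running the same helpers on the host and inside a container should show different namespace IDs for the namespaces the container does not share with the host.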

Src: http://www.abusedbits.com/2016/09/docker-host-networking-modes.html

List docker networks: docker network ls

Inspect docker network: docker network inspect {NETWORK}

Build Docker Image

docker build -t {image_name} .

Build Docker Image and publish to Google Container Registry

gcloud auth configure-docker

docker build -t asia.gcr.io/{project}/{image_name} .

docker push asia.gcr.io/{project}/{image_name}

List Docker images

docker images

Publish Docker image

docker push {REPOSITORY}:{TAG}

Docker containers

# Get the container name

docker container ls

# Builds a new image from the source code.

docker build -t {REPOSITORY}:{TAG} .

# Creates a writeable container from the image and prepares it for running.

docker create {IMAGE}

# Creates the container (same as docker create) and runs it.

docker run -d -p {HOST_PORT}:{CONTAINER_PORT} -v {HOST_PATH}:{CONTAINER_PATH} --net {NETWORK} {IMAGE}

# Start container

docker container start <container name>

# Open bash

docker exec -it <container name> /bin/bash

- The applications in a Pod all use the same network namespace (same IP and port space), and can thus “find” each other and communicate using localhost.

- Applications in a Pod must coordinate their usage of ports.

- Each Pod has an IP address in a flat shared networking space that has full communication with other physical computers and Pods across the network.
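The shared network namespace can be tried out with a two-container Pod. A minimal sketch: the file name, Pod name, and container names are all illustrative, and the `kubectl` steps are left as comments because they need a running cluster.

```shell
#!/usr/bin/env bash
# Write a hypothetical two-container Pod manifest. All names are illustrative.
cat <<'EOF' > shared-net-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-net-demo
spec:
  containers:
  # Serves HTTP on port 80 inside the Pod's network namespace.
  - name: web
    image: nginx
    ports:
    - containerPort: 80
  # Shares that namespace, so the web container is reachable on localhost.
  - name: probe
    image: busybox
    command: ['sh', '-c', 'sleep 5; wget -qO- http://localhost:80; sleep 3600']
EOF

# To try it on a cluster (not executed here):
#   kubectl apply -f shared-net-pod.yaml
#   kubectl logs shared-net-demo -c probe
```

Because both containers share one port space, a second container that also tried to listen on port 80 would fail to bind, which is why port usage has to be coordinated.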

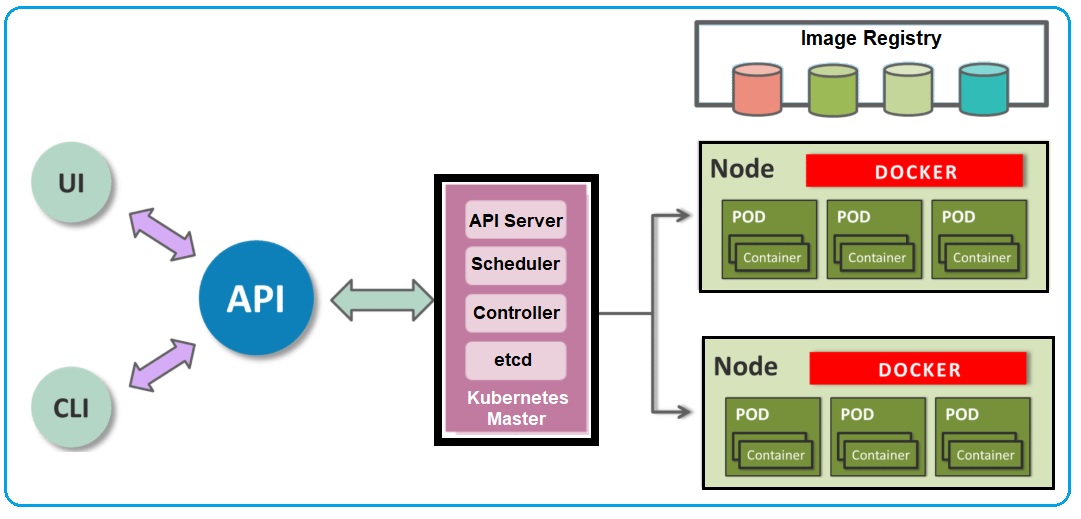

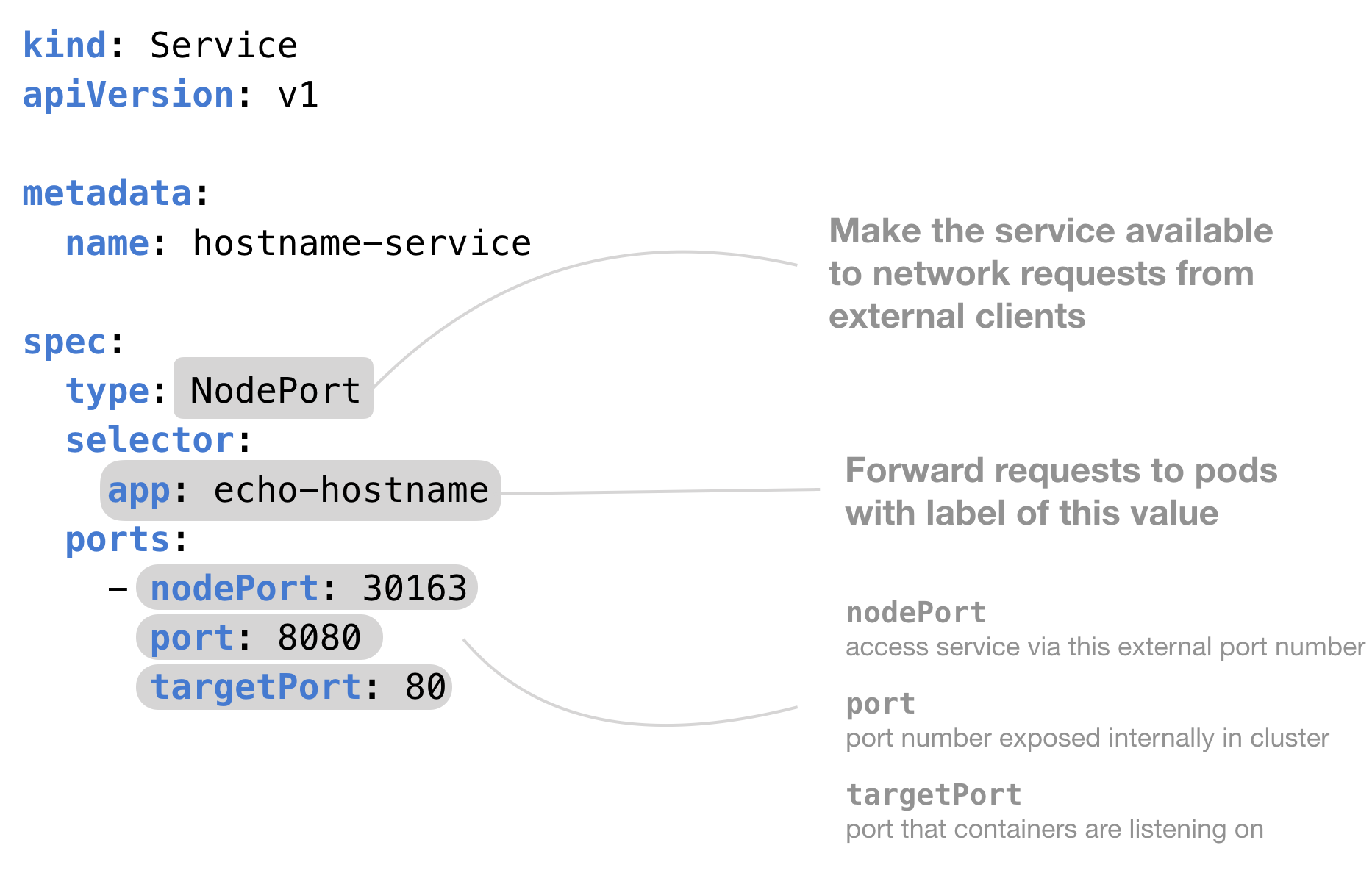

Multiple pods running across multiple nodes of the cluster can be exposed as a service. This is an essential building block of microservices. The service manifest has the right labels and selectors to identify and group multiple pods that act as a microservice.
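A sketch of such a service manifest, assuming Pods labelled `app: myapp` that listen on port 8080. The service name, file name, and port numbers are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Write a hypothetical Service manifest. Name and ports are assumptions.
cat <<'EOF' > myapp-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-svc
spec:
  # Every Pod carrying the label app=myapp becomes a backend of this Service.
  selector:
    app: myapp
  ports:
  - port: 80          # port exposed by the Service
    targetPort: 8080  # port the Pods listen on (assumed)
EOF

# To try it on a cluster (not executed here):
#   kubectl apply -f myapp-service.yaml
#   kubectl get pods -l app=myapp      # Pods the selector matches
#   kubectl get endpoints myapp-svc    # Pod IPs grouped behind the Service
```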

Src: https://matthewpalmer.net/kubernetes-app-developer/articles/service-kubernetes-example-tutorial.html

kubectl get namespaces

kubectl create ns {namespace_name}

apiVersion: v1
kind: Pod
metadata:
  name: my-ns-pod
  namespace: my-ns
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-container
    image: busybox
    command: ['sh', '-c', 'echo Hello Kubernetes!']

Services in Kubernetes expose their endpoint using a common DNS pattern. It looks like this:

<Service Name>.<Namespace Name>.svc.cluster.local
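The pattern is easy to build in a script. A small sketch: the helper name `service_fqdn` is made up, and `cluster.local` is assumed to be the cluster domain (the usual default, but configurable per cluster).

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the in-cluster DNS name of a Service.
# Assumes the cluster domain is cluster.local.
service_fqdn() {
  local service="$1" namespace="${2:-default}"
  printf '%s.%s.svc.cluster.local\n' "$service" "$namespace"
}

service_fqdn mynode default   # prints mynode.default.svc.cluster.local

# Example lookup from inside the cluster (not executed here):
#   kubectl exec -ti busybox -- nslookup "$(service_fqdn mynode default)"
```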

Deploy Docker image

kubectl run hello-web --image=gcr.io/${PROJECT_ID}/hello-app:v1 --port 8080

Docker instance

kubectl get pods

Filter data

kubectl get svc -o json

{
    "apiVersion": "v1",
    "items": [
        {
            "apiVersion": "v1",
            "kind": "Service",
            "metadata": {
                "creationTimestamp": "2020-01-15T13:58:33Z",
                "labels": {
                    "component": "apiserver",
                    "provider": "kubernetes"
                },
                "name": "kubernetes",
                "namespace": "default",
                "resourceVersion": "149",
                "selfLink": "/api/v1/namespaces/default/services/kubernetes",
                "uid": "1e8645fe-379f-11ea-979c-025000000001"
            },
            "spec": {
                "clusterIP": "10.96.0.1",
                "ports": [
                    {
                        "name": "https",
                        "port": 443,
                        "protocol": "TCP",
                        "targetPort": 6443
                    }
                ],
                "sessionAffinity": "None",
                "type": "ClusterIP"
            },
            "status": {
                "loadBalancer": {}
            }
        },
        {
            "apiVersion": "v1",
            "kind": "Service",
            "metadata": {
                "creationTimestamp": "2020-01-16T15:01:29Z",
                "labels": {
                    "run": "mynode"
                },
                "name": "mynode",
                "namespace": "default",
                "resourceVersion": "15816",
                "selfLink": "/api/v1/namespaces/default/services/mynode",
                "uid": "139fda6c-3871-11ea-979c-025000000001"
            },
            "spec": {
                "clusterIP": "10.104.138.145",
                "ports": [
                    {
                        "port": 3000,
                        "protocol": "TCP",
                        "targetPort": 3000
                    }
                ],
                "selector": {
                    "run": "mynode"
                },
                "sessionAffinity": "None",
                "type": "ClusterIP"
            },
            "status": {
                "loadBalancer": {}
            }
        }
    ],
    "kind": "List",
    "metadata": {
        "resourceVersion": "",
        "selfLink": ""
    }
}

kubectl get svc -o jsonpath='{range .items[*]}{.spec.type}{range .spec.ports[*]}{" -> "}{.port}{end}{"\n"}{end}'

Edit deployment

kubectl edit deployment {deployment_name} -n {namespace}

Delete deployment

kubectl delete deployment {deployment_name}

To get a Shell in a Container

# Get list of pods to connect to

kubectl get pods

# Open a shell in the specified pod

kubectl exec -t -i {pod_name} -- bash

# Examples

kubectl exec -ti busybox -- nslookup {service_name}

Sample configuration script https://cloud.google.com/kubernetes-engine/docs/tutorials/external-metrics-autoscaling

apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: pubsub
spec:
  minReplicas: 1
  maxReplicas: 5
  metrics:
  - external:
      metricName: pubsub.googleapis.com|subscription|num_undelivered_messages
      metricSelector:
        matchLabels:
          resource.labels.subscription_id: my-subscription
      targetAverageValue: "2"
    type: External
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: pubsub

To get "hpa" details: kubectl describe hpa {hpa_name}

To get replication state: kubectl get deployment {app_name}
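With `targetAverageValue: "2"` the autoscaler aims for roughly two undelivered messages per replica. A simplified sketch of that arithmetic: the helper name `hpa_desired_replicas` is made up, and the real controller also applies a tolerance band and scale-down stabilization, both ignored here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: approximate the replica count an HPA would target
# for an external metric with an average-value target.
# Args: total metric value, targetAverageValue, minReplicas, maxReplicas.
hpa_desired_replicas() {
  local total="$1" target="$2" min="$3" max="$4"
  # Ceiling of total / target using integer arithmetic.
  local desired=$(( (total + target - 1) / target ))
  # Clamp to the configured replica bounds.
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}

# 7 undelivered messages, target 2 per replica, bounds 1..5
hpa_desired_replicas 7 2 1 5   # prints 4
```

With the manifest's bounds, an empty subscription still keeps one replica, and any backlog of nine or more messages is capped at five.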

https://cloud.google.com/solutions/continuous-integration-helm-concourse

Latest Helm releases: https://github.com/helm/helm/releases

- Set up a GKE cluster on GCP if a Kubernetes cluster doesn't already exist

gcloud container clusters create {cluster_name} --zone {zone} --machine-type {machine_type} --num-nodes {node_count}

Note: To initialise kubectl from remote host: gcloud container clusters get-credentials {cluster_name} --zone {zone}

- Download the latest Helm release

wget https://storage.googleapis.com/kubernetes-helm/helm-v2.12.1-linux-amd64.tar.gz

tar xvf helm-v2.12.1-linux-amd64.tar.gz

- Create the "tiller" service account

kubectl create serviceaccount tiller --namespace kube-system

- Install Helm on cluster

cd linux-amd64

./helm init --service-account tiller

kubectl run knginx --image nginx --replicas=3

# List the nodes the pods are running on

kubectl get pod -o wide

# Load balance service

kubectl expose deployment knginx --type=LoadBalancer --port 80 --target-port 80

# Scale deployment

kubectl scale --replicas=3 deployment/knginx

Unable to connect to cluster.

kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

Update GCP cluster config settings to kubectl as follows:

gcloud container clusters get-credentials {cluster_name} --zone {cluster_zone}

Invalid image specified.

View deployment: kubectl describe deployment {deployment_name}

kubectl describe deployment knginx

NAME READY STATUS RESTARTS AGE

knginx-5ff7558cfb-jqszl 0/1 ImagePullBackOff 0 15m

View status: kubectl describe pod {pod-name}

kubectl describe pod knginx-5ff7558cfb-jqszl

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 15m default-scheduler Successfully assigned knginx-5ff7558cfb-jqszl to gke-ktest-tnodepool-134abd2d-bxxm

Normal SuccessfulMountVolume 15m kubelet, gke-ktest-tnodepool-134abd2d-bxxm MountVolume.SetUp succeeded for volume "default-token-84bll"

Normal SandboxChanged 15m (x5 over 15m) kubelet, gke-ktest-tnodepool-134abd2d-bxxm Pod sandbox changed, it will be killed and re-created.

Normal Pulling 15m (x2 over 15m) kubelet, gke-ktest-tnodepool-134abd2d-bxxm pulling image "gcr.io/nginx:v1"

Warning Failed 15m (x2 over 15m) kubelet, gke-ktest-tnodepool-134abd2d-bxxm Failed to pull image "gcr.io/nginx:v1": rpc error: code = Unknown desc = Error response from daemon: Get https://gcr.io/v2/nginx/manifests/v1: denied: Token exchange failed for project 'nginx'. Please enable or contact project owners to enable the Google Container Registry API in Cloud Console at https://console.cloud.google.com/apis/api/containerregistry.googleapis.com/overview?project=nginx before performing this operation.

Warning Failed 15m (x2 over 15m) kubelet, gke-ktest-tnodepool-134abd2d-bxxm Error: ErrImagePull

Normal BackOff 5m (x47 over 15m) kubelet, gke-ktest-tnodepool-134abd2d-bxxm Back-off pulling image "gcr.io/nginx:v1"

Warning Failed 22s (x69 over 15m) kubelet, gke-ktest-tnodepool-134abd2d-bxxm Error: ImagePullBackOff

Based on the following tutorial https://github.com/meteatamel/istio-on-gke-tutorial

- Login to docker

docker login

gcloud auth configure-docker

- Build and push docker image https://cloud.google.com/container-registry/docs/pushing-and-pulling?hl=en_US&_ga=2.34561256.-897601027.1546532291

docker build -t asia.gcr.io/{project_name}/istio-helloworld-csharp:v1 .

docker push asia.gcr.io/{project_name}/istio-helloworld-csharp:v1

docker run -it ubuntu bash

apt update -y

apt-get install -y software-properties-common

apt-add-repository ppa:ansible/ansible

apt install -y ansible

# Add host (clients)

# For each host copy your working compute environment's public key for future SSH connectivity

ssh-copy-id <host IP address>

ansible-inventory -i /kube-cluster/ --list