Example: Simplified Anisotropic Diffusion - codeplaysoftware/visioncpp GitHub Wiki

To see the whole code of this example: link.

This tutorial will show how to implement a simplified version of the Perona-Malik Anisotropic Diffusion[^perona]. This technique is capable of smoothing the image preserving the edge information. With this technique we can use to get a better edge detection and segmentation.

It is a non-linear filter, and it smooths the image based in the neighbourhood. It uses the exponential function to determine how much smoothness it will apply in the region.

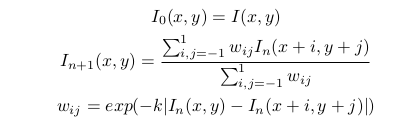

The equation below shows the equations to implement the simplified version of the Perona-Malik Anisotropic Diffusion.

It is an iterative method where I0(x,y) is the original image. There are two parameters:

- k: This parameter is the factor in the exponential function related to detect edges, the bigger the value, more regions will be considered edges.

- Number of iterations: This parameter says how many times we are going to apply the filtering in the image. The more we apply, smoother the image gets, but preserving edges.

For the implementation of this algorithm in VisionCpp we basically need to write down the equations. In the code below we can see our functor which implements the simplified version of the anisotropic diffusion. We use the cl::sycl::float4 data structure since it has implemented the operator overloading for basic math operations.

// operator which implements the simplified anisotropic diffusion

struct AniDiff {

template <typename T>

visioncpp::pixel::F32C3 operator()(T nbr) {

// init output pixel

cl::sycl::float4 out(0, 0, 0, 0);

// init sum variable, which is used to normalize

cl::sycl::float4 sum_w(0, 0, 0, 0);

// get center pixel

cl::sycl::float4 p1(nbr.at(nbr.I_c, nbr.I_r)[0],

nbr.at(nbr.I_c, nbr.I_r)[1],

nbr.at(nbr.I_c, nbr.I_r)[2], 0);

// iterate over a 3x3 neighbourhood

for (int i = -1; i <= 1; i++) {

for (int j = -1; j <= 1; j++) {

// get neighbour pixel

cl::sycl::float4 p2(nbr.at(nbr.I_c + i, nbr.I_r + j)[0],

nbr.at(nbr.I_c + i, nbr.I_r + j)[1],

nbr.at(nbr.I_c + i, nbr.I_r + j)[2], 0);

// computes the weight which basically is the difference between pixels

cl::sycl::float4 w = cl::sycl::exp((-k) * cl::sycl::fabs(p1 - p2));

// sum the weights for normalization

sum_w += w;

// store the output

out += w * p2;

}

}

// normalize output and return

out = out / sum_w;

return visioncpp::pixel::F32C3(out.x(), out.y(), out.z());

}

};Since it is an iterative method, the use of the device only memory can be used to simplify the creation of the expression tree. The device only memory is a type of terminal node which is on device, it means that you can assign temporary data without the need of using host memory.

We can split the creation of the expression tree in 4 steps:

- Creation of terminal nodes

- Convert input data to float

- Apply the Anisotropic Diffusion

- Copy device memory to host.

Like the others tutorials of VisionCpp first we need to initialize our terminal nodes which contains the input, the output data and the device only memory. The device only memory will be used to assign all temporary computations.

auto in_node =

visioncpp::terminal<visioncpp::pixel::U8C3, COLS, ROWS,

visioncpp::memory_type::Buffer2D>(input.data);

auto out_node =

visioncpp::terminal<visioncpp::pixel::U8C3, COLS, ROWS,

visioncpp::memory_type::Buffer2D>(output.get());

// device only memory

auto device_memory =

visioncpp::terminal<visioncpp::pixel::F32C3, COLS, ROWS,

visioncpp::memory_type::Buffer2D>();Then we need to convert the input data to float.

// convert to float

auto frgb = visioncpp::point_operation<visioncpp::OP_U8C3ToF32C3>(in_node);

// assign to temporary device memory

auto exec1 = visioncpp::assign(device_memory, frgb);The next step is to create the nodes to apply the anisotropic diffusion.

// apply anisotropic diffusion

auto anidiff =

visioncpp::neighbour_operation<AniDiff, 1, 1, 1, 1>(device_memory);

// assign to the temporary device memory

auto exec2 = visioncpp::assign(device_memory, anidiff);In the last step we need to assign the device data to host.

// convert to uchar

auto urgb =

visioncpp::point_operation<visioncpp::OP_F32C3ToU8C3>(device_memory);

// assign to the host memory

auto exec3 = visioncpp::assign(out_node, urgb);After creating the expression tree, we can execute it. For the anisotropic diffusion computation we create a loop which is a parameter that controls how blurry the image will be. So when we run the exec1 and exec2, all the computation is done on the device. And the exec3 will finally transfer the device data to host which can be used for displaying the result.

// execution (convert to float)

visioncpp::execute<visioncpp::policy::Fuse, 32, 32, 16, 16>(exec1, dev);

// apply anisotropic diffusion several times

for (int i = 0; i < iters; i++) {

visioncpp::execute<visioncpp::policy::Fuse, 32, 32, 16, 16>(exec2, dev);

}

// return image to host memory

visioncpp::execute<visioncpp::policy::Fuse, 32, 32, 16, 16>(exec3, dev);The steps above computed a simplified version of the anisotropic diffusion. The images below show the results. As we can see, it smooths the image preserving the edge information.

| Reference Image^itacoatiara |

|---|

|

| Anisotropic Diffusion |

|---|

|

[^perona]: Perona, Pietro, and Jitendra Malik. "Scale-space and edge detection using anisotropic diffusion." IEEE Transactions on pattern analysis and machine intelligence 12.7 (1990): 629-639.