Computer Vision Methods - brohan-byte/Flowcus GitHub Wiki

Gaze Tracking

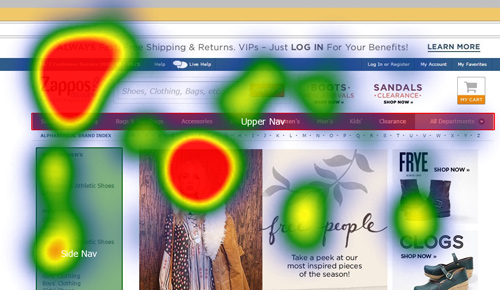

Gaze tracking (or eye-tracking) is a technology that detects and analyzes where a person is looking by tracking eye movements, gaze direction, and fixation points. It typically uses computer vision and infrared cameras to monitor the position of the eyes and determine the user’s focus.

In our project, gaze tracking plays a crucial role in several ways:

- It allows the application to detect whether the user is actively looking at the screen and, if so, whether they are focusing on relevant areas. This helps determine if the user is staying engaged or getting distracted.

- If the user looks away from the screen, for example to check their phone, the gaze tracker may no longer find the user's eyes in the camera feed. This loss of gaze data is then analyzed alongside the pose estimation and object detection results to assess whether the user is distracted.

This project uses Google MediaPipe Iris for gaze estimation; see the MediaPipe Iris documentation for details.
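As a rough illustration of the "is the user looking at the screen" check, the sketch below works on eye landmarks that an iris tracker such as MediaPipe Iris is assumed to provide: two eye-corner points and the iris center, as `(x, y)` image coordinates. The function names, the tuple format, and the 0.35 to 0.65 band are illustrative assumptions, not Flowcus's actual implementation. A missing eye (no landmarks found) is treated as looking away.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]  # (x, y) in image coordinates


def iris_position_ratio(corner_a: Point, corner_b: Point, iris: Point) -> float:
    """Project the iris center onto the corner-to-corner line.

    Returns 0.0 when the iris sits at corner_a, 1.0 at corner_b,
    and about 0.5 when it is centered between them.
    """
    ax, ay = corner_a
    bx, by = corner_b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        raise ValueError("eye corners must be distinct points")
    return ((iris[0] - ax) * dx + (iris[1] - ay) * dy) / length_sq


def looking_at_screen(
    eye: Optional[Tuple[Point, Point, Point]],
    low: float = 0.35,   # hypothetical threshold, needs calibration
    high: float = 0.65,  # hypothetical threshold, needs calibration
) -> bool:
    """Return True when the iris is roughly centered between the eye corners.

    `eye` is (corner_a, corner_b, iris_center), or None when the tracker
    found no eye in the frame.
    """
    if eye is None:
        return False
    ratio = iris_position_ratio(*eye)
    return low <= ratio <= high
```

This only measures horizontal eye movement and ignores head rotation, so in practice it would need per-user calibration and would be combined with the pose signal described in the next section.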

Pose Estimation

Pose estimation is a computer vision technique that detects and tracks key points on the human body (such as the head, shoulders, and hands) to determine body posture and movement. It helps identify physical actions, such as looking down or turning away.

Pose estimation plays a crucial role in distraction detection, especially in combination with the detection of key objects like a phone or a notebook, which can indicate whether the user is engaged or distracted.

For example, if neither a phone nor a notebook is visible within the camera’s field of view, gaze tracking alone can determine whether the user is looking at the screen. However, pose estimation helps further refine this detection by analyzing the user's head position. If the system detects a downward head tilt or an unusual posture, it can infer potential distractions and trigger an alert.

In the example above, I was looking at my phone, which was outside the camera’s field of view. By analyzing facial key points relative to other body key points, the system can detect if my head is tilted downward, helping determine whether I am distracted.

Flowcus uses Google MediaPipe for pose estimation; see the MediaPipe documentation for details.
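The downward head tilt check can be sketched by comparing a facial key point with the shoulders. The example below assumes normalized image coordinates with `y` increasing downward (the convention MediaPipe landmarks use) and takes the nose and both shoulders as `(x, y)` tuples. The function names, the baseline idea, and the `drop_fraction` value are hypothetical and are not taken from the Flowcus code.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y), normalized, y grows downward


def head_height_ratio(nose: Point, left_shoulder: Point, right_shoulder: Point) -> float:
    """Height of the nose above the shoulder line, in shoulder widths.

    Dividing by shoulder width keeps the value roughly stable when the
    user sits closer to or further from the camera.
    """
    shoulder_mid_y = (left_shoulder[1] + right_shoulder[1]) / 2
    shoulder_width = math.hypot(
        left_shoulder[0] - right_shoulder[0],
        left_shoulder[1] - right_shoulder[1],
    )
    if shoulder_width == 0:
        raise ValueError("shoulder key points must be distinct")
    return (shoulder_mid_y - nose[1]) / shoulder_width


def is_head_down(ratio: float, baseline: float, drop_fraction: float = 0.75) -> bool:
    """Flag a downward tilt when the head sits well below its baseline height.

    `baseline` is the ratio measured while the user looks at the screen;
    `drop_fraction` is a hypothetical tuning value.
    """
    return ratio < baseline * drop_fraction
```

A baseline would typically be recorded during a short calibration period at the start of a session, since posture and camera placement differ between users.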

Object Detection

Object detection is a computer vision technique used to identify and locate objects within an image or video.

Object detection allows the system to detect and track the positions of key objects on the desk, such as the phone and notebook. By knowing where these objects are, Flowcus can identify when the user is interacting with them, providing context for potential distractions.

By comparing the location of the user's hand with the detected position of the phone or notebook, Flowcus can differentiate between writing in a notebook (a productive task) and using a phone (a potential distraction). This makes it easier to send alerts when the user is distracted.

For Flowcus, we'll be using the Roboflow Object Detection API with a model trained on the COCO dataset; see the Roboflow documentation for details.
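The hand-versus-object comparison could look roughly like the sketch below. It assumes the detector returns axis-aligned bounding boxes and that a wrist key point is available from pose estimation, all in the same normalized coordinates. The `Box` type, the `"phone"` and `"notebook"` labels, and the `max_distance` threshold are illustrative assumptions rather than the Roboflow response format.

```python
import math
from typing import Iterable, NamedTuple, Optional, Tuple

Point = Tuple[float, float]  # (x, y) in normalized image coordinates


class Box(NamedTuple):
    """Hypothetical detection result: a label plus box corners."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def distance_to_box(point: Point, box: Box) -> float:
    """Distance from a point to the nearest edge of a box (0.0 if inside)."""
    px, py = point
    dx = max(box.x_min - px, 0.0, px - box.x_max)
    dy = max(box.y_min - py, 0.0, py - box.y_max)
    return math.hypot(dx, dy)


def object_in_use(
    wrist: Point,
    boxes: Iterable[Box],
    max_distance: float = 0.05,  # hypothetical threshold, needs tuning
) -> Optional[str]:
    """Return the label of the closest box within reach of the wrist, if any."""
    best: Optional[Tuple[float, str]] = None
    for box in boxes:
        d = distance_to_box(wrist, box)
        if d <= max_distance and (best is None or d < best[0]):
            best = (d, box.label)
    return best[1] if best else None
```

A result of `"phone"` would count toward a distraction alert, `"notebook"` would count as productive work, and `None` would leave the decision to the gaze and pose signals.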