Azure Network - barialim/architecture GitHub Wiki


Azure Services to speed up your Application

In Azure there are many services for speeding up your applications, and it is sometimes difficult to choose the right services for your use cases.

Options for speeding up your apps in Azure

- Azure CDN (Content Delivery Network): gets your content files closer to your users.

- Azure Cache: stores data in an in-memory cache so it can be retrieved very quickly.

- Azure Traffic Manager: routes traffic from users to the best-performing application endpoints.

- Azure Front Door: sits in front of your application and guides users to your application instances. It is similar to Azure Traffic Manager but works at a different level: Azure Front Door uses the HTTP(S) stack, whereas Azure Traffic Manager works at the DNS level and can therefore route any protocol.

How CDNs use a reverse proxy

Reference: R1

Traffic Management

| Features | Load Balancer | Application Gateway | Traffic Manager | Front Door |
|---|---|---|---|---|
| Service | Network load balancer | Web traffic load balancer | DNS-based traffic load balancer | Global application delivery |
| OSI Layer | 4 (TCP/UDP) | 7 (HTTP/S) | 7 (DNS) | 7 (HTTP/S) |
| Type | Internal/Public | Standard & WAF | - | Standard & Premium |
| Routing | IP-based | Path-based | Performance, Weighted, Priority, Geographic | Latency, Priority, Weighted, Session |
| Global/Regional Service | Regional (global via the cross-region tier) | Regional | Global | Global |
| Recommended traffic | Non-HTTP(S) | HTTP(S) | Non-HTTP(S) | HTTP(S) |
| SSL Offloading/Termination | - | Supported | - | Supported |
| Web Application Firewall | - | Supported | - | Supported |
| Sticky Session | Supported | Supported | - | Supported |
| VNet Peering | Supported | Supported | - | - |
| SKU | Basic & Standard | Standard & WAF (v1 & v2) | - | Standard & Premium |
| Redundancy | Zone redundant & zonal | Zone redundant | Resilient to regional failures | Resilient to regional failures |
| Endpoint Monitoring | Health probes | Health probes | HTTP/S GET requests | Health probes |
| Pricing | Standard Load Balancer – charged based on the number of rules and processed data. | Charged based on Application Gateway type, processed data, outbound data transfers, and SKU. | Charged per DNS queries, health checks, measurements, and processed data points. | Charged based on outbound/inbound data transfers, and incoming requests from client to Front Door POPs. |

Azure Application Gateway

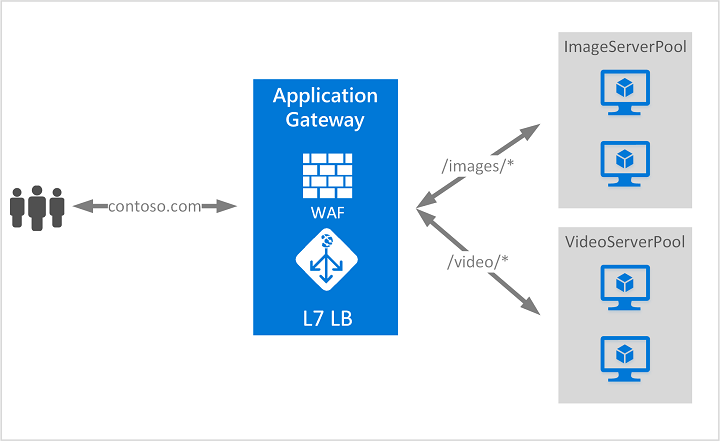

Azure Application Gateway performs load balancing at the application layer (OSI layer 7, HTTP) and can route traffic based on the incoming URL path.

✅ For example: if /images is in the incoming URL, you can route the traffic to a specific set of servers (known as a backend pool) configured for images. If /video is in the URL, that traffic is routed to another pool that's optimized for videos.
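A minimal Python sketch of the path-based routing idea in the example above. The pool addresses, the `select_pool` function and the longest-prefix-wins rule are assumptions for illustration; a real Application Gateway is configured declaratively (URL path maps with path rules), not in code.

```python
from typing import Dict, List

# Hypothetical URL path map: path prefix -> backend pool (server IPs).
URL_PATH_MAP: Dict[str, List[str]] = {
    "/images/": ["10.0.1.4", "10.0.1.5"],
    "/video/": ["10.0.2.4", "10.0.2.5"],
}
# Hypothetical default pool for requests that match no path rule.
DEFAULT_POOL: List[str] = ["10.0.0.4"]


def select_pool(path: str) -> List[str]:
    """Return the backend pool for a request path.

    Assumption: the longest matching prefix wins; unmatched paths
    go to the default pool.
    """
    matches = [prefix for prefix in URL_PATH_MAP if path.startswith(prefix)]
    if not matches:
        return DEFAULT_POOL
    return URL_PATH_MAP[max(matches, key=len)]
```

For example, `select_pool("/images/cat.png")` returns the image pool, while `select_pool("/about")` falls through to the default pool.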

Other names

- application gateway

- URL-based gateway

- Regional gateway

Main features

- Routes traffic based on the URI path (e.g. /image)

- Routes traffic over the HTTP(S) protocol

- Includes a WAF that protects your workload from common exploits such as SQL injection and cross-site scripting attacks, to name a few.

- Offloads CPU-intensive TLS termination from the backend servers to the gateway, saving them the processing load needed to encrypt and decrypt traffic.

Reference: R1

Azure Load Balancer

Azure Load Balancer performs load balancing at the transport layer (OSI layer 4, TCP and UDP) and routes traffic based on source IP address and port to a destination IP address and port.

Google's definition of load balancing: "Load balancing is a practice of distributing traffic across more than one server to improve performance & availability".

Load Balancer distributes inbound flows that arrive at its front end to backend pool instances, according to the configured load-balancing rules and health probes. The backend pool instances can be Azure Virtual Machines or instances in a virtual machine scale set.

✅ For example: it provides outbound connections for virtual machines inside your virtual network by translating their private IP addresses to public IP addresses (source network address translation, SNAT).
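To illustrate how inbound flows can be spread over a backend pool, here is a small Python sketch of hash-based distribution. It assumes Load Balancer's default five-tuple hash mode (source IP, source port, destination IP, destination port, protocol); the SHA-256 hash, the `pick_backend` function and the backend names are hypothetical stand-ins, not Azure's internal algorithm.

```python
import hashlib
from typing import List, NamedTuple, Set


class Flow(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str  # "TCP" or "UDP"


def pick_backend(flow: Flow, backends: List[str], healthy: Set[str]) -> str:
    """Map a flow to a healthy backend instance using a hash of its 5-tuple."""
    # Only instances that currently pass their health probe are candidates.
    candidates = [b for b in backends if b in healthy]
    if not candidates:
        raise RuntimeError("no healthy backend instances")
    key = f"{flow.src_ip}:{flow.src_port}-{flow.dst_ip}:{flow.dst_port}/{flow.protocol}"
    # A stable hash, so the same flow always maps to the same instance.
    digest = hashlib.sha256(key.encode()).digest()
    return candidates[int.from_bytes(digest[:8], "big") % len(candidates)]
```

Because the hash covers the whole 5-tuple, packets of one flow keep landing on the same instance, while a new connection from the same client (new source port) may go elsewhere. `hashlib` is used instead of Python's built-in `hash()` because string hashing is randomized per process.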

Other names

- network traffic load balancer

- transport load balancer

- hardware load balancer

Main features

- SSL offloading NOT supported

- Regional TCP/UDP load-balancing and port-forwarding engine.

- Routes traffic based on source IP address and port to destination IP address and port.

- High-performance, low-latency layer 4 load-balancing service for inbound and outbound traffic on all TCP and UDP protocols.

- Built to handle millions of requests per second while keeping your solution highly available. It supports zone redundancy, ensuring high availability across Availability Zones.

Reference: R1

Types of Load Balancers

Public

A public load balancer can provide outbound connections for virtual machines (VMs) inside your virtual network.

In simple terms, it exposes a public IP address through which internet traffic reaches the private IP addresses of your VMs. Public load balancers are used to load balance internet traffic to your VMs.

Internal

An internal (or private) load balancer is used where private IPs are needed at the frontend only. Internal load balancers are used to load balance traffic inside a virtual network. A load balancer frontend can be accessed from an on-premises network in a hybrid scenario.

Figure: Balancing multi-tier applications by using both public and internal Load Balancer

Methods

Global Availability

This method looks at the virtual servers in the pool in order. Say there are virtual servers “A” and “B”, with “A” as the first option. If both pass health checks and are available, ALL traffic goes to “A” until its health check fails. Once “A” fails, all traffic goes to “B” until “A” becomes available again.
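The Global Availability method boils down to "the first healthy member in a fixed order". A minimal Python sketch, assuming health-check results are exposed through a hypothetical `is_healthy` callback:

```python
from typing import Callable, List


def global_availability(pool: List[str], is_healthy: Callable[[str], bool]) -> str:
    """Send ALL traffic to the first pool member that passes its health check."""
    # The pool is ordered: the first entry is the preferred member ("A").
    for member in pool:
        if is_healthy(member):
            return member
    raise RuntimeError("no pool member is passing health checks")
```

With `pool = ["A", "B"]`, every request goes to "A" while it is healthy, switches to "B" when "A" fails, and returns to "A" as soon as its health check passes again.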

Round Robin

Round Robin distributes requests by going down the list of pool members from top to bottom, spreading connections evenly across all members (servers).
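In Python, the round-robin order described above can be sketched with `itertools.cycle`; the member names are hypothetical and health checks are left out for brevity.

```python
from itertools import cycle
from typing import Iterator, List


def round_robin(members: List[str]) -> Iterator[str]:
    """Yield pool members top to bottom, then wrap around to the first again."""
    return cycle(members)
```

Calling `next()` five times on `round_robin(["s1", "s2", "s3"])` yields s1, s2, s3, s1, s2, so over many requests each member receives the same share.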

Topology

The GTM distributes DNS requests using proximity-based load balancing. Proximity is determined by comparing location information in the DNS message with the topology records configured in a topology statement.
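A simplified Python sketch of topology-based selection, assuming the client's region has already been derived from the DNS message (for example from the resolver's IP address). The region names, pool names and fallback pool are hypothetical, and real topology records can carry more detail than a single region-to-pool mapping.

```python
from typing import List, Tuple

# Hypothetical topology records, checked top to bottom: (client region, pool).
TOPOLOGY_RECORDS: List[Tuple[str, str]] = [
    ("europe", "pool-westeurope"),
    ("north-america", "pool-eastus"),
    ("asia", "pool-southeastasia"),
]
# Hypothetical pool used when no topology record matches.
FALLBACK_POOL = "pool-eastus"


def resolve_by_topology(client_region: str) -> str:
    """Return the pool whose topology record matches the client's region."""
    for region, pool in TOPOLOGY_RECORDS:
        if region == client_region:
            return pool
    return FALLBACK_POOL
```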

Weighted Round Robin

Weighted Round Robin is also known as Ratio. With this method you assign ratios to servers according to their ability to perform. For example, 2 servers that perform really well might take twice as many connections as 2 servers that don't, which take half as many. This keeps servers that can’t handle heavy loads from getting overwhelmed.
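One common way to implement weighted round robin is the "smooth" variant used by NGINX, which interleaves picks instead of sending long runs to the heaviest server. A minimal Python sketch, with hypothetical server names and the 2:2:1:1 weights from the example above:

```python
from typing import Dict, List


def weighted_round_robin(weights: Dict[str, int], n: int) -> List[str]:
    """Return the next n picks using smooth weighted round robin.

    Each round, every server's current score grows by its weight; the server
    with the highest score is picked and its score is reduced by the total
    weight, so each server is picked in proportion to its weight.
    """
    total = sum(weights.values())
    current = {server: 0 for server in weights}
    picks = []
    for _ in range(n):
        for server, weight in weights.items():
            current[server] += weight
        # On ties, max() keeps the first server in dict order.
        chosen = max(current, key=current.get)
        current[chosen] -= total
        picks.append(chosen)
    return picks
```

With `{"fast1": 2, "fast2": 2, "slow1": 1, "slow2": 1}`, every cycle of 6 picks gives each fast server 2 connections and each slow server 1, in the order fast1, fast2, slow1, slow2, fast1, fast2.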

Load Balancer and Availability Zones

For info see Link

Azure Traffic Manager

Before explaining what Azure Traffic Manager is, recall the definition of load balancing given in the Azure Load Balancer section: distributing traffic across more than one server to improve performance and availability.

Azure Traffic Manager is a DNS-based traffic load balancer: it uses DNS to distribute traffic across several servers while providing high availability and responsiveness. Traffic Manager is purpose-built for distributing public-facing traffic to your globally deployed backend application instances or services (called endpoints in Traffic Manager).

Traffic Manager uses DNS to direct client requests to the appropriate service endpoint (application instance) based on the traffic-routing method and the health of the endpoints. You can run nslookup against the Traffic Manager DNS name to see which endpoint it currently returns.
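The following Python sketch models what Traffic Manager does for each DNS query: filter endpoints by health, apply the routing method, and return the chosen endpoint's DNS name. Only the Priority and Performance methods are shown; the `Endpoint` fields, names and latency values are hypothetical, and `latency_ms` stands in for Traffic Manager's own latency measurements. The fallback when every endpoint is degraded is an assumption modelled on Traffic Manager's documented behaviour.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Endpoint:
    fqdn: str          # hypothetical endpoint DNS name
    priority: int      # lower value = preferred (Priority method)
    latency_ms: float  # stand-in for measured latency (Performance method)
    healthy: bool      # result of endpoint monitoring


def answer_dns_query(endpoints: List[Endpoint], method: str) -> str:
    """Return the endpoint name handed back (as a CNAME) for one DNS query."""
    candidates = [e for e in endpoints if e.healthy]
    if not candidates:
        # Assumption: treat all endpoints as healthy when every one is degraded.
        candidates = list(endpoints)
    if method == "priority":
        return min(candidates, key=lambda e: e.priority).fqdn
    if method == "performance":
        return min(candidates, key=lambda e: e.latency_ms).fqdn
    raise ValueError(f"unsupported routing method: {method}")
```

Traffic Manager only answers the DNS query; the client then connects directly to the returned endpoint, so Traffic Manager never sees the application traffic itself.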

Other names

- DNS-based load balancer

- Global load balancer

- Endpoints-based load balancer

- Internet-facing load balancer

Main features

- High performance with low latency: with the Performance routing method, users are directed to the closest endpoint in terms of the lowest network latency.

- Designed for external traffic from the public internet to your cross-region application backend pool.

What types of traffic can be routed using Traffic Manager

See: https://docs.microsoft.com/en-us/azure/traffic-manager/traffic-manager-faqs#what-types-of-traffic-can-be-routed-using-traffic-manager

For internal cross-region traffic, see: Azure Cross-region load balancer

Reference: R1, Reference Multi-region Architecture

Azure Front Door

Azure Front Door is an HTTP(S)-based, highly available, scalable and secure cloud ADN/CDN service for web application acceleration. It accelerates web traffic by providing a single entry point to your globally distributed backend application pool, with near real-time failover. It also offers global HTTP load balancing with instant failover.

It uses the Microsoft Global Edge Network (Microsoft's private network connecting its datacenters across the globe), so once traffic reaches a Front Door point of presence it stays inside the Microsoft global network.

Other names

- Application Delivery Network web acceleration Platform

- Content Delivery Network web acceleration Platform

Main features

- Active probing: as soon as one of the backends goes down, failover is instant.

- Offloads CPU-intensive TLS/SSL termination to the edge, close to users, saving your backends the processing load needed to encrypt and decrypt traffic.

- Global HTTP load balancing with instant failover

- Includes a WAF that protects your workload from common exploits such as SQL injection and cross-site scripting attacks, to name a few.

- Single platform for static and dynamic acceleration

- Built-in intelligent security to protect your apps and content

Reference: R1

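Difference between Traffic Manager & Front Door

When choosing a global load balancer between Traffic Manager and Azure Front Door for global routing, consider what’s similar and what’s different about the two services. The key difference is that Front Door proxies HTTP(S) traffic at layer 7, while Traffic Manager only answers DNS queries, after which clients connect directly to the chosen endpoint. Both services provide: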

- Multi-geo redundancy: If one region goes down, traffic seamlessly routes to the closest region without any intervention from the application owner.

- Closest region routing: Traffic is automatically routed to the closest region

Reference: R1

Difference between Azure Load Balancer and Application Gateway

Azure Load Balancer works with traffic at Layer 4. Application Gateway works with Layer 7 traffic, and specifically with HTTP/S (including WebSockets).

Application Gateway is purpose-built for routing based on the URI path or host header. It can perform TLS/SSL termination, saving the backend servers some of the processing load needed to encrypt and decrypt that traffic. However, unencrypted communication to the servers is sometimes not acceptable because of security or compliance requirements, or because the application accepts only secure connections. In these situations, Application Gateway also supports end-to-end TLS/SSL encryption.

Azure Load Balancer is purpose-built for distributing incoming network traffic across a group of backend resources or servers. It distributes inbound flows that arrive at its front end to backend pool instances (Azure Virtual Machines or instances in a virtual machine scale set) according to the configured load-balancing rules and health probes.

Summary of Traffic Management

- Azure Load Balancer: regional TCP- and UDP-based network load balancer (a cross-region tier provides global load balancing)

  - Source IP & Port ▶️ Destination IP & Port

- Application Gateway: regional HTTP(S)-based, URI-path-based web traffic load balancer

  - /image ▶️ /image backend pool

- Traffic Manager: global DNS-based load balancer

- Front Door: global HTTP(S)-based A/CDN (Application/Content Delivery Network) service that helps to accelerate web traffic by providing a single entry point to your globally distributed backend application pool with near real-time failover.

Reference: R1

WAF on Application Gateway

Azure Web Application Firewall (WAF) on Azure Application Gateway provides centralized protection of your web applications from common exploits and vulnerabilities. Web applications are increasingly targeted by malicious attacks that exploit commonly known vulnerabilities. SQL injection and cross-site scripting are among the most common attacks.

Reference: R1

Azure Firewall vs NSG

- https://darawtechie.com/2019/09/05/azure-firewall-vs-network-security-group-nsg/

- https://social.technet.microsoft.com/wiki/contents/articles/53658.azure-security-firewall-vs-nsg.aspx

Terminology

- DNS: often referred to as the Internet's phonebook because it translates website domain names (like google.com) into numerical IP addresses.

- DNS server: stores domain names (like google.com) and translates them into numerical IP addresses.

- IP address: a numerical label used to identify servers, websites and any other device connected to the Internet. See DNS resolution.

- DNS resolution: the process by which domain names are translated to IP addresses. During DNS resolution, an Internet user's browser contacts a DNS server to request the destination website's IP address. The act of requesting the IP address of a domain is called a DNS query.

- DNS-based load balancing: distributes traffic by returning different IP addresses in response to DNS queries. Load balancers can use various methods or rules to choose which IP address to return for a DNS query. One of the most common DNS load balancing techniques is round-robin DNS. For more info see Link

- Bandwidth throttling: when your internet service provider deliberately slows down your internet connection, for example after you reach a monthly data limit.

- How to stop throttling: use a VPN, which encrypts your online traffic. Your Internet Service Provider (ISP) then cannot see what the traffic is, so it cannot discriminate against it. This helps only against throttling that targets specific kinds of traffic, not against throttling after a data limit.

- API Gateway throttling: some API gateways provide throttling filters that limit the number of requests to your backend API within a specific time period, preventing the API from being overwhelmed by too many requests.

- Point-of-Presence (POP): in the context of Azure Front Door, an edge location of Front Door's global network, close to users, that receives client requests and forwards them to your backend application pool to speed up your requests. See R1
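API gateway throttling is often implemented with a token bucket. The Python sketch below shows that technique in general; it is not the algorithm of any particular gateway, and the injectable `clock` parameter is only there to make the behaviour easy to test.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        """Return True if the request may pass, False if it should be throttled."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        # A gateway would typically reply with HTTP 429 Too Many Requests here.
        return False
```

With `rate=1.0` and `capacity=2`, two back-to-back requests pass, a third is rejected, and one more request passes after a second has elapsed.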