gke eks aks multi cloud small top 1.4 ahr profile quick start - apigee/ahr GitHub Wiki

This walkthrough shows you how to install a multi-cloud topology of Apigee hybrid. It provisions the required infrastructure, creates the clusters, and deploys the hybrid runtimes.

The same set of scripts lets you provision either

- GKE/EKS

or

- GKE/EKS/AKS topologies.

All required configuration files and scripts are located in the examples-mc-gke-eks-aks directory of the ahr repo:

https://github.com/apigee/ahr/tree/main/examples-mc-gke-eks-aks.

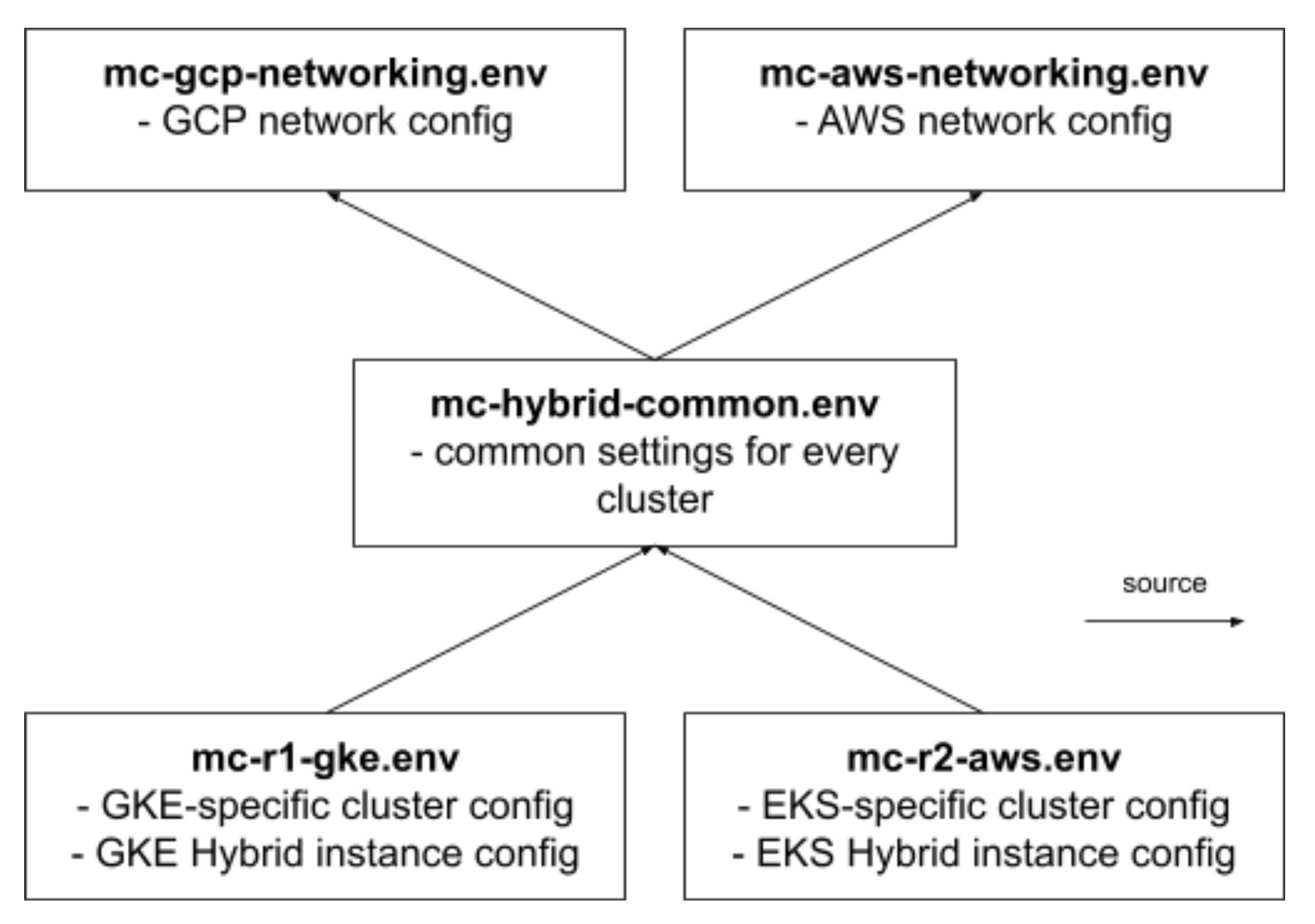

Structure of the config files for:

- GKE/EKS topology:

- GKE/EKS/AKS

We use Terraform modules to:

- install GCP and AWS [and Azure] VPCs and configure VPN peering connections between them;

- overlay private networks for our hybrid clusters, along with firewall rules, routes, and security groups that enable connectivity on the Cassandra gossip ports 7000 and 7001 for internode communication.

We then use curl to send a request to the GCP cluster creation API with a JSON payload that describes a private GKE cluster.

We use a ClusterConfig manifest with the eksctl command to create an EKS cluster.

We then install Apigee Hybrid into a GKE cluster.

Then a sample ping proxy is deployed into our Hybrid org.

Afterwards, we install an Apigee hybrid runtime into an EKS cluster while extending the Cassandra ring across the clouds using a seed node.

Finally, we install an Apigee hybrid runtime into an AKS cluster while extending the Cassandra ring across the clouds using a seed node.

This Terraform project configures non-default networking on either a GCP/AWS or a GCP/AWS/Azure combination, with a jumpbox on each cloud and sample ports 7000 and 7001 allowed to communicate across clouds.
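Once the networking is in place, a loop like the following can confirm that both internode ports are reachable from one jumpbox or pod to another. This is a minimal sketch, not part of the ahr scripts: `check_gossip_ports` is a hypothetical helper, and it assumes an `nc` build that supports `-z` (probe without sending data) and `-w` (timeout in seconds).

```shell
# Sketch: probe the Cassandra internode ports 7000 and 7001 on each host given.
# Assumes `nc` supports -z and -w; some minimal builds do not.
check_gossip_ports() {
  for host in "$@"; do
    for port in 7000 7001; do
      if nc -z -w 3 "$host" "$port" >/dev/null 2>&1; then
        echo "$host:$port open"
      else
        echo "$host:$port unreachable"
      fi
    done
  done
}

# Example call; substitute the private IP of a jumpbox or node in another cloud.
# check_gossip_ports 10.4.0.76
```

A port is reported as unreachable both when a firewall blocks it and when nothing is listening on it, so start a listener on the target before drawing conclusions.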

Of course, you need an active account at each of the two or three clouds.

NOTE: To install GKE/EKS, follow the same sequence of installation steps and skip the actions marked as Azure only.
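If you script the steps yourself, one way to keep the Azure-only actions optional is a single switch. The sketch below is an assumption for illustration: the `TOPOLOGY` variable and both helper functions are hypothetical and are not defined by the ahr scripts. Only the two install script names come from this walkthrough.

```shell
# Sketch: TOPOLOGY is a hypothetical variable; valid values here are
# gke-eks and gke-eks-aks.
TOPOLOGY=${TOPOLOGY:-gke-eks-aks}

# Succeeds only when the Azure steps apply.
azure_enabled() {
  [ "$TOPOLOGY" = "gke-eks-aks" ]
}

# Prints the install script that matches the chosen topology.
install_script() {
  echo "./install-apigee-hybrid-$TOPOLOGY.sh"
}

# Example: guard an Azure-only step.
# azure_enabled && ./cli-az.sh
```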

Use your working computer terminal or a VM to overcome the timeout limit of Cloud Shell. We recommend provisioning a default VM in your GCP project.

Because multi-cloud Apigee hybrid provisioning is a long-running process, let's provision a bastion VM. A bastion VM is also useful for troubleshooting, as it can access private network addresses.

We are going to:

- create a service account;

- add the Editor, Compute Network Admin, Security Admin, and Kubernetes Engine Admin roles to it;

- provision a VM with the scope and service account that allow the provisioning script to run successfully;

- open an SSH session to the VM.

- In the GCP Console, activate Cloud Shell.

- Define the PROJECT and BASTION_ZONE variables.

export PROJECT=<your-project-id>

export BASTION_ZONE=europe-west1-b

- Create a service account for installation purposes.

Click the Authorize button when asked.

export INSTALLER_SA_ID=installer-sa

gcloud iam service-accounts create $INSTALLER_SA_ID

- Add IAM policy bindings with the required roles.

roles='roles/editor

roles/compute.networkAdmin

roles/iam.securityAdmin

roles/container.admin'

for r in $roles; do

gcloud projects add-iam-policy-binding $PROJECT \

--member="serviceAccount:$INSTALLER_SA_ID@$PROJECT.iam.gserviceaccount.com" \

--role=$r

done

- Create a compute instance with the installer SA identity that will be used to execute the script.

gcloud compute instances create bastion \

--service-account "$INSTALLER_SA_ID@$PROJECT.iam.gserviceaccount.com" \

--zone $BASTION_ZONE \

--scopes cloud-platform

- In the GCP Console, open the Compute Engine/VM instances page using the hamburger menu.

- For the bastion host, click the SSH button to open an SSH session.

?. Have GCP, AWS, and Azure accounts ready.

?. Create a bastion VM in your GCP project.

?. Install the utilities required by the cloud CLI tools

sudo apt-get update

sudo apt -y install mc jq git python3-pip

?. Clone the Ahr repo and define Ahr variables

export AHR_HOME=~/ahr

cd ~

git clone https://github.com/apigee/ahr.git

?. Define HYBRID_HOME

export HYBRID_HOME=~/apigee-hybrid-multicloud

mkdir -p $HYBRID_HOME

cp -R $AHR_HOME/examples-mc-gke-eks-aks/. $HYBRID_HOME

?. Install kubectl if it is absent

sudo apt-get install kubectl

?. CLIs

cd $HYBRID_HOME

./cli-terraform.sh

./cli-aws.sh

# skip for gke/eks install

./cli-az.sh

$AHR_HOME/bin/ahr-verify-ctl prereqs-install-yq

source ~/.profile

?. GCP: For Qwiklabs/CloudShell:

# populate variables as appropriate or

# use those pre-canned commands if you're using qwiklabs

export PROJECT=$(gcloud projects list|grep qwiklabs-gcp|awk '{print $1}')

# export GCP_OS_USERNAME=$(gcloud config get-value account | awk -F@ '{print $1}' )

export GCP_OS_USERNAME=$USER

?. AWS: for a current session

export AWS_ACCESS_KEY_ID=<access-key>

export AWS_SECRET_ACCESS_KEY=<secret-access-key>

export AWS_REGION=us-east-1

export AWS_PAGER=

?. Define AWS user-wide credentials file

mkdir -p ~/.aws

cat <<EOF > ~/.aws/credentials

[default]

aws_access_key_id = $AWS_ACCESS_KEY_ID

aws_secret_access_key = $AWS_SECRET_ACCESS_KEY

region = $AWS_REGION

EOF

?. Azure [skip for gke/eks install]

az login

?. Check we are logged in

echo "Check if logged in gcloud: "

gcloud compute instances list

echo "Check if logged in aws: "

aws sts get-caller-identity

# skip for gke/eks install

echo "Check if logged in az: "

az account show
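Because the install runs for a long time, it can help to confirm up front that the tools and variables from the previous steps are actually present. The following is a minimal sketch: `missing_tools` and `unset_vars` are hypothetical helpers, not part of ahr, and the tool and variable lists in the usage comment are taken from the steps above.

```shell
# Sketch: print each command name that cannot be found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Sketch: print each variable name whose value is unset or empty.
# Pass only trusted variable names, since eval is used for the lookup.
unset_vars() {
  for name in "$@"; do
    eval "value=\${$name:-}"
    [ -n "$value" ] || echo "$name"
  done
}

# Usage (drop az for a GKE/EKS install):
# missing_tools gcloud kubectl terraform aws az jq git
# unset_vars PROJECT AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_REGION
```

Empty output from both calls means every listed tool and variable was found.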

WARNING: The install takes around 40 minutes. If you are using Cloud Shell (which by design is meant for interactive work only), make sure you keep your install session alive, as Cloud Shell has an inactivity timeout. For details, see: https://cloud.google.com/shell/docs/limitations#usage_limits

# run only one of the two commands below

# GKE/EKS/AKS topology

./install-apigee-hybrid-gke-eks-aks.sh |& tee mc-install-`date -u +"%Y-%m-%dT%H:%M:%SZ"`.log

# GKE/EKS topology

./install-apigee-hybrid-gke-eks.sh |& tee mc-install-`date -u +"%Y-%m-%dT%H:%M:%SZ"`.log
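Piping into `tee` makes the pipeline return the exit status of `tee`, so a failed install can look successful to a calling script. The sketch below shows one way to keep both the log and the status. `run_logged` is a hypothetical helper, not part of ahr, and it avoids bash-only features so that it also runs under a plain POSIX shell.

```shell
# Sketch: run a command, copy stdout and stderr to a log file,
# and return the command's own exit status instead of tee's.
run_logged() {
  log=$1
  shift
  status_file=$(mktemp)
  {
    if "$@" 2>&1; then
      echo 0 > "$status_file"
    else
      echo $? > "$status_file"
    fi
  } | tee "$log"
  status=$(cat "$status_file")
  rm -f "$status_file"
  return "$status"
}

# Usage:
# run_logged "mc-install-$(date -u +%Y-%m-%dT%H:%M:%SZ).log" ./install-apigee-hybrid-gke-eks-aks.sh
```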

We deliberately created the hybrid Istio ingresses as Internal Load Balancers in each environment, with the intention of setting up a Global Load Balancer in front of them.

We also provisioned a jumpbox in each VPC to be able to troubleshoot clusters and send test requests.

?. At the bastion host, source an environment

source $HYBRID_HOME/source.env

?. Execute an echo command to resolve the values of the environment variables

echo curl --cacert $RUNTIME_SSL_CERT https://$RUNTIME_HOST_ALIAS/ping -v --resolve "$RUNTIME_HOST_ALIAS:443:$RUNTIME_IP" --http1.1

?. At the vm-gcp jumpbox, copy the curl request from the previous command output; either copy a certificate file or add -k to the curl request. Execute it.
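If one of the three variables is empty, the echoed request still looks plausible but will not work on the jumpbox. As a hedged sketch, the hypothetical `ping_request` helper below (not part of ahr) prints the same request only when `RUNTIME_SSL_CERT`, `RUNTIME_HOST_ALIAS`, and `RUNTIME_IP` are all set.

```shell
# Sketch: print the ping request, or fail if a required variable is empty.
ping_request() {
  for name in RUNTIME_SSL_CERT RUNTIME_HOST_ALIAS RUNTIME_IP; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "$name is not set; source \$HYBRID_HOME/source.env first" >&2
      return 1
    fi
  done
  echo "curl --cacert $RUNTIME_SSL_CERT https://$RUNTIME_HOST_ALIAS/ping -v --resolve \"$RUNTIME_HOST_ALIAS:443:$RUNTIME_IP\" --http1.1"
}

# Usage, at the bastion host after sourcing source.env:
# ping_request
```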

After the install finishes, you can use the provisioned jumpboxes and the suggested commands to check connectivity between VPCs.
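The first of these commands turns `terraform output` text into exported shell variables, which is where names such as `GCP_JUMPBOX_IP` come from. The sketch below isolates that transformation so it can be tried on sample text. The `tf_outputs_to_exports` name, the sample addresses, and the added check that the second field is `=` are assumptions for illustration; the lowercase output names are inferred from the variables used later.

```shell
# Sketch: convert lines of the form  name = "value"  into export statements
# with an upper-cased name. Lines that do not match that shape are ignored.
tf_outputs_to_exports() {
  awk '$2 == "=" { printf("export %s=%s\n", toupper($1), $3) }'
}

# Sample input with made-up addresses, shaped like `terraform output` text:
printf '%s\n' 'gcp_jumpbox_ip = "203.0.113.10"' 'aws_jumpbox_ip = "203.0.113.20"' | tf_outputs_to_exports
# prints:
# export GCP_JUMPBOX_IP="203.0.113.10"
# export AWS_JUMPBOX_IP="203.0.113.20"
```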

# to define jumpboxes IP address

pushd infra-gcp-aws-az-tf

source <(terraform output |awk '{printf( "export %s=%s\n", toupper($1), $3)}')

popd

gcloud compute config-ssh --ssh-key-file ~/.ssh/id_gcp

gcloud compute ssh vm-gcp --ssh-key-file ~/.ssh/id_gcp --zone europe-west1-b

ssh $USER@$GCP_JUMPBOX_IP -i ~/.ssh/id_gcp

ssh ec2-user@$AWS_JUMPBOX_IP -i ~/.ssh/id_aws

# skip for gke/eks install

ssh azureuser@$AZ_JUMPBOX_IP -i ~/.ssh/id_az

hostname -i

# uname -a

# sudo apt install -y netcat

# sudo yum install -y nc

while true ; do echo -e "HTTP/1.1 200 OK\n\n $(date)" | nc -l -p 7001 ; done

For the connectivity check, use the following commands:

# source R#_CLUSTER variables

export AHR_HOME=~/ahr

export HYBRID_HOME=~/apigee-hybrid-multicloud

source $HYBRID_HOME/mc-hybrid-common.env

# gke

kubectl --context $R1_CLUSTER run -i --tty busybox --image=busybox --restart=Never -- sh

# eks

kubectl --context $R2_CLUSTER run -i --tty busybox --image=busybox --restart=Never -- sh

# aks

kubectl --context $R3_CLUSTER run -i --tty busybox --image=busybox --restart=Never -- sh

#

hostname -i

# nc-based server

while true ; do echo -e "HTTP/1.1 200 OK\n\n $(date)" | nc -l -p 7001 ; done

# nc client

nc -v 10.4.0.76 7001

# delete busybox containers

kubectl --context $R1_CLUSTER delete pod busybox

kubectl --context $R2_CLUSTER delete pod busybox

kubectl --context $R3_CLUSTER delete pod busybox

# for each cluster: 1, 2, 3

kubectl --context $R1_CLUSTER run -i --tty --restart=Never --rm --image google/apigee-hybrid-cassandra-client:1.0.0 cqlsh

# at the shell, execute cqlsh and enter password, default: iloveapis123

cqlsh apigee-cassandra-default-0.apigee-cassandra-default.apigee.svc.cluster.local -u ddl_user --ssl

describe tables;

# WARNING: Replace my organization name with your organization name with dashes replaced with underscores!!

select * from kms_qwiklabs_gcp_03_d1330351146a_hybrid.developer;
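The keyspace name in the query above can be derived from the organization name. The sketch below assumes the pattern `kms_<org>_hybrid` with dashes replaced by underscores, which is inferred from the example keyspace and is not confirmed elsewhere in this walkthrough. `org_to_keyspace` is a hypothetical helper, and the organization name passed to it is likewise inferred from that keyspace.

```shell
# Sketch: derive the kms keyspace name from an organization name.
# Assumes the kms_<org>_hybrid pattern seen in the example query.
org_to_keyspace() {
  printf 'kms_%s_hybrid\n' "$(printf '%s' "$1" | tr '-' '_')"
}

org_to_keyspace qwiklabs-gcp-03-d1330351146a
# prints kms_qwiklabs_gcp_03_d1330351146a_hybrid
```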

TODO:

ahr-runtime-ctl apigeectl delete -f $RUNTIME_CONFIG --all

ahr-sa-ctl delete-sa

To remove walkthrough-created objects

ahr-cluster-ctl delete

gcloud compute addresses delete runtime-ip --region=$REGION

pushd infra-cluster-az-tf

terraform destroy

popd

pushd infra-cluster-gke-eks-tf

terraform destroy

popd

pushd infra-gcp-aws-az-tf

terraform destroy

popd
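The three `terraform destroy` steps above run in a fixed order, clusters first and shared networking last. As a minimal sketch, the hypothetical `destroy_in_order` helper below (not part of ahr) runs them in the order given and stops at the first failure, so the networking is not removed while a cluster teardown is still incomplete.

```shell
# Sketch: run `terraform destroy` in each directory, in the order listed.
# Stops at the first failure; directories that do not exist are reported and skipped.
destroy_in_order() {
  for dir in "$@"; do
    if [ ! -d "$dir" ]; then
      echo "skipping $dir (not found)"
      continue
    fi
    echo "destroying $dir"
    (cd "$dir" && terraform destroy) || return 1
  done
}

# Usage from $HYBRID_HOME, in the same order as above:
# destroy_in_order infra-cluster-az-tf infra-cluster-gke-eks-tf infra-gcp-aws-az-tf
```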