EKS Hybrid Small topology 1.4 AHR Manual - apigee/ahr GitHub Wiki

- GCP project
- AWS project
- Credentials:
  - AWS_ACCESS_KEY_ID
  - AWS_SECRET_ACCESS_KEY
  - export AWS_REGION=us-east-1
  - AWS Console credentials
- 3 VMs with minimal spec:
  - t2.xlarge (4 vCPUs, 16 GiB memory)

?. Install the AWS CLI

curl -O https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip

unzip awscli-exe-linux-x86_64.zip

mkdir -p ~/bin

./aws/install -i ~/bin -b ~/bin

?. Add ~/bin to the PATH

Because the ~/.profile file in GCP Cloud Shell contains the following fragment:

# set PATH so it includes user's private bin if it exists

if [ -d "$HOME/bin" ] ; then

PATH="$HOME/bin:$PATH"

fi

it is sufficient to open a new shell after you create the ~/bin directory to add it to your PATH.

Alternatively, if you wish to stay in the same shell, execute

export PATH=~/bin:$PATH
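If you repeat this export in the same session (for example, when re-running the steps), PATH accumulates duplicate ~/bin entries. A minimal sketch of an idempotent alternative, using a hypothetical helper named path_prepend that is not part of ahr:

```shell
# Hypothetical helper (not part of ahr): prepend a directory to PATH
# only if it is not already one of the colon-separated PATH entries.
path_prepend() {
  local dir="$1"
  case ":$PATH:" in
    *":$dir:"*) ;;               # already present: leave PATH unchanged
    *) PATH="$dir:$PATH" ;;      # otherwise put it first
  esac
  export PATH
}

path_prepend "$HOME/bin"
path_prepend "$HOME/bin"   # second call is a no-op
```

Wrapping PATH in leading and trailing colons lets a single pattern match the entry at the start, middle, or end of the list.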

?. Check that the aws CLI works correctly

aws --version

aws-cli/2.1.19 Python/3.7.3 Linux/5.4.49+ exe/x86_64.debian.10 prompt/off

?. Populate the credentials and region environment variables as appropriate. See also: https://docs.aws.amazon.com/cli/latest/userguide/cli-chap-configure.html

export AWS_ACCESS_KEY_ID=AKIAQOKOK....VFHP

export AWS_SECRET_ACCESS_KEY=uJRTPbJKj...QhgQKj4Fnl+wmX7kPO0G

export AWS_REGION=us-east-1

?. Configure AWS credentials

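mkdir -p ~/.aws

cat <<EOF > ~/.aws/credentials
[default]
aws_access_key_id = $AWS_ACCESS_KEY_ID
aws_secret_access_key = $AWS_SECRET_ACCESS_KEY
EOF

cat <<EOF > ~/.aws/config
[default]
region = $AWS_REGION
EOF

Keep the keys in ~/.aws/credentials and the region in ~/.aws/config, which is where the AWS CLI reads profile settings such as the region.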

?. Test the connectivity to your AWS project

aws s3api list-buckets

You should see valid JSON output describing your S3 buckets (the bucket list can be empty).

?. Install eksctl

curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C ~/bin

?. Verify installation

eksctl version

0.36.0

?. Define the HYBRID_HOME installation folder

export HYBRID_HOME=~/apigee-hybrid-install

mkdir -p $HYBRID_HOME

?. Environment variables that describe the Kubernetes cluster

export CLUSTER=hybrid-cluster

export CLUSTER_VERSION=1.17

?. Create EKS cluster using eksctl utility

eksctl create cluster \

--name $CLUSTER \

--version $CLUSTER_VERSION \

--without-nodegroup

Output:

[ℹ] eksctl version 0.36.0

[ℹ] using region us-east-1

[ℹ] setting availability zones to [us-east-1c us-east-1d]

[ℹ] subnets for us-east-1c - public:192.168.0.0/19 private:192.168.64.0/19

[ℹ] subnets for us-east-1d - public:192.168.32.0/19 private:192.168.96.0/19

[ℹ] using Kubernetes version 1.17

[ℹ] creating EKS cluster "hybrid-cluster" in "us-east-1" region with

[ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=us-east-1 --cluster=hybrid-cluster'

[ℹ] CloudWatch logging will not be enabled for cluster "hybrid-cluster" in "us-east-1"

[ℹ] you can enable it with 'eksctl utils update-cluster-logging --enable-types={SPECIFY-YOUR-LOG-TYPES-HERE (e.g. all)} --region=us-east-1 --cluster=hybrid-cluster'

[ℹ] Kubernetes API endpoint access will use default of {publicAccess=true, privateAccess=false} for cluster "hybrid-cluster" in "us-east-1"

[ℹ] 2 sequential tasks: { create cluster control plane "hybrid-cluster", no tasks }

[ℹ] building cluster stack "eksctl-hybrid-cluster-cluster"

[ℹ] deploying stack "eksctl-hybrid-cluster-cluster"

[ℹ] waiting for CloudFormation stack "eksctl-hybrid-cluster-cluster"

...

[ℹ] waiting for the control plane availability...

[✔] saved kubeconfig as "/home/student_02_e9a7a2320a49/.kube/config"

[ℹ] no tasks

[✔] all EKS cluster resources for "hybrid-cluster" have been created

[ℹ] kubectl command should work with "/home/student_02_e9a7a2320a49/.kube/config", try 'kubectl get nodes'

[✔] EKS cluster "hybrid-cluster" in "us-east-1" region is ready

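?. The eksctl create cluster command writes the $CLUSTER credentials into ~/.kube/config, which means we can use kubectl right away. Because the cluster was created without a node group, the coredns pods stay Pending until worker nodes are added.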

kubectl get po -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-75b44cb5b4-2zwdx 0/1 Pending 0 4m42s

kube-system coredns-75b44cb5b4-kztl6 0/1 Pending 0 4m42s

?. Check cluster creation details

eksctl utils describe-stacks --region=$AWS_REGION --cluster=$CLUSTER

?. Check cluster creation status

aws eks --region $AWS_REGION describe-cluster --name $CLUSTER --query "cluster.status"

"CREATING"

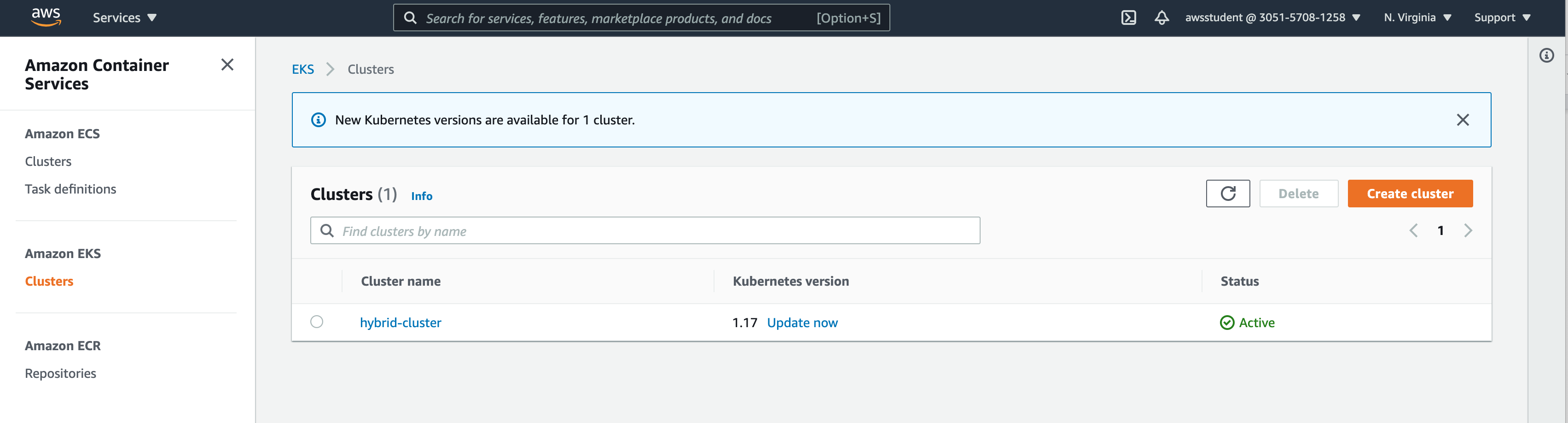
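The status stays CREATING for several minutes. A minimal polling sketch, using a hypothetical helper wait_for_output (not part of ahr or the AWS CLI) that reruns a command until it prints an expected value. For this particular case the AWS CLI also provides a built-in waiter, aws eks wait cluster-active --name $CLUSTER.

```shell
# Hypothetical helper (not part of ahr or the AWS CLI): rerun a command until
# its output equals the expected value, or give up after a number of tries.
# Arguments: expected-value max-tries delay-seconds command [args...]
wait_for_output() {
  local expected="$1" tries="$2" delay="$3"
  shift 3
  local out i
  for ((i = 1; i <= tries; i++)); do
    out="$("$@")"
    if [ "$out" = "$expected" ]; then
      return 0
    fi
    echo "attempt $i/$tries: got '$out', retrying in ${delay}s" >&2
    sleep "$delay"
  done
  return 1
}

# Example against the cluster (--output text prints ACTIVE without JSON quotes):
# wait_for_output ACTIVE 60 30 \
#   aws eks describe-cluster --region "$AWS_REGION" --name "$CLUSTER" \
#   --query cluster.status --output text
```

The helper returns a non-zero status on timeout, so it can be combined with || in scripts.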

?. Verify the cluster using the AWS Console

For details on managing node groups with eksctl, see: https://eksctl.io/usage/managing-nodegroups/

?. Environment Variables

export EKS_NODE_GROUP=apigee-ng

?. Define ClusterConfig manifest

cat <<EOF > $HYBRID_HOME/aws-ng.yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: $CLUSTER
  region: $AWS_REGION
nodeGroups:
  - name: $EKS_NODE_GROUP
    labels: { role: workers }
    instanceType: t2.xlarge
    desiredCapacity: 3
    volumeSize: 10
    privateNetworking: true
EOF

?. Create apigee-ng node group

eksctl create nodegroup --config-file=$HYBRID_HOME/aws-ng.yaml

Output:

[ℹ] eksctl version 0.36.0

[ℹ] using region us-east-1

[ℹ] will use version 1.17 for new nodegroup(s) based on control plane version

[ℹ] nodegroup "apigee-ng" will use "ami-0a72fb454e7ae750d" [AmazonLinux2/1.17]

[ℹ] 1 nodegroup (apigee-ng) was included (based on the include/exclude rules)

[ℹ] will create a CloudFormation stack for each of 1 nodegroups in cluster "hybrid-cluster"

[ℹ] 2 sequential tasks: { fix cluster compatibility, 1 task: { 1 task: { create nodegroup "apigee-ng" } } }

[ℹ] checking cluster stack for missing resources

[ℹ] cluster stack has all required resources

[ℹ] building nodegroup stack "eksctl-hybrid-cluster-nodegroup-apigee-ng"

[ℹ] --nodes-min=3 was set automatically for nodegroup apigee-ng

[ℹ] --nodes-max=3 was set automatically for nodegroup apigee-ng

[ℹ] deploying stack "eksctl-hybrid-cluster-nodegroup-apigee-ng"

[ℹ] waiting for CloudFormation stack "eksctl-hybrid-cluster-nodegroup-apigee-ng"

...

[ℹ] no tasks

[ℹ] adding identity "arn:aws:iam::305157081258:role/eksctl-hybrid-cluster-nodegroup-a-NodeInstanceRole-VXJ2VSDBSQGR" to auth ConfigMap

[ℹ] nodegroup "apigee-ng" has 0 node(s)

[ℹ] waiting for at least 3 node(s) to become ready in "apigee-ng"

[ℹ] nodegroup "apigee-ng" has 3 node(s)

[ℹ] node "ip-192-168-120-223.ec2.internal" is ready

[ℹ] node "ip-192-168-92-232.ec2.internal" is ready

[ℹ] node "ip-192-168-93-233.ec2.internal" is ready

[✔] created 1 nodegroup(s) in cluster "hybrid-cluster"

[✔] created 0 managed nodegroup(s) in cluster "hybrid-cluster"

[ℹ] checking security group configuration for all nodegroups

[ℹ] all nodegroups have up-to-date configuration

?. Clone ahr repository

cd ~

git clone https://github.com/apigee/ahr.git

?. Add ahr utilities directory to the PATH

export AHR_HOME=~/ahr

export PATH=$AHR_HOME/bin:$PATH

?. Define install directory location and install environment file configuration

export HYBRID_HOME=~/apigee-hybrid-install

export HYBRID_ENV=$HYBRID_HOME/hybrid-1.4.env

?. Copy the hybrid 1.4 template configuration

mkdir -p $HYBRID_HOME

cp $AHR_HOME/examples/hybrid-sz-s-1.4.sh $HYBRID_ENV

?. Configure GCP project variable.

Define a value for your project. For a Qwiklabs project, you can use the following command:

export PROJECT=$(gcloud projects list --filter='project_id~qwiklabs-gcp' --format='value(project_id)')

?. Check the project value

echo $PROJECT

?. Set the gcloud default project and enable the required APIs

gcloud config set project $PROJECT

ahr-verify-ctl api-enable

For details, see Network load balancing on Amazon EKS: https://docs.aws.amazon.com/eks/latest/userguide/load-balancing.html

Because our EKS cluster is configured with two subnets, we need two EIP allocations, one per subnet.

?. Create two EIP addresses and save their PublicIp values into the RUNTIME_IP1 and RUNTIME_IP2 variables

See also: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/elastic-ip-addresses-eip.html https://docs.aws.amazon.com/cli/latest/reference/ec2/allocate-address.html

export RUNTIME_IP1=$(aws ec2 allocate-address | jq --raw-output .PublicIp)

export RUNTIME_IP2=$(aws ec2 allocate-address | jq --raw-output .PublicIp)

?. OPTIONAL: You can see the details of an allocated address, for example the first one, using the following command:

aws ec2 describe-addresses --public-ips $RUNTIME_IP1

Sample Output:

{
    "Addresses": [
        {
            "PublicIp": "18.205.68.14",
            "AllocationId": "eipalloc-045fb16bc45ed2575",
            "Domain": "vpc",
            "PublicIpv4Pool": "amazon",
            "NetworkBorderGroup": "us-east-1"
        }
    ]
}

?. Edit the $HYBRID_ENV file to set the RUNTIME_IP1 and RUNTIME_IP2 variables to the IP addresses for the Istio Ingress Gateway.

sed -i '/^[ \t]*'"RUNTIME_IP1"'=/{h;s/=.*/='"$RUNTIME_IP1"'/};${x;/^$/{s//'"RUNTIME_IP1"'='"$RUNTIME_IP1"'/;H};x}' $HYBRID_ENV

sed -i '/^[ \t]*'"RUNTIME_IP2"'=/{h;s/=.*/='"$RUNTIME_IP2"'/};${x;/^$/{s//'"RUNTIME_IP2"'='"$RUNTIME_IP2"'/;H};x}' $HYBRID_ENV
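The two sed one-liners implement an update-or-append ("upsert"): if a line starting with RUNTIME_IP1= (or RUNTIME_IP2=) exists, its value is replaced; otherwise the assignment is appended after the last line. A sketch that wraps the same expression in a hypothetical set_env_var function (not part of ahr) so it can be tried on a scratch file. It assumes GNU sed (for -i without a backup suffix) and a value without / or & characters, which would need escaping in the replacement.

```shell
# Hypothetical wrapper (not part of ahr) around the same GNU sed upsert idiom:
#  - on a line starting with KEY=, copy it to the hold space, then rewrite its value;
#  - on the last line, swap in the hold space; if it is still empty
#    (no KEY= line was seen), append "KEY=value" after the last line.
set_env_var() {
  local key="$1" value="$2" file="$3"
  sed -i '/^[ \t]*'"$key"'=/{h;s/=.*/='"$value"'/};${x;/^$/{s//'"$key"'='"$value"'/;H};x}' "$file"
}

# Usage on a scratch copy:
# set_env_var RUNTIME_IP1 "$RUNTIME_IP1" /tmp/hybrid-copy.env
```

On an empty file sed has no last line to act on, so nothing is appended.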

?. To attach the EKS cluster to the GCP Anthos dashboards, we need to create a GKE Hub cluster membership.

# Create service account

gcloud iam service-accounts create anthos-hub --project=$PROJECT

# Add gkehub.connect role

gcloud projects add-iam-policy-binding $PROJECT \

--member="serviceAccount:anthos-hub@$PROJECT.iam.gserviceaccount.com" \

--role="roles/gkehub.connect"

# Create and download json key `$HYBRID_HOME/anthos-hub-$PROJECT.json`

gcloud iam service-accounts keys create $HYBRID_HOME/anthos-hub-$PROJECT.json \

--iam-account=anthos-hub@$PROJECT.iam.gserviceaccount.com --project=$PROJECT

# Define an AWS user name

export AWS_USER=awsstudent

# Define a cluster context

export AWS_CLUSTER_CONTEXT=$AWS_USER@$CLUSTER.$AWS_REGION.eksctl.io

# Register a new membership

gcloud container hub memberships register $CLUSTER \

--context=$AWS_CLUSTER_CONTEXT \

--kubeconfig=$HOME/.kube/config \

--service-account-key-file=$HYBRID_HOME/anthos-hub-$PROJECT.json

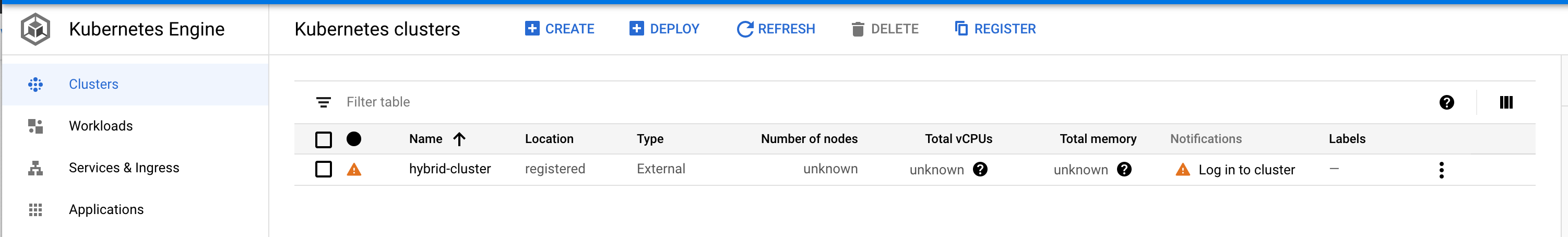

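?. At this point, we can see our cluster in the GKE Kubernetes Engine/Clusters list.

?. To log in to the cluster from the Cloud Console, create a Kubernetes service account and bind it to cluster roles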

kubectl create serviceaccount anthos-user

kubectl create clusterrolebinding aksadminbinding --clusterrole view --serviceaccount default:anthos-user

kubectl create clusterrolebinding aksadminnodereader --clusterrole node-reader --serviceaccount default:anthos-user

kubectl create clusterrolebinding aksclusteradminbinding --clusterrole cluster-admin --serviceaccount default:anthos-user

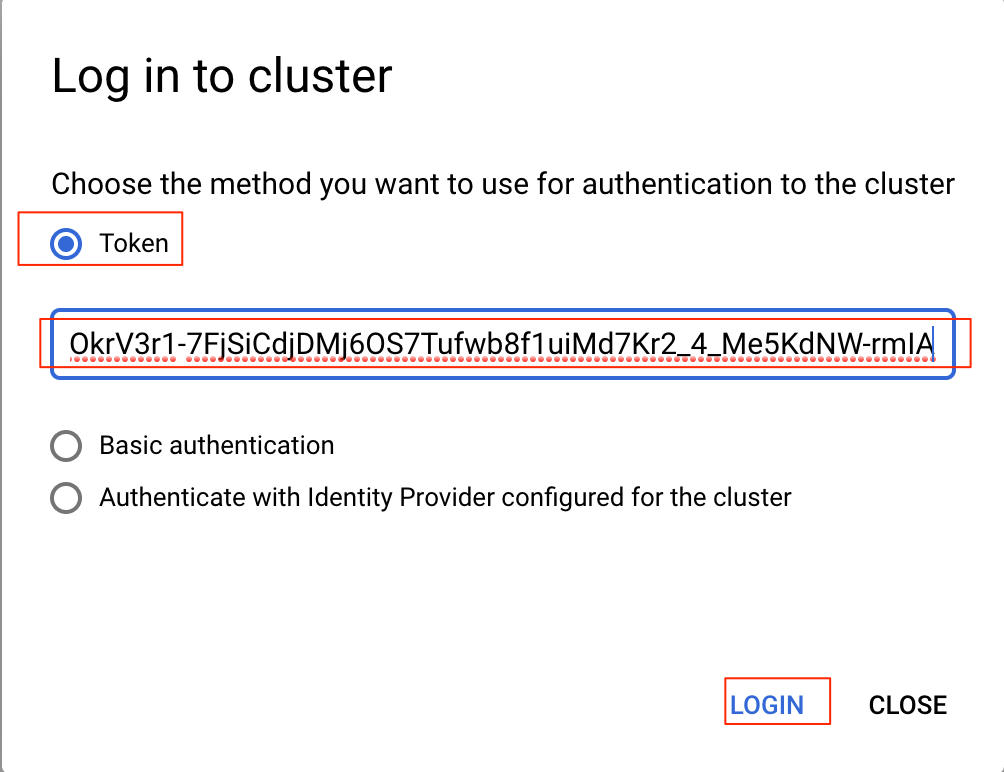

To log in to the EKS cluster, extract the service account token:

CLUSTER_SECRET=$(kubectl get serviceaccount anthos-user -o jsonpath='{$.secrets[0].name}')

kubectl get secret ${CLUSTER_SECRET} -o jsonpath='{$.data.token}' | base64 --decode

?. Select and copy the token value. Make sure there are no extra characters (like end-of-line characters) in the token string.

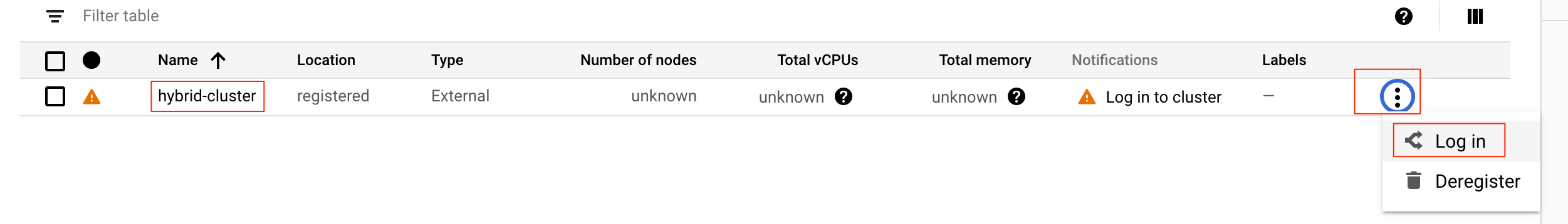

?. In the cluster list, for your $CLUSTER name click at the three-dots action menu and select Log in action.

?. Chose Token method and insert the token value, copied at the previous step

The connectiong is successfully established.

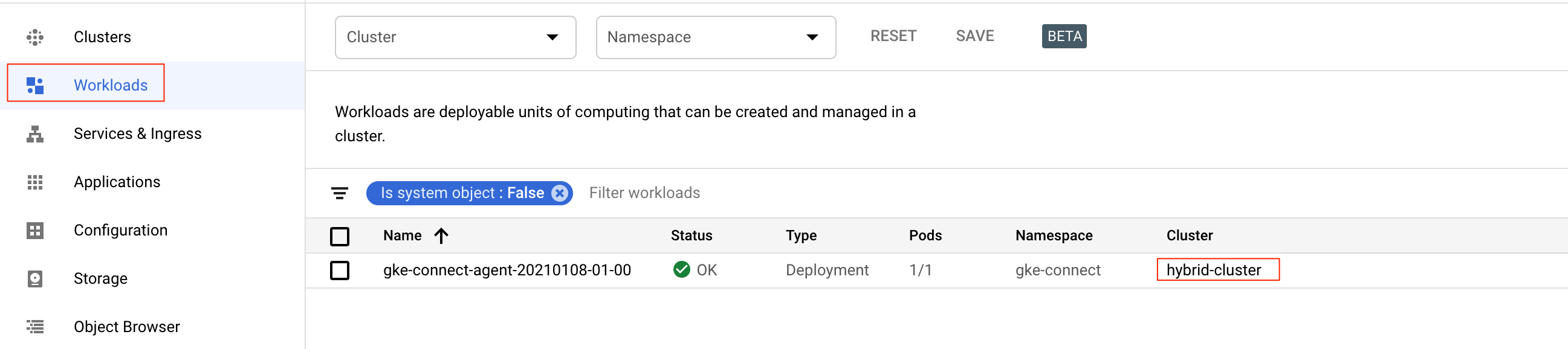

?. To verify connection, select Workloads menu item and you can see non-system containers, installed in the EKS cluster.

?. Load the hybrid configuration variables into the current session

source $HYBRID_ENV

?. To install cert-manager, execute

kubectl apply --validate=false -f $CERT_MANAGER_MANIFEST

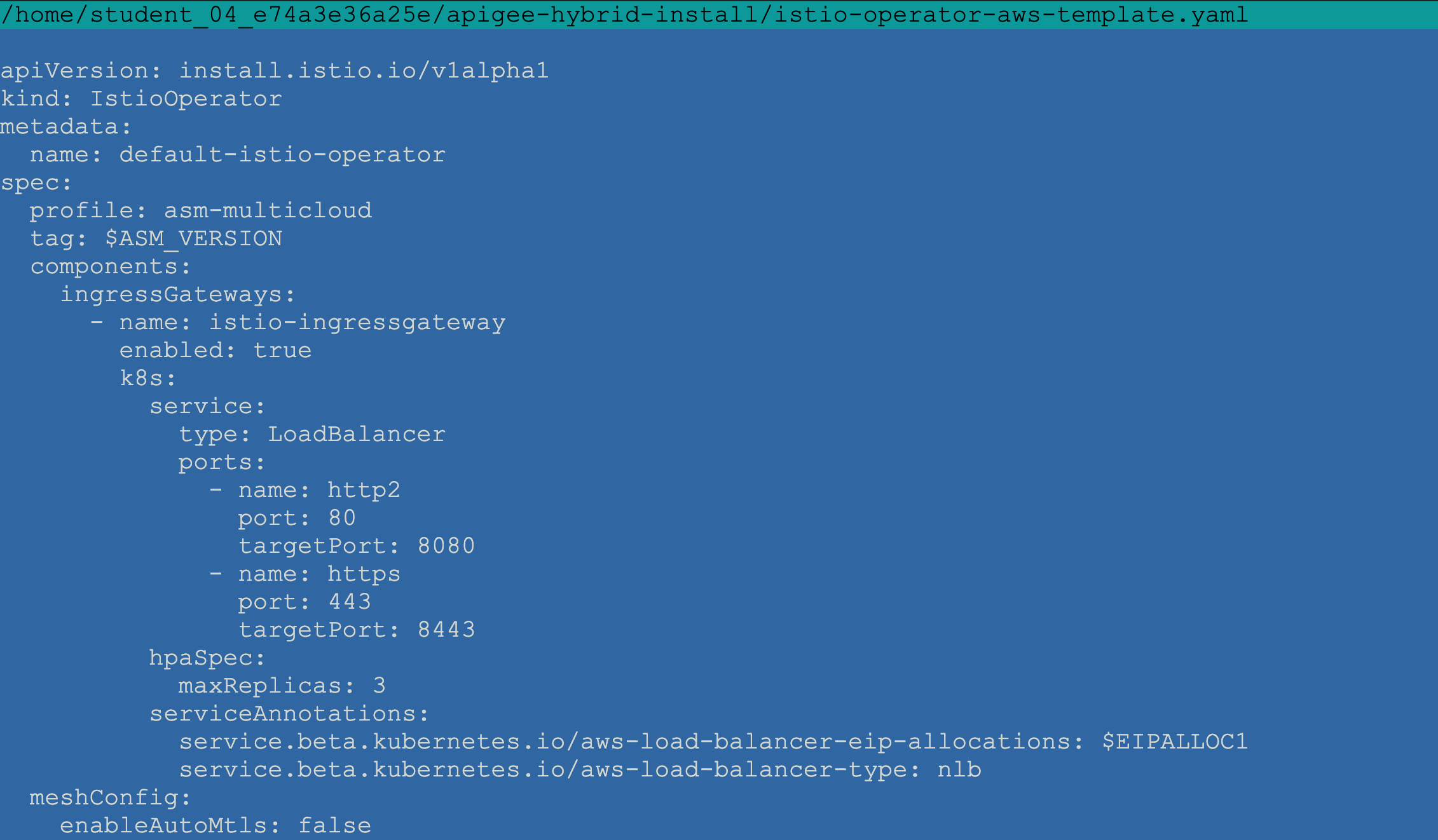

We are going to use IstioOperator manifest to install istio and configure ingress gateway.

Each cloud or on-premises infrastructure usually needs its own custom annotations to configure its load balancer.

The provided template contains the common part of the configuration. We are going to copy this generic template into an istio-operator-aws-template.yaml file and then amend it with AWS-specific annotations.

Finally, we resolve the environment variable references in this template into values and write the result into an istio-operator.yaml file, which we then install using the istioctl install command.

?. Override ASM_PROFILE value in the current session and in the HYBRID_ENV configuration file

export ASM_PROFILE=asm-multicloud

sed -i -E "s/^(export ASM_PROFILE=).*/\1$ASM_PROFILE/g" $HYBRID_ENV

?. To correctly generate istio-operator.yaml file, we need to populate environment variables that the template uses.

For the asm-multicloud profile, these are ASM_VERSION and RUNTIME_IP, which we have already defined in the current session. We also need a convenience variable, ASM_RELEASE, that holds the major.minor release numbers.

export ASM_RELEASE=$(echo "$ASM_VERSION"|awk '{sub(/\.[0-9]+-asm\.[0-9]+/,"");print}')
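To see what the awk expression does, here it is applied to a sample value. The version string below is a hypothetical example, not the one defined in $HYBRID_ENV; a pure-bash parameter expansion that gives the same result for this shape of version string is shown for comparison.

```shell
# Hypothetical example value; the real one comes from $HYBRID_ENV.
ASM_VERSION=1.6.11-asm.1

# Same awk call as above: delete the first ".<patch>-asm.<build>" match.
ASM_RELEASE=$(echo "$ASM_VERSION" | awk '{sub(/\.[0-9]+-asm\.[0-9]+/,""); print}')

# Pure-bash alternative: strip the shortest suffix matching the glob ".*-asm.*".
ASM_RELEASE_BASH=${ASM_VERSION%.*-asm.*}

echo "$ASM_RELEASE $ASM_RELEASE_BASH"   # prints: 1.6 1.6
```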

?. Copy the istio-operator template into aws template yaml file with a name istio-operator-aws-template.yaml

cp $AHR_HOME/templates/istio-operator-$ASM_RELEASE-$ASM_PROFILE.yaml $HYBRID_HOME/istio-operator-aws-template.yaml

?. To add the required AWS Load Balancer annotations, we can either edit the aws template manually or use the provided yq commands to edit it automatically.

For manual edit:

vi $HYBRID_HOME/istio-operator-aws-template.yaml

Then at the location

spec.components.ingressGateways[name=istio-ingressgateway].k8s.serviceAnnotations

Add service annotations:

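serviceAnnotations:
  service.beta.kubernetes.io/aws-load-balancer-eip-allocations: eipalloc-0c8e3967c48f72dfc
  service.beta.kubernetes.io/aws-load-balancer-type: nlb

The eip-allocations value is a comma-separated list with one allocation ID per subnet, so for this two-subnet cluster it contains two IDs.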

Also, for AWS/EKS, remove the loadBalancerIP stanza.

To edit automatically:

?. The ASM template manipulations require the yq utility (the commands below use yq v3 syntax). If you don't have it already, install it using your preferred method, or use the opinionated, minimally intrusive ahr method:

ahr-verify-ctl prereqs-install-yq

?. Populate the EIPALLOC1, EIPALLOC2, and EIPALLOCATIONS variables with the EIP allocation IDs of the AWS static IP addresses

export EIPALLOC1=$(aws ec2 describe-addresses --public-ips $RUNTIME_IP1 | jq --raw-output '.Addresses[0].AllocationId')

export EIPALLOC2=$(aws ec2 describe-addresses --public-ips $RUNTIME_IP2 | jq --raw-output '.Addresses[0].AllocationId')

export EIPALLOCATIONS="$EIPALLOC1,$EIPALLOC2"

?. Add the serviceAnnotations fragment

yq merge -i $HYBRID_HOME/istio-operator-aws-template.yaml - <<"EOF"
spec:
  components:
    ingressGateways:
      - name: istio-ingressgateway
        k8s:
          serviceAnnotations:
            service.beta.kubernetes.io/aws-load-balancer-eip-allocations: $EIPALLOCATIONS
            service.beta.kubernetes.io/aws-load-balancer-type: nlb
EOF
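The heredocs in this manual behave differently on purpose: aws-ng.yaml is written with an unquoted <<EOF, so $CLUSTER and $AWS_REGION are expanded immediately, while the yq fragment uses <<"EOF", so $EIPALLOCATIONS stays in the template literally and is resolved later by the ahr-cluster-ctl template step. A small self-contained illustration with hypothetical allocation IDs:

```shell
EIPALLOCATIONS="eipalloc-aaa,eipalloc-bbb"   # hypothetical values

# Unquoted delimiter: the shell expands $EIPALLOCATIONS while reading the heredoc.
expanded=$(cat <<EOF
eip-allocations: $EIPALLOCATIONS
EOF
)

# Quoted delimiter: the text is taken literally, variable reference included.
literal=$(cat <<"EOF"
eip-allocations: $EIPALLOCATIONS
EOF
)

echo "$expanded"   # eip-allocations: eipalloc-aaa,eipalloc-bbb
echo "$literal"    # eip-allocations: $EIPALLOCATIONS
```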

?. For AWS LB configuration, remove .loadBalancerIP line

yq delete -i $HYBRID_HOME/istio-operator-aws-template.yaml '**.k8s.service.loadBalancerIP'

?. Verify the final state of the istio operator template

cat $HYBRID_HOME/istio-operator-aws-template.yaml

?. Generate istio operator manifest by resolving the variables

ahr-cluster-ctl template $HYBRID_HOME/istio-operator-aws-template.yaml > $ASM_CONFIG

?. Get the ASM installation and add its bin directory to the PATH

source <(ahr-cluster-ctl asm-get $ASM_VERSION)

?. Install Istio

istioctl install -f $ASM_CONFIG

Output:

! addonComponents.grafana.enabled is deprecated; use the samples/addons/ deployments instead

! addonComponents.kiali.enabled is deprecated; use the samples/addons/ deployments instead

! addonComponents.prometheus.enabled is deprecated; use the samples/addons/ deployments instead

✔ Istio core installed

✔ Istiod installed

✔ Ingress gateways installed

✔ Addons installed

✔ Installation complete

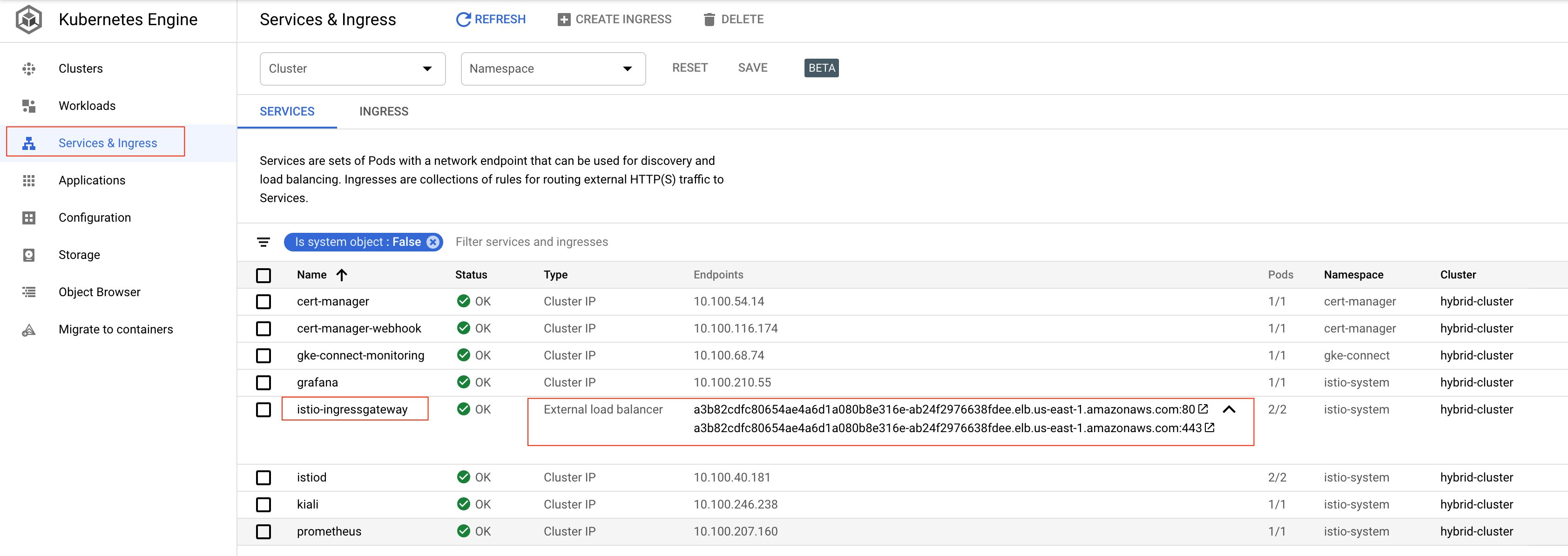

?. Verify the Istio Ingress Gateway external load balancer configuration at Kubernetes Engine/Services & Ingress

?. Get Hybrid distribution code

ahr-runtime-ctl get-apigeectl

?. Define APIGEECTL_HOME and add it to the PATH

export APIGEECTL_HOME=$HYBRID_HOME/$(tar tf $HYBRID_HOME/$APIGEECTL_TARBALL | grep VERSION.txt | cut -d "/" -f 1)

export PATH=$APIGEECTL_HOME:$PATH
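The APIGEECTL_HOME command derives the extracted directory name from the tarball listing. A self-contained sketch of the same pipeline against a throwaway archive; the directory name and version are hypothetical, not the real apigeectl release name.

```shell
# Build a scratch archive with the same shape as the apigeectl tarball:
# one top-level directory that contains VERSION.txt (names are hypothetical).
work=$(mktemp -d)
mkdir -p "$work/apigeectl_1.4.0-example_linux_64"
echo "1.4.0" > "$work/apigeectl_1.4.0-example_linux_64/VERSION.txt"
tar cf "$work/apigeectl.tar" -C "$work" apigeectl_1.4.0-example_linux_64

# Same pipeline as above: list entries, keep the VERSION.txt path,
# and take the first path component, i.e. the top-level directory.
top_dir=$(tar tf "$work/apigeectl.tar" | grep VERSION.txt | cut -d "/" -f 1)
echo "$top_dir"   # apigeectl_1.4.0-example_linux_64
```

The pipeline assumes exactly one VERSION.txt in the archive; with several matches, top_dir would contain several lines.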

?. Create Organization and define Analytics region

ahr-runtime-ctl org-create $ORG --ax-region $AX_REGION

?. Create Environment $ENV

ahr-runtime-ctl env-create $ENV

?. Define Environment Group and Host Name

ahr-runtime-ctl env-group-create $ENV_GROUP $RUNTIME_HOST_ALIAS

?. Assign environment $ENV to the environment Group $ENV_GROUP

ahr-runtime-ctl env-group-assign $ORG $ENV_GROUP $ENV

?. Create service accounts for all Apigee components and save their keys in the $SA_DIR directory

ahr-sa-ctl create-sa all

ahr-sa-ctl create-key all

?. Configure the synchronizer and apigeeconnect components

ahr-runtime-ctl setsync $SYNCHRONIZER_SA_ID

?. Verify Hybrid Control Plane Configuration

ahr-runtime-ctl org-config

?. Observe:

- check that the organization is hybrid-enabled

- check that apigee connect is enabled

- check that the sync configuration is correct

?. Create Key and Self-signed Certificate for Istio Ingress Gateway

ahr-verify-ctl cert-create-ssc $RUNTIME_SSL_CERT $RUNTIME_SSL_KEY $RUNTIME_HOST_ALIAS

?. Inspect certificate contents

openssl x509 -in $RUNTIME_SSL_CERT -text -noout

?. Check that the certificate is valid as of today

ahr-verify-ctl cert-is-valid $RUNTIME_SSL_CERT
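For reference, a rough openssl equivalent of creating a self-signed key and certificate and checking that it is currently valid. This is an assumption about what the ahr helpers do, not their actual implementation; the host name and file names are hypothetical, and this sketch sets only the CN (no subjectAltName).

```shell
# Hypothetical host and scratch directory.
host=api.example.com
dir=$(mktemp -d)

# Create an unencrypted 2048-bit RSA key and a self-signed certificate valid for 30 days.
openssl req -x509 -newkey rsa:2048 -nodes \
  -keyout "$dir/key.pem" -out "$dir/cert.pem" \
  -days 30 -subj "/CN=$host" 2>/dev/null

# -checkend N exits 0 if the certificate does not expire within the next N seconds.
if openssl x509 -in "$dir/cert.pem" -noout -checkend 0 >/dev/null; then
  echo "certificate is valid now"
fi
```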

?. Generate Runtime Configuration yaml file

ahr-runtime-ctl template $AHR_HOME/templates/overrides-small-1.4-template.yaml > $RUNTIME_CONFIG

?. Inspect generated runtime configuration file

vi $RUNTIME_CONFIG


?. Install the runtime auxiliary components

ahr-runtime-ctl apigeectl init -f $RUNTIME_CONFIG

?. Wait till ready

ahr-runtime-ctl apigeectl wait-for-ready -f $RUNTIME_CONFIG

?. Install Hybrid runtime components

ahr-runtime-ctl apigeectl apply -f $RUNTIME_CONFIG

?. Wait till ready

ahr-runtime-ctl apigeectl wait-for-ready -f $RUNTIME_CONFIG

?. Deploy the test proxy

$AHR_HOME/proxies/deploy.sh

To send a test request to the ping proxy, we can use either of the two IP addresses provisioned by AWS via the EIP allocations. Let's pick the first one.

curl --cacert $RUNTIME_SSL_CERT https://$RUNTIME_HOST_ALIAS/ping -v --resolve "$RUNTIME_HOST_ALIAS:443:$RUNTIME_IP1" --http1.1

?. Turn TRACE on, resend the test request, and observe the TRACE session results