4. Autoencoder and related variants - ZYL-Harry/Machine_Learning_study GitHub Wiki

Generative model

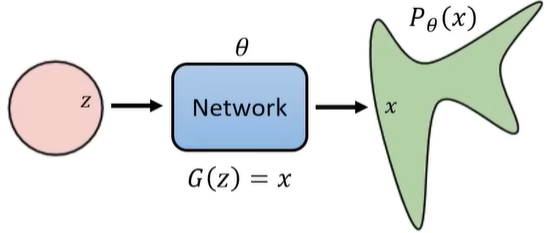

A generative model is a kind of model used in self-supervised learning. It is based on the idea of generating a sample x from a given feature (latent variable) z with a network G, as illustrated in the figure.

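In theory, the objective is to maximize the likelihood P(x) of the training data, where P(x) is obtained by integrating over the latent variable z:

$$P(x) = \int_z P(z)\,P(x \mid z)\,dz$$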
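To make the marginal likelihood P(x) = ∫ P(z) P(x|z) dz concrete, here is a minimal sketch that estimates it by Monte Carlo (sample z from P(z), average P(x|z)). It assumes a hypothetical 1-D toy model with z ~ N(0, 1) and x | z ~ N(z, σ²), i.e. G(z) = z, because for that model P(x) has a closed form, N(x; 0, 1 + σ²), to compare against.

```python
import math
import random

def normal_pdf(x, mean, std):
    """Density of a 1-D Gaussian N(mean, std^2) evaluated at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def estimate_p_x(x, sigma=0.5, n_samples=100_000, seed=0):
    """Monte Carlo estimate of P(x) = integral of P(z) P(x|z) dz.

    Hypothetical toy model: z ~ N(0, 1), x | z ~ N(z, sigma^2), i.e. G(z) = z.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        z = rng.gauss(0.0, 1.0)            # z ~ P(z)
        total += normal_pdf(x, z, sigma)   # accumulate P(x|z)
    return total / n_samples

x = 0.5
exact = normal_pdf(x, 0.0, math.sqrt(1 + 0.5 ** 2))  # closed form for this toy model only
approx = estimate_p_x(x)
print(f"exact P(x) = {exact:.4f}, Monte Carlo estimate = {approx:.4f}")
```

For a real decoder G there is no closed form and this naive estimate needs far too many samples in high dimensions, which is part of the motivation for the lower bound derived below.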

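In practice, the basic generative model is trained with a reconstruction loss, namely the mean squared error (MSE) between x and the generated sample G(z):

$$\mathcal{L}_{\text{rec}} = \frac{1}{D}\sum_{d=1}^{D}\big(x_d - G(z)_d\big)^2$$

where D is the dimension of x.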
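A minimal sketch of the MSE reconstruction loss for a single sample, using plain Python lists; the function name is hypothetical and deep-learning libraries provide their own batched versions.

```python
def mse_reconstruction_loss(x, x_hat):
    """Mean squared error between an input x and its reconstruction x_hat = G(z)."""
    if len(x) != len(x_hat):
        raise ValueError("x and x_hat must have the same length")
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

x = [1.0, 2.0, 3.0]
x_hat = [1.0, 2.5, 2.0]
print(mse_reconstruction_loss(x, x_hat))  # (0 + 0.25 + 1.0) / 3, about 0.4167
```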

Autoencoder (AE)

An autoencoder (AE) effectively treats P(x|z) as a binary selection:

$$P(x \mid z) = \begin{cases} 1, & G(z) = x \\ 0, & G(z) \neq x \end{cases}$$

This causes a problem: P(x|z) is 0 for almost every z, even when G(z) differs from x by only a tiny amount (e.g., a single pixel).
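The all-or-nothing behaviour can be seen with a tiny example. This is a minimal sketch: the 4-"pixel" image and the two candidate outputs of G(z) are made-up values for illustration.

```python
def binary_likelihood(x, g_z):
    """P(x|z) as an all-or-nothing selection: 1 if G(z) reproduces x exactly, else 0."""
    return 1.0 if list(x) == list(g_z) else 0.0

x = [0.0, 0.5, 1.0, 0.25]                # hypothetical 4-pixel image
g_exact = [0.0, 0.5, 1.0, 0.25]          # G(z) matching x exactly
g_one_pixel_off = [0.0, 0.5, 1.0, 0.26]  # G(z) differing in a single pixel

print(binary_likelihood(x, g_exact))          # 1.0
print(binary_likelihood(x, g_one_pixel_off))  # 0.0
```

The nearly perfect reconstruction gets exactly the same score as a completely wrong one, so this P(x|z) gives no signal about how close G(z) is to x.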

In an autoencoder, the loss function is simply the reconstruction loss.
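As a toy illustration of training with nothing but the reconstruction loss, the sketch below fits a linear autoencoder with a one-dimensional bottleneck (R² → R¹ → R²) to 2-D points lying on a line. The sizes, learning rate, number of epochs and data are hypothetical choices; real autoencoders use nonlinear networks and an autodiff library instead of hand-written gradients.

```python
import random

def train_linear_autoencoder(data, lr=0.02, epochs=2000, seed=0):
    """Train a toy linear autoencoder using only the MSE reconstruction loss.

    Encoder: z = w_enc . x           (hypothetical 1-D bottleneck)
    Decoder: x_hat = G(z) = w_dec * z
    Returns the full-batch loss recorded at every epoch.
    """
    rng = random.Random(seed)
    w_enc = [rng.uniform(-0.5, 0.5) for _ in range(2)]
    w_dec = [rng.uniform(-0.5, 0.5) for _ in range(2)]
    n = len(data)
    history = []
    for _ in range(epochs):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        loss = 0.0
        for x in data:
            z = w_enc[0] * x[0] + w_enc[1] * x[1]         # encode
            x_hat = [w_dec[0] * z, w_dec[1] * z]           # decode
            err = [x_hat[j] - x[j] for j in range(2)]
            loss += (err[0] ** 2 + err[1] ** 2) / (2 * n)  # MSE averaged over dims and samples
            # Backpropagation by hand: dL/dx_hat_j = err_j / n
            dz = (err[0] * w_dec[0] + err[1] * w_dec[1]) / n
            for j in range(2):
                g_dec[j] += err[j] * z / n
                g_enc[j] += dz * x[j]
        for j in range(2):                                 # full-batch gradient descent step
            w_enc[j] -= lr * g_enc[j]
            w_dec[j] -= lr * g_dec[j]
        history.append(loss)
    return history

data = [(t, 2 * t) for t in (-1.0, -0.5, 0.5, 1.0)]  # points on the line y = 2x
history = train_linear_autoencoder(data)
print(f"initial loss {history[0]:.4f}, final loss {history[-1]:.2e}")
```

Because the data lie on a line, a 1-D code z is enough to reconstruct them, so the reconstruction loss can be driven close to zero.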

Variational Autoencoder (VAE)

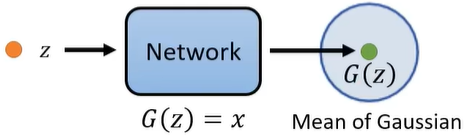

To compensate for this sensitivity, the Variational Autoencoder (VAE) uses a Gaussian distribution centered at G(z) to describe the range of x that G(z) can account for, as illustrated in the figure.

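Then P(x|z) is a Gaussian with mean G(z) (written here, as an assumption, with an isotropic variance σ²):

$$P(x \mid z) = \mathcal{N}\big(x;\ G(z),\ \sigma^2 I\big) \propto \exp\!\left(-\frac{\lVert x - G(z)\rVert^2}{2\sigma^2}\right)$$

so P(x|z) stays positive even when G(z) does not match x exactly.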
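A minimal sketch of the Gaussian log-likelihood log N(x; G(z), σ²I), assuming a fixed isotropic σ (σ = 0.1 is an arbitrary illustrative value) and made-up pixel values. It shows that a one-pixel mismatch now only slightly lowers the likelihood, and that with fixed σ the log-likelihood is the negative squared error up to a scale and a constant.

```python
import math

def gaussian_log_likelihood(x, g_z, sigma=0.1):
    """log N(x; G(z), sigma^2 I) for a fixed, isotropic sigma (an assumption)."""
    d = len(x)
    sq_err = sum((xi - gi) ** 2 for xi, gi in zip(x, g_z))
    return -sq_err / (2 * sigma ** 2) - d * math.log(sigma * math.sqrt(2 * math.pi))

x = [0.0, 0.5, 1.0, 0.25]                # hypothetical 4-pixel image
g_exact = [0.0, 0.5, 1.0, 0.25]
g_one_pixel_off = [0.0, 0.5, 1.0, 0.26]
g_far = [1.0, 0.0, 0.0, 1.0]

ll_exact = gaussian_log_likelihood(x, g_exact)
ll_off = gaussian_log_likelihood(x, g_one_pixel_off)
ll_far = gaussian_log_likelihood(x, g_far)
print(f"probability ratio off/exact: {math.exp(ll_off - ll_exact):.4f}")  # prints 0.9950
```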

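With P(x|z) modeled as a Gaussian, the objective of maximizing log P(x) can be worked out. Before the calculation, log P(x) is multiplied by the integral of an arbitrary distribution q(z|x) over z, which is equal to 1. The whole derivation is:

$$
\begin{aligned}
\log P(x) &= \int_z q(z \mid x)\,\log P(x)\,dz \\
&= \int_z q(z \mid x)\,\log \frac{P(z, x)}{P(z \mid x)}\,dz \\
&= \int_z q(z \mid x)\,\log \left(\frac{P(z, x)}{q(z \mid x)} \cdot \frac{q(z \mid x)}{P(z \mid x)}\right) dz \\
&= \int_z q(z \mid x)\,\log \frac{P(z, x)}{q(z \mid x)}\,dz + \int_z q(z \mid x)\,\log \frac{q(z \mid x)}{P(z \mid x)}\,dz \\
&= \int_z q(z \mid x)\,\log \frac{P(z, x)}{q(z \mid x)}\,dz + \mathrm{KL}\big(q(z \mid x)\,\|\,P(z \mid x)\big)
\end{aligned}
$$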

Note that log P(x) can be moved inside the integral because it does not depend on z.
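The decomposition log P(x) = ∫ q(z|x) log(P(z,x)/q(z|x)) dz + KL(q(z|x) || P(z|x)) can be checked numerically. This sketch assumes a discrete latent z with three values, so the integrals become sums; the prior, likelihood and q values are made-up numbers chosen only for illustration.

```python
import math

def elbo_identity_check(prior, likelihood, q):
    """Return (log P(x), first term, KL(q(z|x) || P(z|x))) for a discrete latent z.

    prior[k]:      P(z=k)
    likelihood[k]: P(x|z=k) for one fixed observation x
    q[k]:          an arbitrary distribution q(z=k|x); all entries assumed positive
    """
    joint = [p * l for p, l in zip(prior, likelihood)]  # P(z=k, x)
    p_x = sum(joint)                                    # P(x) = sum_z P(z) P(x|z)
    posterior = [j / p_x for j in joint]                # P(z=k|x)
    first_term = sum(qk * math.log(jk / qk) for qk, jk in zip(q, joint))
    kl = sum(qk * math.log(qk / pk) for qk, pk in zip(q, posterior))
    return math.log(p_x), first_term, kl

log_px, first_term, kl = elbo_identity_check(
    prior=[0.5, 0.3, 0.2], likelihood=[0.1, 0.6, 0.3], q=[0.2, 0.5, 0.3]
)
print(f"log P(x) = {log_px:.6f}, first term + KL = {first_term + kl:.6f}, KL = {kl:.6f}")
```

Whatever q is chosen, the two sides agree and the KL term is non-negative; it becomes 0 only when q(z|x) equals the true posterior P(z|x).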

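It can be observed that log P(x) consists of two parts, and the second one is the KL divergence (Kullback-Leibler divergence) between q(z|x) and P(z|x), which is always non-negative. Therefore, the objective can be changed to maximizing the first part:

$$\log P(x) \geq \int_z q(z \mid x)\,\log \frac{P(x \mid z)\,P(z)}{q(z \mid x)}\,dz = L_b$$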

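This lower bound of log P(x) is denoted L_b, and it can be further decomposed as:

$$
\begin{aligned}
L_b &= \int_z q(z \mid x)\,\log \frac{P(x \mid z)\,P(z)}{q(z \mid x)}\,dz \\
&= \int_z q(z \mid x)\,\log P(x \mid z)\,dz + \int_z q(z \mid x)\,\log \frac{P(z)}{q(z \mid x)}\,dz \\
&= \int_z q(z \mid x)\,\log P(x \mid z)\,dz - \mathrm{KL}\big(q(z \mid x)\,\|\,P(z)\big)
\end{aligned}
$$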

In the first part, q(z|x) is computed by an encoder and P(x|z) by a decoder, so maximizing it amounts to reconstructing x from z. The second part, KL(q(z|x) || P(z)), can be used as a cost function to be minimized, which pulls q(z|x) toward the prior P(z).
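When q(z|x) is a diagonal Gaussian N(μ, diag(σ²)) produced by the encoder and P(z) = N(0, I), the KL cost has a well-known closed form, 0.5 · Σ (μ² + σ² − log σ² − 1). A minimal sketch, assuming the encoder outputs μ and log σ² (the function name and this parameterization are illustrative choices):

```python
import math

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) in closed form.

    Per latent dimension: 0.5 * (mu^2 + sigma^2 - log(sigma^2) - 1).
    """
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))

print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0: q(z|x) already equals the prior
print(kl_to_standard_normal([1.0], [0.0]))            # 0.5
```

Predicting log σ² rather than σ keeps the variance positive without any constraint on the encoder output.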

In practice, P(z) is chosen as N(0, I), a standard multivariate Gaussian prior, which encourages the learned latent factors to be as independent as possible. The block diagram is shown in the following figure:
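Putting the pieces together, the sketch below computes a one-sample estimate of the negative lower bound, −L_b = −E_q[log P(x|z)] + KL(q(z|x) || N(0, I)), for a single input. It assumes a diagonal Gaussian q(z|x), a Gaussian decoder with fixed σ_x = 1, and draws z as μ + σ·ε with ε ~ N(0, I), a common way to sample from q(z|x) (the so-called reparameterization trick). The toy encoder and decoder are hypothetical stand-ins for neural networks.

```python
import math
import random

def negative_elbo(x, encode, decode, sigma_x=1.0, rng=None):
    """One-sample estimate of -L_b and the KL term, under the assumptions stated above.

    `encode(x)` returns (mu, log_var) of q(z|x); `decode(z)` returns G(z),
    the mean of P(x|z) = N(G(z), sigma_x^2 I).
    """
    if rng is None:
        rng = random.Random(0)
    mu, log_var = encode(x)
    # Sample z ~ q(z|x) as z = mu + sigma * eps, eps ~ N(0, I)
    z = [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]
    x_hat = decode(z)
    sq_err = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    log_px_given_z = -sq_err / (2 * sigma_x ** 2) - len(x) * math.log(sigma_x * math.sqrt(2 * math.pi))
    kl = 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))
    return -log_px_given_z + kl, kl

# Hypothetical toy encoder/decoder: 2-D input, 1-D latent
def toy_encode(x):
    return [0.5 * (x[0] + x[1])], [-1.0]

def toy_decode(z):
    return [z[0], z[0]]

loss, kl = negative_elbo([0.8, 1.2], toy_encode, toy_decode)
print(f"negative ELBO (one sample) = {loss:.4f}, KL part = {kl:.4f}")
```

Writing the sample as a deterministic function of μ, σ and independent noise ε lets gradients flow back into the encoder when this is implemented in an autodiff framework.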

The transformation shown in the right part of the figure is because