1. InfoNCE Loss - ZYL-Harry/Machine_Learning_study GitHub Wiki

Cross-entropy Loss

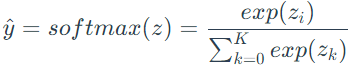

Softmax converts every element of a vector into a value in the range [0, 1], with all values summing to 1, so the outputs can be interpreted as probabilities:
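In standard notation (the symbols $z$ for the input vector and $C$ for its length are chosen here for illustration, not taken from the original), the $i$-th softmax output is usually written as:

$$
\mathrm{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{C} e^{z_j}}
$$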

Once the prediction has been transformed with softmax, the cross-entropy loss is computed against a one-hot label:
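A minimal sketch of these two steps, using only the Python standard library. The function names and the example logits are hypothetical, chosen for illustration. With a one-hot label, the general sum over classes reduces to the negative log-probability of the true class.

```python
import math

def softmax(logits):
    """Map a list of raw scores to probabilities that sum to 1."""
    # Subtracting the max does not change the result but avoids overflow in exp().
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Cross-entropy against a one-hot label whose single 1 sits at target_index."""
    probs = softmax(logits)
    # In -sum(y_i * log(p_i)), only the true-class term survives for a one-hot y.
    return -math.log(probs[target_index])

logits = [2.0, 1.0, 0.1]  # hypothetical model outputs for 3 classes
probs = softmax(logits)
loss = cross_entropy(logits, target_index=0)
```

The loss shrinks as the logit of the true class grows relative to the others, and it needs one logit per class, which is what becomes expensive when the number of classes is very large.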

In contrastive learning, each negative sample is treated as its own class, so the number of classes, and with it the cost of computing the softmax, becomes too large. One remedy is NCE (noise contrastive estimation) loss, which groups positive and negative samples into only two classes.

NCE Loss

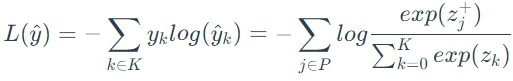

NCE (noise contrastive estimation) loss reduces the problem to two classes: data samples and noise samples. The computational cost is still large, however, if every negative sample is used. One option is to draw a subset of the negative samples and use it to estimate the noise distribution:
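The two-class idea can be sketched as a binary logistic loss over one data sample and a handful of sampled noise samples. This is a simplified illustration, not the exact formula from the original page: the full NCE objective also subtracts a log(k·q(x)) correction from each score, where q is the noise distribution, and the sketch omits it, which gives the negative-sampling form. The function names and scores are hypothetical.

```python
import math

def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    # log(sigmoid(x)) = -log(1 + exp(-x)); branch so exp() never overflows.
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))

def binary_contrastive_loss(pos_score, neg_scores):
    """Data-vs-noise loss: one positive score, a list of sampled negative scores."""
    # The true sample should be classified as "data".
    loss = -log_sigmoid(pos_score)
    # Each sampled negative should be classified as "noise":
    # log(1 - sigmoid(s)) equals log(sigmoid(-s)).
    for s in neg_scores:
        loss -= log_sigmoid(-s)
    return loss

# Hypothetical scores: a well-separated case and an uninformative one.
good = binary_contrastive_loss(5.0, [-5.0, -5.0])
flat = binary_contrastive_loss(0.0, [0.0, 0.0])
```

The cost now scales with the number of sampled negatives rather than with the total number of classes.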

Details can be found in Notes on Noise Contrastive Estimation and Negative Sampling.

InfoNCE Loss

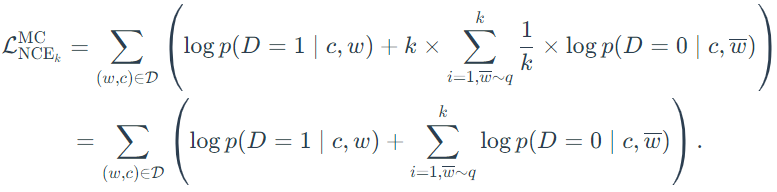

However, training with only two classes does not work well, because the noise samples do not actually belong to a single class, and lumping them together makes the model less able to discriminate among them. InfoNCE loss was therefore proposed to treat the task as a multi-class problem again, using k randomly sampled negative samples. InfoNCE loss is calculated as:
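A commonly used form is given below. The notation is assumed here rather than taken from the original: $q$ is the selected (query) sample, $x^{+}$ its positive sample, $x_i^{-}$ the $k$ sampled negatives, $s(\cdot,\cdot)$ a similarity function such as the dot product, and $\tau$ a temperature hyperparameter.

$$
\mathcal{L}_{\text{InfoNCE}} = -\log \frac{\exp\left(s(q, x^{+}) / \tau\right)}{\exp\left(s(q, x^{+}) / \tau\right) + \sum_{i=1}^{k} \exp\left(s(q, x_i^{-}) / \tau\right)}
$$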

The difference between InfoNCE loss and cross-entropy loss lies in the logits: in InfoNCE they are the similarities between the selected sample and the positive or negative samples, while in cross-entropy they are the predictions computed directly by a model.
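This relationship can be made concrete with a small sketch: InfoNCE is computed as an ordinary softmax cross-entropy whose logits are similarity scores, with the positive sample placed at index 0. The dot-product similarity, the `temperature` parameter, and all names and vectors below are assumptions for illustration.

```python
import math

def dot(u, v):
    """Dot-product similarity between two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def info_nce(query, positive, negatives, temperature=0.1):
    """InfoNCE as cross-entropy over similarity logits (positive at index 0)."""
    # Logits come from similarities, not from a classifier head.
    logits = [dot(query, positive) / temperature]
    logits += [dot(query, n) / temperature for n in negatives]
    # Cross-entropy with target index 0: log-sum-exp of all logits minus the positive logit.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]

query = [1.0, 0.0]                      # hypothetical embedding of the selected sample
positive = [1.0, 0.0]                   # embedding of its positive sample
negatives = [[0.0, 1.0], [-1.0, 0.0]]   # k = 2 sampled negatives
loss = info_nce(query, positive, negatives, temperature=1.0)
```

Swapping the positive with one of the negatives raises the loss, since the numerator then holds a lower similarity than the terms it competes against.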