TRAIN model_params - WHOIGit/ifcb_classifier GitHub Wiki

Model Architecture Selection

The architecture of the model to be trained is set by the MODEL positional argument. Every listed model is automatically modified to produce the required number of output classes for the training dataset.

neuston_net.py SRC MODEL TRAIN_ID

The following model architectures are available:

- inception_v3

- alexnet

- squeezenet

- vgg: vgg11 vgg13 vgg16 vgg19

- resnet: resnet18 resnet34 resnet50 resnet101 resnet152

- densenet: densenet121 densenet161 densenet169 densenet201

- inception_v4 (recently added as a custom model; not built into PyTorch, so there is no pretrained weights option)

With one exception, these models (including access to pretrained weights) are available out of the box through the PyTorch framework. The pretrained weights come from training on the ImageNet dataset.

Opinion: We recommend inception_v3

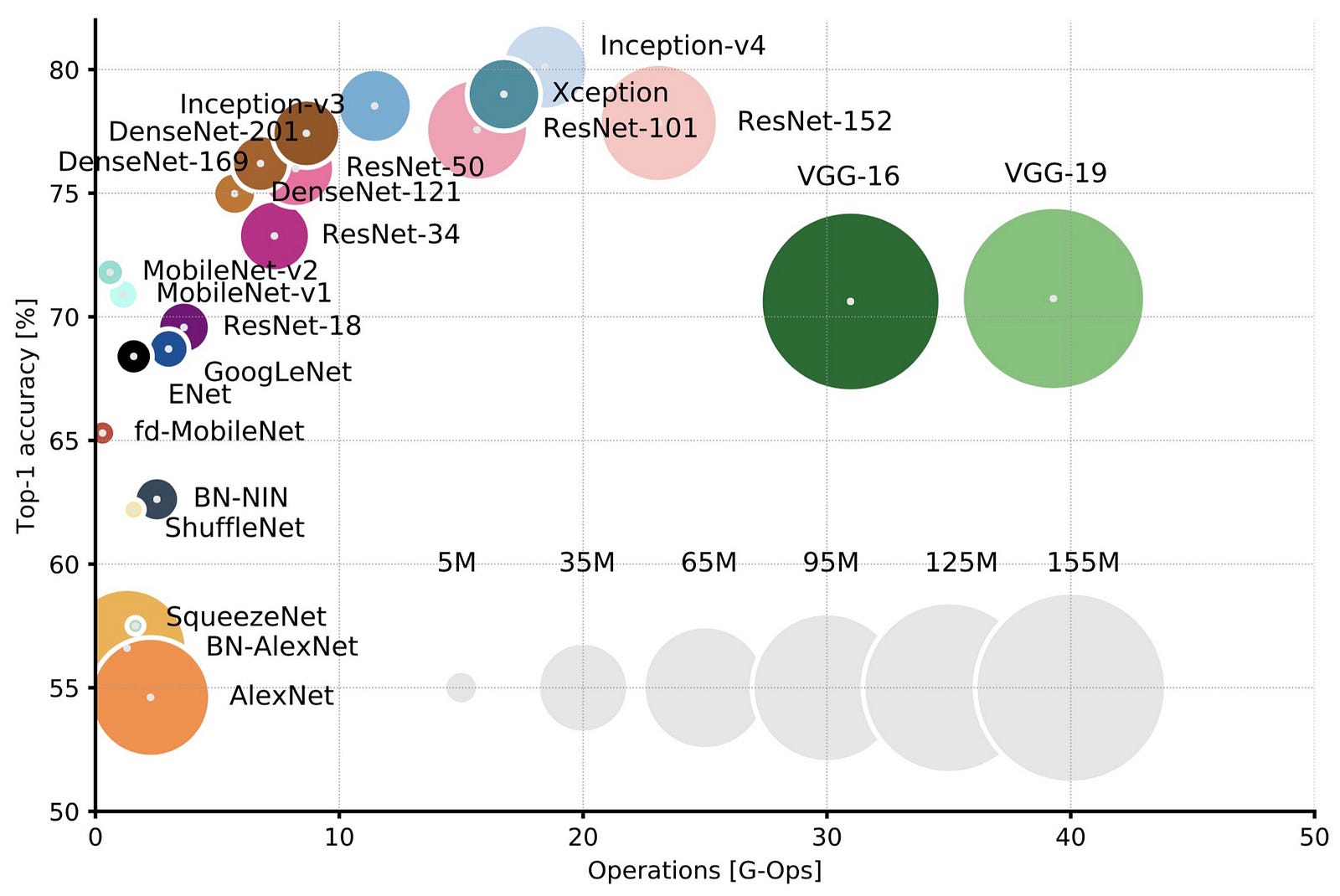

Below is a ball chart of available CNN model performance. The y-axis represents classification accuracy (higher is better), the x axis represents computational performance/steps (smaller is better), and the ball size represents the size of the model (smaller is better, less memory intensive). The data from this chart is derived from model performance against the ImageNet dataset.

Model Parameters

These training flags adjust traits of the trained model.

Model Parameters:

--untrain If set, initializes MODEL ~without~ pretrained neurons. Default (unset) is pretrained.

--img-norm MEAN STD Normalize images by MEAN and STD. This is like whitebalancing.

eg1: "0.667 0.161", eg2: "0.056,0.058,0.051 0.067,0.071,0.057"
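As an illustration of the two value formats above, here is a minimal sketch of how the MEAN and STD strings could be split into numbers. The helper name `parse_img_norm` is hypothetical and is not taken from neuston_net.py; the tool's actual parsing may differ.

```python
def parse_img_norm(mean_arg, std_arg):
    """Turn the MEAN and STD strings into lists of floats.

    Hypothetical helper for illustration only. Each argument is either a
    single value ("0.667") or three comma-separated values, one per RGB
    channel ("0.056,0.058,0.051").
    """
    mean = [float(v) for v in mean_arg.split(",")]
    std = [float(v) for v in std_arg.split(",")]
    if len(mean) != len(std) or len(mean) not in (1, 3):
        raise ValueError("MEAN and STD need one value each or three values each")
    if any(s <= 0 for s in std):
        raise ValueError("STD values must be positive")
    return mean, std


# eg1: one value each (grayscale)
gray = parse_img_norm("0.667", "0.161")
# eg2: three values each (one per RGB channel)
rgb = parse_img_norm("0.056,0.058,0.051", "0.067,0.071,0.057")
```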

Pretrained Weights (--untrain)

When a model is initiated for training, it can be a blank canvas (untrained/not-pretrained) or come with pretrained weights deriving from having been trained against the ImageNet dataset.

From our research, we've found that models with pretrained weights train in fewer epochs and with less variability than models that start from scratch. Because of this, neuston_net TRAIN defaults to using pretrained weights when available. To disable pretrained weights, use the --untrain flag.

Detail: in the args.yml file, and internally in a model .ptl file's hparams, this flag is saved under "pretrained" as a boolean, not as "untrain".
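The inversion between the command-line flag and the stored value can be sketched with argparse. This is an assumption-laden illustration, not the code in neuston_net.py: the parser name `train_sketch` and the function `to_hparams` are made up for the example, and only the flag name and the "pretrained" key come from the text above.

```python
import argparse

# Hypothetical stand-in parser; the real neuston_net.py parser has more arguments.
parser = argparse.ArgumentParser(prog="train_sketch")
parser.add_argument(
    "--untrain",
    dest="pretrained",      # stored under "pretrained", not "untrain"
    action="store_false",   # passing the flag sets pretrained to False
    help="initialize MODEL without pretrained weights",
)


def to_hparams(argv):
    """Return the dict that would be saved for a given command line."""
    args = parser.parse_args(argv)
    return {"pretrained": args.pretrained}


default_hparams = to_hparams([])              # flag unset: pretrained weights
untrained_hparams = to_hparams(["--untrain"])  # flag set: start from scratch
```

With `action="store_false"`, argparse defaults the value to True, which matches the documented default of using pretrained weights.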

Image Normalization (--img-norm)

Intensity normalization is the act of normalizing overall pixel values across multiple images into the same statistical distribution. By standardizing the input range of each pixel in an image, a neural net can more easily perform gradient descent to find good weights. To standardize image inputs, an image mean (MEAN) and standard deviation (STD) must be provided. MEAN and STD are themselves derived from the corpus of training data and may consist of a single value each, or three values each (one mean and one std value for each RGB channel of an image). A drawback of image normalization during training is that it adds complexity for inference: the same normalization transformation must also be applied during inference, otherwise inference will not perform well.
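The transformation itself is a subtract-and-divide per pixel. The sketch below uses NumPy and assumes pixel values scaled to [0, 1] and a channels-last layout (H, W) or (H, W, 3); the function name `normalize` is illustrative and the classifier's own pipeline may apply the step differently.

```python
import numpy as np


def normalize(img, mean, std):
    """Apply (pixel - MEAN) / STD.

    img  : array of floats in [0, 1], shape (H, W) or (H, W, 3) (assumed layout)
    mean : sequence of 1 or 3 floats
    std  : sequence of 1 or 3 floats, same length as mean
    """
    img = np.asarray(img, dtype=np.float64)
    mean = np.asarray(mean, dtype=np.float64)
    std = np.asarray(std, dtype=np.float64)
    if mean.shape != std.shape or mean.size not in (1, 3):
        raise ValueError("MEAN and STD need one value each or three values each")
    if mean.size == 3 and (img.ndim != 3 or img.shape[-1] != 3):
        raise ValueError("per-channel values need an (H, W, 3) image")
    # Broadcasting applies a single value to every pixel,
    # or one value per channel along the last axis.
    return (img - mean) / std


# The same MEAN and STD must be used at training time and at inference time.
MEAN, STD = [0.667], [0.161]
train_img = np.full((2, 2), 0.667)
infer_img = np.full((2, 2), 0.828)
train_out = normalize(train_img, MEAN, STD)  # pixels at the mean map to 0
infer_out = normalize(infer_img, MEAN, STD)  # pixels one STD above map to about 1
```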

Opinion: for IFCB images, the training gains from image normalization are marginal and not worth the added complexity at inference.

Note: for IFCB images, MEAN and STD values of 0.667 and 0.161 respectively are appropriate. Single values for each are fine since the images are grayscale.

To calculate MEAN and STD, consider using neuston_util.py CALC_IMG_NORM.
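For intuition about what such a calculation involves, here is a minimal sketch that computes a single pixel-wise mean and standard deviation over a set of grayscale images. It is not a description of how CALC_IMG_NORM works; the function name `corpus_mean_std`, the [0, 1] pixel scaling, and the use of the population standard deviation are all assumptions made for the example.

```python
import numpy as np


def corpus_mean_std(images):
    """Mean and population std over every pixel of every image.

    images : iterable of 2-D arrays with values in [0, 1]; sizes may differ.
    Running sums are used so the whole corpus never has to sit in memory.
    """
    count = 0
    total = 0.0
    total_sq = 0.0
    for img in images:
        px = np.asarray(img, dtype=np.float64).ravel()
        count += px.size
        total += float(px.sum())
        total_sq += float(np.square(px).sum())
    if count == 0:
        raise ValueError("no pixels to average")
    mean = total / count
    # Var[x] = E[x^2] - E[x]^2; clamp tiny negative values from rounding.
    variance = max(total_sq / count - mean * mean, 0.0)
    return mean, variance ** 0.5


# Two toy "images" of different shapes.
toy_images = [np.array([[0.0, 1.0]]), np.array([[0.5], [0.5]])]
toy_mean, toy_std = corpus_mean_std(toy_images)
```

The resulting pair would then be passed as `--img-norm MEAN STD`.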