Stanford Convolution and Pooling - SummerBigData/MattRepo GitHub Wiki

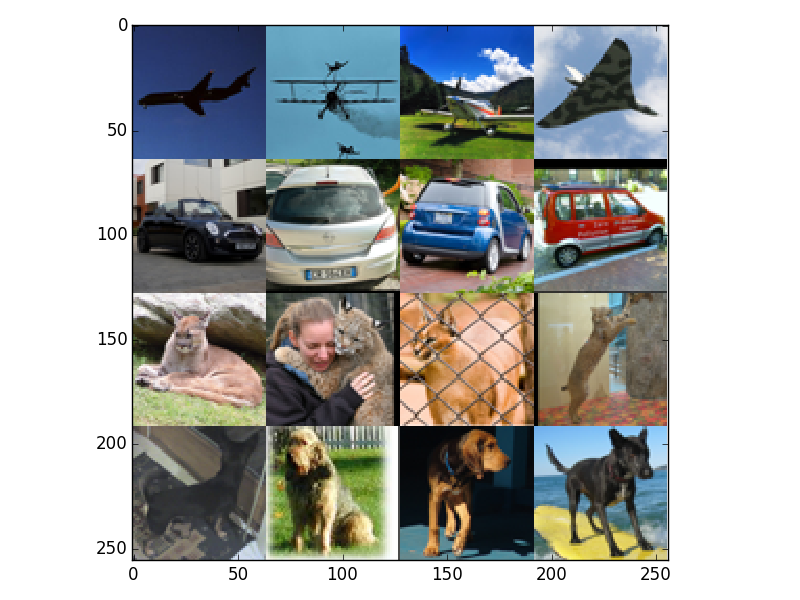

In this exercise we apply convolution and pooling to classify images, using the features our linear decoder learned from (8, 8) patches. We then train a softmax regression classifier on the convolved and pooled features. Our training and test sets were drawn from a subset of the STL-10 images, each of size (64, 64). We used 2000 training images and 3200 test images. There were four classes in total: class 1 was planes, class 2 was cars, class 3 was cats, and class 4 was dogs. Here are a couple of examples of the pictures in each class.

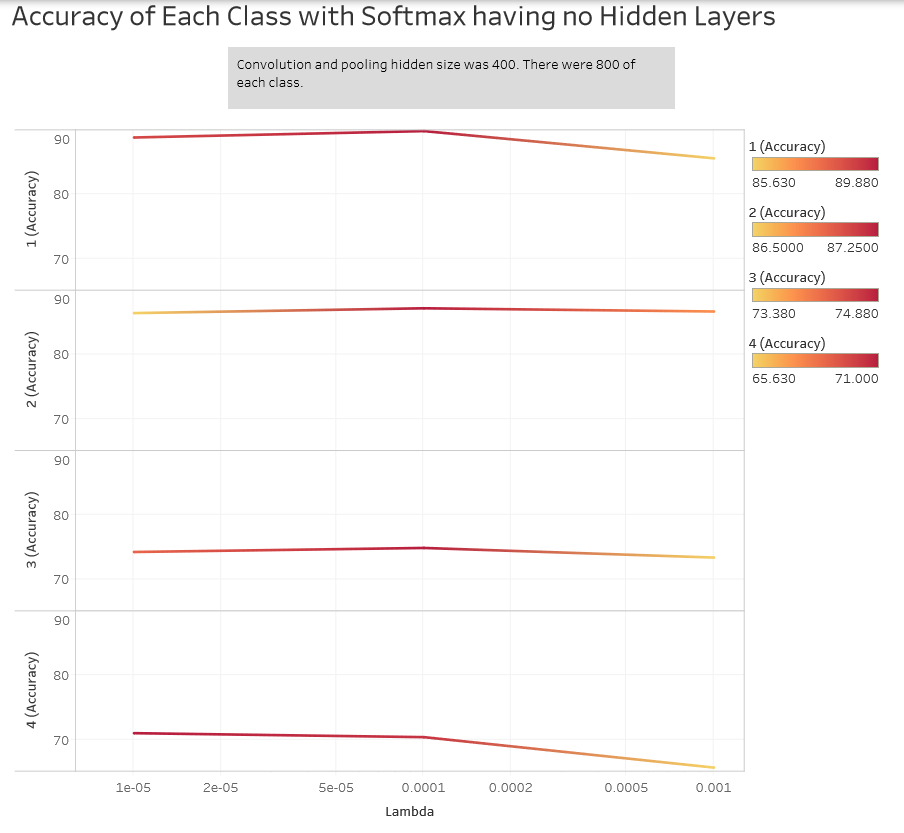

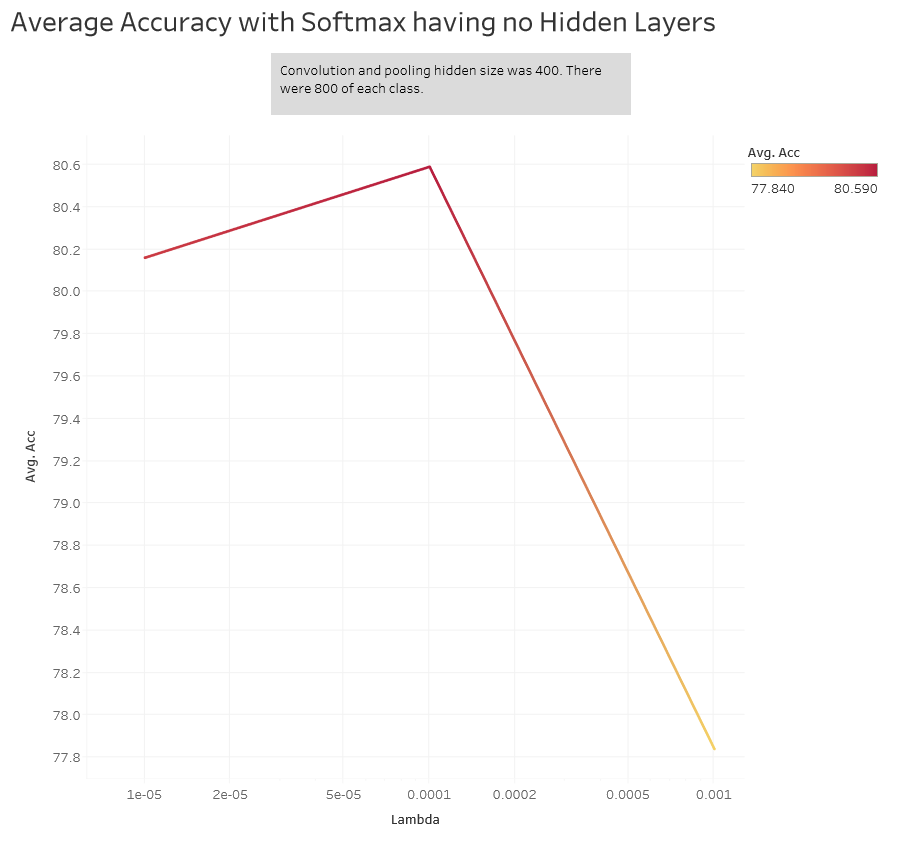
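As a rough illustration of the convolution and pooling step, here is a minimal NumPy sketch for a single image and a single feature. It is not the code used for this exercise. The function names, the random stand-in image and feature, the mean pooling, and the pool size of 19 are all assumptions made for the example, and any preprocessing applied to the patches is left out.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def convolve_feature(image, weights, bias):
    """Slide one learned feature over one image ("valid" positions only).

    image:   (H, W, C) array
    weights: (p, p, C) array, one feature reshaped back into patch form
    bias:    scalar bias for that feature
    returns: (H - p + 1, W - p + 1) map of sigmoid activations
    """
    p = weights.shape[0]
    out_h = image.shape[0] - p + 1
    out_w = image.shape[1] - p + 1
    out = np.empty((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            patch = image[r:r + p, c:c + p, :]
            out[r, c] = np.sum(patch * weights) + bias
    return sigmoid(out)

def mean_pool(feature_map, pool_size):
    """Average over non-overlapping pool_size x pool_size regions."""
    n_r = feature_map.shape[0] // pool_size
    n_c = feature_map.shape[1] // pool_size
    trimmed = feature_map[:n_r * pool_size, :n_c * pool_size]
    return trimmed.reshape(n_r, pool_size, n_c, pool_size).mean(axis=(1, 3))

# Random stand-ins for one (64, 64) RGB image and one (8, 8) feature.
rng = np.random.default_rng(0)
image = rng.random((64, 64, 3))
weights = rng.normal(scale=0.1, size=(8, 8, 3))

conv = convolve_feature(image, weights, bias=0.0)
pooled = mean_pool(conv, pool_size=19)  # pool size is an assumption
print(conv.shape, pooled.shape)  # (57, 57) (3, 3)
```

With an (8, 8) feature on a (64, 64) image there are 64 - 8 + 1 = 57 valid positions along each axis, so each feature produces a (57, 57) map that pooling shrinks to a handful of values per image.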

We set up our convolution and pooling code and ran it on all of the training and test images, using the features we obtained from our linear decoder. We then set up our softmax code with no hidden layer, trained it on the full training set, and tested it on the full test set. Here are the resulting accuracies.

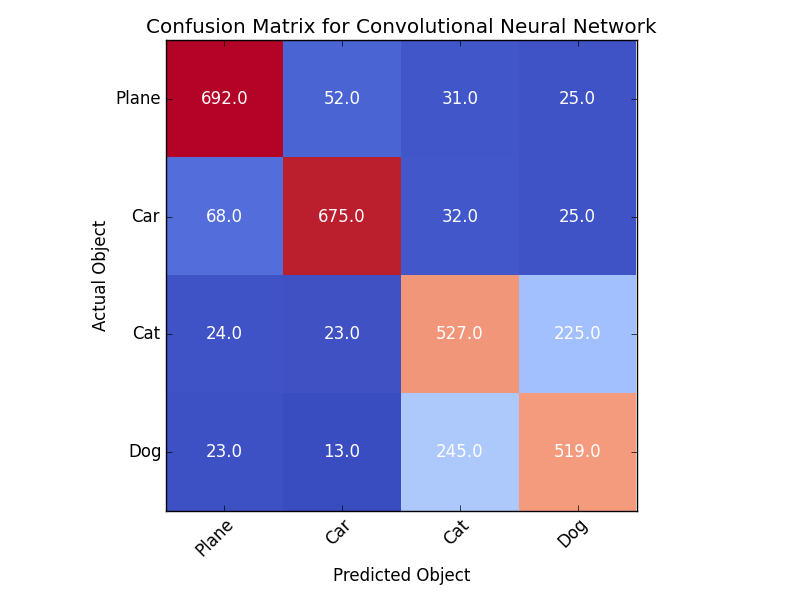
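For readers who want to see the shape of a softmax classifier with no hidden layer, here is a small sketch. It is an illustration under assumptions, not our actual code: the toy 2-D data, the plain gradient descent loop, the learning rate, and the weight decay value are all made up for the example, and there is no bias term.

```python
import numpy as np

def softmax(scores):
    # Subtracting the row max avoids overflow and does not change the result.
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def softmax_cost_grad(theta, X, y, num_classes, weight_decay):
    """Cross-entropy cost with L2 weight decay, and its gradient.

    theta: (n_features, num_classes), X: (m, n_features),
    y: (m,) integer labels in 0..num_classes-1
    """
    m = X.shape[0]
    probs = softmax(X @ theta)
    cost = -np.mean(np.log(probs[np.arange(m), y]))
    cost += 0.5 * weight_decay * np.sum(theta ** 2)
    one_hot = np.eye(num_classes)[y]
    grad = X.T @ (probs - one_hot) / m + weight_decay * theta
    return cost, grad

def train_softmax(X, y, num_classes, weight_decay=1e-4, lr=0.5, steps=500):
    theta = np.zeros((X.shape[1], num_classes))
    for _ in range(steps):
        _, grad = softmax_cost_grad(theta, X, y, num_classes, weight_decay)
        theta -= lr * grad
    return theta

def predict(theta, X):
    return np.argmax(X @ theta, axis=1)

# Toy data: four well-separated 2-D clusters standing in for pooled features.
rng = np.random.default_rng(0)
centers = np.array([[3.0, 0.0], [0.0, 3.0], [-3.0, 0.0], [0.0, -3.0]])
y_train = rng.integers(0, 4, size=200)
X_train = centers[y_train] + rng.normal(scale=0.5, size=(200, 2))

theta = train_softmax(X_train, y_train, num_classes=4)
accuracy = np.mean(predict(theta, X_train) == y_train)
print(f"training accuracy: {accuracy:.3f}")
```

In the real setting, each row of `X` would be the flattened pooled features of one image, and accuracy would be measured on the held-out test images instead of the training data.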

We then checked which classes were being confused with one another.

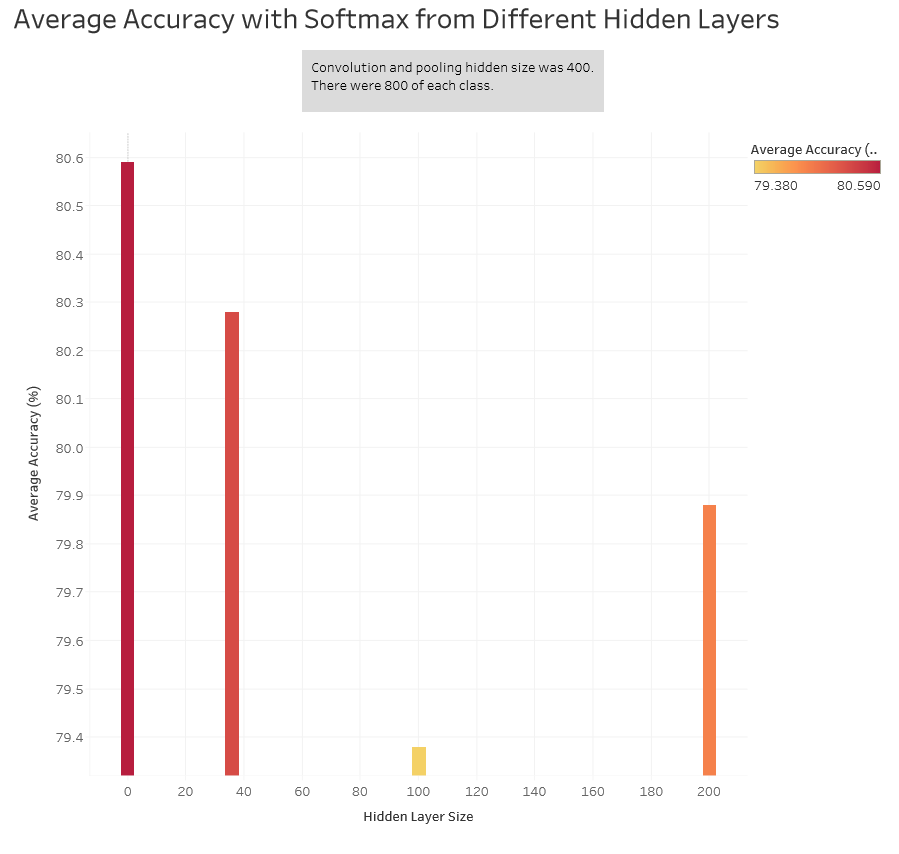
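One common way to run this kind of check is a confusion matrix that counts how often each true class is predicted as each other class. The sketch below is only an illustration: the function name is hypothetical, the class names follow the four classes described above, and the labels are made-up placeholders, not our results.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the true class, columns index the predicted class."""
    counts = np.zeros((num_classes, num_classes), dtype=int)
    # np.add.at accumulates correctly even when index pairs repeat.
    np.add.at(counts, (y_true, y_pred), 1)
    return counts

class_names = ["plane", "car", "cat", "dog"]

# Made-up labels purely for illustration.
y_true = np.array([0, 0, 1, 1, 2, 2, 3, 3])
y_pred = np.array([0, 0, 1, 0, 2, 3, 2, 3])

cm = confusion_matrix(y_true, y_pred, num_classes=4)
for name, row in zip(class_names, cm):
    print(f"{name:>5}: {row}")
```

Off-diagonal entries show which pairs of classes are mixed up, and the diagonal sum divided by the total gives the overall accuracy.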

We then went a step further and added a hidden layer, trying several sizes, to see if we could increase our accuracy. The hidden layer uses logistic (sigmoid) activations, and its weights are included in the cost function so that they are trained along with the output layer. We still used softmax regression for the output layer. Here is a comparison of the accuracies for the different hidden layer sizes.

It seems that adding a hidden layer did not improve our accuracy.
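To make the hidden-layer variant concrete, here is a hedged sketch of a network with one sigmoid hidden layer and a softmax output, including the cost and its gradients. It is not our actual code: the function names, layer sizes, weight initialisation, weight decay value, and random toy data are all assumptions chosen for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(scores):
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def forward(params, X):
    W1, b1, W2, b2 = params
    hidden = sigmoid(X @ W1 + b1)        # logistic hidden layer
    probs = softmax(hidden @ W2 + b2)    # softmax output layer
    return hidden, probs

def cost_and_grads(params, X, y, weight_decay):
    """Cross-entropy cost with L2 weight decay on both weight matrices."""
    W1, b1, W2, b2 = params
    m = X.shape[0]
    hidden, probs = forward(params, X)
    cost = -np.mean(np.log(probs[np.arange(m), y]))
    cost += 0.5 * weight_decay * (np.sum(W1 ** 2) + np.sum(W2 ** 2))

    # Backpropagation: output error first, then the hidden-layer error.
    delta_out = probs.copy()
    delta_out[np.arange(m), y] -= 1.0
    delta_out /= m
    grad_W2 = hidden.T @ delta_out + weight_decay * W2
    grad_b2 = delta_out.sum(axis=0)
    delta_hidden = (delta_out @ W2.T) * hidden * (1.0 - hidden)
    grad_W1 = X.T @ delta_hidden + weight_decay * W1
    grad_b1 = delta_hidden.sum(axis=0)
    return cost, (grad_W1, grad_b1, grad_W2, grad_b2)

def init_params(n_in, n_hidden, n_out, rng):
    W1 = rng.normal(scale=0.1, size=(n_in, n_hidden))
    W2 = rng.normal(scale=0.1, size=(n_hidden, n_out))
    return [W1, np.zeros(n_hidden), W2, np.zeros(n_out)]

# Toy sizes and random data, purely for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 6))
y = rng.integers(0, 4, size=20)
params = init_params(n_in=6, n_hidden=5, n_out=4, rng=rng)

cost, grads = cost_and_grads(params, X, y, weight_decay=1e-4)
print(f"initial cost: {cost:.4f}")
```

The cost and gradients can be handed to any gradient-based optimiser. Changing `n_hidden` is how one would compare hidden layers of different sizes.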