Live Streaming with Apple's ReplayKit - Straas/Straas-iOS-sdk GitHub Wiki

ReplayKit Live was introduced in iOS 10. Using the ReplayKit framework, we can build a broadcasting service that lets users broadcast their app's content. A broadcasting service needs to implement two app extensions: a Broadcast UI extension and a Broadcast Upload extension. The Broadcast UI extension provides a user interface that lets users sign in to the service and set up a broadcast. The Broadcast Upload extension receives the video and audio sample buffers and transmits them to the broadcasting service.

The following sections demonstrate how to build a broadcasting service based on the StraaS Streaming SDK.

Requirements:

- Swift 3.2/Xcode 9.x or later.

- iOS 10 or later.

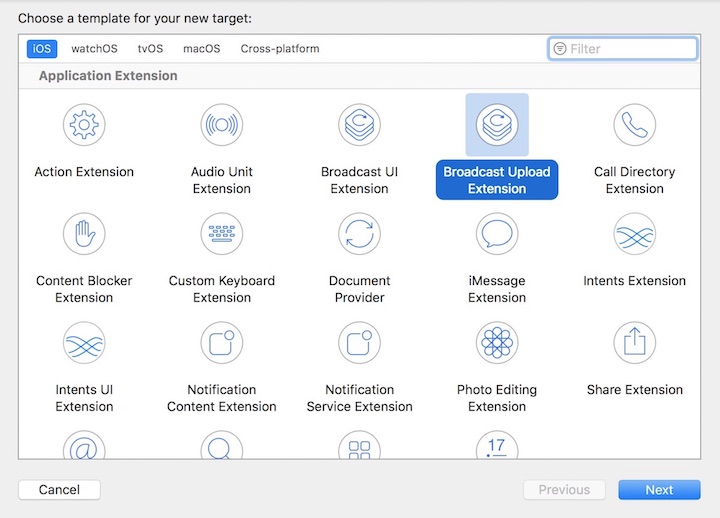

Adding a Broadcast Upload extension and a Broadcast UI extension provides several initial files you need and configures your Xcode project to build the extensions.

- Open your app project in Xcode.

- Go to File > New > Target.

- Choose Broadcast Upload Extension from the iOS panel.

- Click Next.

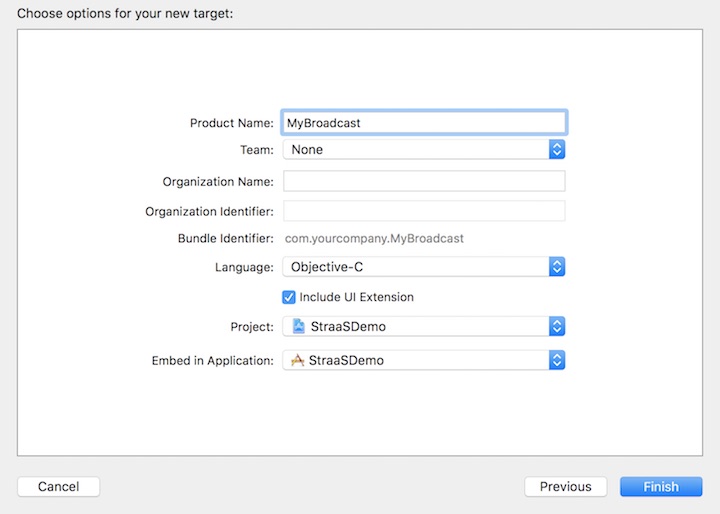

- Specify the name and other options for your extension.

- Enable the "Include UI Extension" option.

- Click Finish.

Figure 1 Adding Broadcast Upload and Broadcast UI extension

Figure 2 Configuring your extension

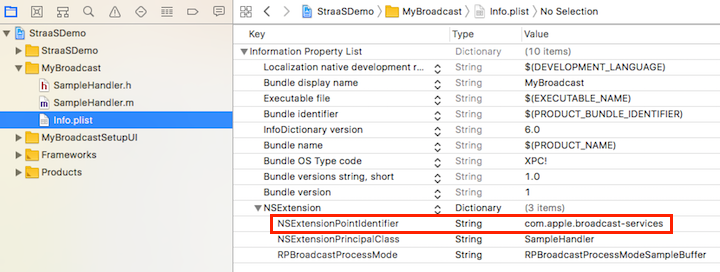

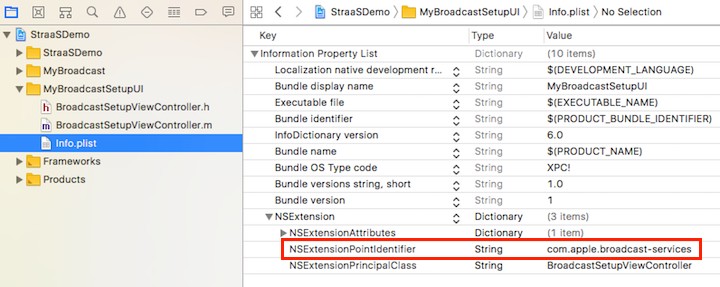

The extensions generated by Xcode 9 are configured for iOS 11. To make the extensions compatible with iOS 10, modify the Info.plist of each extension by setting NSExtensionPointIdentifier to com.apple.broadcast-services.

Figure 3 For iOS 10 compatibility, change the value of NSExtensionPointIdentifier key from com.apple.broadcast-services-upload to com.apple.broadcast-services.

Figure 4 For iOS 10 compatibility, change the value of NSExtensionPointIdentifier key from com.apple.broadcast-services-setupui to com.apple.broadcast-services.
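For reference, the relevant part of the upload extension's Info.plist might look like the following after the change. This is only a sketch: the NSExtensionPrincipalClass value assumes the default SampleHandler class generated by Xcode, and any other keys Xcode generated inside the NSExtension dictionary should be left as they are. The UI extension needs the same NSExtensionPointIdentifier value.

```xml
<key>NSExtension</key>
<dict>
    <!-- iOS 10 uses one extension point for both broadcast extensions -->
    <key>NSExtensionPointIdentifier</key>
    <string>com.apple.broadcast-services</string>
    <!-- Assumed: the default class name generated by Xcode -->
    <key>NSExtensionPrincipalClass</key>
    <string>SampleHandler</string>
</dict>
```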

- Edit your Podfile.

  ```ruby
  pod 'StraaS-iOS-SDK/Streaming'
  ```

- Run the following command to install the pod.

  ```
  $ pod install
  ```

To work with the StraaS SDK, specify the client ID by adding a new key, STSSDKClientID, to the extension's Info.plist, and configure your extension with the following code.

```objc
[STSApplication configureApplication:^(BOOL success, NSError *error) {
    // If successful, you are now ready to use StraaS iOS SDK.
}];
```

See SDK Configuration for more details.
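As an illustration, the STSSDKClientID entry could be added to the extension's Info.plist as shown below. The value is a placeholder, and storing it as a string is an assumption; check SDK Configuration for the exact format your client ID should take.

```xml
<!-- Placeholder value; replace it with the client ID issued for your app -->
<key>STSSDKClientID</key>
<string>YOUR_CLIENT_ID</string>
```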

When an app requests a screen broadcast, the Broadcast UI extension is launched first. In this extension, you can design your login and configuration UI. Any UI-related code should be implemented here because the Broadcast Upload extension is a background service without any user interface.

The primary goal of the UI extension is to get the broadcast stream's RTMP URL and pass it to the upload extension. The class STSStreamingManager provides several methods to achieve that goal.

- Create a streaming manager with the broadcaster's member token.

  ```objc
  NSString * JWT = <#member_token_of_the_broadcaster#>;
  self.streamingManager = [STSStreamingManager streamingManagerWithJWT:JWT];
  ```

- Create a new live event and get its live id. (How to create a new live event)

- Get the RTMP URL.

  ```objc
  NSString * liveId = <#live_id#>;
  [self.streamingManager getStreamingInfoWithLiveId:liveId success:^(STSStreamingInfo * _Nonnull streamingInfo) {
      // The endpoint is the stream server URL followed by the stream key.
      self.endpointURL = [NSString stringWithFormat:@"%@/%@", streamingInfo.streamServerURL, streamingInfo.streamKey];
  } failure:^(NSError * _Nonnull error) {
      // Handle the error here.
  }];
  ```

- Launch the upload extension with the stream information.

  ```objc
  - (void)userDidFinishSetup {
      // Dictionary with setup information that will be provided to broadcast extension when broadcast is started
      NSDictionary * setupInfo = @{ @"endpointURL" : self.endpointURL };

      // URL of the resource where broadcast can be viewed that will be returned to the application.
      // self.endpointURL is an NSString, so it is converted to an NSURL here.
      NSURL * broadcastURL = [NSURL URLWithString:self.endpointURL];

      // Tell ReplayKit that the extension is finished setting up and can begin broadcasting
      [self.extensionContext completeRequestWithBroadcastURL:broadcastURL setupInfo:setupInfo];

      /*
       * For iOS 10, use the following code instead.
      RPBroadcastConfiguration * broadcastConfig = [[RPBroadcastConfiguration alloc] init];
      [self.extensionContext completeRequestWithBroadcastURL:broadcastURL broadcastConfiguration:broadcastConfig setupInfo:setupInfo];
       */
  }
  ```

When a broadcast begins, the video and audio frames captured by the ReplayKit framework are transmitted to your upload extension. The StraaS SDK provides a class, STSLiveStreamer, which lets you start and stop a live stream and push the video and audio frames to the RTMP server.

In our VideoChat sample, we implemented a singleton class named STSRKStreamer which utilizes STSLiveStreamer. STSRKStreamer is more convenient to use since it keeps an instance of STSLiveStreamer while broadcasting and has a retry feature when it fails to start a stream.
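Before a stream can start, the upload extension has to read the RTMP endpoint back from the setupInfo dictionary supplied by the UI extension. The following is a minimal sketch of doing that defensively with Foundation alone. ExampleEndpointURLFromSetupInfo is a hypothetical helper, not part of the StraaS SDK or ReplayKit, and the check for an rtmp or rtmps scheme is an assumption about the URLs the service returns.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper for illustration; not part of StraaS SDK or ReplayKit.
// Returns the endpoint stored under @"endpointURL", or nil when the entry is
// missing, is not a string, or is not an rtmp:// or rtmps:// URL with a host.
static NSURL * _Nullable ExampleEndpointURLFromSetupInfo(NSDictionary<NSString *, NSObject *> * _Nullable setupInfo) {
    NSObject *value = setupInfo[@"endpointURL"];
    if (![value isKindOfClass:[NSString class]]) {
        return nil;
    }
    NSURL *url = [NSURL URLWithString:(NSString *)value];
    NSString *scheme = url.scheme.lowercaseString;
    // Assumption: the streaming endpoint always uses an RTMP scheme.
    BOOL isRTMP = [scheme isEqualToString:@"rtmp"] || [scheme isEqualToString:@"rtmps"];
    if (!isRTMP || url.host.length == 0) {
        return nil;
    }
    return url;
}
```

In broadcastStartedWithSetupInfo:, a nil result from such a helper could be logged and the start request skipped, instead of handing an invalid URL to the streamer.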

Add the following code to SampleHandler.m.

```objc
- (void)broadcastStartedWithSetupInfo:(NSDictionary<NSString *,NSObject *> *)setupInfo {
    // User has requested to start the broadcast. Setup info from the UI extension will be supplied.
    self.endpointURL = [NSURL URLWithString:(NSString *)setupInfo[@"endpointURL"]];

    // Configure video settings
    STSVideoConfiguration * videoConfig = [STSVideoConfiguration new];
    videoConfig.videoSize = CGSizeMake(<#video_width#>, <#video_height#>);
    videoConfig.frameRate = 30;

    // Configure audio settings
    STSAudioConfiguration * audioConfig = [STSAudioConfiguration new];
    audioConfig.sampleRate = STSAudioSampleRate_44100Hz;
    audioConfig.numOfChannels = 1;
    audioConfig.replayKitAudioChannels = @[kSTSReplayKitAudioChannelMic, kSTSReplayKitAudioChannelApp];

    // Start streaming
    STSRKStreamer * streamer = [STSRKStreamer sharedInstance];
    streamer.videoConfig = videoConfig;
    streamer.audioConfig = audioConfig;
    [streamer startStreamingWithURL:self.endpointURL retryEnabled:YES success:^{
        NSLog(@"start streaming successfully : %@", self.endpointURL);
    } failure:^(NSError * error) {
        NSLog(@"fail to start streaming, %@", error);
    }];
}
```

The video and audio data are sent to a callback method of RPBroadcastSampleHandler named processSampleBuffer:withType:. We can override this method and send video frames to the RTMP server via STSRKStreamer.

```objc
- (void)processSampleBuffer:(CMSampleBufferRef)sampleBuffer withType:(RPSampleBufferType)sampleBufferType {
    switch (sampleBufferType) {
        case RPSampleBufferTypeVideo:
            [[STSRKStreamer sharedInstance] pushVideoSampleBuffer:sampleBuffer];
            break;
        default:
            break;
    }
}
```

There are two audio channels from ReplayKit.

- RPSampleBufferTypeAudioApp: The audio data from the app.

- RPSampleBufferTypeAudioMic: The audio data from the device's microphone.

You can push audio data from whichever channel you want by calling [[STSRKStreamer sharedInstance] pushAudioSampleBuffer:ofAudioChannel:]. The StraaS SDK also supports sending both channels of audio data and mixes the audio channels for you.

```objc
- (void)processSampleBuffer:(CMSampleBufferRef)sampleBuffer withType:(RPSampleBufferType)sampleBufferType {
    switch (sampleBufferType) {
        case RPSampleBufferTypeAudioApp:
            [[STSRKStreamer sharedInstance] pushAudioSampleBuffer:sampleBuffer ofAudioChannel:kSTSReplayKitAudioChannelApp];
            break;
        case RPSampleBufferTypeAudioMic:
            [[STSRKStreamer sharedInstance] pushAudioSampleBuffer:sampleBuffer ofAudioChannel:kSTSReplayKitAudioChannelMic];
            break;
        default:
            break;
    }
}
```

STSRKStreamer only processes the audio data of registered channels. You can register them by specifying the audio configuration before starting a broadcast.

```objc
STSAudioConfiguration * audioConfig = [STSAudioConfiguration new];
audioConfig.replayKitAudioChannels = @[kSTSReplayKitAudioChannelMic, kSTSReplayKitAudioChannelApp];
```

When a live broadcast is paused by the broadcasting app, the instance of RPBroadcastSampleHandler is notified through the broadcastPaused callback method. You can stop streaming by calling [[STSRKStreamer sharedInstance] stopStreamingWithSuccess:failure:].

```objc
- (void)broadcastPaused {
    // User has requested to pause the broadcast. Samples will stop being delivered.
    [[STSRKStreamer sharedInstance] stopStreamingWithSuccess:^(NSURL * streamURL) {
        NSLog(@"stop streaming successfully : %@", streamURL);
    } failure:^(NSURL * streamURL, NSError * error) {
        NSLog(@"fail to stop streaming : %@, %@", streamURL, error);
    }];
}
```

When a live broadcast resumes, you can restart streaming by calling [[STSRKStreamer sharedInstance] startStreamingWithURL:retryEnabled:success:failure:].

```objc
- (void)broadcastResumed {
    // User has requested to resume the broadcast. Samples delivery will resume.
    STSRKStreamer * streamer = [STSRKStreamer sharedInstance];
    [streamer startStreamingWithURL:streamer.streamURL retryEnabled:YES success:^{
        NSLog(@"start streaming successfully");
    } failure:^(NSError * error) {
        NSLog(@"fail to start streaming, %@", error);
    }];
}
```

If you start a stream immediately after stopping it, the start may fail with an error, because once a stream stops, the server takes around 10 seconds to release the related resources. STSRKStreamer has a retry feature that reconnects to the server when it fails to start a stream. You can enable this feature by setting retryEnabled to YES when calling the startStreamingWithURL:retryEnabled:success:failure: method.
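To make the retry behaviour concrete, the following is a minimal sketch of retry-after-delay logic written with Foundation and GCD only. It is not the implementation of STSRKStreamer, which is not shown in this document. The names ExampleStartWithRetry, ExampleStartOperation, and ExampleCompletion, as well as the retry count and delay parameters, are assumptions made for illustration.

```objc
#import <Foundation/Foundation.h>

NS_ASSUME_NONNULL_BEGIN

// Hypothetical types for illustration; they are not part of StraaS SDK.
typedef void (^ExampleCompletion)(NSError * _Nullable error);
typedef void (^ExampleStartOperation)(ExampleCompletion completion);

// Runs `start`. If it reports an error and retries remain, waits `delay`
// seconds on `queue` and runs it again. Calls `finished` exactly once,
// with nil on success or with the last error when retries are exhausted.
static void ExampleStartWithRetry(ExampleStartOperation start,
                                  NSUInteger retriesLeft,
                                  NSTimeInterval delay,
                                  dispatch_queue_t queue,
                                  ExampleCompletion finished) {
    start(^(NSError * _Nullable error) {
        if (error == nil || retriesLeft == 0) {
            finished(error);
            return;
        }
        dispatch_time_t when = dispatch_time(DISPATCH_TIME_NOW, (int64_t)(delay * NSEC_PER_SEC));
        dispatch_after(when, queue, ^{
            ExampleStartWithRetry(start, retriesLeft - 1, delay, queue, finished);
        });
    });
}

NS_ASSUME_NONNULL_END
```

With a delay of a few seconds and a handful of retries, a loop like this would cover the roughly 10 seconds the server needs to release resources after a stream stops.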

When a live broadcast is stopped by the broadcasting app, you can stop streaming by calling [[STSRKStreamer sharedInstance] stopStreamingWithSuccess:failure:].

```objc
- (void)broadcastFinished {
    // User has requested to finish the broadcast.
    [[STSRKStreamer sharedInstance] stopStreamingWithSuccess:^(NSURL * streamURL) {
        NSLog(@"stop streaming successfully : %@", streamURL);
    } failure:^(NSURL * streamURL, NSError * error) {
        NSLog(@"fail to stop streaming : %@, %@", streamURL, error);
    }];
}
```