L1 and L2 Regularization - SoojungHong/MachineLearning GitHub Wiki

Regularization

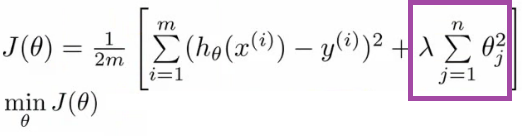

Regularization adds a penalty to the cost function that grows as model complexity increases. The regularization parameter (lambda) controls the strength of that penalty, which applies to every parameter except the intercept, so that the model generalizes to new data and does not overfit the training set.

Here J(theta) is the cost function.

As the function above shows, regularization adds a larger penalty as complexity increases, that is, as the coefficients of the higher-order terms grow. This reduces the importance given to those terms and pushes the model toward a less complex equation.
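The idea of a penalized cost can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function name `regularized_cost`, the squared-error data term, and the `1/(2m)` scaling are choices made here and may differ from the formula in the image above. The intercept `theta[0]` is deliberately left out of the penalty, as described earlier.

```python
def regularized_cost(theta, X, y, lam, penalty="l2"):
    """Squared-error cost plus an L1 or L2 penalty (hypothetical helper).

    theta[0] is the intercept and is excluded from the penalty.
    X is a list of feature rows, without a leading 1 for the intercept.
    """
    m = len(y)
    weights = theta[1:]  # every parameter except the intercept

    # Prediction for each row: intercept + weighted sum of the features.
    preds = [theta[0] + sum(w * x for w, x in zip(weights, row)) for row in X]

    # Data-fit term: sum of squared errors.
    fit = sum((p - target) ** 2 for p, target in zip(preds, y))

    # Penalty term: grows with the size of the non-intercept coefficients.
    if penalty == "l1":
        reg = lam * sum(abs(w) for w in weights)
    elif penalty == "l2":
        reg = lam * sum(w * w for w in weights)
    else:
        raise ValueError("penalty must be 'l1' or 'l2'")

    return (fit + reg) / (2 * m)


X = [[1.0], [2.0]]
y = [1.0, 2.0]
print(regularized_cost([0.0, 2.0], X, y, lam=0.0))                # 1.25
print(regularized_cost([0.0, 2.0], X, y, lam=2.0, penalty="l2"))  # 3.25
print(regularized_cost([0.0, 2.0], X, y, lam=2.0, penalty="l1"))  # 2.25
```

With `lam=0.0` the cost is the plain data-fit term; raising `lam` makes large coefficients more expensive, while changing only the intercept never changes the penalty.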

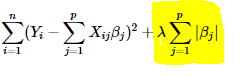

L1 regularization technique is called Lasso Regression

Lasso Regression (Least Absolute Shrinkage and Selection Operator) adds the “absolute value of magnitude” of the coefficients as a penalty term to the loss function.
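Written out, one common form of the Lasso cost is shown here. The notation is an assumption made for illustration (m training examples, n features, hypothesis h_theta, intercept theta_0 excluded from the penalty), and the scaling constant may differ from the one used in the images on this page:

$$
J(\theta) = \frac{1}{2m}\left[\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + \lambda \sum_{j=1}^{n} \left|\theta_j\right|\right]
$$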

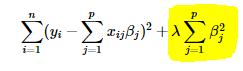

L2 regularization technique is called Ridge Regression
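For Ridge Regression the penalty is the sum of squared coefficients. One common form of the cost is shown here; the notation is an assumption made for illustration (m training examples, n features, hypothesis h_theta, intercept theta_0 excluded from the penalty), and the scaling constant may differ from the one used in the images on this page:

$$
J(\theta) = \frac{1}{2m}\left[\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + \lambda \sum_{j=1}^{n} \theta_j^2\right]
$$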

Key difference

The key difference between these techniques lies in the penalty term. Lasso adds the “absolute value of magnitude” of the coefficients, which shrinks the coefficients of less important features to exactly zero and so removes some features altogether. This makes it useful for feature selection when there is a huge number of features. Ridge regression adds the “squared magnitude” of the coefficients instead, which shrinks coefficients toward zero but generally does not set them exactly to zero.
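A small sketch can make this difference concrete. It relies on a simplifying assumption that is not part of the text above: each coefficient is treated independently (as happens when the features are orthonormal), so that `z` stands for the unregularized least-squares coefficient. In that setting the L1 penalty gives soft-thresholding and the L2 penalty gives proportional shrinkage. The function names and the example coefficients are hypothetical.

```python
def lasso_shrink(z, lam):
    """Minimizer of 0.5*(z - b)**2 + lam*abs(b): soft-thresholding."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0  # small coefficients are set exactly to zero


def ridge_shrink(z, lam):
    """Minimizer of 0.5*(z - b)**2 + 0.5*lam*b**2: proportional shrinkage."""
    return z / (1.0 + lam)


# Hypothetical unregularized coefficients for four features.
ols_coefs = [3.0, 0.5, -0.2, -2.0]
lam = 1.0

lasso_coefs = [lasso_shrink(z, lam) for z in ols_coefs]
ridge_coefs = [ridge_shrink(z, lam) for z in ols_coefs]

print(lasso_coefs)  # [2.0, 0.0, 0.0, -1.0]
print(ridge_coefs)  # [1.5, 0.25, -0.1, -1.0]
```

In this toy setting Lasso drops the two weakest features entirely, while Ridge keeps all four with smaller coefficients.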