General_Opencv - RicoJia/notes GitHub Wiki

========================================================================

========================================================================

- Contour: a curve joining continuous points along a boundary. `findContours` also returns a hierarchy: each contour object gets an index (label), and the hierarchy records which contour is the parent of which (a parent encloses its child).

- Contour finding always needs (1) a grayscale image and (2) thresholding or Canny edge detection, so that only the interesting regions are preserved, all with the same intensity value.

- The contour algorithm always finds the boundaries (edges) of these regions.

- `findChessboardCorners`. More in-depth explanation

- Binarization (Otsu): it is adaptive and can cope with uneven lighting.

-

will draw a white rectangle

- Theory (see link):

- Find the threshold that maximizes the "between-class variance". For $n$ discrete samples $x_i$ with mean $\mu$, the regular variance is $\sigma^2 = \frac{1}{n}\sum_i (x_i - \mu)^2$. So an intensity value $v$ that occurs $n_v$ times contributes $\frac{n_v}{n}(v - \mu)^2$. Treat each of the two classes (pixels at or below the threshold, pixels above it) as if all of its $n_k$ pixels sat at the class mean $\mu_k$. The between-class variance is then $\sigma_B^2 = \frac{n_1}{n}(\mu_1 - \mu)^2 + \frac{n_2}{n}(\mu_2 - \mu)^2$.

- Because it only looks at the global histogram, it is sensitive to noise. Also, if the markers are too small, they barely show up in the histogram and a good threshold may not be easy to find.

-

- Dilate the image (white regions grow). The black squares only touch at their corners, so they separate into individual black polygons.

- Try different parameters here.

- Check whether each polygon has 4 vertices. Then use the aspect ratio and area to keep only the squares.

- Then treat 4 squares in a 2x2 block (shaped like the character 田) as one big square, in which two black squares touch diagonally. The center of that block is the corner point.

- Only if PRECISELY the specified number of corner points is found do we get the chessboard.

- `cornerSubPix` finds the exact (sub-pixel) corner location in a small neighborhood.


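- Compute the `convexHull`, build a 2D mask from it, then apply the mask to the RGB image:

```python
import cv2
import numpy as np

# vertices: list of (x, y) points; image: the color image to mask
hull = cv2.convexHull(np.array(vertices, dtype=np.int32))  # fillPoly needs int32 points
mask = np.zeros(image.shape[:2], dtype=np.uint8)            # single-channel 2D mask
cv2.fillPoly(mask, pts=[hull], color=255)                    # fill the hull with white
image = cv2.bitwise_and(image, image, mask=mask)             # keep only pixels inside the hull
```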

- Compare histograms

  `cv2.calcHist`: generates a 3D histogram (e.g., one axis per color channel). link

- An R-tree provides good query results. TODO: R*-tree.

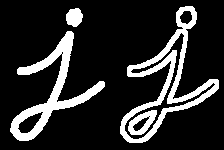

Aruco Marker Detection

1. Adaptive thresholding (output: black & white image)
2. Contour filtering by size (output: black & white quadrilateral contours, given as points in CCW order)
3. Bit extraction: perspective transform to remove distortion
   1. Find a perfect square that is close to the quadrilateral in size.
   2. Perspective-transform the quadrilateral to that square.
4. Extract the black & white bits with Otsu: find the threshold between black and white
5. Divide into a grid
6. Marker identification
   1. Count the white & black pixels in each cell.
   2. Rotate the marker 4 times and look up the correct code in the dictionary.
   3. Error correction.
7. Corner refinement (refine the border position)
   1. Find subpixel corners.
   2. Keep ONLY ONE MARKER for each id: the one with the longest perimeter.
   3. REMOVE markers too close to the image corners.
8. Pose estimation: `solvePnP`

- pip install opencv-contrib-python

- QR code vs. ArUco?

- A QR code can store much more information than an ArUco marker.

- Three finder patterns (in three of the corners) localize the square.

- There are also localization patterns that indicate where the bits go.

- A column in each block is a byte.

- `cv.rectangle` doesn't care about the image bounds: parts outside the image are simply clipped, and no error is raised.

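- Draw circles:

```python
# the center must be a tuple of Python ints; the radius is derived from the detection size
cv2.circle(image, tuple(int(v) for v in uv), int(0.5 * det.size * self.pixels_per_meter), color=(0, 0, 255), thickness=2)
# or draw all detected keypoints at once; the RICH flag draws each keypoint's size and orientation
im_with_keypoints = cv2.drawKeypoints(im, keypoints, np.array([]), (0, 0, 255), cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
```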

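- Rectangles:

- Smallest enclosing (rotated) rectangle:

```python
(cx, cy), (width, height), angle_of_rotation = cv2.minAreaRect(points)
```

- A rotated rectangle (`RotatedRect`) is initialized as (center, size, angle), the same format `minAreaRect` returns.

- Check the intersection of two rotated rectangles:

```python
# ret is cv2.INTERSECT_NONE, cv2.INTERSECT_PARTIAL or cv2.INTERSECT_FULL;
# region holds the vertices of the intersection polygon
ret, region = cv2.rotatedRectangleIntersection(rect1, rect2)
```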

- Morphological gradient:

```python
gradient_img = cv2.morphologyEx(img, cv2.MORPH_GRADIENT, kernel)
```

- This is the difference between the dilation and the erosion of the image (dilation minus erosion), so it highlights object outlines.

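- Blur:

```python
# RETR_CCOMP: two-level hierarchy (outer boundaries and the holes inside them)
# OpenCV 3.x returns (image, contours, hierarchy), 4.x returns (contours, hierarchy); [-2:] works for both
contours, hier = cv2.findContours(bin_img, cv2.RETR_CCOMP, cv2.CHAIN_APPROX_SIMPLE)[-2:]
```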

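- `dst = cv2.adaptiveThreshold(src, 125, cv2.ADAPTIVE_THRESH_MEAN_C, cv2.THRESH_BINARY, 11, 12)` (Java: `Imgproc.adaptiveThreshold(src, dst, 125, Imgproc.ADAPTIVE_THRESH_MEAN_C, Imgproc.THRESH_BINARY, 11, 12)`). Link. Thresholding sets a pixel's intensity to 0 if its value doesn't meet a certain threshold. This function computes a separate threshold for each pixel's neighborhood.

- `ADAPTIVE_THRESH_MEAN_C` is the `adaptiveMethod`: the threshold is the mean of the neighborhood minus the constant `C` (here 12). Anything at or below it is set to 0, and everything above it to `maxValue` (here 125).

- 11 is the `blockSize`, i.e., an 11x11 neighborhood.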

- `cv2.subtract` performs saturation (clipping), i.e., negative results become 0 for uint8 images. `np.subtract` does not: uint8 arithmetic wraps around modulo 256.

- blob detection source code

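- `self.blob_detector = cv2.SimpleBlobDetector_create(params)` thresholds the image at several intensity levels (from `minThreshold` to `maxThreshold` in steps of `thresholdStep`), giving a different binary image for each threshold. Each keypoint has a center and a size (`KeyPoint.size` is the blob diameter). A circular blob will never be fully enclosed in another one.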

- Trackbar for tuning:

```python
import cv2

class Obj:
    def __init__(self):
        # parameters for the disparity image
        self.window_size = 15
        self.speckleRange = 22

    def update(self, val=0):
        # trackbar callback: re-read both sliders whenever either one moves
        self.window_size = int(cv2.getTrackbarPos('window_size', 'disparity'))
        self.speckleRange = int(cv2.getTrackbarPos('speckleRange', 'disparity'))

depth_estimator = Obj()
cv2.namedWindow('disparity')
# createTrackbar(name, window, initial value, max value, callback)
cv2.createTrackbar('speckleRange', 'disparity', depth_estimator.speckleRange, 50, depth_estimator.update)
cv2.createTrackbar('window_size', 'disparity', depth_estimator.window_size, 21, depth_estimator.update)
```

========================================================================

========================================================================

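- `cv.imshow()` works with uint8 images (float images are assumed to be in [0, 1] and are multiplied by 255). Convert to uint8 with `img = np.uint8(img)`. Note that this neither scales nor clips, so values outside [0, 255] come out wrong.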
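A safer conversion clips before casting. `to_uint8` and its `scale` parameter are hypothetical helper names for this sketch (pass `scale=255` for float images in [0, 1]).

```python
import numpy as np

def to_uint8(img, scale=1.0):
    """Hypothetical helper: scale, clip to [0, 255], then cast, so nothing wraps around."""
    return np.clip(np.asarray(img, dtype=np.float64) * scale, 0, 255).astype(np.uint8)

print(to_uint8([-5.0, 12.7, 300.0]).tolist())  # [0, 12, 255]
print(to_uint8([0.5], scale=255).tolist())      # [127]
```

`astype` truncates, so 12.7 becomes 12. Apply `np.rint` first if rounding is preferred.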

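- Safe way to draw a circle:

```python
# cv2.circle needs the center as a tuple of Python ints. Do NOT use np.uint8 here:
# coordinates above 255 don't fit in uint8 and come out wrong
cv2.circle(noisy_human_figures, tuple(int(c) for c in coords), 1, color=(0, 255, 255), thickness=2)
```

- OpenCV stores color images as BGR, not RGB, so `cv2.imshow` expects BGR too.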

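- `plt.imshow()` works with uint8 (float RGB values must be in [0, 1]), so you need to declare uint8: `np.array([1, 2, 3], dtype=np.uint8)`.

- `plt.imshow(img, cmap='gray')` may not even show an image whose values are in [0, 54], with or without `vmin=0, vmax=54`. Use this instead:

```python
cv2.imshow('image', np_image)  # the first argument is the window name
# delay in milliseconds; 0 waits for a key press.
# In a display loop, use waitKey(100) rather than waitKey(1) to avoid gray (not yet rendered) windows
cv2.waitKey(0)
```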

- `cv2.imencode('.jpg', px_data)[1].tofile(path)`: `imencode` compresses the image to JPEG in memory and returns `(success, buffer)`, where the buffer is a 1D uint8 array of the encoded bytes. `.tofile(path)` then writes those bytes to disk.

- E.g. (nice explanation):

```python
import cv2
import numpy as np

# cv2.imread cannot work with non-English filenames
img = cv2.imread('0122.jpg', cv2.IMREAD_COLOR)
# '.jpg' means the image is encoded in JPEG format; encoding in different formats gives different results
img_encode = cv2.imencode('.jpg', img)[1]
# img_encode = cv2.imencode('.png', img)[1]
data_encode = np.array(img_encode)
```

- cv2.imwrite(filename, img)

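- Put text:

```python
# putText(img, text, bottom-left origin, font, fontScale, color (BGR), thickness, lineType)
isolated_human_figure = cv2.putText(isolated_human_figure, f"matched: {self.reids[track_id].matching_history[-1]}", (50, 50), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2, cv2.LINE_AA)
```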

========================================================================

========================================================================

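- `cv2.LUT()` maps every pixel value through a lookup table (LUT) with 256 entries, one per uint8 value (a 256x1 table). With a suitable color table this can turn images into a temperature-like heatmap. Here the LUT is a sigmoid contrast curve:

```python
# entry i = 255 / (1 + exp(-k * (i / 255 - x0)))
# (np.float was removed in NumPy 1.24; use np.float64)
self.contrast_lut = (255 / (1 + np.exp(-self.sigmoid_k * ((np.arange(256, dtype=np.float64) / 255) - self.sigmoid_x0)))).astype(np.uint8)
img = cv2.LUT(img, self.contrast_lut)
```

- Question: what does this lookup look like?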

- System design: visualization functions should always be top-level, never inside any planning object!

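- Histogram comparison methods (`cv2.compareHist`):

- Hellinger is the same as Bhattacharyya (`HISTCMP_HELLINGER` is an alias of `HISTCMP_BHATTACHARYYA`).

- Intersection (`HISTCMP_INTERSECT`) is the sum of element-wise minimums; useless.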

========================================================================

========================================================================

- Draw a line, show the image

- Show RGB

- WARNING: DO NOT MIX MATPLOTLIB WITH CV2!

- Normalize and show a circle

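- Check for collision along an 8-connected Bresenham line:

```python
import cv2
import numpy as np

def collide_line(self, start, end):
    # Rasterize the segment; cv2.line's default lineType (LINE_8) draws an 8-connected Bresenham line
    img = np.zeros((self._height, self._width), dtype=np.uint8)
    img = cv2.line(img, start, end, 1, 1)  # start/end: (x, y) tuples of ints
    # Collision if any line pixel is also occupied in the map
    intersection = np.logical_and(self._map, img)
    return np.count_nonzero(intersection) > 0
```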
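The same check can be done without drawing an image: walk the cells of the line and stop at the first occupied one. This is a sketch using the classic integer Bresenham algorithm. `bresenham` and `collides` are hypothetical names, and the exact cells may differ slightly from what `cv2.line` rasterizes. The occupancy grid is indexed `[row = y, col = x]`.

```python
import numpy as np

def bresenham(x0, y0, x1, y1):
    """Yield the 8-connected grid cells (x, y) from (x0, y0) to (x1, y1), all octants."""
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        yield x0, y0
        if x0 == x1 and y0 == y1:
            return
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy

def collides(occupancy, start, end):
    """True if any cell on the line from start to end is occupied (early exit)."""
    return any(occupancy[y, x] for x, y in bresenham(*start, *end))

grid = np.zeros((5, 5), dtype=bool)
print(list(bresenham(0, 0, 3, 1)))
grid[1, 2] = True  # obstacle at x=2, y=1
print(collides(grid, (0, 0), (3, 1)))
```

Compared with drawing into a full-size image and AND-ing it with the map, this touches only the cells on the line and stops early.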

- Visualize histograms:

```python
import matplotlib.pyplot as plt

# # visualize descriptors: one figure per track, left = RGB histogram, right = HSV histogram
if track_id not in self.debug_axes:
    fig, self.debug_axes[track_id] = plt.subplots(1, 2, figsize=(18, 10))
    # fig.canvas.set_window_title was removed in Matplotlib 3.6; go through the figure manager instead
    fig.canvas.manager.set_window_title(f"ReID_axes_{track_id[-3:]}")
for ax, descriptor, title in zip(self.debug_axes[track_id], self.reids[track_id].descriptors, ("RGB", "HSV")):
    ax.clear()
    ax.plot(descriptor)
    ax.set_title(title)
plt.pause(0.1)  # redraw and run the GUI event loop for 0.1 s
```