SystemOverview - PartnerRobotChallengeVirtual/handyman-unity GitHub Wiki

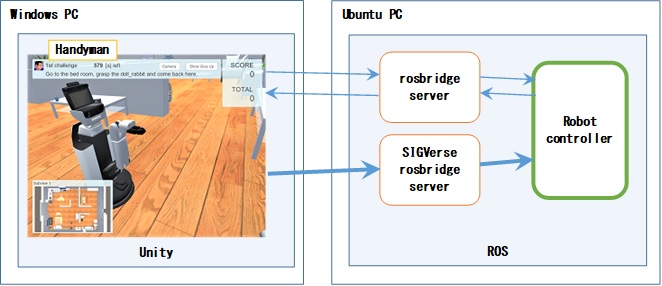

This page gives an overview of the Handyman system.

System Configuration

The system configuration for this competitive challenge is outlined below.

The Windows computer runs the Handyman program, which was created in Unity.

The Ubuntu computer runs the rosbridge server, the SIGVerse rosbridge server, and the robot controller created by the competition participants.

The Handyman program and the robot controller communicate through the standard rosbridge server, while large volumes of data (such as sensor data) are transmitted through the SIGVerse rosbridge server.

In the Handyman program, the human avatar issues commands to the robot, and the robot moves according to the instructions it receives from the robot controller. To move the robot, the robot controller sends ROS messages such as Twist and JointTrajectory to the Handyman program.
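To make the message path concrete, here is a minimal sketch of what a velocity command looks like in the rosbridge v2 JSON protocol when it travels between the robot controller side and the Handyman program. In practice a participant's controller would normally publish a `geometry_msgs/Twist` on a ROS topic and let the rosbridge server perform this translation. The helper `twist_publish_op` and the topic `/hypothetical/cmd_vel` are illustrative assumptions, not the topic the Handyman program actually uses.

```python
import json


def twist_publish_op(topic: str, linear_x: float, angular_z: float) -> str:
    """Serialize a rosbridge v2 "publish" operation carrying a geometry_msgs/Twist.

    This sketch sets only forward velocity (m/s) and yaw rate (rad/s);
    all other components are zero.
    """
    twist = {
        "linear": {"x": linear_x, "y": 0.0, "z": 0.0},
        "angular": {"x": 0.0, "y": 0.0, "z": angular_z},
    }
    return json.dumps({"op": "publish", "topic": topic, "msg": twist})


if __name__ == "__main__":
    # Hypothetical topic name, for illustration only.
    print(twist_publish_op("/hypothetical/cmd_vel", 0.2, 0.0))
```

A JointTrajectory command would follow the same `publish` envelope with a different `msg` body.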

The Handyman program publishes JointState, TF, sensor information, and other ROS messages to the robot controller at regular intervals.

Flow of the competition

The flow of the competition is outlined below.

- Launch the robot controller, the SIGVerse rosbridge server, etc. on the Ubuntu side.

- Launch the Handyman program on the Windows side.

- Initialize the position and orientation of the robot and of the object to be grasped.

- The avatar sends the “Are_you_ready?” message to the robot.
  - The “Environment” message is sent at the same time.

- The robot sends the “I_am_ready” message to the avatar.

- The avatar sends the “Instruction” message to the robot.
  - Example: “Go to the XXXX, grasp the YYYY and bring it here.”

- The robot moves to the room specified in the instruction.

- The robot sends the “Room_reached” message to the avatar.

- The avatar checks the first statement of the instruction (whether the robot has reached the specified room).
  - If successful, points are awarded and the challenge moves on to the next step.
  - If unsuccessful, the task ends.

- The robot looks for the object.

  - If the robot finds the target object, it should proceed to grasp the object.
  - If the robot cannot find the target object and judges that the object does not exist, it should send the “Does_not_exist” message to the avatar.
    - If the indication is correct, a score is added and the avatar sends the “Corrected_instruction” message to the robot. The robot should then look for the new object.
    - If the indication is not correct, the task ends.

- The robot grasps the object.

- The robot sends the “Object_grasped” message to the avatar.

- The avatar checks whether the grasped object is correct.
  - If successful, a score is added.
  - If unsuccessful, the task ends and the session moves on to the next one.

- The robot carries out the rest of the instruction after grasping.

- The robot sends the “Task_finished” message to the avatar.

- The avatar checks whether the target behavior has been achieved.
  - If successful, a score is added and the session moves on to the next one.

- When the task ends (successfully or not):
  - If participants still have attempts left, the avatar sends the “Task_succeeded” (successful task) or “Task_failed” (unsuccessful task) message to the robot to start the next task.
  - If participants have no attempts left, the avatar sends the “Mission_complete” message to the robot to end the competitive challenge.

- If the time limit has passed:
  - The avatar sends the “Task_failed” message to the robot to indicate that the task was unsuccessful.

- If it is impossible to achieve the task, the robot can send the “Give_up” message. In that case, the task is aborted, the “Task_failed” message is sent, and the challenge moves on to the next session.
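The exchange above can be sketched, from the robot controller's side, as a small table-driven state machine that maps each avatar message to the robot's next phase and any immediate reply. This is an illustrative sketch under assumptions: the message strings are taken from the list above, but how they are transported (topic names, message type, how the instruction text is attached) is not specified here, and `Phase` and `handle_avatar_message` are hypothetical names.

```python
from enum import Enum, auto
from typing import Optional, Tuple


class Phase(Enum):
    WAITING = auto()    # before the "Are_you_ready?" handshake of the current task
    READY = auto()      # sent "I_am_ready", waiting for "Instruction"
    EXECUTING = auto()  # navigating, searching, grasping, carrying out the task
    FINISHED = auto()   # "Mission_complete" received; no attempts left


# Avatar message -> (next phase or None to stay, immediate reply or None).
_TRANSITIONS = {
    "Are_you_ready?": (Phase.READY, "I_am_ready"),
    "Environment": (None, None),                       # arrives with "Are_you_ready?"
    "Instruction": (Phase.EXECUTING, None),            # start moving to the room
    "Corrected_instruction": (Phase.EXECUTING, None),  # look for the new object
    # Assumption: each new task starts again from the "Are_you_ready?" handshake.
    "Task_succeeded": (Phase.WAITING, None),
    "Task_failed": (Phase.WAITING, None),
    "Mission_complete": (Phase.FINISHED, None),
}


def handle_avatar_message(phase: Phase, message: str) -> Tuple[Phase, Optional[str]]:
    """Return (next_phase, reply) for a message received from the avatar."""
    next_phase, reply = _TRANSITIONS.get(message, (None, None))
    return (phase if next_phase is None else next_phase), reply


if __name__ == "__main__":
    phase = Phase.WAITING
    for msg in ["Are_you_ready?", "Environment", "Instruction", "Task_succeeded"]:
        phase, reply = handle_avatar_message(phase, msg)
        print(msg, "->", phase.name, reply)
```

Messages the robot sends on its own initiative when a step completes (“Room_reached”, “Does_not_exist”, “Object_grasped”, “Task_finished”, “Give_up”) are not replies to an avatar message, so in this design they would be emitted by the navigation and manipulation code rather than by the dispatcher.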

Notes

Destination of Grasped Objects

As a rule, place the grasped object on a desk or a similar surface.

However, if the destination is a person, move the arm so that the center of the grasped object is within the sphere shown in the following image.

![]()
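Checking whether a hand-over pose satisfies this rule reduces to a point-in-sphere test: the distance from the grasped object's center to the sphere's center must not exceed the sphere's radius. The sketch below assumes both points are 3-D coordinates in the same frame; the actual sphere center and radius are defined in the referenced image and not stated in the text, so the values in the usage example are placeholders, and `is_within_sphere` is a hypothetical helper.

```python
import math
from typing import Sequence


def is_within_sphere(point: Sequence[float], center: Sequence[float], radius: float) -> bool:
    """Return True if `point` lies inside or on the sphere of `radius` around `center`.

    Both points must be 3-D coordinates in the same frame (e.g. world coordinates, in meters).
    """
    return math.dist(point, center) <= radius


if __name__ == "__main__":
    # Placeholder values: the real sphere center and radius come from the image, not the text.
    sphere_center = (1.0, 0.5, 1.0)
    sphere_radius = 0.3
    object_center = (1.1, 0.45, 0.95)
    print(is_within_sphere(object_center, sphere_center, sphere_radius))
```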