Step 6: Get started analyzing Snowplow data

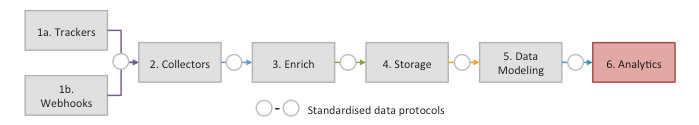

Now that your Snowplow data is being generated and loaded into S3, and potentially also into Amazon Redshift, you can start analyzing the event stream, or the derived tables in Redshift if a data model has been built.

Because Snowplow gives you access to incredibly granular, event-level and customer-level data, the possible analyses you can perform on that data are endless.
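As a rough illustration of what event-level analysis looks like, the sketch below rolls individual events up into a daily summary. It makes several assumptions that are not stated in this guide: that events live in a table called `atomic.events` with `collector_tstamp`, `domain_userid` and `event` columns, and that an in-memory SQLite database is an acceptable stand-in for Redshift or PostgreSQL. The sample rows are invented, and the SQL may need small dialect changes before it runs on a real warehouse.

```python
import sqlite3

# Stand-in for Redshift / PostgreSQL: an in-memory SQLite database with a
# second in-memory database attached as "atomic", so that the table can be
# addressed as atomic.events (an assumed table name).
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS atomic")
conn.execute(
    "CREATE TABLE atomic.events ("
    "collector_tstamp TEXT, domain_userid TEXT, event TEXT)"
)

# Hypothetical sample rows, invented purely for illustration.
sample_events = [
    ("2013-05-01 09:00:00", "user-a", "page_view"),
    ("2013-05-01 09:05:00", "user-a", "page_view"),
    ("2013-05-01 10:30:00", "user-b", "page_view"),
    ("2013-05-02 08:15:00", "user-a", "page_view"),
]
conn.executemany("INSERT INTO atomic.events VALUES (?, ?, ?)", sample_events)

# Roll event-level rows up to one row per day: distinct visitors and
# total events recorded on that day.
DAILY_SUMMARY_SQL = """
SELECT
    DATE(collector_tstamp)        AS day,
    COUNT(DISTINCT domain_userid) AS unique_visitors,
    COUNT(*)                      AS events
FROM atomic.events
GROUP BY DATE(collector_tstamp)
ORDER BY day
"""


def daily_summary(connection):
    """Return a list of (day, unique_visitors, events) tuples."""
    return connection.execute(DAILY_SUMMARY_SQL).fetchall()


daily = daily_summary(conn)
for row in daily:
    print(row)
```

Because every row is a single event tied to a user identifier, the same table can be grouped by user instead of by day to produce customer-level metrics.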

As part of this setup guide, we provide details on how to get started analyzing that data. There are many other resources we are building out that go into much more detail.

The first guide covers creating schemas with prebuilt recipes and cubes in Redshift or PostgreSQL. These can help you get started analyzing Snowplow data faster.

The next five guides cover using different tools (Looker, ChartIO, Excel, Tableau and R) to analyze Snowplow data in Redshift or PostgreSQL. The seventh guide covers how to analyze Snowplow data in S3 using Elastic MapReduce (EMR) and Apache Hive, and doubles as an easy introduction to EMR more generally. The final guide (8) covers how to use Qubole with Apache Hive to analyze the data in S3, as an attractive alternative to EMR.

- Creating the prebuilt cube and recipe views that Snowplow ships with in Redshift / PostgreSQL

- Exploring, analyzing and dashboarding your Snowplow data with Looker

- Set up ChartIO to create dashboards with Snowplow data

- Set up Excel to analyze and visualize Snowplow data

- Set up Tableau to perform OLAP analysis on Snowplow data

- Set up R to perform more sophisticated visualization, statistical analysis and data mining on Snowplow data

- Get started analyzing your data in S3 using EMR and Hive

- Get started analyzing your data in S3 using Qubole and Hive / Pig