Overfitting - OKStateACM/AI_Workshop GitHub Wiki

# Overfitting

From Wikipedia: "In statistics and machine learning, one of the most common tasks is to fit a 'model' to a set of training data, so as to be able to make reliable predictions on general untrained data. In overfitting, a statistical model describes random error or noise instead of the underlying relationship. Overfitting occurs when a model is excessively complex, such as having too many parameters relative to the number of observations. A model that has been overfit has poor predictive performance, as it overreacts to minor fluctuations in the training data."

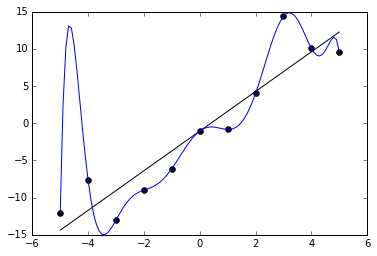

What this means is that a model, in our case a neural network, can learn to perform really well on the data we have been training it on and still work poorly on any other data. For instance, in our linear regression example, if we had instead used a high-degree polynomial, we might have gotten a function with a very low loss on the training data that doesn't look anything like the general shape of the data.
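The polynomial scenario can be sketched with NumPy. This is a minimal illustration, not the workshop's own code: the line `y = 2x + 1`, the noise level, the sample sizes, and the names `make_data`, `mse`, `linear`, and `wiggly` are all assumptions made up for the example. It fits a straight line and a degree-9 polynomial to the same 10 noisy points, then compares the loss on the training points with the loss on fresh points from the same process.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def make_data(n):
    # Hypothetical ground truth: a straight line plus Gaussian noise.
    x = np.linspace(0.0, 1.0, n)
    y = 2.0 * x + 1.0 + rng.normal(0.0, 0.2, size=n)
    return x, y

x_train, y_train = make_data(10)    # the data we fit on
x_test, y_test = make_data(200)     # fresh data the models never saw

def mse(model, x, y):
    # Mean squared error, the loss used in linear regression.
    return float(np.mean((model(x) - y) ** 2))

linear = Polynomial.fit(x_train, y_train, deg=1)   # simple model
wiggly = Polynomial.fit(x_train, y_train, deg=9)   # one coefficient per training point

for name, model in [("linear", linear), ("degree 9", wiggly)]:
    print(name,
          "train loss:", mse(model, x_train, y_train),
          "test loss:", mse(model, x_test, y_test))
```

The degree-9 fit passes through every training point, so its training loss is essentially zero, yet its loss on the fresh points should come out worse than the straight line's. That gap between training and test loss is the practical symptom of overfitting.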

There are many ways to combat overfitting. One is to use a simpler model. For instance, instead of using a high-degree polynomial model, use a simpler linear or quadratic model. For neural networks, this would mean reducing the number of layers or the number of neurons in each layer.
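To get a feel for how much simpler a smaller network is, it can help to count its parameters. The helper below is a hypothetical sketch (the function name `count_parameters` and the layer sizes are illustrative assumptions, not taken from the workshop code) and assumes a plain fully connected network with one bias per neuron.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the width of every layer, input first, output last.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix plus bias vector
    return total

big = count_parameters([10, 64, 64, 1])   # two hidden layers of 64 neurons
small = count_parameters([10, 8, 1])      # one hidden layer of 8 neurons
print(big, small)  # 4929 97
```

With only a few hundred training examples, the smaller network has far fewer parameters relative to the number of observations, which is exactly the ratio the Wikipedia definition warns about.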

Other ways include some form of regularization, where the model is constrained or penalized in some way so that the network is not extremely sensitive to the training data. The specifics of how regularization happens aren't extremely useful at this stage in the game. Just know that there are many ways to do it.
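For the curious, here is a sketch of one common form, an L2 penalty (often called ridge regression or weight decay), applied to a polynomial fit rather than a neural network to keep it short. The data, the penalty strength `lam`, and all variable names are assumptions chosen for illustration. For brevity, the penalty is applied to every coefficient, including the constant term.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 10)
y = 2.0 * x + 1.0 + rng.normal(0.0, 0.2, size=x.size)  # hypothetical noisy line

# Degree-9 polynomial features: columns are x**0, x**1, ..., x**9.
X = np.vander(x, N=10, increasing=True)

# No regularization: ordinary least squares.
w_plain = np.linalg.lstsq(X, y, rcond=None)[0]

# L2 regularization: minimize ||X w - y||^2 + lam * ||w||^2.
lam = 1e-3  # assumed penalty strength; in practice this is tuned
w_ridge = np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def train_mse(w):
    return float(np.mean((X @ w - y) ** 2))

print("coefficient size, plain:", np.linalg.norm(w_plain))
print("coefficient size, ridge:", np.linalg.norm(w_ridge))
print("train loss, plain:", train_mse(w_plain))
print("train loss, ridge:", train_mse(w_ridge))
```

The penalized fit gives up a little training loss in exchange for much smaller coefficients, which keeps the curve from bending sharply to chase individual noisy points.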