Getting started with CyBench annotations - Nastel/gocypher-cybench-java GitHub Wiki

The CyBench framework provides annotations that allow a more detailed description of a benchmark, e.g. its main functions, project metadata, library metadata, and parameter descriptions such as data size or key strength. Annotations containing metadata make it easier to compare benchmarks and to understand what a benchmark measures.

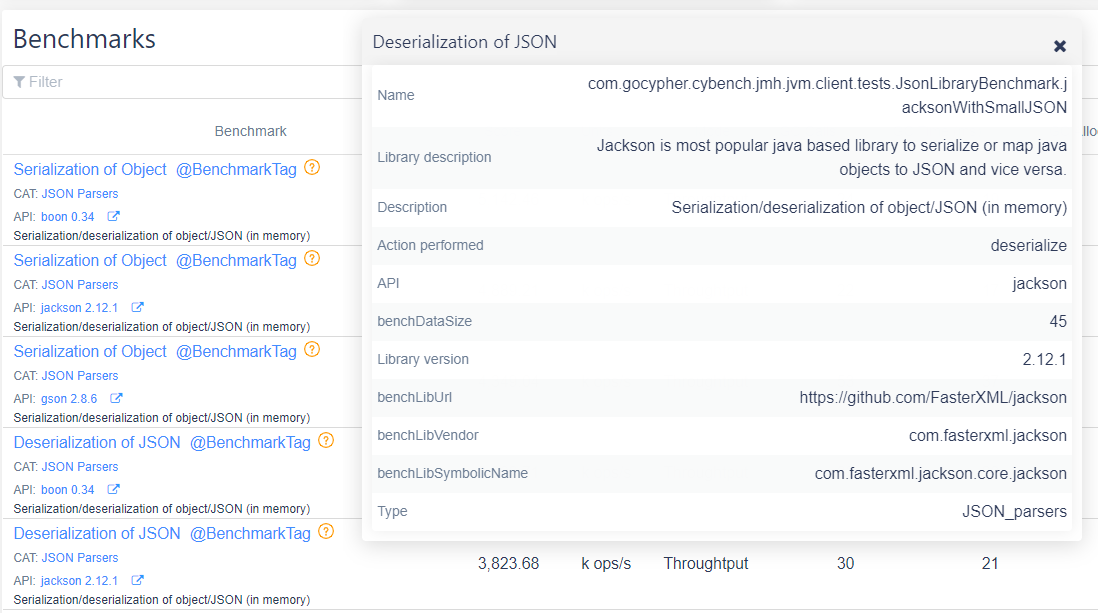

Metadata defined with CyBench annotations is displayed in the report details UI:

- CyBench Web application - "Report details" page

- Eclipse/IntelliJ plugins - "Report details" view -> tab "Benchmark Details".

To start using CyBench annotations:

- Download the CyBench Maven plugin package and extract it to any directory (see https://github.com/K2NIO/gocypher-cybench-maven).

- For Maven and Gradle projects:
  - Install the CyBench annotations library `gocypher-cybench-annotations-1.3.5.jar` to the local Maven repository (see section "Start using CyBench Maven plugin" for installation details).
  - Add a dependency on the CyBench annotations library to the `pom.xml` file (Gradle projects declare the same group, artifact and version in their build script):

    ```xml
    <dependency>
        <groupId>com.gocypher.cybench.client</groupId>
        <artifactId>gocypher-cybench-annotations</artifactId>
        <version>1.3.5</version>
    </dependency>
    ```

- For plain Java projects:
  - Add the CyBench annotations library `gocypher-cybench-annotations-1.3.5.jar` to the project build path.

Annotations provided by CyBench support inheritance and full object orientation, i.e. they can be declared on the class, on the method, on an inherited abstract class, or on implemented interfaces. Developers should use them in a way that is easy to maintain, i.e. avoid declaring annotations with the same content in multiple places.
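The sketch below illustrates why declaring shared metadata once, on an interface or an abstract class, is enough: a tool can collect it by walking the type hierarchy. It is a hypothetical illustration, not CyBench's own lookup code (which this page does not show). `Meta`, `MetaList`, `collect` and the sample classes are stand-ins invented for the example; `Meta` only mimics the `key`/`value` shape of `@BenchmarkMetaData`. Note that plain `getAnnotationsByType` on the concrete class sees only annotations declared directly on it (interfaces are never searched, and superclasses only for `@Inherited` annotation types), which is why the helper walks superclasses and interfaces explicitly.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Repeatable;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

public class HierarchyMetadataSketch {

    // Hypothetical stand-in with the same key/value shape as @BenchmarkMetaData.
    @Retention(RetentionPolicy.RUNTIME)
    @Target({ElementType.TYPE, ElementType.METHOD})
    @Repeatable(MetaList.class)
    @interface Meta {
        String key();
        String value();
    }

    // Container annotation required by @Repeatable.
    @Retention(RetentionPolicy.RUNTIME)
    @Target({ElementType.TYPE, ElementType.METHOD})
    @interface MetaList {
        Meta[] value();
    }

    // Shared metadata declared once on an interface ...
    @Meta(key = "domain", value = "java")
    interface JsonBenchmarks {
    }

    // ... and once on an abstract base class.
    @Meta(key = "context", value = "JSON_parsers")
    abstract static class AbstractJsonBenchmark implements JsonBenchmarks {
    }

    // The concrete benchmark class declares only what is specific to it.
    @Meta(key = "api", value = "jackson")
    static class JacksonBenchmark extends AbstractJsonBenchmark {
    }

    // Breadth-first walk over the class, its superclasses and implemented interfaces.
    // The walk starts at the concrete class and the first value found for a key wins.
    static Map<String, String> collect(Class<?> type) {
        Map<String, String> result = new LinkedHashMap<>();
        Deque<Class<?>> queue = new ArrayDeque<>();
        Set<Class<?>> seen = new HashSet<>();
        queue.add(type);
        while (!queue.isEmpty()) {
            Class<?> current = queue.poll();
            if (!seen.add(current)) {
                continue; // e.g. an interface reachable through several paths
            }
            for (Meta meta : current.getDeclaredAnnotationsByType(Meta.class)) {
                result.putIfAbsent(meta.key(), meta.value());
            }
            Class<?> parent = current.getSuperclass(); // null for interfaces and Object
            if (parent != null) {
                queue.add(parent);
            }
            queue.addAll(Arrays.asList(current.getInterfaces()));
        }
        return result;
    }

    public static void main(String[] args) {
        // Without a walk, only the annotation declared directly on the class is visible.
        System.out.println(JacksonBenchmark.class.getAnnotationsByType(Meta.class).length); // 1
        System.out.println(collect(JacksonBenchmark.class));
        // {api=jackson, context=JSON_parsers, domain=java}
    }
}
```

With this layout, `domain` and `context` are written once and apply to every benchmark class that extends `AbstractJsonBenchmark`, which matches the advice above to avoid repeating the same annotation content.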

The `@BenchmarkMetaData` annotation defines metadata that describes the benchmark. You can declare as many of these annotations as needed, placing them either above the class or above the method that contains the benchmark implementation.

The structure of the annotation content is:

```java
@BenchmarkMetaData(key = "<name of the property>", value = "<value of the property>")
```
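Writing the same annotation several times on one element relies on Java's repeatable-annotation mechanism (`@Repeatable` plus a container annotation). The sketch below shows that mechanism with a hypothetical stand-in named `Meta`; the real `@BenchmarkMetaData` declaration is not shown on this page, so its exact definition is an assumption here. The `metadataFor` helper is also hypothetical: it merges class-level and method-level entries and lets a method-level value replace a class-level one with the same key, which is a choice made for this illustration rather than documented CyBench behavior.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Repeatable;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.LinkedHashMap;
import java.util.Map;

public class MetadataReadSketch {

    // Hypothetical stand-in for @BenchmarkMetaData: a repeatable key/value annotation.
    @Retention(RetentionPolicy.RUNTIME)
    @Target({ElementType.TYPE, ElementType.METHOD})
    @Repeatable(MetaList.class)
    @interface Meta {
        String key();
        String value();
    }

    // Container the compiler uses when @Meta is repeated on one element.
    @Retention(RetentionPolicy.RUNTIME)
    @Target({ElementType.TYPE, ElementType.METHOD})
    @interface MetaList {
        Meta[] value();
    }

    // Class-level entries describe every benchmark in the class.
    @Meta(key = "domain", value = "java")
    @Meta(key = "context", value = "JSON_parsers")
    static class SampleBenchmark {
        // Method-level entries describe this benchmark only.
        @Meta(key = "api", value = "jackson")
        @Meta(key = "actionName", value = "deserialize")
        public void jacksonWithBigJSON() {
        }
    }

    // Merges class-level and method-level entries; a method-level value replaces
    // a class-level value with the same key (an assumption made for this sketch).
    static Map<String, String> metadataFor(Method method) {
        Map<String, String> result = new LinkedHashMap<>();
        for (Meta meta : method.getDeclaringClass().getAnnotationsByType(Meta.class)) {
            result.put(meta.key(), meta.value());
        }
        for (Meta meta : method.getAnnotationsByType(Meta.class)) {
            result.put(meta.key(), meta.value());
        }
        return result;
    }

    // Convenience wrapper that hides the checked reflection exception.
    static Map<String, String> sampleMetadata() {
        try {
            return metadataFor(SampleBenchmark.class.getMethod("jacksonWithBigJSON"));
        } catch (NoSuchMethodException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(sampleMetadata());
        // {domain=java, context=JSON_parsers, api=jackson, actionName=deserialize}
    }
}
```

`getAnnotationsByType` unwraps the container automatically and returns the entries in declaration order, so callers never need to deal with `MetaList` directly.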

There is a set of pre-defined default keys. If these keys are used, their data is visualized in the CyBench Web Application.

- `domain` - domain of the benchmark, a higher abstraction layer which helps to classify benchmarks according to their nature, required execution environment, etc.; for example: `JAVA`, `Ethereum`, `C#`, `Database`, etc.

- `context` - context (or category) represented by the benchmark implementation; the context represents the generic area or scope to which the benchmarks belong, for example: `JSON Parsers`, `IO`, etc.

- `api` - benchmarked implementation (or library) for the selected context, for example: `MappedByteBuffer` (an implementation of IO), `Jackson` (an implementation of JSON Parsers), or the artifact id defined in the `pom.xml` file.

- `version` - version of the benchmark implementation, for example: `1.3.5`.

- `title` - title of the benchmark operation, for example: `File copy`.

- `description` - description of the benchmark implementation, including some details of what it does.

- `isLibraryBenchmark` - flag which defines whether it is a library/framework benchmark or a benchmark of any other functionality.

- `libSymbolicName` - library or framework symbolic name; this could be the artifact identifier defined in the `pom.xml` file (or a combination of group id and artifact id) or the bundle symbolic name from the manifest, for example: `jackson-core` or `com.fasterxml.jackson.core.jackson-core`.

- `libVersion` - version of the benchmarked library, for example: `2.11.2`.

- `libDescription` - short description of the benchmarked library or framework, for example: "Core Jackson processing abstractions (aka Streaming API), implementation for JSON".

- `libVendor` - library or framework vendor name, for example: `FasterXML`.

- `libUrl` - URL of the library or framework website.

- `actionName` - name of the action which is benchmarked using any API, for example: `Read`, `Write`, `ReadWrite`, `Serialization`, `Deserialization`, `Concatenation`, `Add`, `Remove`, etc. This name helps to compare the same actions across different APIs or libraries.

- `dataSize` - size in bytes (or length) of the data item (string, file, array, etc.) used as data in the benchmark implementation.
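Because only the keys listed above are visualized, a misspelled key (for example `libVesion`) silently loses its data in the Web Application. The sketch below is a hypothetical pre-flight check, not part of CyBench: it holds the default key names from the list above and reports any key outside that set. Case-sensitive matching is an assumption.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

public class DefaultKeysSketch {

    // Pre-defined keys as listed in this section (matching is assumed to be case-sensitive).
    static final Set<String> DEFAULT_KEYS = new LinkedHashSet<>(Arrays.asList(
            "domain", "context", "api", "version", "title", "description",
            "isLibraryBenchmark", "libSymbolicName", "libVersion", "libDescription",
            "libVendor", "libUrl", "actionName", "dataSize"));

    // Returns the keys that are not among the pre-defined defaults, in input order.
    static List<String> nonDefaultKeys(Collection<String> keys) {
        List<String> result = new ArrayList<>();
        for (String key : keys) {
            if (!DEFAULT_KEYS.contains(key)) {
                result.add(key);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> used = Arrays.asList("domain", "api", "libVesion", "dataSize");
        System.out.println(nonDefaultKeys(used)); // [libVesion]
    }
}
```

Custom keys are still allowed by `@BenchmarkMetaData`; the check only flags them so that typos in default keys can be spotted before a report is uploaded.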

The purpose of the `@BenchmarkTag` annotation is to tag a benchmark implementation with a UUID so that, even if its signature, method name, or implementation changes, it is still known to be that particular implementation. The value of this annotation must be unique within the project scope. It makes it possible to compare different benchmark reports on the CyBench website.

The benchmark implementation method name is stored in the report and can also be used to compare benchmark results between different reports. However, if the method name or the class name (which is also part of the test name) changes, this comparison is no longer available. `@BenchmarkTag` helps in such situations: even if the method or class signature changes, as long as the benchmark implementation conceptually remains the same, `@BenchmarkTag` should remain the same, and the results stay comparable between different reports.

Note: if Maven is used for the project build process and `@BenchmarkTag` is not present on the benchmark implementation method, the annotation is generated automatically.

The structure of the annotation content is:

```java
@BenchmarkTag(tag = "<UUID>")
```
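Outside Maven builds the tag has to be written by hand. A minimal sketch using only `java.util.UUID`: `newTag` prints a fresh random UUID for a new `@BenchmarkTag`, and `findProblems` checks a list of existing tag values against the two rules stated above (each value is a UUID, and each is unique in the project). Both helpers and the sample values are hypothetical; collecting the tags from real source files is left out.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;
import java.util.UUID;

public class BenchmarkTagSketch {

    // Generates a random (version 4) UUID string to use as a new @BenchmarkTag value.
    static String newTag() {
        return UUID.randomUUID().toString();
    }

    // True if the value is a UUID in canonical 8-4-4-4-12 hex form.
    static boolean isCanonicalUuid(String tag) {
        try {
            return UUID.fromString(tag).toString().equalsIgnoreCase(tag);
        } catch (IllegalArgumentException e) {
            return false;
        }
    }

    // Reports tag values that are not UUIDs or that occur more than once.
    static List<String> findProblems(List<String> tags) {
        List<String> problems = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        for (String tag : tags) {
            if (!isCanonicalUuid(tag)) {
                problems.add("not a UUID: " + tag);
            } else if (!seen.add(tag.toLowerCase(Locale.ROOT))) {
                problems.add("duplicate: " + tag);
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        System.out.println("@BenchmarkTag(tag = \"" + newTag() + "\")");
        List<String> tags = Arrays.asList(
                "813b142a-e8c9-4e6e-b3fb-ad3512517e7b",
                "813b142a-e8c9-4e6e-b3fb-ad3512517e7b",
                "my-benchmark");
        System.out.println(findProblems(tags));
        // [duplicate: 813b142a-e8c9-4e6e-b3fb-ad3512517e7b, not a UUID: my-benchmark]
    }
}
```

A generated tag should be pasted once and then left unchanged, even when the method is renamed; regenerating it would break comparability with earlier reports.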

As the JMH framework is used to execute benchmark implementations, JMH annotations are supported as well. JMH annotations can be placed over the benchmark implementation class or method; if an annotation is placed over the class, its value applies to all `@Benchmark` methods of that class. JMH annotations define the benchmark execution settings and mark the class or method that contains the benchmarked code.

Some JMH annotations:

- `@Benchmark` - marks a benchmark implementation; this annotation is mandatory and must be placed above each method that contains a benchmark implementation.

- `@State(Scope.Benchmark)` - declares the class as a JMH state object; with `Scope.Benchmark`, one instance is shared by all threads running the same benchmark. It is mandatory if the class contains setup (`@Setup`) or teardown (`@TearDown`) methods.

- `@BenchmarkMode(Mode.Throughput)` - defines the benchmark mode, e.g. throughput measured over continuous calls (`Mode.Throughput`) or the time of a single call (`Mode.SingleShotTime`) (placed above class or method).

- `@OutputTimeUnit(TimeUnit.SECONDS)` - defines the time unit used to report the score, for example operations per second or per microsecond (placed above class or method).

- `@Fork(1)` - defines the number of forks (separate JVM processes) created during benchmark execution (placed above class or method).

- `@Threads(1)` - defines the number of threads that run the benchmark within the JVM (placed above class or method).

- `@Measurement(iterations = 2, time = 5, timeUnit = TimeUnit.SECONDS)` - defines the measurement phase settings: the number of iterations, the duration of each iteration, and its time unit; in this example the benchmark method is called continuously for 5 seconds, and this is repeated 2 times (placed above class or method).

- `@Warmup(iterations = 1, time = 1, timeUnit = TimeUnit.SECONDS)` - defines the warmup phase settings in the same way; in this example the benchmark method is called continuously for 1 second, once (placed above class or method).
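The fork, warmup and measurement settings together determine roughly how long one benchmark method runs. A back-of-the-envelope sketch, assuming every iteration lasts exactly its configured time and ignoring JVM startup, `@Setup`/`@TearDown` and other JMH overhead: with the values above, one method needs at least 1 × (1 × 1 s + 2 × 5 s) = 11 seconds. The `minimumMillis` helper is hypothetical and not part of JMH or CyBench.

```java
import java.util.concurrent.TimeUnit;

public class RunTimeEstimate {

    // Lower bound on wall-clock time for one @Benchmark method:
    // forks * (warmup iterations * warmup time + measurement iterations * measurement time).
    // Ignores JVM startup, setup/teardown and other JMH overhead.
    static long minimumMillis(int forks,
                              int warmupIterations, long warmupTime, TimeUnit warmupUnit,
                              int measurementIterations, long measurementTime, TimeUnit measurementUnit) {
        long warmup = warmupIterations * warmupUnit.toMillis(warmupTime);
        long measurement = measurementIterations * measurementUnit.toMillis(measurementTime);
        return forks * (warmup + measurement);
    }

    public static void main(String[] args) {
        // @Fork(1), @Warmup(iterations = 1, time = 1), @Measurement(iterations = 2, time = 5), in seconds
        long millis = minimumMillis(1,
                1, 1, TimeUnit.SECONDS,
                2, 5, TimeUnit.SECONDS);
        System.out.println(TimeUnit.MILLISECONDS.toSeconds(millis) + " s"); // 11 s
    }
}
```

The estimate grows linearly with `@Fork`, so raising forks is the most expensive way to increase confidence; `@Threads` does not lengthen the run because the threads execute concurrently within each iteration.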

For more JMH usage details see this JMH tutorial.

Example of a Java class that contains CyBench annotations:

```java
import com.gocypher.cybench.core.annotation.BenchmarkMetaData;
import com.gocypher.cybench.core.annotation.BenchmarkTag;
import org.openjdk.jmh.annotations.*;
import org.openjdk.jmh.infra.Blackhole;

@State(Scope.Benchmark)
@BenchmarkMetaData(key = "isLibraryBenchmark", value = "true")
@BenchmarkMetaData(key = "context", value = "JSON_parsers")
@BenchmarkMetaData(key = "domain", value = "java")
@BenchmarkMetaData(key = "version", value = "1.3.5")
@BenchmarkMetaData(key = "description", value = "Serialization/deserialization of object/JSON (in memory)")
public class JsonLibraryBenchmark {

    // BigJson and JacksonDeserialize are @State classes of the benchmark project (not shown);
    // JMH only accepts @State objects and infrastructure objects such as Blackhole as parameters.
    @Benchmark
    @BenchmarkTag(tag = "813b142a-e8c9-4e6e-b3fb-ad3512517e7b")
    @BenchmarkMetaData(key = "api", value = "jackson")
    @BenchmarkMetaData(key = "libVendor", value = "com.fasterxml.jackson")
    @BenchmarkMetaData(key = "libSymbolicName", value = "com.fasterxml.jackson.core.jackson")
    @BenchmarkMetaData(key = "libUrl", value = "https://github.com/FasterXML/jackson")
    @BenchmarkMetaData(key = "libVersion", value = "2.11.2")
    @BenchmarkMetaData(key = "libDescription", value = "Core Jackson processing abstractions (aka Streaming API), implementation for JSON")
    @BenchmarkMetaData(key = "dataSize", value = "440203")
    @BenchmarkMetaData(key = "actionName", value = "deserialize")
    @BenchmarkMetaData(key = "title", value = "Deserialization of JSON")
    public Object jacksonWithBigJSON(BigJson json, JacksonDeserialize impl, Blackhole bh) {
        // JSON deserialization using Jackson goes here (implementation not shown).
        // Return the deserialized object: JMH consumes returned values, which prevents dead-code elimination.
        Object result = null; // placeholder for the deserialized object
        return result;
    }
}
```