Cloud Flow Coding Standards - MattCollins-Jones/PowerAutomateArtemisFramework GitHub Wiki

Introduction

The purpose of this guide is to provide coding standards to be used in Power Automate. This will cover creation of flows, layout/design, coding, naming conventions and more. This is a living document and will change over time as new features are implemented or better standards are defined. As with anything, peoples opinions may differ and I've taken feedback and updated this based on their ideas. I'm happy to have discussions about improvements to this framework.

Flow Creation

Flows should be created inside a solution wherever possible. This will enable ALM using modern tooling. Solutions do require Dataverse environments. When creating a flow, the flow should be named:

- Trigger Table Name (CUD) – Description of flow

This format allows makers to see all flows triggered from a specific table, this is extremely useful when troubleshooting. Adding in the table suffix of (CUD) Create, Update, Delete; also allows people to see under what circumstances a flow triggers. This allows you to skip over flows that only trigger on delete, if you know the flow you are looking for runs on create.

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| Events (C) – When event created, add to events calendar | Can identify the trigger table and trigger event, also what the flow then does | when a row is created, updated or deleted, add a row | Does not identify the table or trigger, so no idea where this is triggered from inside an app unless you click into the flow |

| Manual Trigger – List flows in Environment | Identifies this is a manual trigger and the flow action | Button->List flows in Environment, Compose | Poorly formatted, include actions automatically in the name that aren't relevant to the whole flow |

If data sources other than Dataverse are used, consider including a prefix of the data source. E.g.

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| EX Forms Response (C) – When response added, email account manager | Identifies the data is stored in Excel via Microsoft Forms | When a Response is added, email account manager | Does not identify the data source |

| SQL Scheduled flow – Execute Stored Procedure, get Contacts | Identifies SQL as a data source and that this is a flow that runs on a schedule | Execute stored procedure, get contacts, do this nightly | Does not identify the data source, and the cadence of the flow is obscured at the end of the name, instead of at the start |

This is also useful if your solution contains a mix of flows from various data sources.

You should also consider the size of your organisation and how many apps will be developed in a single environment. If a large organisation will create multiple apps in a single environment, it might be best to prefix a flow the the App they are triggered from or belong to E.g.

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| EA (CU): Events – When event created or updated, add to events calendar | EA is the acronym for Events App, this identifies which app this is connected to | Events – When event created, add to events calendar | Identifies the table this flow runs from, but not the application |

| SA (U): Opportunity - When Opp estimated closes in the past, create a task for owner | Identifies the app as the Sales App that this flow connects to. The Opportunity table may be used across multiple applications, but this may only be triggered from this application | All Applications - Opportunity - When Opp estimated closes in the past, create a task for owner | States all apps use this flow, but if that is the case, the prefix of application is not needed |

| CA: Power Apps - Create ICS file for download | Identifies this flow running from the Calendar App | Create ICS file for download | Does not identify the application or the source |

In the above examples, EA = Event App, SA = Sales App, CA = Calendar App.

Child flows, triggered from Parent flows, should be labeled as such so that you can quickly Identify them. E.g.

- Child - Retrieve data from API

After a flow is created, the description in the flow details screen should be updated. This should include the date, a version number, the person who created/update the flow and the purpose. E.g.

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| 22/12/22 MCJ V1.0 – Initial Release | Date formatted, initials of person that did the work, small note of work completed. | Today - created flow | No date of when the flow was finished, no initials of person that created it. |

| 15/01/23 MCJ V1.1 - Updated error handling, added notifications #12 #32 | Includes links to Jira/ADO tickets, that contain more information of what is features were added to the flow | Blank | No details of what the flow does, who built it, when it was edited etc |

This does not need to be done for every update, but for major changes to a flow that will be deployed to production, it is a good idea to update this. The Description field does have a limited number of characters, so if you reach this limit, considered updating previous entries to be more succinct or moving your version history to a Compose Step inside the flow itself. A compose step will consume an API call when the flow is run, meaning for high volume flows, this will not be ideal and updated the description would be a preferred method.

If you are using Azure Dev Ops, Jira or another tool for agile development, you can include the story/PBI reference in the description, as the ticket may contain more information than you can fit inside of the description. This allows developers to understand the reason for the flow, the change to the flow or back ground information that might be relevant if more changes or support is required. Furthermore, you can create flows that pull descriptions of flows into Azure DevOps Wiki pages, so using numbers formatted as #1234 translates to user story titles.

If this is a Child flow or a Parent flow, it is a good idea to put the name of the Child/Parent flow in the description. This saves time by preventing people from having to open Flows and find where a child flow is triggered to get the name, or search round looking for which parent flow triggers the child flow. If you have a large number of Parent flows, you may also consider putting "Parent" in the flow name to save time.

Flow Trigger

Flows can be triggered based on events in data sources, manually, on a schedule or from another flow. Limiting the number of runs of a flow will reduce the API calls, reduce load on servers and make your applications run better. You should implement Trigger Conditions or Filter Rows, depending on your data source.

Each data source is different, so filtering the trigger will be dependent on that. Dataverse has Filter Rows on the main trigger screen, whereas SharePoint does not, but you can implement a Triggering Condition by going to the settings of the trigger and writing the oData expression there. As Triggering Conditions are not visible unless you look at the settings for a trigger, when a Filtering Rows (or similar) is available, this should be used; when a Triggering Condition is used, a Note should be added to the trigger with details of the condition. The Flow trigger should be renamed to describe when the flow will trigger. E.g.

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| When an account record is created | Keeps details that the trigger is based on a create, update or delete trigger and identifies the table | When a row is created, updated or deleted | Does not identify the table this is triggered from |

| When a new Invoice is uploaded | Details the action the person is doing, outside of a CUD action and the type of document | When a file is created in a folder (deprecated) | Doesn't detail the type(s) of files created and uses a deprecated trigger |

| When a contact’s telephone number is updated | Identifies the table and the column that triggers the flow | Contact Telephone | Too generic, and doesn't specify the CUD action |

Flow Actions

Flow actions should be renamed as they are being created. If you write an expression manually and then change the name of an action later, you will need to update that expression in every place it is being used. This is not the case for dynamic content, but this practice should be followed regardless. An action should be renamed while still indicating the type of action that it is. As there is no way to define what an action is, a portion of the original name should remain. E.g.

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| List Rows – List Account Records | Identifies the action and the table the action is performed on | List Rows | Does not Identify the table |

| Get Row – Get Contact Record | Identifies the action and the table the action is performed on | Find Contact Record | Does not maintain the action used |

| Update Row – Update Opportunity record with customer data | Maintains action, identifies table and details what the action will do | Change Opportunity to include Account Number, Telephone Number, address... | Doesn't maintain the action name and includes too much information |

Notes can be used on actions to explain where this action is being used elsewhere in the flow; this is particularly useful if you have large flows and are unsure if altering this action, could have consequences elsewhere. You can also use notes to copy the original action name, if you need to rename the action extensively but want to maintain a record of what the action used is.

Comments are less visible than notes, as comments are the Microsoft 365 collaboration-esque design, which are stored in a Dataverse table. Not only does this mean you need to have a Dataverse environment to use them, but there are no visual indicators of a comment unless your click on Comments, so Comments can be used for collaborating on a flow, but Notes should be used for useful information about the action. Comments are also left behind in the developer/previous environment, as they are stored in a table and Dataverse solutions to not include data, Notes are part of a flow definition and are always brought through.

Variables

Variables need to be initialised before they can be used, this should be done at the start of a flow. The name of the variable should be prefixed “var” and then the name of the variable. E.g.

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| varUserID | Prefix identifies it as a variable, description identifies it's the user's ID | User | Unclear that it this is a variable and that this is an ID for a user |

| varCountOfRows | Prefix identifies it as a variable, description identifies it as a number | IntRows | Uses data type variable and doesn't specify this is a count of the rows |

Although they do appear in their own section when picking dynamic content, prefixing these will help when writing expressions that include variables. Coding languages like JavaScript and CSS have typically used var for declaring variables as these are functions. Some coding languages prefix with the data type. While prefixing with data types is a good idea in some instances (See Environment Variables), prefixing with var easily identifies it as a variable and is consistent with approaches in JS and in some best practices instances, Power Fx. Giving all variables the same prefix would allow for intellisense to find them easily, so while this isn't in the product today, it might be in the future. With these variables being at the top of the flow, it is easy to open them and find their datatype without the need for them to be prefixed with it.

Variables need to be initialised first before they can be used, they cannot be initialised in a Scope or certain other controls. Therefore, it is best practice to initialise all your variables at the top of the flow, grouped together.

Scope

Scopes allow you to group several actions together, this can help users quickly and easily navigate around a flow, especially larger flows. As actions are grouped, a Scope can enable a Try-Catch error handling method, without the need to configure this per action. Your first scope contains a series of actions where you are trying to create/update/delete data, the following scope contains error handling (logging to a Dataverse table/Azure table/SharePoint List, sending emails/notifications etc) and this step only runs if the previous scope fails or times out.

If possible, you should split your Scopes to relevant actions or logical actions and use Try-Catch on these. For example, a Scope that calls an API and retrieves data, then a Scope that writes the data to a Dataverse Table. This allows you to easily see which set of actions failed, if it’s the API you can investigate that, else if it’s the Dataverse table, you can investigate that; no need to open up the scope and look through a dozen or so actions to find the failure.

Expressions

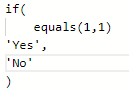

Expressions can be written using formulas to manipulate data, do data validations and write conditions. It is recommended to use the experimental editor wherever possible (Experimental at the time of writing this) so that you can easily read and update the Expression over multiple lines, instead of a single line.

When using this, it is possible to indent your code for easier reading. You cannot do this on the standard editor.

Becomes:

While the above example is basic, when you have bigger expressions or nested IF statements, it can easily become difficult to understand the code without indentation.

Keyboard shortcuts are available to indent code:

- Ctrl + ] – Increases the indent

- Ctrl + [ - Decreases the indent

Each Action in Power Automate consumes an API call, so if you use an action like Convert time zone, instead of the function that does the same thing, you are increasing your API calls per flow and could lead to throttling for bigger flows/environments/organisations. To reduce API calls, Expressions should be written directly in the Action being used for the Expression.

However, sometimes this does not make it easy to understand or to update. For example, you are building a FetchXML query which could contain multiple columns and values and you need to get the column, see if it’s blank, if it’s not then add the value and concatenate the FetchXML and then insert that into a List Rows action for Dataverse. Putting all that as several expressions in a single action would be difficult to maintain. Breaking them into smaller Compose steps and then adding just the output of those compose actions to your List Rows Action, would be better.

if(or(equals(body('Parse_JSON')?['Payload']?['Widgets'],null),equals(body('Parse_JSON')?['Payload']?['Widgets'],'')),null,concat('<condition entityname="Widget" attribute="mcj_widget" operator="eq" value="',body('Parse_JSON')?['Payload']?['Widgets'],'" />'))

Additionally, you can avoid unnecessary Apply to Each loops using expressions, as they look messy and it’s harder to maintain. If you only have a single row coming from an array, consider an expression like:

first(outputs('List_Rows_Action_Name')?['body/value'])?['contactid']

API Calls

When designing a flow that will be used in an organisation, you should consider the API calls inside a flow. Building several large flows that runs thousands of times a day and have hundreds of actions, will very easily take an organisation over its API entitlement and can lead to throttling or additional costs.

Each action in a flow is 1 API call, so reducing the number of actions, as mentioned in the previous section, is good practice. Apply to Each loops are a good example of how API calls can increase significantly.

If you have an Apply to each loop with 5 actions inside of it, and you loop through 10 records, the API calls for the Apply to each loop will be 50.

Error Handling and logging

If a Flow is critical to an organisation, it should be created with Error handling. Error handling is the process of creating a method to do something when the flow errors. This could be sending an email or a Microsoft Teams/push notification, creating a record or retrying the same action later. However, you decide to do the error handling, you should ensure it is robust and covers all the actions in a flow.

As mentioned in the Scope section, Scopes can be used to group actions together, so when creating error handling, it is simple to catch an error if one occurs inside a scope and then perform an action. You should split your scopes and error handling into logical sections, so 1 scope for calling an API, 1 scope for creating record, 1 scope for Uploading Documents to a Document library, and 1 scope for each of these scopes so a total of 6 scopes. Each error handling scope should have a similar name to the action scope. E.g., ScopeAPI and ScopeAPIFailed.

Errors should be logged somewhere for admins to review flows that have error'd/failed/cancelled/timed out. You can store flow runs in Dataverse or an Azure table and for more business critical flows, it might be worth setting up notifications from push notifications on phones to emails. This practice should be used sparingly, as an influx of notifications or emails will tend to be ignored, so keep this for business critical flows that need to be reviewed quickly, for all other flows, a dashboard that can be viewed periodically is the best idea.

There is a preview feature that will store flow runs in Dataverse and this may supersede the above paragraph once it is generally available.

Environment Variables

Environment Variables should be used parameter keys and values to be consumed in Flows or Apps to ensure healthy ALM processes. This should be things like simple configuration data, data sources where these are configurable like SharePoint, or other information that could be useful in flows. Environment variables should not be used to store passwords, API keys or other private keys. These should be handled in other technologies including but not limited to Azure Key Vault. However, storing the name of a key, then retrieved through the Key Vault connector is fine, but other methods like the Dataverse bound action, are also available.

Unlike the Variables section of these coding standards, Environment Variables should be formatted with a prefix of the data type and a description. Environment Variables values are not easily accessible in a flow or in solutions if they have been removed before deployment (although they can be found in the Dataverse tables or Default Solution) so this helps makers identify the variable they will be working with.

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| StrTestEmail | Identifies the data type being String, description indicates this is a test email address | Data type is implied but not explicit, no details of what the email is for | |

| JSNConfig | Identifies this Environment Variable as being JSON, description indicates this is configuration data | varConfig | Does not identify the data type so will need to be reviewed before it can be used |

Using JSON to create configuration data that can be used across multiple flows is useful, as it prevents the creation and maintenance of multiple environment variables to do the same thing. E.g.

{ "SendEmail":true, "APIVersion":"1.12.3.2", "TestEmail":"[email protected]", "TestAge":32, "LiveOrTest":"Live" }

The above example prevents the need for 5 different Environment Variables to be created. The JSON can be parsed at the start of each flow or a function can be written to extract the values, but parsing the JSON is easier for most users and makes building and maintaining the flow more accessible. Writing an expression to get a value from this object, use:

parameters('JSNObject (mcj_JSNObject)')?['APIVersion']

Service Accounts and Service Principals

Service Accounts or Service Principals should own all flows that are critical to business usage. Named user accounts should never be used to own a Flow. This is due to people leaving the business and the extra administration involved in finding these flows and changing the ownership and connections.

If the Service Account or Service Principal (application user) owns the flow, they also need (not technical limitation, but a best practice) to create/own the connections, where possible. You can share the connections with the Service Account/Service Principal, but if the person leaves, the connection will need to be recreated, so should still be avoided.

You can create an Application User in Dataverse and Synchronise it with a Service Principal and have that account own the flows, this allows the flows to consume API calls at a higher volume than the per user accounts API. However, having an Application User (Service Principal) own a Flow, means that the flow will require a Power Automate Process license, as there is no actual user owning this, therefore it is unlicensed. Flows that require a higher API threshold will need a Power Automate Process license regardless, it’s important to consider this when architecting your flow patterns and license requirements.

Flows that run in the context of a Dynamics 365 Application, where Dynamics 365 Enterprise or Professional licenses have been purchased, can be owned by Service Principals/Application Users without requiring additional licenses and have a higher API limit.

Most larger organisations, where a Power Apps Premium License is purchased for most/all users in an organisation, a combination of Service Accounts and Service Principals should be used. Service Accounts should own the flows (in the context of an App, so licensing is adhered to) while the connections inside the flows should be Service Principals, where this connector can use Service Principals, for example Dataverse supports Service Principals whereas SharePoint does not.

This method would provide the greatest benefit for small to medium organisations, as a Service Principal is more secure than a user account and can be unaffected by MFA but runs in the context of a Power Apps Premium license. The flow will consume the Service Accounts API limits, so for large organisations with large flows that consume a lot of API request per run, consider Power Automate Process licenses and Service Principal Owners. Large to Enterprise organisations, where API throttling is a concern and cost is not, should consider the switch to Service Principals to own the flow and connections. This option is often too pricey for smaller organisations but large to enterprise will mostly be using premium features and may require tens of thousands of flow runs; these will likely outweigh the additional costs. The below table should be used as a guide only when considering flow account architecture.

The table above has several squares that are neutral, meaning these can be used if cost or other factors are not an issue, but likely the recommended alternative will be used instead. A combination of the recommended and acceptable can also be achieved in some circumstances, if the architecture, including cost, security, scalability have all been considered. This is a guide only, it should not be used to prove or disprove licensing and when in doubt, always check with Microsoft or a Microsoft Partner for licensing and architecture adherence.

For organisations where flows in an environment would connect to multiple data sources, some which can be connected to via a Service Principal and some where they cannot, taking a Service Account only approach might be the best solution for that particular flow for both connections and ownership.

Service Principals should be carefully named, as with any component of Power Automate or Code. Below are some examples of good and bad naming conventions for Service Principals.

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| SvPr-Dataverse-Event | Identifies it as a Service Principal for Dataverse and an Events Application | SvPr-Dataverse | Does not identify which application or environment this Service Principal will be used for |

| SvPr-Dataverse-HO-Asset | Identifies the business area using this Service Principal as “HO” or Head Office. Good for larger organisations | SvPr-DV-HO-ASS | Too many acronyms, might be difficult to decipher for new people. |

Service Accounts will usually be created and follow some similar naming convention to the organisation. If the organisation does not have a naming convention, the below tables are some examples of how Service Accounts can be named for Power Platform Projects

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| SvAcc-UKSales PPAdmin | Identifies it as a Service account and the business unit the account belongs to. | Service-Account Admin | Generic, does not detail who the account is for or any technology. |

| SvAcc-UK D365 Admin | Identifies the business area unit and technology. | SvAcc-UKSales TimeSheet Proj - PPAdmin | Too much detail, project specific should be avoided. |

Connection References and Connections

Connection References should be used over Connections when creating flows. Connection References allow you to build a flow and point to a connection, but not store the connection (credentials) in the flow itself. When you deploy your solution to your UAT, Test, Pre-Prod or Production environments, you will be prompted to create a connection in that environment or select an existing one. This allows you to maintain a separation between environments, ensuring they are connecting to the right data, with the correct permissions each time.

There is no limit to the uses of Connection References, a single Connection Refence can be used many times across multiple solutions in an Environment. You may want to consider using multiple Connection References with different Service Accounts/Service Principals creating the connections, across multiple projects. Separating these can help identify flows for troubleshooting purposes if you know which solutions/flows use which credentials for the connection.

e.g., Multiple projects use the Accounts table in Dataverse, a flow is updated records multiple times incorrectly. The audit log would show the user comes from the Sales Project, not the Customer Success or Customer Service Project. Reducing the flows to look through from 100, to 10.

Flows in solutions will use Connection References by default but flows outside solutions will use Connections. If moving a flow from outside a solution to inside a solution, a Connection Reference should be created to replace the connection.

A similar naming convention should be adopted for Connection References like Service Principals. It is important to name connection references, as several can be created easily with multiple developers working on a project. Often all of these are not needed and can be consolidated for easier deployment and management of solutions.

| Good Example | Good Reason | Bad Example | Bad Reason |

|---|---|---|---|

| SvPr-Dataverse-Event-2142 | Identifies the connection Service Principal for Dataverse and an Events Application and a project number. | SvPr-Dataverse | Does not identify which application, environment or project this connection reference will be used for. |

| SvAcc-Dataverse-Asset92512 | Identifies the connection as a Service Account, the technology and the solution/application it will be used for. | SvAcc-Asset | Does not identify the technology, making it difficult to understand what the connection reference is for when looking via a solution. |

5 random numbers and letters will be appended to the end of a Connection Reference automatically. While I’ve not included them in the examples above, you can leave them for ease or clean them up as you go, it will not have a significant impact on your flow design.