Learning Guide: Sound and Subtractive Synthesis - JoshuaStorm/WebSynth GitHub Wiki

Note

This page is meant to give you a "working understanding" of synthesis concepts and is certainly not an end-all, be-all guide. If any concept is unclear to you, I recommend breaking out the blessed search engine.

Oscillators

An oscillator is something that moves back and forth between two points in a periodic fashion. In our environment, oscillators simply oscillate between two signal values (usually -1 to 1, sometimes 0 to 1, sometimes -0.5 to 0.5, etc.).

A pendulum swinging between two points in space is oscillating between its two extremes. A sine oscillator in p5.js oscillates between the two extremes of its output signal, -1 and 1. However, oscillators are defined by more than just their end points; they also have a waveshape. Below are the four classical waveshapes: sine, sawtooth, square, and triangle.

Each of these waveshapes has different harmonic content which you can learn all about, but for now just know they each sound different.
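To make the four shapes concrete, here is a minimal Python sketch (purely illustrative; p5.js builds its oscillators for you, and these function names are hypothetical) that computes each waveshape's value at a given phase, where phase runs from 0 at the start of a cycle to 1 at the end. These are "naive" shapes: the instant jumps in the sawtooth and square contain harmonics too high for a computer to reproduce cleanly, so real digital synths usually use smoothed, band-limited versions.

```python
import math

def sine(phase):
    """Smooth curve between -1 and 1."""
    return math.sin(2 * math.pi * phase)

def sawtooth(phase):
    """Ramps linearly from -1 up to 1 over one cycle, then jumps back down."""
    return 2.0 * (phase % 1.0) - 1.0

def square(phase):
    """Sits at 1 for the first half of the cycle and at -1 for the second half."""
    return 1.0 if (phase % 1.0) < 0.5 else -1.0

def triangle(phase):
    """Ramps from -1 up to 1 over the first half, then back down to -1."""
    p = phase % 1.0
    return 4.0 * p - 1.0 if p < 0.5 else 3.0 - 4.0 * p

# Sample each shape at four points in one cycle.
for p in (0.0, 0.25, 0.5, 0.75):
    print(p, round(sine(p), 3), sawtooth(p), square(p), triangle(p))
```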

Fundamentals and Harmonics

A fundamental is the primary frequency of a sound. If I set a sawtooth oscillator to play at 440Hz (standard A), I am telling it to set its fundamental to 440Hz.

Harmonics are frequencies above the fundamental, at whole-number multiples of it (for a 440Hz fundamental: 880Hz, 1320Hz, 1760Hz, and so on), that give the sound its character.

There is a lot to learn about harmonics, but here is a brief rundown:

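Sine waves are nothing but a fundamental. They have no harmonics.

Sawtooth waves have all harmonics in the harmonic series.

Square waves have only the odd harmonics.

Triangle waves also have only odd harmonics, but they lose amplitude faster (each harmonic n is 1/n² as loud as the fundamental, versus 1/n for a square wave).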

Ultimately, all of these sounds are just a combination of a fundamental and harmonics, each of which is itself a sine wave. A cool visualization of this can be found here

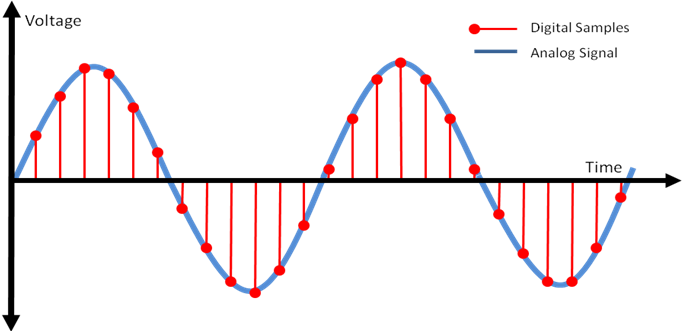
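We can check this numerically with additive synthesis: summing sine waves at the harmonic numbers and amplitudes listed above. The Python sketch below uses the standard Fourier-series amplitudes for each shape (the factors 2/π, 4/π, and 8/π² only scale the peak to about 1, and some harmonics have a flipped sign). The function names are hypothetical and this is an illustration, not part of WebSynth. With a finite number of harmonics the result is an approximation that gets closer as you add more.

```python
import math

def additive(phase, partials):
    """Sum sine waves. `partials` is a list of (harmonic number n, amplitude)."""
    return sum(amp * math.sin(2 * math.pi * n * phase) for n, amp in partials)

def sawtooth_partials(n_max):
    # Every harmonic n, amplitude 1/n (sign alternates).
    return [(n, (2 / math.pi) * (-1) ** (n + 1) / n) for n in range(1, n_max + 1)]

def square_partials(n_max):
    # Odd harmonics only, amplitude 1/n.
    return [(n, (4 / math.pi) / n) for n in range(1, n_max + 1, 2)]

def triangle_partials(n_max):
    # Odd harmonics only, amplitude 1/n**2 (sign alternates).
    return [(n, (8 / math.pi ** 2) * (-1) ** ((n - 1) // 2) / n ** 2)
            for n in range(1, n_max + 1, 2)]

# A quarter of the way through a cycle the ideal shapes are at:
# sawtooth 0.5, square 1.0, triangle 1.0. The sums approach those values.
for name, partials in [("sawtooth", sawtooth_partials(199)),
                       ("square", square_partials(199)),
                       ("triangle", triangle_partials(199))]:
    print(name, round(additive(0.25, partials), 3))
```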

How computers make oscillations

In the real world, sounds are continuous. An analog oscillator can produce a perfectly smooth sine wave. A computer (and in turn, a digital oscillator) cannot: at some level a computer's processing is based on bits, which are discrete. Because of this, signal processing on a computer consists of storing samples of a sound, commonly 44,100 of them per second (44,100 Hz). Each sample tells your speaker or headphone driver what position to be in. When you play the samples back at the same rate they were taken, you get nearly the same sound because the sample rate is so high: a signal sampled at 44,100 Hz can represent frequencies up to half that rate (22,050 Hz), which is above the roughly 20kHz limit of human hearing.
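Here is what "storing samples" looks like in practice: a Python sketch (illustration only; p5.js and the browser handle this for you) that computes a 440Hz sine wave at an assumed sample rate of 44,100 Hz. Sample number n sits at time n / 44,100 seconds, so each sample is just the sine function evaluated at that instant. The `render_sine()` name is hypothetical.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed; 48,000 is also common)

def render_sine(freq_hz, duration_s, sample_rate=SAMPLE_RATE):
    """Return samples (each between -1 and 1) of a sine wave."""
    n_samples = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate) for n in range(n_samples)]

samples = render_sine(440.0, 0.01)     # 10 milliseconds of standard A
print(len(samples))                    # 441
print(SAMPLE_RATE / 440.0)             # samples per cycle, a little over 100
print(SAMPLE_RATE / 2)                 # highest representable frequency: 22050.0
```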

In order to reproduce these sounds so we can hear them, the samples are sent to a digital-to-analog converter (DAC), which converts the discrete samples into a continuous, analog signal by interpolating between them.

How p5.js produces these samples for us is beyond our layer of abstraction, but know that it is inherently based on the above concept.

Pitch/Frequency

The terms pitch and frequency of a sound are generally interchangeable; in most cases I will refer to it as frequency. Traditional musicians generally refer to it as pitch.

The frequency of a sound is the number of times it oscillates per second, measured in Hertz (Hz). One oscillation is one full cycle of the waveshape. For example, each of the classical waveshapes in the picture above is drawn for 3 oscillations.
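The definition above translates directly into code: count how many full cycles happen per second. Here is a rough Python sketch (the helper name is hypothetical) that counts rising zero crossings, since a simple wave like a sine crosses zero going upward once per cycle. It is only an illustration; on real recordings, noise and strong harmonics can add extra crossings and throw the count off.

```python
import math

def estimate_frequency(samples, sample_rate):
    """Estimate frequency by counting rising zero crossings (one per cycle)."""
    rising = sum(1 for prev, cur in zip(samples, samples[1:]) if prev < 0 <= cur)
    duration_s = len(samples) / sample_rate
    return rising / duration_s

SR = 44_100
one_second = [math.cos(2 * math.pi * 440 * n / SR) for n in range(SR)]  # 1 s of a 440Hz wave
print(estimate_frequency(one_second, SR))  # 440.0
```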

Humans can hear frequencies between roughly 12Hz and 28kHz, but this is generally simplified to 20Hz to 20kHz. Each individual's hearing range is different, but more often than not hearing loss occurs in the upper spectrum. For example, my hearing drops off around 16kHz. You can test your own here

MIDI notes

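MIDI notes are a way of referring to traditional Western music frequencies in a compact manner: each note is a whole number, one per semitone. These numbers also mirror the keys on a keyboard (middle C is note 60, and the A above it, 440Hz, is note 69).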
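In standard equal temperament, going from a MIDI note number to a frequency is a short formula: every 12 notes (one octave) doubles the frequency, and note 69 is A at 440Hz. Below is a Python sketch of that formula and its inverse. The function names are hypothetical here; p5.sound ships similar helpers, `midiToFreq()` and `freqToMidi()`.

```python
import math

def midi_to_freq(note):
    """Equal-tempered frequency in Hz; MIDI note 69 is A4 = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)

def freq_to_midi(freq_hz):
    """Inverse of midi_to_freq; may return a fractional note number."""
    return 69 + 12 * math.log2(freq_hz / 440.0)

print(midi_to_freq(69))            # 440.0
print(midi_to_freq(81))            # 880.0 (one octave up)
print(round(midi_to_freq(60), 2))  # 261.63 (middle C)
print(freq_to_midi(220.0))         # 57.0 (one octave below A4)
```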

Amplitude, envelopes, and ADSRs

Amplitude is how far a signal swings away from zero; we hear it as the volume of a sound.

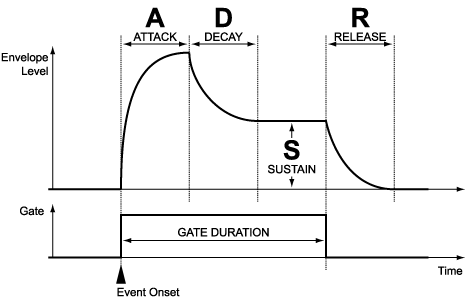

However, the amplitude of a sound is not constant. When I slam my car door, the sound does not persist for eternity; its volume changes over time, and that change can be described by an envelope. A simplified envelope is shown below.

This is what we refer to as an ADSR envelope. In reality, an envelope could be defined by any curve or function, but a common way to do this in synthesis is with an ADSR.

A refers to attack. This is the time it takes for the sound to reach its peak amplitude.

D refers to decay. This is the time it takes for the sound to drop to its sustain level after it has reached its peak.

S refers to sustain. This is how loud a sound will stay if you hold a note. This is a common source of confusion because all other letters of the ADSR describe times, while sustain actually refers to an amplitude.

R refers to release. This is the time it takes for the sound to fade to silence after you let go of the note.

Remember, an envelope is really just a signal. An envelope can be used to affect different parameters in synthesis; it is just most commonly used to shape a sound's amplitude.
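Since an envelope is just a signal, we can write it as a function of time. Here is a minimal Python sketch of a linear ADSR, assuming straight-line segments (many synths use curved ones) and a hypothetical `adsr()` function whose attack, decay, and release are in seconds and whose sustain is a level from 0 to 1. If the note is released before the attack or decay finishes, the release starts from wherever the envelope currently is.

```python
def adsr(t, note_off, attack, decay, sustain, release):
    """Envelope level (0..1) at time t seconds, for a note released at note_off."""
    def held_level(time):
        # Level while the key is held down: attack, then decay, then sustain.
        if time < attack:
            return time / attack
        if time < attack + decay:
            return 1.0 - (1.0 - sustain) * (time - attack) / decay
        return sustain

    if t < 0:
        return 0.0
    if t < note_off:
        return held_level(t)
    # Release: fade linearly to 0 from the level at the moment the key came up.
    start = held_level(note_off)
    elapsed = t - note_off
    if elapsed >= release:
        return 0.0
    return start * (1.0 - elapsed / release)

# Envelope levels every 0.1 s for a note held for 1 second:
levels = [adsr(step / 10, note_off=1.0, attack=0.1, decay=0.2, sustain=0.6, release=0.5)
          for step in range(20)]
print([round(level, 2) for level in levels])
```

To apply it, multiply each oscillator sample by the envelope level at that sample's time.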

Below is a more accurate representation of the ADSRs we use.

Filters

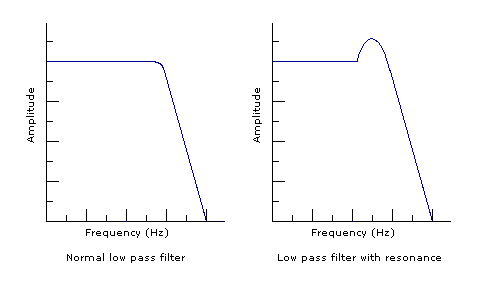

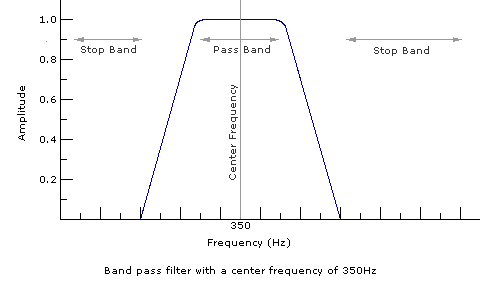

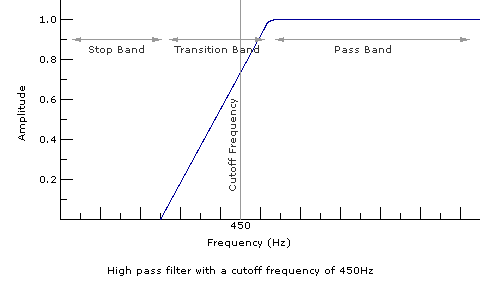

Filters take away some of the harmonics (more generally, some of the frequencies) of a sound. There are three common forms of filters:

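Lowpass - takes away the higher harmonics of a sound, letting frequencies below the cutoff frequency pass

Bandpass - takes away all but a 'band' of harmonics around a center frequency

Highpass - takes away the lower harmonics of a sound, letting frequencies above the cutoff frequency pass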

Resonance can be thought of as a boost in amplitude at the frequencies right around the cutoff, the point where the filter begins to drop off.

An example lowpass filter can be heard here. It is also important to note that, while the filters shown above have "perfect" slopes, many real-world filters have their own subtle characteristics. This is why you'll hear people talk about the "Moog filter" sound versus a "Steiner-Parker style filter" sound.
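To see lowpass filtering and resonance in numbers, here is a Python sketch of a resonant lowpass built as a "biquad" using the widely used formulas from Robert Bristow-Johnson's Audio EQ Cookbook. This is one common digital design, chosen for illustration; it is not a model of a Moog or Steiner-Parker filter, and I'm not claiming it is exactly what p5.js does internally. The `q` parameter sets the resonance: in this design the gain at the cutoff frequency equals `q`, so a `q` of about 0.707 gives a flat response with no bump, and larger values give a peak. All function names are hypothetical.

```python
import math

def lowpass_biquad(samples, cutoff_hz, q, sample_rate=44_100):
    """Resonant 2-pole lowpass (Audio EQ Cookbook biquad, Direct Form I)."""
    w0 = 2 * math.pi * cutoff_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha
    # Coefficients, normalized by a0.
    b0 = b2 = (1 - cos_w0) / 2 / a0
    b1 = (1 - cos_w0) / a0
    a1 = -2 * cos_w0 / a0
    a2 = (1 - alpha) / a0

    out = []
    x1 = x2 = y1 = y2 = 0.0  # previous two inputs and outputs
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out

SR = 44_100

def sine_tone(freq_hz):
    """One second of a sine wave at freq_hz."""
    return [math.sin(2 * math.pi * freq_hz * n / SR) for n in range(SR)]

def steady_peak(samples):
    """Peak level over the last 0.1 s, after the filter has settled."""
    return max(abs(v) for v in samples[-SR // 10:])

print(steady_peak(lowpass_biquad(sine_tone(100), 1000, 0.707)))     # passes: close to 1
print(steady_peak(lowpass_biquad(sine_tone(10_000), 1000, 0.707)))  # removed: close to 0
print(steady_peak(lowpass_biquad(sine_tone(1000), 1000, 4.0)))      # resonant peak: close to 4
```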

LFOs - Low Frequency Oscillators

Inherently, LFOs are exactly what they sound like: oscillators that run at low frequencies, usually under 20Hz. However, in synthesis the term also implies how they are used: to modulate (affect in some way) a sound. This can be done in many ways, but common uses for LFOs are modulating the frequency of a sound, the filter frequency, and other parameters.
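As an example of modulation, here is a Python sketch of vibrato: a 5Hz sine LFO nudging a 440Hz oscillator's frequency up and down by 6Hz. The numbers and the `vibrato()` function are made up for illustration. Note that the oscillator advances its phase by the current frequency on every sample (a "phase accumulator") rather than computing sin(2π·f·t) with a changing f, which would give the wrong pitch, with errors that grow over time.

```python
import math

def vibrato(base_hz, lfo_hz, depth_hz, duration_s, sample_rate=44_100):
    """Sine oscillator whose frequency is modulated by a sine LFO.

    Returns (samples, frequency_used_for_each_sample).
    """
    phase = 0.0  # oscillator position within its cycle, 0..1
    samples, freqs = [], []
    for n in range(round(duration_s * sample_rate)):
        t = n / sample_rate
        lfo = math.sin(2 * math.pi * lfo_hz * t)    # LFO output, -1..1
        freq = base_hz + depth_hz * lfo             # LFO modulates the frequency
        samples.append(math.sin(2 * math.pi * phase))
        freqs.append(freq)
        phase = (phase + freq / sample_rate) % 1.0  # advance by this sample's frequency
    return samples, freqs

samples, freqs = vibrato(base_hz=440.0, lfo_hz=5.0, depth_hz=6.0, duration_s=1.0)
print(min(freqs), max(freqs))  # roughly 434 and 446
```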

Synthesizer Architecture

Above are the most basic pieces of a synthesizer, but they effectively make up everything there is in subtractive synthesis. A great deal of interesting sound design comes out of creative uses of these pieces.

This brings us to synthesizer architecture, which we touched on in the LFO section. These building blocks can be used for more than just their common uses: an LFO can be used to modulate frequency, or it could be used to modulate the waveshape in some way. An envelope is commonly used to change the amplitude of a sound over time, but it is also commonly used to change a filter over time. Or it could be used to change an LFO over time, which in turn changes some other parameter. You could have an oscillator running at audio rate (above 20Hz) changing the frequency of another oscillator whose output goes to the DAC, creating what is called frequency modulation (FM) synthesis... You get the idea.
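As a taste of FM, here is a Python sketch of two oscillators where a 200Hz modulator sweeps a 1000Hz carrier's frequency. It uses the common closed-form version: if the carrier's instantaneous frequency is `carrier + index * modulator * cos(2π·modulator·t)`, integrating it gives the phase term `index * sin(2π·modulator·t)` used below. The function names, the 8,000 Hz sample rate, and the index of 2 are assumptions for illustration. Unlike the slow vibrato of an LFO, an audio-rate modulator creates new frequencies (sidebands) at the carrier plus and minus multiples of the modulator, so you should see energy at 600, 800, 1200, and 1400Hz and essentially none at 1100Hz.

```python
import cmath
import math

def fm_tone(carrier_hz, modulator_hz, index, duration_s, sample_rate):
    """Two-oscillator FM: the modulator sweeps the carrier's frequency."""
    samples = []
    for n in range(round(duration_s * sample_rate)):
        t = n / sample_rate
        # Carrier phase plus the integrated frequency deviation from the modulator.
        phase = 2 * math.pi * carrier_hz * t + index * math.sin(2 * math.pi * modulator_hz * t)
        samples.append(math.sin(phase))
    return samples

def amplitude_at(samples, freq_hz, sample_rate):
    """Amplitude of a single frequency component (one bin of a DFT)."""
    acc = sum(s * cmath.exp(-2j * math.pi * freq_hz * k / sample_rate)
              for k, s in enumerate(samples))
    return 2 * abs(acc) / len(samples)

SR = 8_000
tone = fm_tone(carrier_hz=1000, modulator_hz=200, index=2.0, duration_s=1.0, sample_rate=SR)
for f in (600, 800, 1000, 1100, 1200, 1400):
    print(f, round(amplitude_at(tone, f, SR), 3))
```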