Light field datasets - IDLabMedia/open-dibr GitHub Wiki

The Depth-Image-Based Renderer (DIBR) takes as input a light field dataset, captured by a multi-view camera setup. This dataset should consist of color and depth information for each input camera. This page elaborates on the picture formats that are supported. Note that audio is not supported and will simply be ignored.

Requirements

Two examples of dataset can be found under Fan and Painter. These illustrate the general two types of datasets that OpenDIBR accepts:

- Images as PNG files (like Painter)

- Videos encoded through H.265 (HEVC) as MP4 files (like Fan). B-frames are not supported, only I- and P-frames.

If you use PNGs, the scene will be static, i.e. objects in the scene will not change in time. If you use videos, the scene will change in time, unless --static is passed on the command line.

Per dataset, all PNGs or MP4s are located in the same folder, which is passed through -i or --input_dir on the command line.

Additionally, each dataset should have a JSON file containing information about the camera intrinsics and extrinsics of each input camera. This file is passed through -j or -input_json on the command line. An example of such a JSON file is:

{

"Axial_system": "COLMAP",

"cameras": [

{

"NameColor": "viewport",

"Position": [

1.65,

1.45,

2.5

],

"Rotation": [

7.7346,

-33.9126,

-0.0

],

"Depth_range": [

0.1,

30.0

],

"Resolution": [

1920,

1080

],

"Projection": "Perspective",

"Focal": [

2058.73,

2058.73

],

"Principle_point": [

960,

540

]

},

{

"NameColor": "v00_texture.mp4",

"NameDepth": "v00_depth.mp4",

"Position": [

1.4765,

1.5491,

2.6004

],

"Rotation": [

7.7346,

-33.9126,

0.0

],

"Depth_range": [

0.35,

12.5

],

"Resolution": [

1920,

1080

],

"Projection": "Perspective",

"Focal": [

2058.73,

2058.73

],

"Principle_point": [

960,

540

],

"BitDepthColor": 8,

"BitDepthDepth": 12

},

.

.

.

]

}

NameColor and NameDepth hold the filenames of the PNG or MP4 files (including the extension).

The Position (in meters) and Rotation (in degrees) of each camera are defined in the axial system indicated by Axial_system. In this case, the "COLMAP" axial system is used, but OpenDIBR supports the following options:

"OPENGL""COLMAP""OMAF": MPEG's OMAF axial system.

The variable Projection describes the projection that was used by the camera that created the light field dataset. Projection should be one of the following strings: ["Perspective", "Equirectangular", "Fisheye_Equidistant"]. More information about these projections can be found in the Blender documentation or on Wikipedia here and here.

- In the case of Perspective projection:

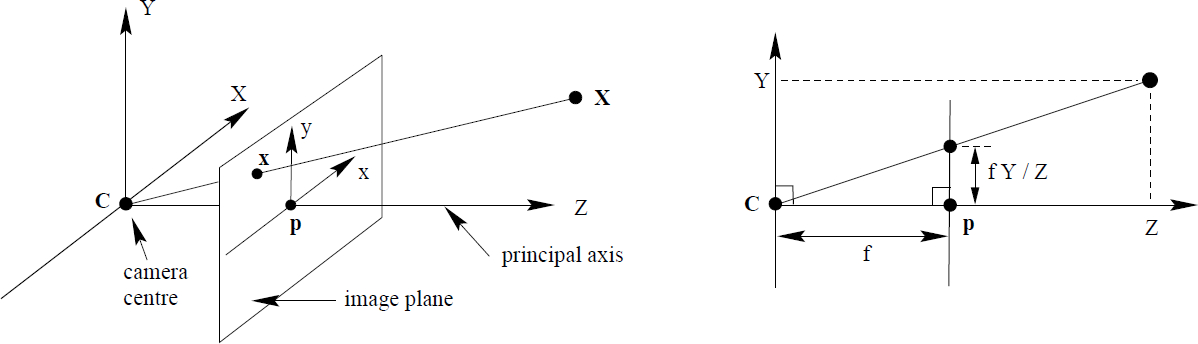

FocalandPrinciple_pointcontain the intrinsics fx, fy, cx and cy of the camera matrix, in pixels.

Here, the focal length (fx,fy) is expressed in pixels instead of millimeters (mm). As an example, say that the camera resolution is 1920x1080, the sensor width is 36mm and horizontal field-of-view FOV_x is 90°. The horizontal focal length in millimeters is therefore

fx_mm = sensor_width_mm / (2*tan(FOV_x/2)) = 18mm

To convert the focal length in millimeters to pixels, do

fx = fx_mm * width / sensor_width_mm = 960.0 pixels

with width = 1920 as the horizontal resolution. The vertical focal length fy is often equal or close to fx, indicating that the pixels are (almost) square.

The principal point (cx,cy) is also expressed in pixels and is often close to half of the resolution (width,height) in pixels. For example if the resolution is (1920,1080), expect something close or equal to (cx,cy) = (960.0,540.0).

-

In the case of Equirectangular projection:

FocalandPrinciple_pointshould be removed from the JSON to be replaced byHor_rangeandVer_range. These variables contain the horizontal and vertical field of view angles (in degrees). For example, 360° cameras will have Hor_range = [-180,180] and Ver_range = [-90,90]. -

In the case of Equidistant projection for fisheye lenses:

FocalandPrinciple_pointshould be removed from the JSON to be replaced byFov. This variable contains the field of view angle (in degrees). For example, fisheye lenses with Fov = 180 will see everything that is in front of them.

Different input cameras are allowed to have different values for Focal and Principle_point, but all input cameras need to have the same values for Projection, Hor_range, Ver_range and Fov.

'Viewport' camera

Each input JSON file should contain a camera with NameColor: viewport, like in the example above. This camera decides the starting position and rotation of the camera used to render to the screen or VR headset. If --vr is not given on the command line, the camera intrinsics Resolution, Focal and Principle_point will also be used.

Supported pixel formats

Again, a difference is made between image and video datasets.

-

Images: PNGs For each camera, two PNGs should be present: one color image and one depth map. The pixel format (as defined through FFmpeg) should be

rgb24/rgb48for the color PNG andgray/gray16befor the depth map PNG. This means that the bit depth per channel per pixel is 8/16 bit for the color and 8/16 bit for the depth map (which only has one channel). These values are communicated throughBitDepthColorandBitDepthDepth, which should be equal to 8 or 16. -

Videos: MP4s For each camera, two MP4 files should be present: one color image and one depth map. They should be encoded using H.265 (HEVC), for example through hevc_nvenc for hardware acceleration. The pixel format (as defined through FFmpeg) should be one of [

yuv420p,yuv420p10le,yuv420p12le] for the color and for the depth map. This means that the bit depth per channel per pixel is 8/10/12 bit for the color and the depth map (which only has one channel). This value is communicated throughBitDepthColorandBitDepthDepth, which should be equal to 8, 10 or 12.

Depth maps

Say that you have a depth value for each pixel of the input image/videos in meters.

Important: if the input camera uses the Perspective projection, we assume that the depth value is the Z-depth. If the projection is Equirectangular or Fisheye_Equidistant however, the Euclidean distance (|CX| on the figure below) to the camera needs to be used. You can convert Euclidean depth to Z-depth for a Perspective camera as follows in python:

cols = np.square(np.arange(width).reshape(1,width) + 0.5 - Principle_point.x).repeat(height, axis=0)

rows = np.square(np.arange(height).reshape(height,1) + 0.5 - Principle_point.y).repeat(width, axis=1)

help_matrix= Focal.x / np.sqrt(Focal.x**2 + cols + rows)

zdepth = np.multiply(euclidean_depth, help_matrix)

Here, Focal and Principle_point are expressed in pixels, like in the JSON file.

The depth will be stored in n-bit pixels, e.g. 8-bit or 12-bit. That means that a depth in meters need to be mapped onto whole numbers in the range [0, 2^n-1], for example [0,255] or [0,4095]. To do this, you first need to choose a near and far plane so that the depth lies in [near, far] for all input images/videos. The mapping from [near, far] range to [0,2^n-1] happens as follows:

depth_n_bit = (1/depth_meters - 1/far) / (1/near-1/far) * (2^n-1)

Note that this mapping is non-linear. Close to the near plane, the depth map precision is at its highest, and the precision quickly falls off as the depth increases to far (just like f(x) = 1/x).

Here is an example of a depth map:

Make your own datasets

Say that you have a set of color and depth images/videos for cameras of which the intrinsics and extrinsics are known. To be able to use these images/videos in OpenDIBR, you will have to create the JSON file with the camera parameters, as discussed above. You will also need to convert your images to PNGs or videos to MP4s with accepted bit depths, if this was not the case yet. Below, some example FFmpeg commands or Python scripts are provided to help you with this.

Note that in the case of HEVC videos, **only I and P-frames are supported, not B-frames. **Also, OpenDIBR assumes that the frame rate of all videos is 30 frames per seconds.

YUV (YCbCr) Color to PNG

This can easily be done through ffmpeg, for example:

ffmpeg -hide_banner -s:v 1920x1080 -r 30 -pix_fmt yuv420p10le -i v00_texture_1920x1080_yuv420p10le.yuv -vf "select=eq(n\,25)" -pix_fmt rgb24 v00_texture.png

Here, 25 is the frame number to be extracted, starting from zero. Be sure to change the resolution -s:v and -pix_fmt to fit your case.

YUV (YCbCr) Depth map to PNG

Example code to convert yuv420p/yuv420p10le/yuv420p12le/yuv420p16le depth map files to gray/gray16be PNG files can be found in scripts/yuv_depth_to_png.py. For some reason, using FFmpeg for this task resulted in incorrect depth maps. For example:

python yuv_depth_to_png.py v00_depth_1920x1080_yuv420p16le.yuv 1920 1080 25 yuv420p16le v00_depth.png

YUV (YCbCr) Color to MP4

This can easily be done through ffmpeg, for example:

ffmpeg -hide_banner -s:v 1920x1080 -r 30 -pix_fmt yuv420p10le -i v00_texture_1920x1080_yuv420p10le.yuv -c:v libx265 -x265-params lossless=1:bframes=0 -preset slow -an -pix_fmt yuv420p v00_texture_1920x1080_yuv420p.mp4

However, lossless compression is often unnecessary and even slows down OpenDIBR's performance. It is better to specify a -crf or -b:v value (the latter gives you more control).

ffmpeg -hide_banner -s:v 1920x1080 -r 30 -pix_fmt yuv420p10le -i v00_texture_1920x1080_yuv420p10le.yuv -c:v libx265 -x265-params bframes=0 -crf 18 -preset slow -an -pix_fmt yuv420p v00_texture_1920x1080_yuv420p.mp4

YUV (YCbCr) Depth map to MP4

This can easily be done through ffmpeg, for example:

ffmpeg -hide_banner -s:v 1920x1080 -r 30 -pix_fmt yuv420p16le -i v00_depth_1920x1080_yuv420p16le.yuv -c:v libx265 -x265-params lossless=1:bframes=0 -preset slow -an -pix_fmt yuv420p12le v00_depth_1920x1080_yuv420p12le.mp4

For depth maps, I do recommend using lossless compression if the scene contains fine details (e.g. Fan).

Other example datasets

More datasets can downloaded from here in folder full_datasets. These datasets are ready to be used by OpenDIBR. These datasets are provided by IDLab MEDIA and were derived from here.

Here is a brief overview of what to expect:

| nr. cameras | type | projection | camera placement | |

|---|---|---|---|---|

| Barbershop_s | 12 | images | Fisheye Equidistant | spread across a sphere (radius 72cm) looking outwards |

| Barbershop_c | 21 | images | Perspective | along a planar grid |

| Zen_Garden_s | 12 | images | Fisheye Equidistant | spread across a sphere (radius 85cm) looking outwards |

| Zen_Garden_c | 21 | images | Perspective | along a planar grid |

The other folders visual_quality and computational_performance also contain datasets, some of them derived of datasets provided by the MPEG-I community (see below). They are also ready to be fed to OpenDIBR. However they do not contain all input views. More information can be found in Replicating results from paper.

MPEG-I members can download more datasets from https://dms.mpeg.expert/ by clicking on MPEG-Content and logging in. The YCbCr (.yuv) files can be downloaded from folder MPEG-I > Part12-ImmersiveVideo > ctc_content. Note that the YCbCr files need to be encoded by H265/HEVC or as PNGs before being fed to OpenDIBR.

| nr. cameras | type | projection | camera placement | |

|---|---|---|---|---|

| A) ClassroomVideo | 15 | videos | Equirectangular 360 degrees | within a sphere with radius 10cm |

| O) Fan | 15 | videos | Perspective | along a planar grid |

| E) Frog | 13 | videos | Perspective | along a horizontal line |

| R) Group | 21 | videos | Perspective | along two wide horizontal arcs |

| J) Kitchen | 25 | videos | Perspective | along a planar grid |

| D) Painter | 16 | videos | Perspective | along a planar grid |