Results: Model Performances in GPML - IBM/AMLSim GitHub Wiki

Example 1: Number of Edges and Density

Common parameters:

- Number of vertices (accounts): 27,770

- Alert patterns: 100 triangles as fraud patterns and 1,000 fan-in/out patterns with 5 vertices as false alerts

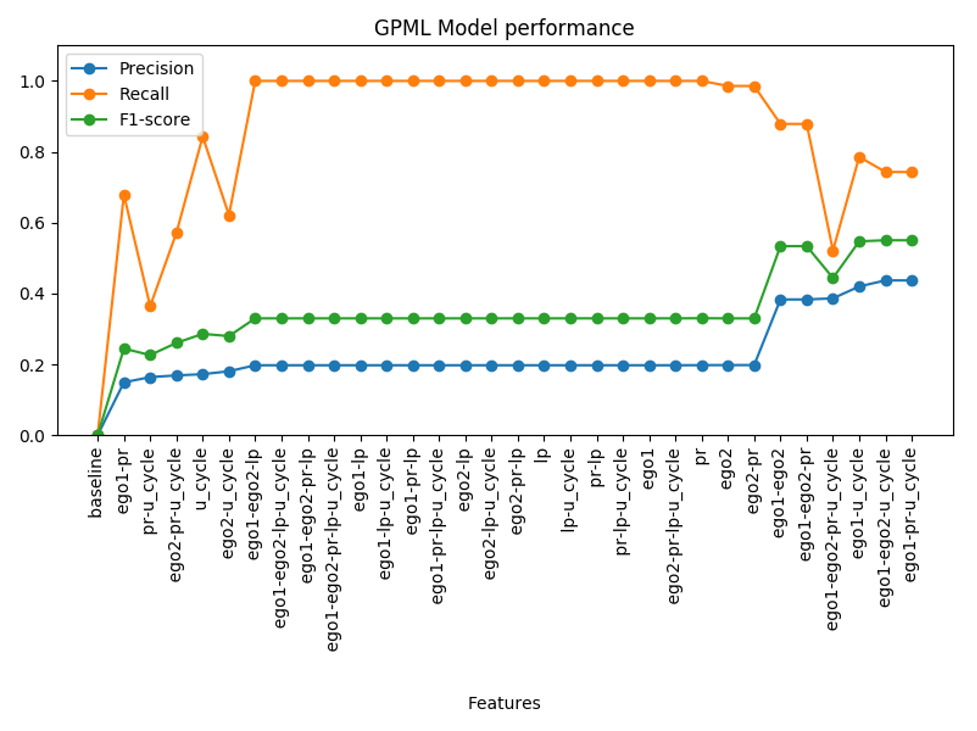

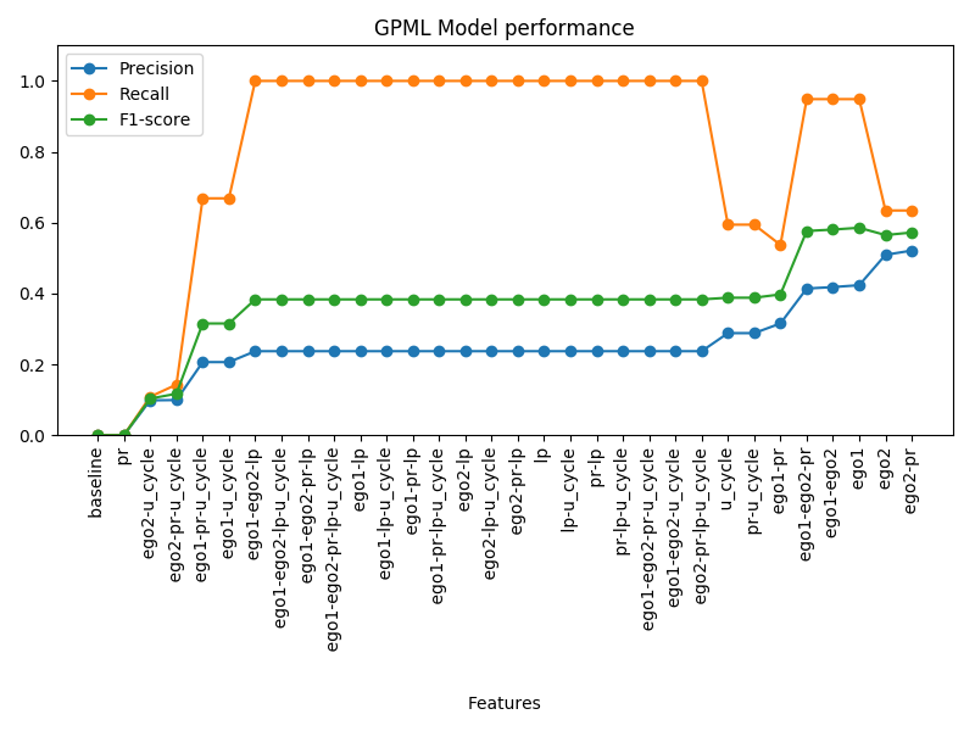

GPML Results

-

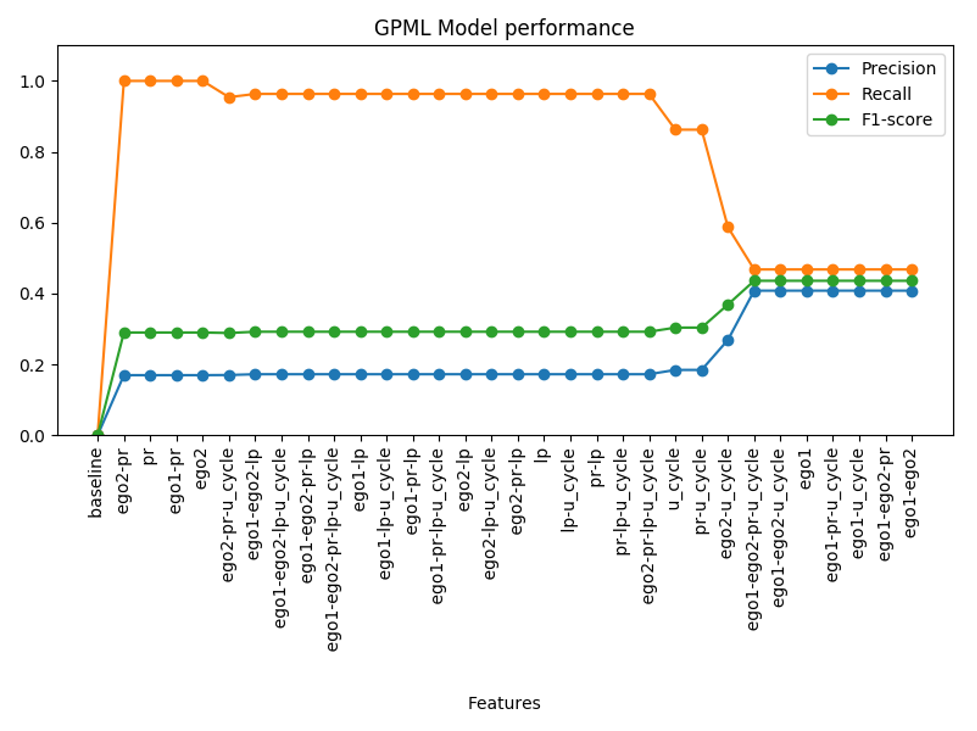

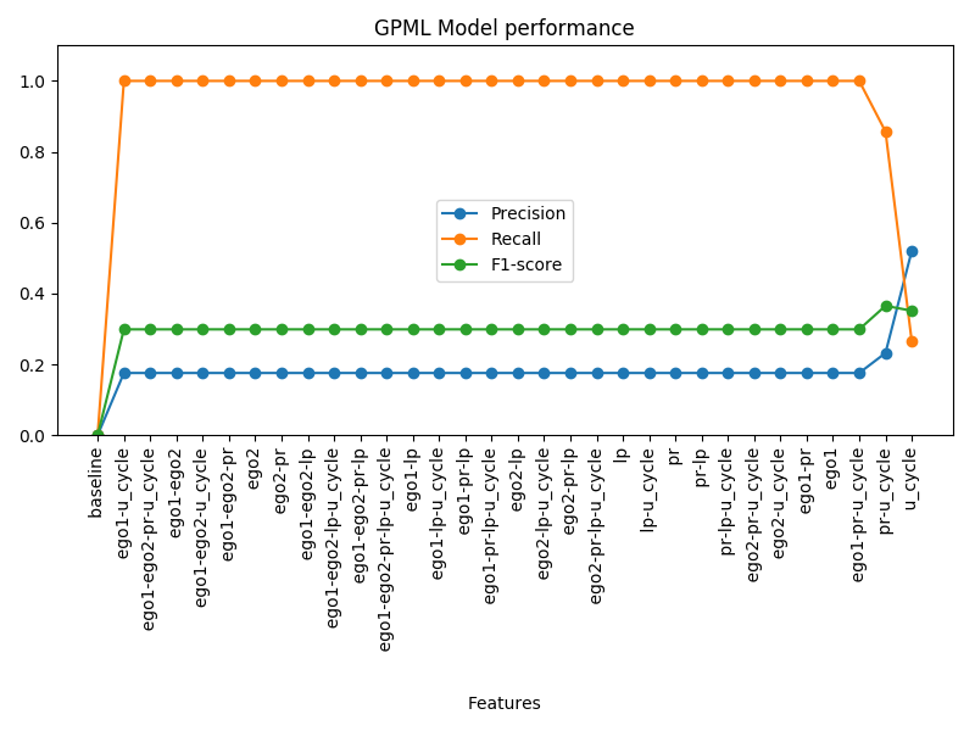

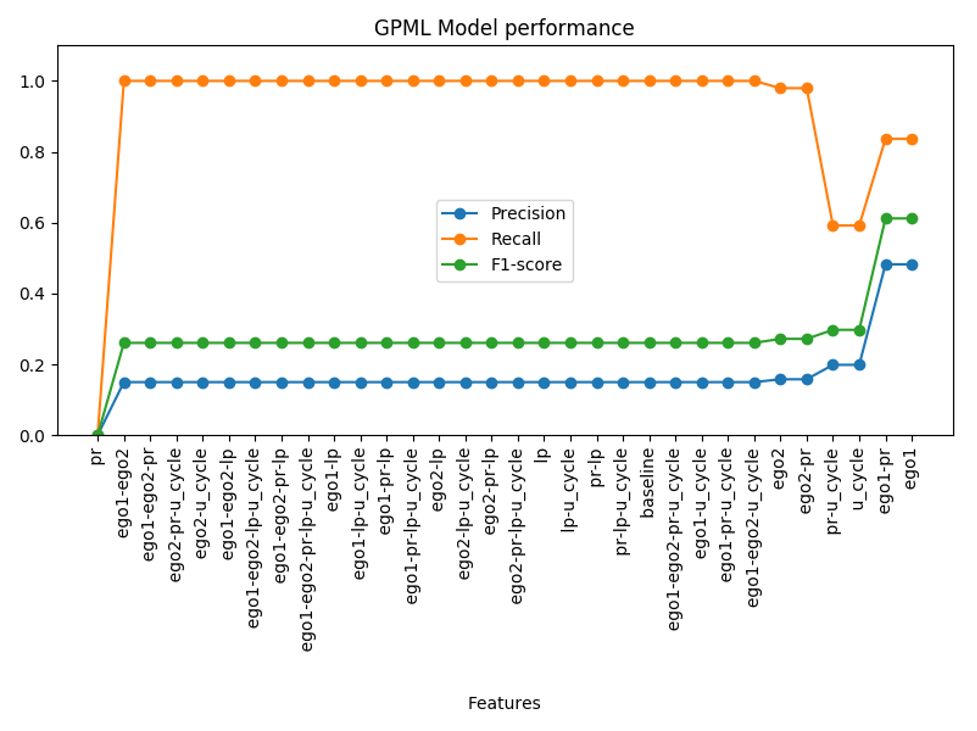

Sparse (56,021 transactions)

-

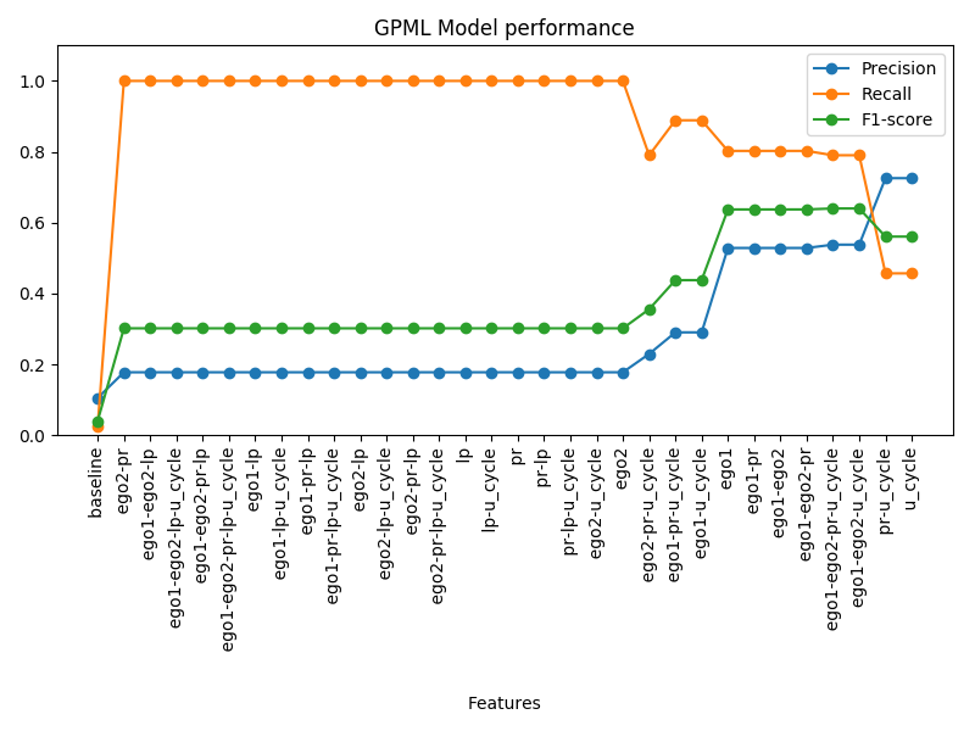

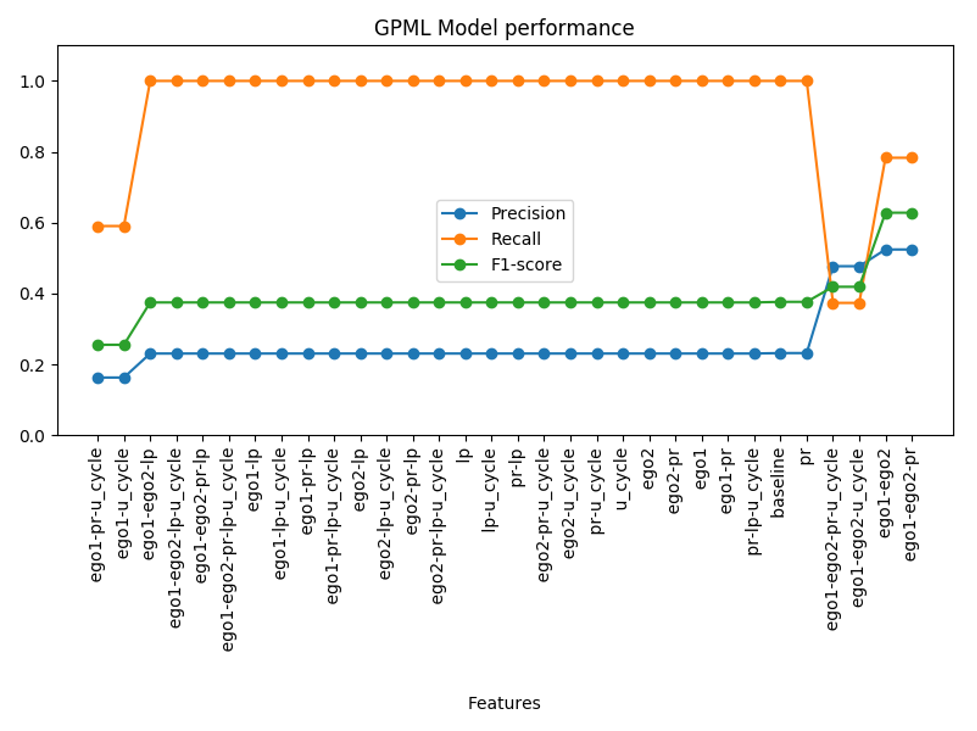

Medium (96,463 transactions)

-

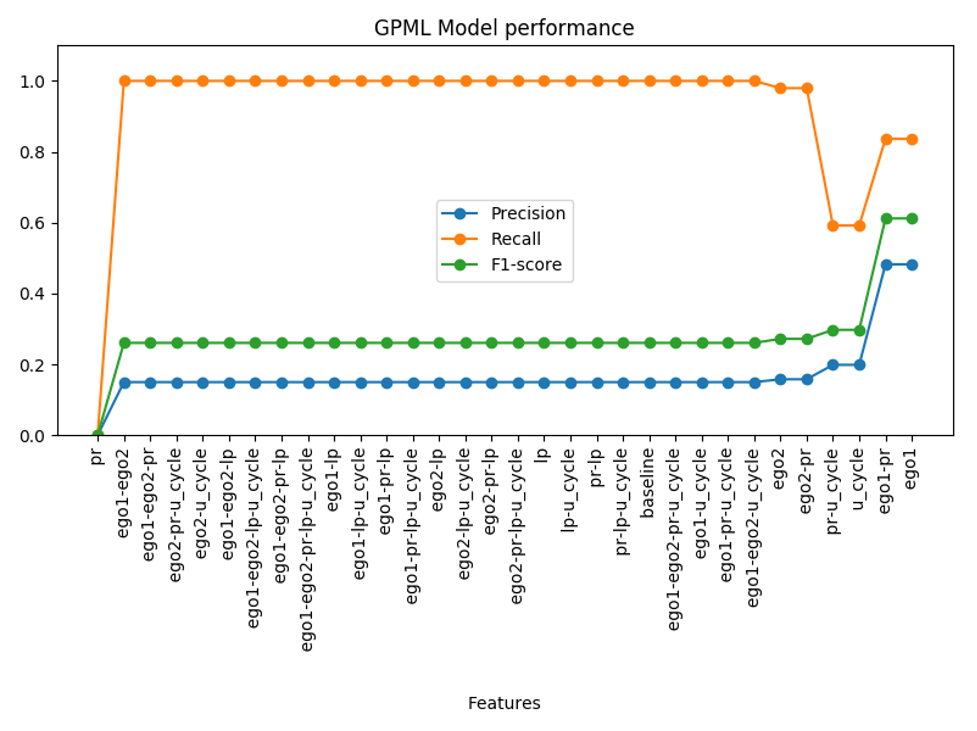

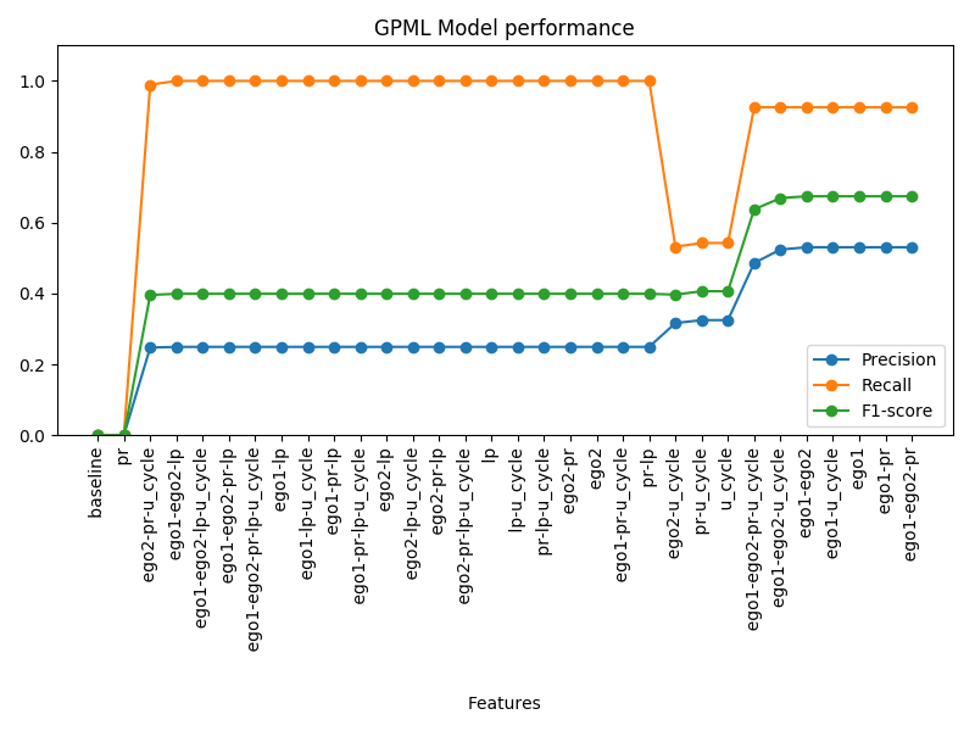

Dense (160,970 transactions)

-

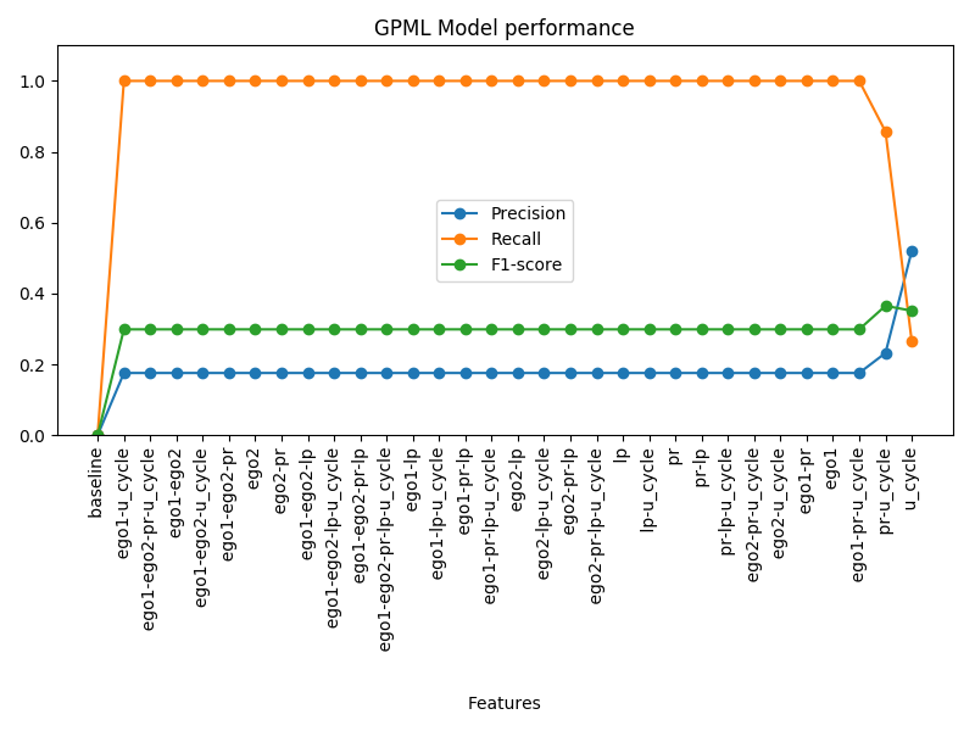

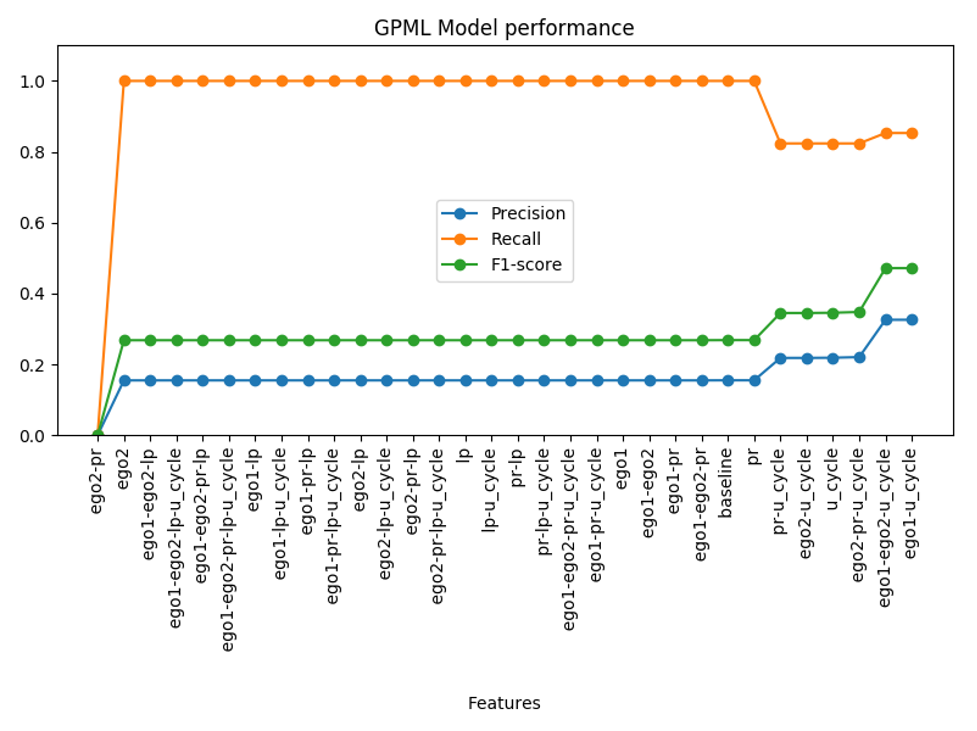

239,385 transactions

-

276,359 transactions

Findings

In dense transaction networks, the feature combination that yields the highest precision is the undirected cycle feature, but its recall drops to around 0.2. Overall performance is best at around 160K edges (the Dense setting, 160,970 transactions).
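To make the sparse-to-dense settings easier to compare, the sketch below computes the average number of transactions per account and the graph density for each edge count listed in this example. It assumes the standard density definition for a directed graph without self-loops, m / (n * (n - 1)), and counts every transaction as a separate edge. The function and variable names are illustrative and are not taken from AMLSim or GPML.

```python
def directed_density(num_vertices: int, num_edges: int) -> float:
    """Density of a directed graph without self-loops: m / (n * (n - 1)).

    Assumption: every transaction counts as one edge, so repeated
    transactions between the same pair of accounts are not merged.
    """
    if num_vertices < 2:
        raise ValueError("need at least two vertices")
    return num_edges / (num_vertices * (num_vertices - 1))


NUM_ACCOUNTS = 27_770

# Transaction counts of the five settings listed in this example.
SETTINGS = {
    "Sparse": 56_021,
    "Medium": 96_463,
    "Dense": 160_970,
    "239,385 transactions": 239_385,
    "276,359 transactions": 276_359,
}


def summarize(num_accounts: int = NUM_ACCOUNTS, settings: dict = SETTINGS):
    """Return (name, transactions per account, density) for each setting."""
    return [
        (name, m / num_accounts, directed_density(num_accounts, m))
        for name, m in settings.items()
    ]


if __name__ == "__main__":
    for name, per_account, density in summarize():
        print(f"{name:>22}: {per_account:.2f} transactions/account, "
              f"density {density:.2e}")
```

Even the densest setting is a very sparse graph in absolute terms, so "dense" here is relative to the other settings in the experiment.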

Example 2: Number of Fraud Patterns and False Alerts

Common parameters:

- Number of vertices (accounts): 27,770

- Number of edges (transactions): 160K

- Fraud pattern: Triangle (3-length cycle)

- False alert pattern: Fan-in/out with 5 accounts
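As an illustration of the false alert pattern named above, the following sketch builds the edge lists of a fan-in and a fan-out pattern over 5 accounts. It assumes that "5 accounts" means one hub account plus four counterparties, and that fan-in means several accounts sending to the hub while fan-out means the hub sending to several accounts. The account IDs and function names are hypothetical.

```python
def fan_in(hub, sources):
    """Edges (source -> hub): several accounts send money to one account."""
    return [(src, hub) for src in sources]


def fan_out(hub, targets):
    """Edges (hub -> target): one account sends money to several accounts."""
    return [(hub, dst) for dst in targets]


# Hypothetical account IDs: one hub and four counterparties (5 vertices total).
accounts = ["A0", "A1", "A2", "A3", "A4"]
hub, others = accounts[0], accounts[1:]

fan_in_edges = fan_in(hub, others)
fan_out_edges = fan_out(hub, others)

if __name__ == "__main__":
    print("fan-in: ", fan_in_edges)
    print("fan-out:", fan_out_edges)
```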

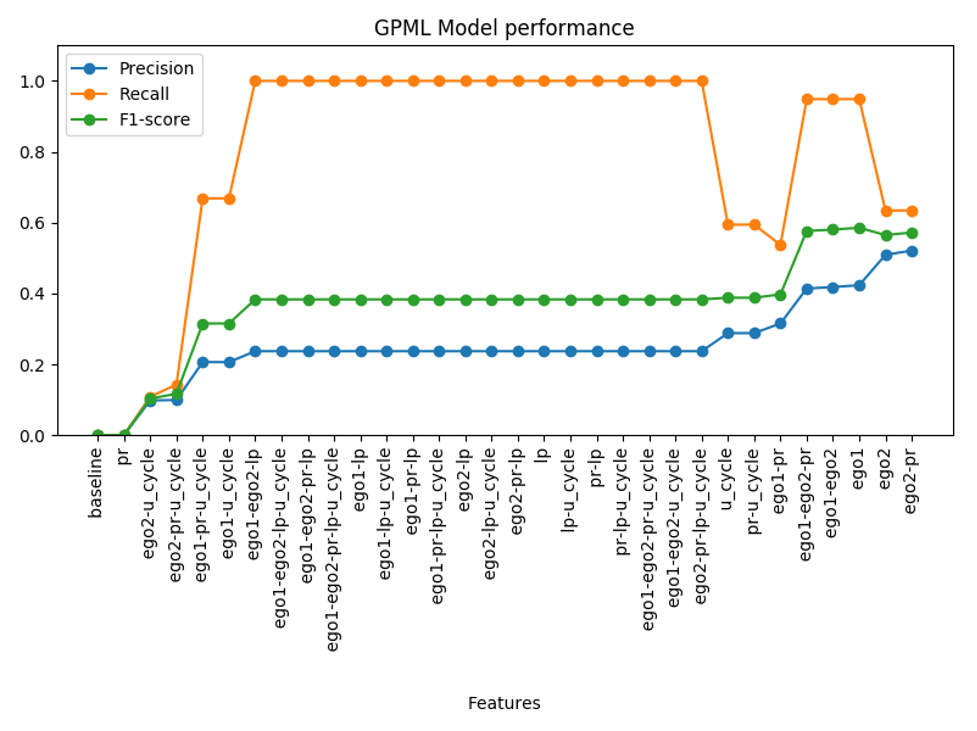

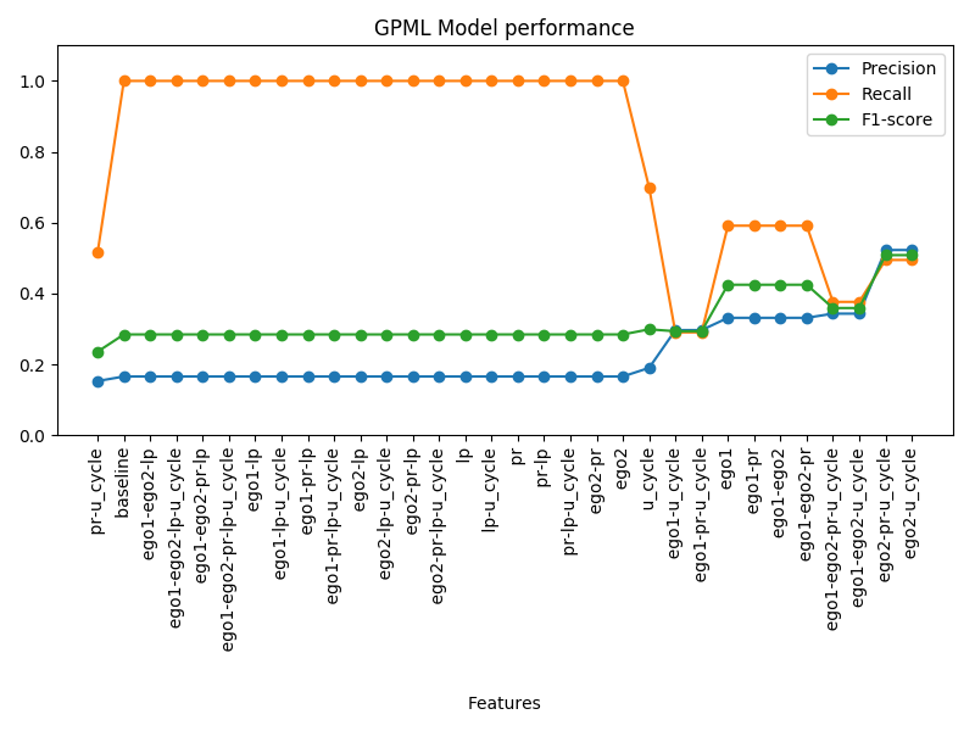

GPML Results

-

50 fraud patterns (500 false alerts)

-

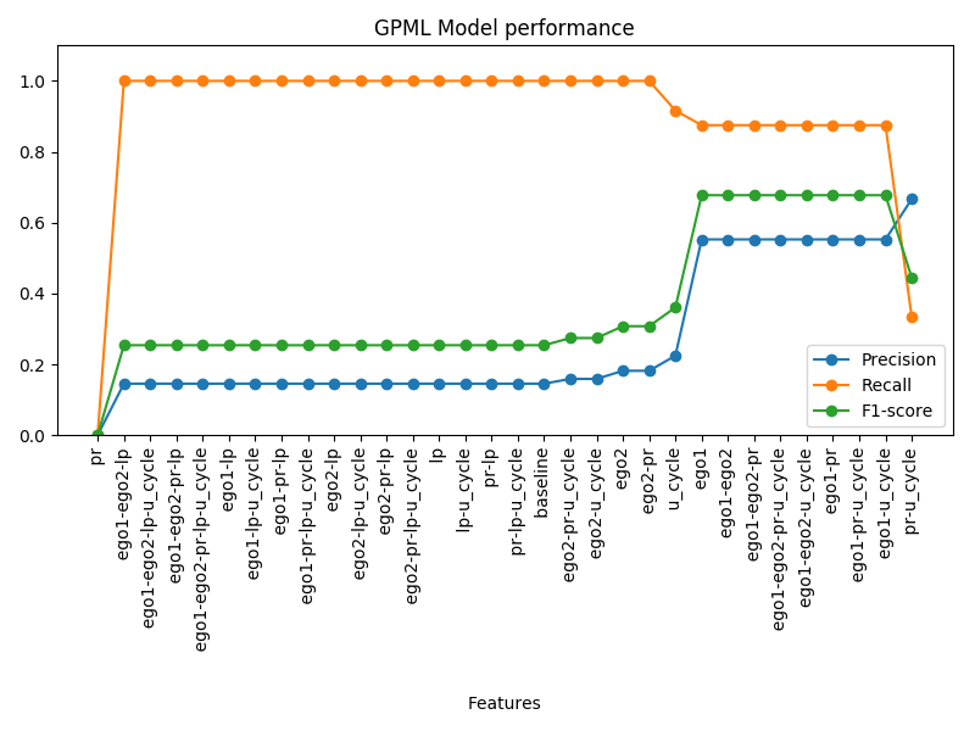

100 fraud patterns (1,000 false alerts)

-

200 fraud patterns (2,000 false alerts)

Findings

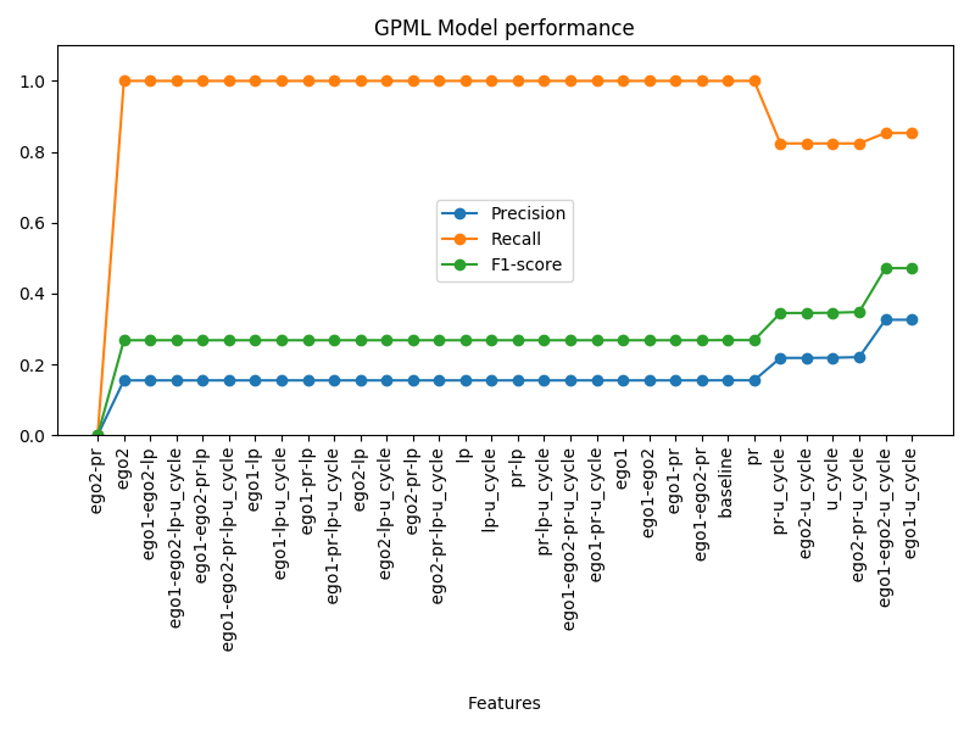

Recall becomes more stable as the number of fraud patterns increases (above 0.8 with 200 fraud patterns). Feature combinations that include undirected cycle and egonet features improve precision and F1-score.
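For readers comparing the three metrics, here is a minimal sketch of how precision, recall, and F1-score relate, using the standard definitions. The counts in the example are hypothetical and are not taken from the experiments. Whether GPML counts accounts or alert patterns as the evaluation unit is not stated here, so the sketch works on plain true-positive, false-positive, and false-negative counts.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Standard precision, recall and F1 from confusion counts.

    tp: fraud items correctly flagged
    fp: non-fraud items (e.g. false alerts) flagged as fraud
    fn: fraud items that were missed
    Returns 0.0 for a metric whose denominator is zero.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical counts: every flagged item is fraud, but most fraud is missed.
    p, r, f1 = precision_recall_f1(tp=20, fp=0, fn=80)
    print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

Because F1 is the harmonic mean of precision and recall, a configuration with perfect precision but low recall still receives a low F1-score.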

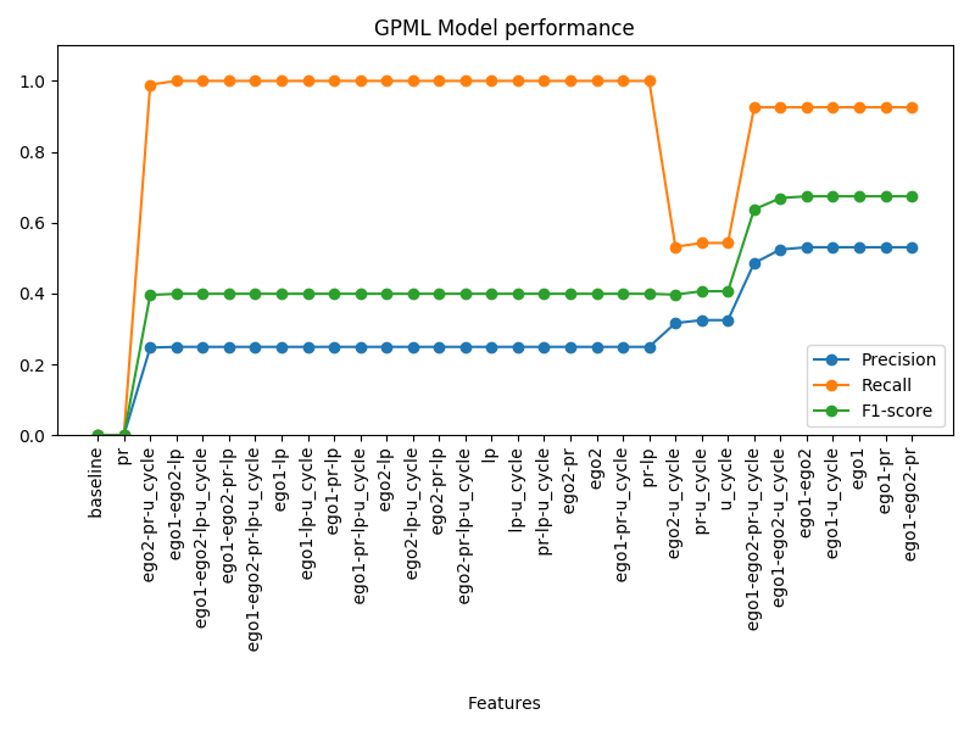

Example 3: Size of Fraud Patterns (length of cycle patterns)

Common parameters:

- Number of vertices (accounts): 27,770

- Number of edges (transactions): 160K

100 fraud patterns and 1,000 false alerts

-

Triangle (3-length cycles)

-

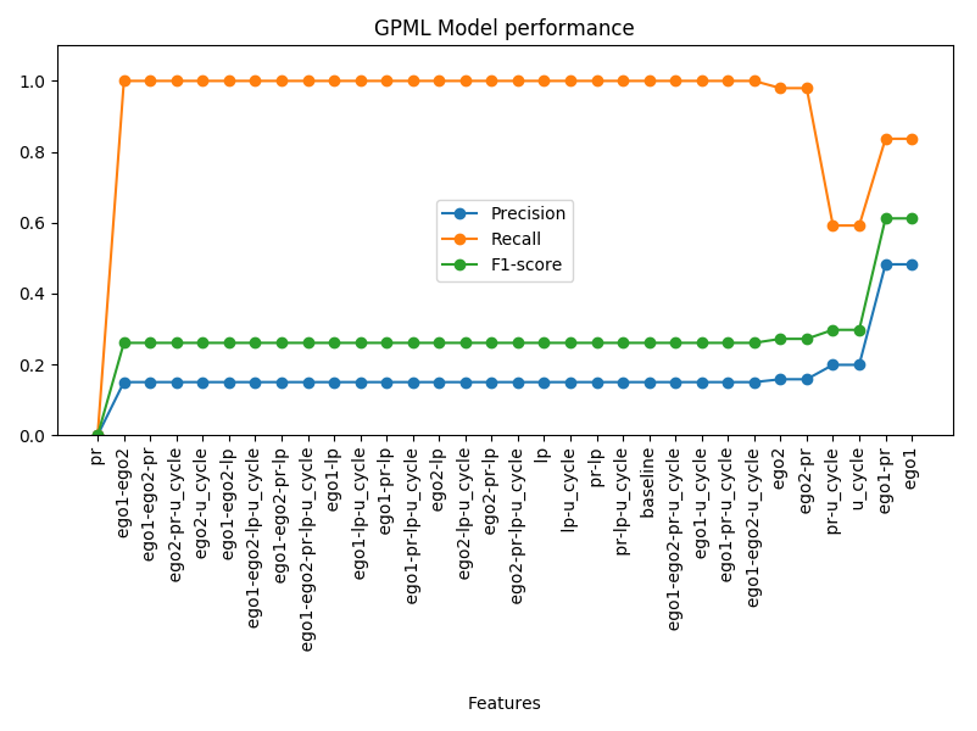

Square (4-length cycles)

-

Pentagon (5-length cycles)

200 fraud patterns and 2,000 false alerts

-

Triangle

-

Square

-

Pentagon

Findings

Recall is stable for triangle fraud patterns. For square and pentagon fraud patterns, recall drops to 0.4-0.5 for some features.
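The three fraud pattern sizes compared in this example differ only in cycle length. A minimal sketch of how such a cycle can be written as a directed edge list follows. It assumes each account in the pattern sends money to the next one and the last sends back to the first. The helper name and account IDs are hypothetical and do not come from AMLSim.

```python
def cycle_edges(accounts):
    """Return the directed edges of a cycle that visits `accounts` in order.

    A triangle has 3 accounts, a square 4, a pentagon 5. This sketch
    requires at least 3 distinct accounts.
    """
    n = len(accounts)
    if n < 3 or len(set(accounts)) != n:
        raise ValueError("need at least 3 distinct accounts")
    # Account i sends to account i + 1; the last account closes the cycle.
    return [(accounts[i], accounts[(i + 1) % n]) for i in range(n)]


if __name__ == "__main__":
    for name, size in [("triangle", 3), ("square", 4), ("pentagon", 5)]:
        members = [f"A{i}" for i in range(size)]
        print(name, cycle_edges(members))
```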

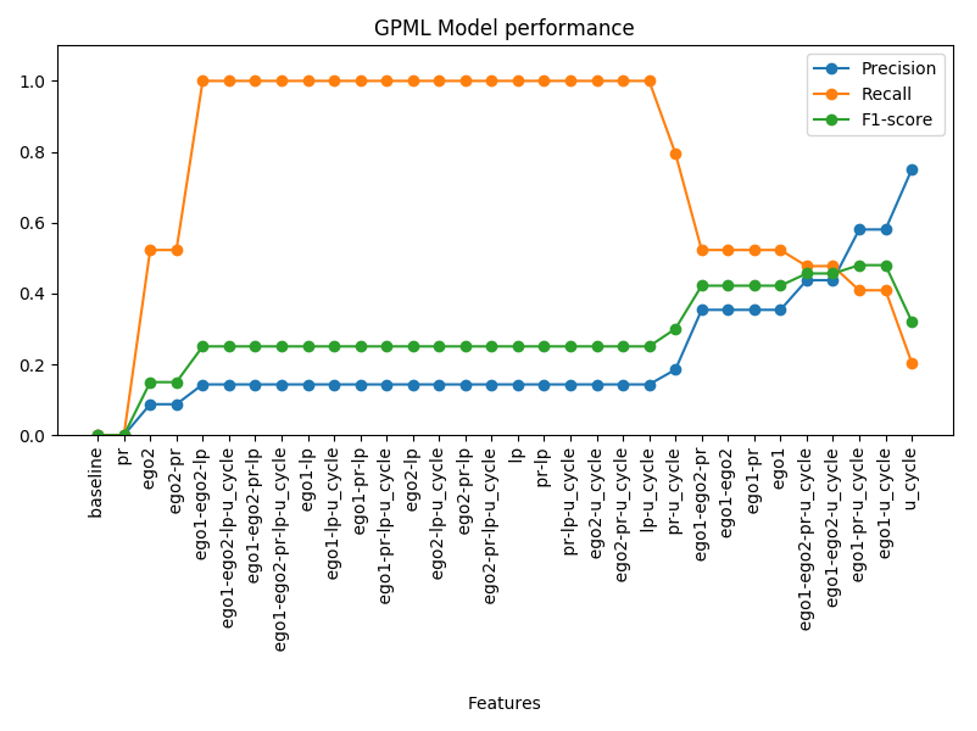

Example 4: Type of Fraud Patterns

Common parameters:

- Number of vertices (accounts): 27,770

- Number of edges (transactions): 160K

- False alert patterns: 1,000 fan-in/out patterns with 3 vertices

GPML Results

- 100 Pentagons (5-length cycles)

- 100 Fan-in/out (5 vertices)

- 200 Pentagons (5-length cycles)

- 200 Fan-in/out (5 vertices)

Findings

With fan-in/out fraud patterns, there is a trade-off between precision and recall: recall drops to 0.2-0.4 for some feature combinations.